Video description method based on dual-path fractal network and LSTM

A video description technology based on fractal networks, applied in the fields of video description and deep learning


Detailed Description of the Embodiments

[0053] The present invention will be further described in detail below in conjunction with the embodiments and the accompanying drawings, but the embodiments of the present invention are not limited thereto.

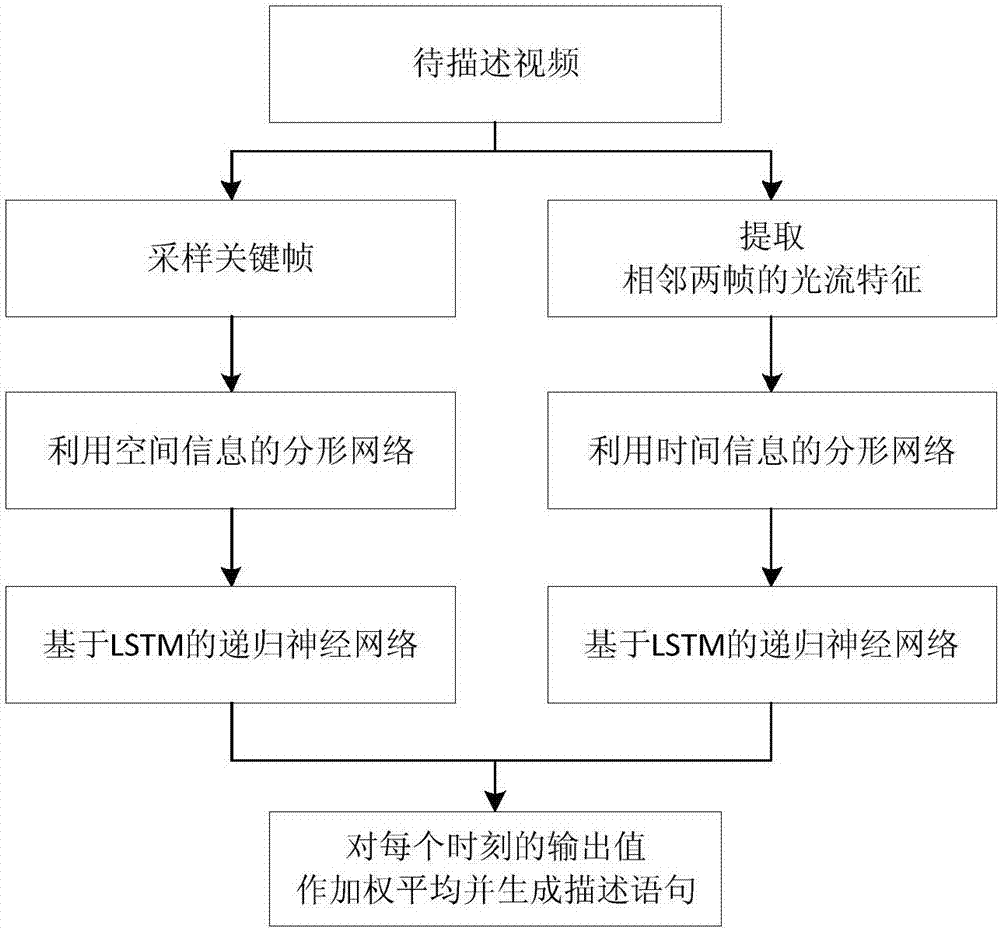

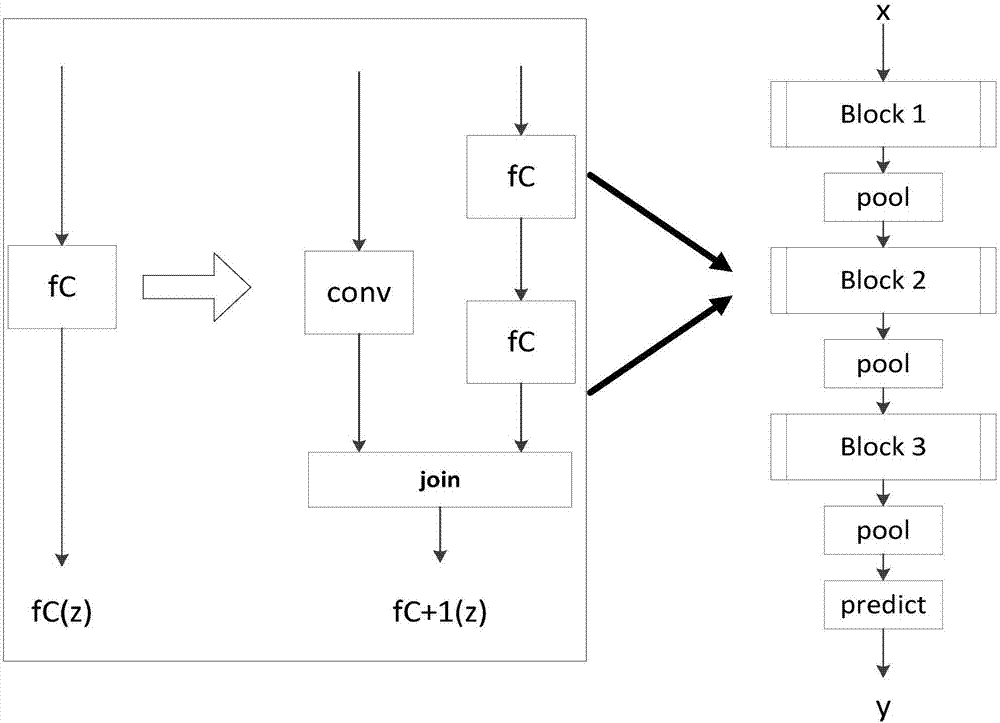

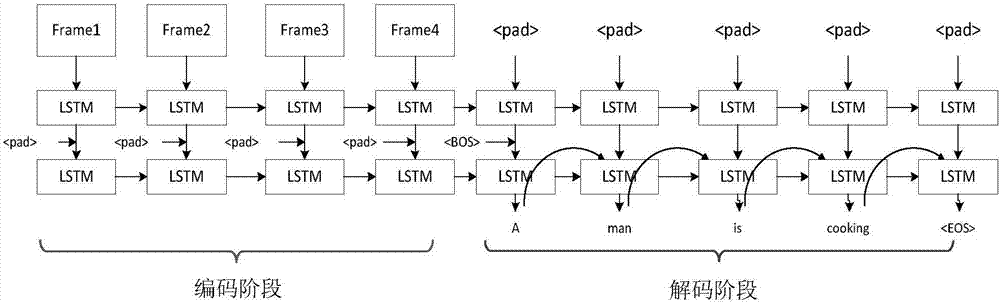

[0054] Key frames are sampled from the video to be described, and optical flow features are extracted between each pair of adjacent frames of the original video. Two fractal networks then learn high-level feature representations of the key frames and of the optical flow features, respectively. These representations are fed into two recurrent neural network models based on LSTM units, and the output values of the two independent recurrent neural network models at each time step are combined by weighted averaging to obtain the description sentence corresponding to the video.
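The final fusion step in the paragraph above can be illustrated with a minimal sketch. It assumes that each LSTM-based model emits a probability distribution over the vocabulary at every time step, that a single scalar weight (here the hypothetical parameter `frame_weight`) balances the two streams, and that the word with the highest fused probability is chosen at each step. None of these details are stated in the text; the function names, the toy vocabulary, and the probability values are illustrative only.

```python
from typing import List, Sequence


def fuse_step_outputs(
    frame_probs: Sequence[Sequence[float]],
    flow_probs: Sequence[Sequence[float]],
    frame_weight: float = 0.5,  # assumed weighting; the source gives no value
) -> List[List[float]]:
    """Weighted average of two per-time-step word distributions."""
    if len(frame_probs) != len(flow_probs):
        raise ValueError("both streams must cover the same number of time steps")
    if not 0.0 <= frame_weight <= 1.0:
        raise ValueError("frame_weight must lie in [0, 1]")
    flow_weight = 1.0 - frame_weight
    fused = []
    for p_frame, p_flow in zip(frame_probs, flow_probs):
        if len(p_frame) != len(p_flow):
            raise ValueError("both streams must share one vocabulary size")
        fused.append(
            [frame_weight * a + flow_weight * b for a, b in zip(p_frame, p_flow)]
        )
    return fused


def greedy_decode(
    fused: Sequence[Sequence[float]],
    vocab: Sequence[str],
    end_token: str = "<eos>",
) -> str:
    """Pick the most probable word at each step until the end token (assumed decoding rule)."""
    words = []
    for dist in fused:
        best = max(range(len(dist)), key=dist.__getitem__)
        if vocab[best] == end_token:
            break
        words.append(vocab[best])
    return " ".join(words)


# Toy example: four time steps over a four-word vocabulary.
vocab = ["a", "dog", "runs", "<eos>"]
frame_probs = [
    [0.7, 0.1, 0.1, 0.1],
    [0.2, 0.6, 0.1, 0.1],
    [0.1, 0.4, 0.4, 0.1],
    [0.1, 0.1, 0.1, 0.7],
]
flow_probs = [
    [0.6, 0.2, 0.1, 0.1],
    [0.3, 0.5, 0.1, 0.1],
    [0.1, 0.1, 0.7, 0.1],
    [0.1, 0.1, 0.2, 0.6],
]

fused = fuse_step_outputs(frame_probs, flow_probs, frame_weight=0.5)
sentence = greedy_decode(fused, vocab)
print(sentence)
```

In this toy input the key-frame stream is undecided between "dog" and "runs" at the third step, and the optical flow stream tips the fused distribution toward "runs", which is the kind of complementary evidence a two-stream design is meant to exploit.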

[0055] Figure 1 is an overall flowchart of the present invention, which comprises the following steps:

[0056] (1) Sampling the key frame of the video to be described, and extracting the optical flow features betwee...
