Speech emotion recognition method through fusion of feature assessment and multi-layer perceptron

A speech emotion recognition technique based on a multi-layer perceptron, applicable to speech recognition, character and pattern recognition, speech analysis, and related fields. It addresses reduced classification accuracy, a complicated implementation process, and reduced training and recognition efficiency. Its intended effects are improved classification accuracy, a simple implementation process, and avoiding an inefficient training process.

Detailed Description of the Embodiments

[0044] The present invention will be further described below in conjunction with the accompanying drawings and specific preferred embodiments, but the protection scope of the present invention is not limited thereby.

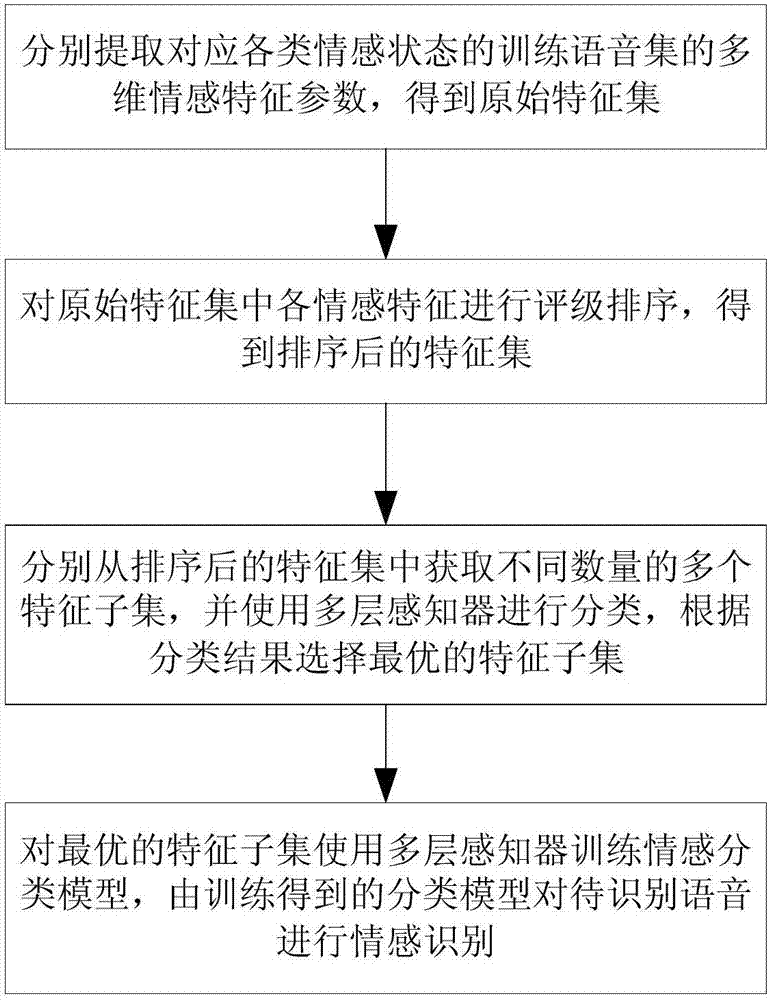

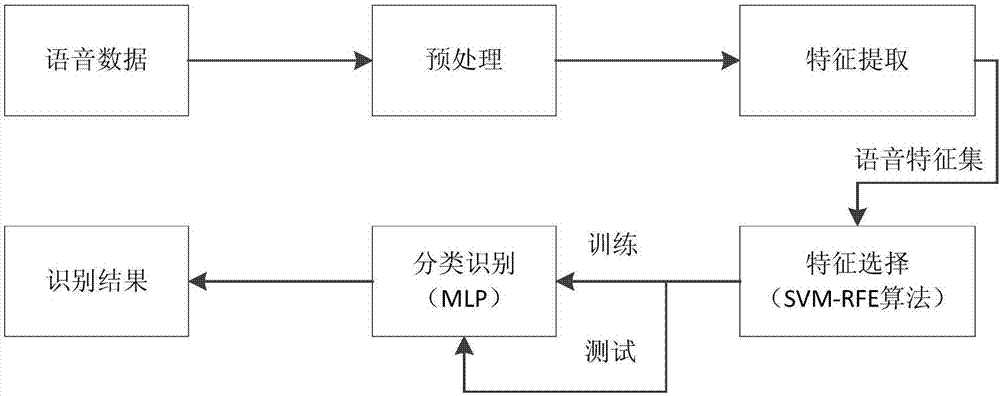

[0045] As shown in Figures 1 and 2, the speech emotion recognition method of this embodiment, which fuses feature evaluation with a multi-layer perceptron, comprises the following steps:

[0046] S1. Feature extraction: Extract the multi-dimensional emotional feature parameters of the training speech sets corresponding to various emotional states to obtain the original feature set;

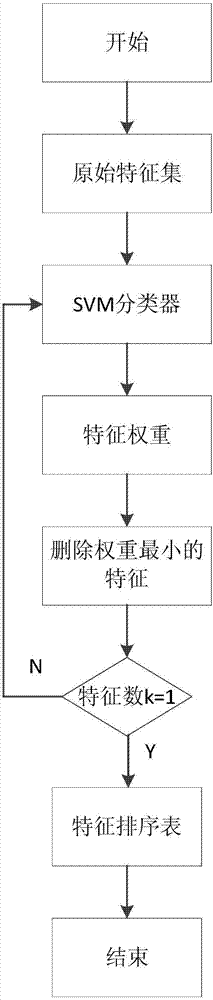

[0047] S2. Feature evaluation: Score and rank the emotional feature parameters in the original feature set to obtain the sorted feature set;

[0048] S3. Optimal feature set selection: Take different numbers of emotional feature parameters from the sorted feature set to form multiple feature subsets, classify each feature subset with a multi-layer perceptron (MLP), and select the optimal ...
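Steps S2 and S3 can be illustrated with a minimal Python sketch. The text above does not state which scoring criterion the feature evaluation uses, how the subsets are formed, or how each classification is judged, so several parts of the sketch are assumptions: the Fisher-style ratio of between-class to within-class variance, the use of the top-k ranked features as subsets, and the comparison by accuracy on a held-out set. All function names (`fisher_scores`, `rank_features`, `train_mlp`, `select_best_subset`), the single tanh hidden layer, the hyperparameters, and the toy data are hypothetical. Class labels are assumed to be integers starting at 0.

```python
import math
import random


def fisher_scores(X, y):
    # Assumed criterion: between-class variance divided by within-class variance.
    scores = []
    for j in range(len(X[0])):
        col = [row[j] for row in X]
        overall = sum(col) / len(col)
        between = within = 0.0
        for c in set(y):
            vals = [v for v, lab in zip(col, y) if lab == c]
            mu = sum(vals) / len(vals)
            between += len(vals) * (mu - overall) ** 2
            within += sum((v - mu) ** 2 for v in vals)
        scores.append(between / (within + 1e-12))
    return scores


def rank_features(X, y):
    # S2: feature indices sorted from highest to lowest score.
    scores = fisher_scores(X, y)
    return sorted(range(len(scores)), key=lambda j: scores[j], reverse=True)


def forward(model, x):
    # Hidden activations and output logits for one sample.
    W1, b1, W2, b2 = model
    h = [math.tanh(sum(w * v for w, v in zip(row, x)) + b) for row, b in zip(W1, b1)]
    z = [sum(w * v for w, v in zip(row, h)) + b for row, b in zip(W2, b2)]
    return h, z


def train_mlp(X, y, n_classes, hidden=8, epochs=200, lr=0.1, seed=0):
    # One tanh hidden layer, softmax output, plain per-sample gradient descent.
    rng = random.Random(seed)
    d = len(X[0])
    W1 = [[rng.uniform(-0.5, 0.5) for _ in range(d)] for _ in range(hidden)]
    W2 = [[rng.uniform(-0.5, 0.5) for _ in range(hidden)] for _ in range(n_classes)]
    b1, b2 = [0.0] * hidden, [0.0] * n_classes
    model = (W1, b1, W2, b2)  # lists are updated in place below
    for _ in range(epochs):
        for x, t in zip(X, y):
            h, z = forward(model, x)
            m = max(z)
            e = [math.exp(v - m) for v in z]
            total = sum(e)
            # Gradient of cross-entropy w.r.t. the logits: softmax minus one-hot.
            dz = [e[k] / total - (1.0 if k == t else 0.0) for k in range(n_classes)]
            # Backpropagate through tanh before W2 is modified.
            dh = [(1 - h[i] ** 2) * sum(dz[k] * W2[k][i] for k in range(n_classes))
                  for i in range(hidden)]
            for k in range(n_classes):
                b2[k] -= lr * dz[k]
                for i in range(hidden):
                    W2[k][i] -= lr * dz[k] * h[i]
            for i in range(hidden):
                b1[i] -= lr * dh[i]
                for j in range(d):
                    W1[i][j] -= lr * dh[i] * x[j]
    return model


def predict(model, x):
    _, z = forward(model, x)
    return max(range(len(z)), key=lambda k: z[k])


def select_best_subset(X_tr, y_tr, X_va, y_va, sizes):
    # S3: try the top-k ranked features for each k and keep the most accurate subset.
    order = rank_features(X_tr, y_tr)
    n_classes = len(set(y_tr))
    best_acc, best_cols = -1.0, None
    for k in sizes:
        cols = order[:k]
        tr = [[row[j] for j in cols] for row in X_tr]
        va = [[row[j] for j in cols] for row in X_va]
        model = train_mlp(tr, y_tr, n_classes)
        acc = sum(predict(model, x) == t for x, t in zip(va, y_va)) / len(y_va)
        if acc > best_acc:
            best_acc, best_cols = acc, cols
    return best_acc, best_cols


# Toy data (hypothetical): column 0 separates the classes, column 1 does not.
TOY_X = [[0.0, 0.3, 0.1], [0.1, 0.7, 0.4], [0.2, 0.3, 0.2], [0.1, 0.7, 0.3],
         [1.0, 0.3, 0.3], [0.9, 0.7, 0.5], [1.1, 0.3, 0.4], [1.0, 0.7, 0.6]]
TOY_Y = [0, 0, 0, 0, 1, 1, 1, 1]

if __name__ == "__main__":
    print(rank_features(TOY_X, TOY_Y))
    print(select_best_subset(TOY_X, TOY_Y, TOY_X, TOY_Y, sizes=[1, 2, 3]))
```

The demo reuses the toy data for both training and evaluation only to keep the example short. With real emotional feature parameters, the rows of `X` would be the per-utterance feature vectors from S1, and a separate held-out set would be passed as `X_va` and `y_va`.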
