Multi-view language recognition method based on unidirectional self-tagging auxiliary information

A multi-view technique based on auxiliary information, applied in speech recognition, speech analysis, instruments, and related fields. It addresses problems of existing multi-view language models, such as flat word-level auxiliary features, a single type of auxiliary feature, and the resulting failure to improve model performance.

Embodiment Construction

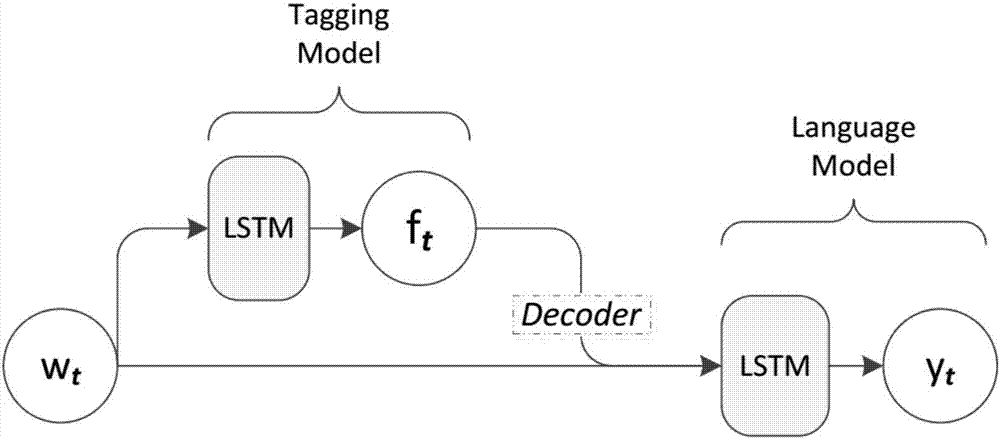

[0021] As shown in Figure 1, this embodiment includes a labeling (annotation) model and a multi-view language model. The labeling model generates word-level auxiliary vectors that contain only preceding information: it converts labeling features that carry bidirectional information about the input to be recognized into features that carry unidirectional information. The labeling model determines the classification label of each input word, and its output, together with the word vector, serves as the input to the language model, forming a multi-view structure.
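
The data flow described above can be illustrated with a minimal sketch. It is not the patented implementation: `toy_labeler_step` and `embed` are hypothetical stand-ins for the trained labeling model and the word-vector lookup, and the dimensions are arbitrary. The sketch only shows that the auxiliary vector at each step depends on the current and preceding words, and that it is concatenated with the word vector to form the language model input.

```python
def toy_labeler_step(state, word_id, num_labels):
    """Hypothetical stand-in for one step of a unidirectional labeling model.

    `state` summarises only the words seen so far. Returns the updated
    state and a one-hot label distribution for the current word.
    """
    new_state = (state * 31 + word_id + 1) % 1000
    probs = [0.0] * num_labels
    probs[new_state % num_labels] = 1.0
    return new_state, probs


def embed(word_id, dim):
    """Hypothetical deterministic word vector (a real model would learn this)."""
    return [(((word_id + 1) * (k + 1)) % 7) / 7.0 for k in range(dim)]


def multi_view_inputs(word_ids, num_labels=3, dim=4):
    """Build the per-step language model inputs: word vector + auxiliary vector."""
    state = 0
    views = []
    for word_id in word_ids:
        # The labeler sees the sequence left to right, so its output
        # carries no information about later words.
        state, label_probs = toy_labeler_step(state, word_id, num_labels)
        views.append(embed(word_id, dim) + label_probs)
    return views


a = multi_view_inputs([2, 0, 1])
b = multi_view_inputs([2, 0, 3])
```

Here `a` and `b` differ only in their last word, so their first two input vectors are identical. This is the unidirectional property the paragraph describes: changing a later word cannot alter the auxiliary vector of an earlier one.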

[0022] The information to be recognized, w_t, is a one-hot vector: a one-dimensional array in which exactly one position is 1 and all other positions are 0, where t is the current time step. It is fed simultaneously to the labeling model and the language model.
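
As a small illustration of this input encoding, the sketch below builds one-hot vectors for a short word sequence. The vocabulary and the helper name `one_hot` are assumptions made for the example; the source does not specify a vocabulary or its size.

```python
def one_hot(index, vocab_size):
    """Return a list of length `vocab_size` with 1 at `index` and 0 elsewhere."""
    if not 0 <= index < vocab_size:
        raise ValueError("index out of range")
    vec = [0] * vocab_size
    vec[index] = 1
    return vec


# Hypothetical toy vocabulary, for illustration only.
vocab = ["the", "cat", "sat", "<unk>"]
word_to_id = {word: i for i, word in enumerate(vocab)}

sentence = ["the", "cat", "sat"]
# One vector w_t per time step t; the same vector would be passed to both
# the labeling model and the language model.
inputs = [one_hot(word_to_id[word], len(vocab)) for word in sentence]
```

Each entry of `inputs` has the same length as the vocabulary, with a single 1 marking the position of the word at that time step.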

[0023] The labeling model adopts a recurrent neural network (RNN) with long short-term memory (LSTM) units...
