Adaptive simultaneous sparse representation-based robust target tracking method

A sparse representation and target tracking technology, applied in image data processing, instruments, character and pattern recognition, etc. It addresses the problems of demanding feature extraction requirements, weakened classifier performance, and inaccurate search. Its effects are to reduce redundant template vectors, reduce the influence of noise, and reduce dimensionality.


Embodiment Construction

[0049] In the following, the robust target tracking method based on adaptive simultaneous sparse representation of the present invention will be further explained in conjunction with specific embodiments.

[0050] The robust target tracking method based on adaptive simultaneous sparse representation includes the following steps:

[0051] S1: adaptively establish a simultaneous sparse tracking model according to the magnitude of the Laplace noise energy.

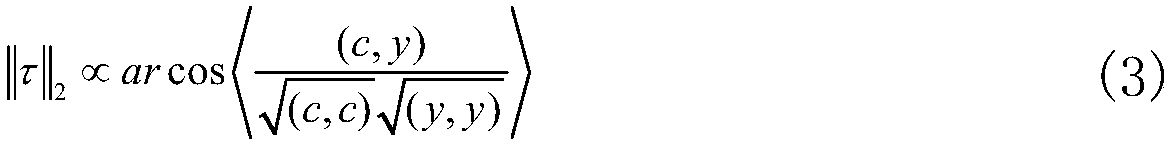

[0052] Compare the mean Laplace noise $\|S\|_2$ with the given noise energy threshold $\tau$ and, based on the result of the comparison, adaptively establish the simultaneous sparse tracking model:

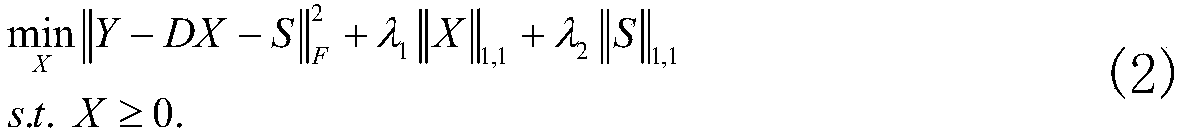

[0053] When $\|S\|_2 \le \tau$, the simultaneous sparse tracking model is:

[0054]

[0055] When $\|S\|_2 > \tau$, the simultaneous sparse tracking model is:

[0056]
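The comparison in [0052] to [0056] amounts to a two-way branch on the noise energy. The plain-Python sketch below illustrates only that selection step. The two model formulas are not reproduced in this text, so the labels `"low_noise_model"` and `"high_noise_model"`, the function names, and the treatment of $S$ as a flat sequence of values (so that $\|S\|_2$ is an ordinary Euclidean norm) are assumptions made for illustration, not the patent's own definitions.

```python
import math
from typing import Sequence


def noise_energy(s: Sequence[float]) -> float:
    """Return the l2 norm ||S||_2 of the noise term.

    Assumption: S is supplied as a flat sequence of noise values.
    """
    return math.sqrt(sum(v * v for v in s))


def select_model(s: Sequence[float], tau: float) -> str:
    """Choose a tracking model by comparing ||S||_2 with the threshold tau.

    The returned labels are hypothetical placeholders for the two models
    in [0054] and [0056], whose formulas are not shown in this text.
    """
    if noise_energy(s) <= tau:
        return "low_noise_model"   # case ||S||_2 <= tau
    return "high_noise_model"      # case ||S||_2 > tau


if __name__ == "__main__":
    print(select_model([0.1, -0.2, 0.05], tau=1.0))
    print(select_model([3.0, -4.0], tau=1.0))
```

Keeping the norm computation separate from the branch means that a different reading of $\|S\|_2$ (for example, a matrix norm) could be swapped in without touching the selection logic.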

[0057] Here, $D=[T,I]$ denotes the tracking template, $I$ denotes the trivial template, and the image set of the given target templates is

[0058] $T=[T_1,T_2,\ldots,T_n$...
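As a rough illustration of the definition $D=[T,I]$, the following plain-Python sketch concatenates a set of vectorised target templates with a block of trivial templates. The text does not specify the form of $I$; taking it to be the identity matrix (one trivial template per pixel) is an assumption here, as are the function name `build_dictionary` and the list-of-lists matrix layout.

```python
from typing import List

Matrix = List[List[float]]


def build_dictionary(templates: List[List[float]]) -> Matrix:
    """Concatenate target templates T (as columns) with trivial templates I.

    `templates` holds n vectorised template images, each of length d.
    Assumption: the trivial templates form the d x d identity matrix.
    Returns D = [T, I] as a row-major d x (n + d) matrix.
    """
    if not templates:
        raise ValueError("at least one target template is required")
    d = len(templates[0])
    if any(len(t) != d for t in templates):
        raise ValueError("all templates must have the same length")
    rows: Matrix = []
    for i in range(d):
        t_part = [t[i] for t in templates]                   # row i of T
        i_part = [1.0 if j == i else 0.0 for j in range(d)]  # row i of I
        rows.append(t_part + i_part)
    return rows


if __name__ == "__main__":
    # Three hypothetical 2-pixel templates, used only to show the shape of D.
    for row in build_dictionary([[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]]):
        print(row)
```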
