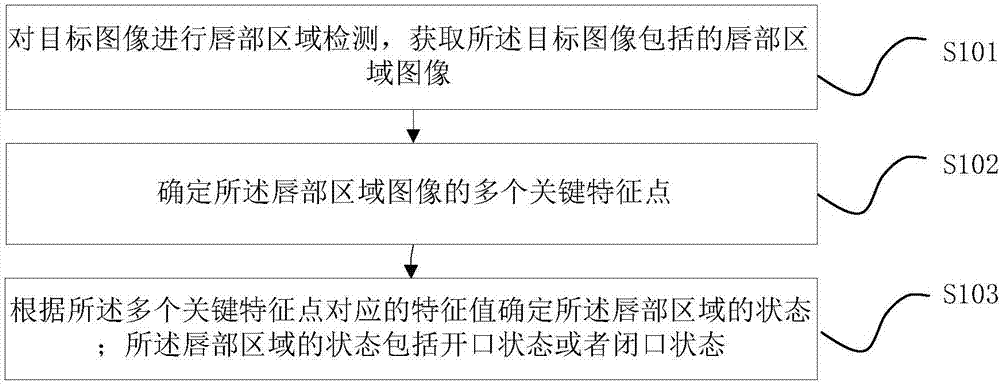

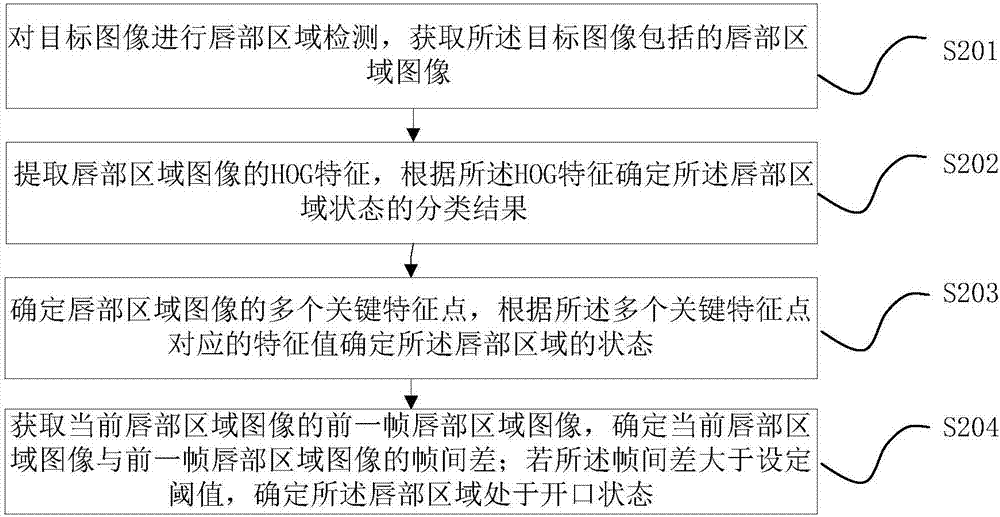

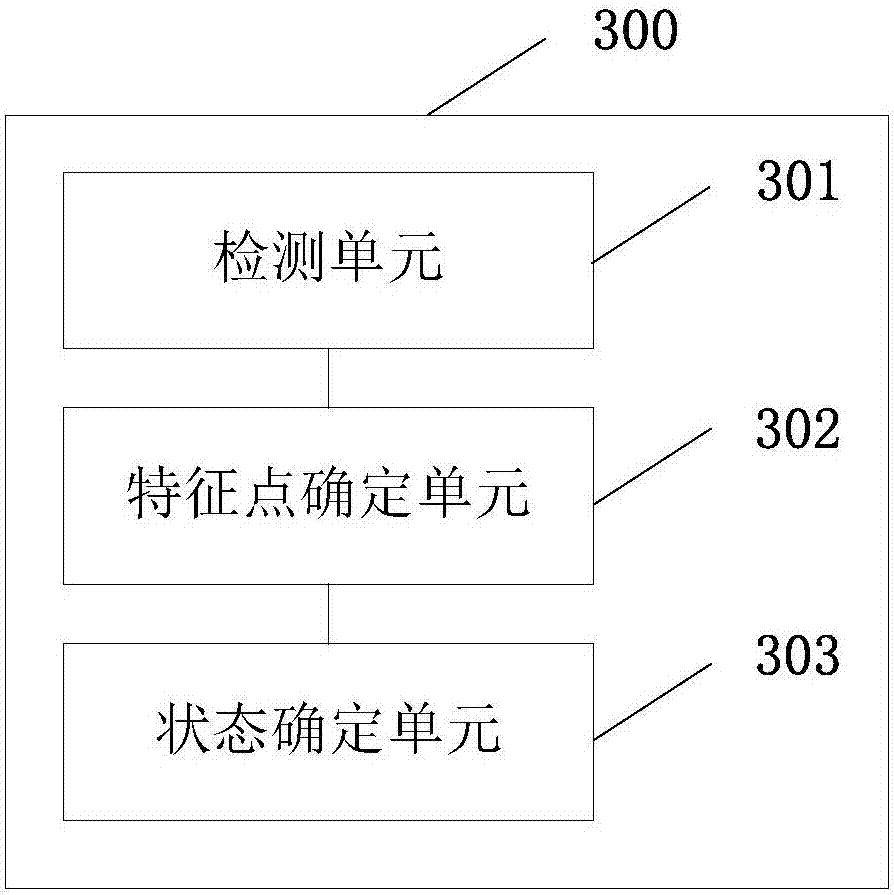

Method and device for detecting lip state

A lip state detection technology in the computer field. It addresses interference from noise data and low recognition efficiency, and achieves high recognition accuracy, reduced interference, and removal of the influence of noise data.


Embodiment Construction

[0016] In implementing the present invention, the applicant found that lip state detection can be performed by tracking changes in received voice data or by adding external sensors such as infrared sensors. However, these approaches require additional equipment or sensors and cannot detect the lip state from purely visual image information.

[0017] Embodiments of the present invention provide a lip state detection method and device that can effectively detect the state of the lip region with high recognition accuracy and low cost. They can also effectively remove the influence of noise data, such as silent frames, on lip language recognition results, thereby reducing interference and improving data processing efficiency.
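This excerpt does not disclose how the lip state itself is determined. As a rough illustration of how a per-frame lip state could be used to discard silent frames before lip language recognition, the sketch below swaps in a common heuristic, the mouth aspect ratio (lip opening height divided by mouth width), computed from four hypothetical lip landmarks. The function names, the threshold of 0.15, and the minimum silent run of 3 frames are all illustrative assumptions, not the patented method.

```python
from typing import List, Sequence, Tuple

Point = Tuple[float, float]  # (x, y) in image coordinates, y grows downward


def mouth_aspect_ratio(upper: Point, lower: Point, left: Point, right: Point) -> float:
    """Hypothetical openness score: vertical lip gap divided by mouth width."""
    height = abs(lower[1] - upper[1])
    width = abs(right[0] - left[0])
    return height / width if width > 0 else 0.0


def speaking_frame_indices(
    ratios: Sequence[float],
    threshold: float = 0.15,   # assumed: below this the lips count as closed
    min_silent_run: int = 3,   # assumed: shorter closures are kept as speech
) -> List[int]:
    """Return indices of frames to keep for lip language recognition.

    Frames whose ratio is at or above `threshold` are treated as open (speaking).
    A run of consecutive closed frames is dropped as silence only if it lasts at
    least `min_silent_run` frames; brief closures (for example during bilabial
    sounds such as /p/, /b/, /m/) are kept.
    """
    keep: List[int] = []
    i, n = 0, len(ratios)
    while i < n:
        if ratios[i] >= threshold:
            keep.append(i)
            i += 1
            continue
        # Find the end of this run of closed-lip frames.
        j = i
        while j < n and ratios[j] < threshold:
            j += 1
        if j - i < min_silent_run:
            keep.extend(range(i, j))  # short closure: part of speech, keep it
        i = j  # long closure: treated as a silent segment and skipped
    return keep


if __name__ == "__main__":
    ratios = [0.05, 0.04, 0.06, 0.30, 0.10, 0.35, 0.02, 0.03, 0.01, 0.01]
    print(speaking_frame_indices(ratios))  # [3, 4, 5]
```

In this example the leading three closed frames and the trailing four closed frames are dropped as silence, while the single closed frame at index 4 is kept because it sits inside a speaking segment.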

[0018] In order to enable those skilled in the art to better understand the technical solutions in the present invention, the technical solutions in the embodiments of the present invention will be clearly and completely described below in co...

