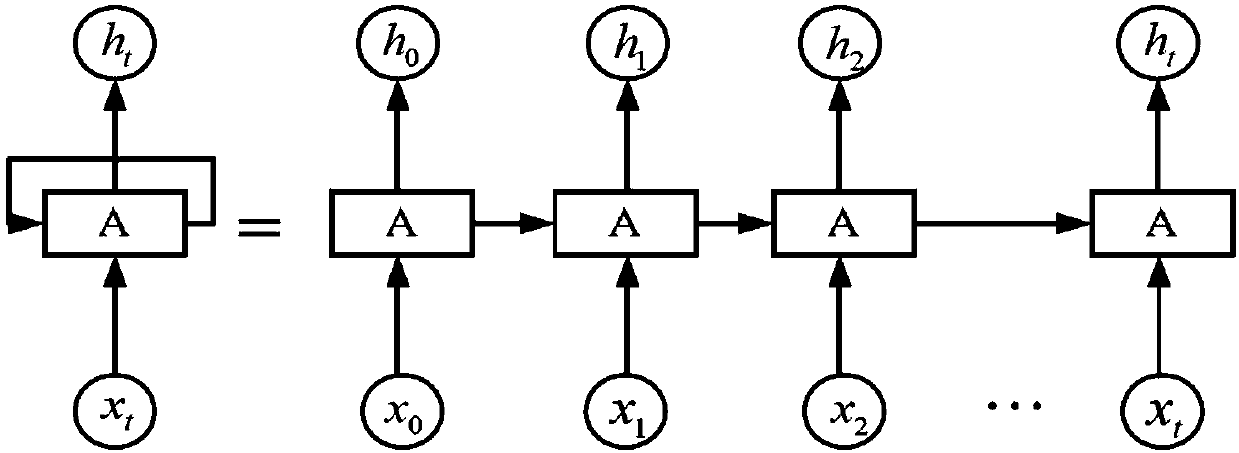

GRU-based recurrent neural network multi-label learning method

A multi-label learning method based on a GRU recurrent neural network. It addresses the problems of complex structure, vanishing gradients, and the inability to effectively learn the basic characteristics of samples, and achieves a simple structure and improved accuracy.


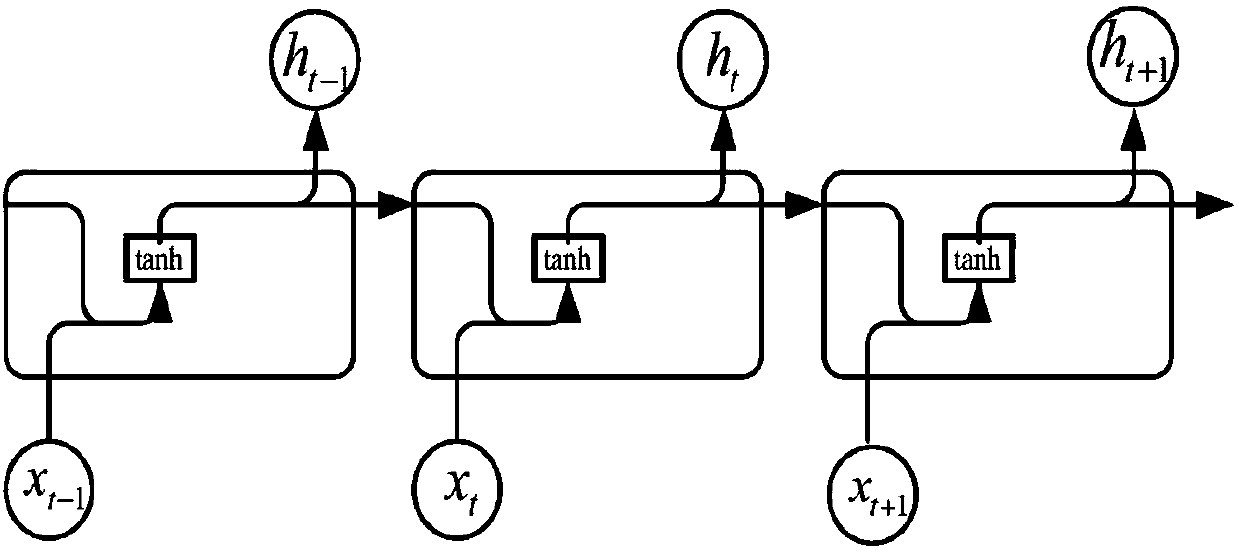


Embodiment Construction

[0033] To make the technical solution of the present invention clearer, it is described in further detail below with reference to the accompanying drawings. It should be understood that the specific embodiments described here only serve to explain the present invention and are not intended to limit it.

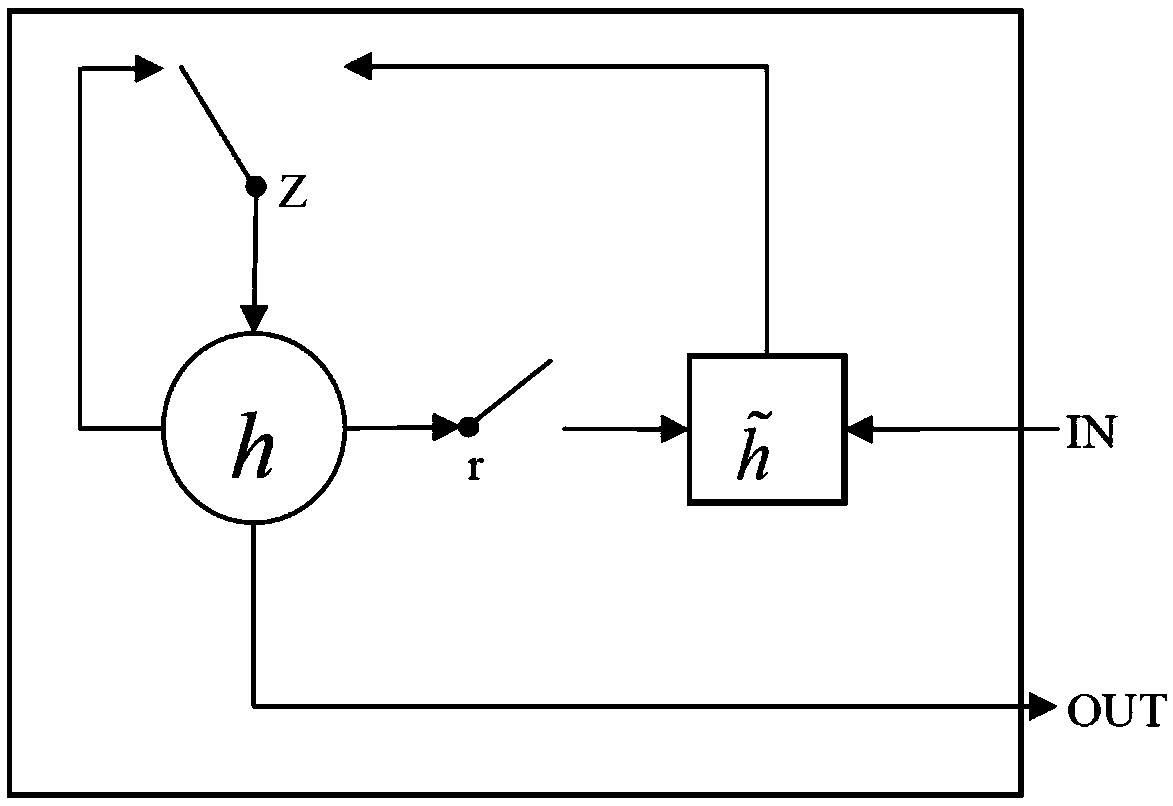

[0034] Figure 4 is a schematic diagram of the GRU-based RNN multi-label classifier of the present invention. The classifier produces the context vector $h_T$, which is then passed through the softmax layer to give the output. The multi-label vector $y_i$ is then used to construct the loss function $L_i$ of the i-th sample-label pair. The specific implementation process is as follows:

[0035] Suppose the set of sample-label pairs contains N training samples, where $y_i$ is the multi-label vector of sample $x_i$. Each sample $x_i$ is normalized so that its values lie in [0, 1]: first $x_i$ is zero-centered, and then variance stan...
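The classifier outlined in [0034] can be illustrated with a minimal pure-Python sketch: a GRU is run over one sample to obtain the final hidden state (the context vector), a softmax layer turns it into an output over the labels, and a per-sample loss is computed against the multi-label vector. Several details are assumptions not given in the text above: the standard GRU gate equations, the treatment of a sample as a short sequence of feature vectors, the cross-entropy form of the loss, and all names and sizes (`init_gru`, `gru_step`, `forward`, `sample_loss`, the dimensions, the random initialization). It is a sketch of the general technique, not the patented implementation.

```python
import math
import random

def matvec(W, v):
    # Matrix-vector product on plain Python lists.
    return [sum(w * x for w, x in zip(row, v)) for row in W]

def sigmoid(a):
    return 1.0 / (1.0 + math.exp(-a))

def init_matrix(rows, cols, rng, scale=0.1):
    return [[rng.uniform(-scale, scale) for _ in range(cols)] for _ in range(rows)]

def init_gru(input_dim, hidden_dim, n_labels, seed=0):
    # Hypothetical parameter layout: update gate z, reset gate r, candidate h,
    # plus an output (softmax) layer Wo, bo.
    rng = random.Random(seed)
    p = {}
    for gate in ("z", "r", "h"):
        p["W" + gate] = init_matrix(hidden_dim, input_dim, rng)
        p["U" + gate] = init_matrix(hidden_dim, hidden_dim, rng)
        p["b" + gate] = [0.0] * hidden_dim
    p["Wo"] = init_matrix(n_labels, hidden_dim, rng)
    p["bo"] = [0.0] * n_labels
    return p

def gru_step(p, x_t, h_prev):
    # One GRU time step using the standard gate equations (assumed).
    def pre(gate, h_in):
        a = matvec(p["W" + gate], x_t)
        b = matvec(p["U" + gate], h_in)
        return [ai + bi + ci for ai, bi, ci in zip(a, b, p["b" + gate])]
    z = [sigmoid(v) for v in pre("z", h_prev)]            # update gate
    r = [sigmoid(v) for v in pre("r", h_prev)]            # reset gate
    gated = [ri * hi for ri, hi in zip(r, h_prev)]        # r * h_{t-1}
    h_tilde = [math.tanh(v) for v in pre("h", gated)]     # candidate state
    return [(1 - zi) * hp + zi * ht for zi, hp, ht in zip(z, h_prev, h_tilde)]

def softmax(logits):
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(v - m) for v in logits]
    s = sum(exps)
    return [e / s for e in exps]

def forward(p, sequence):
    # Run the GRU over the sequence; the last hidden state is the context vector h_T.
    h = [0.0] * len(p["bz"])
    for x_t in sequence:
        h = gru_step(p, x_t, h)
    logits = [a + b for a, b in zip(matvec(p["Wo"], h), p["bo"])]
    return h, softmax(logits)

def sample_loss(y_hat, y):
    # Assumed loss: cross-entropy between the softmax output and the 0/1 label vector.
    return -sum(yk * math.log(pk) for yk, pk in zip(y, y_hat))

params = init_gru(input_dim=3, hidden_dim=4, n_labels=5)
x_i = [[0.1, 0.5, 0.9], [0.3, 0.2, 0.7]]  # one sample, already scaled to [0, 1]
y_i = [1, 0, 1, 0, 0]                     # its multi-label vector
h_T, y_hat = forward(params, x_i)
L_i = sample_loss(y_hat, y_i)
```

Training would minimize the sum of the per-sample losses over all N samples with a gradient-based optimizer; that step is omitted here because the excerpt does not describe it.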
