GRU (Gated Recurrent Unit)-CRF (Conditional Random Fields) conference name recognition method based on a language model

A GRU-CRF and language-model technique, applied to named entity recognition by combining a GRU with a conditional random field, to improve recognition performance and produce more reasonable and more effective label sequences.


Embodiment Construction

[0025] To make the purpose, technical solution, and features of the present invention clearer, the specific implementation of the method is described in further detail below.

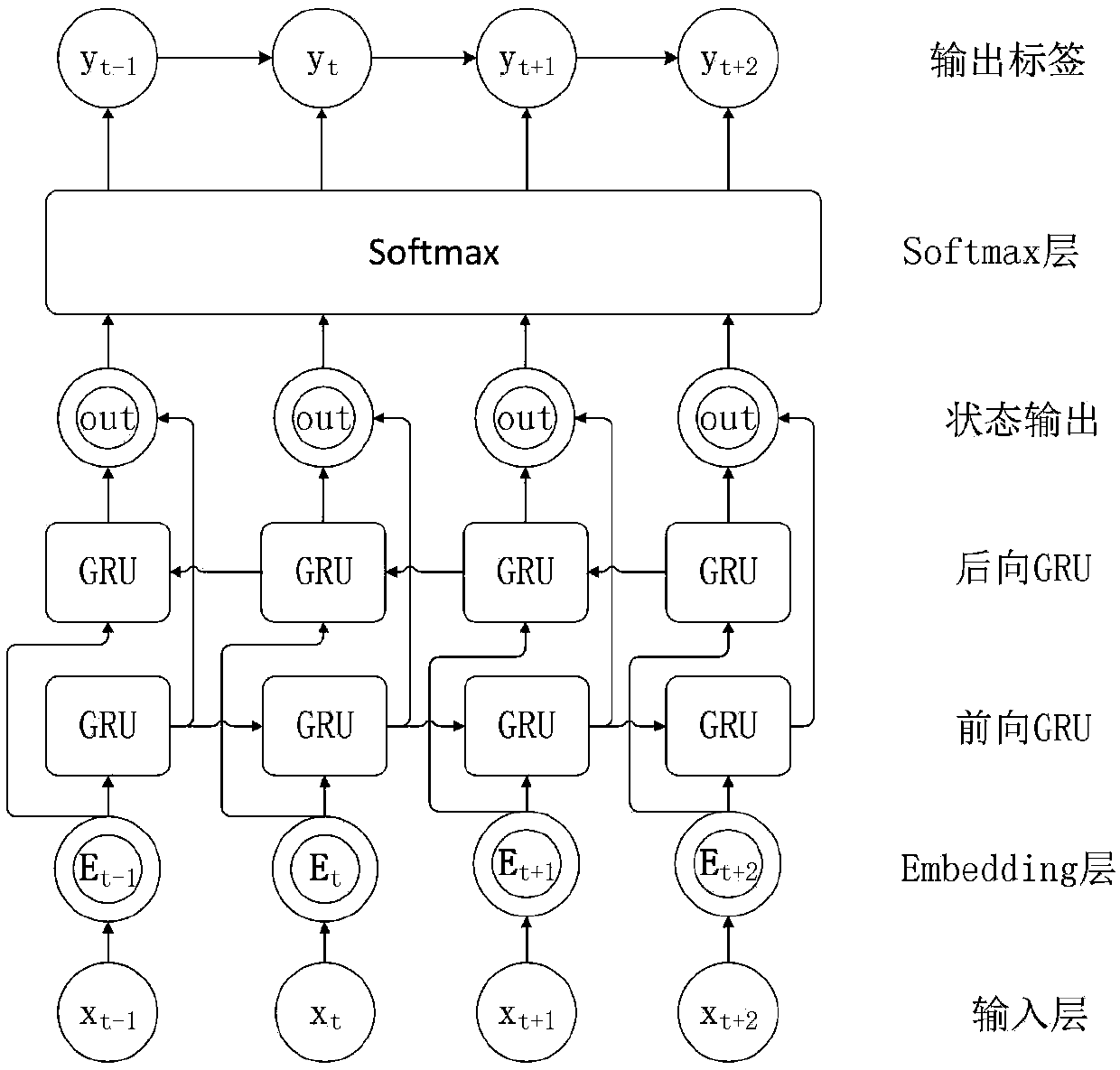

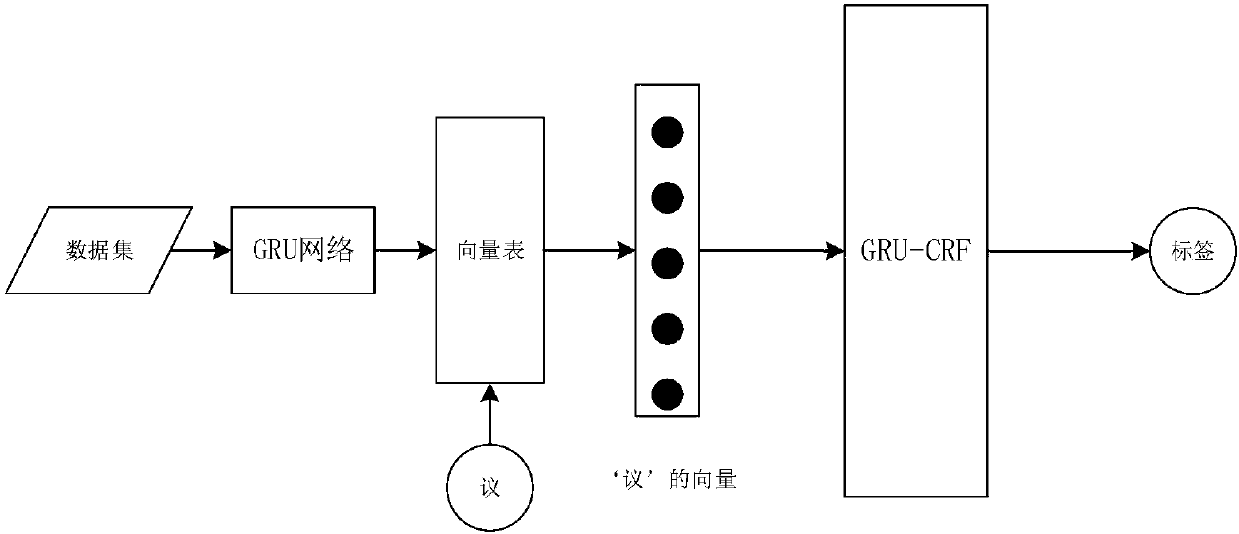

[0026] Both the recognition model and the language model of the present invention use a GRU, and the recognition model combines the GRU with a CRF. Compared with other methods, the present invention has the following advantages:

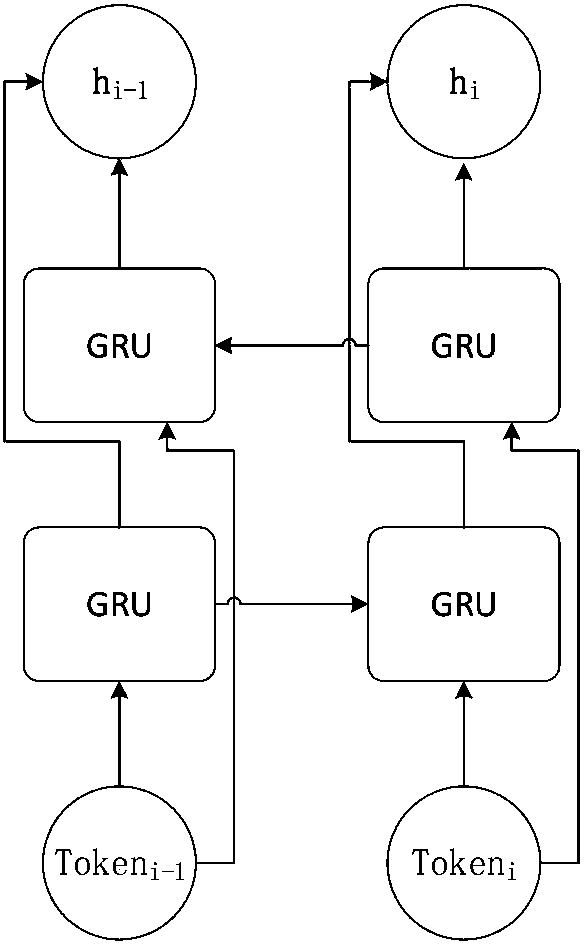

[0027] As a variant of the recurrent neural network (RNN), the GRU retains the RNN's advantages and is well suited to sequence data such as natural language. In addition, because the GRU uses two gates (update and reset) where an LSTM uses three gates plus a separate memory cell, it has fewer parameters; in theory it is therefore more computationally efficient than an LSTM and requires relatively less training data.
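The parameter argument above can be made concrete with a small sketch. The pure-Python GRU cell below follows one common formulation (update gate z, reset gate r, candidate state n, one bias per gate); the class and helper names are illustrative assumptions rather than the patent's implementation, and frameworks differ in details such as where the reset gate is applied and whether z weights the old or the new state. The two counting helpers show that, for the same input and hidden sizes, a GRU has three weight blocks to an LSTM's four, i.e. three quarters as many parameters.

```python
import math
import random
from typing import List

Vector = List[float]
Matrix = List[List[float]]


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


def matvec(m: Matrix, v: Vector) -> Vector:
    """Matrix-vector product on plain Python lists."""
    return [sum(w * x for w, x in zip(row, v)) for row in m]


def rand_matrix(rows: int, cols: int, rng: random.Random) -> Matrix:
    return [[rng.uniform(-0.1, 0.1) for _ in range(cols)] for _ in range(rows)]


class GRUCell:
    """Illustrative GRU cell: update gate z, reset gate r, candidate state n."""

    def __init__(self, input_size: int, hidden_size: int, seed: int = 0) -> None:
        rng = random.Random(seed)
        # One input matrix W, recurrent matrix U and bias b for each of z, r, n
        self.W = {g: rand_matrix(hidden_size, input_size, rng) for g in "zrn"}
        self.U = {g: rand_matrix(hidden_size, hidden_size, rng) for g in "zrn"}
        self.b = {g: [0.0] * hidden_size for g in "zrn"}

    def _affine(self, gate: str, x: Vector, h: Vector) -> Vector:
        # Computes W_g x + U_g h + b_g
        return [a + c + d for a, c, d in
                zip(matvec(self.W[gate], x), matvec(self.U[gate], h), self.b[gate])]

    def step(self, x: Vector, h: Vector) -> Vector:
        z = [sigmoid(v) for v in self._affine("z", x, h)]
        r = [sigmoid(v) for v in self._affine("r", x, h)]
        # The reset gate scales the previous state before it enters the candidate
        reset_h = [ri * hi for ri, hi in zip(r, h)]
        n = [math.tanh(v) for v in self._affine("n", x, reset_h)]
        # The update gate interpolates between the previous state and the candidate
        return [(1.0 - zi) * hi + zi * ni for zi, hi, ni in zip(z, h, n)]


def gru_param_count(input_size: int, hidden_size: int) -> int:
    # Three blocks (z, r, n), each with W (H x I), U (H x H) and one bias (H)
    return 3 * (hidden_size * (input_size + hidden_size) + hidden_size)


def lstm_param_count(input_size: int, hidden_size: int) -> int:
    # Four blocks (input, forget, output gates and cell candidate), same shapes
    return 4 * (hidden_size * (input_size + hidden_size) + hidden_size)


if __name__ == "__main__":
    cell = GRUCell(input_size=4, hidden_size=3)
    h = [0.0, 0.0, 0.0]
    for x in ([1.0, 0.0, 0.0, 0.0], [0.0, 1.0, 0.0, 0.0]):
        h = cell.step(x, h)
    print("hidden state:", h)
    print("GRU params:", gru_param_count(100, 128))
    print("LSTM params:", lstm_param_count(100, 128))
```

With `input_size=100` and `hidden_size=128`, the helpers give 87,936 parameters for the GRU and 117,248 for the LSTM. Libraries such as PyTorch keep two bias vectors per gate, so their reported counts are slightly higher, but the 3:4 ratio is unchanged.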

[0028] The GRU automatically learns both low-level features and high-level concepts, without tedious manual work such as feature engineering or encoding domain knowledge, which makes this an end-to-end recognition method.
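Paragraph [0026] states that the recognition model combines the GRU with a CRF, and the summary credits this with producing more reasonable label sequences. In a typical GRU-CRF tagger, the GRU emits a score for every tag at every token, the CRF adds learned tag-to-tag transition scores, and decoding picks the single highest-scoring tag sequence with the Viterbi algorithm. The sketch below shows only that decoding step, under assumptions not taken from the patent: a hypothetical BIO tag set for conference names (`O`, `B-CONF`, `I-CONF`), hand-set rather than trained scores, and a large negative transition score standing in for forbidden moves. Training the CRF (forward algorithm and log-likelihood loss) is omitted.

```python
from typing import List, Sequence, Tuple

NEG_INF = -1e4  # large penalty standing in for a forbidden transition


def viterbi_decode(
    emissions: Sequence[Sequence[float]],    # [T][K] per-token tag scores (e.g. GRU outputs)
    transitions: Sequence[Sequence[float]],  # [K][K] score for moving from tag i to tag j
    start: Sequence[float],                  # [K] score for starting a sentence in each tag
) -> Tuple[float, List[int]]:
    """Return the best total score and tag-index path of a linear-chain CRF."""
    num_tags = len(start)
    # score[j]: best score of any tag path that ends in tag j at the current token
    score = [start[j] + emissions[0][j] for j in range(num_tags)]
    backpointers: List[List[int]] = []
    for t in range(1, len(emissions)):
        new_score: List[float] = []
        pointers: List[int] = []
        for j in range(num_tags):
            best_i = max(range(num_tags), key=lambda i: score[i] + transitions[i][j])
            new_score.append(score[best_i] + transitions[best_i][j] + emissions[t][j])
            pointers.append(best_i)
        score = new_score
        backpointers.append(pointers)
    # Pick the best final tag, then follow back-pointers to recover the path
    best_last = max(range(num_tags), key=lambda j: score[j])
    path = [best_last]
    for pointers in reversed(backpointers):
        path.append(pointers[path[-1]])
    path.reverse()
    return score[best_last], path


if __name__ == "__main__":
    tags = ["O", "B-CONF", "I-CONF"]  # hypothetical BIO tags for conference names
    O, B, I = range(3)
    transitions = [[0.0] * 3 for _ in range(3)]
    transitions[O][I] = NEG_INF  # I-CONF may not directly follow O
    start = [0.0, 0.0, NEG_INF]  # a sentence may not start inside an entity
    emissions = [
        [2.0, 0.5, 0.0],
        [0.3, 1.0, 1.2],  # per-token argmax picks I-CONF here, right after O
        [0.1, 0.0, 1.5],
    ]
    greedy = [max(range(3), key=lambda j: row[j]) for row in emissions]
    best_score, path = viterbi_decode(emissions, transitions, start)
    print("greedy :", [tags[k] for k in greedy])  # ['O', 'I-CONF', 'I-CONF']
    print("viterbi:", [tags[k] for k in path])    # ['O', 'B-CONF', 'I-CONF']
```

Choosing each token's best tag independently yields the invalid sequence `O, I-CONF, I-CONF`, whereas Viterbi decoding over the transition scores returns `O, B-CONF, I-CONF` with a total score of 4.5. This is the kind of label-sequence consistency a CRF layer adds on top of per-token GRU scores.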

[0029] Named entity r...
