Dual model fusion-based video behavior segmentation method and apparatus

A video segmentation and behavior recognition technology, applied in character and pattern recognition, instruments, computer components, and related fields, that addresses problems such as the inability to recognize semantics.


Embodiment Construction

[0045] From the following detailed description of specific embodiments of the application, taken in conjunction with the accompanying drawings, those skilled in the art will better understand the above and other objectives, advantages, and features of the application.

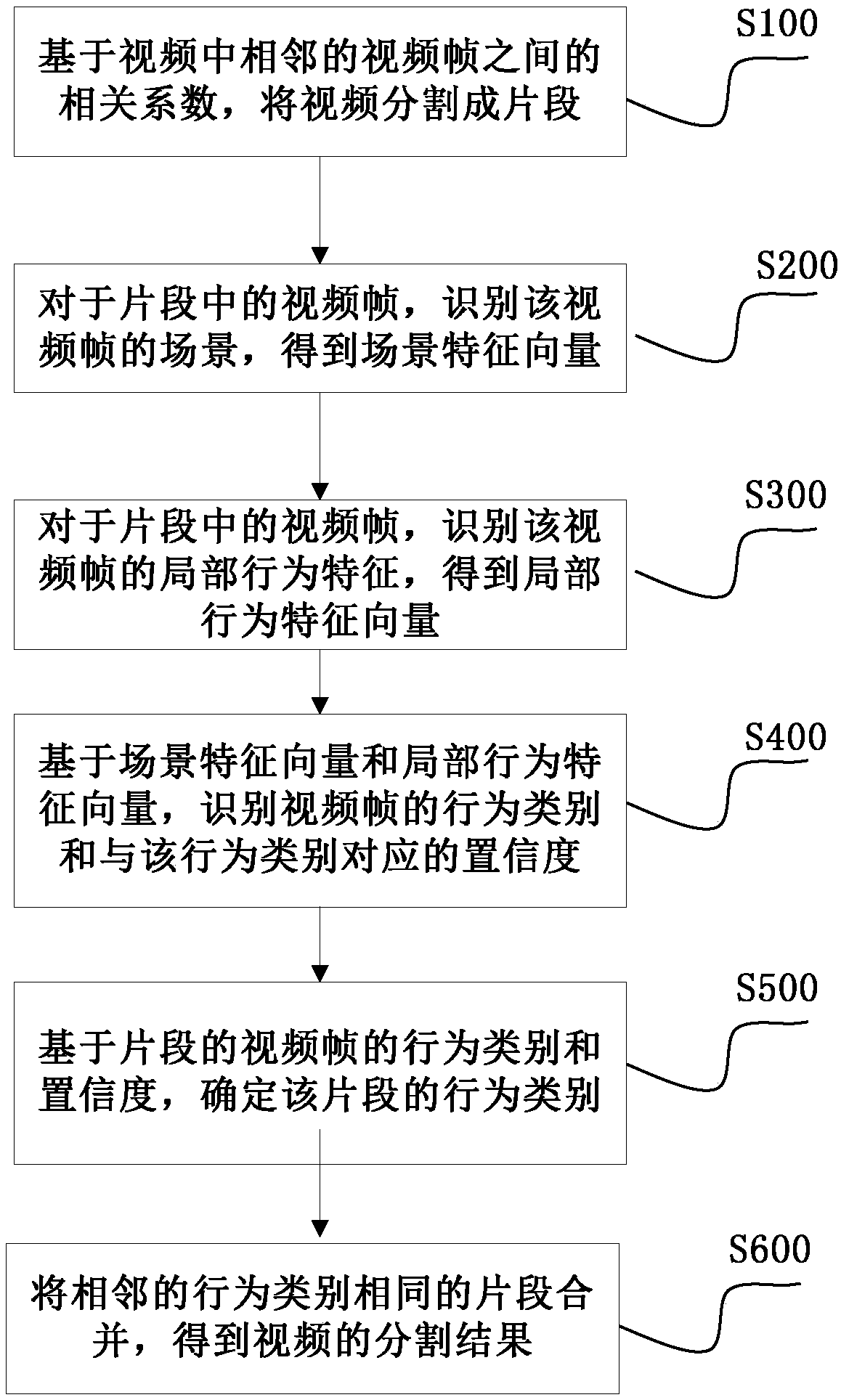

[0046] Embodiments of the present application provide a video segmentation method. Figure 1 is a schematic flowchart of one embodiment of the video segmentation method according to the present application. The method may include:

[0047] S100, segmentation step: divide the video into segments based on the correlation coefficients between adjacent video frames in the video;

[0048] S200, scene recognition step: for a video frame in the segment, recognize the scene of the video frame to obtain a scene feature vector;

[0049] S300, local behavior feature recognition step: for a video frame in the segment, recognize the local behavior features of the video frame to obtain a local behavior feature vector;...
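As an illustration of step S100 only, the following is a minimal sketch of cutting a video where the correlation between adjacent frames drops. The text above does not say which correlation coefficient or cut-off is used, so the Pearson coefficient, the `threshold` value of 0.5, the flattened grayscale frame representation, and the names `pearson` and `split_into_segments` are all assumptions made for this example.

```python
from math import sqrt
from typing import List, Sequence

# Assumption: each frame is a flat sequence of grayscale intensities.
Frame = Sequence[float]


def pearson(a: Frame, b: Frame) -> float:
    """Pearson correlation coefficient between two equally sized frames."""
    n = len(a)
    mean_a = sum(a) / n
    mean_b = sum(b) / n
    cov = sum((x - mean_a) * (y - mean_b) for x, y in zip(a, b))
    var_a = sum((x - mean_a) ** 2 for x in a)
    var_b = sum((y - mean_b) ** 2 for y in b)
    if var_a == 0 or var_b == 0:
        # A constant frame has no defined correlation; this sketch treats
        # identical frames as fully correlated and anything else as uncorrelated.
        return 1.0 if list(a) == list(b) else 0.0
    return cov / sqrt(var_a * var_b)


def split_into_segments(frames: List[Frame], threshold: float = 0.5) -> List[List[int]]:
    """Group frame indices into segments.

    A new segment starts whenever the correlation between a frame and its
    predecessor falls below `threshold` (an assumed, illustrative value).
    """
    if not frames:
        return []
    segments = [[0]]
    for i in range(1, len(frames)):
        if pearson(frames[i - 1], frames[i]) < threshold:
            segments.append([i])      # low correlation: start a new segment
        else:
            segments[-1].append(i)    # high correlation: extend current segment
    return segments


if __name__ == "__main__":
    toy_frames = [
        [1, 2, 3, 4],
        [2, 3, 4, 5],   # strongly correlated with the previous frame
        [4, 3, 2, 1],   # anti-correlated with the previous frame: cut here
        [5, 4, 3, 2],
    ]
    print(split_into_segments(toy_frames))
```

On the toy input, frames 0 and 1 stay together and a cut is placed before frame 2. In practice the frames would come from a video decoder and the threshold would need tuning; neither is specified in the text above.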
