Fusion network driving environment perception model based on convolution and atrous convolution structure

A fusion network for driving environment perception, applied in the field of advanced driver assistance. It addresses the problems of heavy computation, high cost, and repeated calculation.

Active Publication Date: 2022-06-10

SOUTHEAST UNIV

Cites: 4; Cited by: 0


AI Technical Summary

Problems solved by technology

[0005] To solve the above problems, the present invention provides a fusion network driving environment perception model based on a convolution and atrous convolution structure. It addresses the following shortcomings of current driving environment perception models: heavy computation; repeated calculation; single-task models that each solve only one problem; semantic segmentation models that place excessive demands on semantic segmentation datasets (pixel-level labeling is too costly); and the inability to complete multiple driving environment perception tasks at the same time. To achieve this goal, the present invention provides a fusion network driving environment perception model based on a convolution and atrous convolution structure, whose specific steps are as follows, characterized in that:

Method used



Embodiment Construction

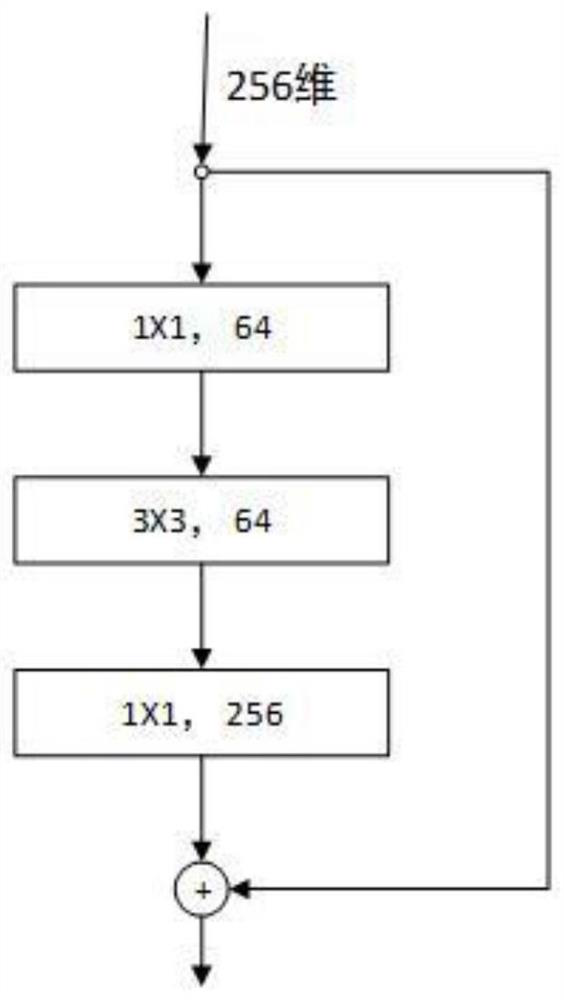

[0036] (5) block (1×1, 256; 3×3, 256; 1×1, 1024), where the first convolution layer has a stride of 2
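Block (5) lists three stacked convolutions as (kernel, output channels) pairs. The sketch below traces the tensor shape through the block and counts its convolution weights. The 64 × 64 × 512 input, the padding of 1 on the 3 × 3 layer, and the helper names (`ConvSpec`, `block_summary`) are assumptions for illustration; biases, normalization, and any residual shortcut are ignored.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass(frozen=True)
class ConvSpec:
    kernel: int        # square kernel size k (k x k)
    out_channels: int
    stride: int = 1
    padding: int = 0


def conv_out_size(size: int, kernel: int, stride: int, padding: int) -> int:
    """Standard output-size formula for an undilated convolution."""
    return (size + 2 * padding - kernel) // stride + 1


def block_summary(
    h: int, w: int, c_in: int, layers: List[ConvSpec]
) -> Tuple[Tuple[int, int, int], int]:
    """Return the output (H, W, C) of stacked convolutions and their weight count.

    Biases, batch normalization and the residual shortcut are not counted.
    """
    weights = 0
    for layer in layers:
        h = conv_out_size(h, layer.kernel, layer.stride, layer.padding)
        w = conv_out_size(w, layer.kernel, layer.stride, layer.padding)
        weights += layer.kernel * layer.kernel * c_in * layer.out_channels
        c_in = layer.out_channels
    return (h, w, c_in), weights


# Block (5): 1x1, 256 -> 3x3, 256 -> 1x1, 1024, stride 2 in the first layer.
BLOCK_5 = [
    ConvSpec(kernel=1, out_channels=256, stride=2),
    ConvSpec(kernel=3, out_channels=256, padding=1),  # padding=1 is assumed
    ConvSpec(kernel=1, out_channels=1024),
]

# Hypothetical 64 x 64 x 512 input feature map.
print(block_summary(64, 64, 512, BLOCK_5))  # ((32, 32, 1024), 983040)
```

With stride 2 in the first 1 × 1 layer, the block halves the spatial resolution while expanding to 1024 channels; under the assumed input, most of its weights sit in the 3 × 3 layer.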

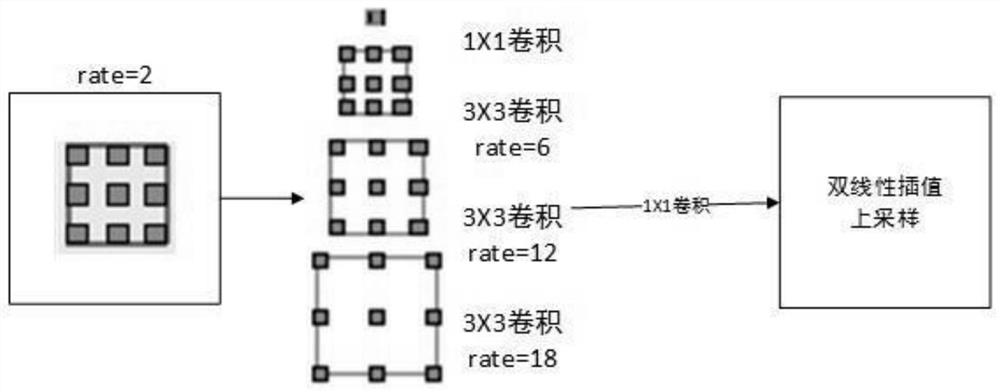

[0044] Here, dilate-conv2d denotes atrous (dilated) convolution. Atrous convolution uses a sparse convolution kernel, as shown in Figure 3, the atrous convolution…
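A kernel with dilation rate d places its taps d pixels apart, so a k × k kernel covers a span of d(k - 1) + 1 pixels per side without adding weights. The pure-Python sketch below illustrates this. `dilated_conv2d` and `effective_kernel_size` are hypothetical helpers, not the patent's `dilate-conv2d` operator, and they compute a "valid" (unpadded) cross-correlation, as deep-learning libraries do.

```python
from typing import List

Grid = List[List[float]]


def effective_kernel_size(k: int, dilation: int) -> int:
    """A k x k kernel with dilation d spans d*(k-1)+1 pixels per side."""
    return dilation * (k - 1) + 1


def dilated_conv2d(image: Grid, kernel: Grid, dilation: int = 1) -> Grid:
    """'Valid' 2-D atrous convolution (cross-correlation, as in CNN libraries).

    The kernel taps are spaced `dilation` pixels apart; the skipped pixels
    are the "holes" that make the kernel sparse.
    """
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - effective_kernel_size(kh, dilation) + 1
    out_w = len(image[0]) - effective_kernel_size(kw, dilation) + 1
    return [
        [
            sum(
                kernel[u][v] * image[i + u * dilation][j + v * dilation]
                for u in range(kh)
                for v in range(kw)
            )
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]


# 7 x 7 test image whose pixel (r, c) holds 7*r + c.
image = [[7 * r + c for c in range(7)] for r in range(7)]
ones = [[1] * 3 for _ in range(3)]
out = dilated_conv2d(image, ones, dilation=2)
print(len(out), len(out[0]), out[0][0])  # 3 3 144
```

With dilation 2, the 3 × 3 kernel spans 5 × 5 pixels, so the 7 × 7 input yields a 3 × 3 output, and the first output sums only the nine pixels at even rows and columns of the top-left 5 × 5 window.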

[0045] Here, the image pyramid consists of an ordinary convolution with a 1 × 1 kernel and a 3 × 3 convolution with a sampling rate of 6,
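For the branches of such a pyramid to be combined, they generally need to produce feature maps of the same spatial size (as in DeepLab-style atrous spatial pyramid pooling, where branch outputs are concatenated along the channel axis). The sketch below checks this for the two branches named in [0045], assuming stride 1 and "same" padding of d(k - 1)/2. The helper and branch names are hypothetical, and any further sampling rates in the truncated text are not filled in.

```python
from typing import Dict, List, Tuple


def conv_out_size(size: int, kernel: int, padding: int, dilation: int, stride: int = 1) -> int:
    """Output size of a (possibly dilated) convolution along one axis."""
    return (size + 2 * padding - dilation * (kernel - 1) - 1) // stride + 1


def same_padding(kernel: int, dilation: int) -> int:
    """Padding that preserves spatial size for odd kernels at stride 1."""
    return dilation * (kernel - 1) // 2


def pyramid_branch_shapes(
    h: int, w: int, branches: List[Tuple[str, int, int]]
) -> Dict[str, Tuple[int, int, int]]:
    """Return (H_out, W_out, effective kernel span) for each parallel branch."""
    shapes = {}
    for name, kernel, dilation in branches:
        pad = same_padding(kernel, dilation)
        span = dilation * (kernel - 1) + 1
        shapes[name] = (
            conv_out_size(h, kernel, pad, dilation),
            conv_out_size(w, kernel, pad, dilation),
            span,
        )
    return shapes


# Branches from [0045]: a 1x1 ordinary convolution and a 3x3 convolution
# with sampling (dilation) rate 6. Branch names are hypothetical.
BRANCHES = [("conv_1x1", 1, 1), ("conv_3x3_rate6", 3, 6)]
print(pyramid_branch_shapes(32, 32, BRANCHES))
# {'conv_1x1': (32, 32, 1), 'conv_3x3_rate6': (32, 32, 13)}
```

The rate-6 branch sees a 13 × 13 neighborhood with only nine taps per kernel, while the 1 × 1 branch keeps a purely local view; both return a 32 × 32 map, so their outputs can be stacked.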



Abstract

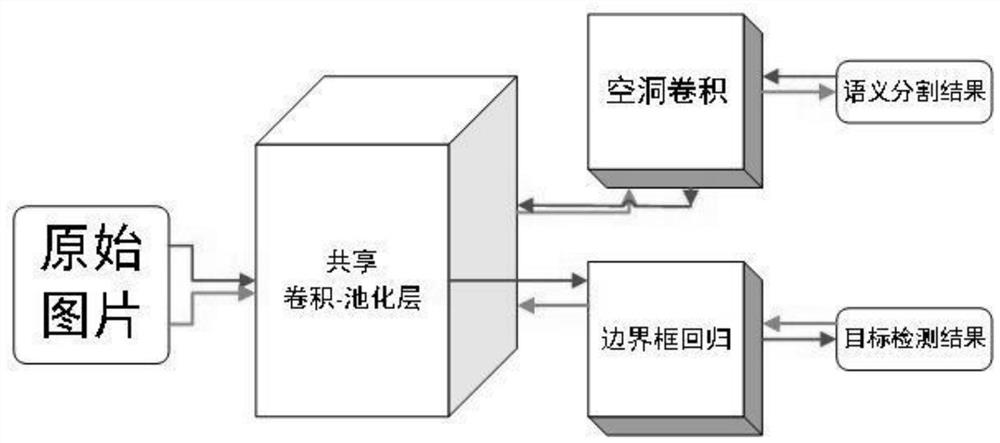

A fusion network driving environment perception model based on a convolution and dilated (atrous) convolution structure, which performs target detection and semantic segmentation simultaneously. Video images of the road environment are captured by a forward-looking camera system mounted on the vehicle, and the base feature maps of each image are extracted with a residual network model. The fusion network comprises two sub-modules, target detection and semantic segmentation, which share these base feature maps: the target detection module predicts bounding boxes and class confidences, while the semantic segmentation module makes a pixel-level prediction for each class. An appropriate loss function is selected for each module, and the perception model is trained alternately until both modules converge; finally, the two modules are trained simultaneously with a joint loss function to obtain the final perception model. The invention completes target detection and semantic segmentation at the same time with little computation, and the perception model uses the abundant target detection data to help the semantic segmentation module learn the distribution of the images.
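The structure described in the abstract, one backbone pass whose feature maps feed both task heads, followed by alternating and then joint training, can be sketched as a toy under stated assumptions: the 2 × 2 average pooling stands in for the residual network, the thresholding heads stand in for the real detection and segmentation modules, and the weighted-sum `joint_loss`, its `seg_weight`, and the phase lengths in `training_schedule` are hypothetical, since the abstract does not specify them.

```python
from typing import Callable, Dict, List, Tuple

Grid = List[List[float]]


def toy_backbone(image: Grid) -> Grid:
    """Stand-in for the residual network (hypothetical): 2x2 average pooling."""
    return [
        [
            (image[i][j] + image[i][j + 1] + image[i + 1][j] + image[i + 1][j + 1]) / 4
            for j in range(0, len(image[0]) - 1, 2)
        ]
        for i in range(0, len(image) - 1, 2)
    ]


def toy_detection_head(features: Grid, threshold: float = 0.5) -> List[Tuple[int, int, float]]:
    """Stand-in detection head: (row, col, confidence) for strong feature cells."""
    return [(i, j, v) for i, row in enumerate(features) for j, v in enumerate(row) if v > threshold]


def toy_segmentation_head(features: Grid, threshold: float = 0.5) -> List[List[int]]:
    """Stand-in segmentation head: one class id per feature cell."""
    return [[1 if v > threshold else 0 for v in row] for row in features]


class FusionPerceptionModel:
    """Shared backbone feeding a detection head and a segmentation head."""

    def __init__(self, backbone: Callable, det_head: Callable, seg_head: Callable) -> None:
        self.backbone, self.det_head, self.seg_head = backbone, det_head, seg_head
        self.backbone_calls = 0  # how often the shared features are computed

    def __call__(self, image: Grid) -> Dict[str, object]:
        self.backbone_calls += 1
        features = self.backbone(image)  # computed once, reused by both heads
        return {"detection": self.det_head(features), "segmentation": self.seg_head(features)}


def joint_loss(det_loss: float, seg_loss: float, seg_weight: float = 1.0) -> float:
    """Hypothetical joint loss: a weighted sum of the two task losses."""
    return det_loss + seg_weight * seg_loss


def training_schedule(alternate_rounds: int, steps_per_phase: int, joint_steps: int) -> List[str]:
    """Alternate detection/segmentation phases, then train both heads jointly."""
    schedule: List[str] = []
    for _ in range(alternate_rounds):
        schedule += ["detection"] * steps_per_phase
        schedule += ["segmentation"] * steps_per_phase
    schedule += ["joint"] * joint_steps
    return schedule


model = FusionPerceptionModel(toy_backbone, toy_detection_head, toy_segmentation_head)
image = [[0, 0, 1, 1], [0, 0, 1, 1], [0, 0, 0, 0], [0, 0, 0, 0]]
out = model(image)
print(out)  # {'detection': [(0, 1, 1.0)], 'segmentation': [[0, 1], [0, 0]]}
print(training_schedule(2, 1, 2))
```

Counting backbone calls makes the claimed saving concrete: two single-task models would each run their own backbone on every frame, whereas the fused model computes the shared feature maps once per frame.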

Description

Fusion network driving environment perception model based on convolution and atrous convolution structure

Technical field

The present invention relates to the technical field of advanced automobile driver assistance, and in particular to a fusion network driving environment perception model based on a convolution and atrous convolution structure.

Background art

Driving environment perception is an important function of an Advanced Driver Assistance System (ADAS). Existing driving environment perception mainly comprises target detection (locating targets of interest, such as pedestrians, vehicles, bicycles, and traffic signs, and obtaining their position and category information in the image) and semantic segmentation (labeling every pixel of the image with a category). Driving environment perception can be used to assist driving decisions to reduce the occurr...
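The two perception outputs described above differ in granularity: target detection yields one box, category, and confidence per target, while semantic segmentation yields one category per pixel. A minimal sketch of those output types, assuming axis-aligned boxes in pixel coordinates and string labels; the `Detection` class and the `pixels_per_category` helper are hypothetical, not taken from the patent.

```python
from collections import Counter
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Detection:
    """Target detection output: where the target is and what it is."""
    x_min: float
    y_min: float
    x_max: float
    y_max: float
    category: str      # e.g. "pedestrian", "vehicle", "bicycle", "traffic_sign"
    confidence: float  # category confidence in [0, 1]

    def area(self) -> float:
        """Box area in pixels (zero for degenerate boxes)."""
        return max(0.0, self.x_max - self.x_min) * max(0.0, self.y_max - self.y_min)


# Semantic segmentation output: one category label for every pixel.
SegmentationMask = List[List[str]]


def pixels_per_category(mask: SegmentationMask) -> Counter:
    """Count how many pixels were assigned to each category."""
    return Counter(label for row in mask for label in row)


det = Detection(10, 20, 50, 100, "pedestrian", 0.9)
mask = [["road", "road"], ["vehicle", "road"]]
print(det.area(), pixels_per_category(mask))
```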

Claims


Application Information


Patent Type & Authority: Patents (China)

IPC(8): G06V20/56, G06V10/82, G06N3/04, G06N3/08

CPC: G06N3/084, G06V20/56, G06N3/045

Inventors: 秦文虎 (Qin Wenhu), 张仕超 (Zhang Shichao)

Owner: SOUTHEAST UNIV
