UAV SLAM method based on hybrid visual odometry and multi-scale map

A technology involving visual odometry and UAVs, applied in the fields of navigation computing tools, navigation, instruments, etc. It addresses the problem that neither the feature-point method nor the direct method, used alone, adapts well to the positioning requirements of UAVs. Its effects are to relieve computational load, to realize real-time pose estimation and environment perception, and to improve safety.


Detailed Description of the Embodiments

[0058] The present invention will be described in detail below with reference to the accompanying drawings.

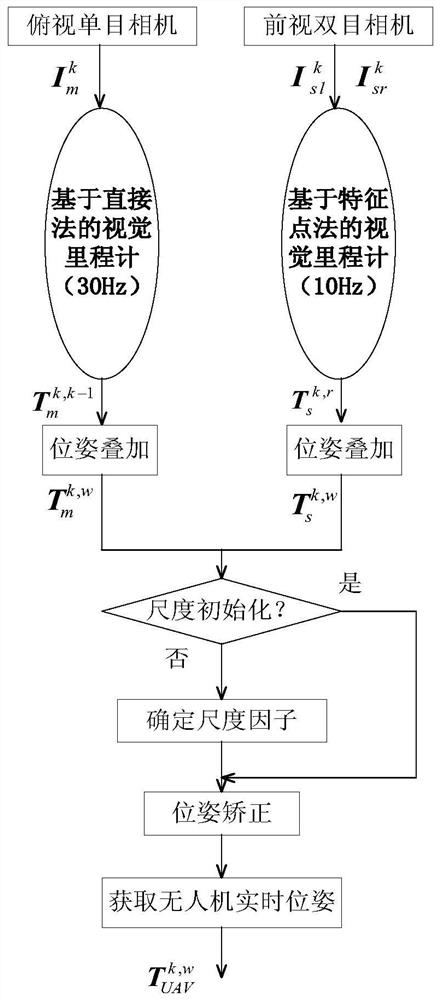

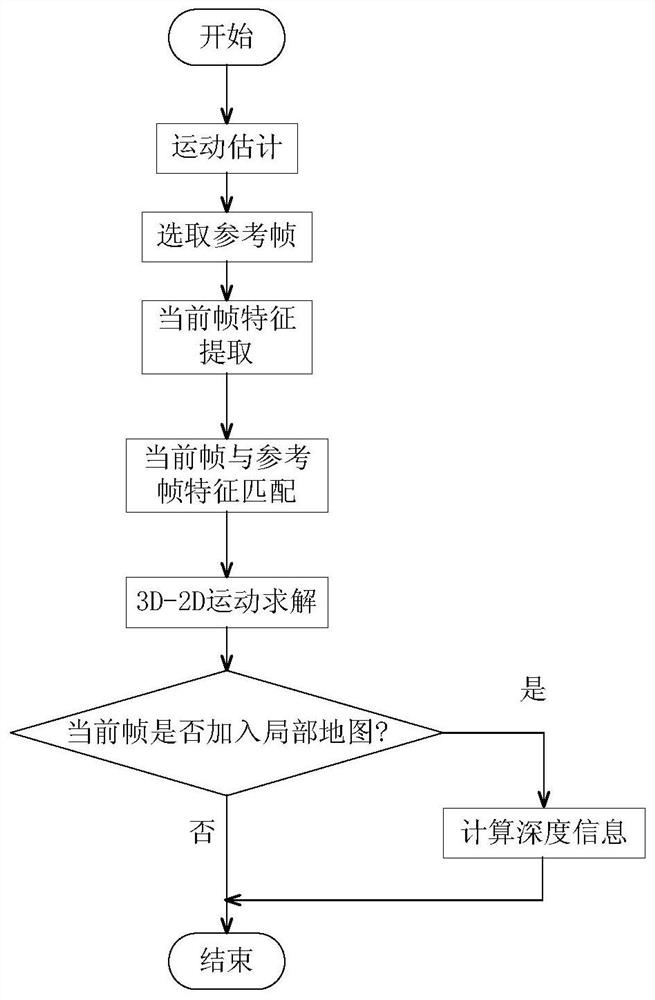

[0059] Figure 1 is a system block diagram of the hybrid visual odometry of the present invention. The hybrid visual odometry comprises two threads: a monocular visual odometry based on the direct method, and a stereo (binocular) visual odometry based on the feature-point method. The UAV platform carries a monocular camera and a forward-looking stereo camera. The monocular camera captures 30 frames per second, and the stereo camera captures 10 frames per second. The onboard computer runs the two threads concurrently: the main thread runs the direct-method visual odometry at 30 Hz, and the other thread runs the feature-point visual odometry at 10 Hz. Given the synchronized monocular camera image and the corresponding images from the left and right cameras of the stereo camera as input ...
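The two-thread arrangement described in [0059] can be illustrated with a minimal Python sketch. It is not the patent's implementation: `direct_vo_step` and `feature_vo_step` are hypothetical placeholders for the direct-method and feature-point trackers, frames are simulated as timestamps rather than read from cameras, and the pairing of each stereo frame with every third monocular frame is an assumption (the text only says the images are synchronized).

```python
import queue
import threading

MONO_HZ = 30    # monocular frame rate stated in the text
STEREO_HZ = 10  # stereo frame rate stated in the text


def direct_vo_step(frame):
    """Hypothetical stand-in for one direct-method tracking step."""
    return ("direct", frame["t"])


def feature_vo_step(pair):
    """Hypothetical stand-in for one feature-point stereo tracking step."""
    return ("feature", pair["t"])


def worker(step, inbox, results):
    """Apply `step` to every queued item until the None sentinel arrives."""
    while True:
        item = inbox.get()
        if item is None:
            break
        results.append(step(item))


def run_hybrid(seconds=1):
    mono_q, stereo_q = queue.Queue(), queue.Queue()
    mono_out, stereo_out = [], []

    # Secondary thread: feature-point odometry on stereo pairs (10 Hz stream).
    stereo_thread = threading.Thread(
        target=worker, args=(feature_vo_step, stereo_q, stereo_out)
    )
    stereo_thread.start()

    # Simulated capture. Assumption: every third monocular frame shares its
    # timestamp with a stereo pair (30 Hz / 10 Hz = 3).
    ratio = MONO_HZ // STEREO_HZ
    for i in range(seconds * MONO_HZ):
        t = i / MONO_HZ
        mono_q.put({"t": t})
        if i % ratio == 0:
            stereo_q.put({"t": t})
    mono_q.put(None)
    stereo_q.put(None)

    # Main thread: direct-method odometry on the monocular stream (30 Hz).
    worker(direct_vo_step, mono_q, mono_out)
    stereo_thread.join()
    return mono_out, stereo_out


if __name__ == "__main__":
    mono, stereo = run_hybrid(seconds=1)
    print(len(mono), "direct-method updates;", len(stereo), "feature-point updates")
```

In this sketch the two trackers exchange no data; how the two pose estimates are combined is not covered by the text above and is left out here.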
