Dual-light image fusion model based on a deep convolutional generative adversarial network (DCGAN)

A deep convolution and image fusion technology, applied in graphics and image conversion, image data processing, character and pattern recognition, etc. It addresses problems such as poor image fusion quality and achieves the effect of reducing hardware pressure.

Embodiment Construction

[0032] The present invention will be described in further detail below in conjunction with the accompanying drawings.

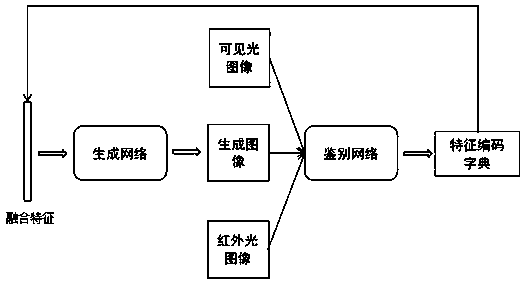

[0033] As shown in Figure 1, the invention is a dual-light image fusion model based on a deep convolutional generative adversarial network (DCGAN). The model fuses visible light images and infrared light images of the same object. Establishing the model includes the following steps:

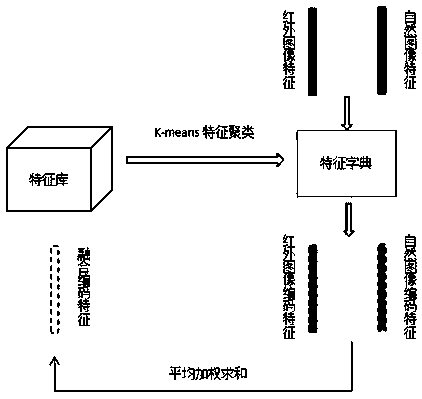

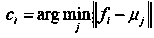

[0034] Step 1. The discriminator network extracts features: First, a large number of visible light images and infrared light images of the object are scaled to the same size according to the image aspect ratio to form an image training library; then a convolutional neural network initialized with VGG network parameters is constructed as the discriminator network, and the image library is used to train the discriminator network so that it can effectively distinguish between infrared light images and visible light images; then input the visible light images and inf...
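The preprocessing part of Step 1 (scaling both image types to a common size and collecting them into a training library) might be sketched as follows. This is a minimal illustration in pure Python and is not taken from the patent. It reads "according to the image ratio" as preserving aspect ratio, and the centered padding, the nearest-neighbour resampling, the label convention (0 = visible, 1 = infrared), and every function name are assumptions made for the example.

```python
from typing import List, Tuple

Image = List[List[int]]  # grayscale image stored as rows of pixel values


def fit_size(width: int, height: int, target: int) -> Tuple[int, int]:
    """Scale (width, height) so the longer side equals `target`, keeping the ratio."""
    scale = target / max(width, height)
    return max(1, round(width * scale)), max(1, round(height * scale))


def resize_nearest(img: Image, new_w: int, new_h: int) -> Image:
    """Nearest-neighbour resize of a nested-list image."""
    old_h, old_w = len(img), len(img[0])
    return [
        [img[y * old_h // new_h][x * old_w // new_w] for x in range(new_w)]
        for y in range(new_h)
    ]


def letterbox(img: Image, target: int, fill: int = 0) -> Image:
    """Resize with preserved aspect ratio, then pad to a target x target square.

    Padding is an assumption; the source only says the images share one size.
    """
    new_w, new_h = fit_size(len(img[0]), len(img), target)
    resized = resize_nearest(img, new_w, new_h)
    top = (target - new_h) // 2
    left = (target - new_w) // 2
    canvas = [[fill] * target for _ in range(target)]
    for y, row in enumerate(resized):
        canvas[top + y][left:left + new_w] = row
    return canvas


def build_training_library(visible: List[Image], infrared: List[Image],
                           target: int = 8) -> List[Tuple[Image, int]]:
    """Return (image, label) pairs; labels 0/1 are a hypothetical convention."""
    return ([(letterbox(im, target), 0) for im in visible]
            + [(letterbox(im, target), 1) for im in infrared])
```

A library built this way could then be fed to whatever discriminator is trained to separate the two classes. The VGG-initialized network itself is not sketched here, since the source text is cut off before its details are given.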
