78 results about "Improve fusion quality" patented technology

Adaptive and synergic fill welding method and apparatus

Owner:EDISON WELDING INSTITUTE INC

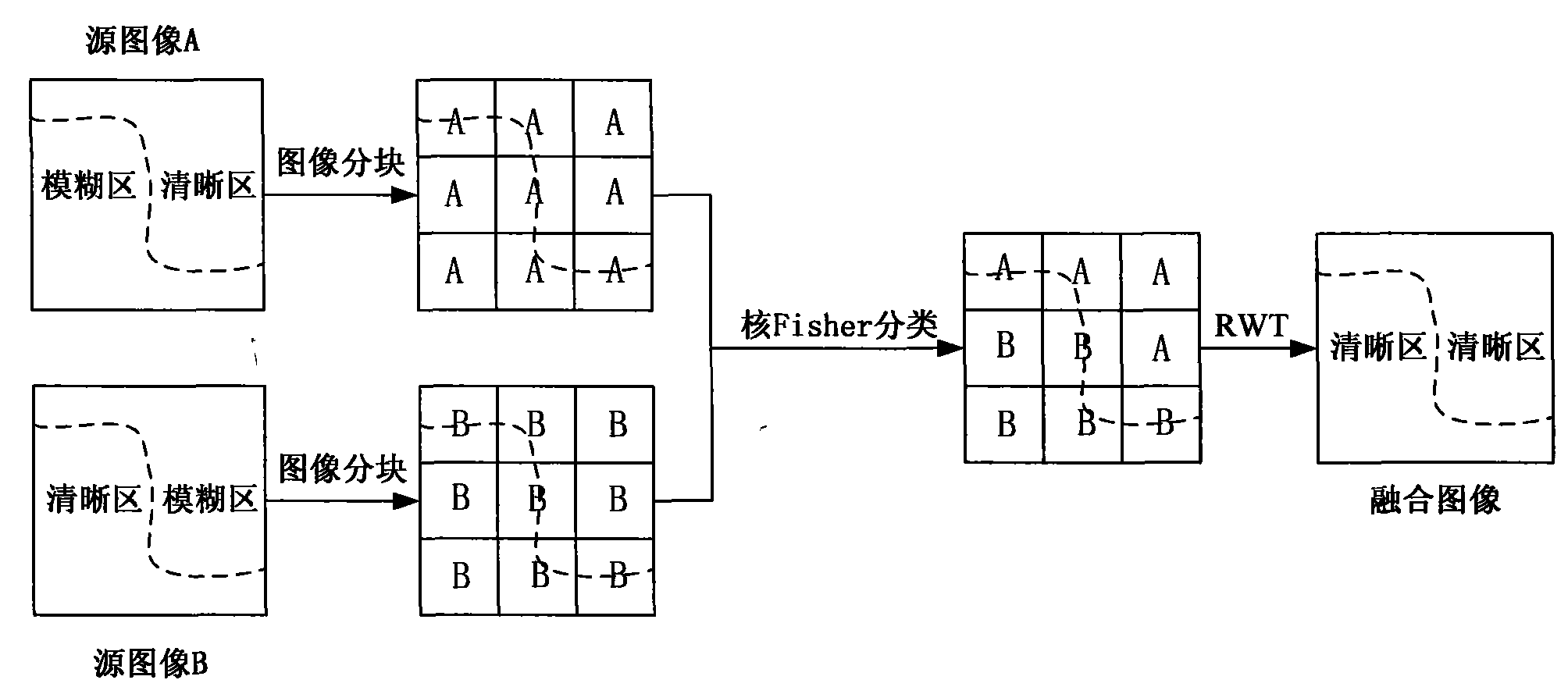

Multi-focusing image fusion method utilizing core Fisher classification and redundant wavelet transformation

Inactive | CN101630405A | Easy to integrate | Good calculation efficiency | Image enhancement | Correlation coefficient | Spatial correlation

The invention discloses a multi-focusing image fusion method utilizing core Fisher classification and redundant wavelet transformation. The method comprises the following steps: firstly, segmenting the source images into image blocks and calculating definition (sharpness) characteristics of each image block; secondly, taking part of the areas of the source images as a training sample and obtaining the parameters of a core Fisher classifier after training; thirdly, utilizing the trained core Fisher classifier to obtain preliminary fusion images; and finally, utilizing redundant wavelet transformation and spatial correlation coefficients to fuse the image blocks positioned at the junction of the clear and fuzzy areas of the source images to obtain the final fusion images. The invention has better image fusion performance, shows no obvious blocking artifacts or other artifacts in the fusion results, achieves a better compromise between improving the image fusion quality and reducing the amount of calculation, and can be used in subsequent image processing and display. When fewer wavelet decomposition layers are adopted, the invention is better suited to occasions with higher real-time requirements.
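The block-wise selection idea in the first steps can be illustrated with a minimal sketch. It is not the patented method: it replaces the trained core Fisher classifier with a direct comparison of a simple sharpness measure (energy of gradient), and it omits the redundant wavelet processing of blocks on the clear/fuzzy boundary. The function names and the default block size are assumptions made for illustration.

```python
from typing import List

Image = List[List[float]]  # grayscale image as rows of pixel values


def block_sharpness(img: Image, top: int, left: int, size: int) -> float:
    """Energy of gradient inside one block (sum of squared neighbour differences)."""
    bottom = min(top + size, len(img))
    right = min(left + size, len(img[0]))
    total = 0.0
    for r in range(top, bottom):
        for c in range(left, right):
            if c + 1 < right:  # horizontal difference, kept inside the block
                total += (img[r][c + 1] - img[r][c]) ** 2
            if r + 1 < bottom:  # vertical difference, kept inside the block
                total += (img[r + 1][c] - img[r][c]) ** 2
    return total


def fuse_by_blocks(a: Image, b: Image, size: int = 2) -> Image:
    """Copy each block from whichever source image is sharper in that block."""
    rows, cols = len(a), len(a[0])
    fused = [[0.0] * cols for _ in range(rows)]
    for top in range(0, rows, size):
        for left in range(0, cols, size):
            sharper_a = block_sharpness(a, top, left, size) >= block_sharpness(b, top, left, size)
            src = a if sharper_a else b
            for r in range(top, min(top + size, rows)):
                for c in range(left, min(left + size, cols)):
                    fused[r][c] = src[r][c]
    return fused
```

Hard block selection of this kind is what tends to produce blocking artifacts at focus boundaries, which is the situation the final wavelet-based step of the abstract is described as addressing.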

Owner:CHONGQING SURVEY INST

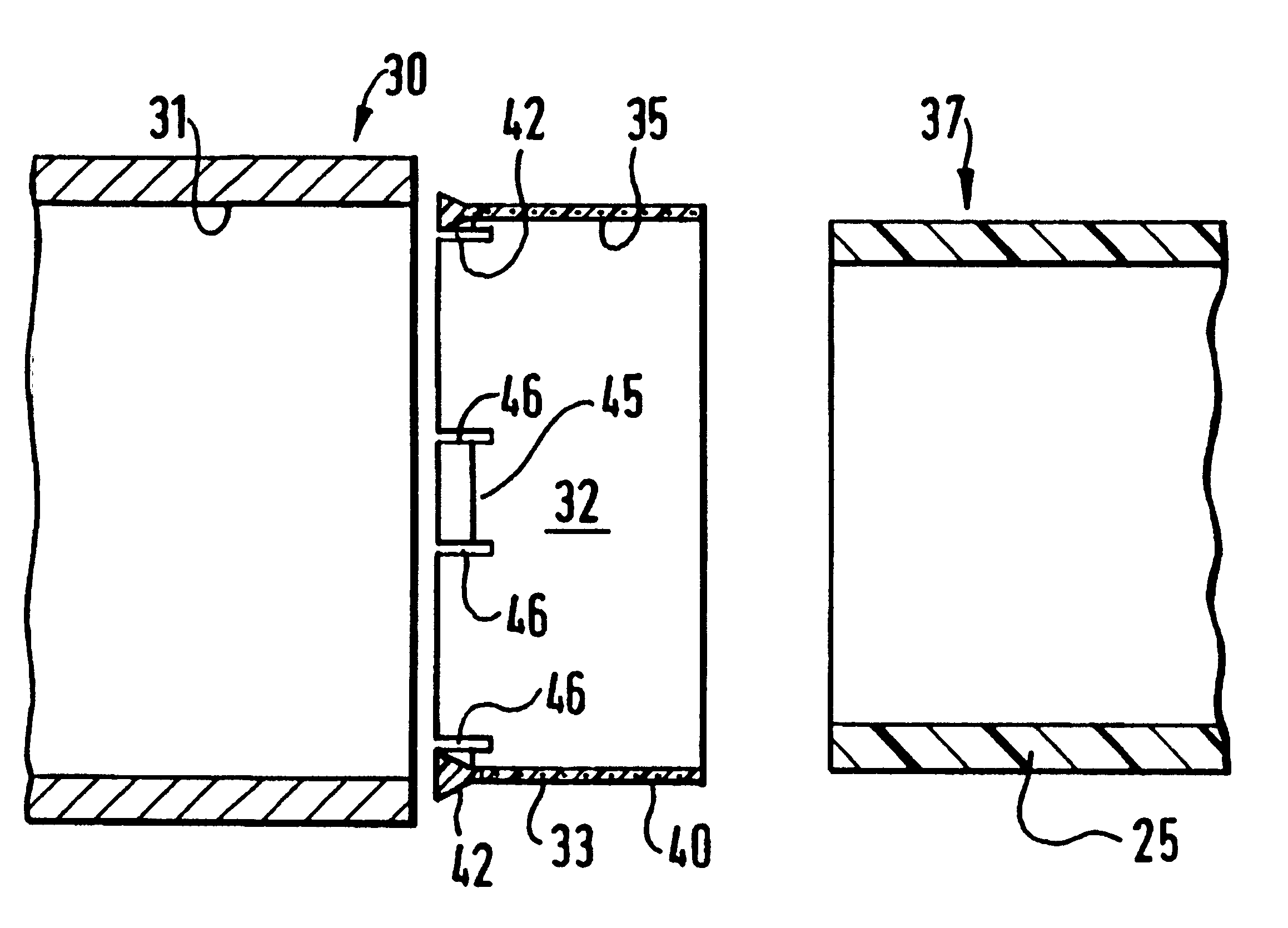

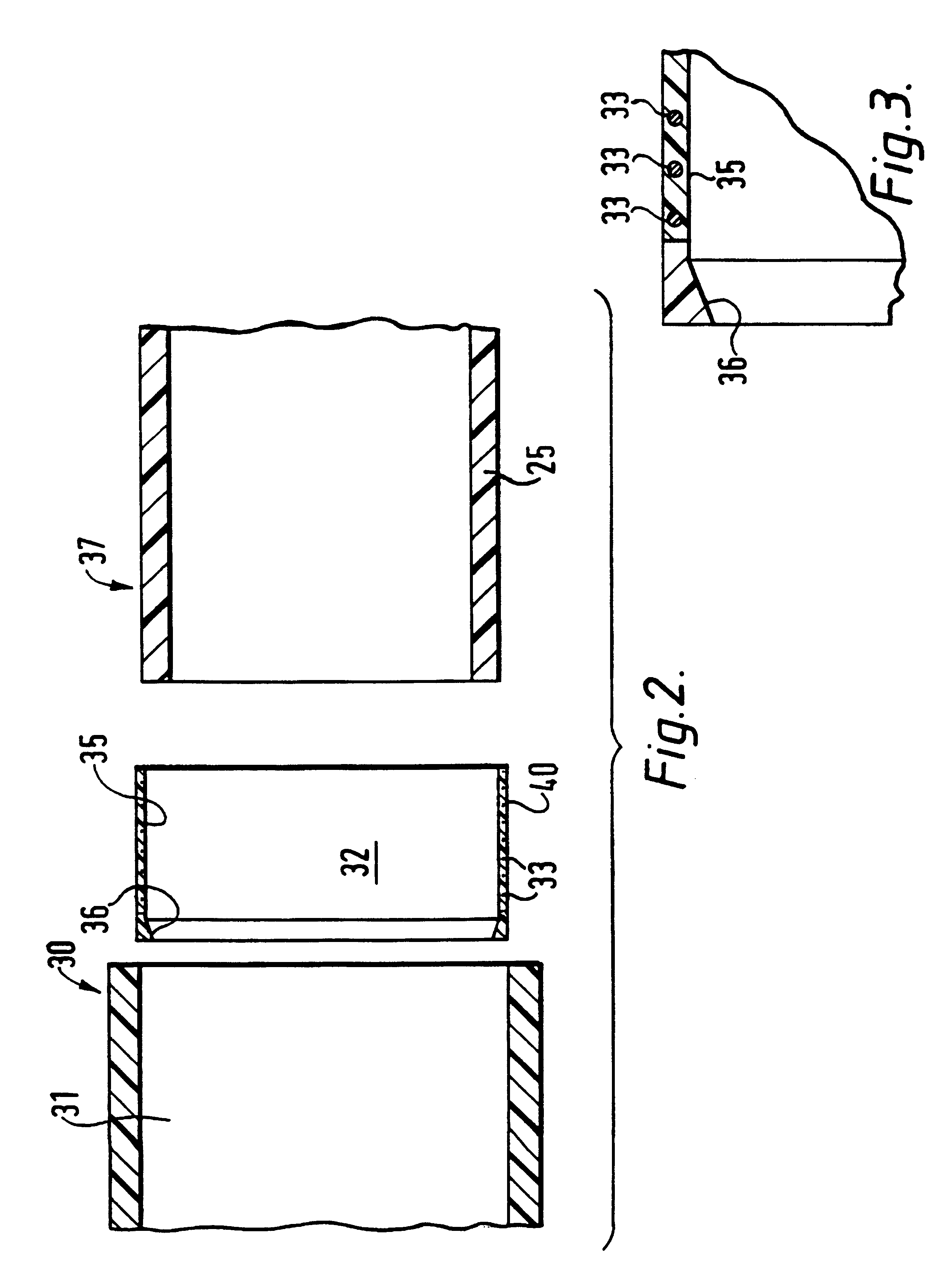

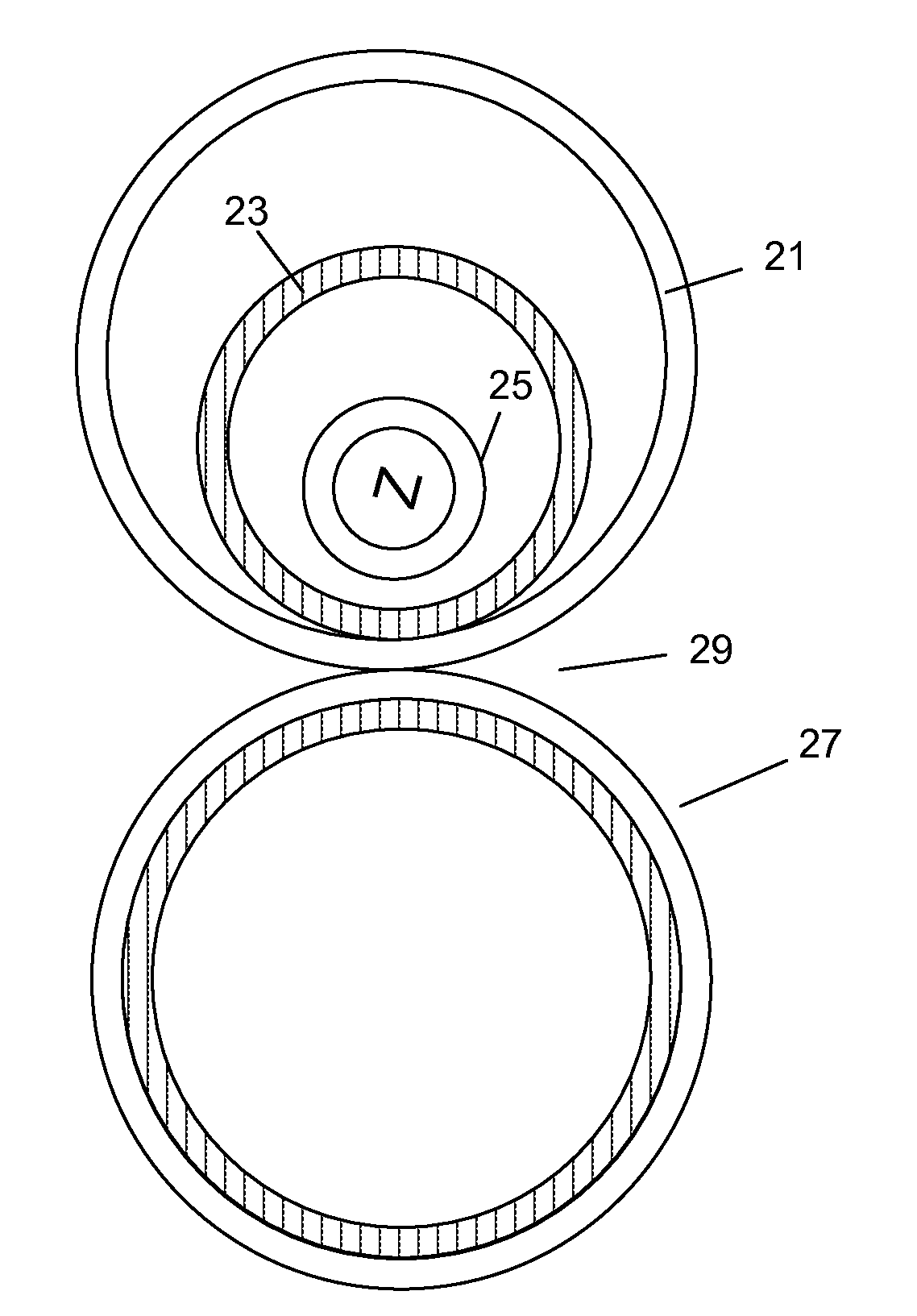

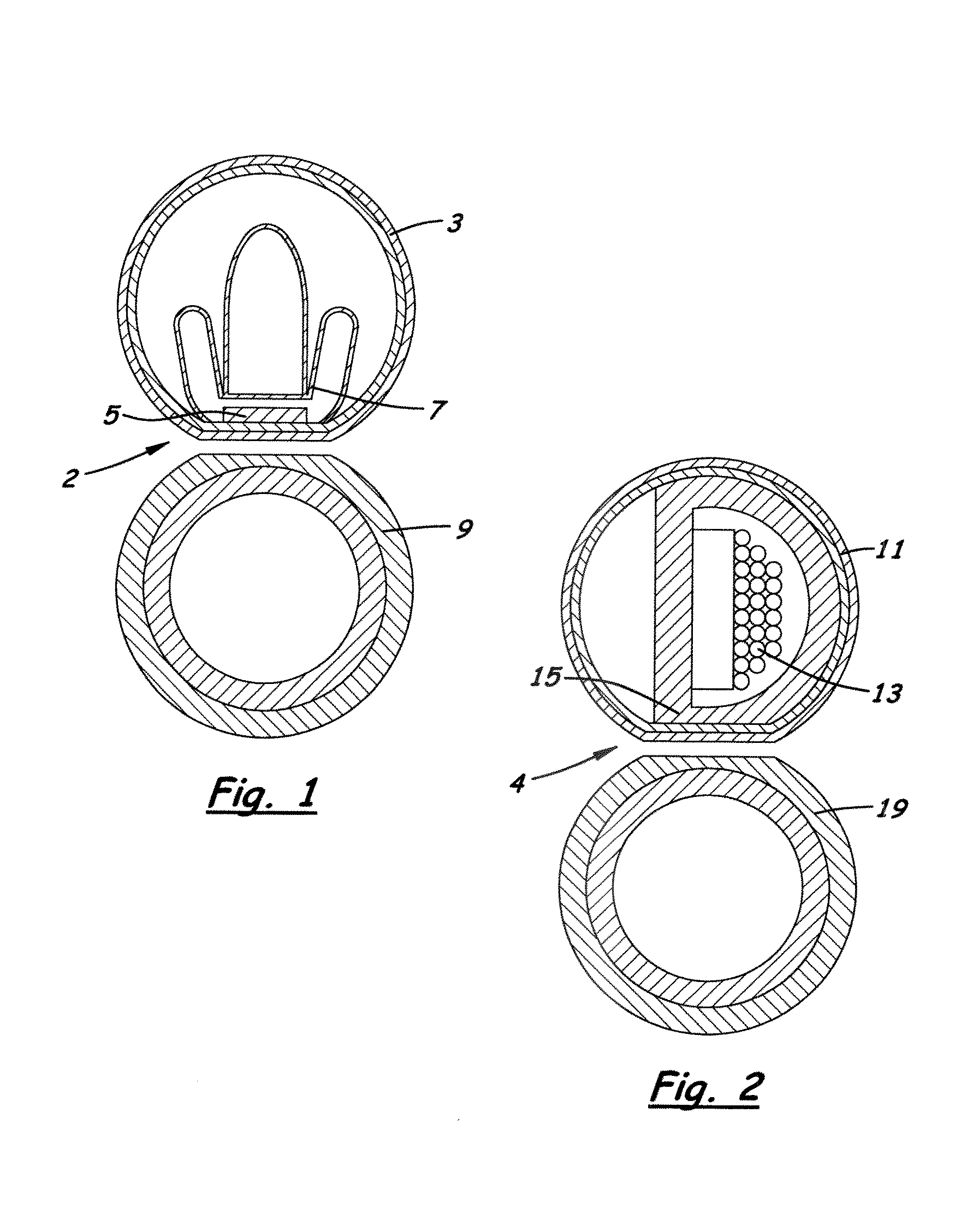

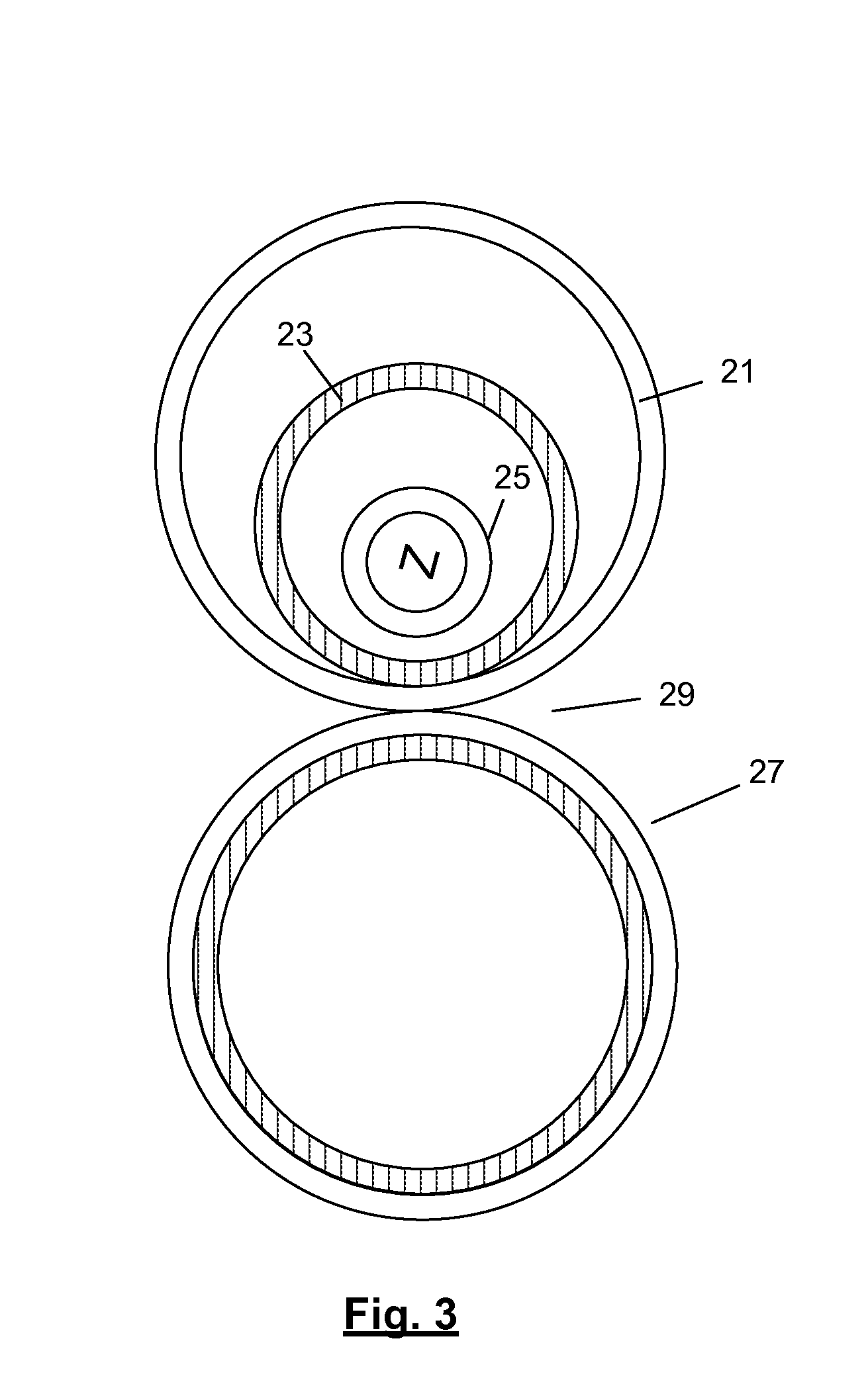

Apparatus and method for fusion joining a pipe and fittings

Inactive | US6193834B1 | Quickly and easily field-installed | Improve usability | Lamination ancillary operations | Lamination | Engineering | Flange

An apparatus for fusing a pipe to a fitting includes an induction heating element wholly encased in or coated with a fusible thermoplastic polymeric material, and a fitting having a socket or a flange. The induction heating element is adapted to be inserted into said socket or mounted on said flange, the fitting and the element together defining a throughbore, a portion of a pipe being adapted to be inserted into the throughbore or mountable on said flange. The apparatus also includes a locating and maintaining member on the induction heating element with one or more projections adapted to be snap-fitted on the fitting, the pipe or both, and capable of locating and maintaining the position of the heating element relative to the fitting or the member portion. The induction fusion element is capable of fusing the fitting and the pipe portion.

Owner:UPONOR LTD

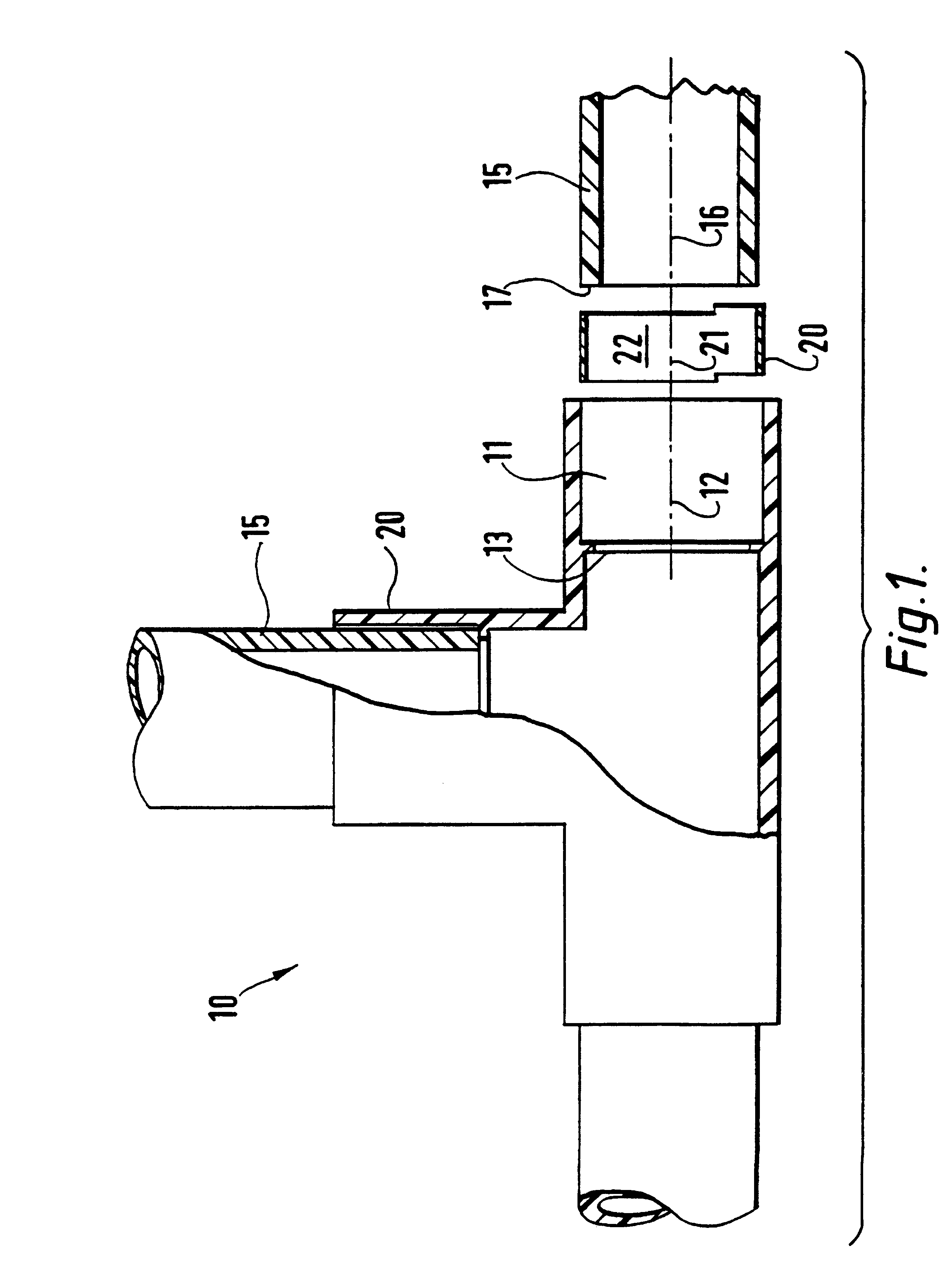

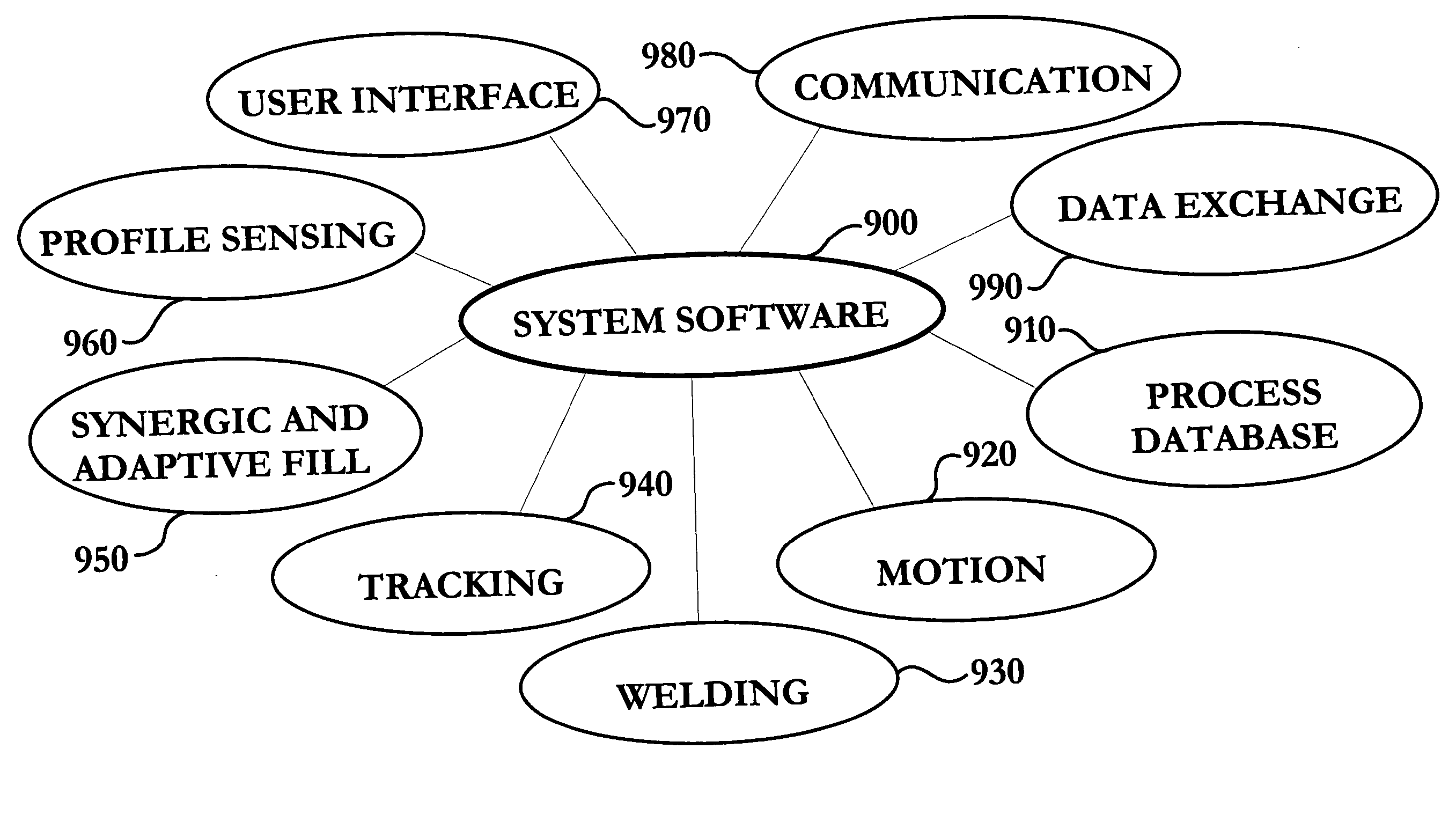

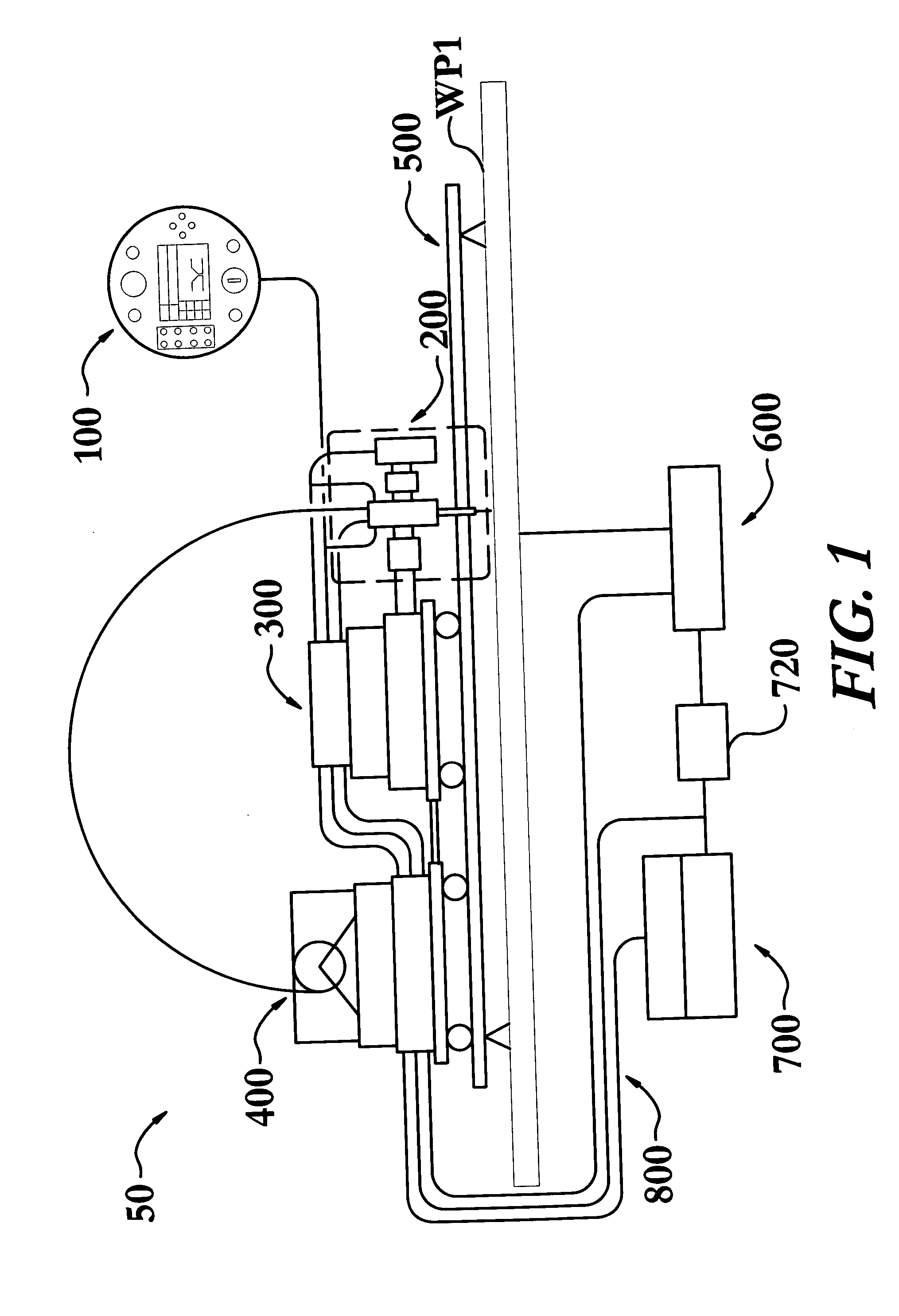

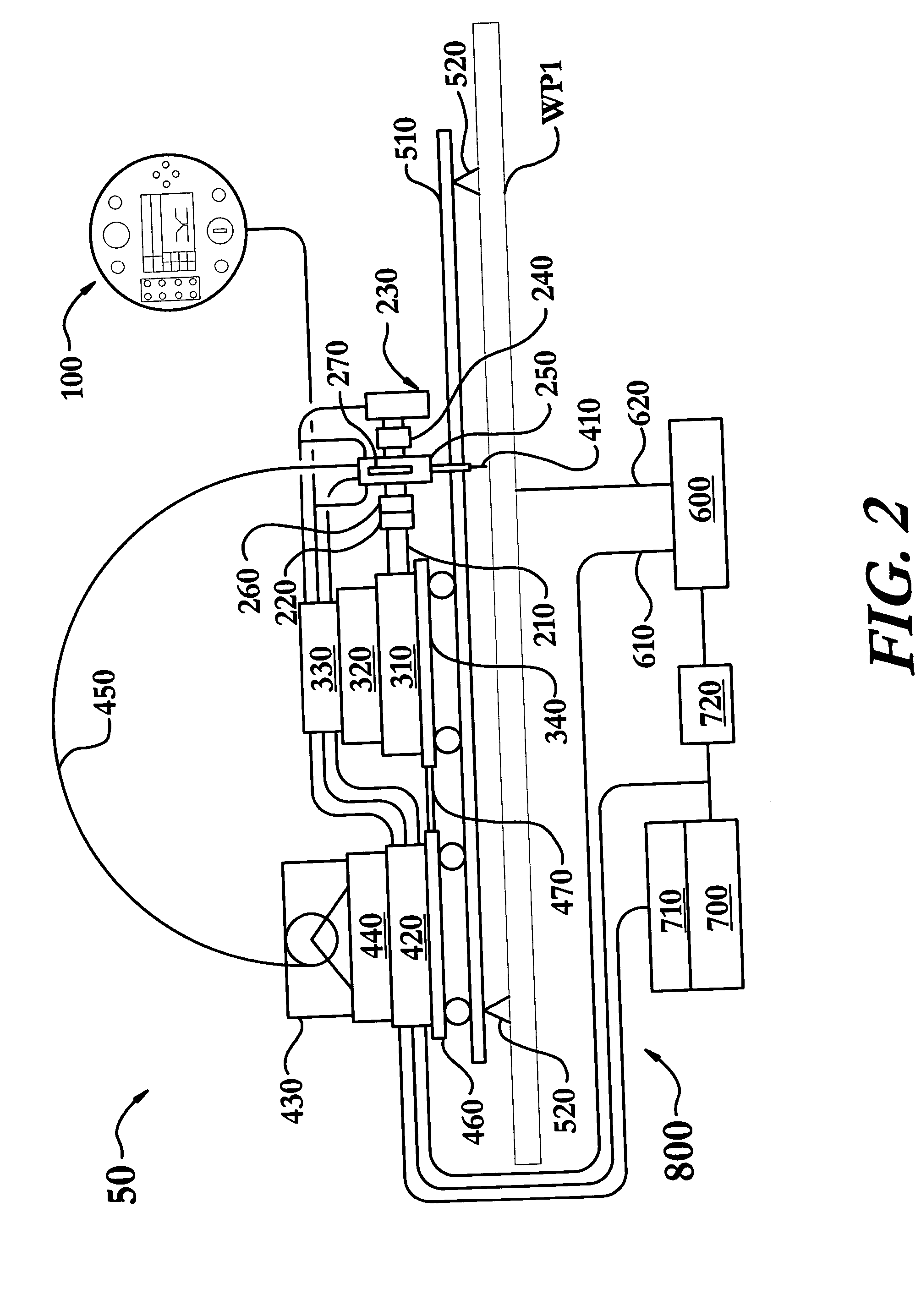

Adaptive and synergic fill welding method and apparatus

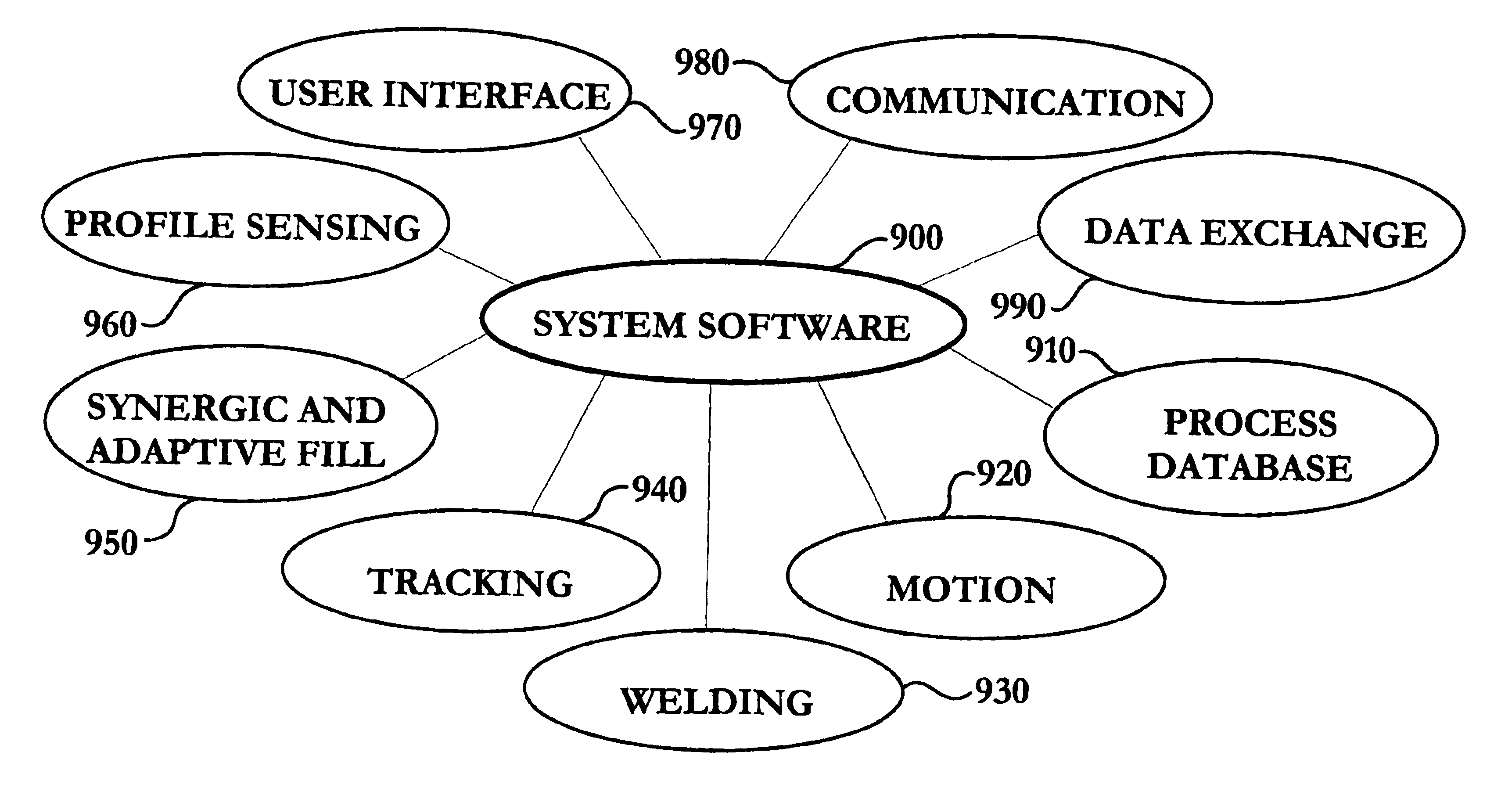

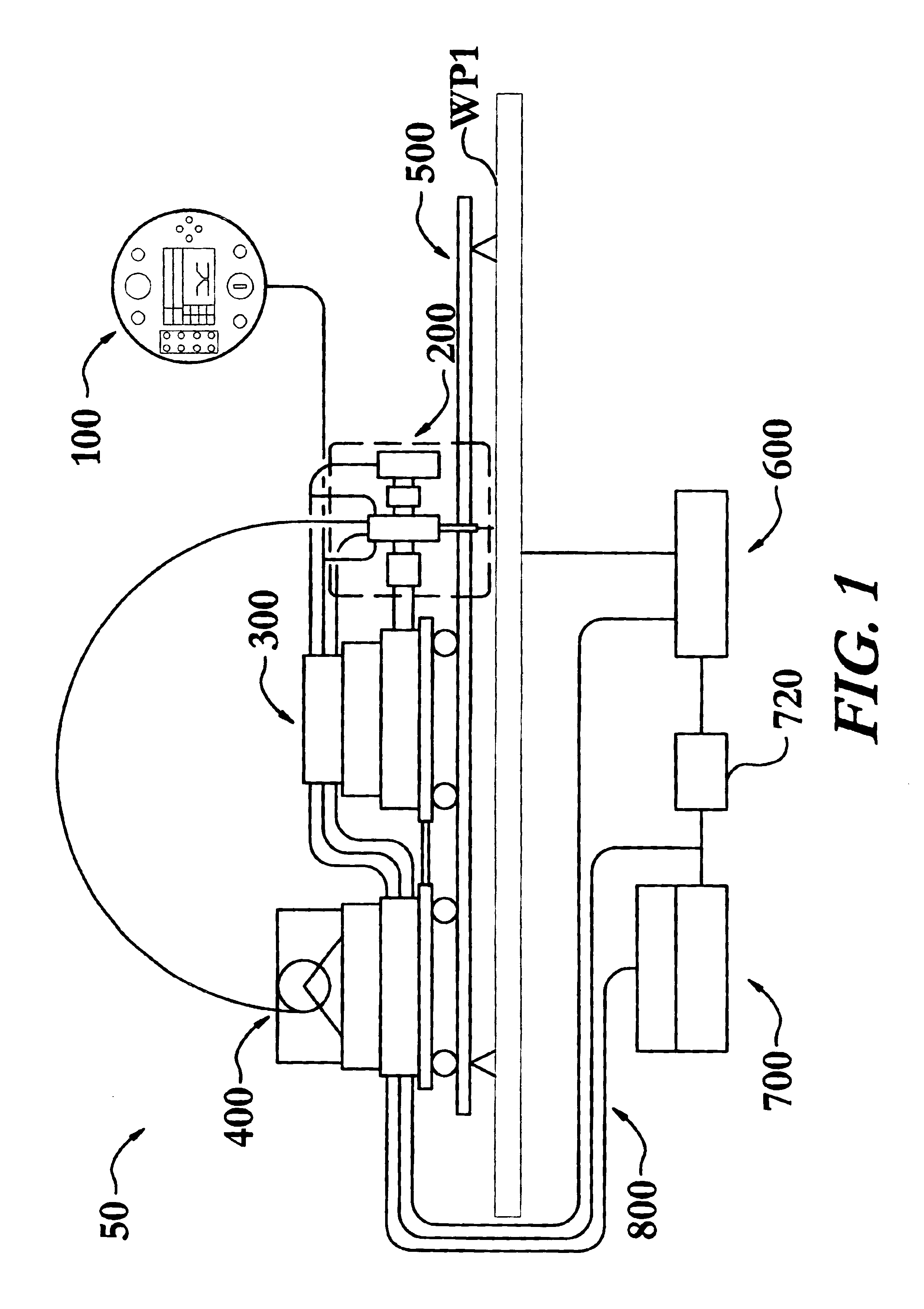

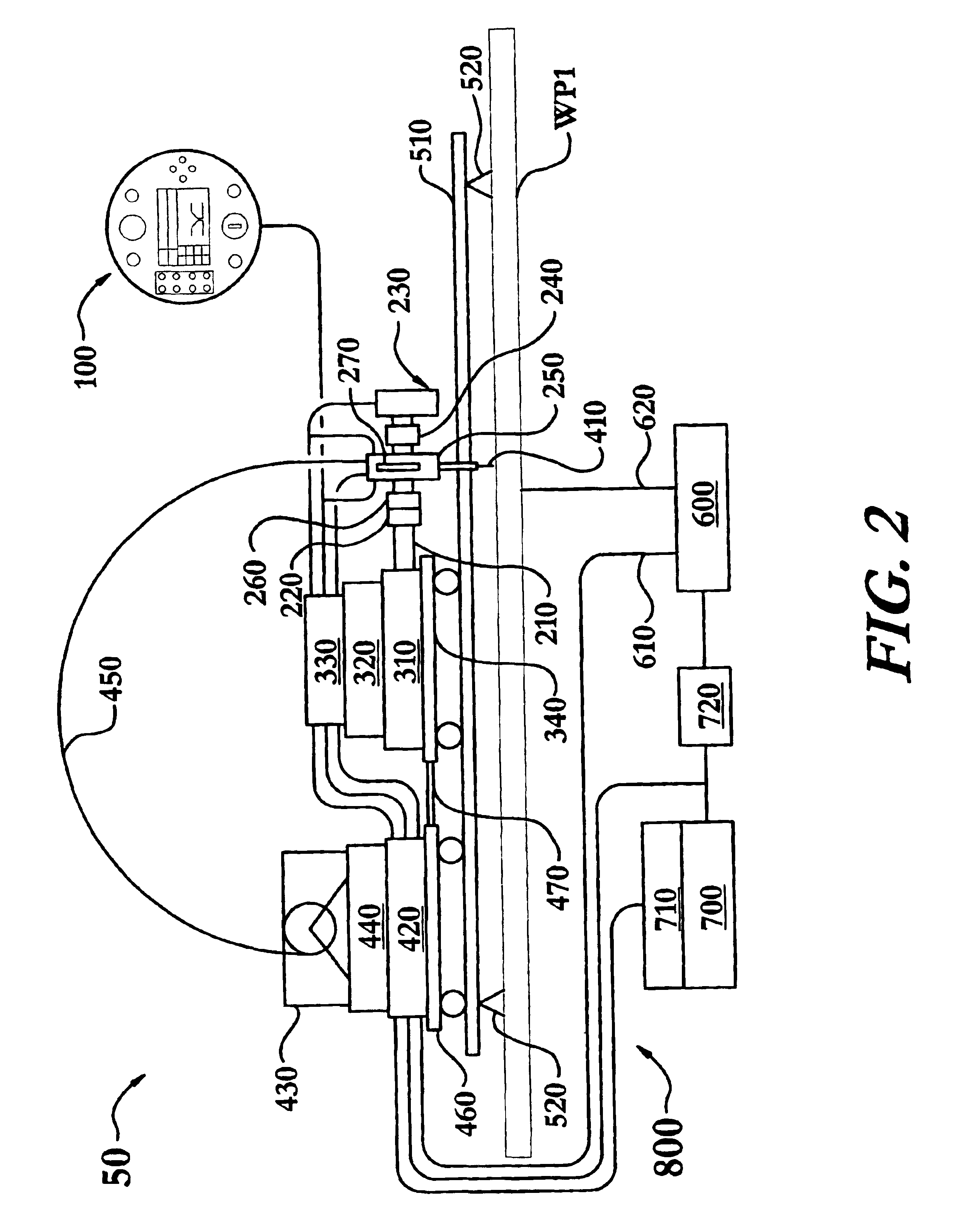

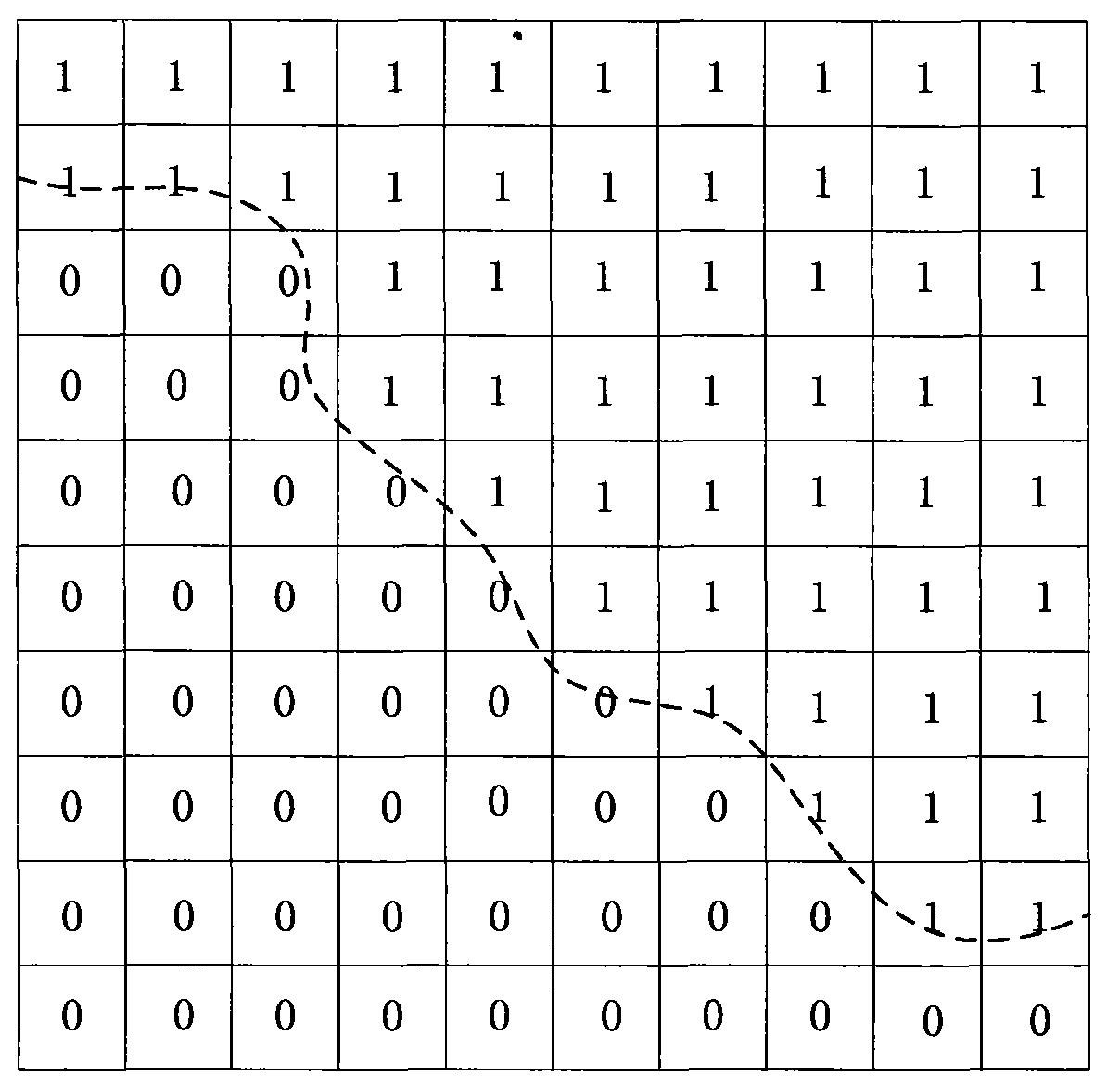

An adaptive and synergic fill welding method and apparatus enables automatic, or adaptive fill, and user-directed, or synergic fill, modes to provide improved fusion quality by ensuring that base metal dilution of a weld remains within a predetermined range. The apparatus includes a means for profiling and tracking a joint, and multi-part adjustable welding means. In adaptive fill mode, the method automatically varies a plurality of welding parameters in response to measured variations such as joint width between work pieces. In synergic fill mode, the method enables a user to vary multiple welding parameters in response to joint variations by adjusting a single variable, a synergic fill number, which may be controlled by means of a user interface pendant. The multiple welding parameters may include predetermined wire feed speed, torch travel speed, welding voltage and current, torch oscillation width, dwell time, and a plurality of bead size parameters.
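The idea of driving several welding parameters from one synergic fill number can be sketched as follows. This is only an illustration under stated assumptions: the abstract does not say how the number maps to the parameters, so the linear interpolation, the 0 to 1 range, the function name and the preset values below are all hypothetical placeholders.

```python
from typing import Dict


def synergic_parameters(fill_number: float,
                        low: Dict[str, float],
                        high: Dict[str, float]) -> Dict[str, float]:
    """Interpolate every parameter between two presets from a single number.

    fill_number is clamped to [0, 1]; 0 returns the `low` preset and 1 the
    `high` preset. The linear mapping is an assumption, not the patented rule.
    """
    t = min(max(fill_number, 0.0), 1.0)
    return {name: low[name] + t * (high[name] - low[name]) for name in low}


# Placeholder presets for illustration only (units and values are made up).
NARROW_JOINT = {"wire_feed_speed": 100.0, "travel_speed": 10.0, "oscillation_width": 2.0}
WIDE_JOINT = {"wire_feed_speed": 200.0, "travel_speed": 6.0, "oscillation_width": 6.0}

if __name__ == "__main__":
    print(synergic_parameters(0.5, NARROW_JOINT, WIDE_JOINT))
```

In adaptive fill mode, the same mapping could in principle be driven by a measured joint width instead of a user setting, which is how the abstract distinguishes the two modes.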

Owner:EDISON WELDING INSTITUTE INC

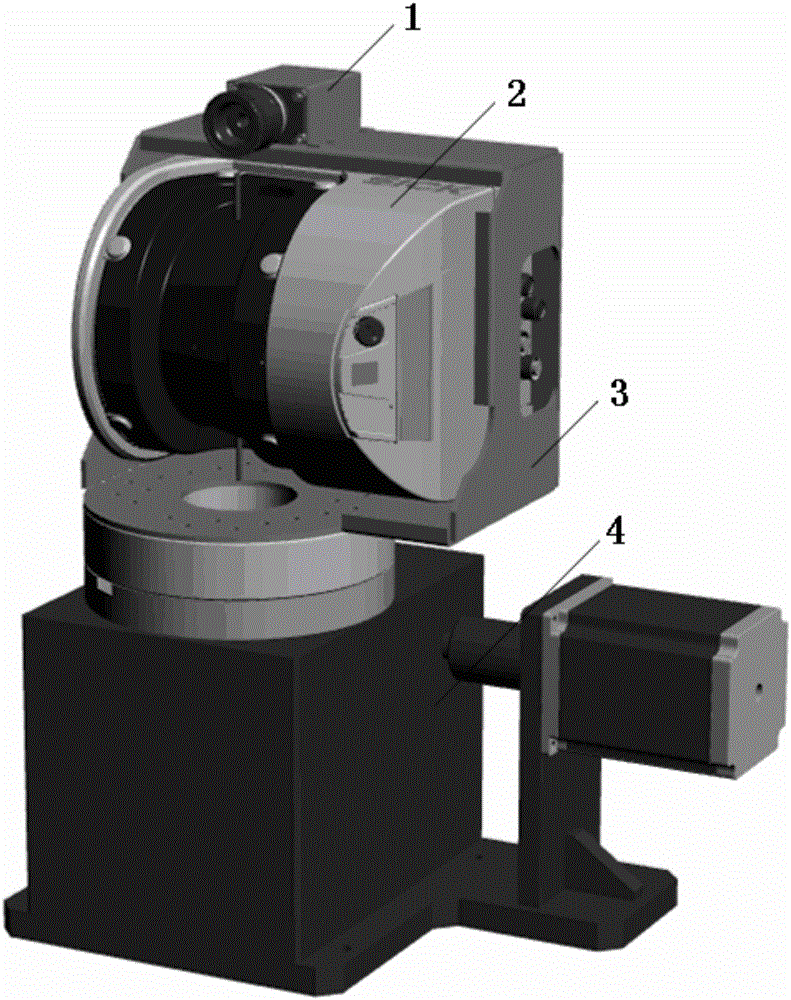

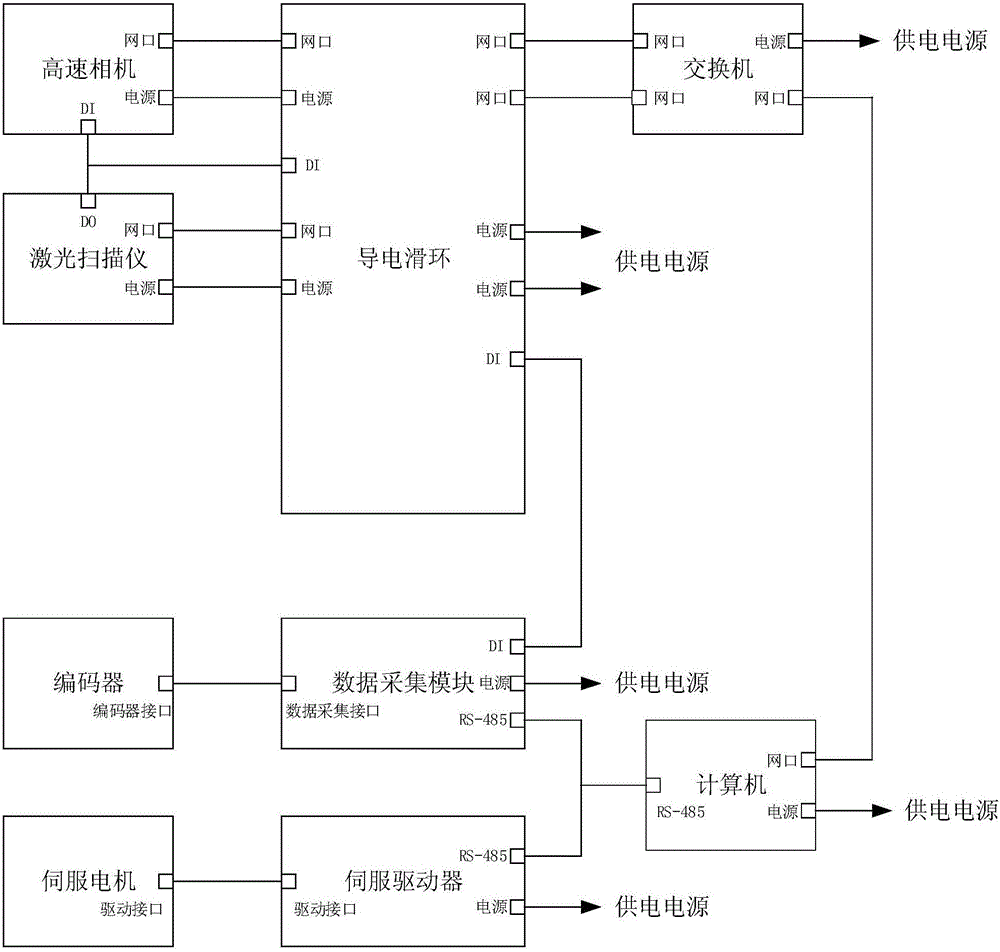

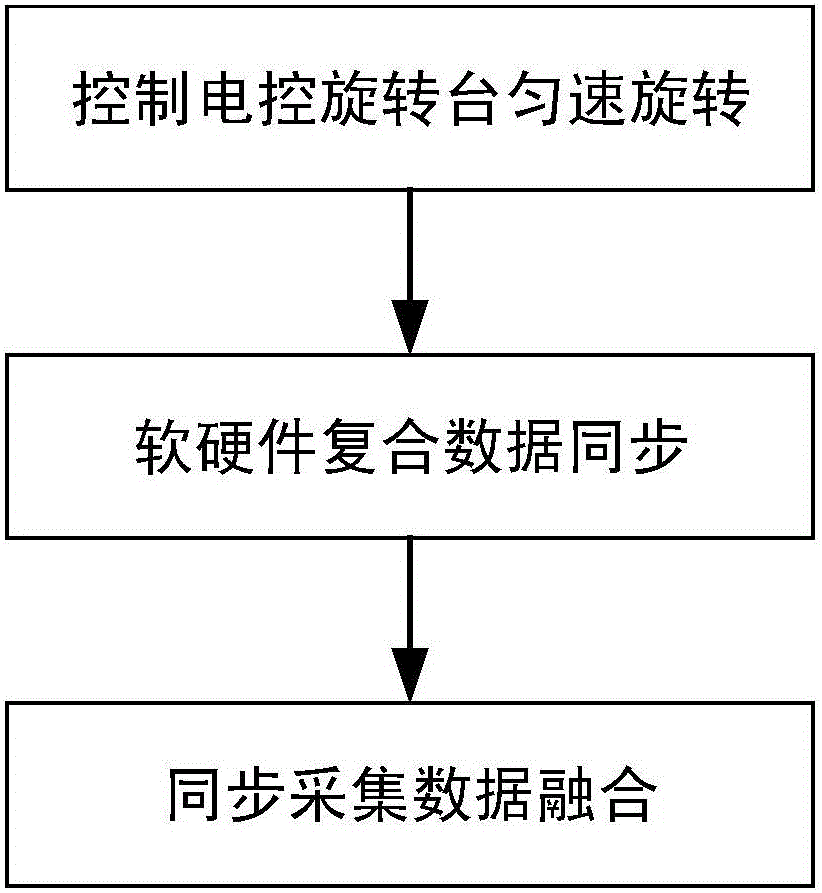

Omnidirectional three-dimensional laser color scanning system and method thereof

Active | CN105928457A | High synchronization accuracy | Improve fusion quality | Using optical means | Data synchronization | Point cloud

The invention relates to the technical field of three-dimensional color point cloud data processing and three-dimensional scene reconstruction, and provides an omnidirectional three-dimensional laser color scanning system and a method thereof. The system comprises a laser scanner, a high speed camera, an electrically controlled rotary table, a conductive slip ring, an encoder, a data acquisition module, a switch, a servo drive, a computer and an equipment support. The method comprises the steps that 1, the electrically controlled rotary table is controlled to uniformly rotate; 2, hardware and software composite data are synchronized; and 3, synchronous acquisition data are fused. According to the invention, the software and hardware compound data synchronization method is used to synchronously acquire laser point cloud, a two-dimensional image and a rotation angle in real time; the synchronization precision is high; data fusion is carried out on the laser point cloud and the two-dimensional image of each synchronization moment to acquire the three-dimensional color point cloud data of a scene in real time; data acquisition is in real time; and data fusion is carried out on the two-dimensional image of each synchronization moment, which has the advantages of enormous image information and high fusion quality.

Owner:北京汉德图像设备有限公司

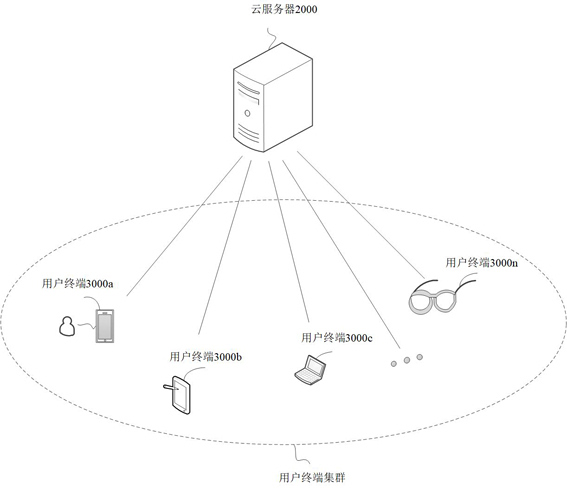

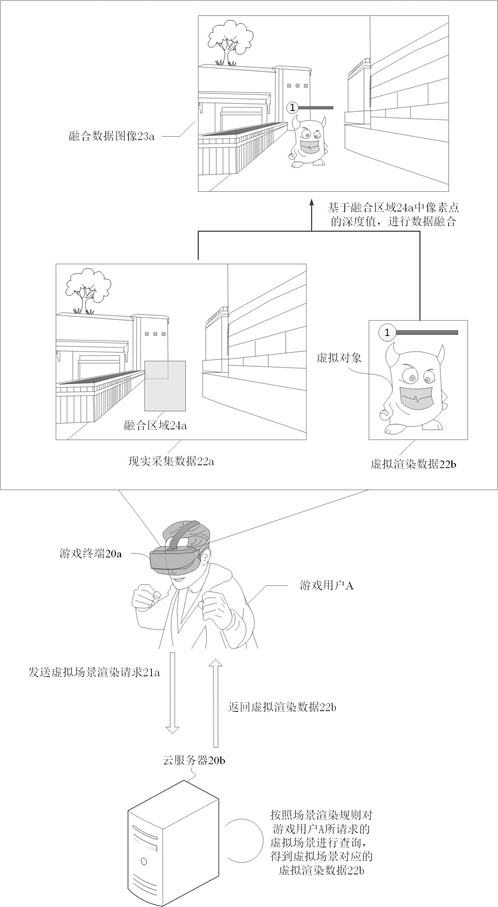

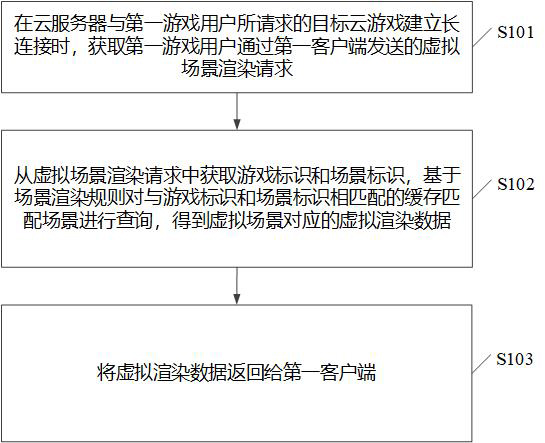

Game data processing method and device and storage medium

Active | CN112316424A | Improve fusion efficiency | Quality improvement | Video games | Image data processing | Computer graphics (images) | Engineering

The embodiment of the invention discloses a game data processing method and device and a storage medium. The method comprises the steps of: obtaining a virtual scene rendering request sent by a first game user through a first client when a cloud server builds a long connection with a target cloud game; obtaining a game identifier of the target cloud game and a scene identifier of the virtual scene from the virtual scene rendering request, and querying a cache matching scene matched with the game identifier and the scene identifier based on a scene rendering rule to obtain virtual rendering data corresponding to the virtual scene; and returning the virtual rendering data to the first client, thereby enabling the first client, when it acquires reality acquisition data in the reality environment where the first game user is located, to perform data fusion on the reality acquisition data and the virtual rendering data based on the depth value associated with the virtual scene and obtain a fused data image. By adopting the method and the device, the rendering time delay can be reduced, and the data fusion efficiency and quality can be improved in an augmented reality scene.

Owner:TENCENT TECH (SHENZHEN) CO LTD

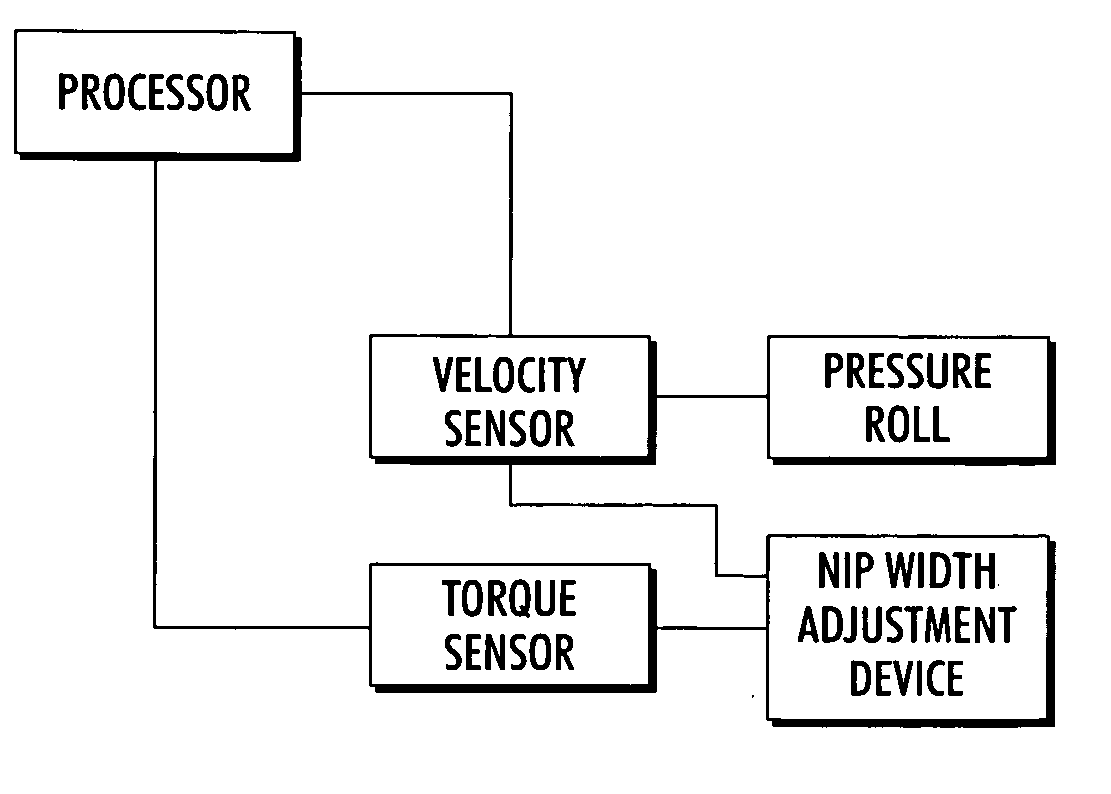

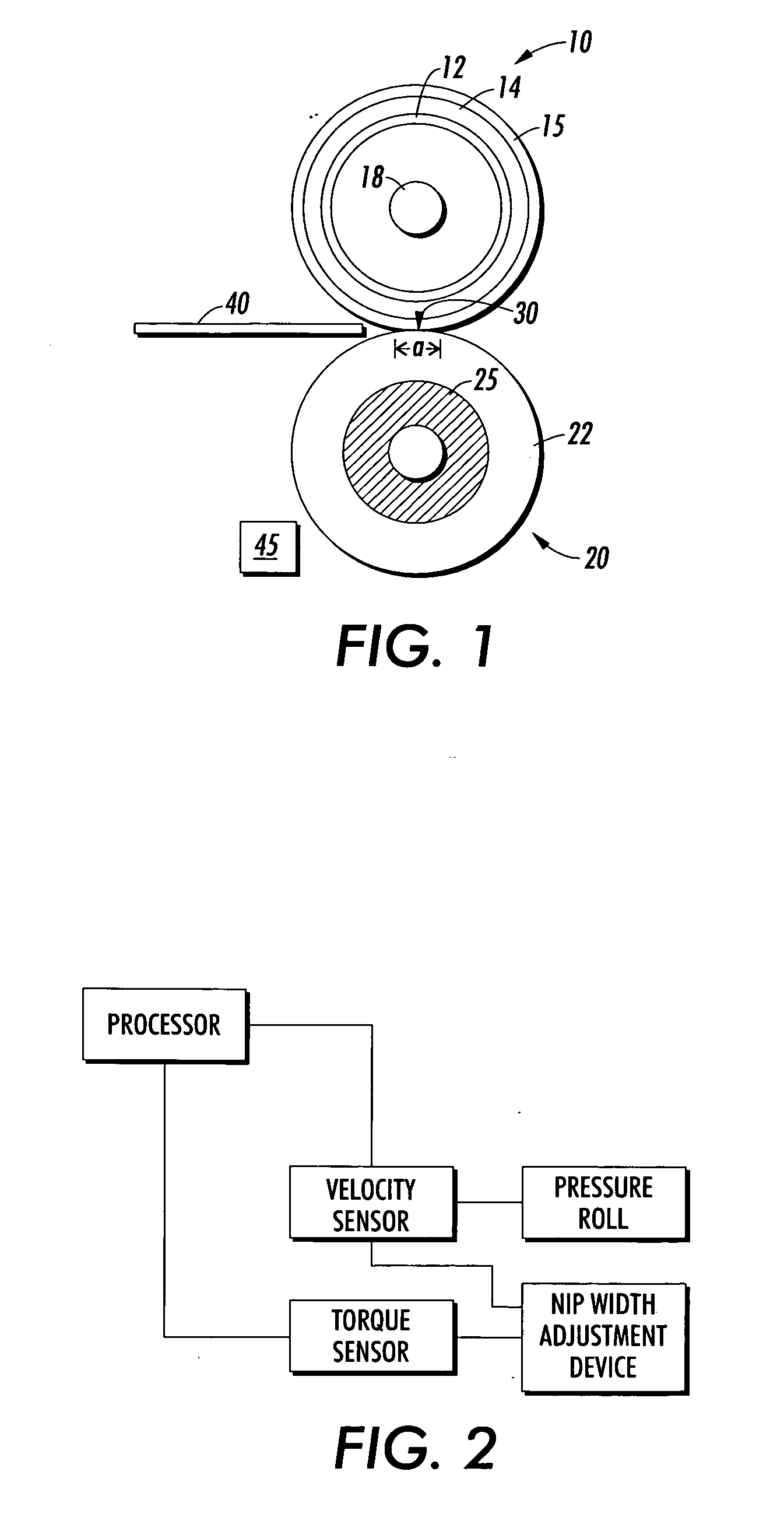

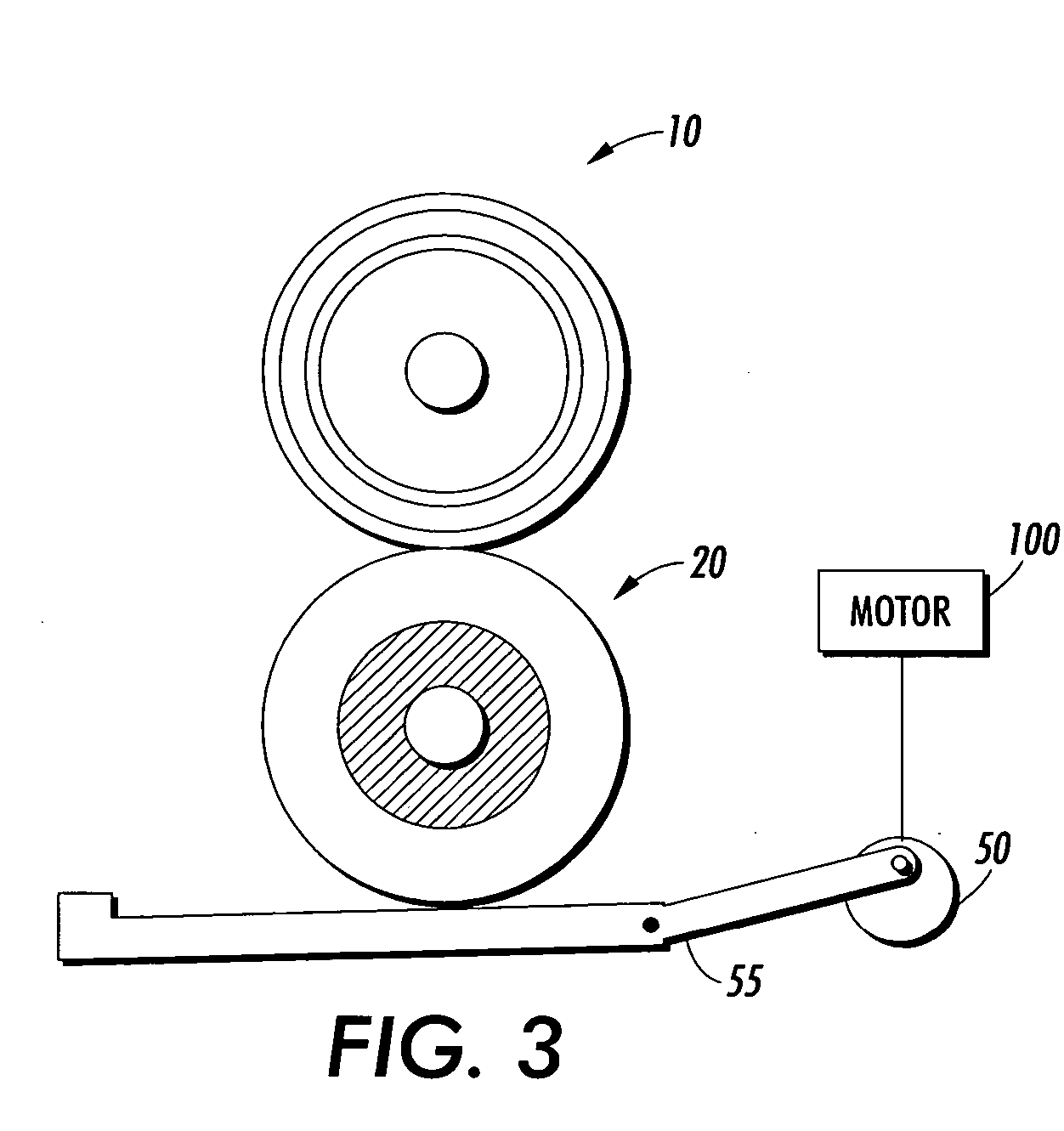

Closed loop control of nip pressure in a fuser system

Active | US20050220473A1 | Uniform nip width | Improve fusion quality | Ohmic-resistance heating | Electrographic process apparatus | Closed loop | Mechanical engineering

A fuser system of a xerographic device, including a fuser member and a pressure member in which the pressure member is made to exert pressure upon the fuser member so as to form a nip having a nip width between the fuser member and the pressure member, wherein the nip width is set to within a specification nip width range, a drive system for driving said fuser member relative to said pressure roll; a sensor for monitoring the torque of said drive system; a processor in communication with the sensor that receives torque data from the sensor, wherein the processor determines a current nip pressure uniformity from the torque data and compares the current nip pressure uniformity to the specification nip pressure uniformity range, and a nip pressure adjustment device in communication with the processor, which adjusts the current nip pressure uniformity to be within the specification nip pressure uniformity range.

Owner:XEROX CORP

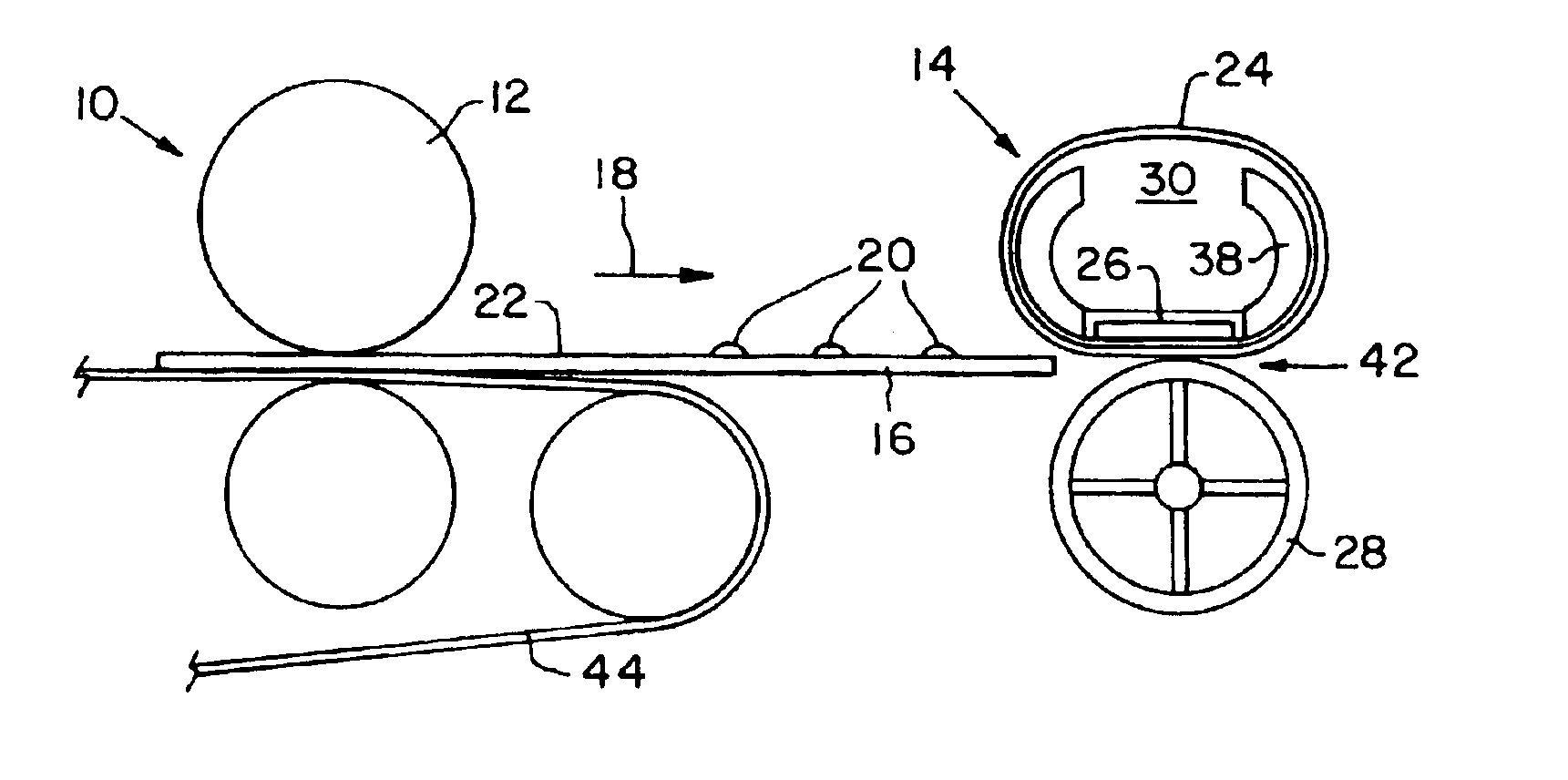

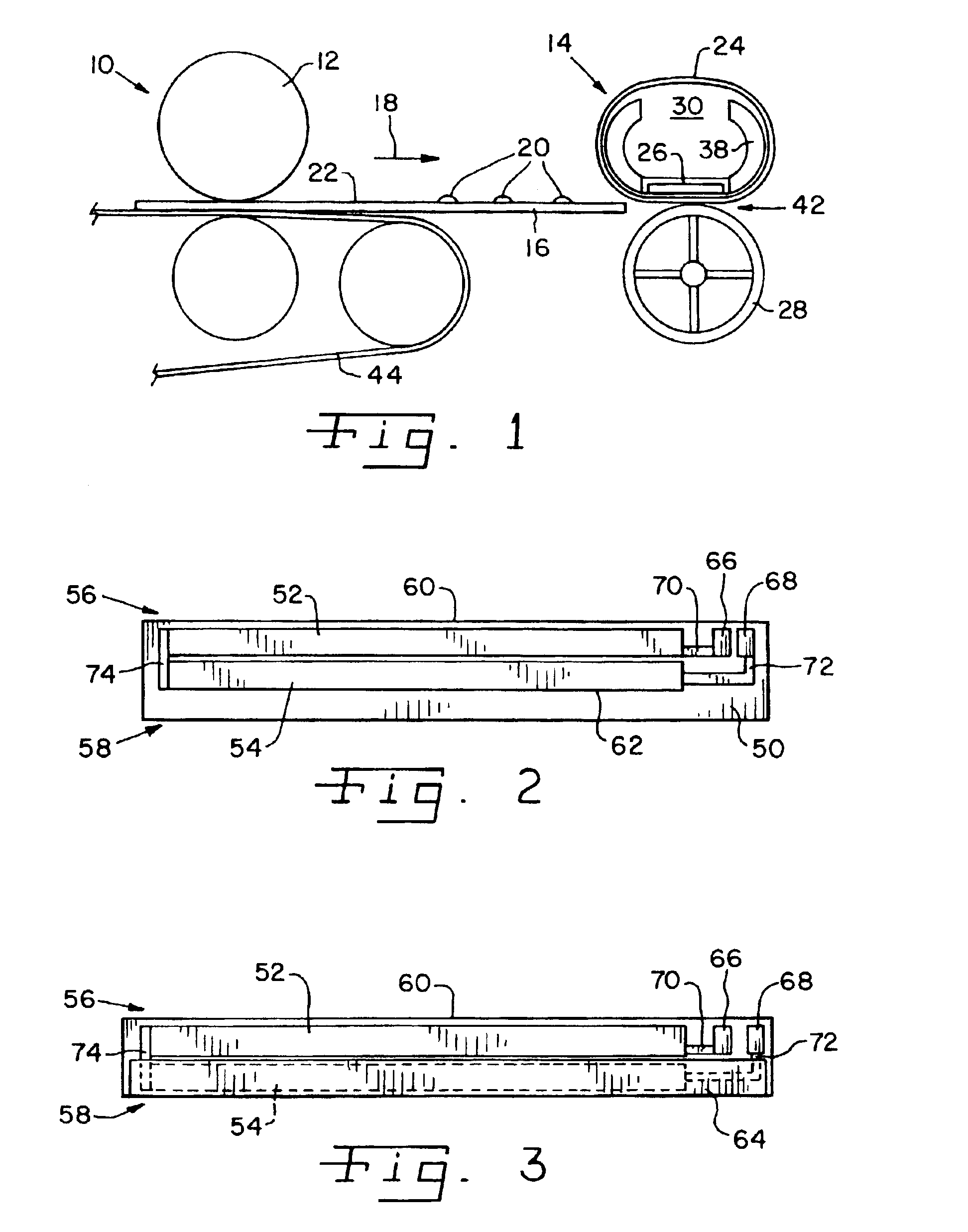

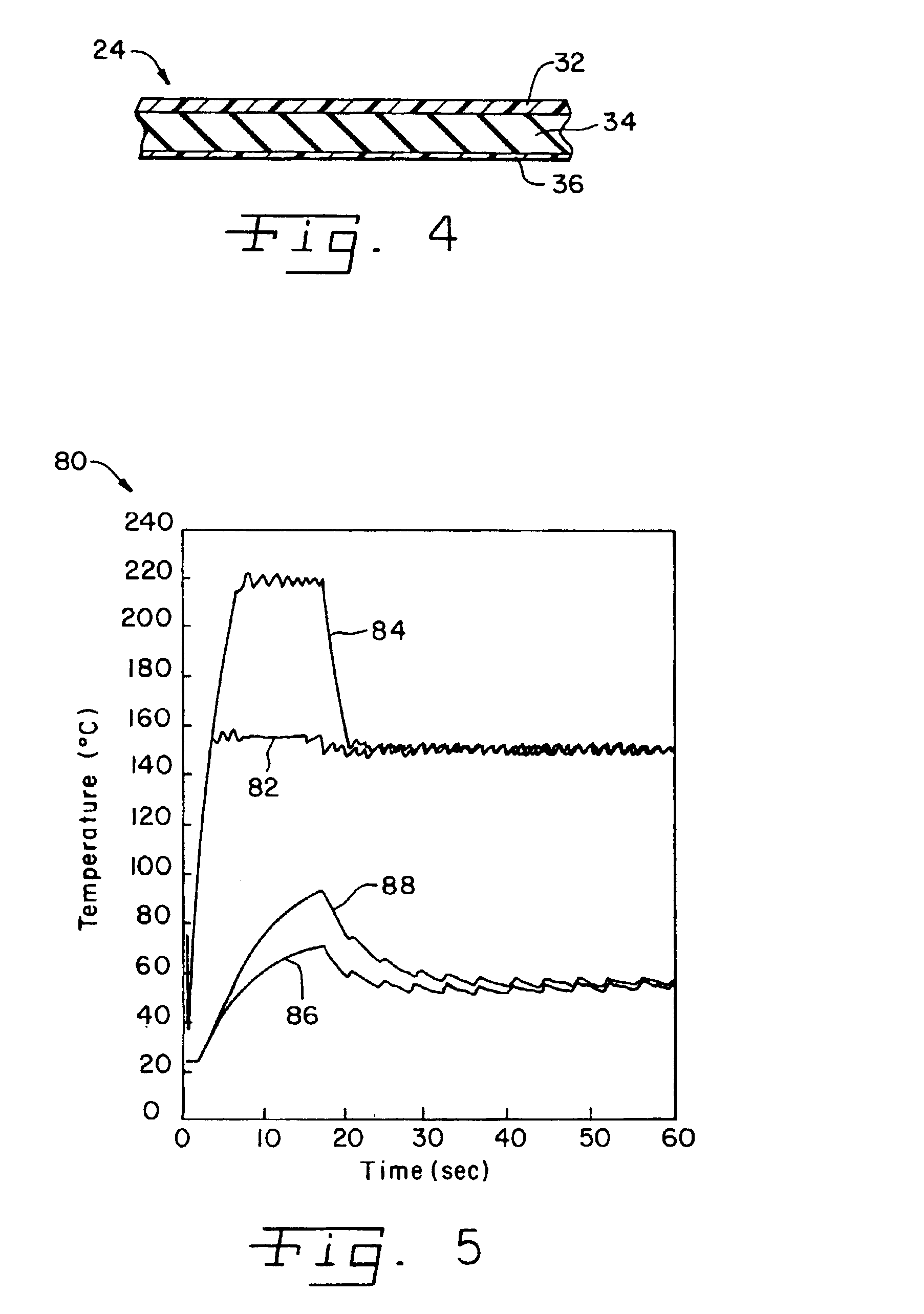

Belt fuser for a color electrophotographic printer

Inactive | US6879803B2 | Improve fusion quality | Reduced toner offset | Ohmic-resistance heating | Electrographic process apparatus | Print media | Inner loop

A fuser for fusing an image to print media in a color electrophotographic printer. The fuser includes an endless idling belt defining an inner loop, and a ceramic heater positioned in contact with the belt, within the inner loop. A pressure roller defines a nip with the belt. The belt includes a compliant layer for conforming to variations in toner pile height. The heater is configured to provide a cooler nip exit and a hotter nip entrance.

Owner:LEXMARK INT INC

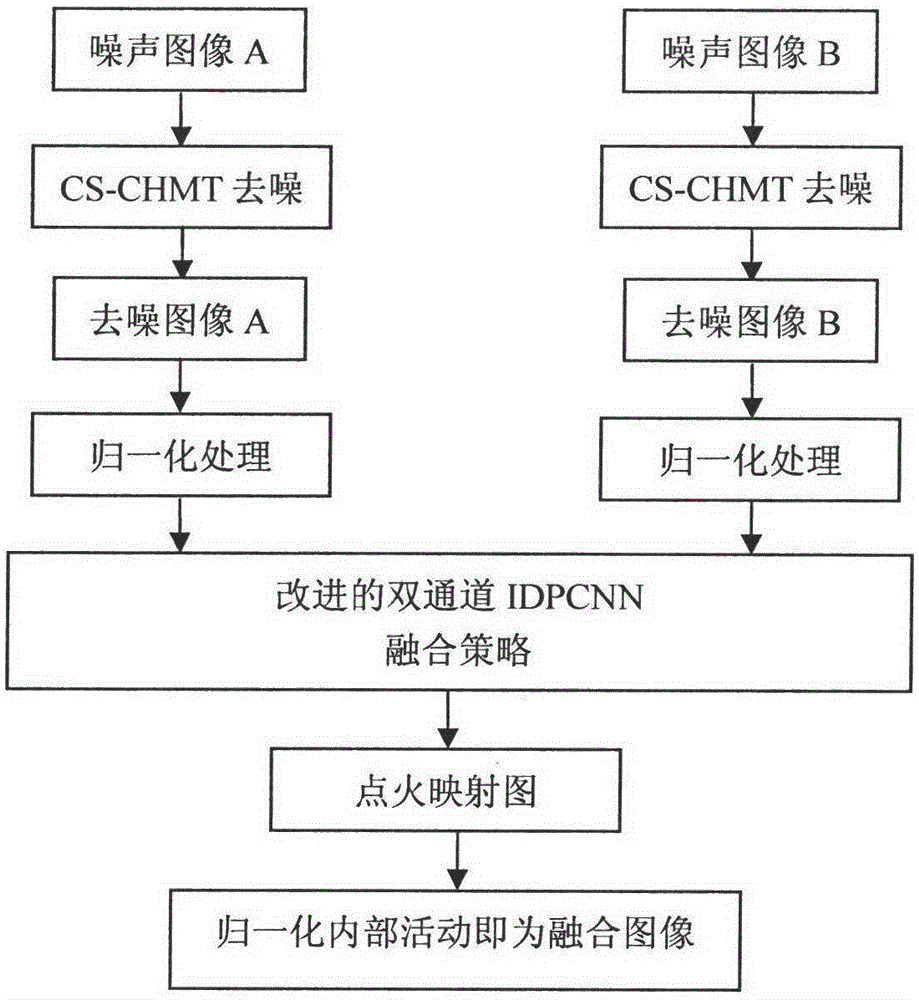

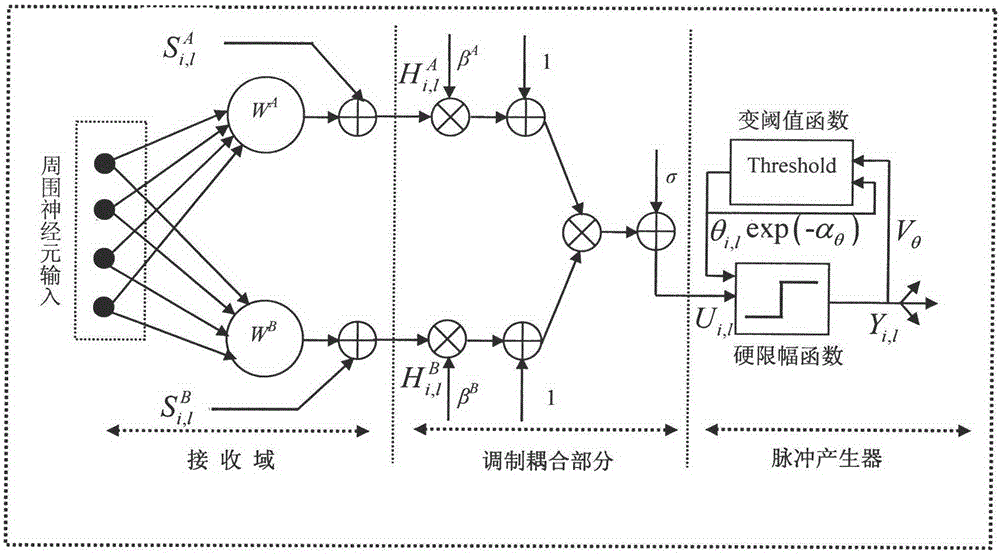

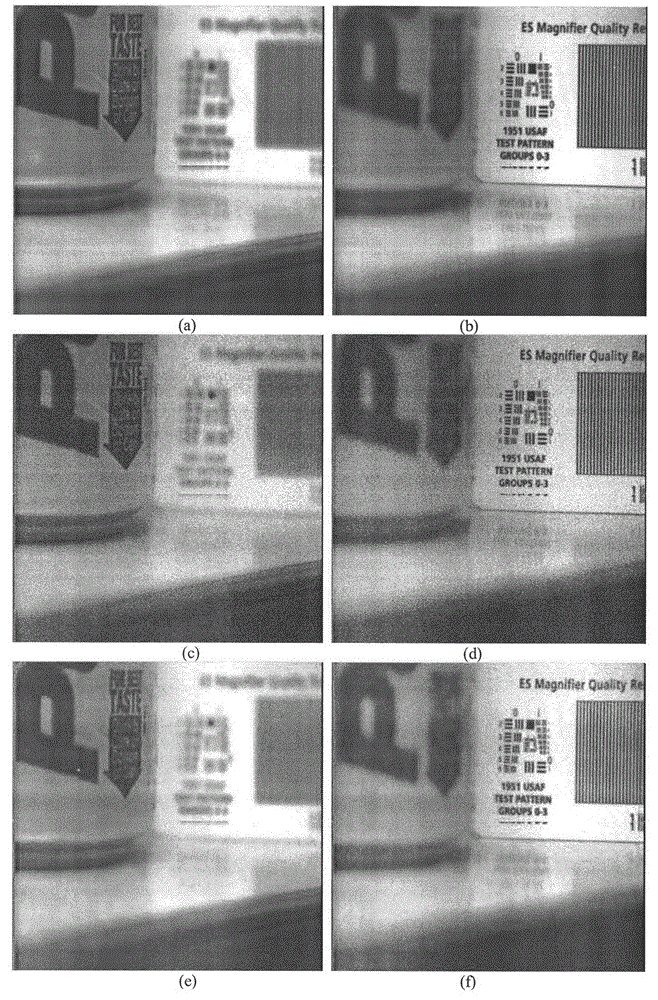

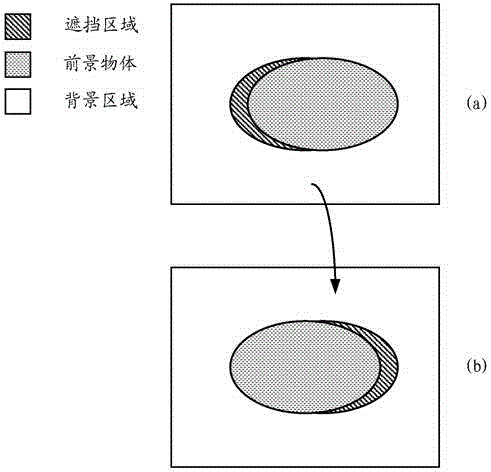

Multi-focus noise image fusion method based on CS-CHMT and IDPCNN

Inactive | CN104008536A | Suppression of distortion | Make up for the pseudo-Gibbs effect | Image enhancement | Pattern recognition | Image denoising

The invention discloses a multi-focus noise image fusion method based on a cycle spinning-Contourlet domain hidden Markov tree model (CS-CHMT) and an improved dual-channel pulse-coupled neural network (IDPCNN). First, two multi-focus images containing a certain level of Gaussian white noise are de-noised by use of the CS-CHMT model, and on this basis a fusion strategy is designed by use of the IDPCNN to obtain the final fused image. By making use of the high directional sensitivity and anisotropy of the Contourlet transform, performing image de-noising with a hidden Markov tree (HMT) model, and introducing a cycle spinning technique to effectively suppress the pseudo-Gibbs effect of the images near singular points, the invention improves the PSNR value of the de-noised images. Compared with the traditional multi-focus image fusion method, the improved IDPCNN fusion method can effectively preserve more detailed information characterizing image features, greatly improve the quality of fused images, further improve the visual effect, and offers real-time performance.

Owner:无锡金帆钻凿设备股份有限公司

Image merging method and apparatus

Active | CN106803899A | Reduce blur | Reduce ghosting | Television system details | Color television details | Parallax

The embodiments of the invention disclose an image merging method and apparatus. The method includes the following steps: acquiring two images to be merged, the two images having an overlapped region which is divided into at least two candidate merging regions; based on the optical flow vectors between the two images, determining the optical flow vector mapping difference corresponding to each of the at least two candidate merging regions; on the basis of the optical flow vector mapping difference corresponding to each of the at least two candidate merging regions, selecting a target merging region of the two images from the at least two candidate merging regions; and merging the two images in the target merging region so as to obtain a merged image of the two images. According to the embodiments of the invention, the image merging method and apparatus can reduce the blur or ghosting caused by optical parallax.

Owner:HUAWEI TECH CO LTD

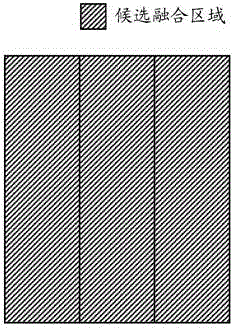

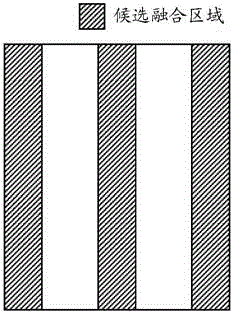

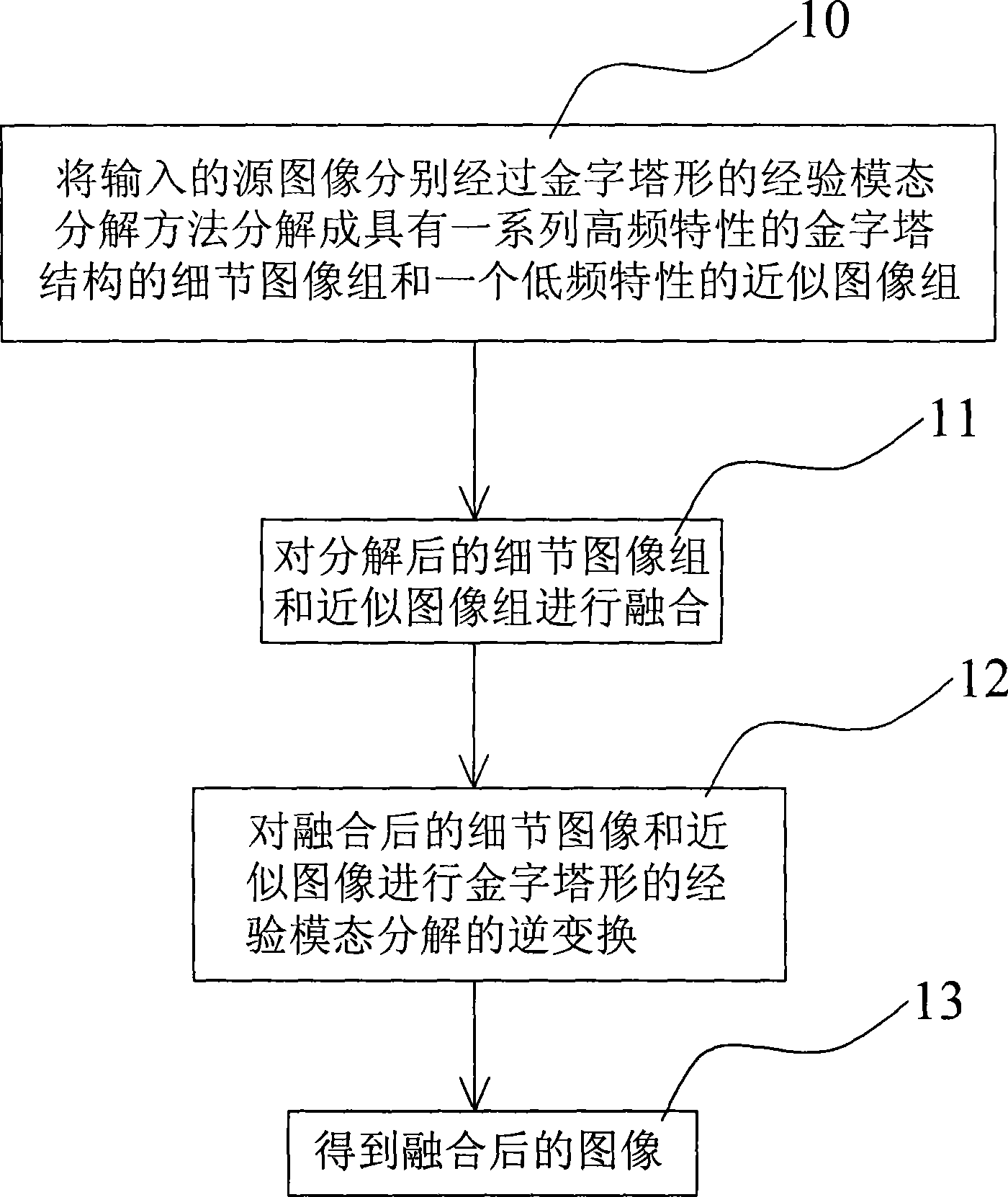

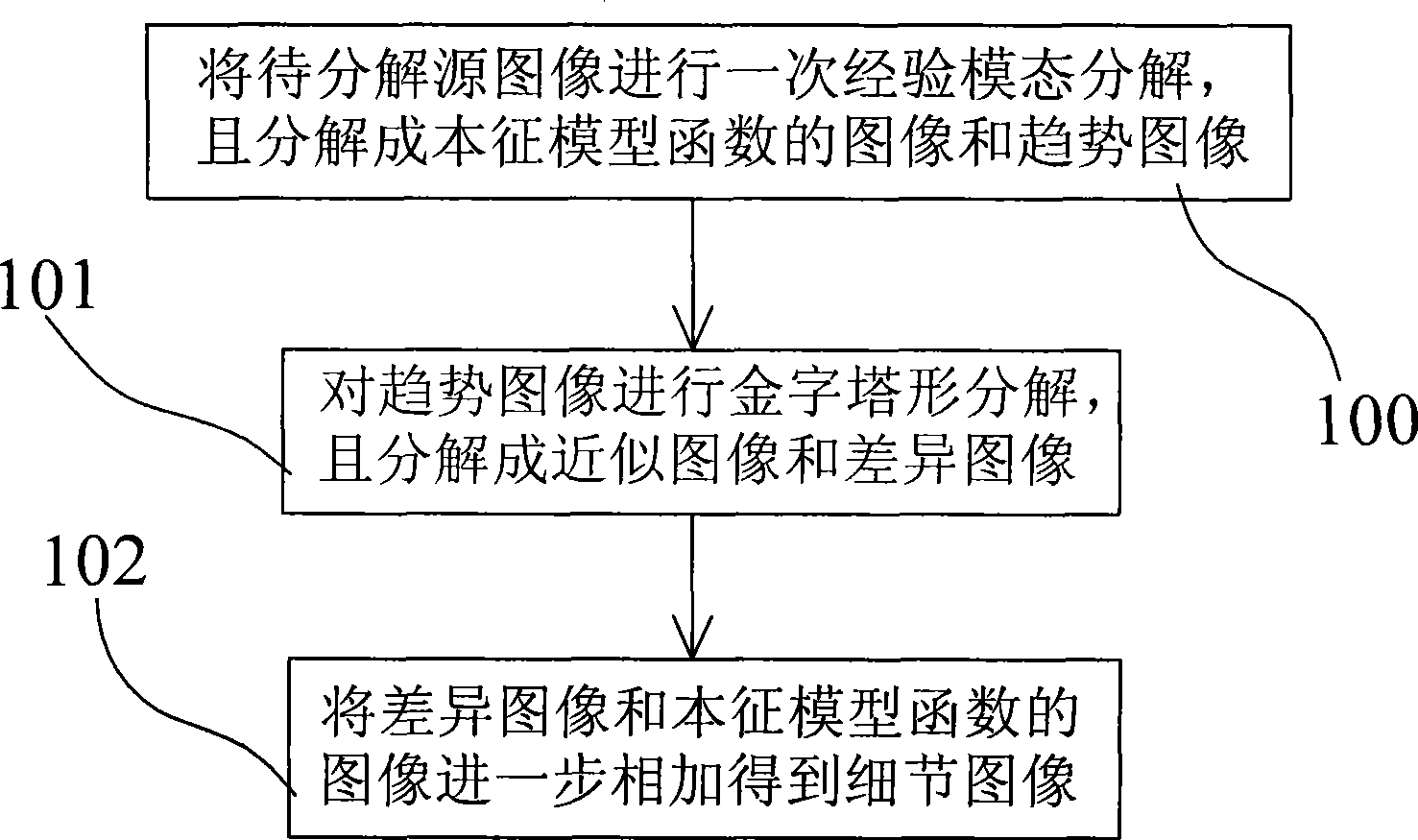

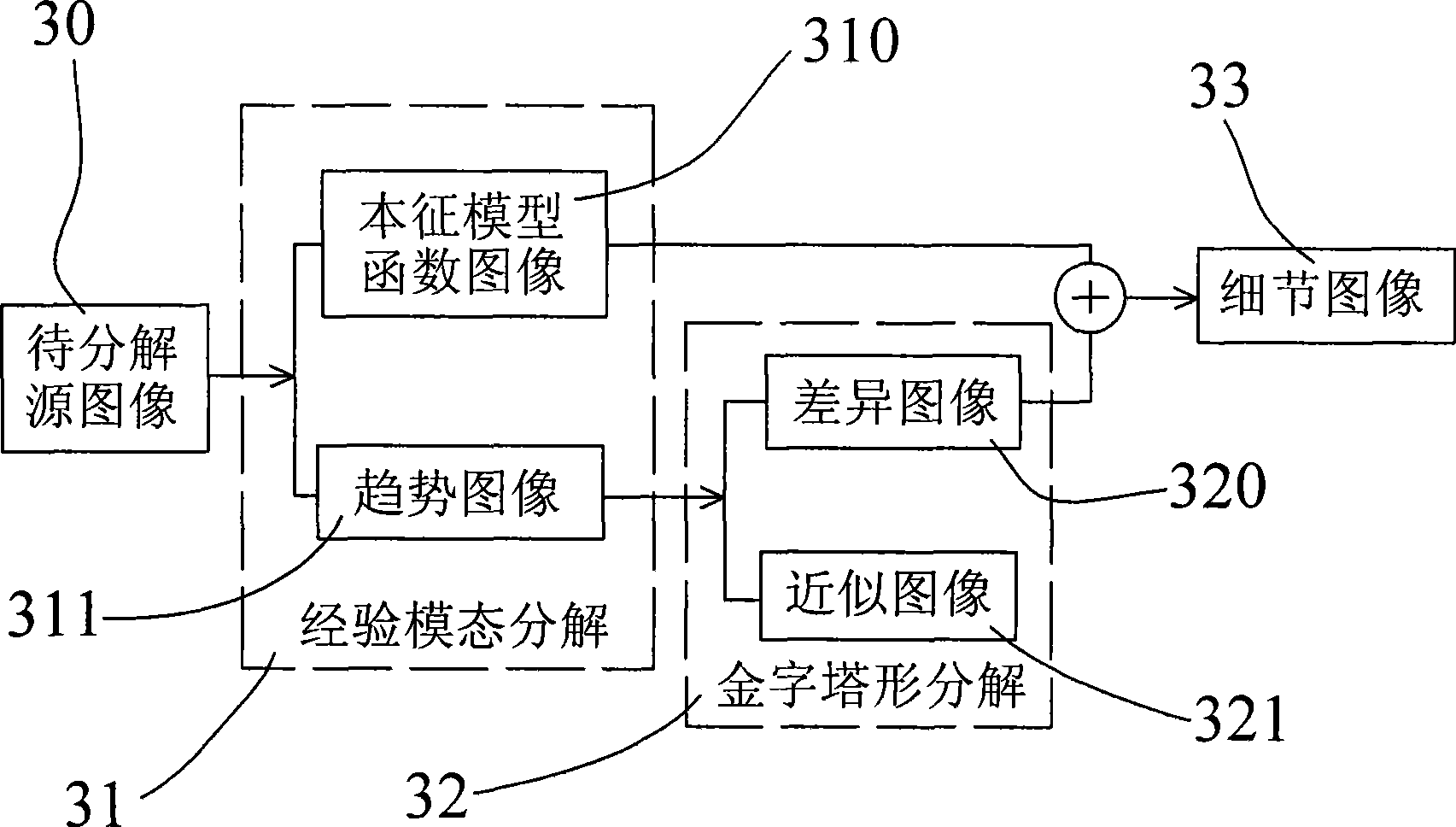

Pyramidal empirical modal analyze image merge method

Active | CN101447072A | Decomposition means low redundancy | Reduce redundancy | Image enhancement | Computer vision | Source image

The present invention discloses a pyramidal empirical modal analysis image merging method. First, the input source images are decomposed by the pyramidal empirical modal analysis method into a group of detail images with high-frequency characteristics and a pyramid structure, and a group of similar images with low-frequency characteristics. Second, the decomposed detail images and similar images are merged. Finally, the inverse transform of the pyramidal empirical modal analysis is applied to the merged detail images and similar images to obtain the merged image. The pyramidal empirical modal analysis method comprises: performing a single empirical modal analysis of the image to obtain an intrinsic mode function image and a trend image; decomposing the trend image with the pyramid to generate a similar image and a difference image; and adding the difference image to the intrinsic mode function image to obtain the detail image. The advantages of the invention lie in low redundancy, fast analysis and calculation, and high image merging quality.

Owner:TSINGHUA UNIV

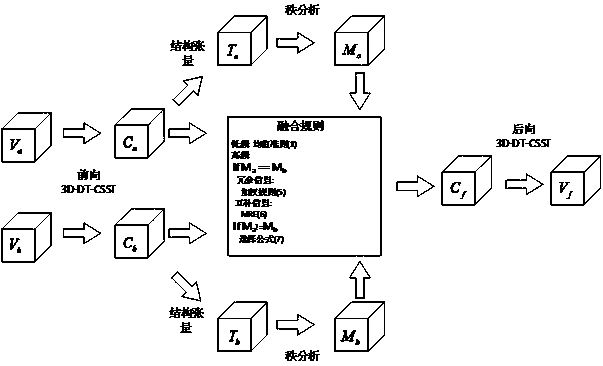

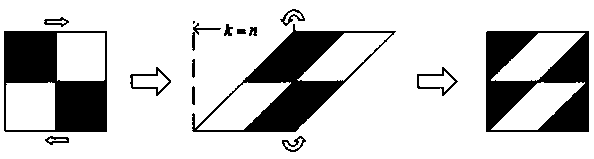

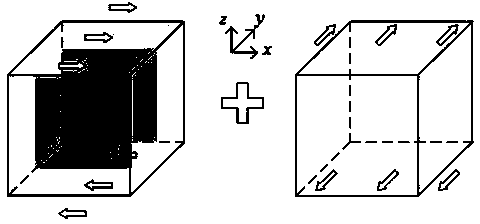

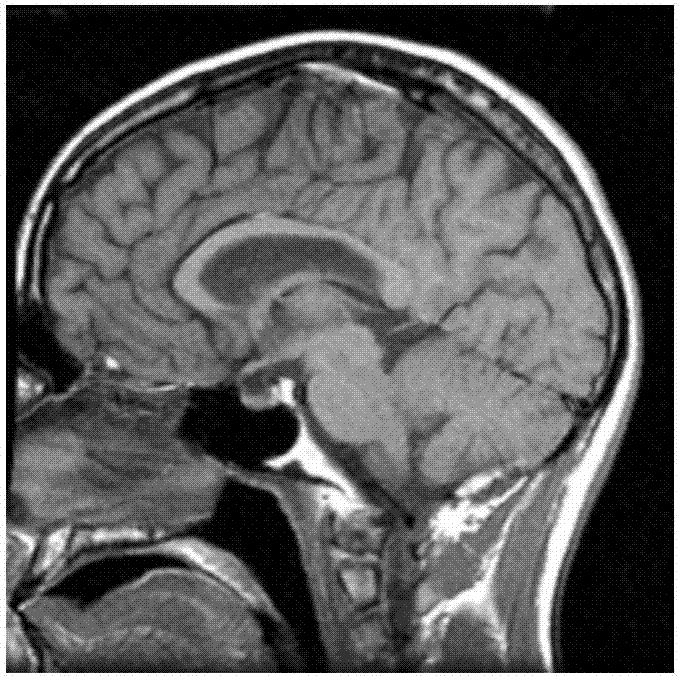

Feature-level medical image fusion method based on 3D (three dimension) shearlet transform

Active | CN103985109A | Accurate Direction Information | Improve fusion quality | Image enhancement | Geometric image transformation | Imaging processing | Dual tree

The invention discloses a feature-level medical image fusion method based on 3D (three dimension) shearlet transform, belonging to the technical field of medical image processing and application. The feature-level medical image fusion method mainly comprises the following steps: 1, performing 3D-D-CSST (three dimensional-discrete-compact shearlet transform) or 3D-DT-CSST (three dimensional dual-tree compact shearlet transform) on two images to obtain transformation coefficient images Ca and Cb; 2, performing image fusion on transformation coefficients to obtain a fusion coefficient Cf; and 3, performing DWT or DTCWT inverse transformation, performing backward shear transformation on the transformed image to obtain a fusion image Vf. According to the feature-level medical image fusion method, the problems that the quality of a fused image is relatively low and information which is partially important and is not remarkable is easily ignored are solved.

Owner:UNIV OF ELECTRONIC SCI & TECH OF CHINA

Object and scene fusion method based on IHS (Intensity, Hue, Saturation) transform

Inactive | CN102436666A | Lower conditions | Easy to implement | Image analysis | 2D-image generation | Video processing | Hue

The invention discloses an object and scene fusion method based on IHS (Intensity, Hue, Saturation) transform. The method comprises the following steps: firstly using the IHS transform and the intensity fusion to form a light mask of an image; and then achieving the aim of the fusion of the object and the scene by recovering object details in the light mask. The invention provides three schemes by respectively using a standard IHS transform technology, an SFIM (Smoothing Filter-based Intensity Modulation) technology and a wavelet technology as an intensity fusion tool. In the method, a to-be-fused image is free from rectification, less fusion conditions are needed, the method can be realized easily, the fusion of the object and the scene can be realized by only considering the intensity fusion; the method has fast fusion speed and great potential in the video processing, and can be used for maximally reducing the color distortion generated in fusion process; and meanwhile, the method has wider use since different intensity fusion algorithms can be implanted to form different fusion schemes.
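As a rough illustration of intensity-only fusion in an IHS-style model, the sketch below uses the additive form of intensity substitution: with intensity defined as the mean of R, G and B, adding the same offset to all three channels changes intensity while leaving hue and saturation of the linear IHS model unchanged. It does not reproduce the patent's light mask or detail recovery steps, and the weighted average used as the intensity fusion rule, the function names and the `weight` parameter are assumptions.

```python
from typing import List, Tuple

Pixel = Tuple[float, float, float]  # (R, G, B)


def intensity(rgb: Pixel) -> float:
    """Intensity of the linear IHS model: the mean of the three channels."""
    r, g, b = rgb
    return (r + g + b) / 3.0


def substitute_intensity(rgb: Pixel, new_intensity: float) -> Pixel:
    """Shift all channels equally so the pixel takes on `new_intensity`."""
    delta = new_intensity - intensity(rgb)
    r, g, b = rgb
    return (r + delta, g + delta, b + delta)


def fuse_intensity(object_pixels: List[Pixel],
                   scene_pixels: List[Pixel],
                   weight: float = 0.5) -> List[Pixel]:
    """Blend object and scene intensity, keeping the object's hue and saturation.

    `weight` is the share of the scene intensity in the blend (assumed rule).
    Results are not clipped to a display range here.
    """
    fused = []
    for obj, scene in zip(object_pixels, scene_pixels):
        target = (1.0 - weight) * intensity(obj) + weight * intensity(scene)
        fused.append(substitute_intensity(obj, target))
    return fused
```

Because only one scalar per pixel is modified, a different intensity fusion rule (the abstract names SFIM and wavelet variants) could be swapped in where the weighted average is computed.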

Owner:SHANGHAI UNIV

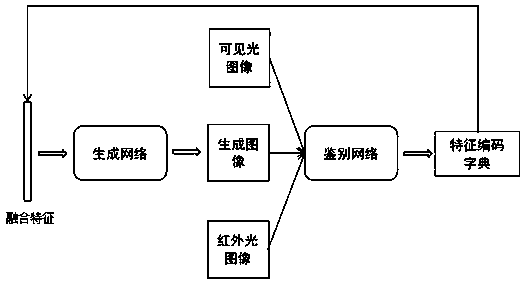

dual-light image fusion model based on a depth convolution antagonism generation network DCGAN

Pending | CN109360146A | Avoid high demand | Double Fusion Guaranteed | Geometric image transformation | Character and pattern recognition | Feature extraction | Network generation

The invention discloses a dual-light image fusion model based on a depth convolution antagonism generation network DCGAN. The model extracts image features of the same target under visible light and infrared light through a depth discrimination convolution network, and sparsely encodes the two sets of image features according to the same feature dictionary. The coded features are then fused and used as the input data of the depth convolution generation network, so that the generation network generates the fusion image. Finally, the model is trained using the error between the fusion feature and the coded fusion feature to generate the dual-light fusion image. The model uses a deep learning network to extract and encode the features of visible and infrared images, and the feature points of the two images can be automatically matched by fusing the coded features. The model of the invention can be called at any time after training, and a dual-light image with high fusion quality can be automatically generated by inputting a visible light image and an infrared light image at the same time.

Owner:STATE GRID GANSU ELECTRIC POWER CORP +1

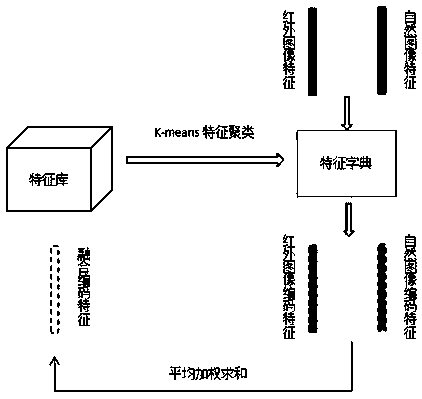

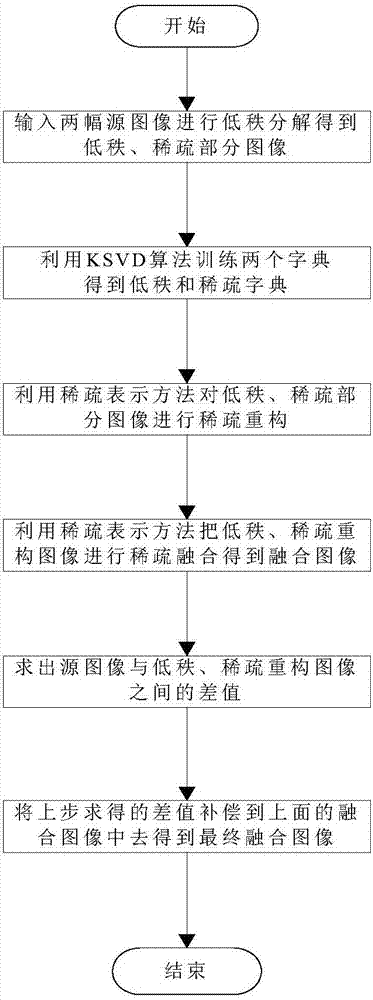

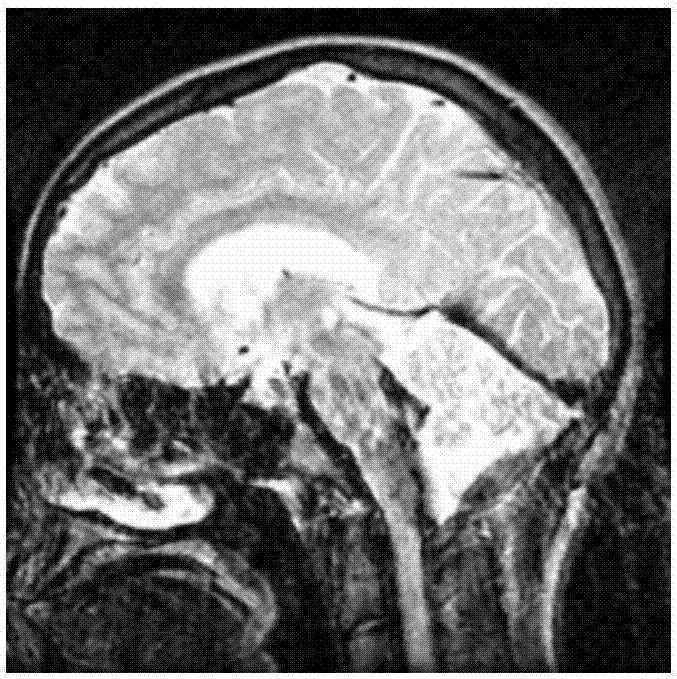

Multi-mode medical image fusion method based on low-rank decomposition and sparse representation

Active | CN107292858A | Improve fusion quality | Easy to handle | Image enhancement | Image analysis | Singular value decomposition | Pattern recognition

The present invention discloses a multi-mode medical image fusion method based on low-rank decomposition and sparse representation. The method includes the following steps that: two different multi-mode medical images to be fused are subjected to low-rank decomposition, so that a low-rank part image and a sparse part image are obtained respectively; a KSVD (K-means singular value decomposition) algorithm is adopted to train a selected non-medical image set so that a low-rank dictionary can be obtained, and the KSVD (K-means singular value decomposition) algorithm is utilized to perform low-rank decomposition on the selected non-medical image set, so that a sparse part image set can be obtained, and the sparse part image set is trained, so that a sparse dictionary can be obtained; a sparse representation method is adopted to sparsely reconstruct the low-rank part image and the sparse part image, so that a low-rank reconstructed image and a sparse reconstructed image can be obtained; the sparse representation method is adopted to sparsely fuse the low-rank reconstructed image and the sparse reconstructed image, so that a fused image can be obtained; the difference values of the two multi-mode medical images and the sparse reconstructed image and the low-rank reconstructed image are calculated; and the difference values are added into the fused image, so that a final sparse fused image can be obtained. The subjective and objective evaluation indexes of the multi-mode medical image fusion method of the invention are better than the indexes of a traditional fusion method.

Owner:云南联合视觉科技有限公司

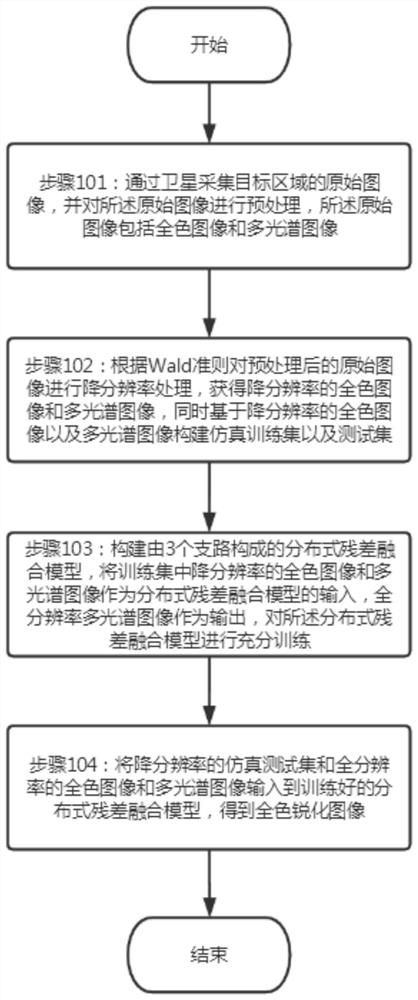

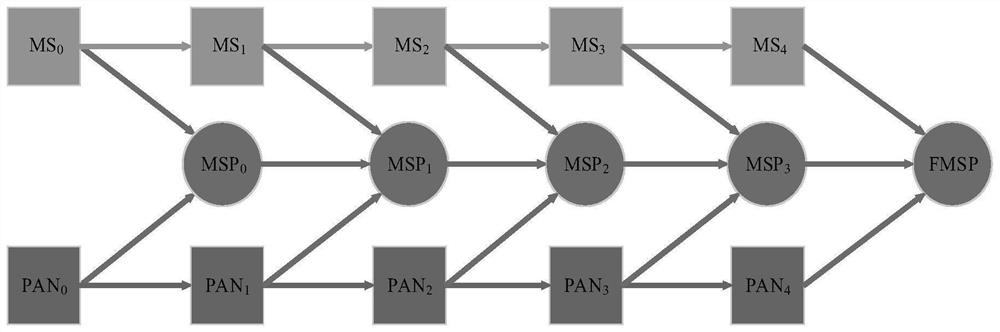

Remote sensing panchromatic and multispectral image distributed fusion method based on residual network

Active | CN113222835A | Improve spatial resolution | Improve fusion quality | Image enhancement | Image analysis | High spatial resolution | Image resolution

The invention provides a remote sensing panchromatic and multispectral image distributed fusion method based on a residual network, which mainly solves the problems of spectrum distortion, low spatial resolution and low fusion quality in the prior art, and comprises the following steps: collecting an original image of a target area through a satellite, and preprocessing the original image; constructing a simulation training set and a test set by using the preprocessed panchromatic image and the preprocessed multispectral image according to a Wald criterion, constructing a residual network-based distributed fusion model consisting of three branches, and fully training a network by taking the panchromatic image and the multispectral image of the training set as input of the network; and inputting panchromatic and multispectral images to be fused into the trained fusion network to obtain a fused image. According to the method, the features of different scales of different branches are used for fusion, more spectral information and spatial information are reserved, the method has better performance in the aspects of improving the spatial resolution and reserving the spectral information, and the fusion quality is improved.
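The construction of a simulated training set under the Wald criterion can be sketched as follows: both the panchromatic and the multispectral images are degraded by the resolution ratio, and the original multispectral image serves as the reference the network should reproduce. This is a minimal sketch, assuming plain block averaging as the degradation step (practical pipelines typically use a sensor-matched low-pass filter before decimation); the function names and dictionary keys are hypothetical.

```python
from typing import Dict, List

Band = List[List[float]]  # one image band as rows of pixel values


def downsample(img: Band, ratio: int) -> Band:
    """Reduce resolution by averaging non-overlapping ratio x ratio blocks."""
    rows, cols = len(img) // ratio, len(img[0]) // ratio
    out = []
    for r in range(rows):
        row = []
        for c in range(cols):
            block = [img[r * ratio + i][c * ratio + j]
                     for i in range(ratio) for j in range(ratio)]
            row.append(sum(block) / len(block))
        out.append(row)
    return out


def wald_training_sample(pan: Band, ms_bands: List[Band], ratio: int) -> Dict[str, object]:
    """Build one reduced-resolution training sample.

    Inputs are the degraded panchromatic and multispectral images; the
    original multispectral bands are kept as the training reference.
    """
    return {
        "pan_input": downsample(pan, ratio),
        "ms_input": [downsample(band, ratio) for band in ms_bands],
        "reference": ms_bands,
    }
```

After degradation, the reduced panchromatic image has the same size as the original multispectral image, so a network trained on these pairs can later be applied to the full-resolution inputs.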

Owner:HAINAN UNIVERSITY

SAR image fusion method based on multiple-dimension geometric analysis

Active | CN101441766A | Improve fusion quality | Little detail | Image enhancement | Imaging processing | Multiscale geometric analysis

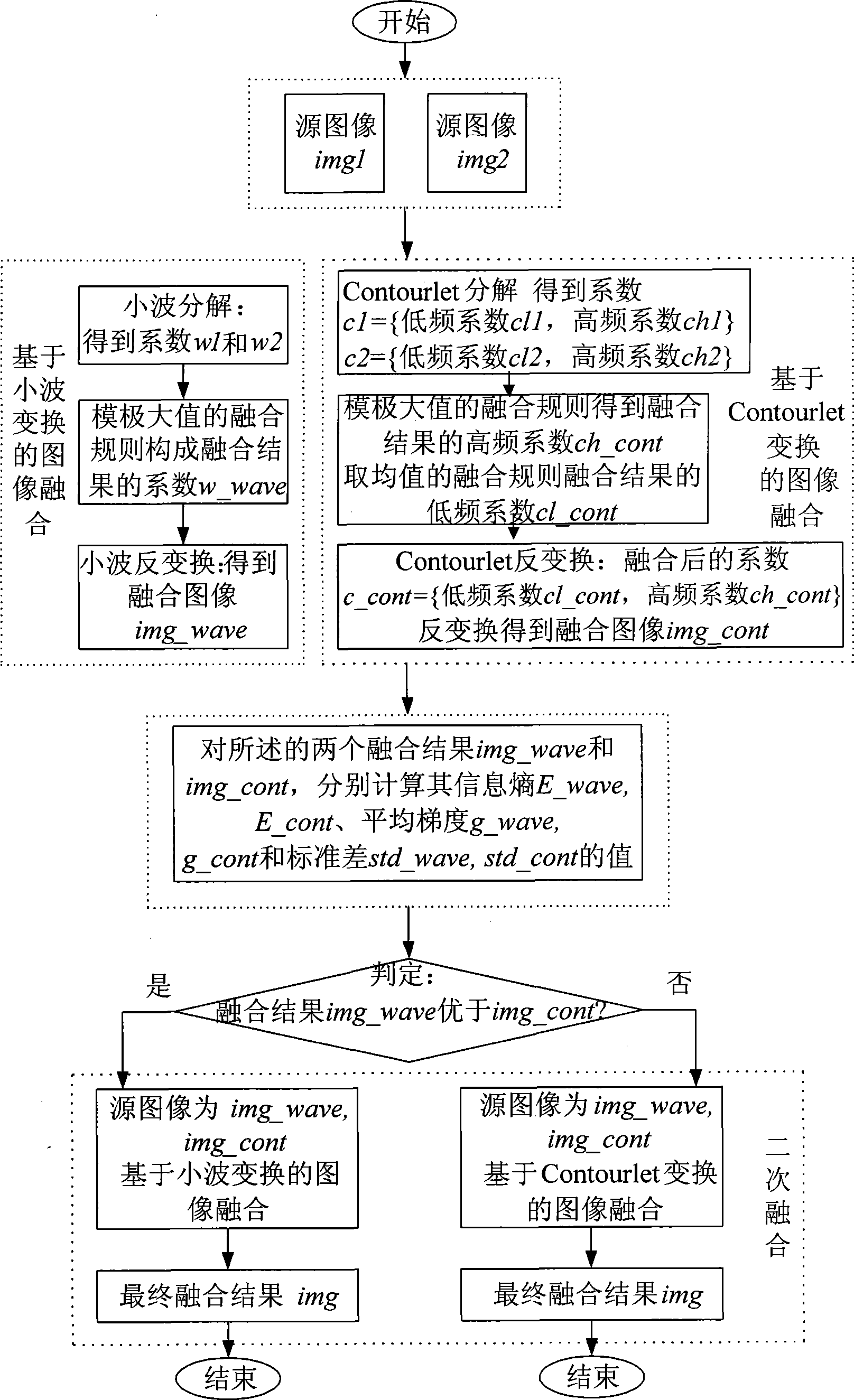

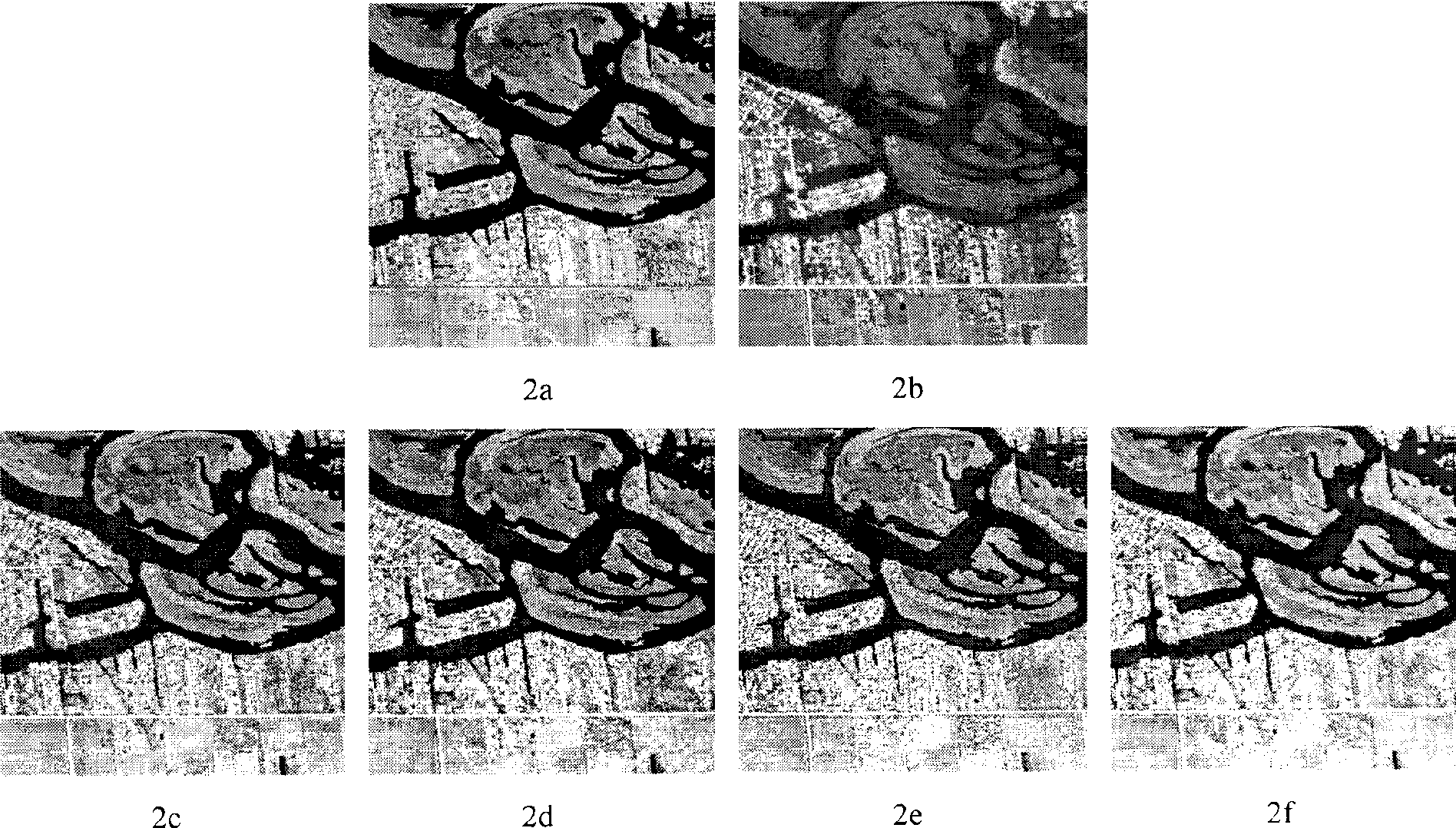

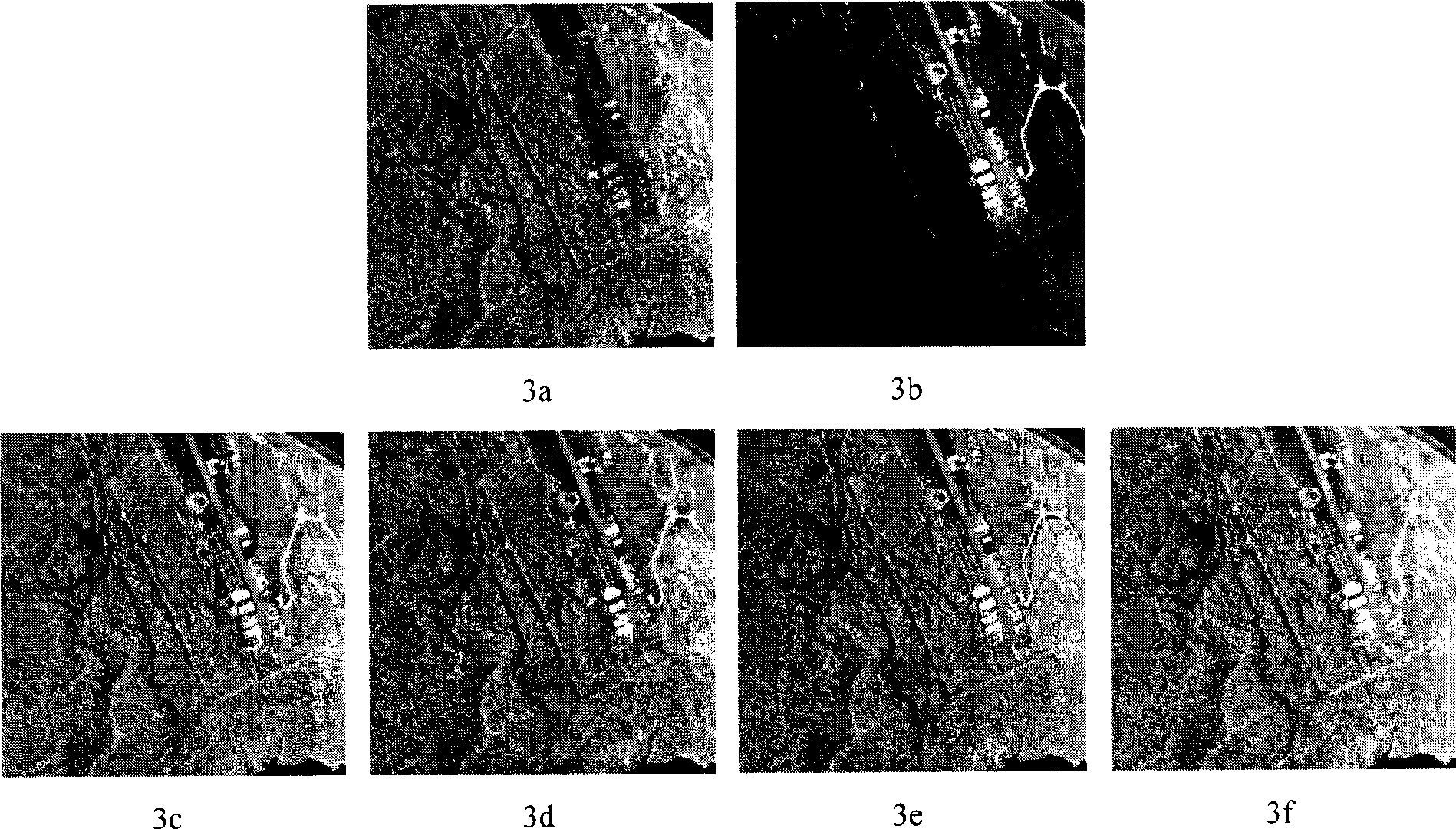

The invention discloses an SAR image fusion method based on a multi-scale geometric analysis tool and belongs to the field of image processing technology. The method mainly solves the problem that prior fusion methods produce fuzzy and massive detail components. The method is realized through the following steps: (1) the two source images are fused based on wavelet transformation to obtain a fusion result img_wave; (2) the two source images are fused based on Contourlet conversion to obtain a fusion result img_cont; (3) the information entropy, average gradient and standard deviation of the two fusion results img_wave and img_cont are calculated respectively; (4) the information entropy, average gradient and standard deviation of the wavelet-based fusion result img_wave are compared with those of the Contourlet-based fusion result img_cont so as to judge the quality of the fusion results; and (5) according to the judgment result, secondary fusion is selected. The SAR image fusion method improves the information content of the fused image, ensures the definition of the image and can be used for fusion of SAR images, natural images and medical images.
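Steps (3) and (4) can be sketched with common textbook definitions of the three metrics. The abstract does not give the exact formulas or the comparison rule, so the definitions below (histogram entropy in bits, mean of the root-mean-square of horizontal and vertical differences, population standard deviation) and the majority vote in `better_result` are assumptions; the names img_wave and img_cont follow the abstract.

```python
import math
from collections import Counter
from typing import List

Image = List[List[float]]


def information_entropy(img: Image) -> float:
    """Shannon entropy (bits) of the grey-level histogram."""
    pixels = [p for row in img for p in row]
    n = len(pixels)
    return -sum((k / n) * math.log2(k / n) for k in Counter(pixels).values())


def average_gradient(img: Image) -> float:
    """Mean of sqrt((dx^2 + dy^2) / 2) over all pixels with a right and lower neighbour."""
    rows, cols = len(img), len(img[0])
    total = 0.0
    for r in range(rows - 1):
        for c in range(cols - 1):
            dx = img[r][c + 1] - img[r][c]
            dy = img[r + 1][c] - img[r][c]
            total += math.sqrt((dx * dx + dy * dy) / 2.0)
    return total / ((rows - 1) * (cols - 1))


def standard_deviation(img: Image) -> float:
    """Population standard deviation of the pixel values."""
    pixels = [p for row in img for p in row]
    mean = sum(pixels) / len(pixels)
    return math.sqrt(sum((p - mean) ** 2 for p in pixels) / len(pixels))


def better_result(img_wave: Image, img_cont: Image) -> str:
    """Majority vote over the three metrics (assumed rule); ties favour img_wave."""
    metrics = (information_entropy, average_gradient, standard_deviation)
    wave_wins = sum(1 for m in metrics if m(img_wave) > m(img_cont))
    cont_wins = sum(1 for m in metrics if m(img_cont) > m(img_wave))
    return "img_wave" if wave_wins >= cont_wins else "img_cont"
```

All three metrics are no-reference measures, so they can be computed on each fusion result without a ground-truth image, which is what makes the comparison in step (4) possible.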

Owner:XIDIAN UNIV

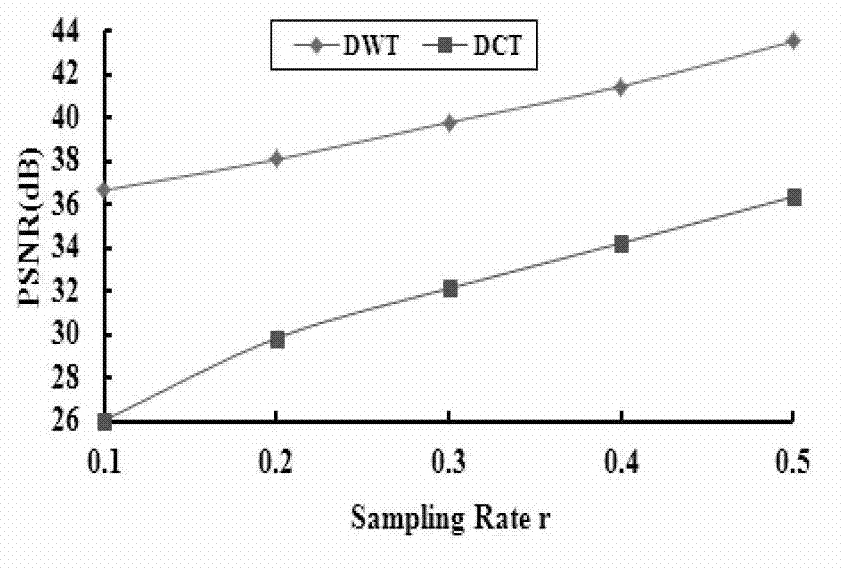

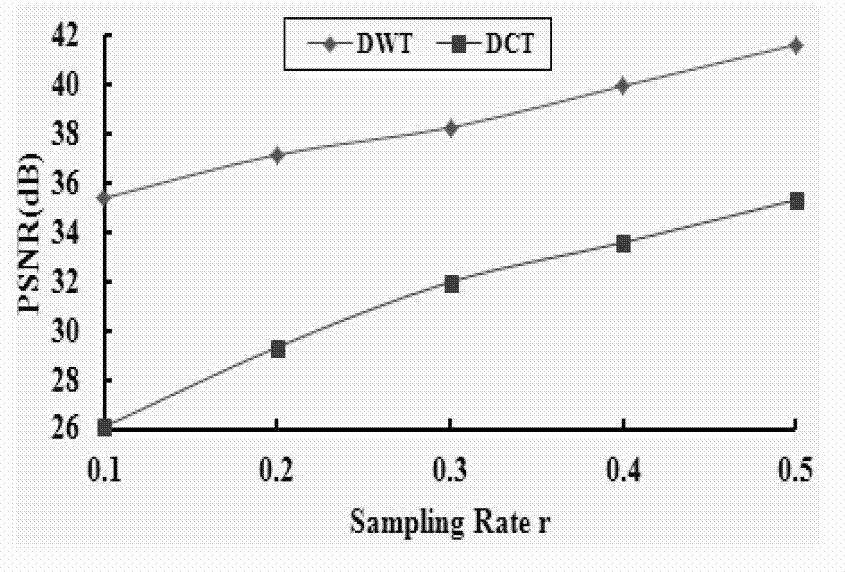

Method and device for multi-focus image fusion based on compressed sensing

Inactive | CN103164850A | Improve fusion quality | Fast convergence | Image enhancement | Signal-to-noise ratio (imaging) | Image fusion

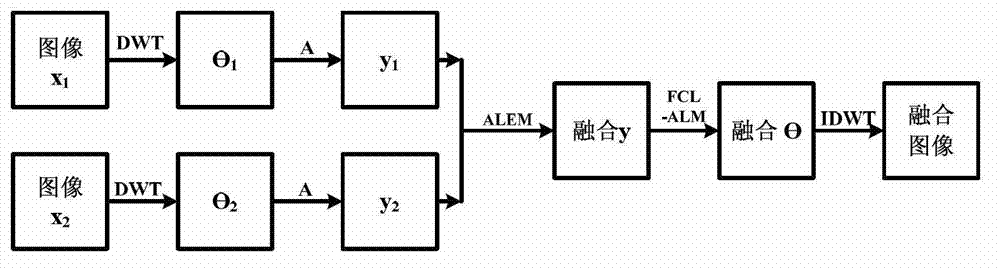

The invention discloses a method for multi-focus image fusion based on compressed sensing, and belongs to the technical field of image signal processing. The method includes the steps: firstly, respectively sampling two images to be fused in a compressed manner to obtain observation vectors of the two images; secondly, fusing the observation vectors of the two images to obtain fusion observation vectors; and thirdly, reconstructing a fused image of the two images to be fused by the aid of the fusion observation vectors. The observation vectors are fused by the aid of ALEM (adaptive local energy measure) fusion rules, and the fused image is reconstructed by the aid of an FCLALM (fast continuous linear augmentation Lagrangian method). The invention further discloses a device for multi-focus image fusion based on compressed sensing. Compared with the prior art, the method and the device have the advantages that higher image fusion quality can be achieved, and signal-to-noise ratio and convergence speed are higher.

Owner:NANJING UNIV OF POSTS & TELECOMM

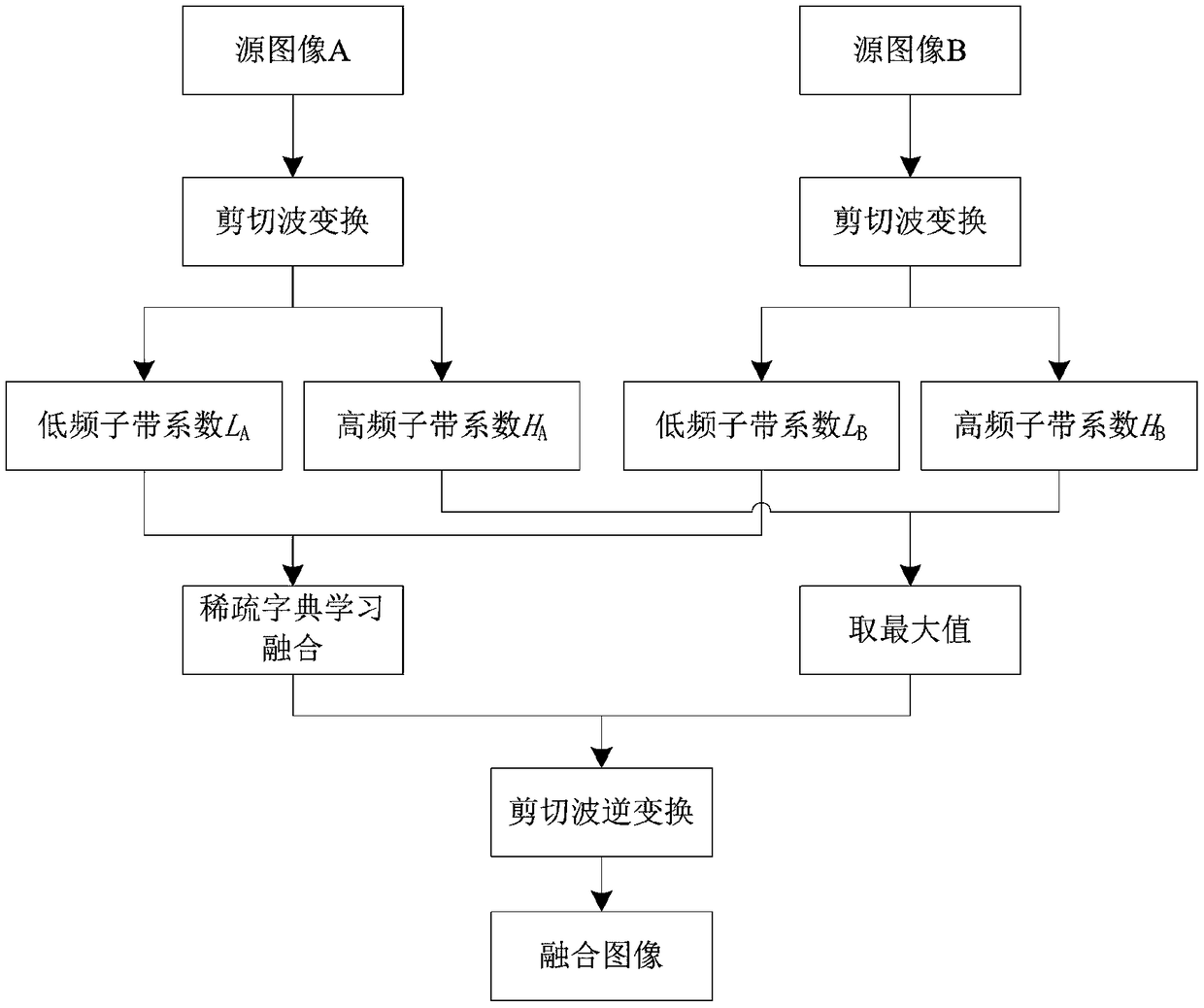

Image fusion method based on sparse dictionary learning and shear waves

Inactive | CN109191416A | Accurate capture | Does not introduce pseudo-Gibbs effects | Image enhancement | Image analysis | Dictionary learning | Source image

The invention discloses an image fusion method based on sparse dictionary learning and shear waves. The method comprises the following steps: step 1, shear wave transformation is carried out on two registered source images to obtain the corresponding low-frequency sub-band coefficients and high-frequency sub-band coefficients; step 2, the low-frequency sub-band coefficients are subjected to sparse dictionary learning and fused to obtain the low-frequency fusion coefficients; step 3, the high-frequency sub-band coefficients are fused by adopting an absolute value and a maximum value method on the directional sub-bands under the same scale to obtain the high-frequency fusion coefficients; step 4, shear wave inverse transformation is performed on the low-frequency fusion coefficients and the high-frequency fusion coefficients to obtain the fusion image. The invention can decompose the image into more directional sub-bands, provides more directional sub-band information for subsequent image fusion processing, can accurately capture the edge information in the image, and finally improves the image fusion quality.
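The high-frequency rule in step 3 reads as an absolute-maximum selection, which can be sketched as below. This is a minimal sketch under that reading: for each position in a pair of same-scale, same-direction sub-bands, the coefficient with the larger magnitude is kept. The shear wave transform itself and the sparse dictionary step for the low-frequency band are not shown, and the function name is hypothetical.

```python
from typing import List

SubBand = List[List[float]]  # coefficients of one directional sub-band


def fuse_max_abs(h1: SubBand, h2: SubBand) -> SubBand:
    """Keep, per position, the coefficient with the larger absolute value.

    Ties are resolved in favour of the first sub-band.
    """
    return [
        [x if abs(x) >= abs(y) else y for x, y in zip(row1, row2)]
        for row1, row2 in zip(h1, h2)
    ]
```

The rule is applied independently to every directional sub-band at every scale; large-magnitude high-frequency coefficients usually correspond to edges, so the selection favours whichever source image carries the stronger edge at that position.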

Owner:XIDIAN UNIV

Belt Fuser for an Electrophotographic Printer

Active | US20100303524A1 | Overcome disadvantages | Improve fusion quality | Electrographic process apparatus | Engineering | Radiant heat

A fuser and method of use for an electrophotographic imaging device that includes a lamp heater assembly; an endless fusing belt having a flexible tubular configuration and being positioned about the lamp heater assembly and spaced outwardly therefrom; a transparent or translucent pressure tube having an elongated tubular body and a pair of opposite ends, the body being substantially transparent to passage of radiant heat therethrough; and a pressure tube support assembly having a frame and a pair of bearings mounted on the frame spaced apart from one another and supporting the pressure tube at the opposite ends of the tubular body thereof, such that the tubular body of the pressure tube is positioned around the lamp heater assembly and inside the fusing belt and enables radiant heat generated by the lamp heater to pass through the transparent or translucent pressure tube and heat the fusing belt.

Owner:LEXMARK INT INC

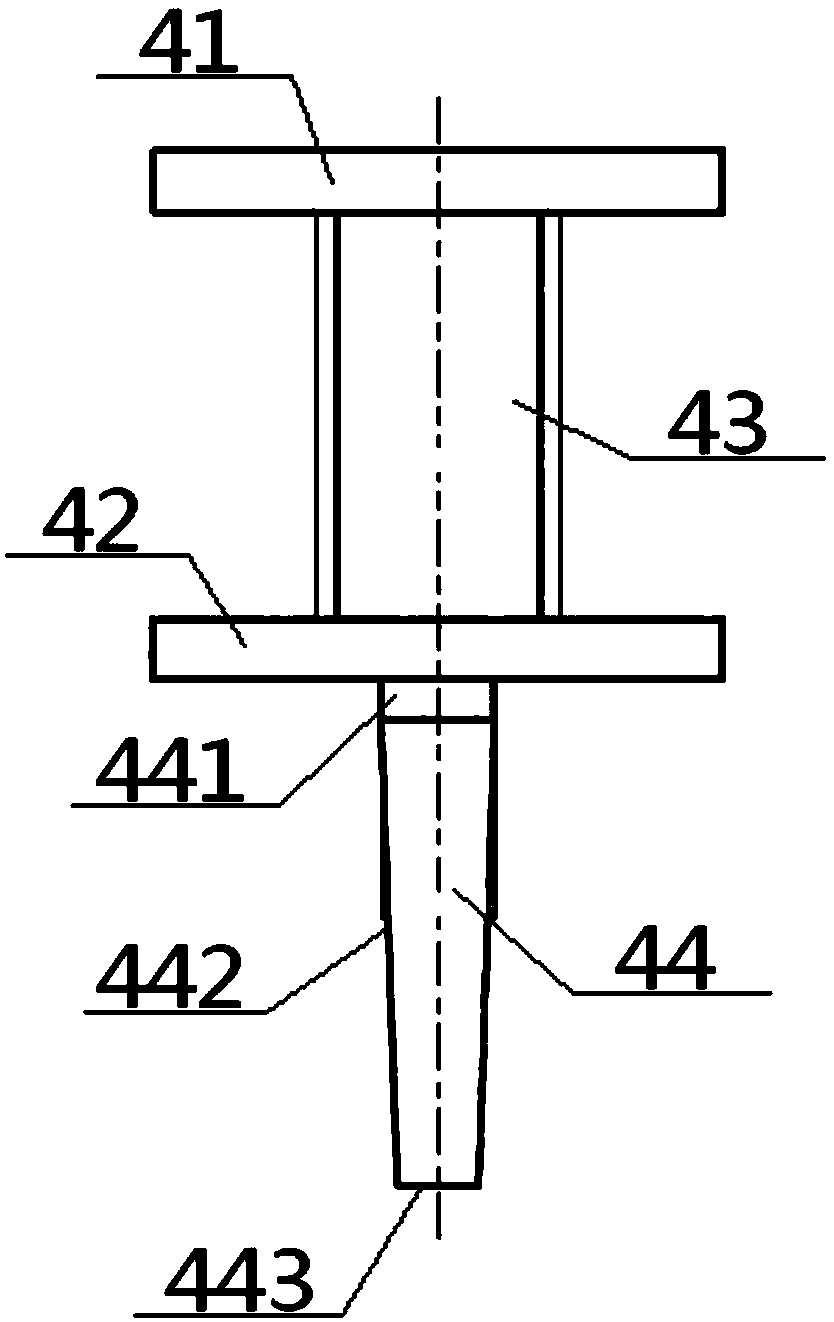

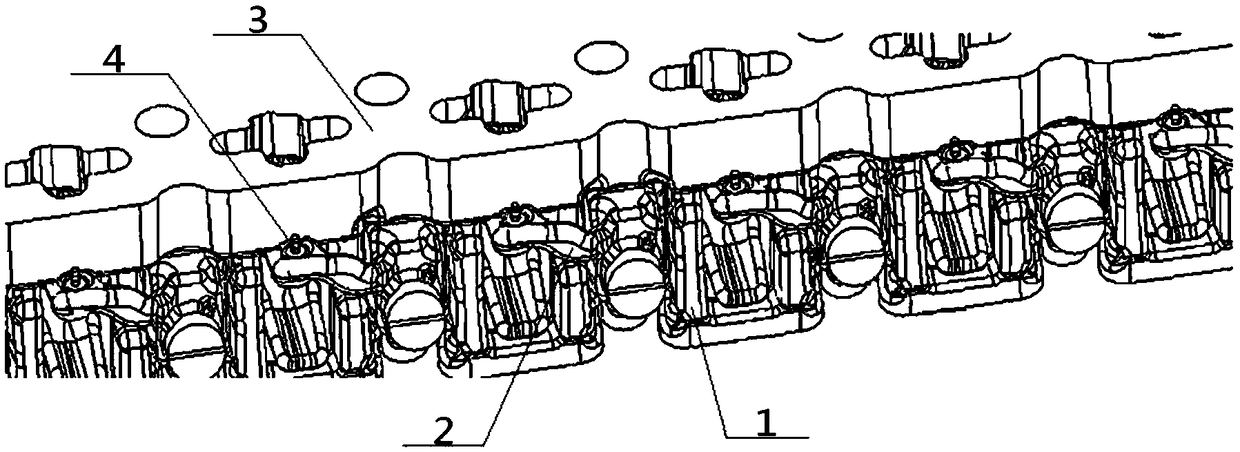

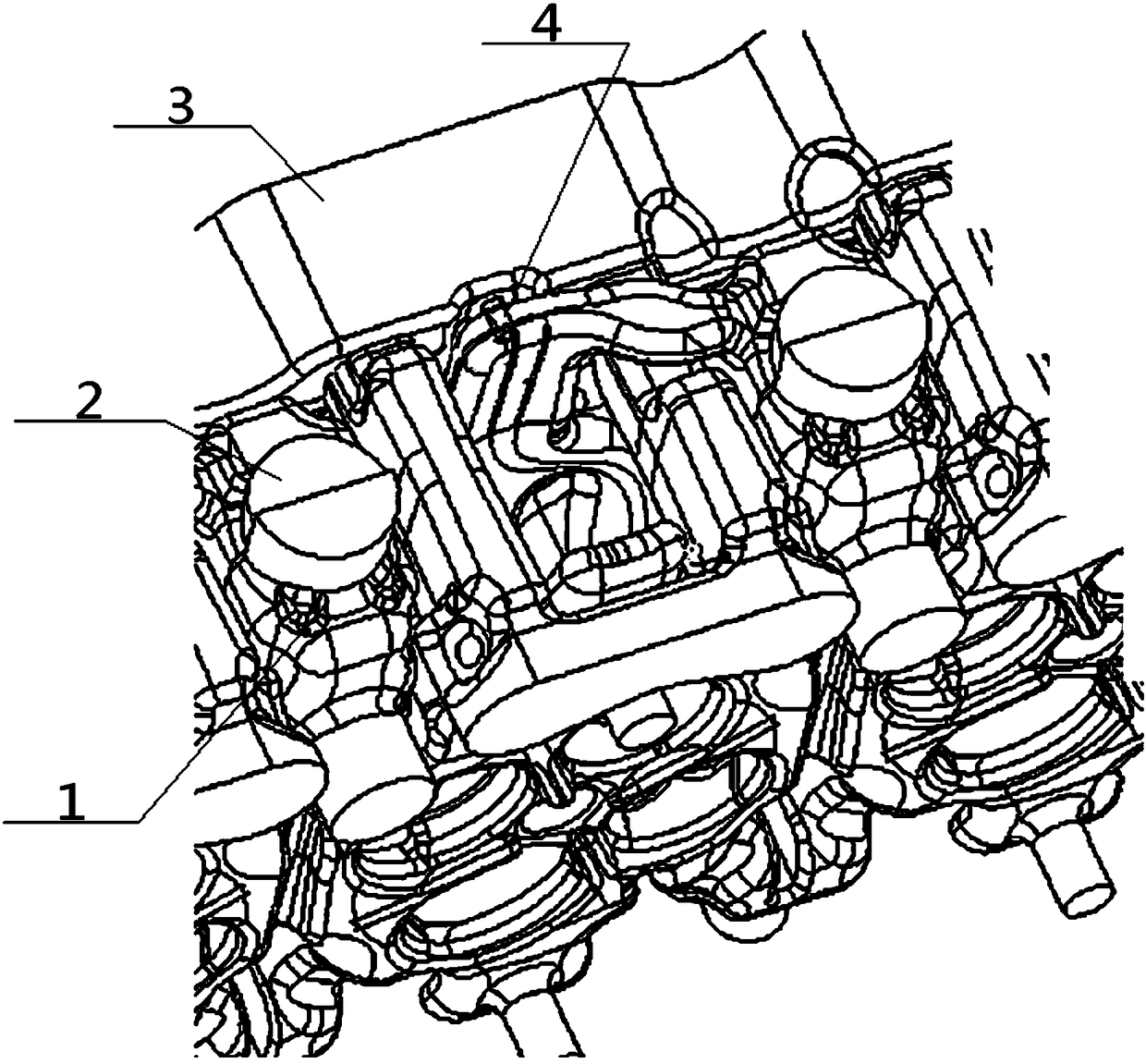

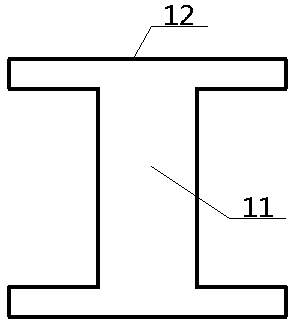

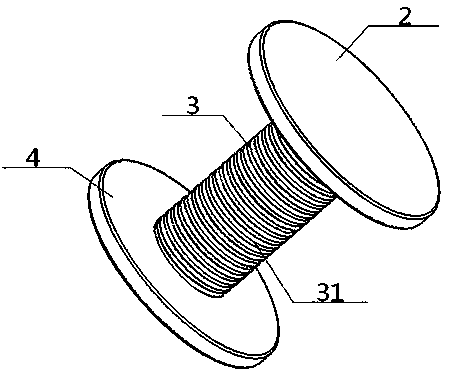

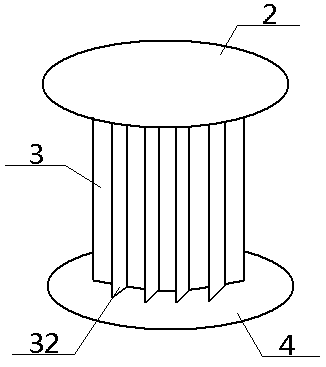

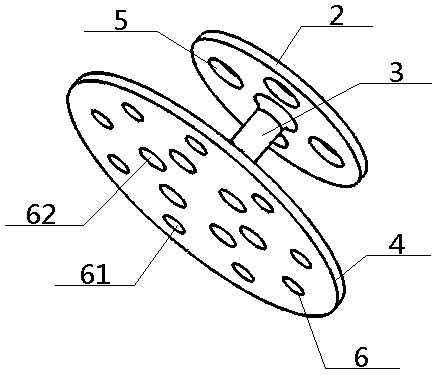

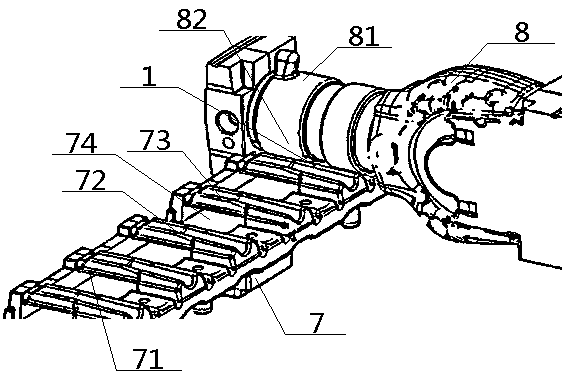

Positioning chaplet for casting, application process thereof and combined core

Pending | CN109290529A | Simple structure | Reduce the difficulty of operation | Foundry moulds | Foundry cores | Engineering | Water jacket

The invention provides a positioning chaplet for casting. The positioning chaplet comprises an upper supporting seat, a connecting rod, a lower supporting seat and a positioning pin which are sequentially connected from top to bottom, wherein the connecting rod is provided with rough threads. A combined core where the chaplet is located comprises an oil tank core, upper water jacket cores and lower water jacket cores which are sequentially arranged from top to bottom. A plurality of chaplet bodies are arranged between the oil tank core and the upper water jacket cores, a plurality of upper supporting points are arranged on the bottom surface of the oil tank core so as to be in supporting fit with the upper supporting seat of the chaplet, and a plurality of lower positioning holes are formed in the upper water jacket cores so as to be inserted and matched with the positioning pin; the positions of the lower positioning holes are preferably selected as the positions of hot joints in the upper water jacket cores. According to the design, the structure is simple, the positioning effect is good, the heat joint distribution can be reduced, and the shrinkage defect can be eliminated.

Owner:DONGFENG COMML VEHICLE CO LTD

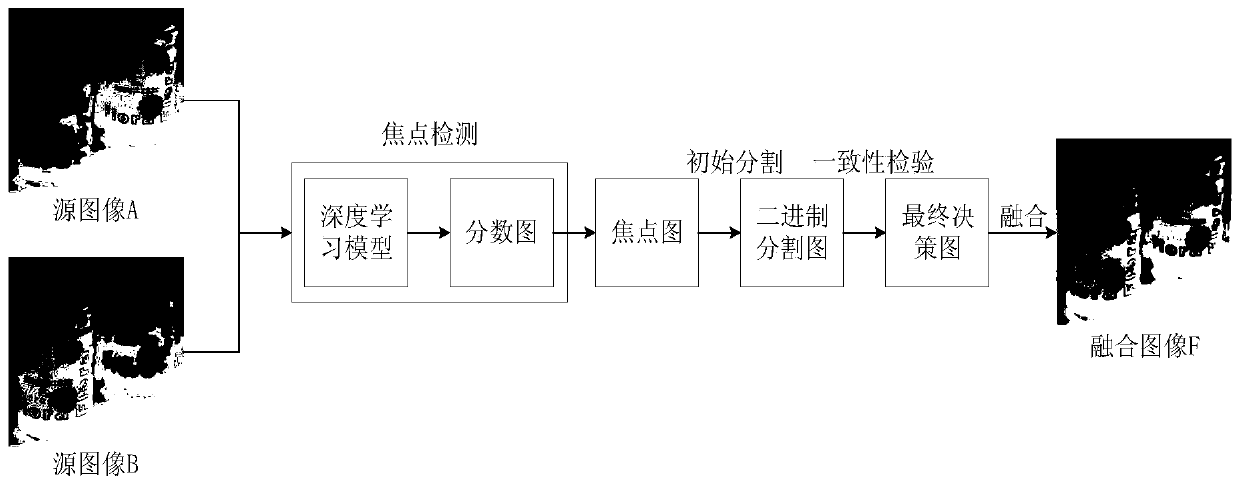

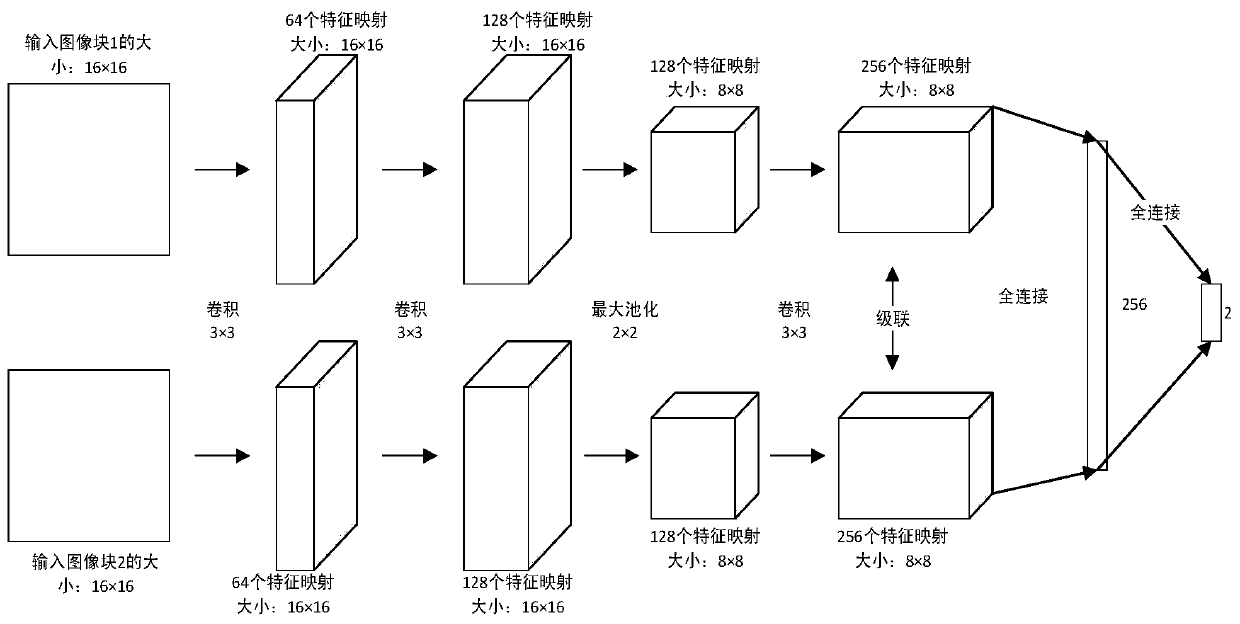

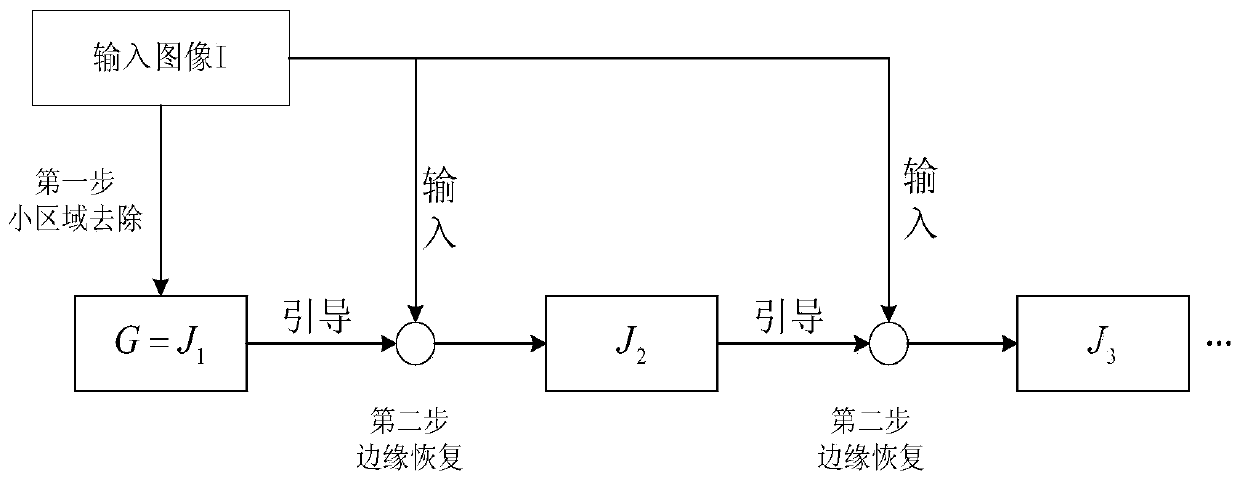

Image fusion method based on convolutional neural network and dynamic guided filtering

Inactive | CN110555820A | Improve fusion quality | Quality improvement | Image enhancement | Image analysis | Source image | Computer vision

The invention relates to a new multi-focus image fusion method based on a convolutional neural network and dynamic guided filtering. By constructing a convolutional neural network for focus detection, a direct mapping from a source image to a focus image is generated and manual operation is avoided. Pixel distribution maps of the focused area and the non-focused area are obtained, and a high-quality fused image is then obtained through dynamic guided filtering operations of small-area removal and edge preservation.

Owner:NORTHWESTERN POLYTECHNICAL UNIV +1

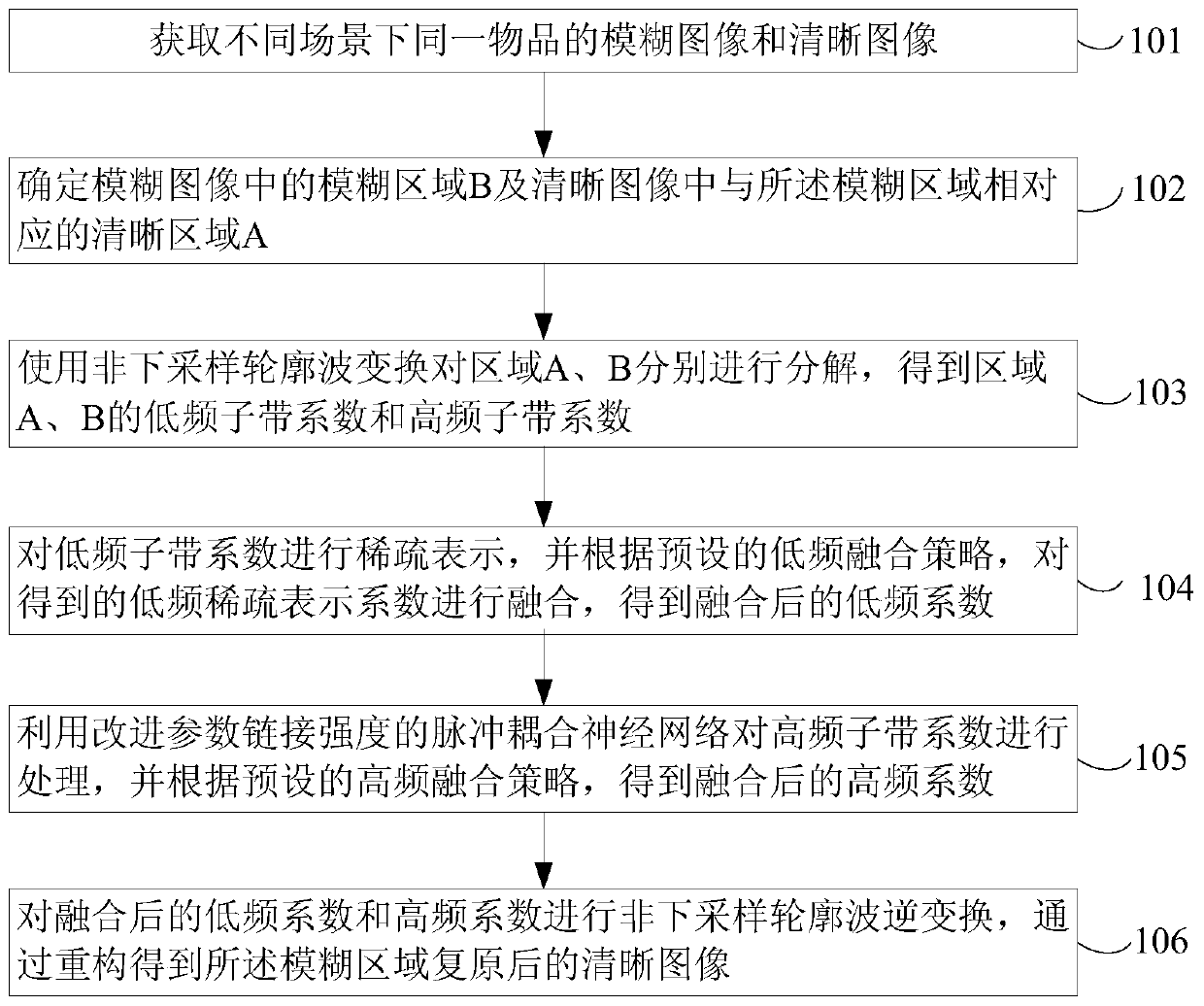

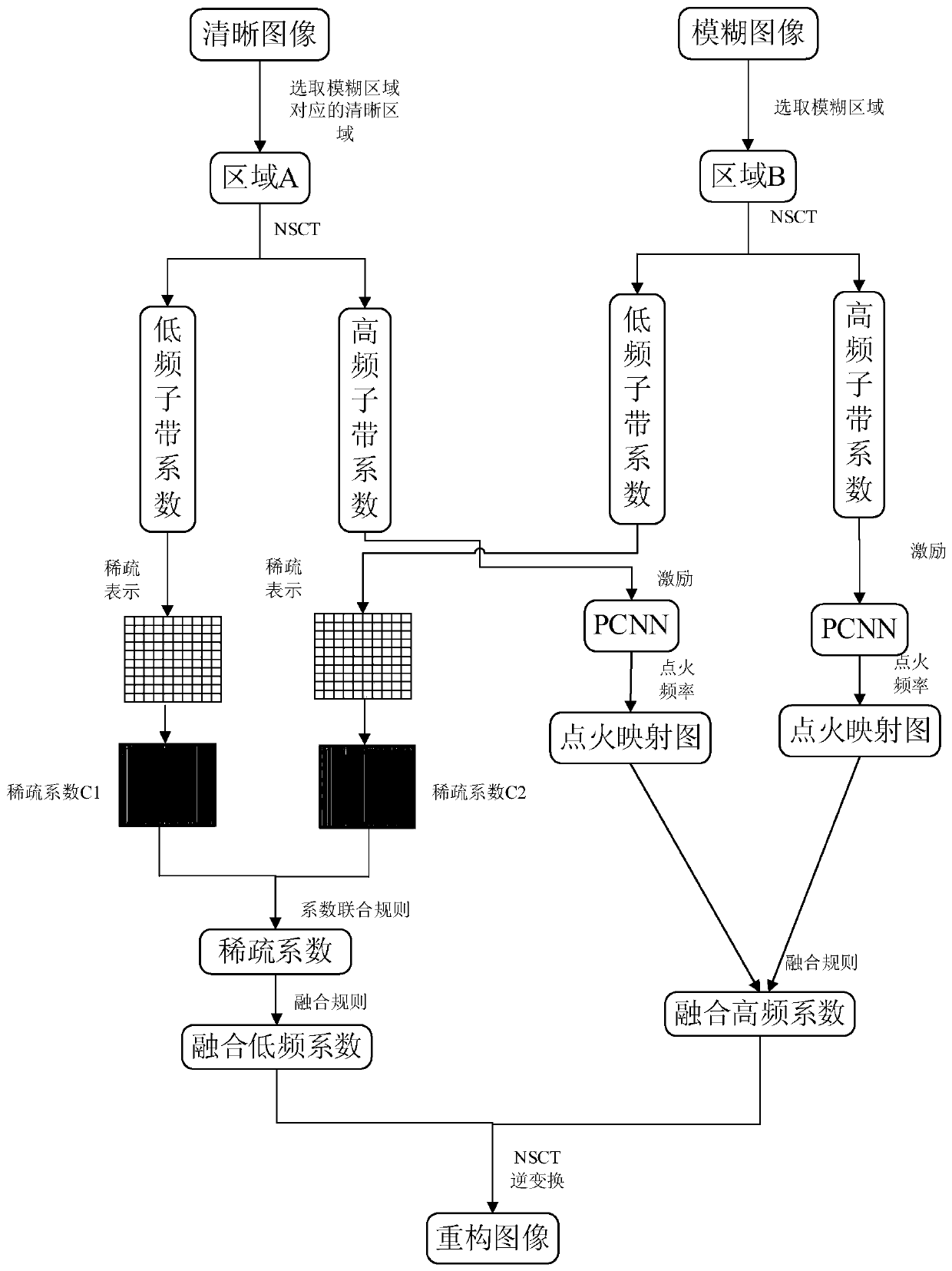

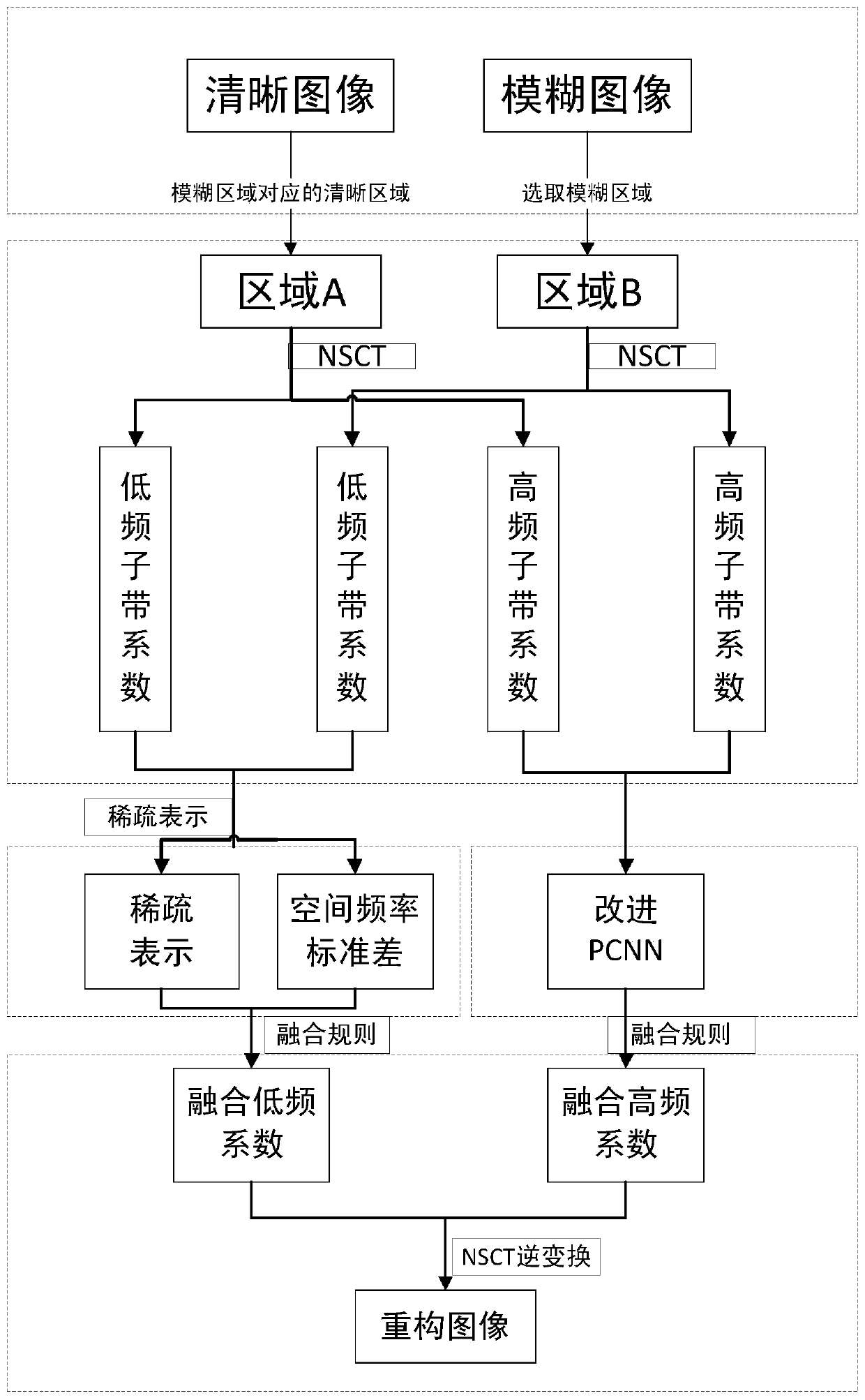

An image restoration method and device based on an NSCT transform domain

Active | CN109949258A | Improve fusion quality | Improve fidelity | Image enhancement | Imaging processing | Contourlet

The invention provides an image restoration method and device based on an NSCT transform domain, which enhance the restoration effect of an image. The method comprises the steps of: obtaining blurred images and clear images of the same article under different scenes; determining a blurred area B in the blurred image and a clear area A corresponding to the blurred area in the clear image; respectively decomposing the regions A and B by using the non-subsampled contourlet transform to obtain low-frequency and high-frequency sub-band coefficients of the regions A and B; performing sparse representation on the low-frequency sub-band coefficients, and fusing the obtained low-frequency sparse representation coefficients to obtain fused low-frequency coefficients; processing the high-frequency sub-band coefficients by using a pulse-coupled neural network with improved parameter link strength, and obtaining a fused high-frequency coefficient according to a preset high-frequency fusion strategy; and performing the inverse non-subsampled contourlet transform on the fused low-frequency and high-frequency coefficients, and obtaining a clear image after restoration of the blurred region through reconstruction. The invention relates to the field of image processing.

Owner:UNIV OF SCI & TECH BEIJING

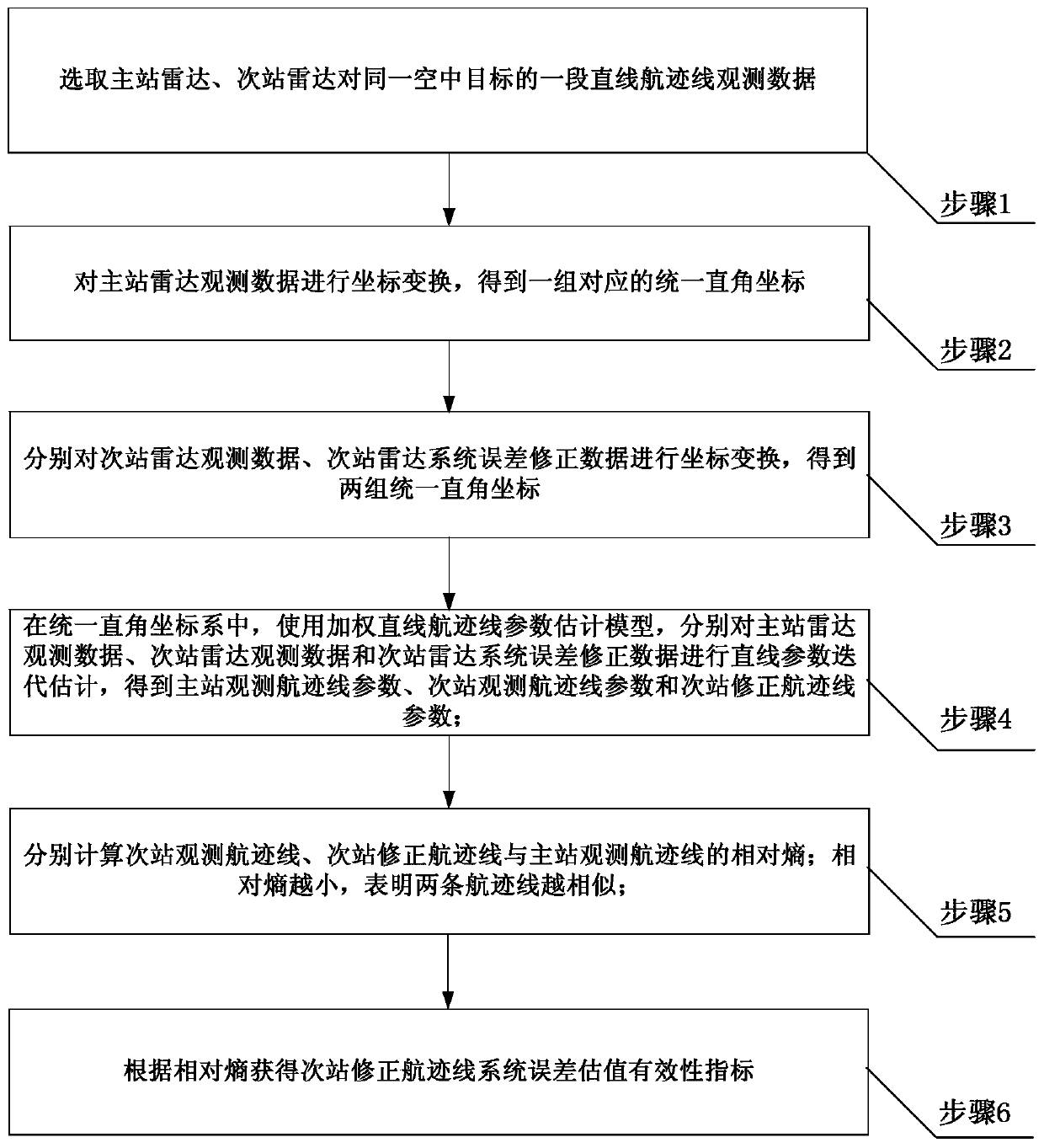

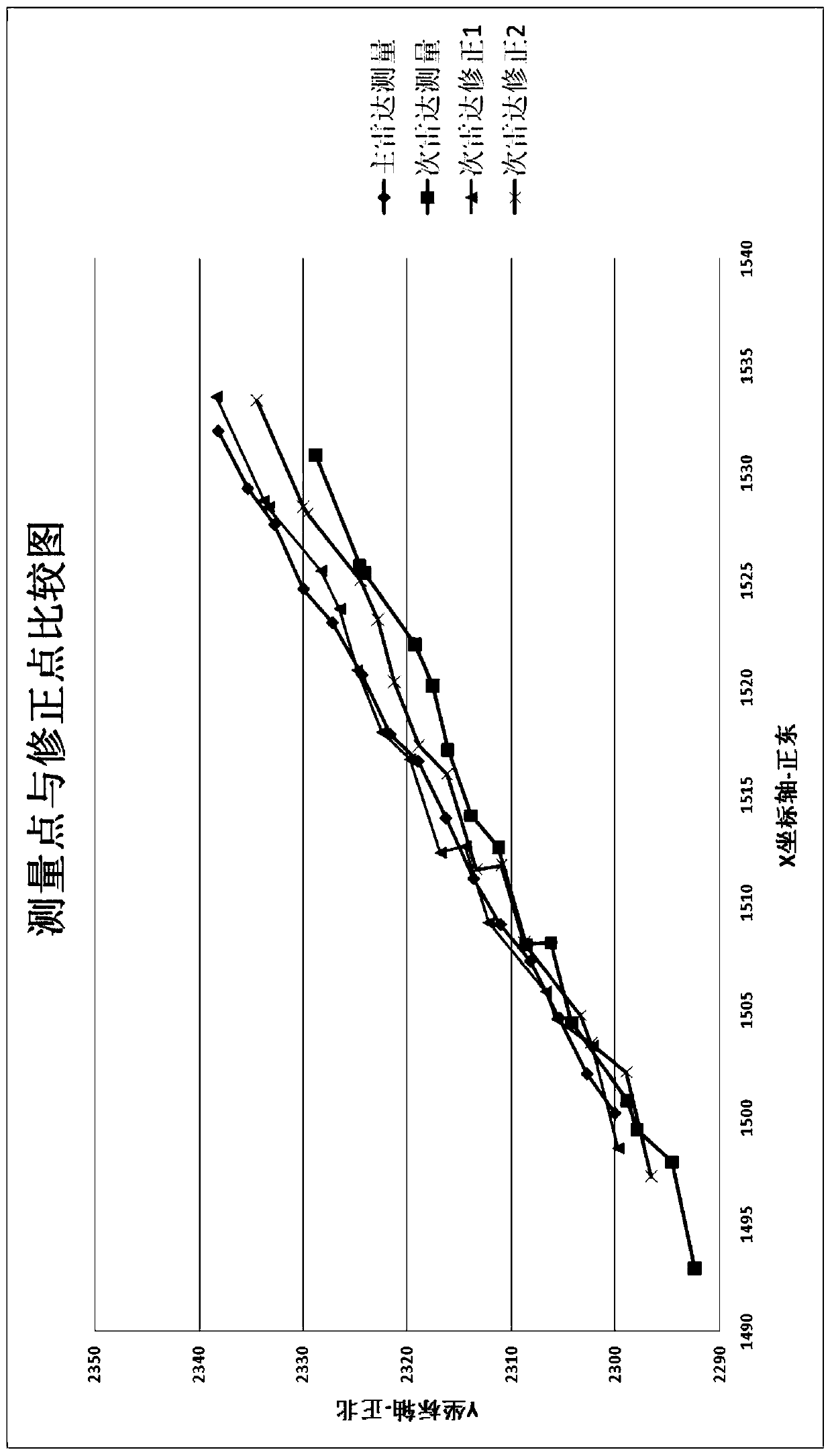

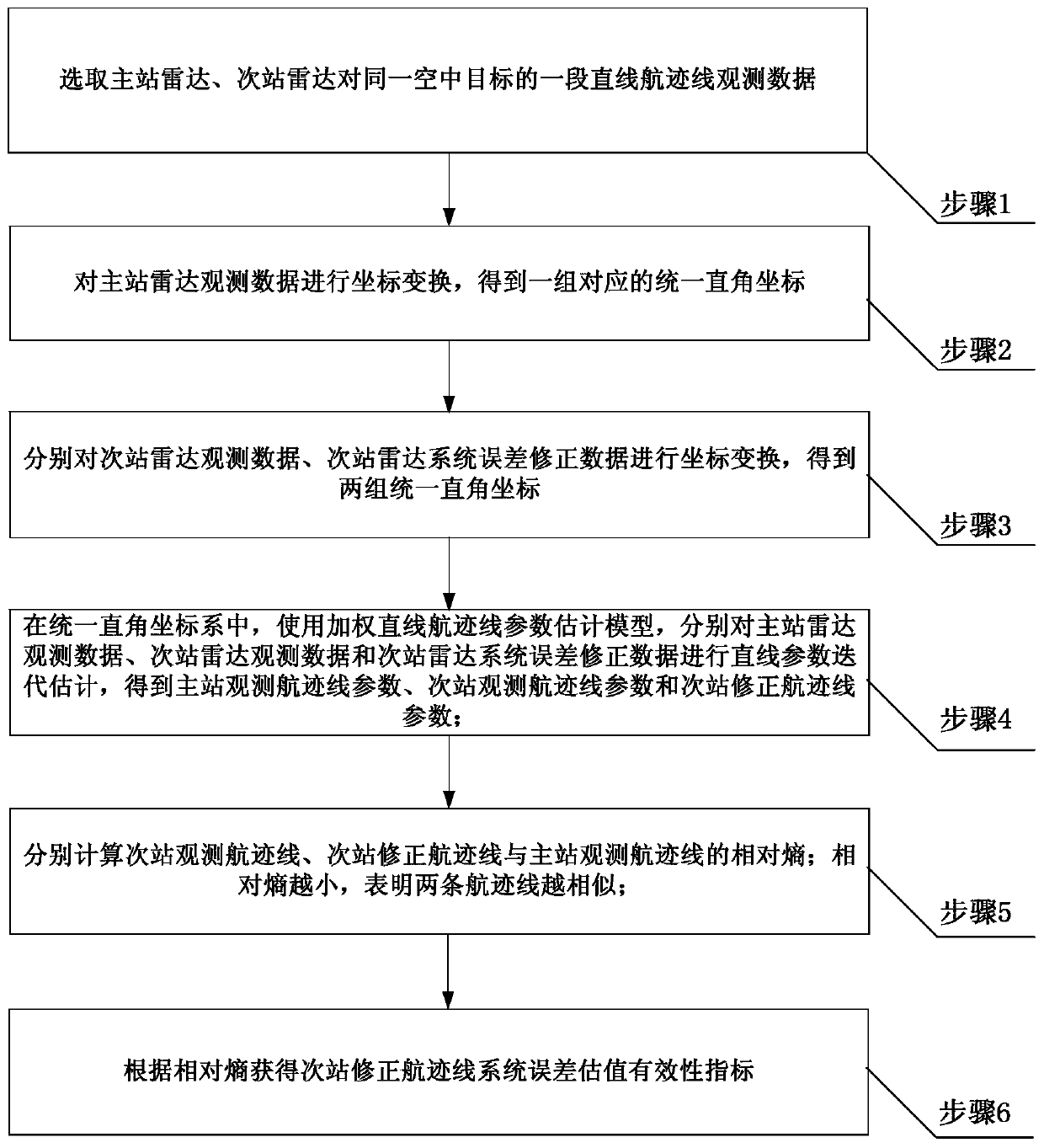

Method for evaluating validity of estimated value of radar relative system error

Active | CN110045342A | Principle method science | The implementation steps are reasonable | Wave based measurement systems | Observation data | Radar observations

The invention belongs to the technical field of multi-radar data fusion, and particularly relates to a method for evaluating the validity of an estimated value of a radar relative system error. According to the method provided by the invention, a group of observation data of a typical route target by primary and secondary station radars is selected. After conversion to central unified rectangular coordinates, linear parameter iterative estimation is performed on the two radar observation track lines and on the system error correction track line of the secondary station radar, by using an unweighted linear track line parameter estimation model and a weighted linear track line parameter estimation model successively. A validity index of the estimated value of the system error of the secondary station correction track line is constructed by calculating the relative entropies with the primary station observation track line before and after the system error of the secondary station observation track line is corrected, so as to evaluate the validity and the correction effect of the estimated value of the system error. According to the method provided by the invention, the principle method is scientific, and the implementation steps are reasonable. Compared with the traditional system error validity evaluation method, the complexity is reduced while the accuracy and the operability are improved, and the engineering implementation is facilitated.

Owner:STRATEGIC EARLY WARNING RES INST OF THE PEOPLES LIBERATION ARMY AIR FORCE RES INST
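
The abstract above names an unweighted and a weighted linear track line parameter estimation model but gives no formulas. As a rough illustration only, the sketch below fits a straight track line by ordinary and by weighted least squares with NumPy. The function name `fit_track_line`, the `sigma` input and the choice of `1 / sigma` weights are assumptions made for this example, not details taken from the patent, and the relative-entropy validity index is not reproduced here.

```python
import numpy as np


def fit_track_line(x, y, sigma=None):
    """Fit y = slope * x + intercept to track points by least squares.

    sigma is a hypothetical per-point measurement standard deviation.
    When it is given, each residual is weighted by 1 / sigma (weighted
    fit); when it is None, all points count equally (unweighted fit).
    """
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    weights = None if sigma is None else 1.0 / np.asarray(sigma, dtype=float)
    slope, intercept = np.polyfit(x, y, deg=1, w=weights)
    return slope, intercept


# Illustrative data: a straight track with one badly measured point.
x = np.array([0.0, 1.0, 2.0, 3.0, 4.0])
y = 2.0 * x + 1.0
y[4] += 5.0  # simulated outlier on the last observation

unweighted = fit_track_line(x, y)
weighted = fit_track_line(x, y, sigma=[1.0, 1.0, 1.0, 1.0, 100.0])
print("unweighted:", unweighted)
print("weighted:  ", weighted)
```

In this toy case the weighted fit stays close to the underlying line because the unreliable point is given a large `sigma`, which is the usual motivation for following an unweighted estimate with a weighted one.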

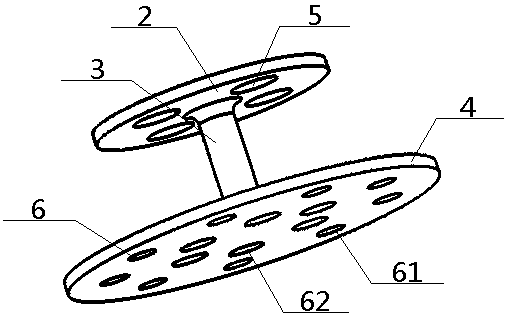

Fusible chaplet for thin-walled iron casting, application method and combined core

Pending | CN109277538A | Improve mechanical properties | Improve fusion effect | Foundry moulds | Foundry cores | Round bar | Thin walled

A fusible chaplet for a thin-walled iron casting comprises an inner copper body and an outer tin layer wrapped around the exterior of the copper body. The inner copper body is made of copper, and the outer tin layer is made of tin. The inner copper body comprises a binding post and an upper supporting seat and a lower supporting seat that are vertically connected to the two ends of the binding post. A combined core comprises one upper water jacket core and a plurality of round bar cores and chaplets, with the round bar cores corresponding to the chaplets one to one. The top surface of the upper water jacket core is provided with a plurality of core units arranged continuously; one round bar core is arranged above each core unit, and one chaplet is arranged between each round bar core and its core unit. This design ensures more complete fusion between the chaplets and the casting without introducing impurities, and gives a better anti-oxidation effect.

Owner:DONGFENG COMML VEHICLE CO LTD

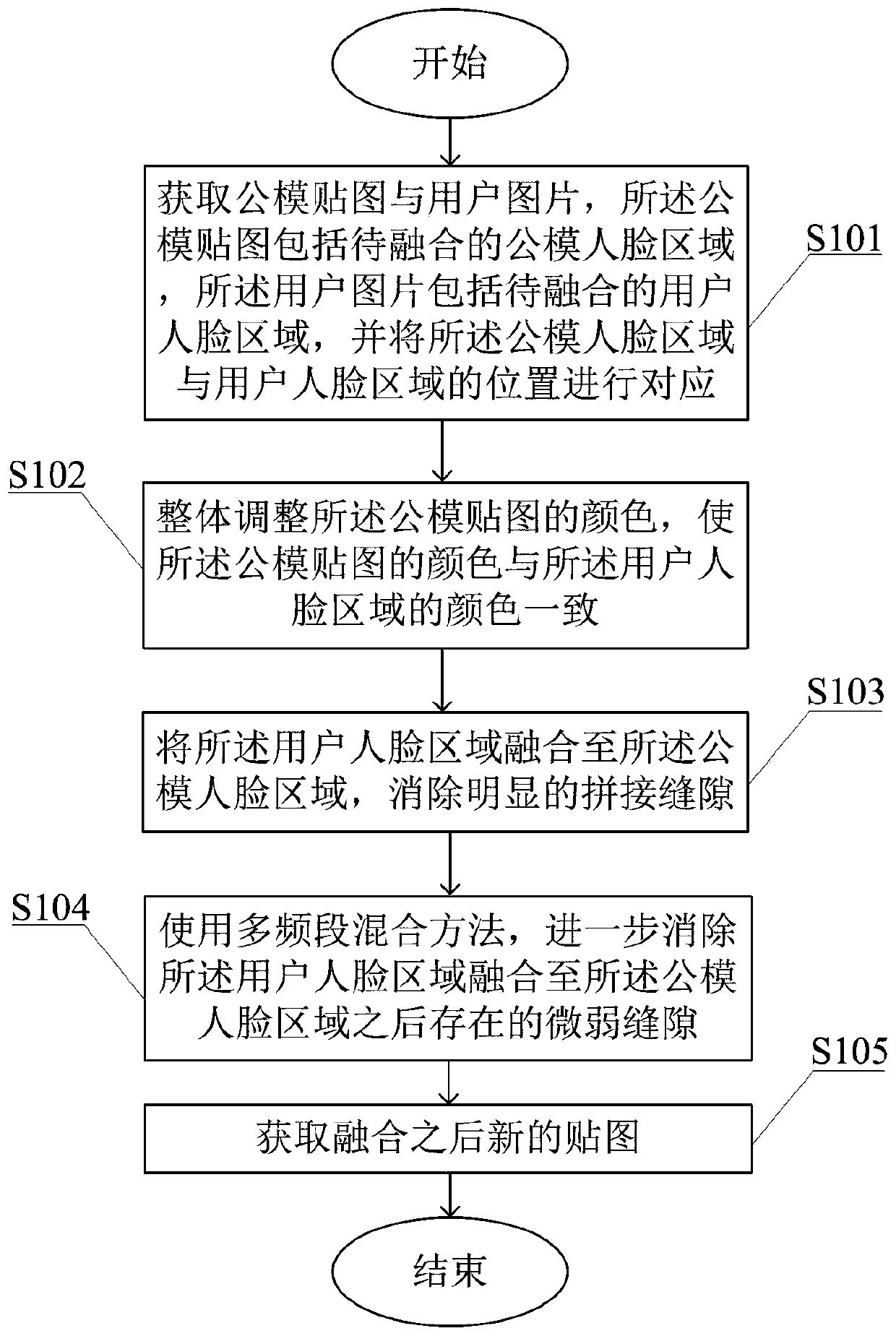

Three-dimensional face model map fusion method and computer processing equipment

Pending | CN110232730A | Improve texture blending effect | Easy to integrate | Image enhancement | Image analysis | Multi band | Computer graphics (images)

The invention discloses a three-dimensional face model map fusion method comprising the following steps: obtaining a male model map and a user picture; adjusting the overall color of the male model map so that it is consistent with the color of the user's face area; fusing the user face area into the male model face area and eliminating obvious splicing gaps; using a multi-band blending method to further eliminate the weak gaps that remain after the user face area is fused into the male model face area; and obtaining the new fused map. No manual interaction is needed anywhere in the process, from preprocessing to the final three-dimensional face map fusion, so operation is fully automatic. In practice, the method also handles detail defects that may arise in three-dimensional face model map fusion.

Owner:SHENZHEN THREE D ARTIFICIAL INTELLIGENCE THCHNOLOGY CO LTD
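
The multi-band step in the abstract above is not specified in detail. The following is a minimal sketch of one common form of multi-band blending, assuming grayscale images, a box blur as the low-pass filter, and a stack of bands kept at full resolution instead of a downsampled pyramid. The names `box_blur` and `multiband_blend`, the kernel sizes and the number of levels are all choices made for this illustration and are not taken from the patent.

```python
import numpy as np


def box_blur(img, k):
    """Mean filter over a k x k window with edge padding (assumed low-pass)."""
    img = np.asarray(img, dtype=float)
    pad = k // 2
    padded = np.pad(img, pad, mode="edge")
    out = np.zeros_like(img)
    for dy in range(k):
        for dx in range(k):
            out += padded[dy:dy + img.shape[0], dx:dx + img.shape[1]]
    return out / (k * k)


def multiband_blend(a, b, mask, levels=3):
    """Blend a (mask = 1) into b (mask = 0) one frequency band at a time.

    Fine detail is mixed with a sharp mask and coarse content with a
    progressively smoother mask, which hides weak seams.
    """
    a = np.asarray(a, dtype=float)
    b = np.asarray(b, dtype=float)
    m = np.asarray(mask, dtype=float)
    out = np.zeros_like(a)
    for level in range(levels):
        k = 2 ** (level + 1) + 1  # window sizes 3, 5, 9, ...
        a_low, b_low = box_blur(a, k), box_blur(b, k)
        # Detail band at this scale, mixed with the current mask.
        out += m * (a - a_low) + (1.0 - m) * (b - b_low)
        a, b, m = a_low, b_low, box_blur(m, k)
    # Remaining low-frequency content uses the smoothest mask.
    out += m * a + (1.0 - m) * b
    return out


# Illustrative use: paste the left half of one random image onto another.
rng = np.random.default_rng(0)
face = rng.random((16, 16))
template = rng.random((16, 16))
mask = np.zeros((16, 16))
mask[:, :8] = 1.0
blended = multiband_blend(face, template, mask)
print(blended.shape)
```

Because the bands telescope back to the original image, blending an image with itself returns that image unchanged for any mask, which is a convenient sanity check for a sketch like this.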

Chaplet for engine cylinder block casting, application process of chaplet and combination core with chaplet

A chaplet for engine cylinder block casting comprises an upper support, a middle connection rod and a lower support. The bottom middle of the upper support is connected with the top middle of the lower support via the connection rod; the upper support is provided with a plurality of upper fluxing holes that run through the upper support; the lower support is provided with a plurality of lower fluxing holes that run through the lower support; the melting points of all materials used to manufacture the upper support, the middle connection rod and the lower support are lower than the temperature of the molten iron injected for mold filling during casting. The chaplet is small in size and requires little heat for melting; melting quality is high; cracks rarely occur.

Owner:DONGFENG COMML VEHICLE CO LTD

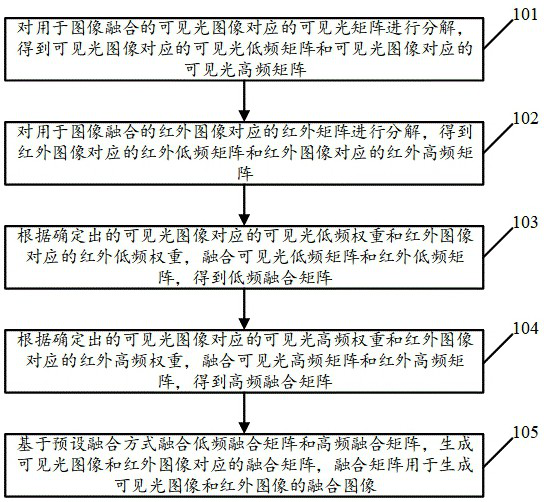

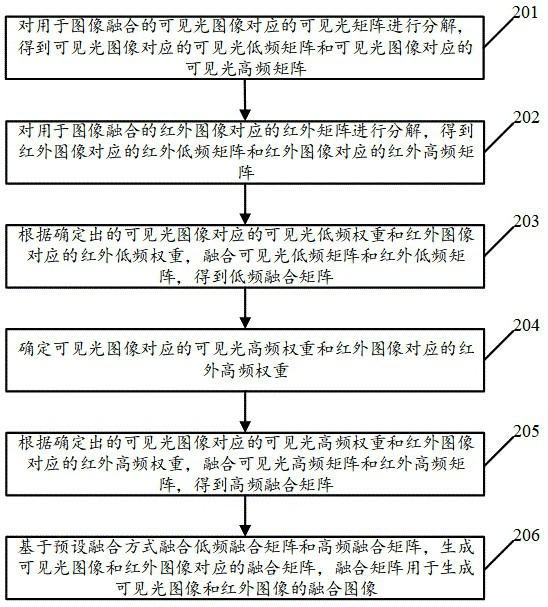

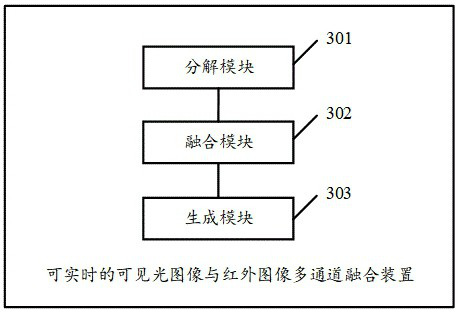

Real-time visible light image and infrared image multi-channel fusion method and device

Active | CN114677316A | Large amount of information | High pixel intensity | Image enhancement | Image analysis | Materials science | Image texture

The invention discloses a real-time visible light image and infrared image multi-channel fusion method and device. The method comprises the steps of: decomposing a visible light matrix corresponding to a visible light image to obtain a visible light low-frequency matrix and a visible light high-frequency matrix, and decomposing an infrared matrix corresponding to an infrared image to obtain an infrared low-frequency matrix and an infrared high-frequency matrix; according to the visible light low-frequency weight and the infrared low-frequency weight, fusing the visible light low-frequency matrix and the infrared low-frequency matrix to obtain a low-frequency fusion matrix, and according to the visible light high-frequency weight and the infrared high-frequency weight, fusing the visible light high-frequency matrix and the infrared high-frequency matrix to obtain a high-frequency fusion matrix; and fusing the low-frequency fusion matrix and the high-frequency fusion matrix based on a preset fusion mode to generate a fusion matrix corresponding to the visible light image and the infrared image. By implementing the method, the visible light image and the infrared image can be fused so that the resolution of the main content and the background area in the image is improved and the image texture becomes finer.

Owner:深圳鼎匠科技有限公司
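
The steps in the abstract above can be sketched roughly as follows. This is only an illustration under stated assumptions: the low-frequency matrix is taken to be a box-blurred copy of the image, the high-frequency matrix is the residual, the weights are fixed scalars, and the "preset fusion mode" is simple addition. None of these choices, nor the names `split_bands` and `fuse_visible_infrared`, come from the patent.

```python
import numpy as np


def box_blur(img, k=3):
    """Mean filter over a k x k window with edge padding (assumed low-pass)."""
    img = np.asarray(img, dtype=float)
    pad = k // 2
    padded = np.pad(img, pad, mode="edge")
    out = np.zeros_like(img)
    for dy in range(k):
        for dx in range(k):
            out += padded[dy:dy + img.shape[0], dx:dx + img.shape[1]]
    return out / (k * k)


def split_bands(img, k=3):
    """Return (low-frequency matrix, high-frequency matrix) of an image."""
    img = np.asarray(img, dtype=float)
    low = box_blur(img, k)
    return low, img - low


def fuse_visible_infrared(visible, infrared,
                          low_weights=(0.5, 0.5), high_weights=(0.5, 0.5)):
    """Fuse two single-channel images band by band.

    low_weights and high_weights are hypothetical (visible, infrared)
    scalar weights; a real system might compute them per pixel.
    """
    vis_low, vis_high = split_bands(visible)
    ir_low, ir_high = split_bands(infrared)
    low_fused = low_weights[0] * vis_low + low_weights[1] * ir_low
    high_fused = high_weights[0] * vis_high + high_weights[1] * ir_high
    # Assumed "preset fusion mode": add the two fused bands back together.
    return low_fused + high_fused


# Illustrative use with random single-channel frames of equal size.
rng = np.random.default_rng(0)
visible = rng.random((8, 8))
infrared = rng.random((8, 8))
fused = fuse_visible_infrared(visible, infrared,
                              low_weights=(0.4, 0.6), high_weights=(0.7, 0.3))
print(fused.shape)
```

Keeping separate weights for the two bands lets the fused result take, for example, more thermal contrast from the infrared low-frequency band while keeping more texture from the visible high-frequency band.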

Technology method for welding brass H62 and low-alloy cast steel G20Mn5 dissimilar materials

The invention discloses a technology method for welding brass H62 and low-alloy cast steel G20Mn5 dissimilar materials. Manual argon tungsten-arc welding is used with an S211 silicon bronze welding wire with a diameter of φ2.5. The workpiece is preheated to 150-200 °C before welding, the interlayer temperature is kept above the preheating temperature throughout welding, heat is preserved for 2 hours after welding, and dye penetrant inspection is then performed. With argon tungsten-arc welding, the process is simple, the weld is well formed, the fusion quality at the root of the welded joint is good, the required skill level of workers is not high, and the welding quality is stable, so production efficiency is improved and production cost is reduced.

Owner:XUZHOU XCMG MINING MACHINERY CO LTD

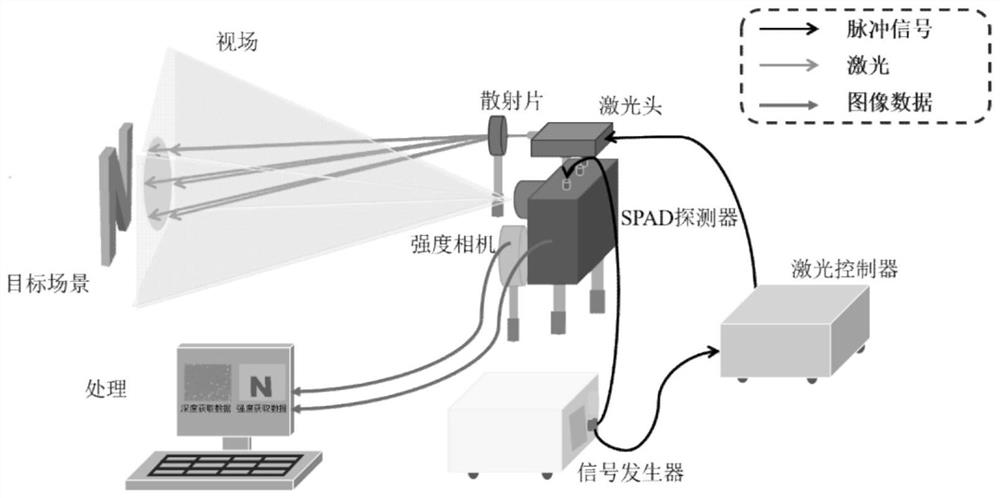

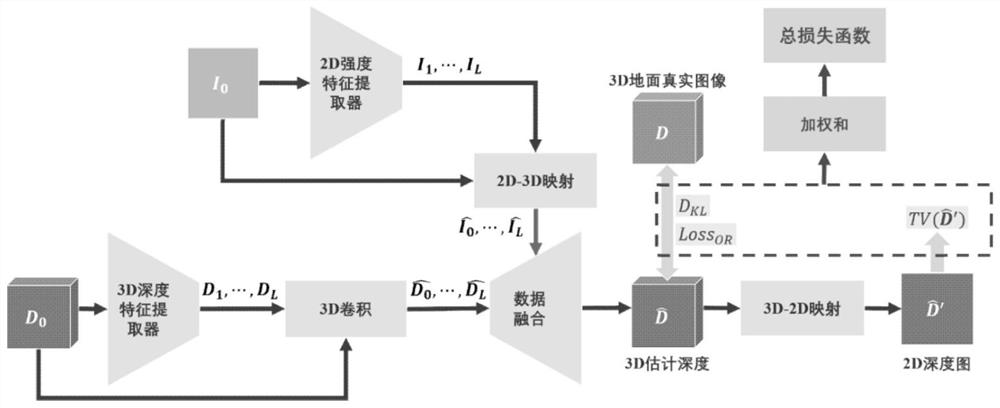

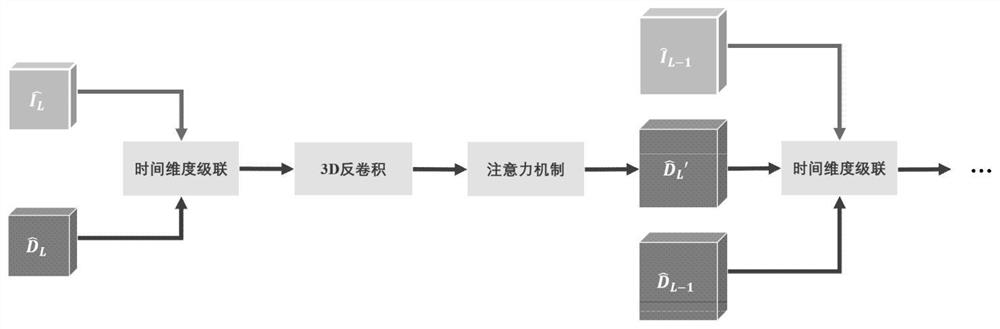

Sensor fusion depth reconstruction data driving method based on attention mechanism

Pending | CN113989343A | Good visual quality of images | Improve signal-to-noise ratio | Image enhancement | Image analysis | Neural net architecture | Sensor fusion

The invention discloses a sensor fusion depth reconstruction data driving method based on an attention mechanism. A convolutional neural network structure is designed for an SPAD array detector with a resolution of 32 × 32, and a low-resolution TCSPC histogram is mapped to a high-resolution depth map under the guidance of an intensity map. The network uses a multi-scale method to extract input features and fuses depth data and intensity data based on an attention model. In addition, a combination of loss functions is designed that suits a network processing TCSPC histogram data. The method improves the spatial resolution of the raw depth data by a factor of four. Its depth reconstruction effect is verified on simulated and collected data, where it is superior to other algorithms in quality and data indexes.

Owner:NANJING UNIV OF SCI & TECH

© 2025 PatSnap. All rights reserved.