Sensor fusion depth reconstruction data-driven method based on attention mechanism

A data-driven depth reconstruction technology, applied in image data processing, instruments, image analysis, etc. It addresses problems such as the inability to meet real-time application requirements, and it improves data fusion quality and image visual quality while reducing manual intervention.

Embodiment Construction

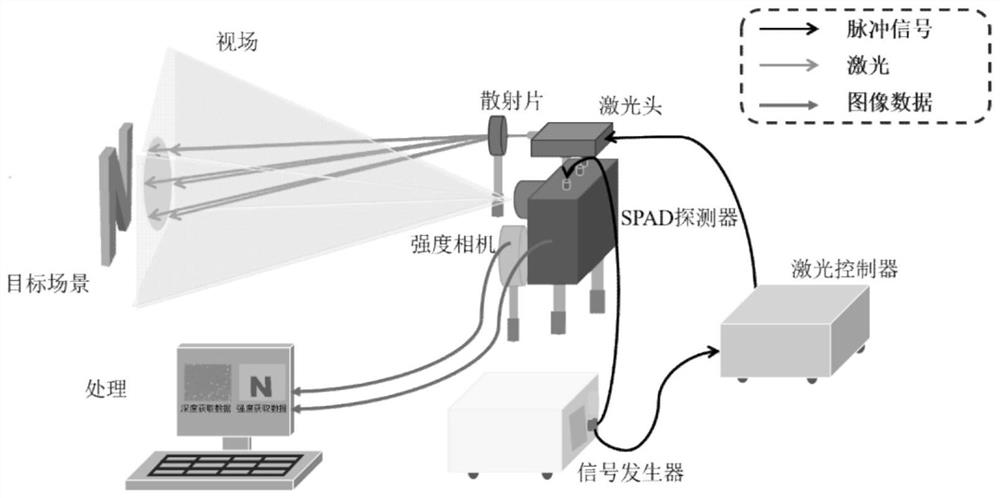

[0031] A sensor fusion depth reconstruction data-driven method based on an attention mechanism, suitable for photon-counting 3D imaging lidar systems. The specific steps are as follows:

[0032] The first step is to construct a sensor fusion network based on an attention mechanism and train it;

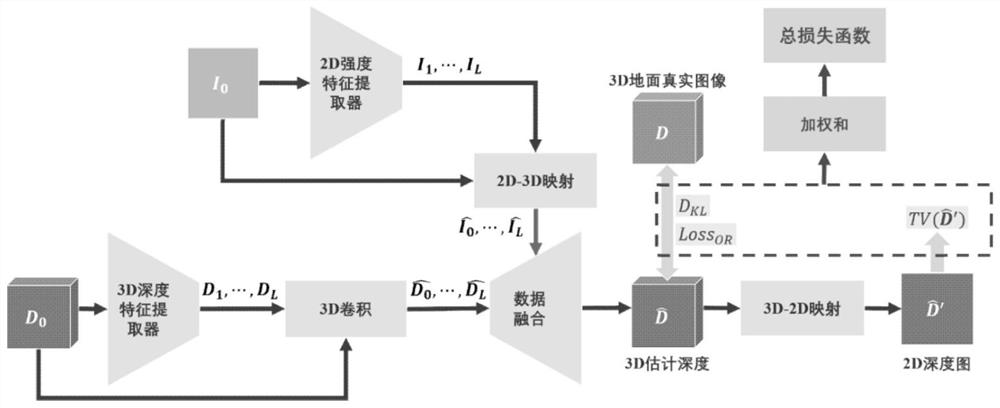

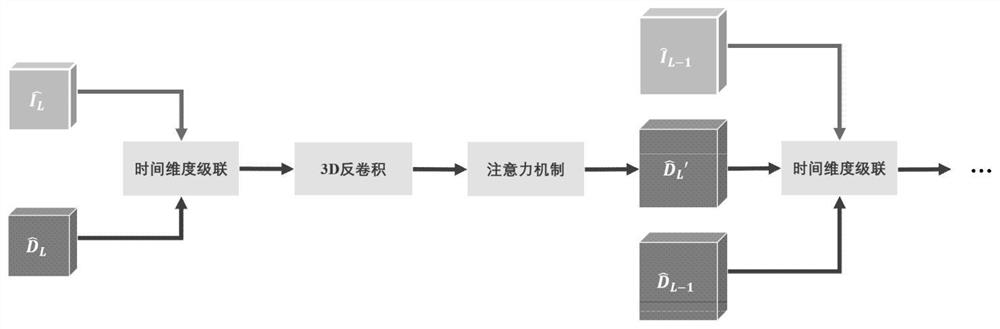

[0033] In a further embodiment, the sensor fusion network based on the attention mechanism includes a feature extraction module and a fusion reconstruction module. Its main function is to denoise and upsample the input low-resolution noisy depth data under the guidance of high-resolution intensity information. The feature extraction module extracts multi-scale features from the SPAD measurement data and the intensity data, so that the network can learn rich hierarchical features at different scales and better adapt to fine and large-scale upsampling; the feature extraction module obtains multi-scale features The intensity features and depth features of the correspondin...
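The guidance idea in [0033], where high-resolution intensity steers the upsampling of low-resolution depth, can be illustrated with a small hand-crafted sketch. This is not the trained network described in the patent: the attention weights here are a fixed softmax over intensity similarity (close in spirit to joint bilateral upsampling) rather than learned, and the function name `guided_attention_upsample` and the parameters `scale` and `tau` are assumptions made for illustration only.

```python
import math


def guided_attention_upsample(depth_lr, intensity_hr, scale, tau=0.1):
    """Upsample a low-res depth map using intensity-guided softmax attention.

    Hypothetical stand-in for a learned fusion module: each high-res output
    pixel attends over the low-res depth samples in a 3x3 neighbourhood, and
    samples whose intensity resembles the target pixel get larger weights.
    """
    h_lr, w_lr = len(depth_lr), len(depth_lr[0])
    out = []
    for y in range(h_lr * scale):
        row = []
        for x in range(w_lr * scale):
            cy, cx = y // scale, x // scale  # low-res cell containing (y, x)
            scores, values = [], []
            for ny in range(max(0, cy - 1), min(h_lr, cy + 2)):
                for nx in range(max(0, cx - 1), min(w_lr, cx + 2)):
                    # Reference intensity: top-left high-res pixel of the cell.
                    ref = intensity_hr[ny * scale][nx * scale]
                    diff = intensity_hr[y][x] - ref
                    scores.append(-(diff * diff) / tau)
                    values.append(depth_lr[ny][nx])
            # Softmax over the scores (max subtracted for numerical stability).
            m = max(scores)
            weights = [math.exp(s - m) for s in scores]
            total = sum(weights)
            row.append(sum(w * v for w, v in zip(weights, values)) / total)
        out.append(row)
    return out


# Toy input (made up): two depth samples and a 2x4 intensity image with an edge.
depth_lr = [[1.0, 5.0]]
intensity_hr = [[0.0, 0.0, 1.0, 1.0],
                [0.0, 0.0, 1.0, 1.0]]
depth_hr = guided_attention_upsample(depth_lr, intensity_hr, scale=2, tau=0.01)
```

With a small `tau`, pixels on each side of the intensity edge draw almost entirely from the depth sample on the same side, so the depth discontinuity stays sharp; with uniform intensity the weights become equal and the output is a plain local average. A learned network would replace the fixed similarity score with scores computed from the extracted multi-scale features.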
