Virtual reality image processing method and device

A virtual reality image processing method and device, applied in the field of virtual reality image processing. It addresses problems such as large bandwidth occupation, and achieves the effects of reducing compression loss, increasing the bandwidth compression rate, and saving transmission bits.

Examples

Embodiment 1

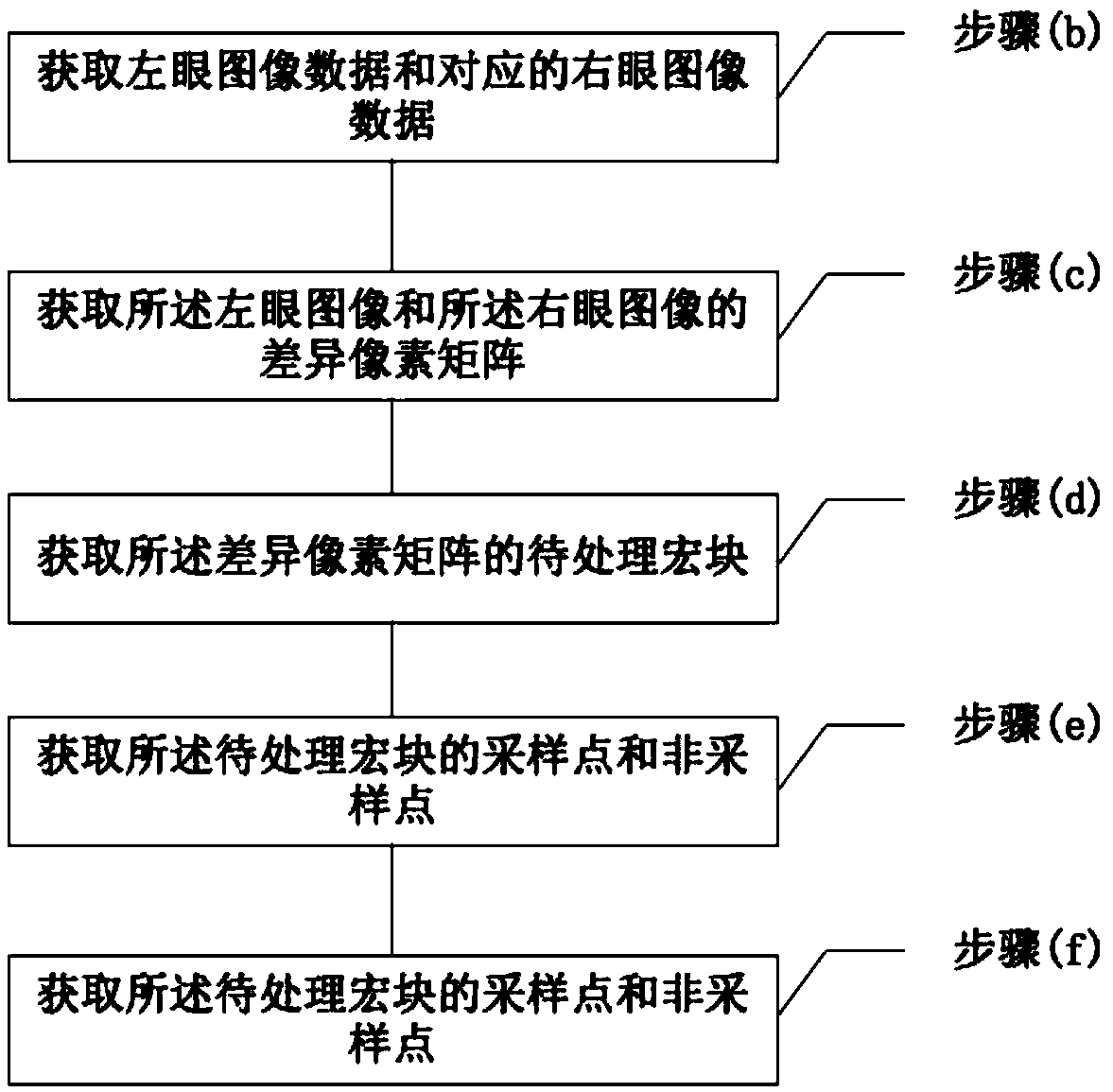

[0044] See Figure 1, which is a schematic flowchart of a virtual reality image processing method provided by an embodiment of the present invention. The image processing method includes the following steps:

[0045] (a) Obtain left-eye image data and corresponding right-eye image data;

[0046] (b) Obtain the difference pixel matrix of the left-eye image and the right-eye image;

[0047] (c) Obtain the macroblock to be processed of the difference pixel matrix;

[0048] (d) Obtain sampling points and non-sampling points of the macroblock to be processed;

[0049] (e) Obtain the prediction residuals of the sampling point and the non-sampling point;

[0050] (f) Obtain the distribution type of the prediction residual of the macroblock to be processed;

[0051] (g) Obtain the quantized residual of the macroblock to be processed according to the distribution type.
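Step (c) can be illustrated with a minimal sketch. The excerpt does not state how macroblocks are taken from the difference pixel matrix, so the code below assumes fixed-size, non-overlapping tiles visited in raster order. The function name `split_into_macroblocks` and the parameters `block_rows` and `block_cols` are hypothetical and are not taken from the patent text.

```python
def split_into_macroblocks(matrix, block_rows, block_cols):
    """Split a 2-D list into non-overlapping tiles in raster order.

    Assumption (not from the patent text): macroblocks are fixed-size
    rectangular tiles; tiles on the bottom or right edge may be smaller
    when the matrix size is not a multiple of the tile size.
    """
    if block_rows <= 0 or block_cols <= 0:
        raise ValueError("block dimensions must be positive")
    n_rows = len(matrix)
    n_cols = len(matrix[0]) if matrix else 0
    blocks = []
    for top in range(0, n_rows, block_rows):
        for left in range(0, n_cols, block_cols):
            # Slice the rows of this tile, then the columns within each row.
            block = [row[left:left + block_cols]
                     for row in matrix[top:top + block_rows]]
            blocks.append(block)
    return blocks


# Hypothetical 2 x 4 difference matrix split into 2 x 2 macroblocks.
diff = [[1, 2, 3, 4],
        [5, 6, 7, 8]]
macroblocks = split_into_macroblocks(diff, 2, 2)
```

Each returned tile would then be the "macroblock to be processed" that steps (d) to (g) operate on; those later steps are not sketched here because the excerpt does not define them.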

[0052] Step (b) includes:

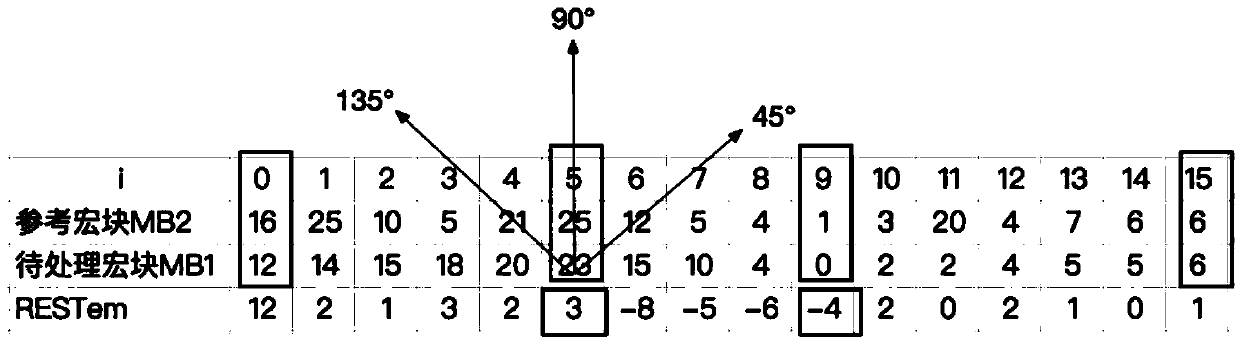

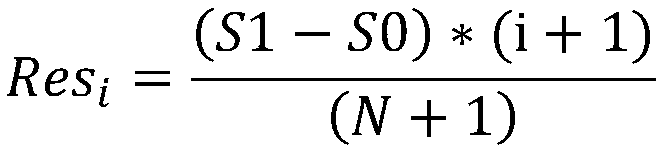

[0053] The difference pixel matrix is obtained by making a difference between ea...

Embodiment 2

[0079] Referring again to Figure 1, and building on the above embodiment, this embodiment describes a virtual reality image processing method in detail. Specifically, the method includes the following steps:

[0080] (S01) Acquire left-eye image data and right-eye image data obtained at any time on the host of the virtual reality device;

[0081] The left-eye image data and the right-eye image data can be two image pixel matrices of the same size. Let the two pixel matrices be the left-eye pixel matrix A and the right-eye pixel matrix B. A and B are both of size m × n, where m and n are integers greater than 0, m is the number of rows of the pixel matrix, and n is the number of columns.

[0082] (S02) Make a difference between the pixel value in the left-eye image data and the corresponding pixel value in the right-eye image data to obtain a difference pixel matrix;
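Step (S02) can be sketched as an element-wise subtraction of the two m × n pixel matrices. This is a minimal illustration only: the sign convention (left minus right) and the function name `difference_matrix` are assumptions, and the sample pixel values are invented for the example.

```python
def difference_matrix(left, right):
    """Return the element-wise difference left - right.

    Assumption (not confirmed by the excerpt): the difference is taken
    as left-eye pixel minus right-eye pixel at the same row and column.
    """
    same_rows = len(left) == len(right)
    if not same_rows or any(len(a) != len(b) for a, b in zip(left, right)):
        raise ValueError("left and right pixel matrices must have the same size")
    return [[a - b for a, b in zip(row_l, row_r)]
            for row_l, row_r in zip(left, right)]


# Hypothetical 2 x 3 left-eye matrix A and right-eye matrix B.
A = [[120, 121, 119],
     [118, 120, 122]]
B = [[119, 121, 120],
     [118, 117, 122]]
D = difference_matrix(A, B)
```

Because the two views of a scene are usually similar, most entries of `D` are expected to be small, which is what makes the difference matrix a natural input for the later residual and quantization steps.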

[0083] Among them, the differ...

Embodiment 3

[0164] Based on the above embodiments, this embodiment describes a virtual reality image processing device in detail. The device includes a processor and a storage medium, which together carry out the virtual reality image processing method of Embodiments 1 and 2: the storage medium stores the variables used by the method, and the processor executes the method.
