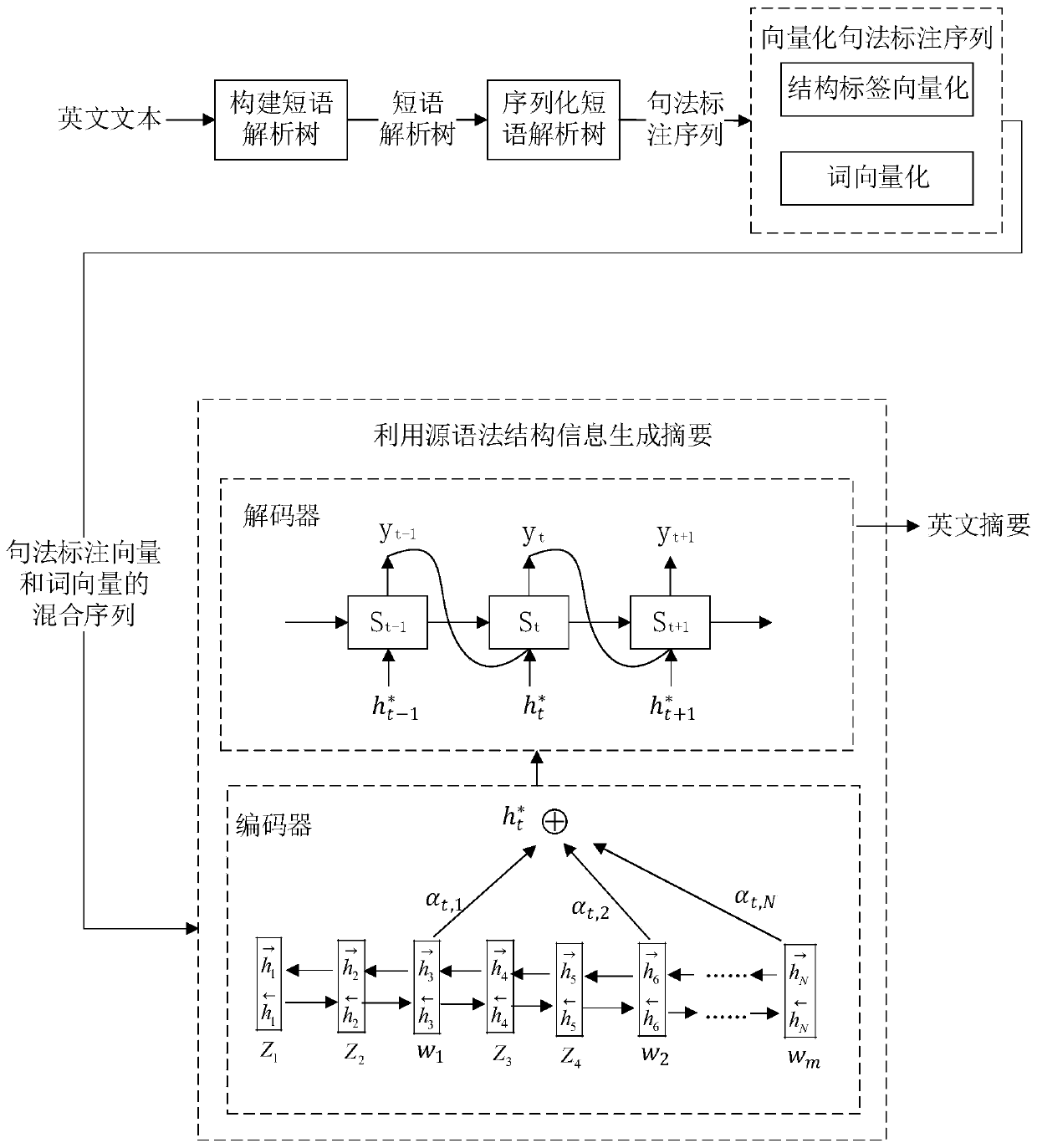

A generative text summarization method incorporating a sequence syntax annotation framework

A technique that fuses a sequence syntax annotation framework with generative summarization, applied in special data processing applications, instruments, electronic digital data processing, etc. It addresses the problem of generated summaries that do not conform to grammatical rules, and it produces summaries with strong readability.

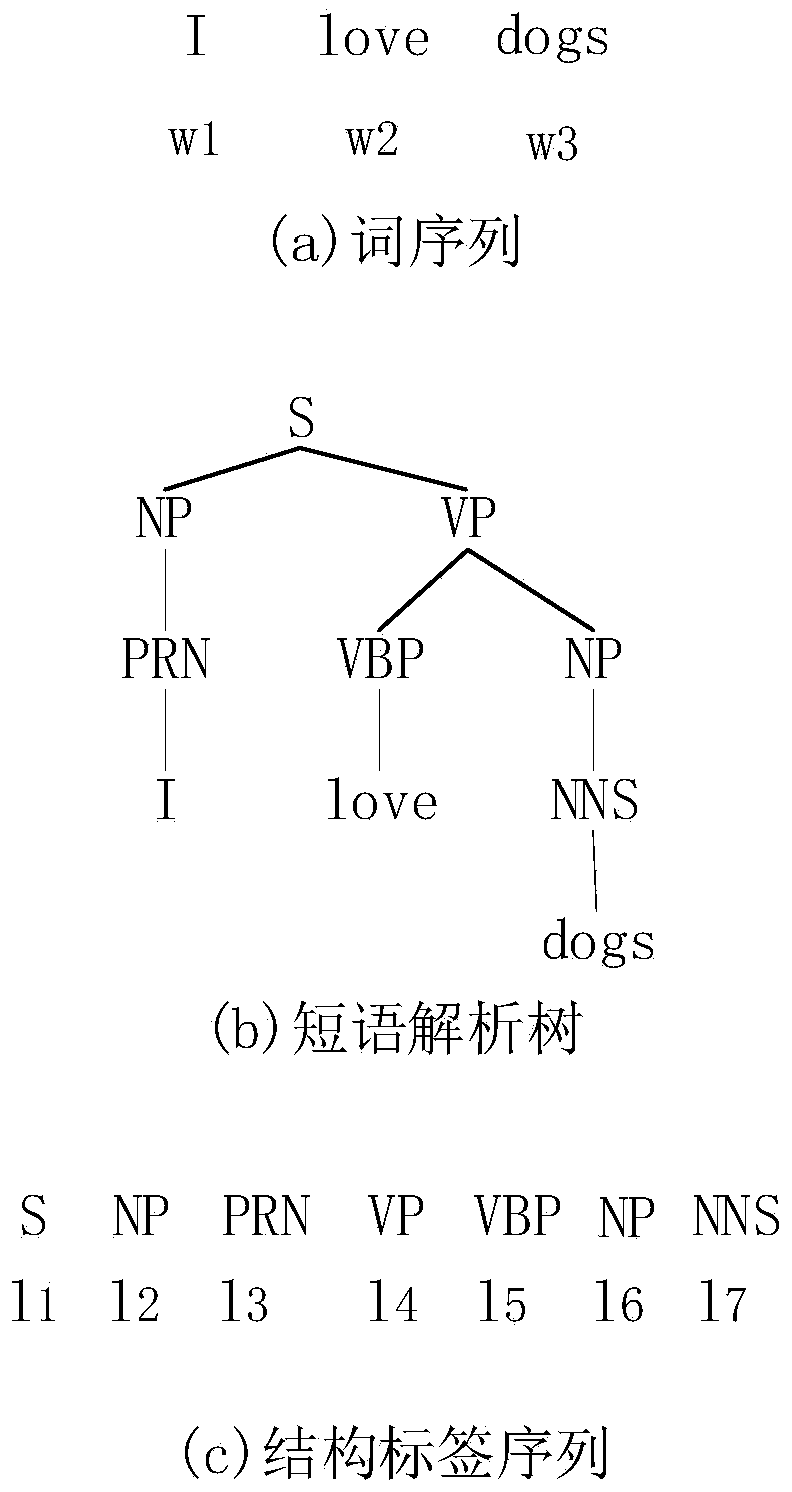

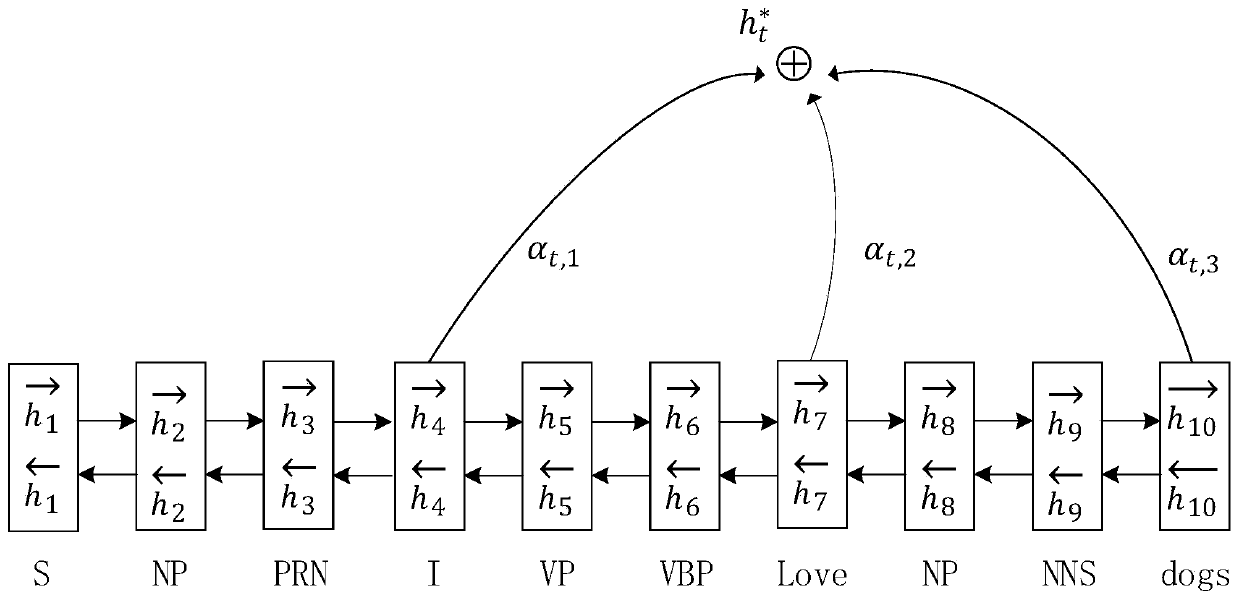

Embodiment Construction

[0024] To better illustrate the purpose and advantages of the present invention, the implementation of the method is described in further detail below in conjunction with examples.

[0025] The experiments use the CNN / Daily Mail dataset, which contains online news articles (781 tokens on average) paired with multi-sentence summaries (3.75 sentences or 56 tokens on average). The dataset has a total of 287,226 training pairs, 13,368 validation pairs, and 11,490 test pairs.

[0026] During the experiment, the maximum sequence length is 400 tokens on the source side and 100 tokens on the target side. The source and target vocabularies are limited to the 50,000 most frequent words, and all out-of-vocabulary words are uniformly marked as UNK. In addition, the word embedding dimension is set to 128, the encoder and decoder hidden layer dimensions are set to 256, the encoder and decoder vocabulary size is 50,000, the batch size is 16, a...
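The preprocessing described in paragraph [0026] can be sketched roughly as follows. This is a minimal illustration, not the patent's implementation: the names `ExperimentConfig`, `build_vocab`, and `encode` are hypothetical, the sketch assumes the UNK marker occupies one of the 50,000 vocabulary slots, and it reads "vocabulary dimension" as the word embedding dimension and "number of batch gradients" as the batch size. Settings that are cut off in the source text are not filled in.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass(frozen=True)
class ExperimentConfig:
    """Hypothetical container for the settings listed in paragraph [0026]."""
    max_source_len: int = 400   # maximum source-side length in tokens
    max_target_len: int = 100   # maximum target-side length in tokens
    vocab_size: int = 50_000    # vocabulary limit for source and target
    embedding_dim: int = 128    # assumed reading of "vocabulary dimension"
    hidden_dim: int = 256       # encoder and decoder hidden size
    batch_size: int = 16        # assumed reading of "number of batch gradients"


UNK = "UNK"


def build_vocab(token_lists, max_size):
    """Map the most frequent tokens to ids; id 0 is reserved for UNK.

    Assumption: UNK counts toward max_size.
    """
    counts = Counter(tok for toks in token_lists for tok in toks)
    vocab = {UNK: 0}
    for tok, _ in counts.most_common():
        if len(vocab) >= max_size:
            break
        if tok not in vocab:
            vocab[tok] = len(vocab)
    return vocab


def encode(tokens, vocab, max_len):
    """Truncate to max_len tokens and replace out-of-vocabulary words with UNK."""
    unk_id = vocab[UNK]
    return [vocab.get(tok, unk_id) for tok in tokens[:max_len]]
```

In use, the vocabulary would be built once from the training articles and summaries with `max_size=ExperimentConfig().vocab_size`, and each article and summary would then be encoded with `max_source_len` and `max_target_len` respectively.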
