Dual-stream video generation method based on different feature spaces of text

A dual-stream technique based on separate text feature spaces, applied in the fields of natural language processing, computer vision, and pattern recognition. It addresses problems such as the difficulty of high-quality generation, overestimation of the learning ability of a single model, and insufficient model understanding.

Detailed Description of the Embodiments

[0079] The present invention is further described below through embodiments in conjunction with the accompanying drawings; the embodiments do not limit the scope of the present invention in any way.

[0080] The present invention provides a dual-stream video generation method based on different feature spaces of text. The spatial features and the temporal features contained in the text are separated and modeled in a dual-stream manner, which maximizes the learning ability for each specified feature, and adversarial training is used to optimize the generation results.
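The data flow described in [0080] can be illustrated with a toy sketch. It rests on assumptions that are not in the source: the text feature is a fixed-length vector, the separation into spatial and temporal features is done by two hypothetical linear projections, the spatial stream yields one appearance vector shared by every frame, and the temporal stream yields a per-frame motion vector that is added to it. The class `DualStreamSketch` and all of its weights are hypothetical and randomly initialised; a real generator would output pixels and be trained.

```python
import random
from typing import List, Tuple

Vector = List[float]
Matrix = List[List[float]]


def matvec(w: Matrix, x: Vector) -> Vector:
    """Dense matrix-vector product."""
    return [sum(wi * xi for wi, xi in zip(row, x)) for row in w]


def rand_matrix(rows: int, cols: int, rng: random.Random) -> Matrix:
    """Small Gaussian-initialised weight matrix (untrained, illustration only)."""
    return [[rng.gauss(0.0, 0.1) for _ in range(cols)] for _ in range(rows)]


class DualStreamSketch:
    """Hypothetical toy model: split a text feature into a spatial part and a
    temporal part, then let each stream model only its own part."""

    def __init__(self, text_dim: int, feat_dim: int, num_frames: int, seed: int = 0):
        rng = random.Random(seed)
        self.num_frames = num_frames
        # Hypothetical separation layers (stand-ins for the separation step).
        self.w_spatial = rand_matrix(feat_dim, text_dim, rng)
        self.w_temporal = rand_matrix(feat_dim, text_dim, rng)
        # Temporal stream: one projection per frame index (toy motion model).
        self.w_motion = [rand_matrix(feat_dim, feat_dim, rng) for _ in range(num_frames)]

    def separate(self, text_feat: Vector) -> Tuple[Vector, Vector]:
        """Project the text feature into a spatial and a temporal feature."""
        return matvec(self.w_spatial, text_feat), matvec(self.w_temporal, text_feat)

    def generate(self, text_feat: Vector) -> List[Vector]:
        """Return one feature vector per frame: shared appearance + per-frame motion."""
        spatial, temporal = self.separate(text_feat)
        frames = []
        for t in range(self.num_frames):
            motion = matvec(self.w_motion[t], temporal)  # temporal stream output
            frames.append([a + m for a, m in zip(spatial, motion)])  # fuse streams
        return frames
```

For example, `DualStreamSketch(text_dim=8, feat_dim=4, num_frames=3).generate([0.5] * 8)` returns three frame vectors of four values each. Keeping appearance and motion in separate streams means each stream only has to learn one kind of feature, which is the motivation the paragraph gives for the dual-stream design.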

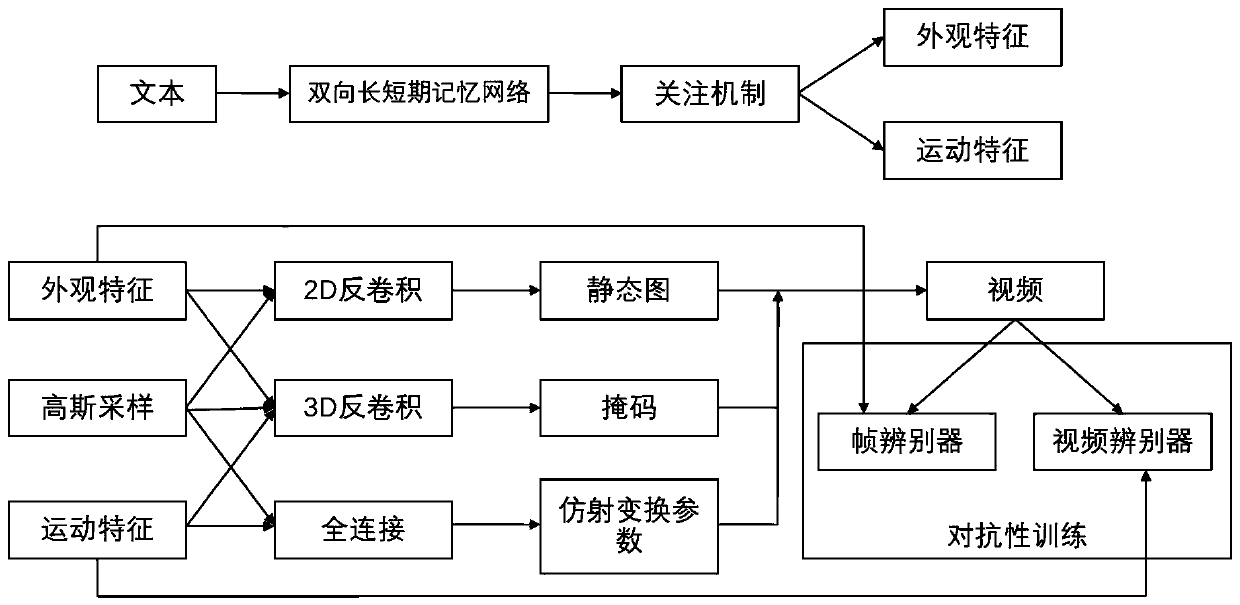

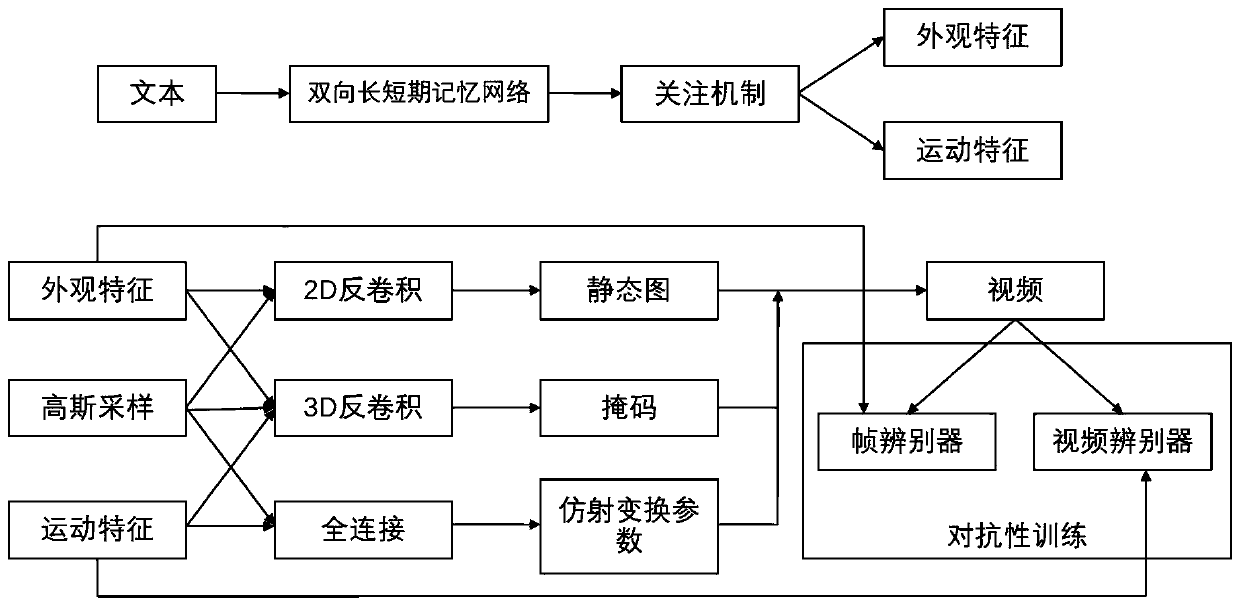
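[0080] states that the generation results are optimized through adversarial training but does not give the loss functions at this point. Assuming the standard GAN formulation, a discriminator D outputs the probability that a video is real, and the generator is trained with the common non-saturating loss. The functions below are hypothetical and operate on precomputed discriminator probabilities rather than on actual networks.

```python
import math
from typing import Sequence

EPS = 1e-12  # guards against log(0)


def discriminator_loss(d_real: Sequence[float], d_fake: Sequence[float]) -> float:
    """Binary cross-entropy with real videos labeled 1 and generated videos labeled 0.
    d_real / d_fake are discriminator probabilities in (0, 1)."""
    real_term = sum(-math.log(p + EPS) for p in d_real) / len(d_real)
    fake_term = sum(-math.log(1.0 - p + EPS) for p in d_fake) / len(d_fake)
    return real_term + fake_term


def generator_loss(d_fake: Sequence[float]) -> float:
    """Non-saturating generator loss: pushes D(generated video) toward 1."""
    return sum(-math.log(p + EPS) for p in d_fake) / len(d_fake)
```

When the discriminator can no longer tell real from generated videos and outputs 0.5 for both, `discriminator_loss` equals 2 ln 2 (about 1.386). Training alternates between lowering the discriminator loss and lowering the generator loss.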

[0081] The method provided by the present invention comprises a text feature extraction process, a dual-stream video generation process, and an adversarial training process. Figure 1 shows the flow of the method; the specific steps are as follows:

[0082] 1. Perform text feature extraction and separation, comprising steps 11) to 13):

[0083] 11) Use the bidirectional long short...
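Step 11) is truncated in this copy. Assuming it refers to a bidirectional long short-term memory (BiLSTM) network used to encode the input sentence, a minimal pure-Python sketch of such an encoder follows. The names `LSTMCell`, `run`, and `bilstm_encode`, the random untrained weights, and the choice of returning both per-word features and a sentence feature are all illustrative assumptions, not the patent's specification.

```python
import math
import random
from typing import List, Tuple

Vector = List[float]


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


class LSTMCell:
    """Minimal LSTM cell with randomly initialised weights (toy example)."""

    def __init__(self, in_dim: int, hid_dim: int, rng: random.Random):
        self.hid_dim = hid_dim
        cols = in_dim + hid_dim + 1  # input [x; h; 1], bias folded into the last column
        self.w = [[rng.gauss(0.0, 0.1) for _ in range(cols)] for _ in range(4 * hid_dim)]

    def step(self, x: Vector, h: Vector, c: Vector) -> Tuple[Vector, Vector]:
        z = x + h + [1.0]
        pre = [sum(wi * zi for wi, zi in zip(row, z)) for row in self.w]
        n = self.hid_dim
        i = [sigmoid(v) for v in pre[0:n]]            # input gate
        f = [sigmoid(v) for v in pre[n:2 * n]]        # forget gate
        o = [sigmoid(v) for v in pre[2 * n:3 * n]]    # output gate
        g = [math.tanh(v) for v in pre[3 * n:]]       # candidate cell state
        c_new = [fk * ck + ik * gk for fk, ck, ik, gk in zip(f, c, i, g)]
        h_new = [ok * math.tanh(ck) for ok, ck in zip(o, c_new)]
        return h_new, c_new


def run(cell: LSTMCell, seq: List[Vector]) -> List[Vector]:
    """Run the cell over a sequence from zero state; return all hidden states."""
    h, c = [0.0] * cell.hid_dim, [0.0] * cell.hid_dim
    outputs = []
    for x in seq:
        h, c = cell.step(x, h, c)
        outputs.append(h)
    return outputs


def bilstm_encode(seq: List[Vector], hid_dim: int, seed: int = 0) -> Tuple[List[Vector], Vector]:
    """Return per-word features [h_fwd; h_bwd] and a sentence feature built from
    the final forward state and the final backward state."""
    rng = random.Random(seed)
    in_dim = len(seq[0])
    fwd, bwd = LSTMCell(in_dim, hid_dim, rng), LSTMCell(in_dim, hid_dim, rng)
    h_fwd = run(fwd, seq)
    h_bwd = run(bwd, seq[::-1])[::-1]  # re-align backward states with word positions
    words = [hf + hb for hf, hb in zip(h_fwd, h_bwd)]
    sentence = h_fwd[-1] + h_bwd[0]  # both halves have seen the whole sentence
    return words, sentence
```

Per-word features of this kind are a plausible input to the separation described in steps 12) and 13), and the sentence vector a plausible global condition; both roles are assumptions, since the corresponding text is not available here.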
