Dynamic load balancing method, system and terminal

A dynamic load balancing method applied in resource allocation, program control design, instruments, and related fields. It addresses problems such as low computing performance and the failure to account for GPU performance fluctuation.


Embodiment Construction

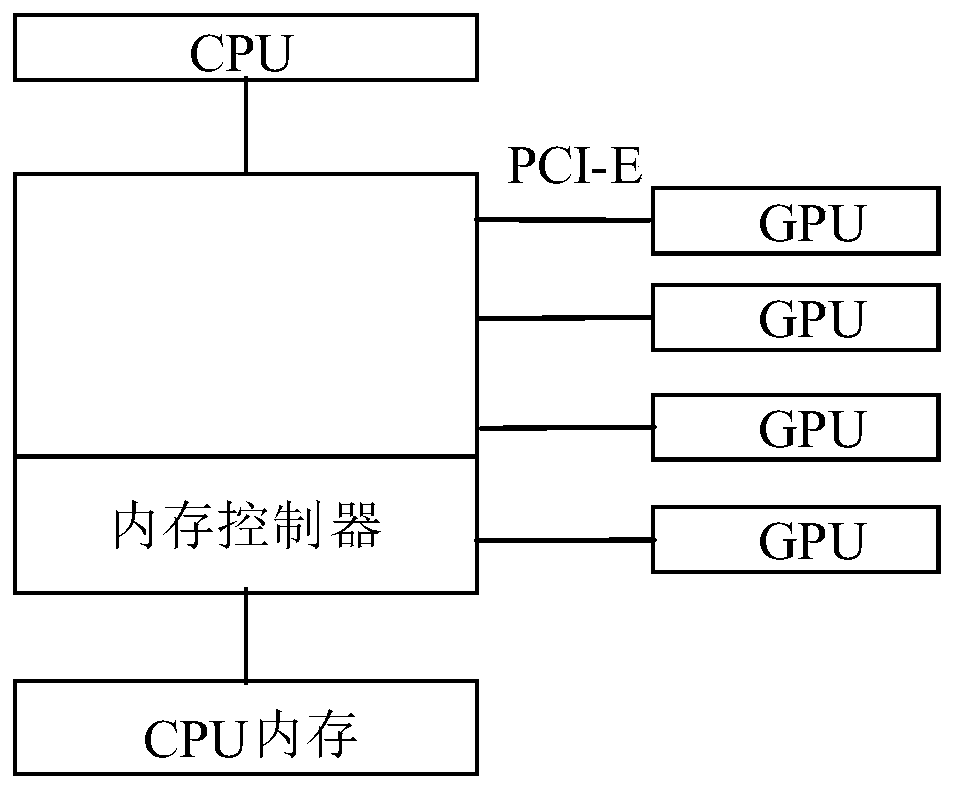

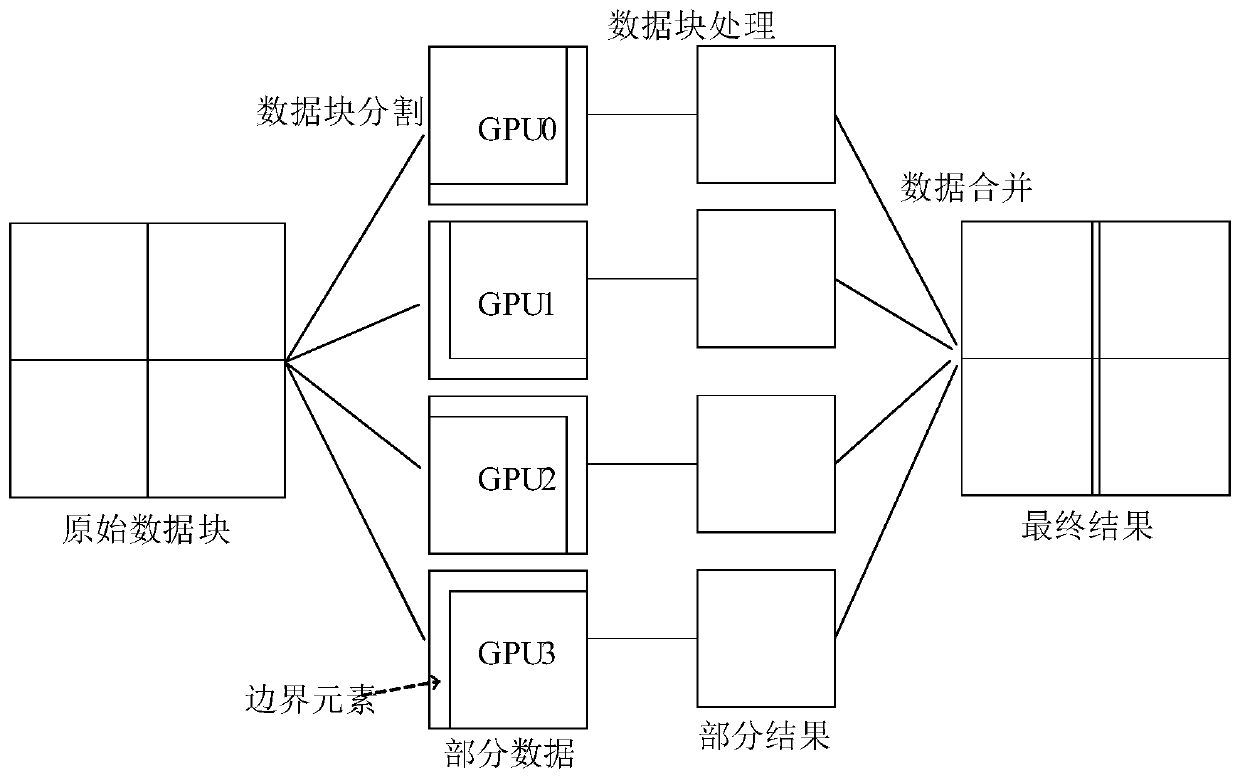

[0026] The scheme is described below with reference to the accompanying drawings and specific embodiments.

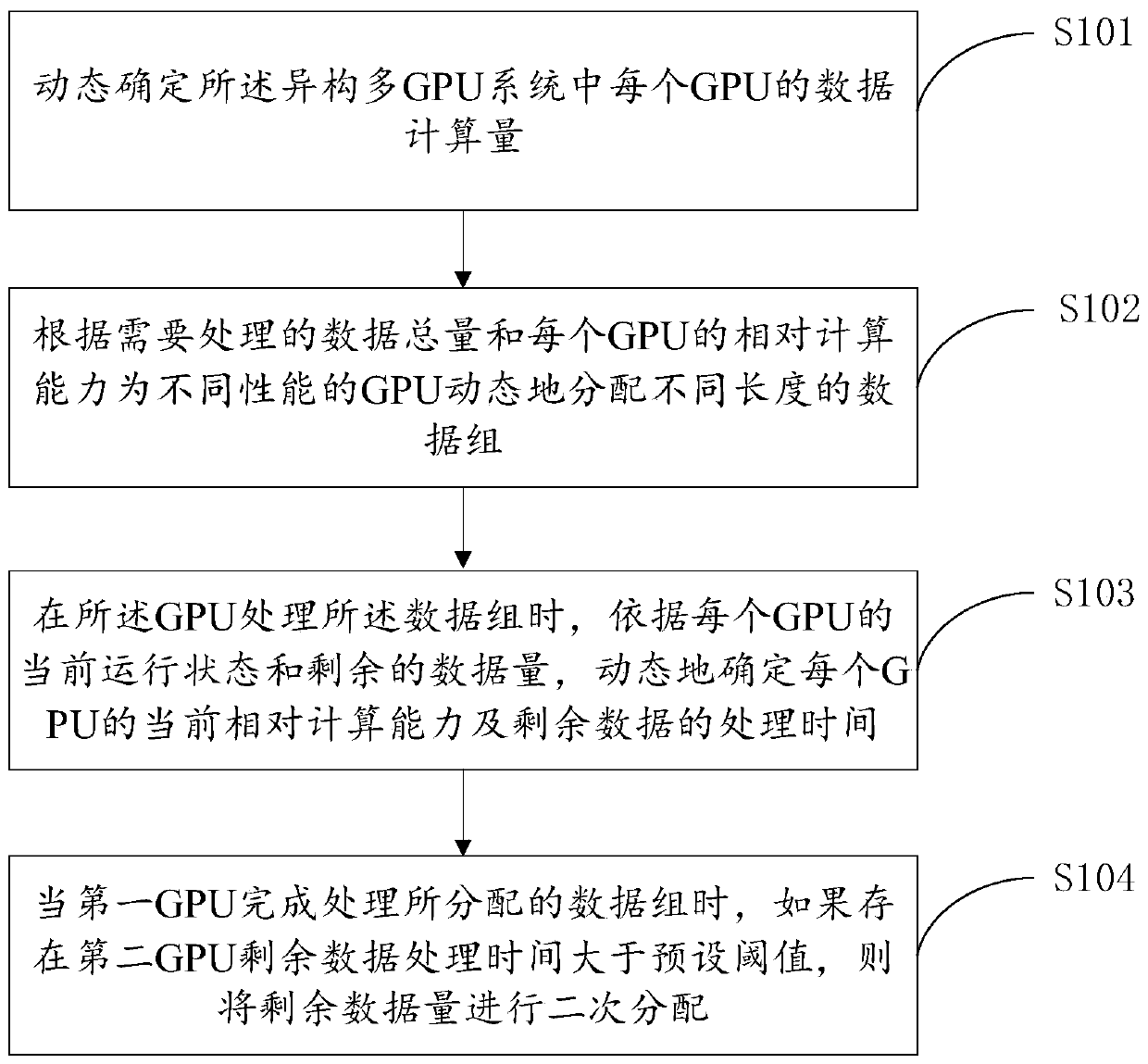

[0027] Image 3 is a schematic flow diagram of the dynamic load balancing method provided in an embodiment of the present application. As shown in image 3, the method includes:

[0028] S101. Dynamically determine the data computation amount of each GPU in the heterogeneous multi-GPU system.

[0029] Treat each GPU as an independent computing node, and predict the relative computing power of each node using a fuzzy neural network.
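The source does not describe the network's structure, inputs, or training. As one way to picture the prediction step, the sketch below assumes a zero-order Takagi-Sugeno (TSK) fuzzy neural network with hand-set parameters. The input features (normalized recent throughput and utilization), the class `TSKFuzzyNet`, and the function `relative_power` are hypothetical illustrations, not the model used in the patent.

```python
import math
from typing import List, Sequence


def gaussian(x: float, center: float, sigma: float) -> float:
    """Membership degree of x in a Gaussian fuzzy set (fuzzification layer)."""
    return math.exp(-((x - center) ** 2) / (2.0 * sigma ** 2))


class TSKFuzzyNet:
    """Zero-order Takagi-Sugeno fuzzy neural network, forward pass only.

    Each rule has one Gaussian membership function per input and a constant
    consequent. Parameters are hand-set here; in practice they would be
    trained on measured GPU performance (training is not shown).
    """

    def __init__(self, centers: List[List[float]], sigmas: List[List[float]],
                 consequents: List[float]) -> None:
        self.centers = centers          # centers[rule][input]
        self.sigmas = sigmas            # sigmas[rule][input]
        self.consequents = consequents  # consequents[rule]

    def predict(self, x: Sequence[float]) -> float:
        # Rule layer: firing strength is the product of input membership degrees.
        strengths = []
        for c_rule, s_rule in zip(self.centers, self.sigmas):
            w = 1.0
            for xi, ci, si in zip(x, c_rule, s_rule):
                w *= gaussian(xi, ci, si)
            strengths.append(w)
        total = sum(strengths)
        if total == 0.0:  # all memberships underflowed; fall back to the mean
            return sum(self.consequents) / len(self.consequents)
        # Normalization + output layers: weighted average of rule consequents.
        return sum(w / total * y for w, y in zip(strengths, self.consequents))


def relative_power(net: TSKFuzzyNet, features: List[Sequence[float]]) -> List[float]:
    """Predict each node's computing power and normalize the values to sum to 1."""
    raw = [net.predict(f) for f in features]
    total = sum(raw)
    return [p / total for p in raw]


# Illustrative two-rule network: a "slow GPU" rule and a "fast GPU" rule.
# Hypothetical inputs per GPU: [normalized throughput, normalized utilization].
net = TSKFuzzyNet(
    centers=[[0.2, 0.2], [0.8, 0.8]],
    sigmas=[[0.3, 0.3], [0.3, 0.3]],
    consequents=[1.0, 4.0],
)
shares = relative_power(net, [[0.9, 0.7], [0.3, 0.4]])
print(shares)
```

Normalizing the predictions makes them relative, so only each node's share of the total matters, not the absolute scale of the consequents.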

[0030] As an illustrative example, suppose the heterogeneous multi-GPU system contains $m$ GPUs, corresponding to $m$ computing nodes $NODE = \{N_1, N_2, \ldots, N_m\}$. The original large data block is initially divided into a set of $n$ unit data blocks of equal size, $DATA = \{D_1, D_2, \ldots, D_n\}$. The purpose of load balancing is to establish a mapping from the unit data block set $DATA$ to the computing device set $NODE$, and ...
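The source is truncated before it states how the mapping is chosen. Assuming each node receives a share of the $n$ unit blocks proportional to its predicted relative computing power, a minimal sketch of the $DATA \to NODE$ mapping follows. The largest-remainder rounding, the contiguous block ranges, and the names `allocate_blocks` and `map_data_to_nodes` are illustrative assumptions.

```python
from typing import Dict, List, Sequence


def allocate_blocks(n_blocks: int, power: Sequence[float]) -> List[int]:
    """Split n equal-size unit blocks across m nodes in proportion to power.

    Uses the largest-remainder method so the counts are integers summing to n.
    """
    if n_blocks < 0 or not power or any(p < 0 for p in power) or sum(power) <= 0:
        raise ValueError("need n_blocks >= 0 and non-negative powers with positive sum")
    total = float(sum(power))
    quotas = [n_blocks * p / total for p in power]
    counts = [int(q) for q in quotas]  # floor, since every quota is >= 0
    leftover = n_blocks - sum(counts)
    # Hand the remaining blocks to the nodes with the largest fractional parts.
    order = sorted(range(len(power)), key=lambda i: quotas[i] - counts[i], reverse=True)
    for i in order[:leftover]:
        counts[i] += 1
    return counts


def map_data_to_nodes(n_blocks: int, power: Sequence[float]) -> Dict[int, List[int]]:
    """Build the DATA -> NODE mapping as contiguous ranges of block indices.

    Keys are node indices 1..m (N_1..N_m); values are block indices 1..n (D_1..D_n).
    """
    counts = allocate_blocks(n_blocks, power)
    mapping: Dict[int, List[int]] = {}
    start = 1
    for node, c in enumerate(counts, start=1):
        mapping[node] = list(range(start, start + c))
        start += c
    return mapping


# Example: 10 unit blocks over 3 nodes with relative powers 0.5, 0.3, 0.2.
mapping = map_data_to_nodes(10, [0.5, 0.3, 0.2])
print(mapping)
```

Contiguous ranges are only one simple choice; because the source's mapping rule is cut off, other assignments (for example, interleaving blocks across nodes) would be equally consistent with the text.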
