Service-based GPU instruction submission server

A server and instruction technology, applied in the fields of processor architecture/configuration, program synchronization, multiprogramming arrangements, etc., that addresses the problem of GPU instructions not being submitted in time and achieves high operating efficiency.


Embodiment Construction

[0027] The present invention will be further described in detail below in conjunction with the accompanying drawings and embodiments.

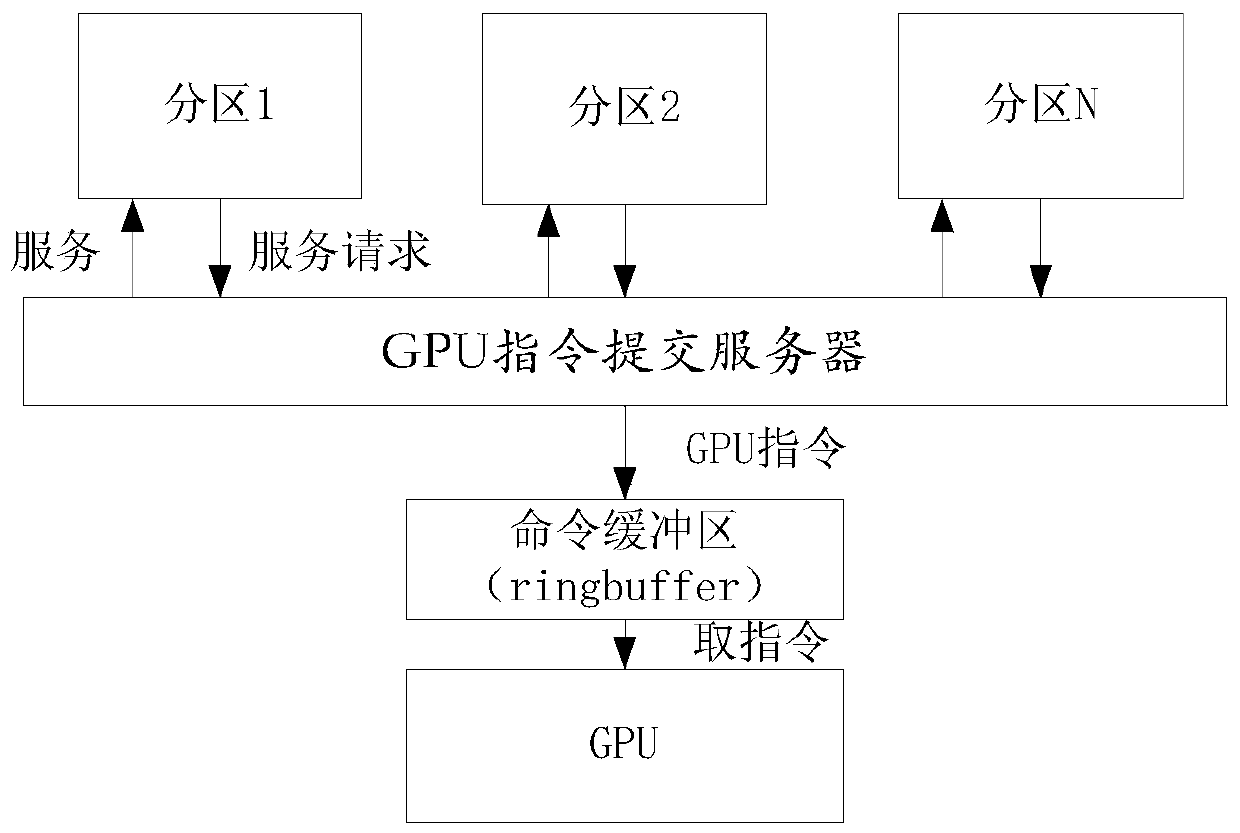

[0028] The service-based GPU command submission server of this embodiment runs in the kernel mode of the operating system. It receives the command requests sent by the partitions, then interprets and executes them. Partitions no longer send GPU commands directly to the ring buffer; instead, they submit GPU commands to the server, which interacts with the ring buffer on their behalf. As shown in Figure 1, the service-based GPU instruction submission server performs the following program steps:

[0029] Step 1: Complete initialization after power on.

[0030] Step 2: Run in system kernel mode and check in a loop whether any partition CPU has requested to submit GPU instructions; if so, go to step 3.

[0031] Step 3: Receive the GPU instructions submitted by the partition's CPU. Partition CPUs need to submit GPU ins...
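
The steps visible above can be illustrated with a minimal user-space sketch in Python. It is not the patented implementation: the real server runs in kernel mode, and the text of step 3 is truncated, so anything beyond "receive the request and place the commands in the ring buffer" is an assumption. The names `RingBuffer`, `SubmissionServer`, `request_submit`, and `poll_once`, the request queue, and the string commands are all hypothetical and chosen only for illustration.

```python
from collections import deque


class RingBuffer:
    """Fixed-capacity command ring (hypothetical stand-in for the GPU ring buffer)."""

    def __init__(self, capacity):
        self.slots = [None] * capacity
        self.tail = 0    # next slot the server writes to
        self.count = 0   # number of occupied slots (nothing drains them in this sketch)

    def push(self, command):
        """Write one command; return False if the ring is full."""
        if self.count == len(self.slots):
            return False
        self.slots[self.tail] = command
        self.tail = (self.tail + 1) % len(self.slots)
        self.count += 1
        return True


class SubmissionServer:
    """Single point through which partitions reach the ring buffer."""

    def __init__(self, ring_capacity=8):
        # Step 1: initialization (assumed here to mean creating the ring
        # and an empty queue of pending partition requests).
        self.ring = RingBuffer(ring_capacity)
        self.requests = deque()

    def request_submit(self, partition_id, commands):
        """Called by a partition instead of writing to the ring directly."""
        self.requests.append((partition_id, list(commands)))

    def poll_once(self):
        """One iteration of the step 2 loop; returns the number of commands written."""
        # Step 2: check whether any partition has a pending request.
        if not self.requests:
            return 0
        # Step 3: receive the request and forward its commands to the ring.
        partition_id, commands = self.requests.popleft()
        written = 0
        for cmd in commands:
            if not self.ring.push((partition_id, cmd)):
                break
            written += 1
        if written < len(commands):
            # Assumption: commands that did not fit are retried on a later poll.
            self.requests.appendleft((partition_id, commands[written:]))
        return written
```

In this sketch a partition would call `request_submit(1, ["DRAW", "FLUSH"])` and the server would call `poll_once()` repeatedly in its main loop. Because only the server touches `RingBuffer`, partitions never contend for the ring directly, which is the property paragraph [0028] describes.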

