A Video Splicing Method Based on Image Semantic Segmentation

A technology combining semantic segmentation and video splicing, applied in the field of image processing to achieve high-quality splicing.


AI Technical Summary

Problems solved by technology

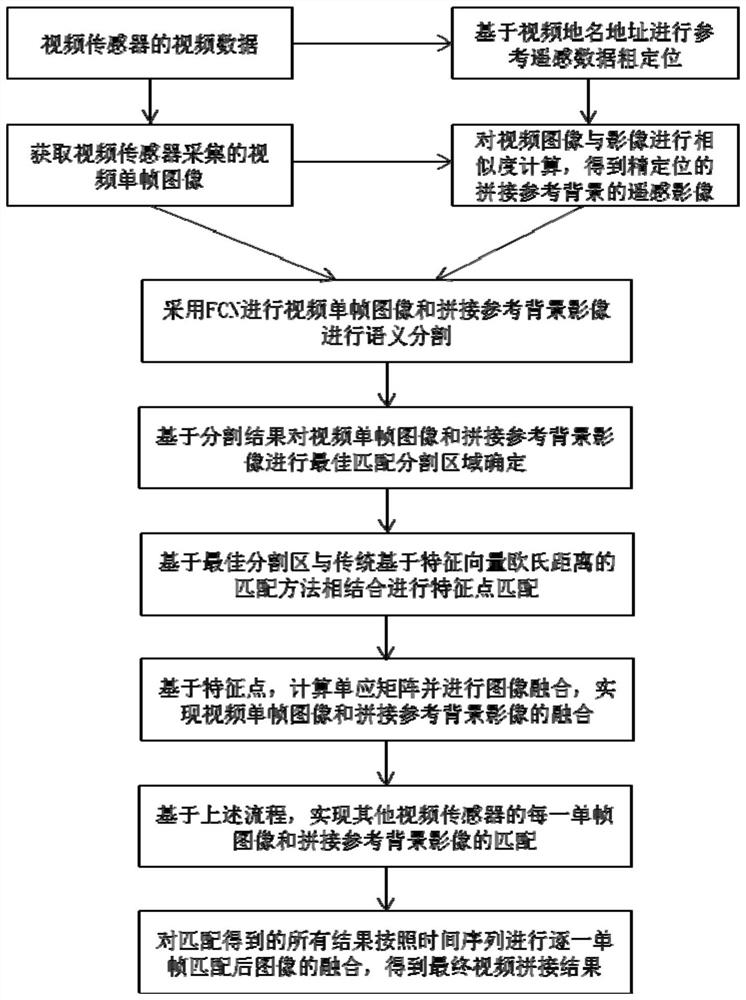


Examples

Embodiment 2

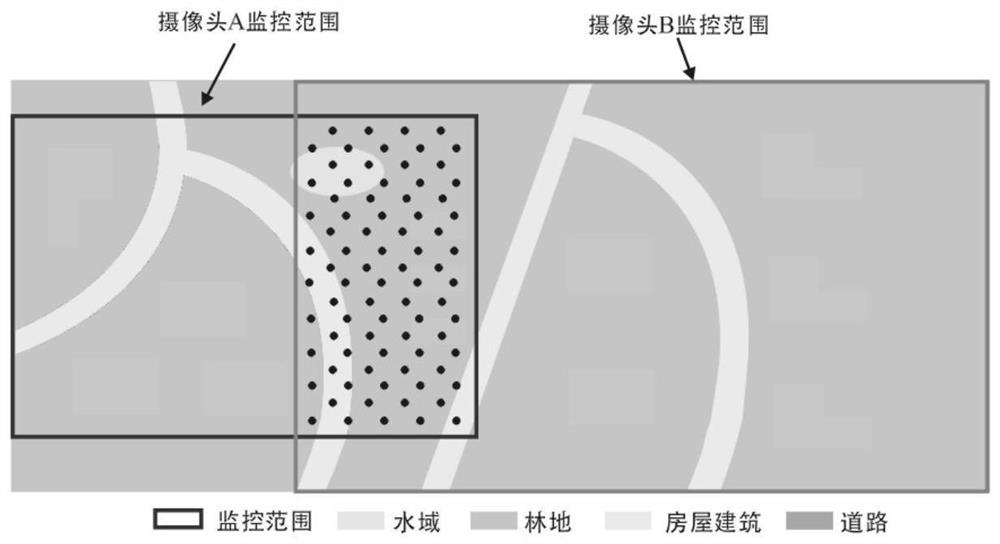

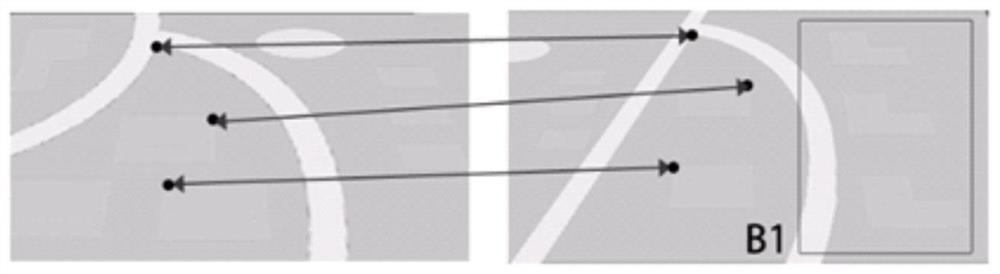

[0103] As shown in Figures 2 to 4, in this embodiment, video splicing is a technique for seamlessly stitching multiple video sequences that share overlapping regions into a wide-view or even panoramic video. The most common case is splicing video from multiple fixed cameras in static scenes, such as fixed-angle traffic surveillance cameras and indoor surveillance cameras. The usual approach to static video stitching is to select matching feature points with the same characteristics in the overlapping regions of the videos, and then use these feature points to perform the geometric transformation and fusion stitching of the videos. Therefore, the more feature points there are and the more accurate they are, the better the matching and stitching results. A large overlapping region helps meet this requirement, so an overly small overlap should be avoided. However, under normal circumstances, static video images have their own characteristics, such as traffic surveill...
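The paragraph above describes the generic static-stitching pipeline: match feature points in the overlap, estimate a geometric transform from them, then fuse the images. Below is a minimal pure-Python sketch of the last two steps. It assumes the matched point pairs are already available (for example from SIFT matching). It is not the method of this patent. For simplicity it fits an affine transform by least squares, whereas a homography estimated with RANSAC is more typical in practice. The blending step is plain linear feathering. All function names are hypothetical. The least-squares fit also shows why more, and more accurate, matches help: every extra correct pair adds a constraint that averages out localisation noise.

```python
def solve3(a, b):
    """Solve the 3x3 system a @ x = b by Gaussian elimination with partial pivoting."""
    m = [list(row) + [rhs] for row, rhs in zip(a, b)]  # augmented matrix
    for col in range(3):
        pivot = max(range(col, 3), key=lambda r: abs(m[r][col]))
        if abs(m[pivot][col]) < 1e-12:
            raise ValueError("degenerate (e.g. collinear) point configuration")
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, 3):
            factor = m[r][col] / m[col][col]
            for c in range(col, 4):
                m[r][c] -= factor * m[col][c]
    x = [0.0, 0.0, 0.0]
    for r in range(2, -1, -1):  # back substitution
        x[r] = (m[r][3] - sum(m[r][c] * x[c] for c in range(r + 1, 3))) / m[r][r]
    return x


def fit_affine(src, dst):
    """Least-squares affine map (x, y) -> (a*x + b*y + c, d*x + e*y + f).

    src, dst: matched feature points [(x, y), ...]; needs >= 3 non-collinear pairs.
    Simplification (assumption): affine instead of a full homography, no RANSAC.
    """
    if len(src) != len(dst) or len(src) < 3:
        raise ValueError("need at least 3 matched point pairs")
    # Normal equations (A^T A) p = A^T t, where each row of A is [x, y, 1].
    ata = [[0.0] * 3 for _ in range(3)]
    atu = [0.0] * 3
    atv = [0.0] * 3
    for (x, y), (u, v) in zip(src, dst):
        row = (x, y, 1.0)
        for i in range(3):
            for j in range(3):
                ata[i][j] += row[i] * row[j]
            atu[i] += row[i] * u
            atv[i] += row[i] * v
    return solve3(ata, atu), solve3(ata, atv)


def apply_affine(params, pt):
    """Map one point through the fitted affine transform."""
    (a, b, c), (d, e, f) = params
    x, y = pt
    return (a * x + b * y + c, d * x + e * y + f)


def feather_blend(left, right):
    """Linearly blend two equal-length pixel rows that cover the same overlap.

    The weight of `left` falls from 1 to 0 across the overlap, so the seam fades smoothly.
    """
    n = len(left)
    if n == 0 or n != len(right):
        raise ValueError("rows must be non-empty and of equal length")
    if n == 1:
        return [(left[0] + right[0]) / 2.0]
    out = []
    for i, (l, r) in enumerate(zip(left, right)):
        w = 1.0 - i / (n - 1)
        out.append(w * l + (1.0 - w) * r)
    return out


if __name__ == "__main__":
    # Hypothetical matches: the second view is the first shifted by (100, 5) pixels.
    src = [(0, 0), (10, 0), (0, 10), (10, 10)]
    dst = [(100, 5), (110, 5), (100, 15), (110, 15)]
    params = fit_affine(src, dst)
    print(apply_affine(params, (3, 4)))  # approximately (103.0, 9.0)
    print(feather_blend([10, 10, 10], [20, 20, 20]))  # [10.0, 15.0, 20.0]
```

In a real pipeline, the same fit would be applied to all matched pairs in the overlap. Each pixel row of the overlap would then be blended this way after warping.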

Embodiment 3

[0109] In this embodiment, to better illustrate the effect of the present invention, the splicing results of the traditional SIFT-based splicing method and of the method of the present invention are compared on actual data in the same environment. The experiments were run on an Intel Core i7-6700K processor with a clock speed of 4.00 GHz and 16 GB of memory. The method is implemented in C++ using the Caffe deep learning framework.

[0110] In this embodiment, the experimental data cover 54 typical intersections in Linyi City, Shandong Province, using video data from 132 high-definition cameras with a frame size of 1920x1080 pixels. The Earth-observation remote sensing data are high-resolution orthophoto images with a resolution of 0.1 m. The video frames of 100 high-definition cameras and 36 orthophotos of the intersection areas are used to build the training set, the video frames of 20 high-definition cameras and 10 orthophotos of the interse...
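The data are divided per camera rather than per frame: the frames of 100 cameras form the training set, and the frames of another 20 cameras form a second subset. The text is truncated at this point. Below is a minimal sketch of such a camera-level partition, so that frames from one camera never appear in two subsets. It rests on several assumptions. The camera IDs are hypothetical. The seeded random shuffle is an assumption, since the text does not say how cameras were assigned. The 12 cameras left after the 100 + 20 allocation are returned as a separate group only because 132 - 100 - 20 = 12. Their role is not stated in the surviving text.

```python
import random


def split_by_camera(camera_ids, sizes, seed=0):
    """Partition whole cameras into disjoint groups of the requested sizes.

    Assigning cameras (not individual frames) keeps every frame of a camera in
    exactly one group. Any cameras left over are returned as a final extra group.
    Assumption: cameras are assigned by a seeded random shuffle.
    """
    if sum(sizes) > len(camera_ids):
        raise ValueError("requested more cameras than are available")
    ids = list(camera_ids)
    random.Random(seed).shuffle(ids)
    groups, start = [], 0
    for n in sizes:
        groups.append(ids[start:start + n])
        start += n
    groups.append(ids[start:])  # leftover cameras (role not stated in the text)
    return groups


if __name__ == "__main__":
    cameras = [f"cam_{i:03d}" for i in range(132)]  # hypothetical camera IDs
    train, second, rest = split_by_camera(cameras, [100, 20])
    print(len(train), len(second), len(rest))  # 100 20 12
```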
