Fusion and compression method for a multi-source neural machine translation model

A fusion and compression method for machine translation models, classified under neural learning methods, biological neural network models, neural architectures, etc. It addresses problems such as poor-quality auxiliary corpora, large parameter counts, and dependence, and achieves an improved BLEU score, high accuracy, and a large degree of compression.

Embodiment 1

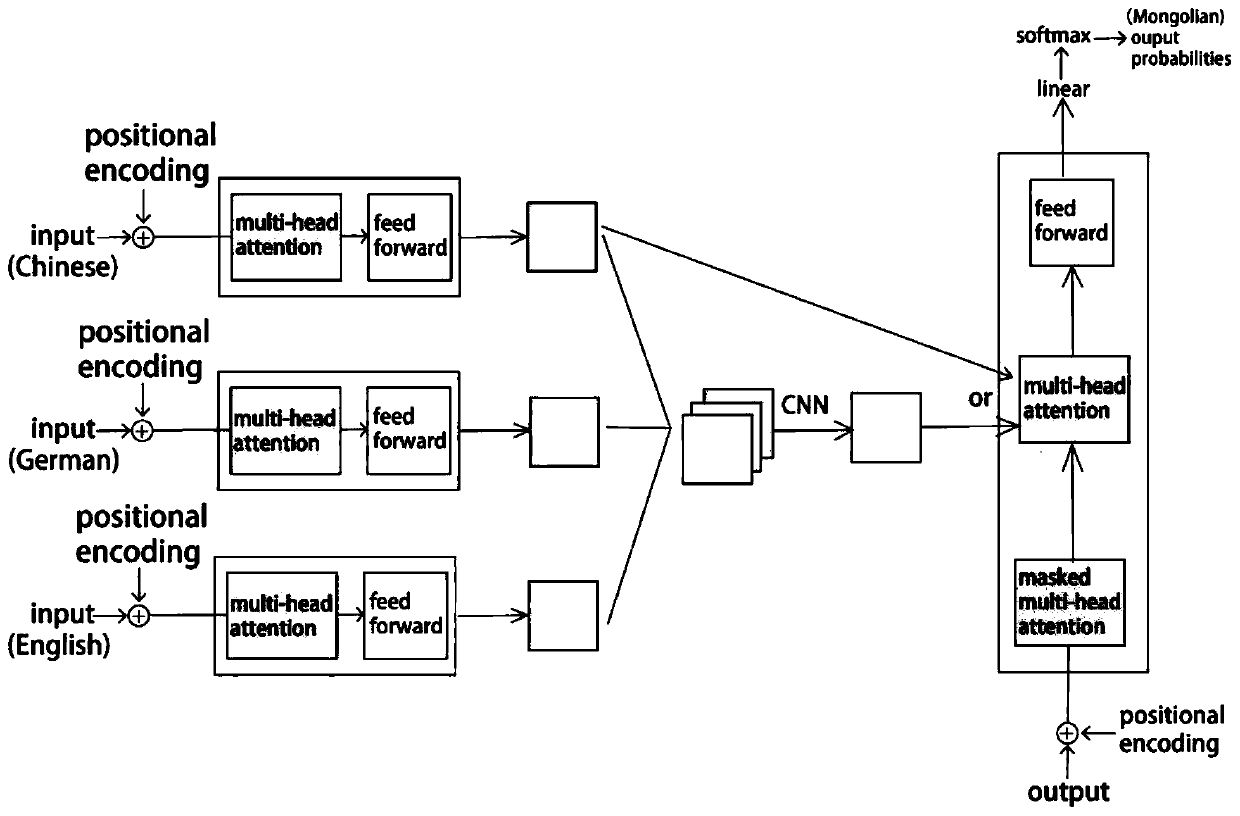

[0041] The present invention applies to neural machine translation tasks in which source-language resources are abundant and target-language resources are scarce. For example, Chinese, English, and German are resource-rich languages with many mature translation systems. In Chinese-to-Mongolian translation, however, the parallel corpus between the two languages is scarce, so it is difficult to train an effective translation system directly. The present invention therefore uses a Chinese corpus together with Chinese-English and Chinese-German translation systems to obtain parallel English and German corpora. Three encoders encode the three source languages (Chinese, English, and German), and their outputs are fused and then decoded by the decoder. This allows the translation model to learn from more linguistic information and improves translation quality.
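The fusion step described above can be illustrated with a minimal sketch. The excerpt does not state how the three encoder outputs are combined, so the code below assumes one simple possibility: mean-pool each encoder's hidden states over time, then take a weighted average across the sources. The names `mean_pool` and `fuse_sources`, the weights, and the toy encoder outputs are all hypothetical and are not taken from the patent.

```python
from typing import List, Sequence

# One row per time step, one column per hidden unit.
Matrix = List[List[float]]


def mean_pool(states: Matrix) -> List[float]:
    """Collapse a variable-length sequence of hidden states into one vector."""
    steps = len(states)
    return [sum(column) / steps for column in zip(*states)]


def fuse_sources(encoded: Sequence[Matrix], weights: Sequence[float]) -> List[float]:
    """Weighted average of the pooled representation of each source language.

    ASSUMPTION: weighted averaging is used here only for illustration; the
    patent excerpt does not specify the fusion operation.
    """
    if len(encoded) != len(weights):
        raise ValueError("need exactly one weight per source")
    pooled = [mean_pool(matrix) for matrix in encoded]
    dim = len(pooled[0])
    if any(len(vector) != dim for vector in pooled):
        raise ValueError("all encoders must share the same hidden size")
    total = sum(weights)
    return [
        sum(w * vector[i] for w, vector in zip(weights, pooled)) / total
        for i in range(dim)
    ]


# Hypothetical encoder outputs for one sentence in each source language.
# The sequences have different lengths because the translations differ.
zh_states = [[1.0, 0.0], [3.0, 2.0]]
en_states = [[0.0, 4.0]]
de_states = [[4.0, 1.0], [4.0, 1.0], [4.0, 1.0]]

# The fused vector is what a decoder would receive in this sketch.
fused = fuse_sources([zh_states, en_states, de_states], weights=[1.0, 1.0, 1.0])
print(fused)
```

Pooling before fusion sidesteps the fact that the Chinese, English, and German sentences generally have different lengths. A real system would more likely keep the per-token states and let the decoder attend over each encoder, but that choice is likewise not specified in the excerpt.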

[0042] Here, the background of the sp...
