161 results about "Parallel corpora" patented technology

Parallel corpora: in linguistics, the term parallel corpora typically refers to texts that are translations of each other. The term comparable corpora, by contrast, refers to texts in two languages that are similar in content but are not translations of each other.

Systems and methods for using anchor text as parallel corpora for cross-language information retrieval

Inactive · US7146358B1 · Quality improvement · Less translation · Data processing applications · Web data indexing · Documentation procedure · Ambiguity

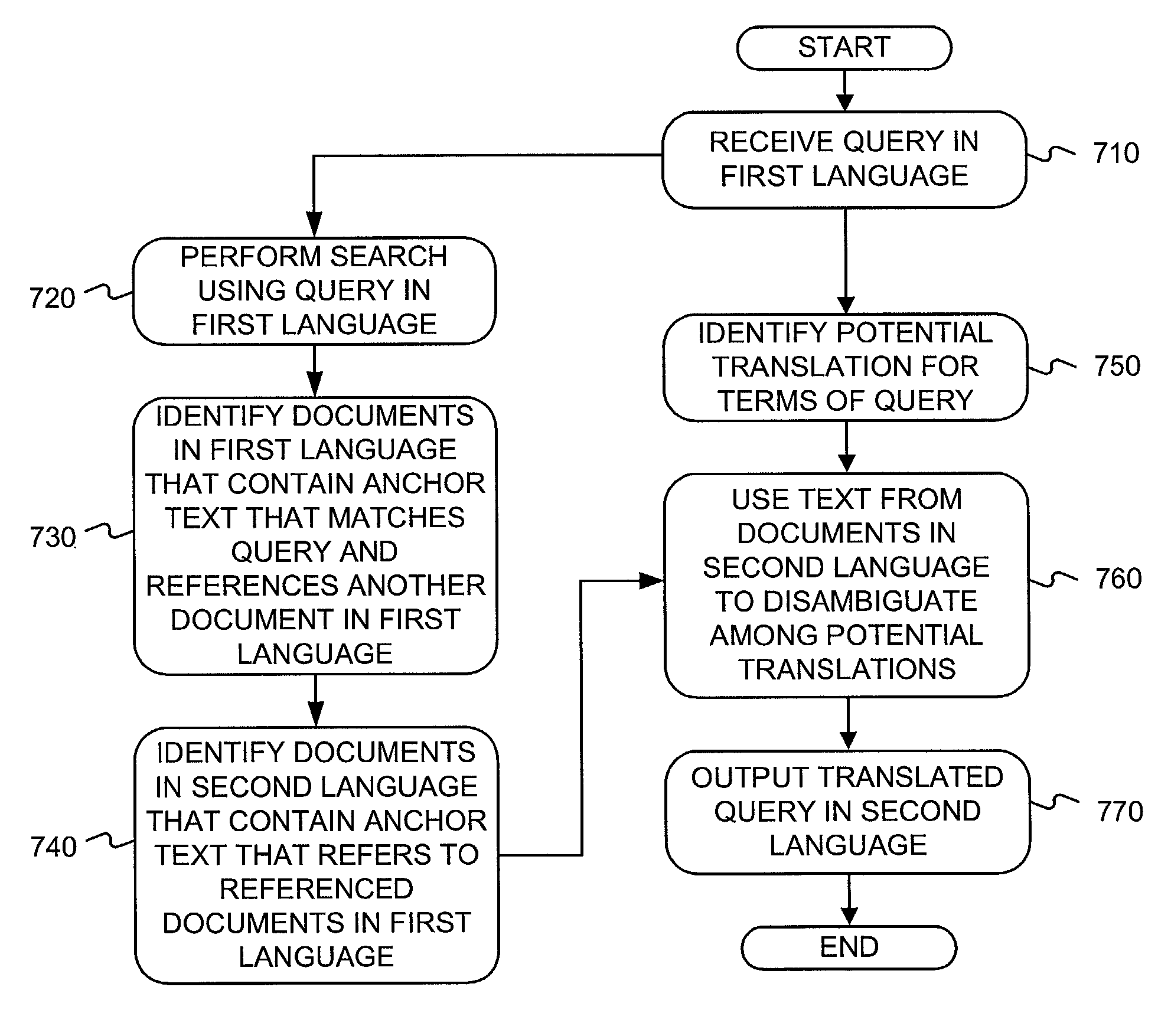

A system performs cross-language query translations. The system receives a search query that includes terms in a first language and determines possible translations of the terms of the search query into a second language. The system also locates documents for use as parallel corpora to aid in the translation by: (1) locating documents in the first language that contain references that match the terms of the search query and identify documents in the second language; (2) locating documents in the first language that contain references that match the terms of the query and refer to other documents in the first language and identify documents in the second language that contain references to the other documents; or (3) locating documents in the first language that match the terms of the query and identify documents in the second language that contain references to the documents in the first language. The system may use the second language documents as parallel corpora to disambiguate among the possible translations of the terms of the search query and identify one of the possible translations as a likely translation of the search query into the second language.

Owner:GOOGLE LLC
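
The disambiguation step in the abstract above can be illustrated with a minimal sketch. It assumes the second-language documents have already been located and reduced to a list of anchor-text strings, and it picks the candidate translation that occurs in the most of them. The function name `pick_translation`, the substring test, and the sample data are hypothetical and are not taken from the patent.

```python
from collections import Counter


def pick_translation(candidates, target_anchor_texts):
    """Return the candidate that appears in the most anchor texts, or None."""
    counts = Counter()
    for text in target_anchor_texts:
        lowered = text.lower()
        for cand in candidates:
            # Assumption: simple case-insensitive substring matching.
            if cand.lower() in lowered:
                counts[cand] += 1
    if not counts:
        return None  # no candidate was observed at all
    # Ties are resolved in favour of the earlier candidate.
    return max(candidates, key=lambda c: counts[c])


# Hypothetical example: Spanish "banco" may translate to "bank" or "bench".
candidates = ["bank", "bench"]
anchors = ["Online bank accounts", "Central bank of Spain", "Park bench designs"]
best = pick_translation(candidates, anchors)
```

A real system would weight matches by link structure rather than counting raw occurrences; the count is only meant to show how the second-language text acts as evidence for one translation over another.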

Multi-domain machine translation model adaptation

Inactive · US20140200878A1 · Evaluate quality · Natural language translation · Special data processing applications · Parallel corpora · Text string

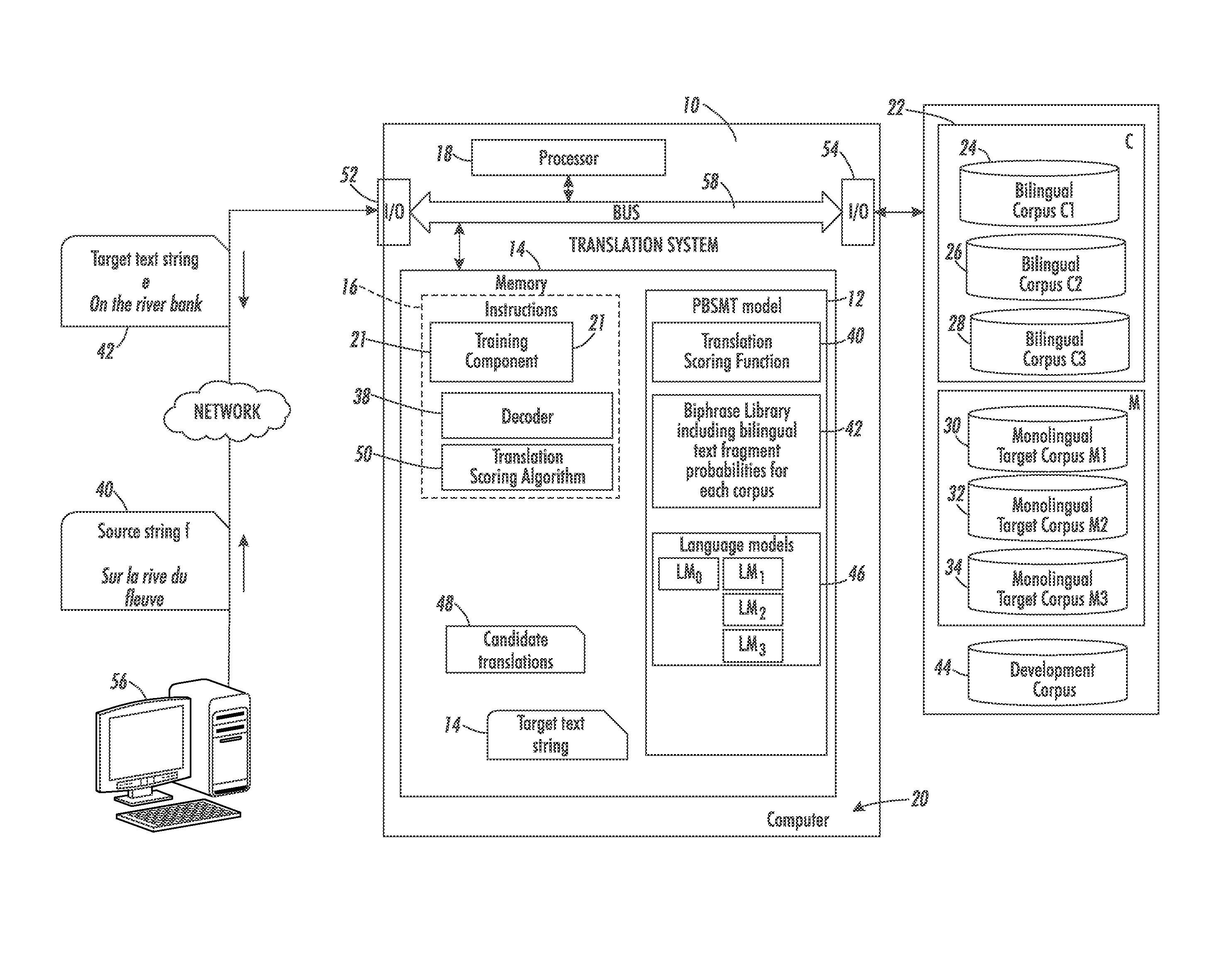

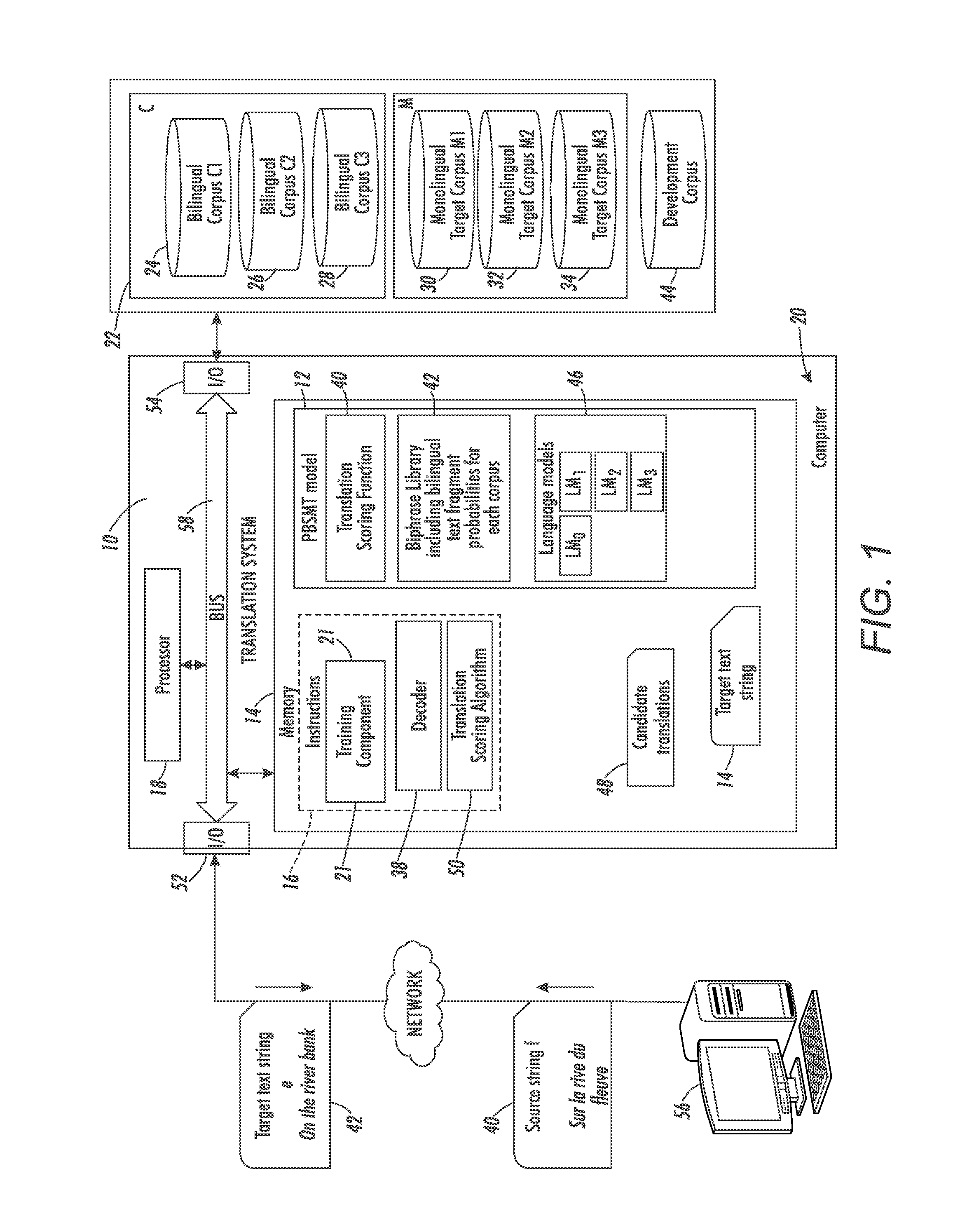

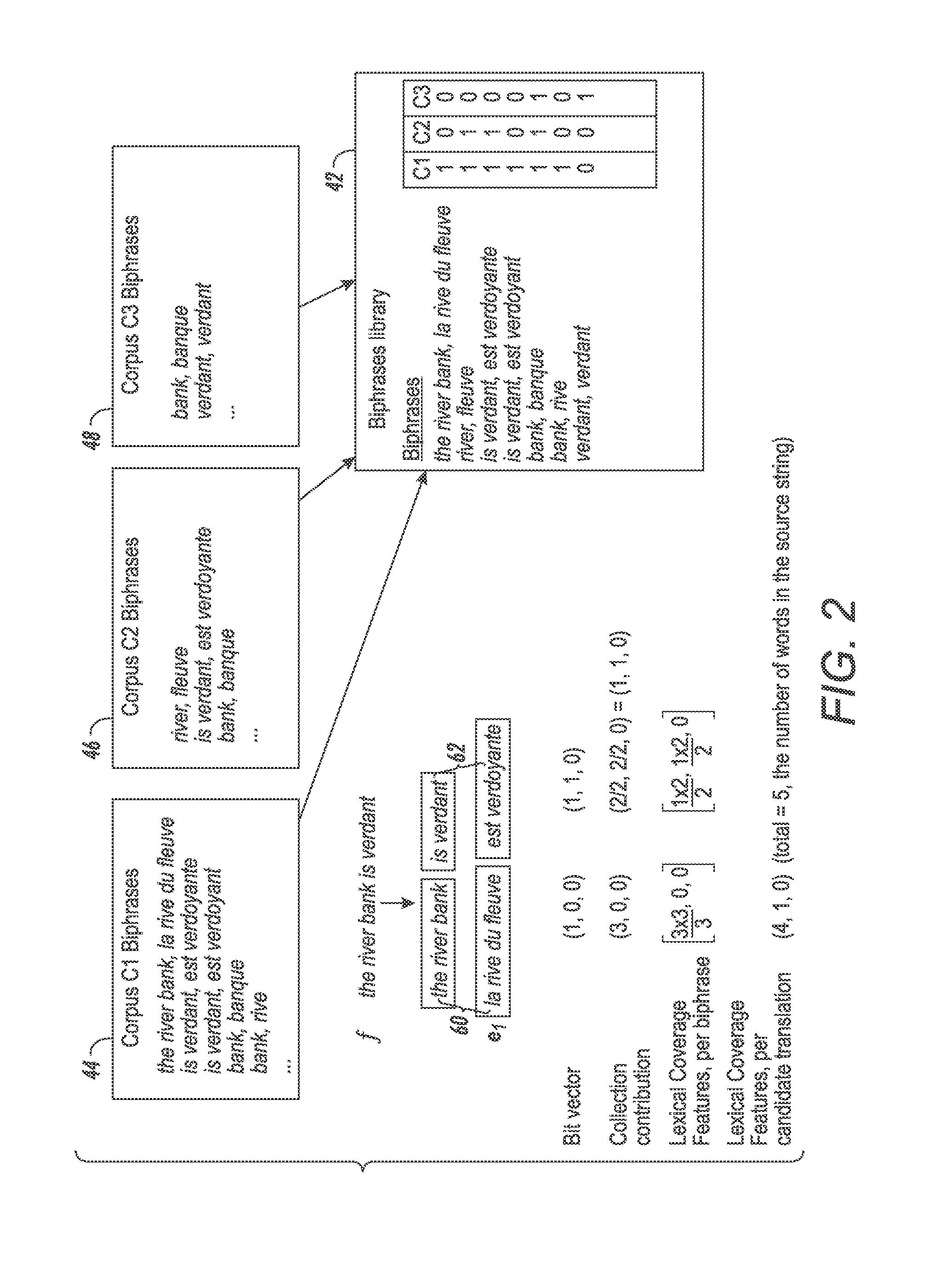

A method adapted to multiple corpora includes training a statistical machine translation model which outputs a score for a candidate translation, in a target language, of a text string in a source language. The training includes learning a weight for each of a set of lexical coverage features that are aggregated in the statistical machine translation model. The lexical coverage features include a lexical coverage feature for each of a plurality of parallel corpora. Each of the lexical coverage features represents a relative number of words of the text string for which the respective parallel corpus contributed a biphrase to the candidate translation. The method may also include learning a weight for each of a plurality of language model features, the language model features comprising one language model feature for each of the domains.

Owner:XEROX CORP
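
As a rough illustration of the lexical coverage features described above, the sketch below computes, for each parallel corpus, the fraction of source words covered by biphrases that the corpus contributed, and then combines the features with weights. The data layout (a list of `(words_covered, corpus_id)` tuples), the function names, and the weight values are assumptions made for the example; in the patent the weights are learned during training.

```python
def lexical_coverage(source_len, biphrases):
    """Map each corpus id to the share of source words its biphrases cover.

    biphrases: list of (num_source_words_covered, corpus_id) tuples describing
    the biphrases used to build one candidate translation.
    """
    covered = {}
    for n_words, corpus in biphrases:
        covered[corpus] = covered.get(corpus, 0) + n_words
    return {corpus: n / source_len for corpus, n in covered.items()}


def weighted_score(features, weights):
    """Weighted sum of feature values; unknown features get weight 0."""
    return sum(weights.get(name, 0.0) * value for name, value in features.items())


# Hypothetical candidate: an 8-word source string translated with three biphrases.
features = lexical_coverage(8, [(3, "news"), (2, "medical"), (3, "news")])
score = weighted_score(features, {"news": 0.5, "medical": 1.0})
```

Here the "news" corpus covers 6 of the 8 source words and the "medical" corpus covers 2, so a model that weights the medical feature more heavily rewards candidates built from in-domain biphrases.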

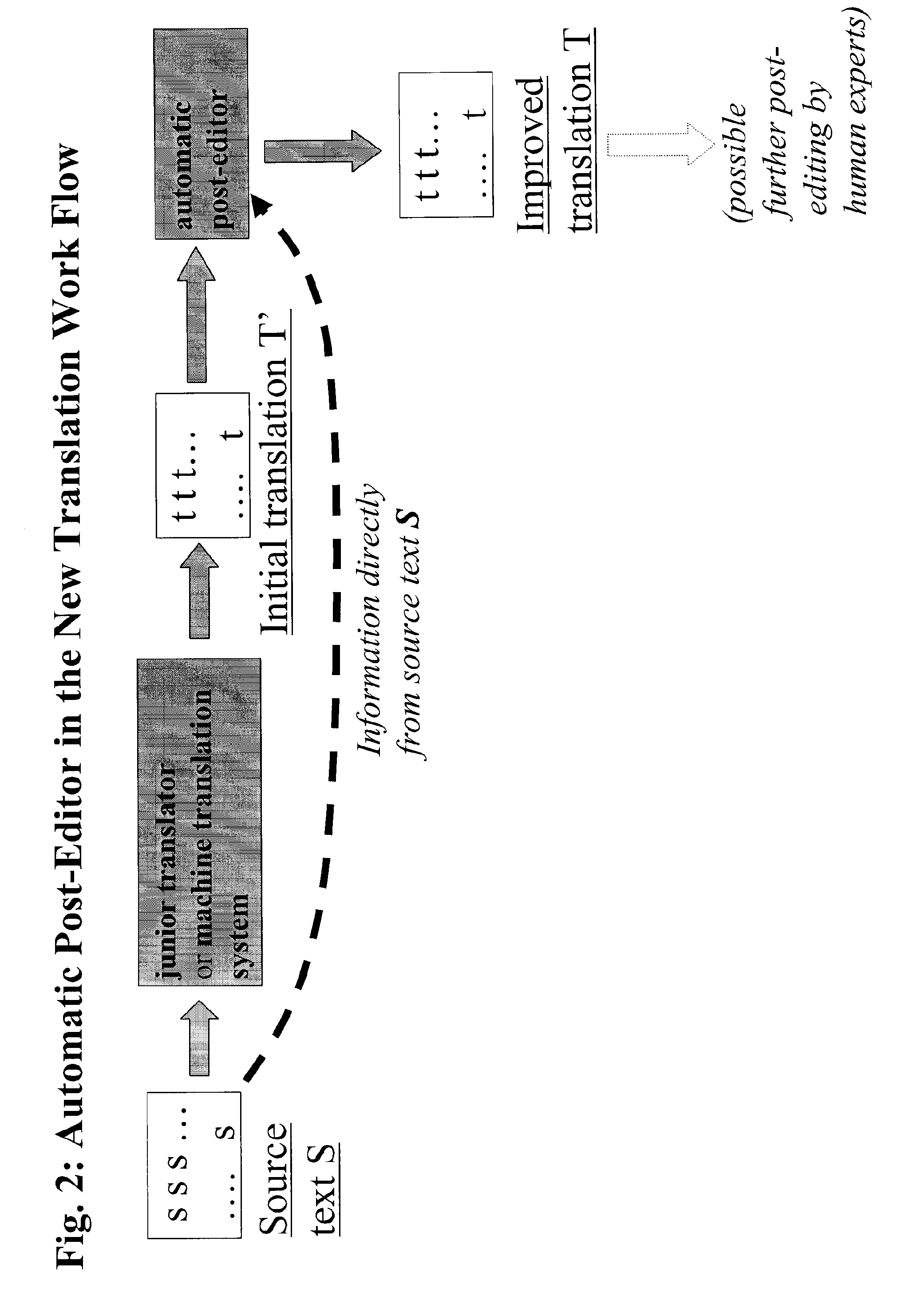

Means and method for automatic post-editing of translations

Inactive · US20090326913A1 · Natural language translation · Special data processing applications · Sentence pair · Parallel corpora

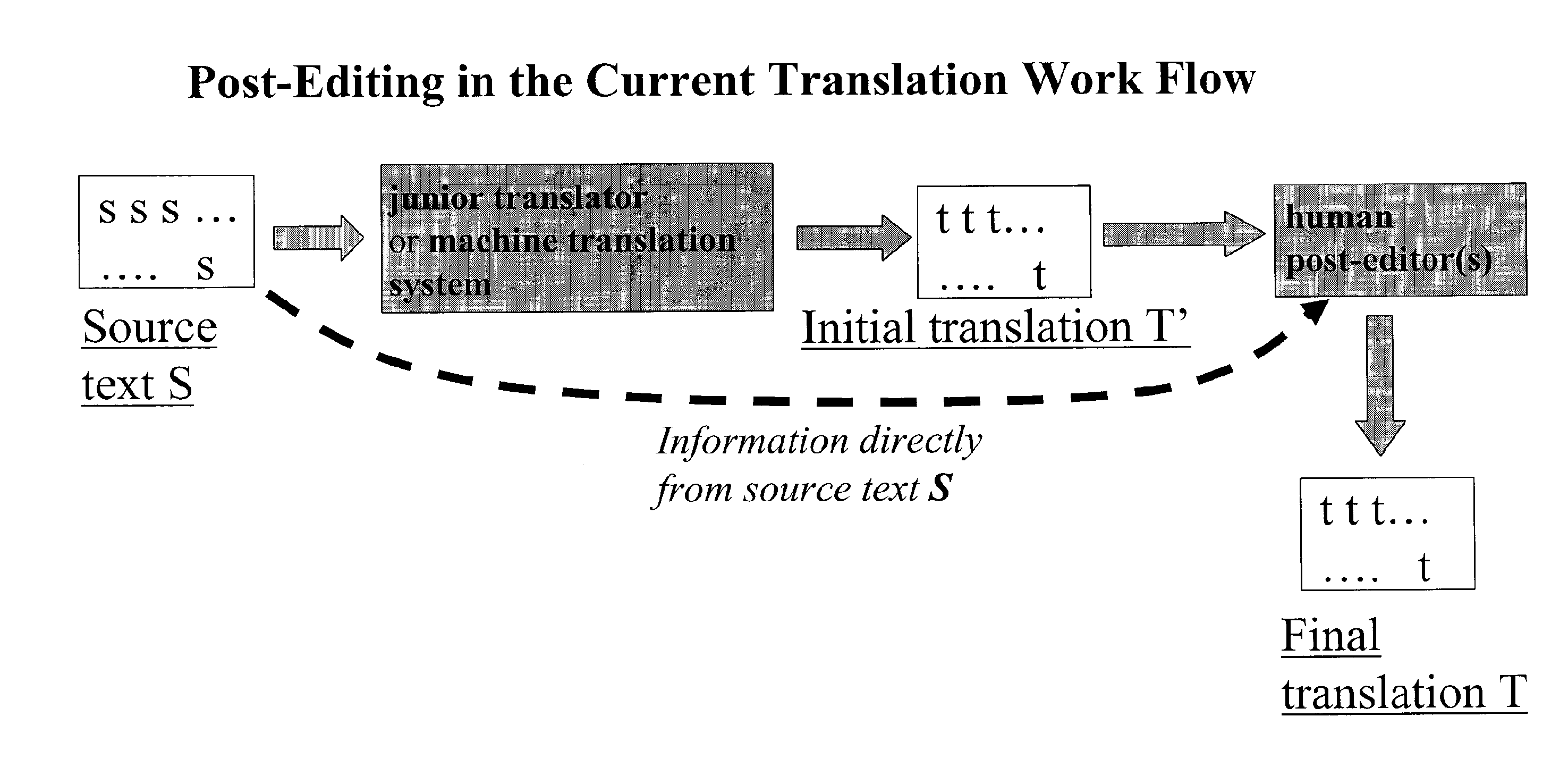

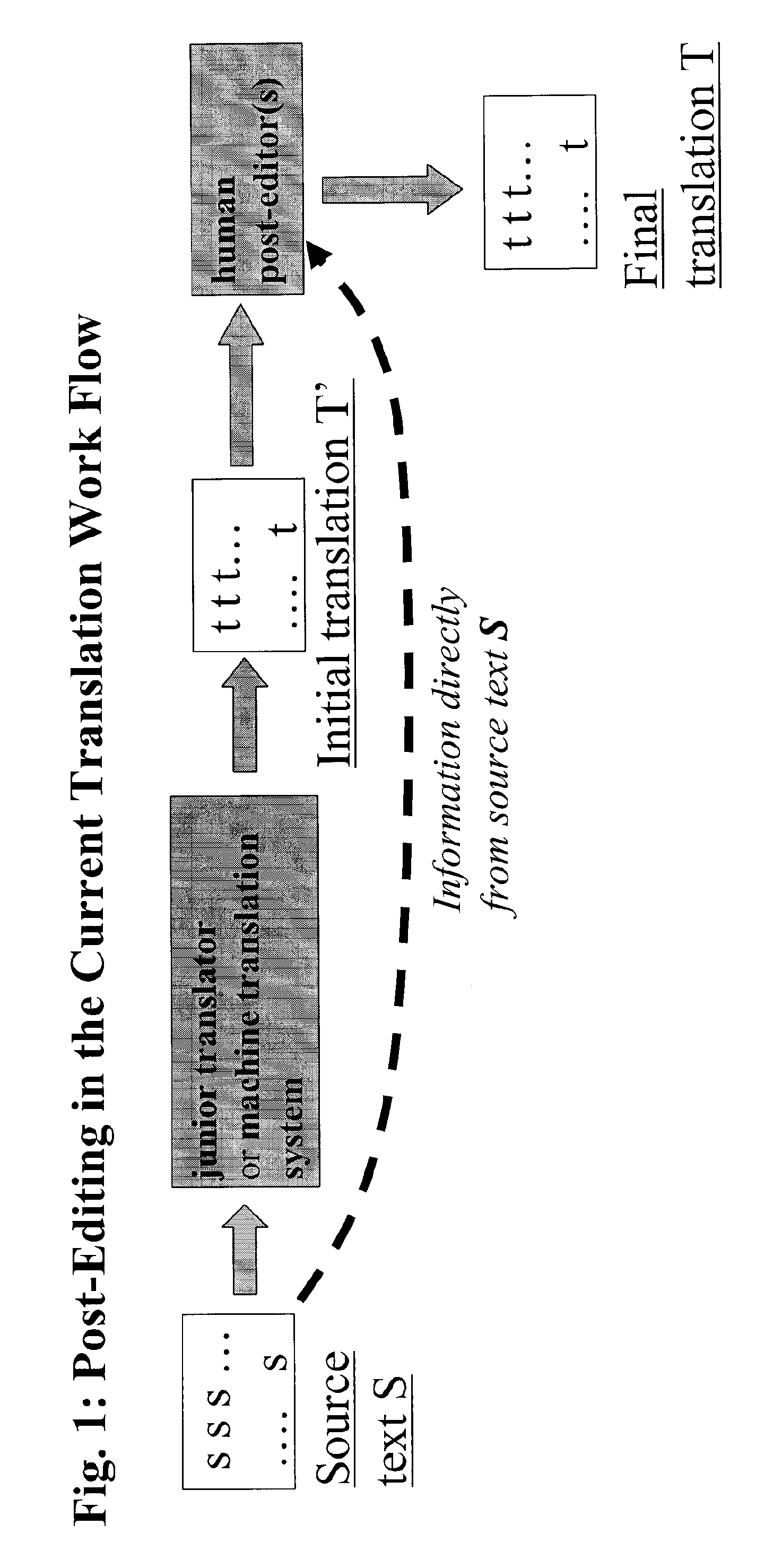

The invention relates to a method and a means for automatically post-editing a translated text. A source language text is translated into an initial target language text. This initial target language text is then post-edited by an automatic post-editor into an improved target language text. The automatic post-editor is trained on a sentence-aligned parallel corpus created from sentence pairs T′ and T, where T′ is an initial training translation of a source training language text, and T is a second, independently derived, training translation of the source training language text.

Owner:NAT RES COUNCIL OF CANADA

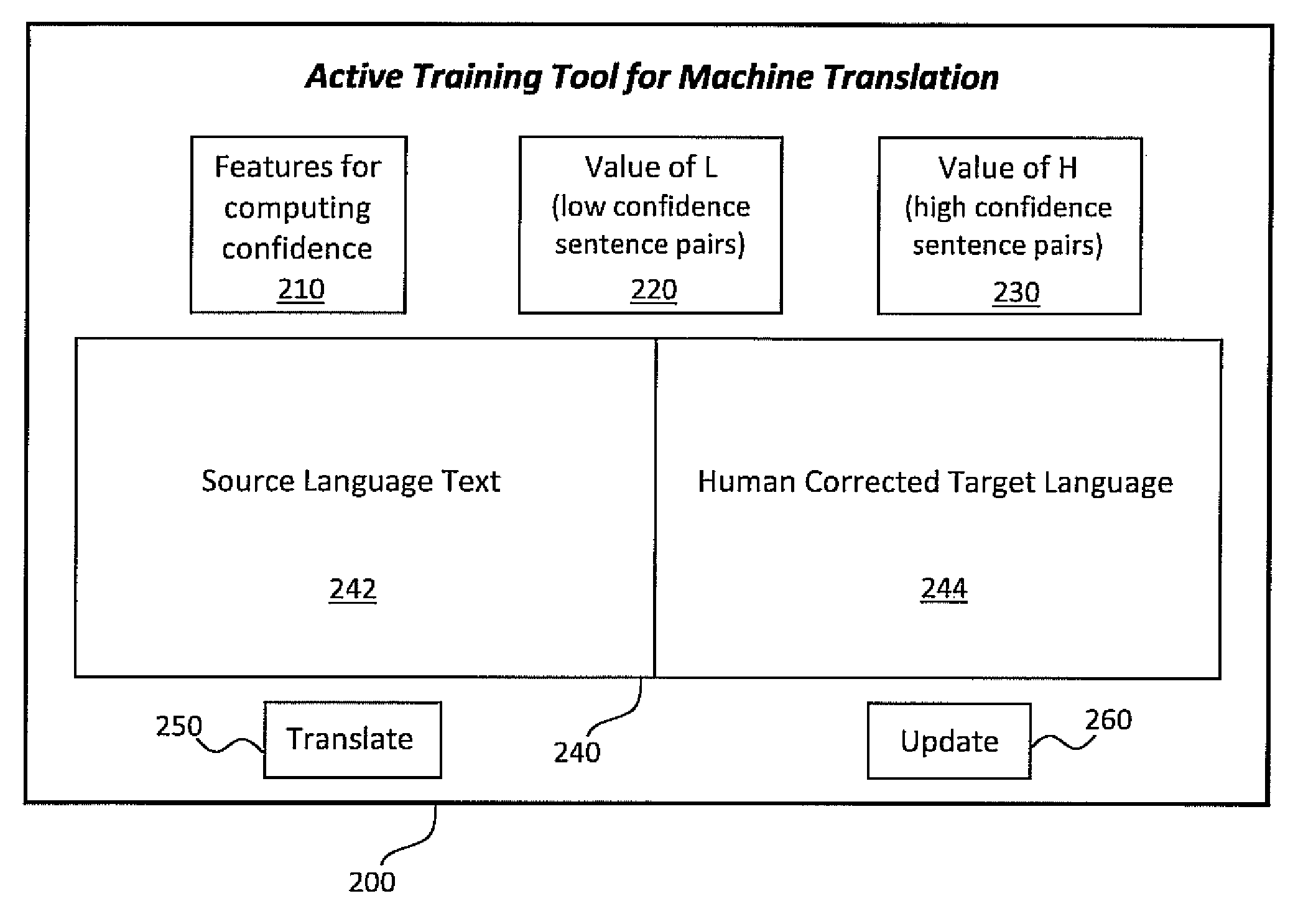

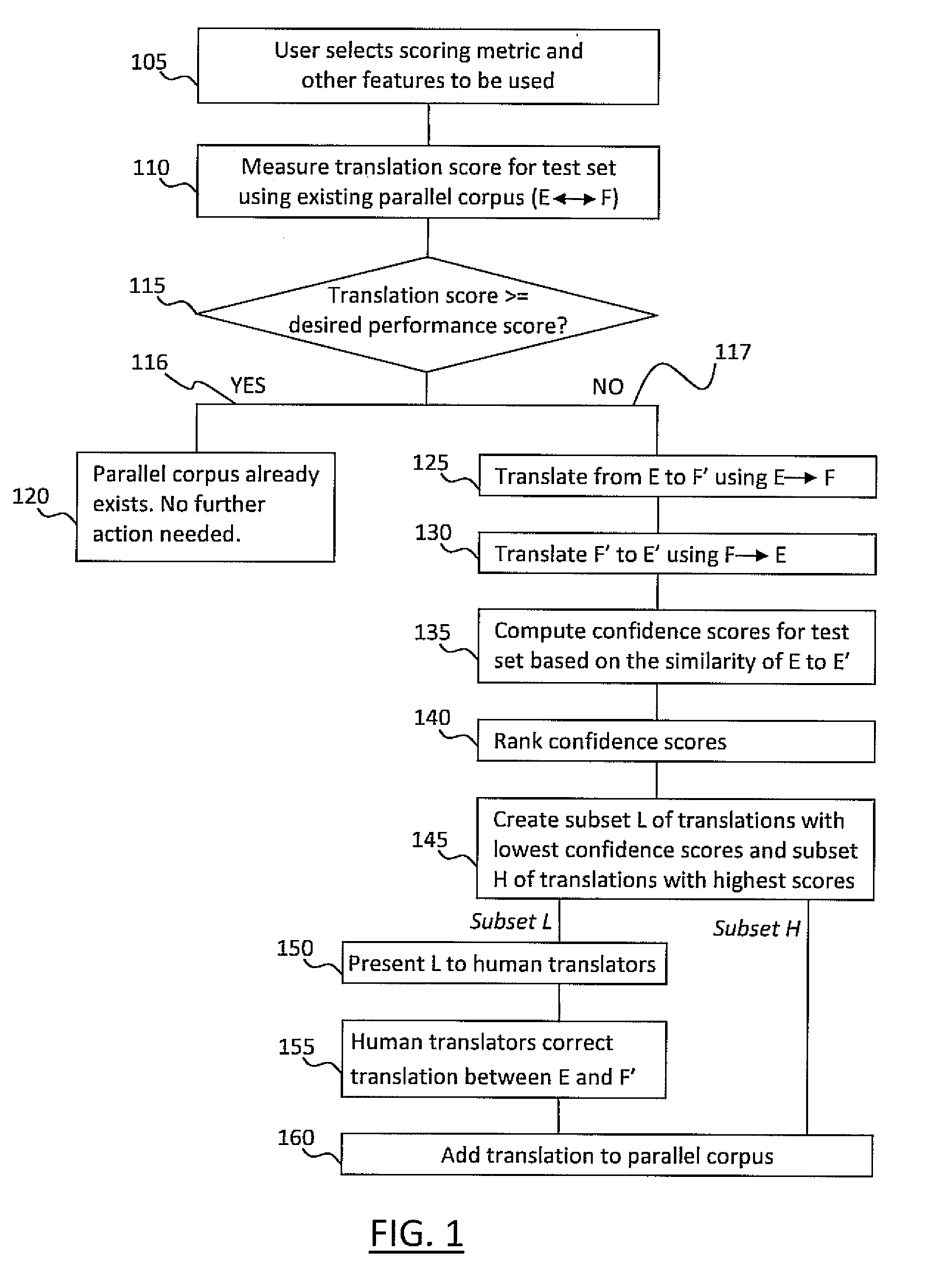

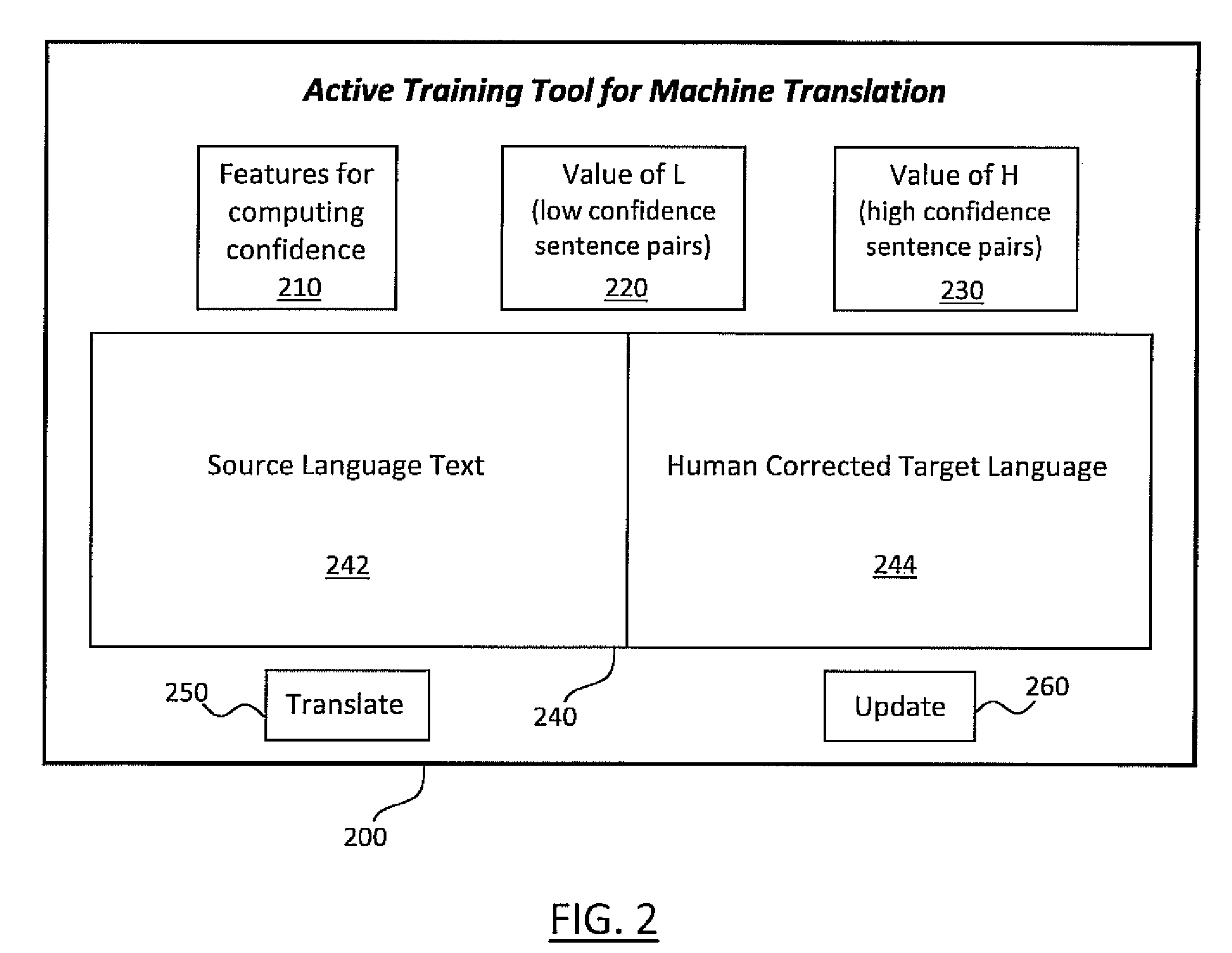

Active learning systems and methods for rapid porting of machine translation systems to new language pairs or new domains

Inactive · US20110022381A1 · Increase speed · Low cost · Natural language translation · Digital data processing details · Machine translation system · Proactive learning

Systems and methods are provided for active learning of statistical machine translation systems through dynamic creation and updating of a parallel corpus. The systems and methods create accurate parallel corpus entries from a test set of sentences, words, phrases, etc. by calculating confidence scores for particular translations. Translations with high confidence scores are added directly to the corpus, and translations with low confidence scores are presented to human translators for correction.

Owner:IBM CORP
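
The routing rule in the abstract above can be sketched in a few lines. This is a minimal illustration that assumes each translation already carries a confidence score in the range 0 to 1; the threshold value of 0.8 and the name `route_translations` are hypothetical.

```python
def route_translations(scored, threshold=0.8):
    """Split (source, target, confidence) triples into two lists.

    Returns (corpus_entries, review_queue): high-confidence pairs go straight
    into the parallel corpus, the rest are queued for a human translator.
    """
    corpus_entries, review_queue = [], []
    for source, target, confidence in scored:
        bucket = corpus_entries if confidence >= threshold else review_queue
        bucket.append((source, target))
    return corpus_entries, review_queue


# Hypothetical scored translations.
scored = [
    ("guten Morgen", "good morning", 0.95),
    ("das ist mir Wurst", "that is sausage to me", 0.30),
]
corpus_entries, review_queue = route_translations(scored)
```

Corrected translations returned by the human reviewers would then be appended to the corpus as well, which is what makes the loop an active-learning one.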

Method for building parallel corpora

Inactive · US20080262826A1 · Natural language translation · Special data processing applications · Training phase · Co-occurrence

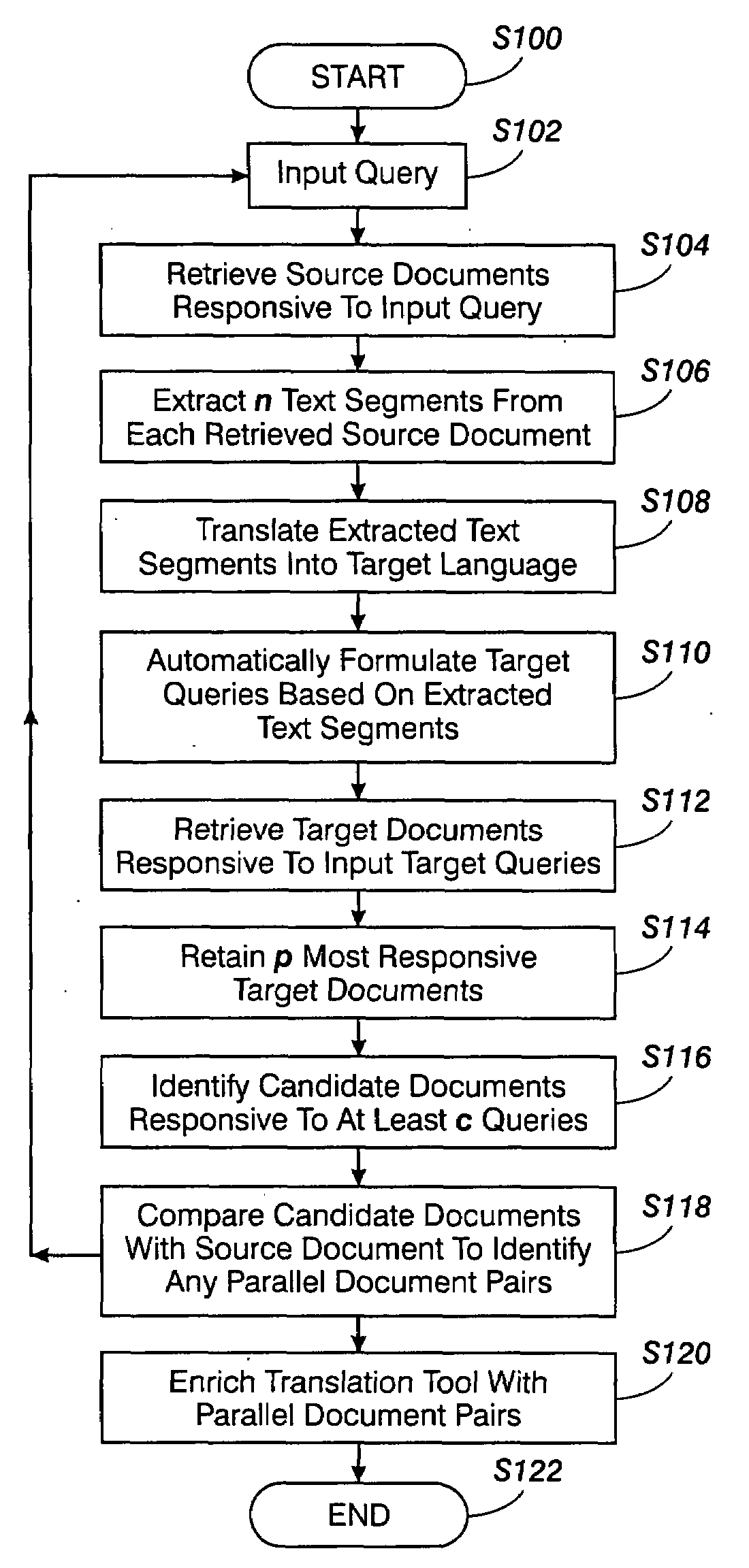

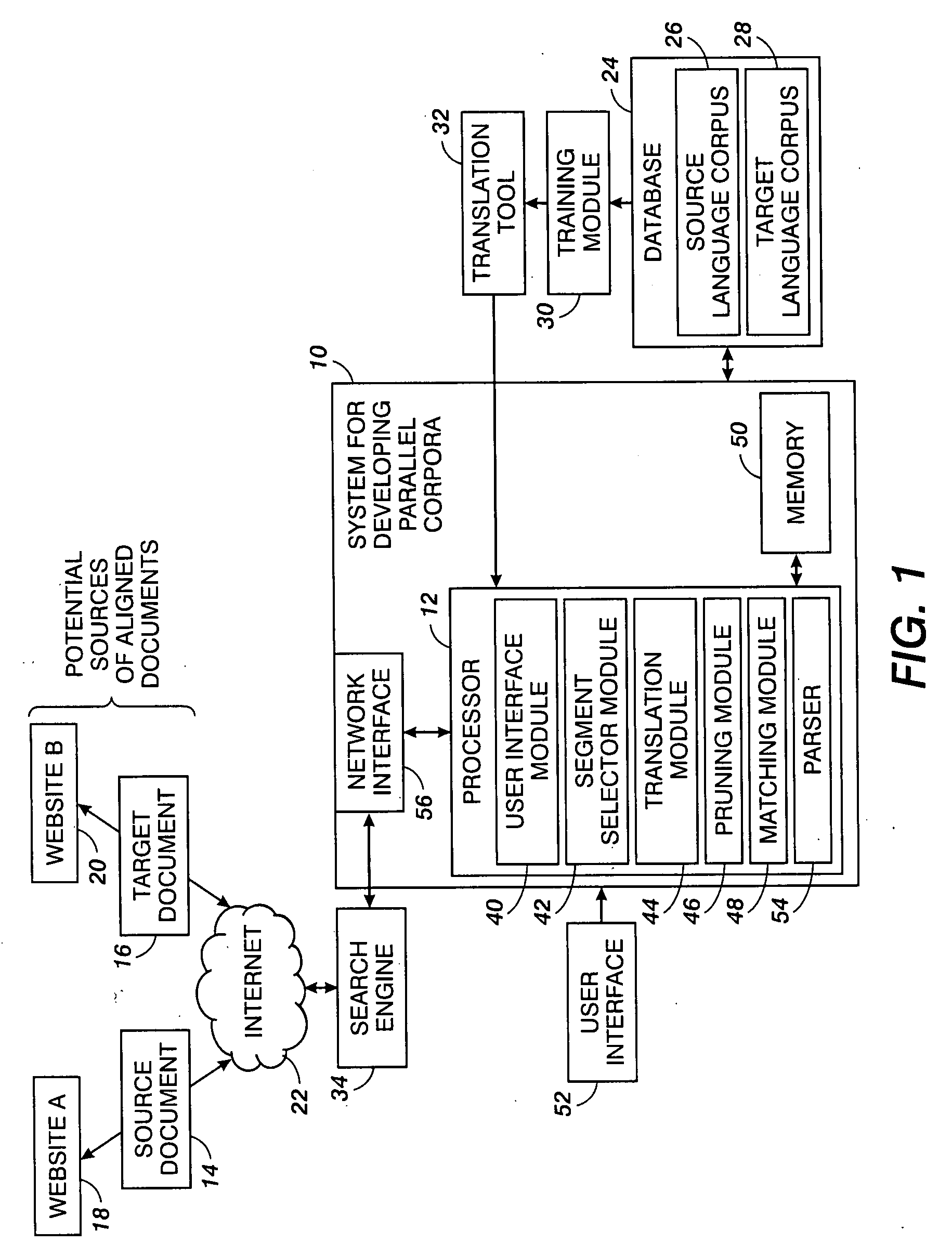

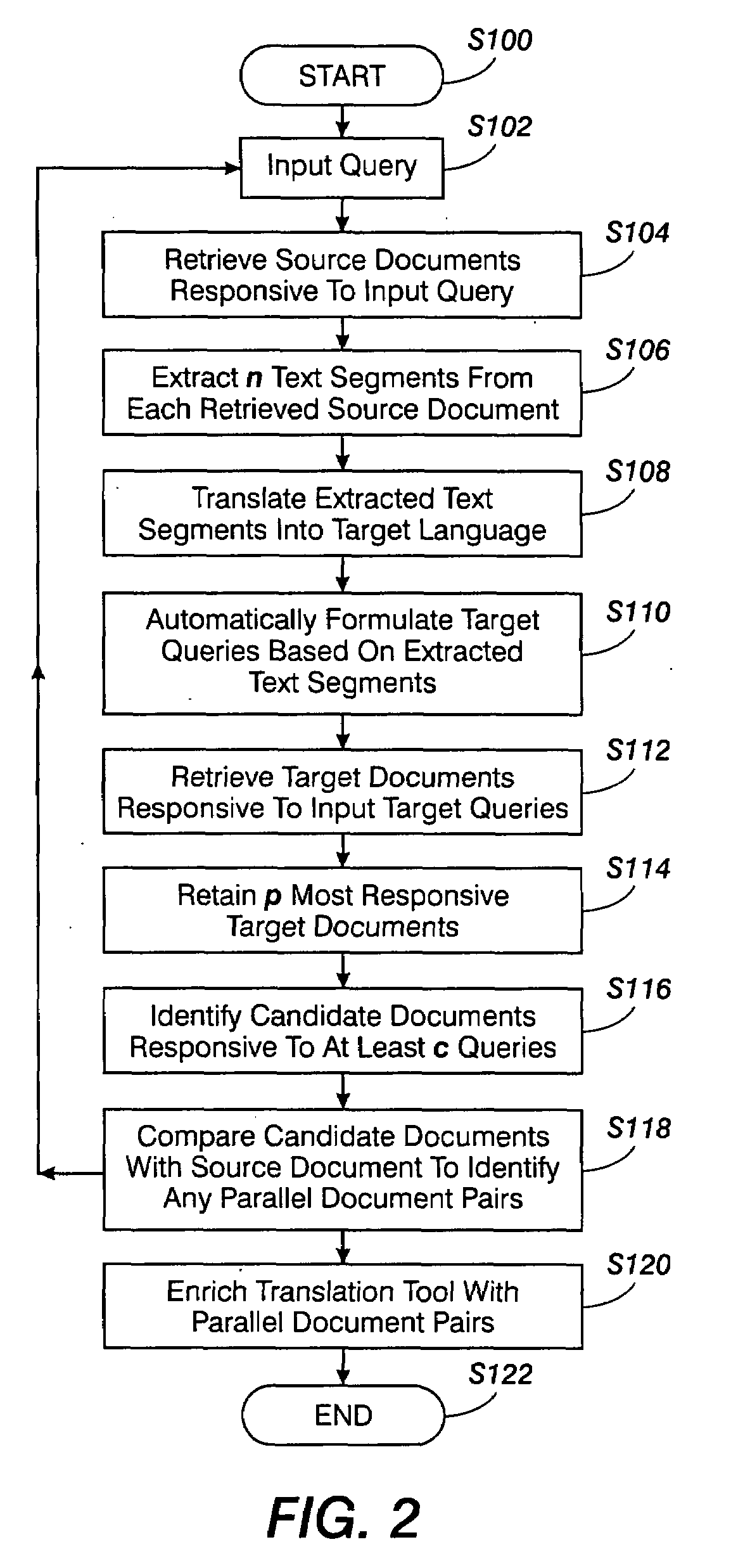

A method for identifying documents for enriching a statistical translation tool includes retrieving a source document which is responsive to a source language query that may be specific to a selected domain. A set of text segments is extracted from the retrieved source document and translated into corresponding target language segments with the statistical translation tool to be enriched. Target language queries based on the target language segments are formulated. Sets of target documents responsive to the target language queries are retrieved. The sets of retrieved target documents are filtered, including identifying any candidate documents which meet a selection criterion that is based on co-occurrence of a document in a plurality of the sets. The candidate documents, where found, are compared with the retrieved source document to determine whether any of them match the source document. Matching documents can then be stored and used in turn in a training phase for enriching the translation tool.

Owner:XEROX CORP
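
The co-occurrence filter mentioned in the abstract above can be sketched as a simple count: a target document becomes a candidate when it is returned for several of the target language queries. The minimum of two result sets and the function name are assumptions made for illustration; the patent only states that the criterion is based on co-occurrence in a plurality of the sets.

```python
from collections import Counter


def candidates_by_cooccurrence(result_sets, min_sets=2):
    """Return the document ids that occur in at least `min_sets` result sets."""
    # set(docs) ensures a document is counted once per result set.
    counts = Counter(doc for docs in result_sets for doc in set(docs))
    return sorted(doc for doc, n in counts.items() if n >= min_sets)


# Hypothetical result sets, one per target language query.
result_sets = [["d1", "d2"], ["d2", "d3"], ["d2", "d1", "d1"]]
candidates = candidates_by_cooccurrence(result_sets)
```

The surviving candidates would then be compared with the source document itself, which is the more expensive matching step the filter is meant to narrow down.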

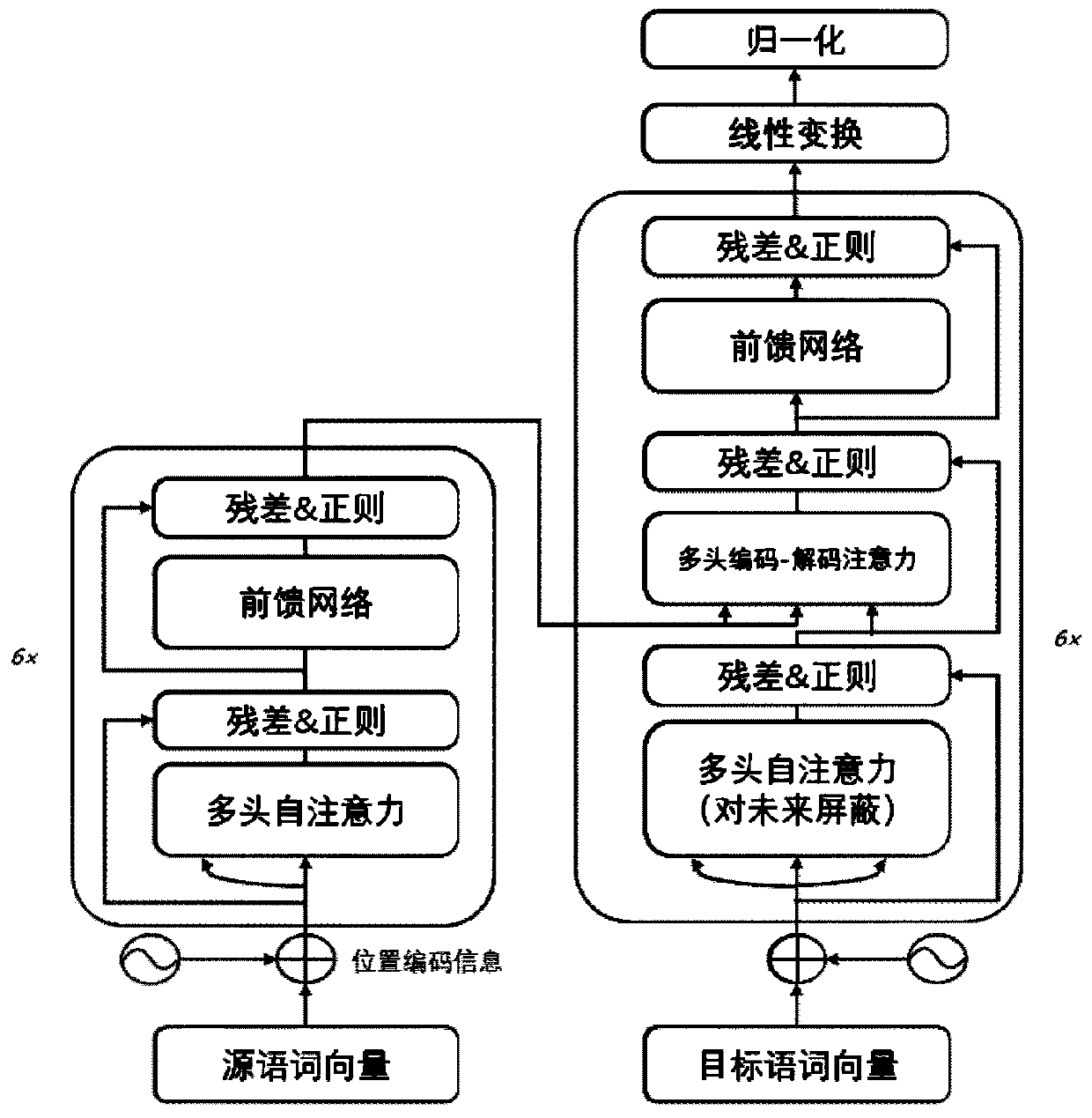

Multi-language-pair neural network machine translation method and system

Inactive · CN108563640A · Save resources · Reduce size · Natural language translation · Special data processing applications · Multi language · Algorithm

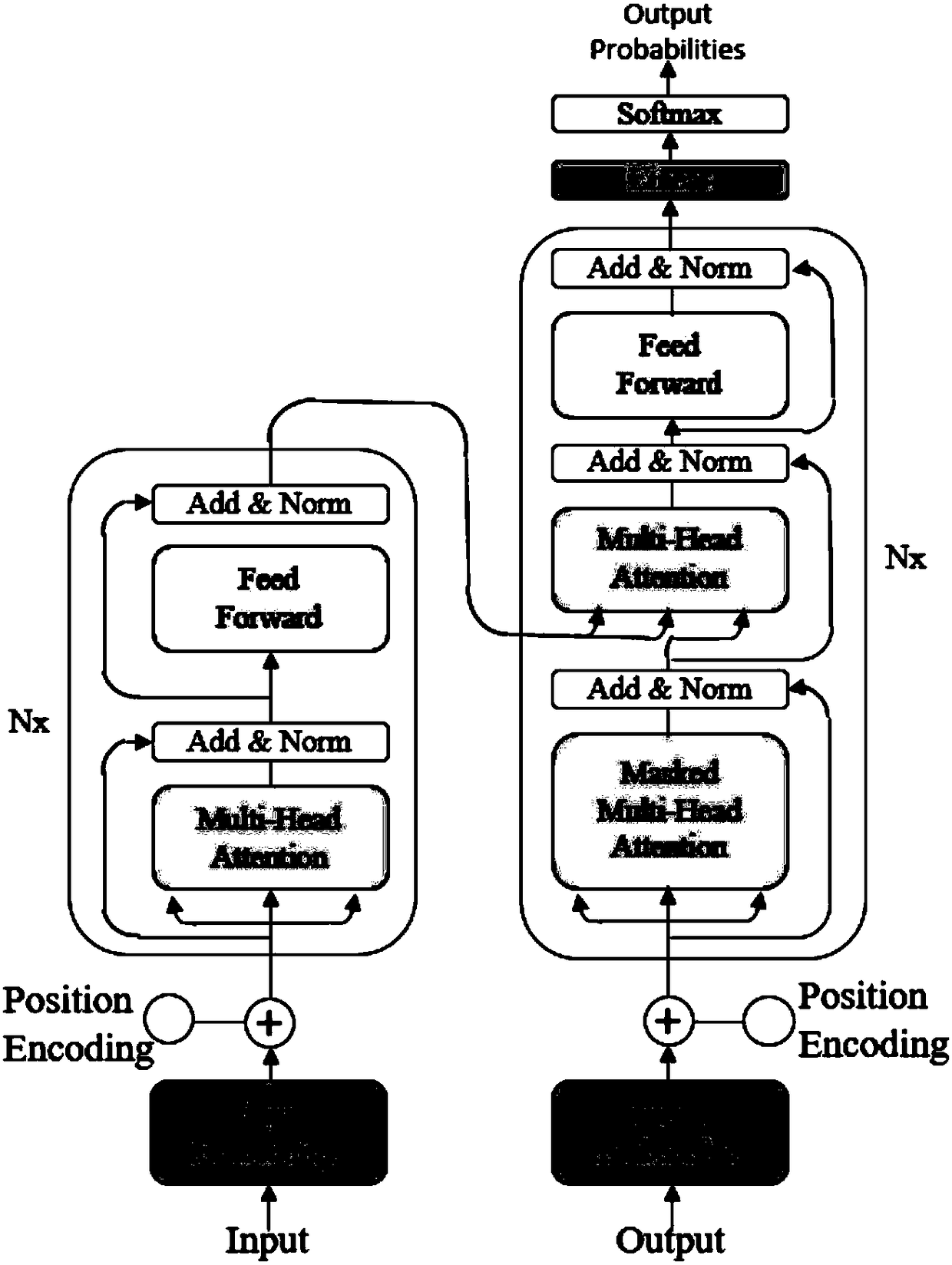

The invention belongs to the technical field of computer software and discloses a multi-language-pair neural network machine translation method and system. Multiple bilingual parallel corpora from the same language family are byte-pair encoded and mapped into a single high-dimensional vector space, so that the languages share one semantic space; this reduces the vocabulary size and the number of model parameters and accelerates model convergence. Because words of the same language family lie in the same vector space, the languages can learn more information from one another, including information that cannot be learned from any single bilingual parallel corpus, which improves the quality of the word vectors. The machine translation system can translate in language directions that have no direct bilingual parallel corpora, and this mutual learning greatly improves translation quality in directions where parallel corpora are scarce. In addition, using the same model for translation directions with low utilization reduces server occupancy and increases server utilization.

Owner:GLOBAL TONE COMM TECH

Method and apparatus for paraphrase acquisition

Inactive · US20130103390A1 · Natural language data processing · Special data processing applications · Paraphrase · Algorithm

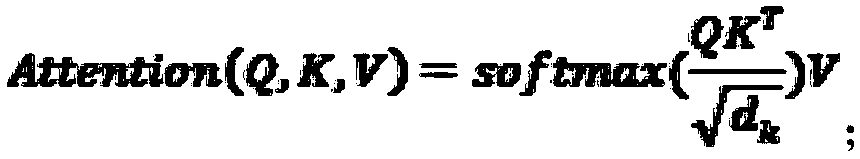

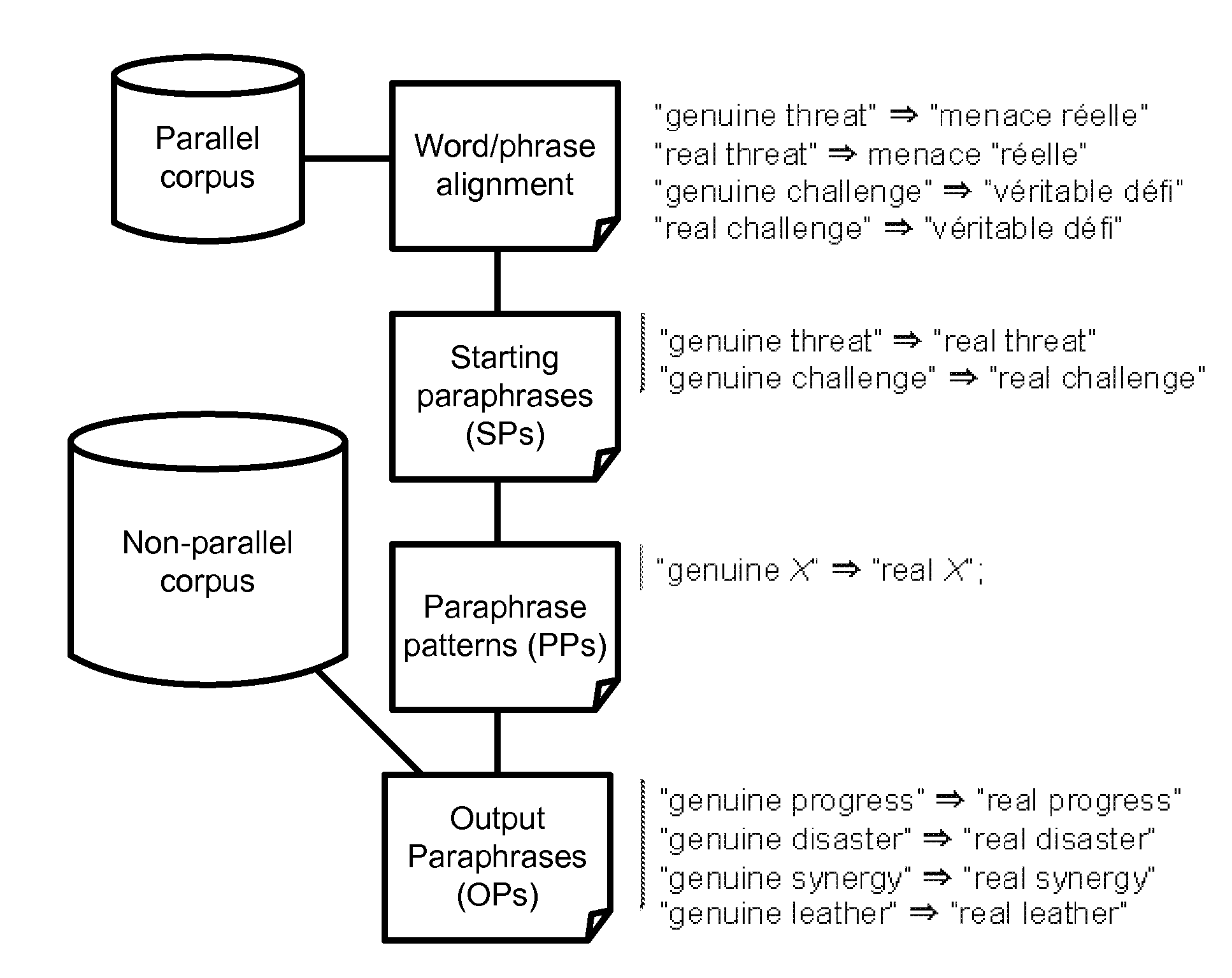

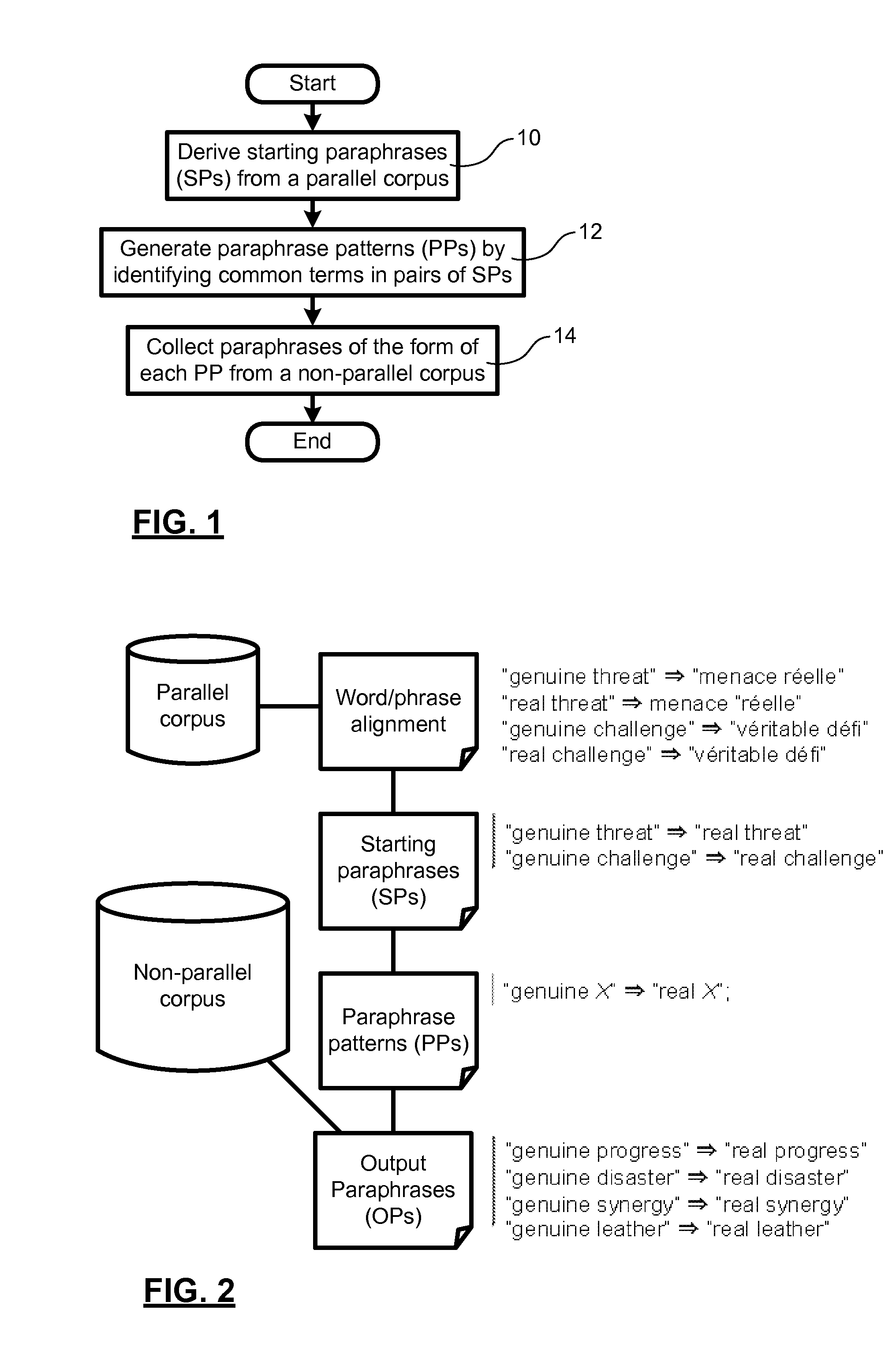

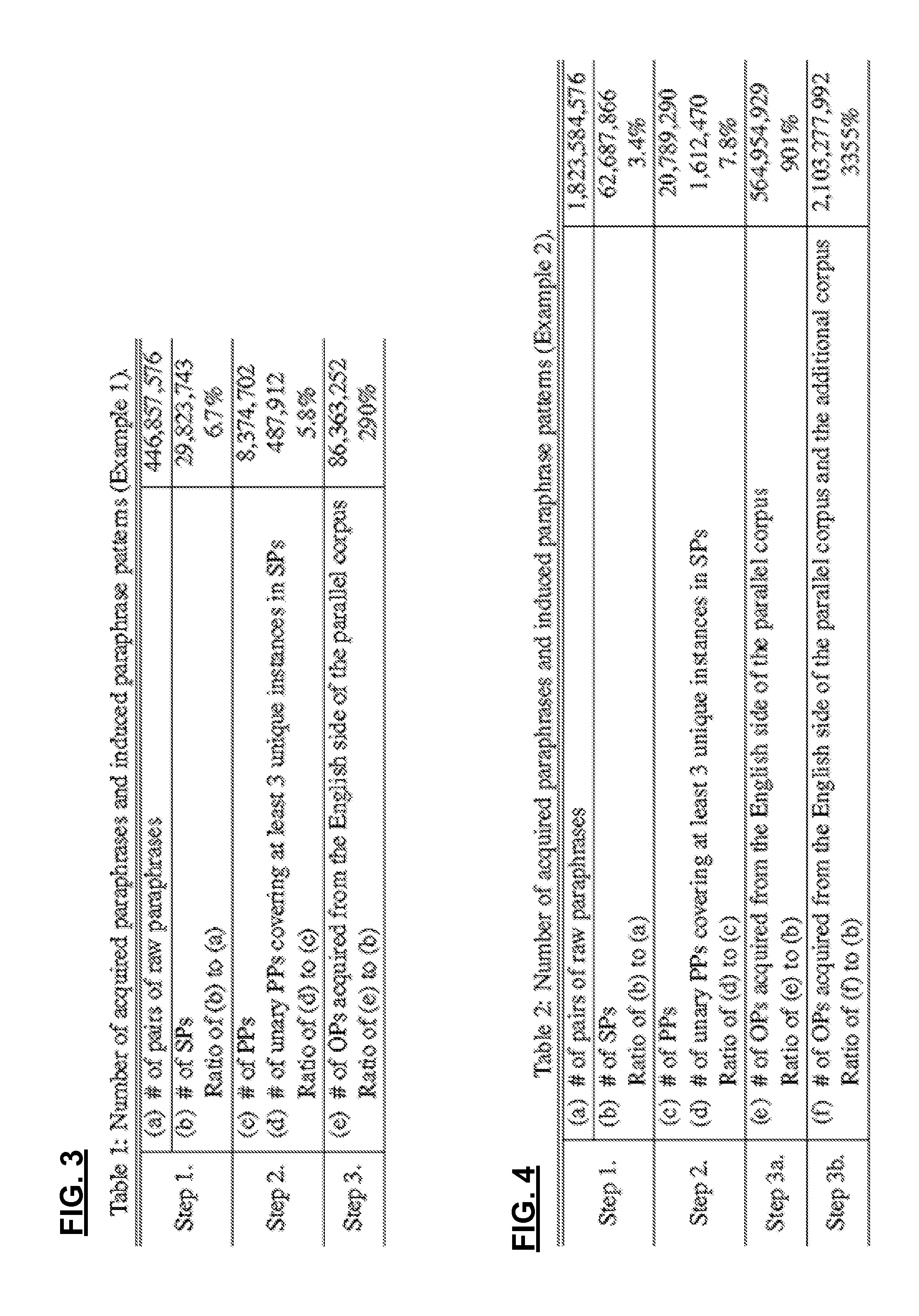

A computer-based natural language processing method for identifying paraphrases in corpora using statistical analysis comprises deriving a set of starting paraphrases (SPs) from a parallel corpus, each SP having at least two phrases that are phrase aligned; generating a set of paraphrase patterns (PPs) by identifying shared terms within two aligned phrases of an SP, and defining a PP having slots in place of the shared terms, in right hand side (RHS) and left hand side (LHS) expressions; and collecting output paraphrases (OPs) by identifying instances of the PPs in a non-parallel corpus. By using the reliably derived paraphrase information from a small parallel corpus to generate the PPs, and extending the range of instances of the PPs over the large non-parallel corpus, the method achieves better coverage of the paraphrases in the language and encounters fewer errors.

Owner:NAT RES COUNCIL OF CANADA
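
To make the pattern-generation step above more concrete, the sketch below turns one starting paraphrase into a pattern by replacing shared terms with slots, then applies the pattern to a new sentence. It is a simplified illustration: whitespace tokenization, the slot names `X1`, `X2`, and single-word slot fillers are all assumptions, and every shared token (including function words) would become a slot.

```python
import re

SLOT = re.compile(r"X\d+")


def make_pattern(lhs, rhs):
    """Replace tokens shared by both phrases with numbered slots."""
    lhs_tokens, rhs_tokens = lhs.split(), rhs.split()
    slots = {}
    for tok in lhs_tokens:
        if tok in rhs_tokens and tok not in slots:
            slots[tok] = f"X{len(slots) + 1}"
    return (" ".join(slots.get(t, t) for t in lhs_tokens),
            " ".join(slots.get(t, t) for t in rhs_tokens))


def apply_pattern(lhs_pattern, rhs_pattern, sentence):
    """If the LHS pattern occurs in the sentence, return the RHS paraphrase."""
    parts = [r"(\w+)" if SLOT.fullmatch(t) else re.escape(t)
             for t in lhs_pattern.split()]
    match = re.search(r"\s+".join(parts), sentence)
    if match is None:
        return None
    # Assumption: each slot occurs once in the LHS, so groups align with slots.
    slot_names = [t for t in lhs_pattern.split() if SLOT.fullmatch(t)]
    binding = dict(zip(slot_names, match.groups()))
    return " ".join(binding.get(t, t) for t in rhs_pattern.split())


lhs_pp, rhs_pp = make_pattern("Mozart was born in Salzburg",
                              "Mozart 's birthplace is Salzburg")
paraphrase = apply_pattern(lhs_pp, rhs_pp, "Einstein was born in Ulm .")
```

The first call plays the role of deriving a PP from a small parallel corpus; the second shows how the same PP can yield an output paraphrase from text that has no aligned counterpart.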

Neural machine translation method oriented to small language

Active · CN110334361A · Make up for the lack of corpus · Improve translation performance · Natural language translation · Neural architectures · Discriminator · Algorithm

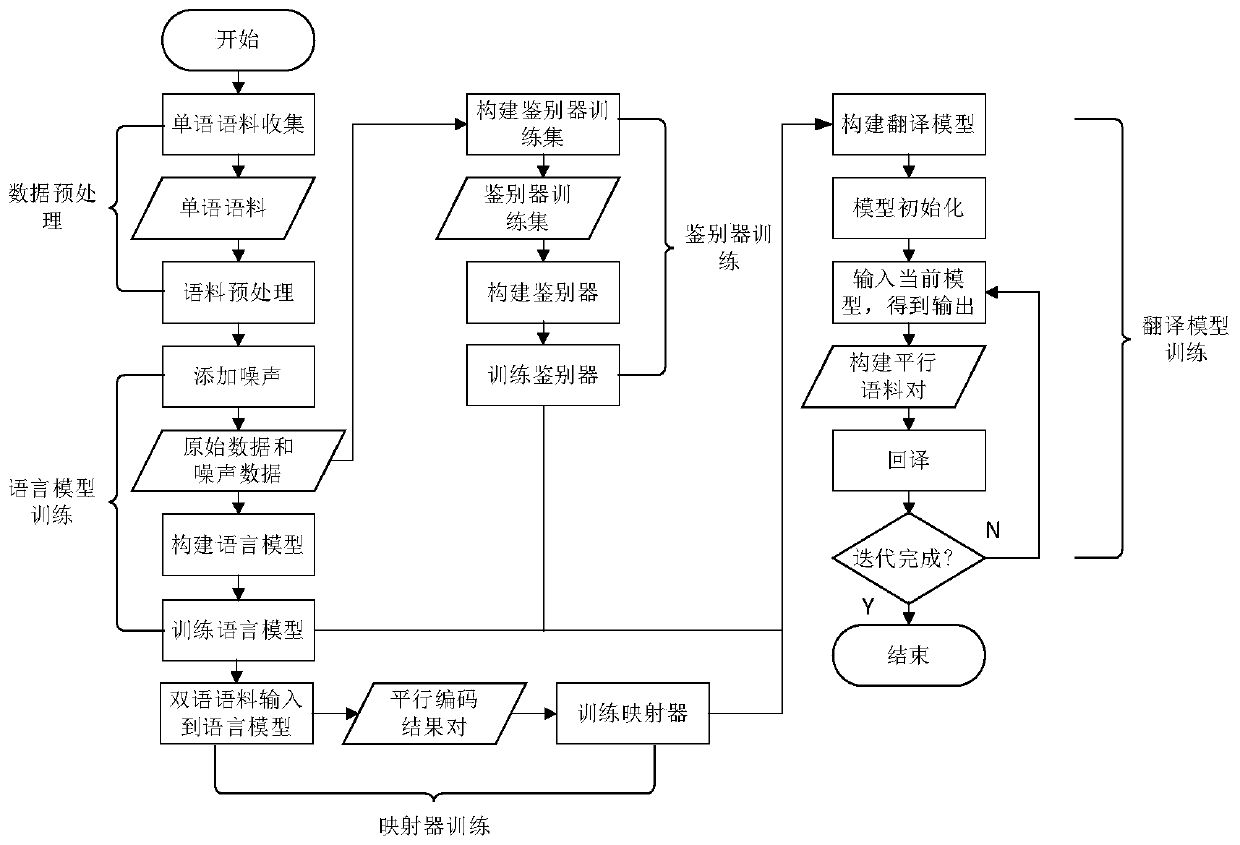

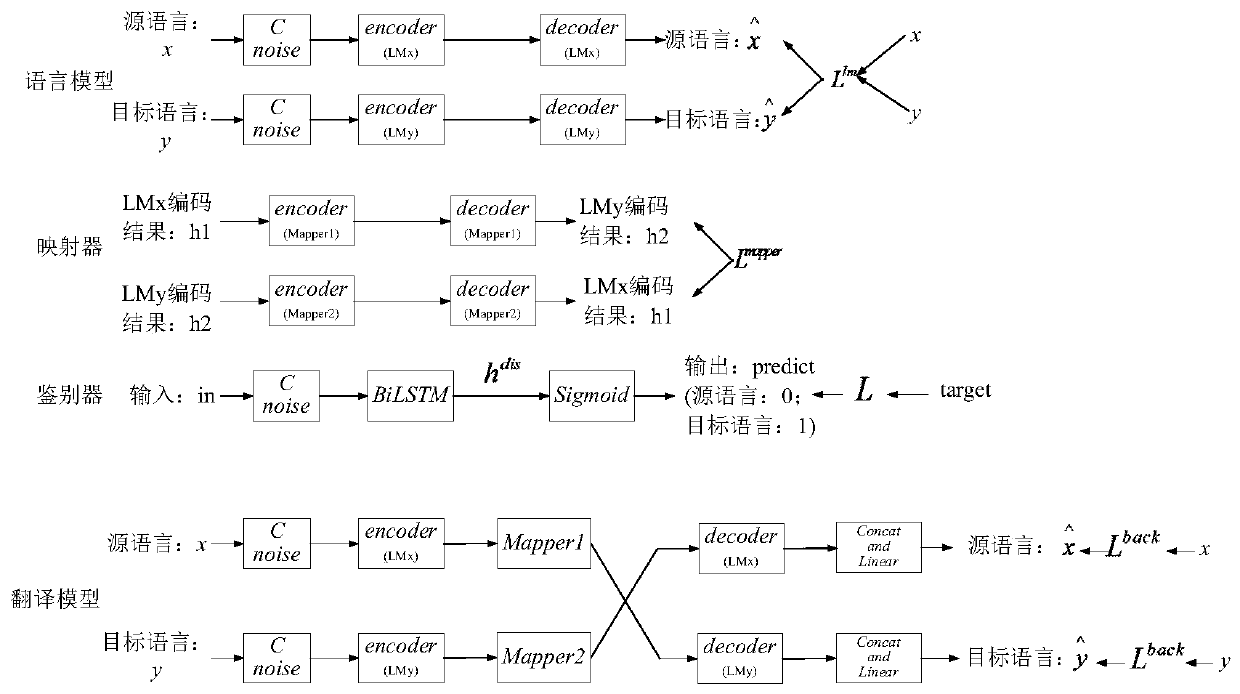

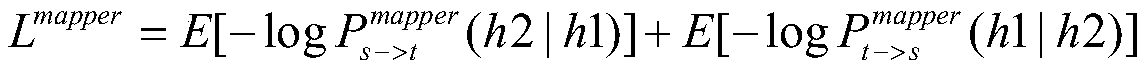

The invention relates to the technical field of neural machine translation, and discloses a neural machine translation method oriented to small (low-resource) languages. The method addresses neural machine translation when parallel corpora are lacking. A neural machine translation model is constructed and trained through the following steps: 1, obtaining and preprocessing monolingual corpora; 2, training language models of the source language and the target language, respectively, by using the monolingual corpora; 3, training mappers, each of which maps the encoding result of one language into the space of the other language, by using the encodings that the source and target language models produce for the bilingual parallel corpus of the small language; 4, training a discriminator model by using the monolingual corpora; and 5, training a translation model by using the language models, the mappers, the discriminator model, the bilingual parallel corpus and the monolingual corpora. The method is suitable for translation between small languages that have only small-scale parallel corpora.

Owner:UNIV OF ELECTRONICS SCI & TECH OF CHINA

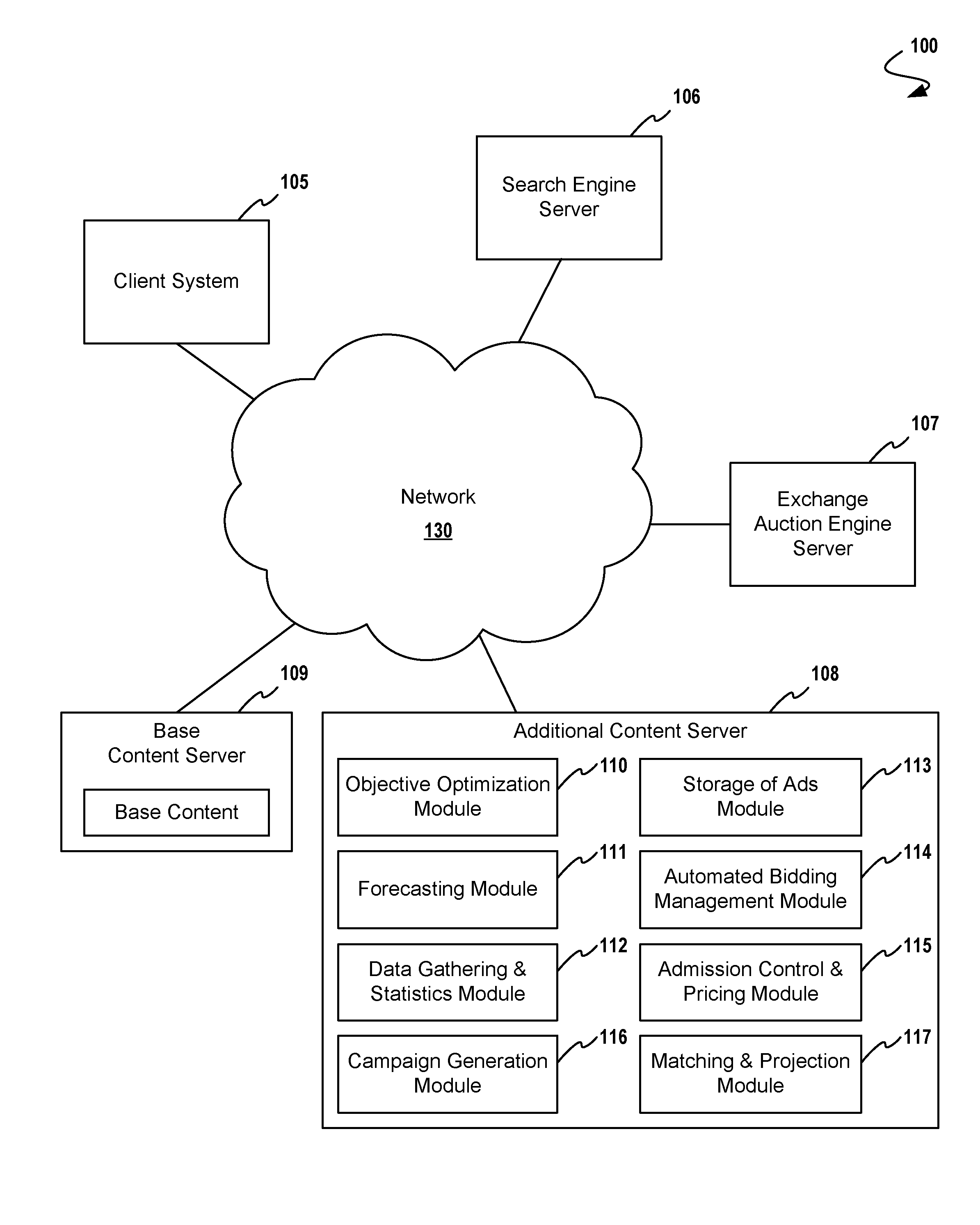

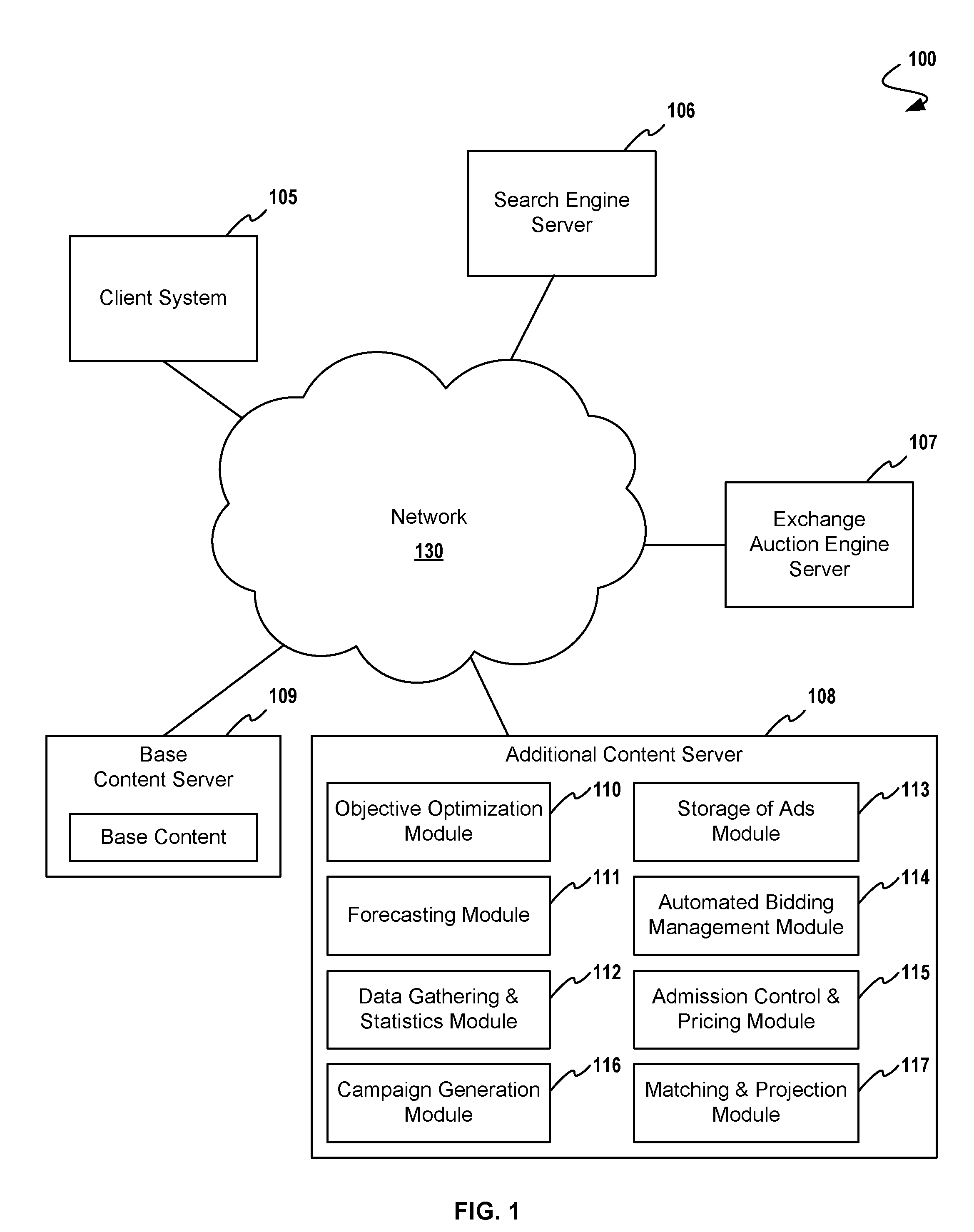

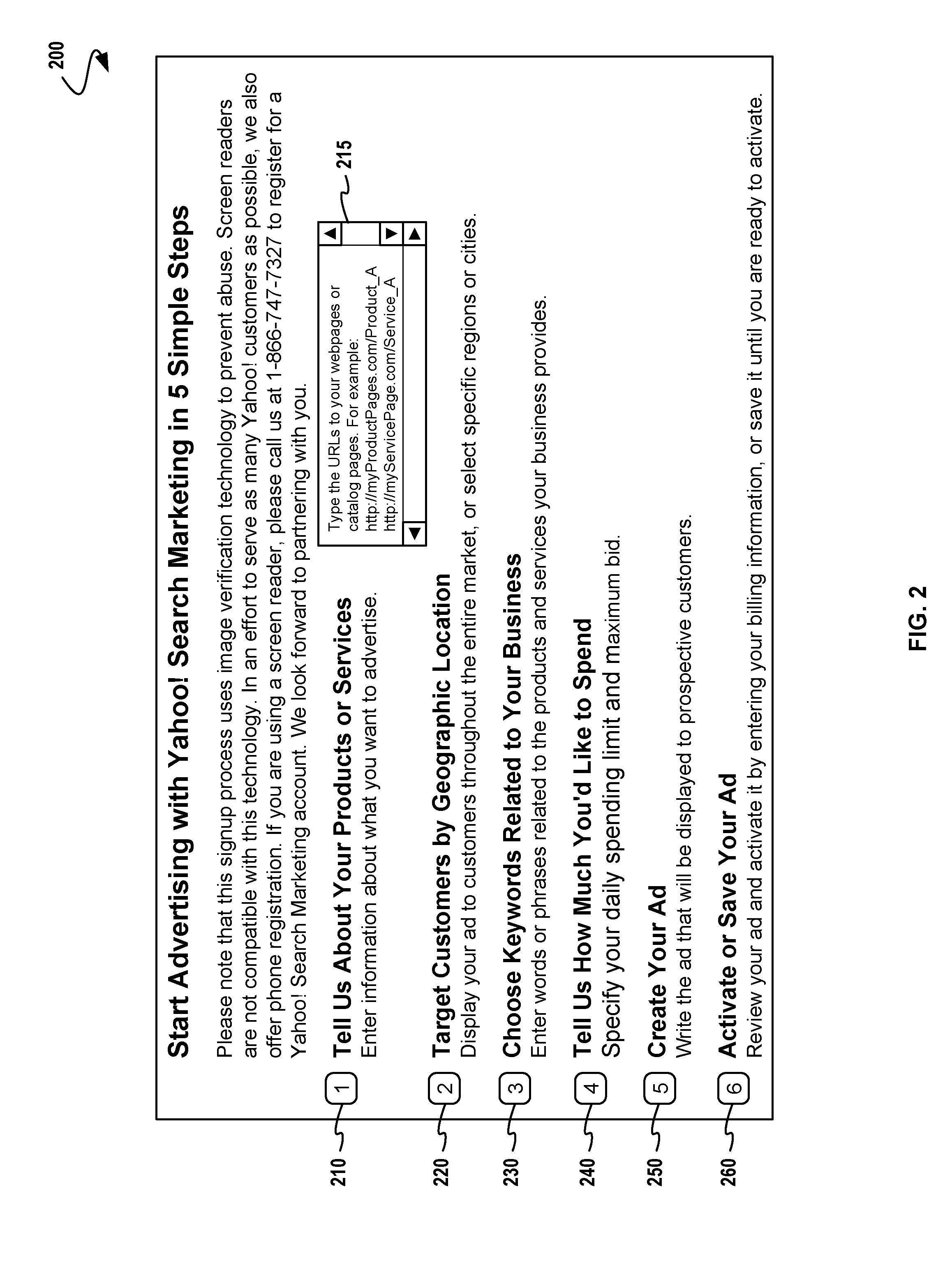

Automatic Generation of Bid Phrases for Online Advertising

Inactive · US20110258054A1 · Natural language translation · Advertisements · Online advertising · Translation table

Automatic generation of bid phrases for online advertising comprising storing a computer code representation of a landing page for use with a language model and a translation model (with a parallel corpus) to produce a set of candidate bid phrases that probabilistically correspond to the landing page, and / or to web search phrases. Operations include extracting a set of raw candidate bid phrases from a landing page, generating a set of translated candidate bid phrases using a parallel corpus in conjunction with the raw candidate bid phrases. In order to score and / or reduce the number of candidate bid phrases, a translation table is used to capture the probability that a bid phrase from the raw bid phrases is generated from a bid phrase from the set of translated candidate bid phrases. Scoring and ranking operations reduce the translated candidate bid phrases to just those most relevant to the landing page inputs.

Owner:YAHOO INC

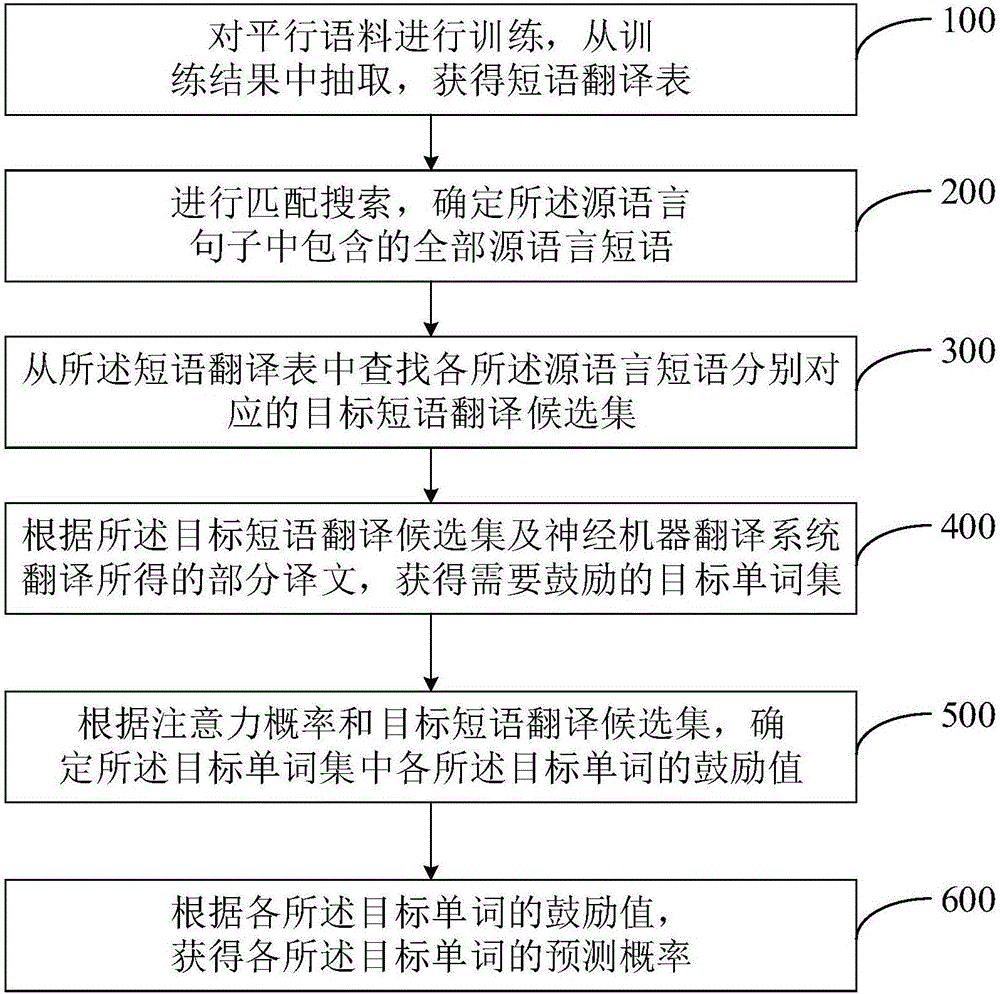

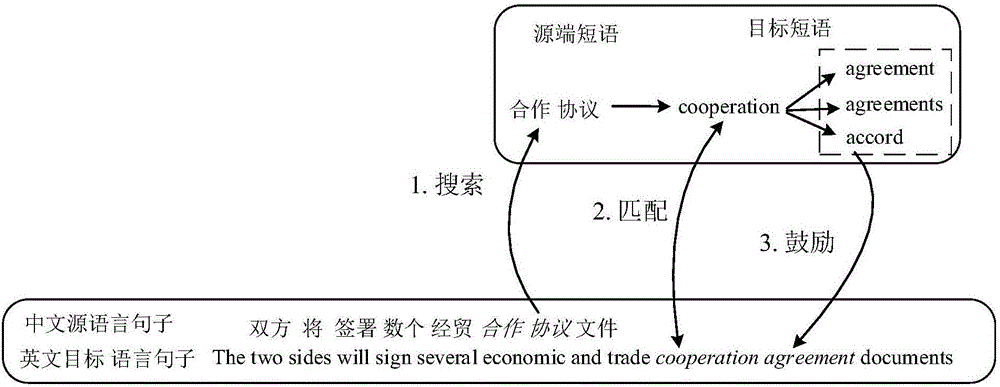

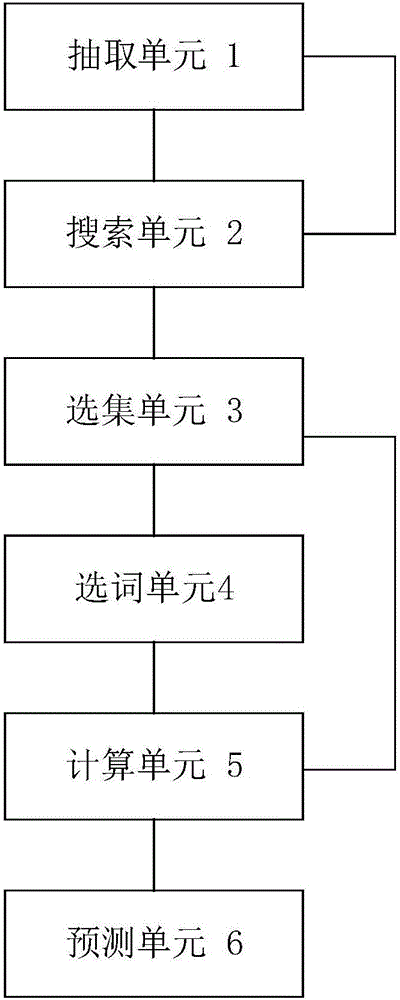

Word forecasting method and system based on neural machine translation system

Active · CN106844352A · Accurately Obtain Predicted Probabilities · Natural language translation · Neural architectures · Sentence pair · Prediction probability

The invention relates to a word forecasting method and system based on a neural machine translation system. The word forecasting method includes the following steps: training on parallel corpora and extracting a phrase translation table from the training result; performing a matching search on the source language sentence of any parallel sentence pair to determine all source language phrases contained in it; finding, in the phrase translation table, the target phrase translation candidate set corresponding to each source language phrase; obtaining the set of target words to be encouraged, based on the target phrase translation candidate sets and the partial translation already produced by the neural machine translation system; determining an encouragement value for each target word in that set according to the attention probability of the neural machine translation system and the target phrase translation candidate sets; and obtaining the prediction probability of each target word according to its encouragement value. Because the encouragement values are obtained by introducing the phrase translation table and are added into the neural translation model, the prediction probability of the target words can be increased.

Owner:INST OF AUTOMATION CHINESE ACAD OF SCI

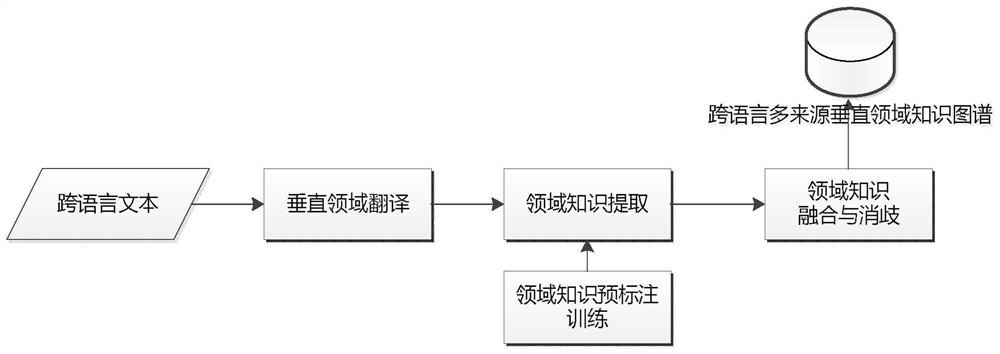

Cross-language multi-source vertical domain knowledge graph construction method

Active · CN112199511A · Implement automatic translation · Implement pre-labeled training · Natural language translation · Special data processing applications · Data set · Theoretical computer science

The invention discloses a cross-language multi-source vertical domain knowledge graph construction method, and relates to the technical field of knowledge engineering. According to the technical scheme, the method comprises the following steps: in vertical domain translation, a parallel corpus is constructed through content and link analysis of the input cross-language texts, domain dictionaries, domain term libraries, domain materials and data, and, after preprocessing, foreign language texts are translated automatically with a trained translation model; in domain knowledge pre-annotation training, active learning annotation is realized based on text word segmentation and text clustering, the corpus to be annotated is screened by analysis topic, and a confirmed service annotation data set is generated; an optimal algorithm is selected, and semantic feature extraction and entity relationship extraction are completed based on deep learning in combination with the vertical domain translation data and the actual scenario; and domain knowledge fusion and disambiguation are performed on knowledge from different sources by merging equivalent entities across networks, so that the cross-language multi-source vertical domain knowledge graph is obtained.

Owner:10TH RES INST OF CETC

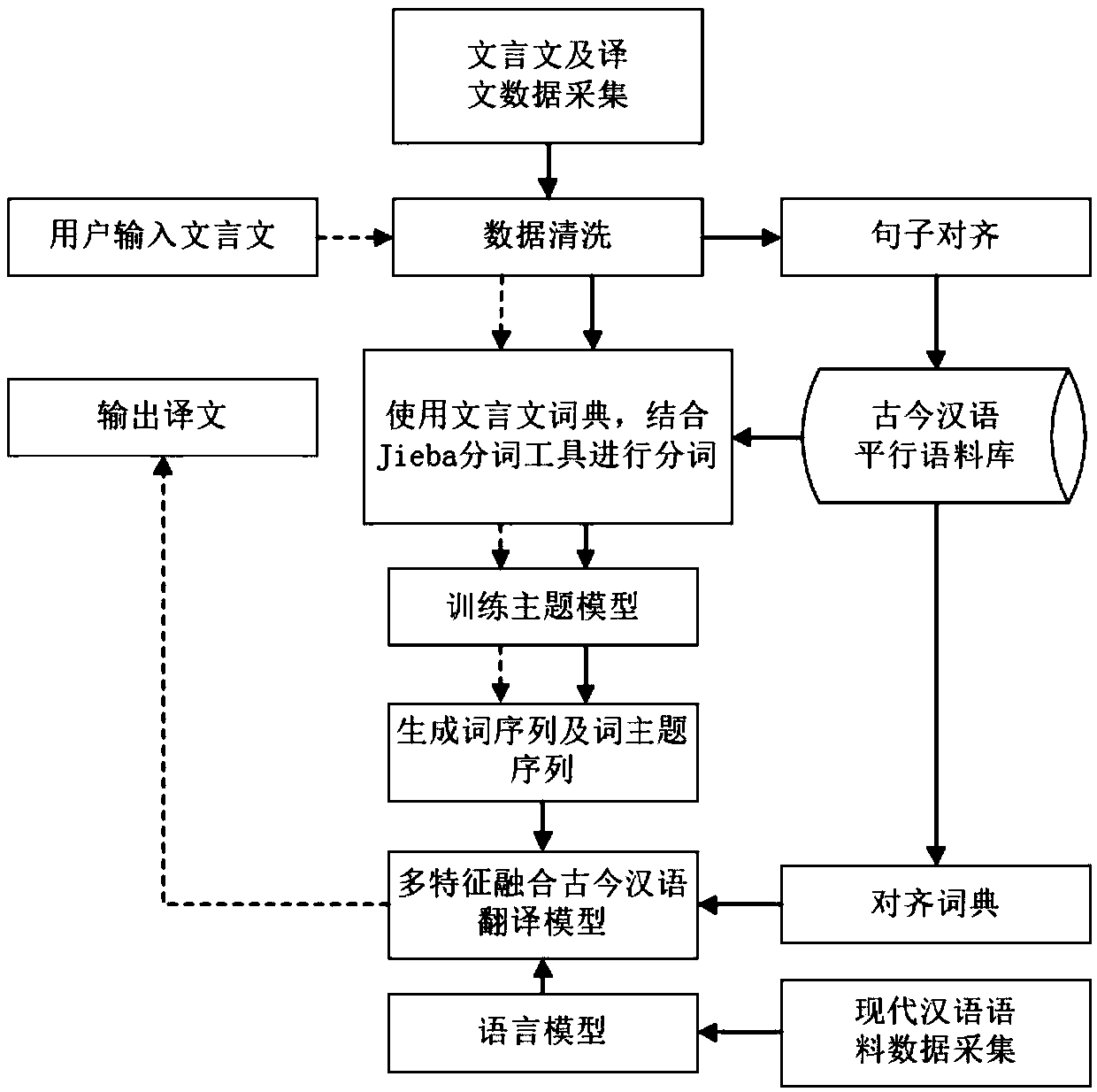

An ancient Chinese automatic translation method based on multi-feature fusion

Active · CN109684648A · Increased accuracy · Solve the problem of unregistered words · Natural language translation · Special data processing applications · Word list · Sentence pair

The invention discloses an ancient Chinese automatic translation method based on multi-feature fusion. The method comprises the following steps: 1) collecting a text, modern-text translation data of the text, a text word list and modern Chinese monolingual corpus data; 2) cleaning the data and constructing an ancient Chinese parallel corpus by using a sentence alignment method; 3) carrying out word segmentation on the modern text and the ancient text by using a Chinese word segmentation tool; 4) performing topic modeling on the ancient text corpus to generate a topic-word distribution and a word-topic conditional probability distribution; 5) training a modern Chinese language model on the modern Chinese monolingual corpus, and obtaining an alignment dictionary from the ancient Chinese parallel corpora; 6) on the basis of an attention-based recurrent neural network translation model, fusing statistical machine translation features such as the language model and the alignment dictionary, and training the model with ancient Chinese parallel sentence pairs and word topic sequences; and 7) receiving a to-be-translated text input by a user, and obtaining a modern-text translation by using the model trained in step 6).

Owner:ZHEJIANG UNIV

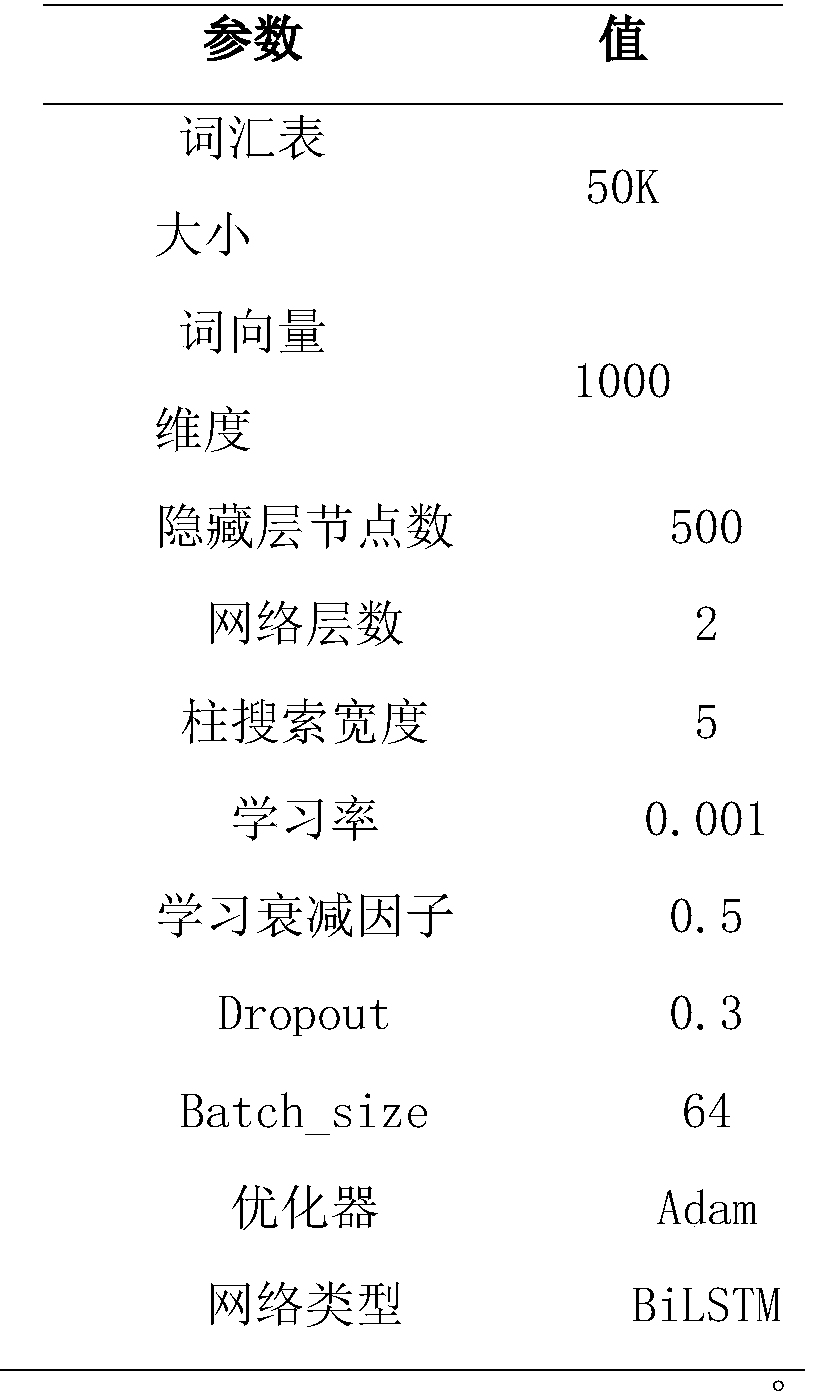

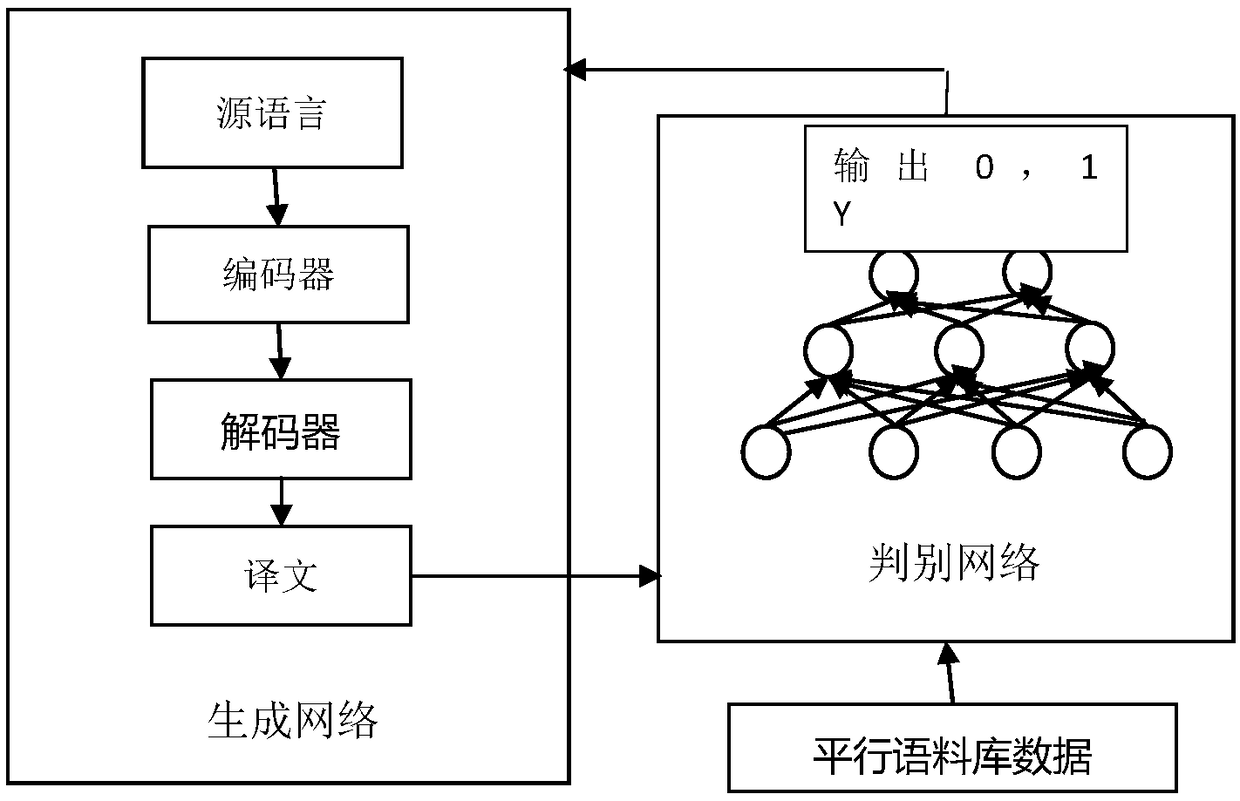

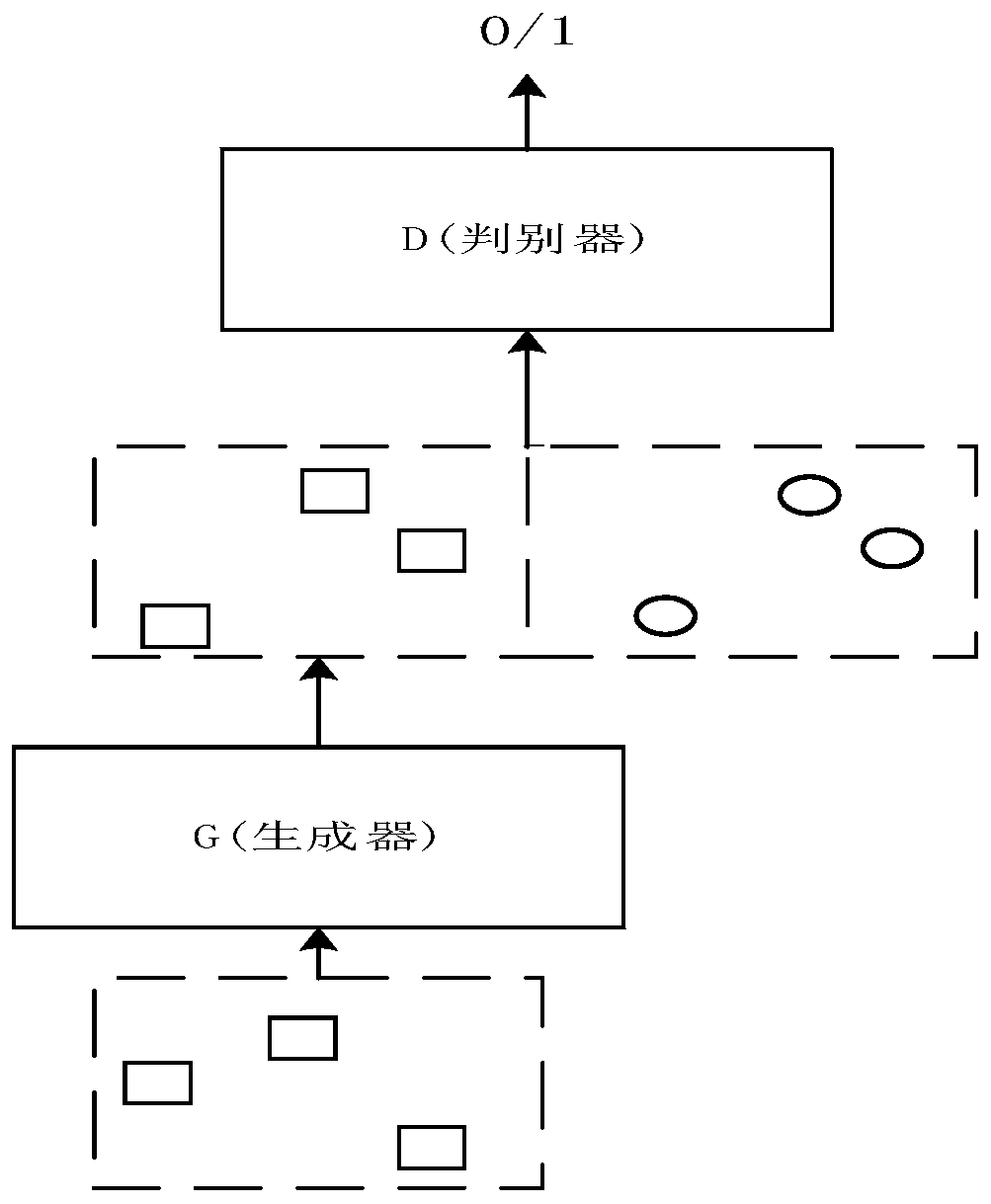

A Mongolian-Chinese machine translation method based on adversarial neural network

Inactive · CN108897740A · Natural language translation · Special data processing applications · Algorithm · Parallel corpora

A Mongolian-Chinese translation method based on an adversarial neural network includes: introducing, on the basis of the original machine translation generation network G, a discriminating network D that competes with G, wherein D mainly performs binary classification of the output of G; and judging a translation in the target language, returning the value 1 if the translation comes from the parallel corpus used for training, and returning the value 0 if the translation is a machine translation result of the generation network G. When the probability distribution of the real data is hard or impossible to calculate (for example, when source language parallel corpus data is scarce), the training mechanism in which the generator and the discriminator compete with each other enables the generator to approximate that distribution.

Owner:INNER MONGOLIA UNIV OF TECH

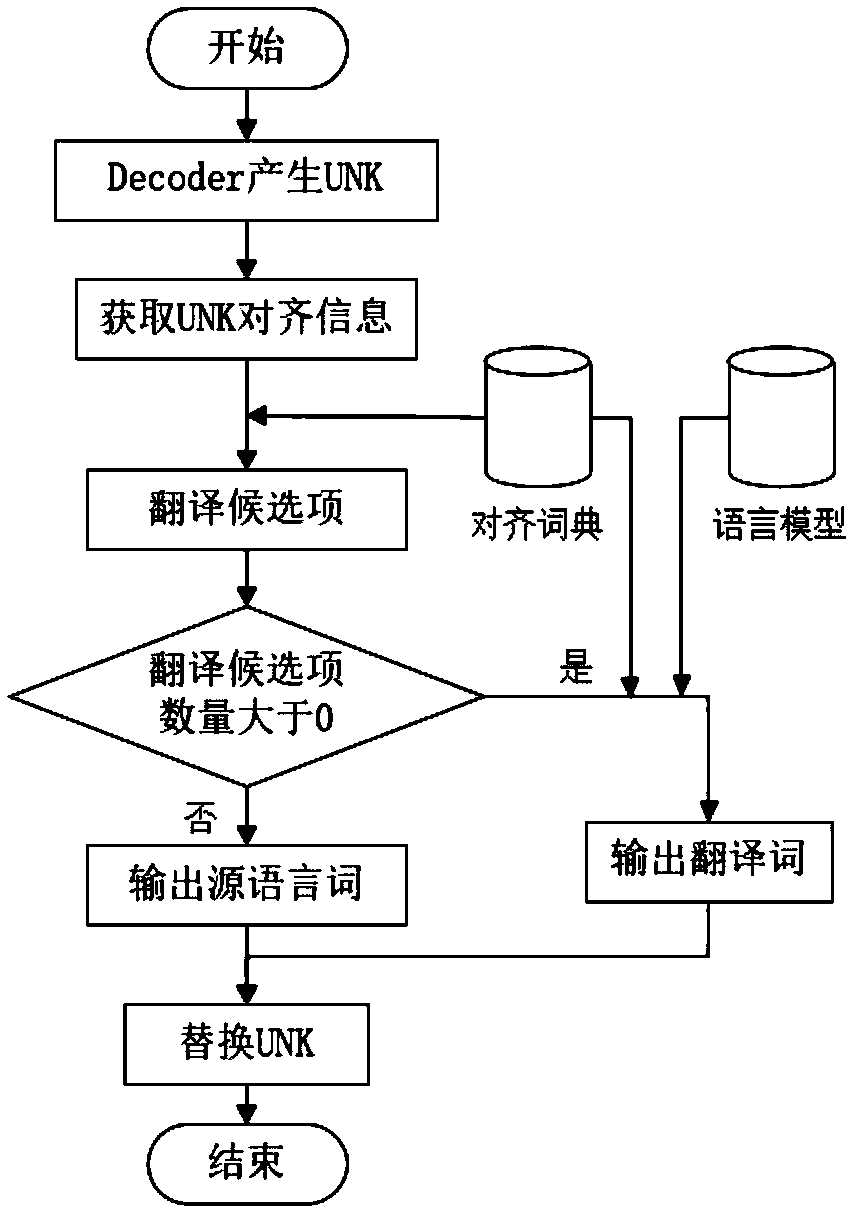

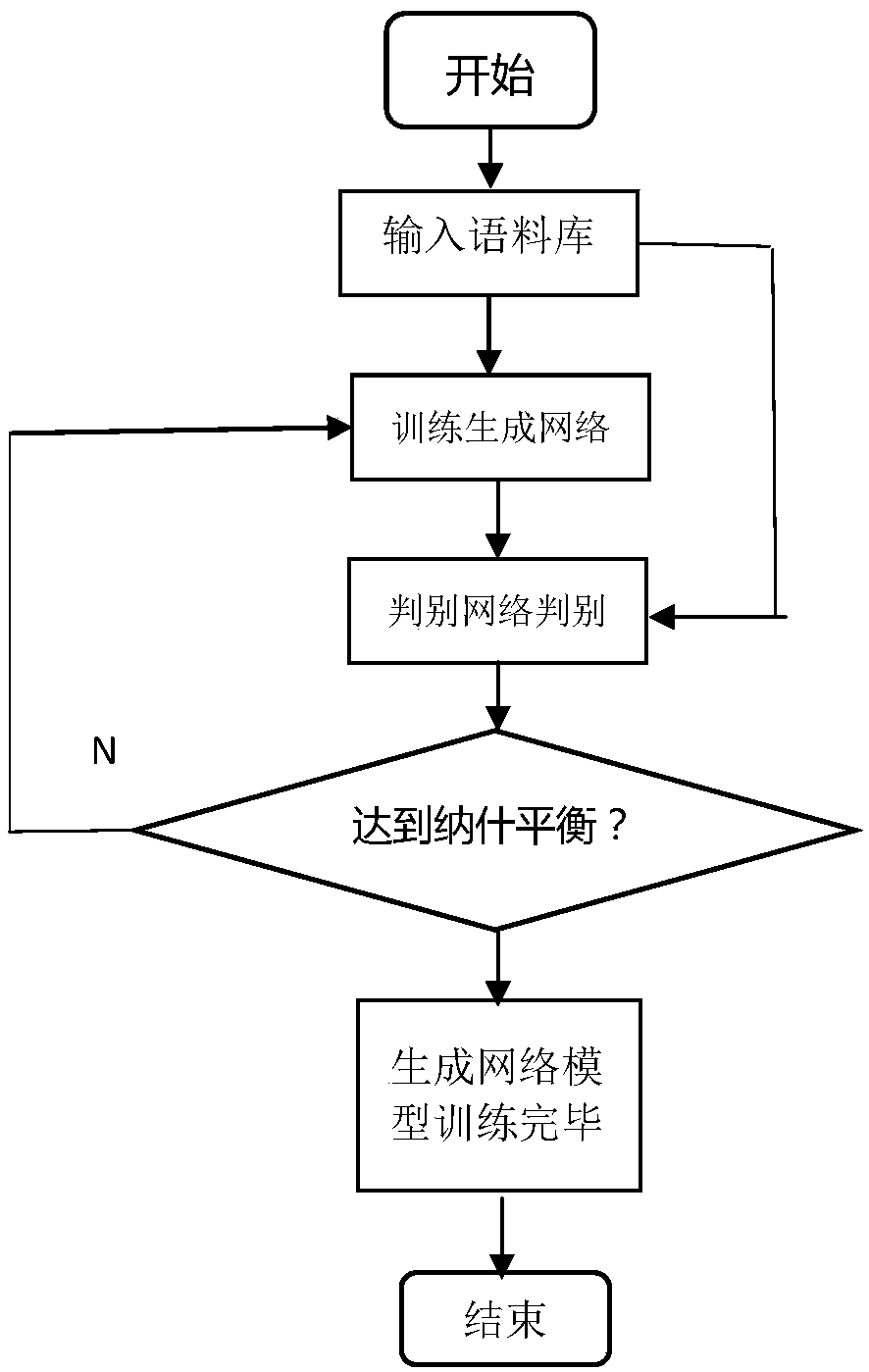

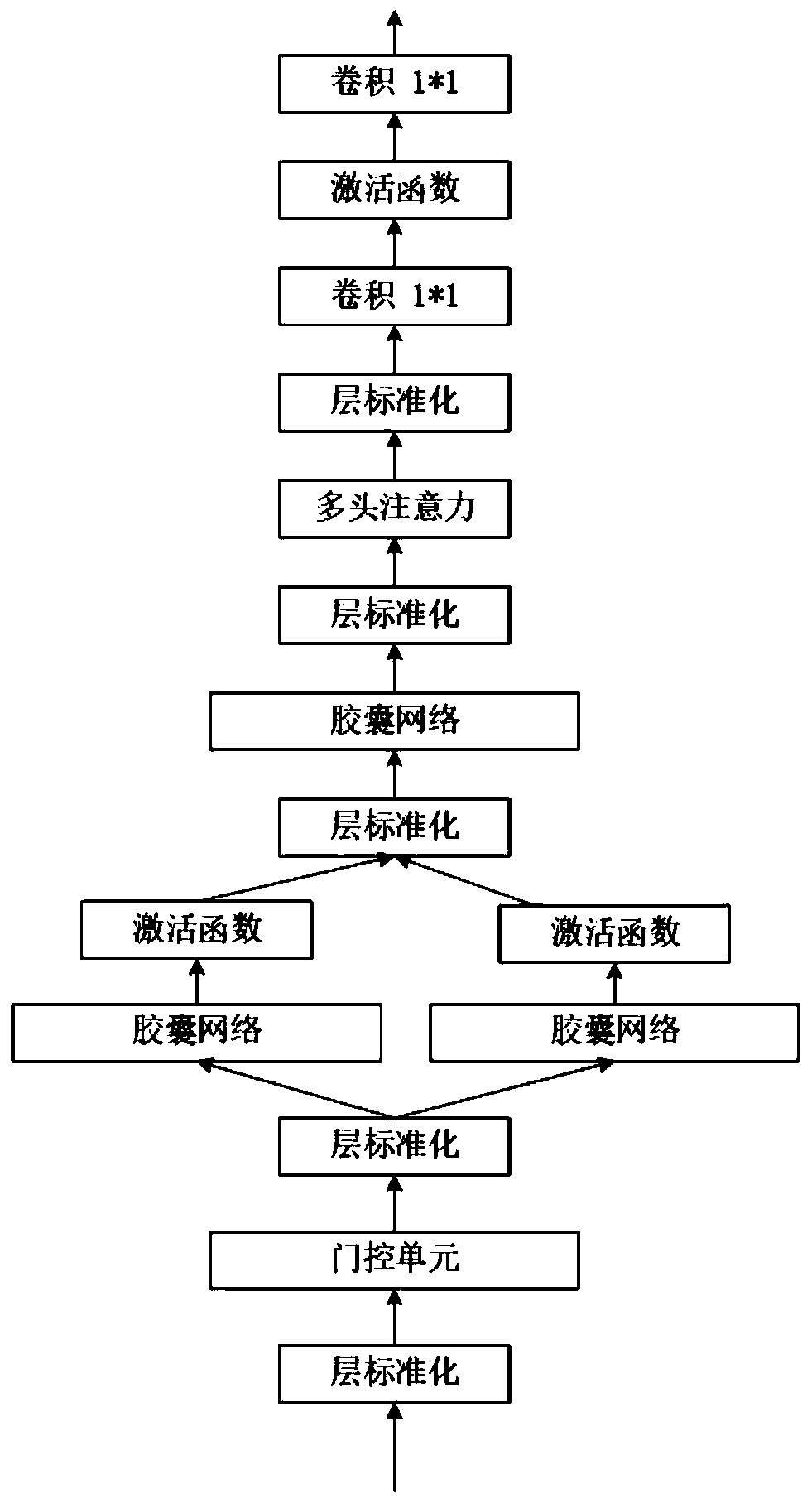

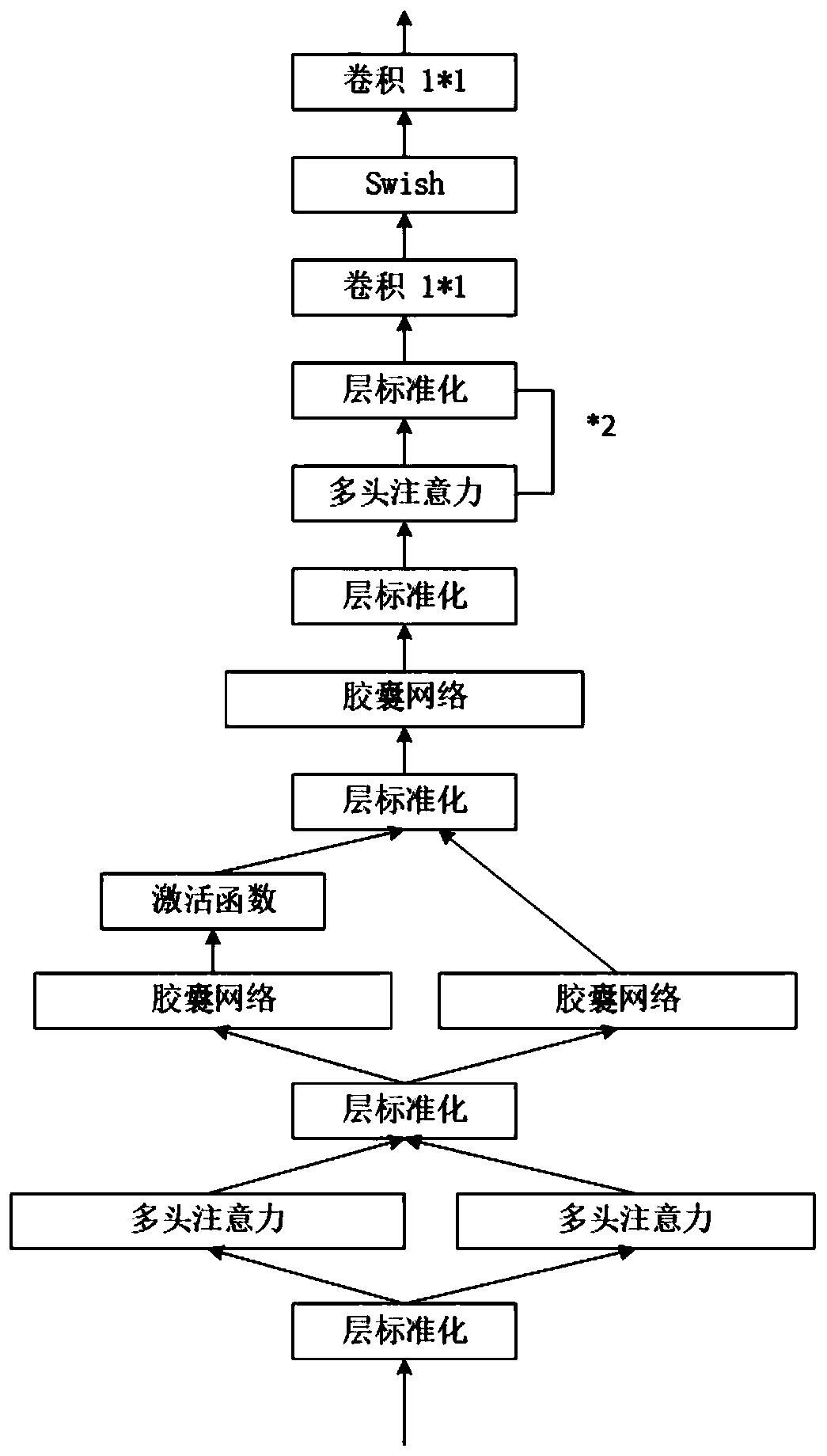

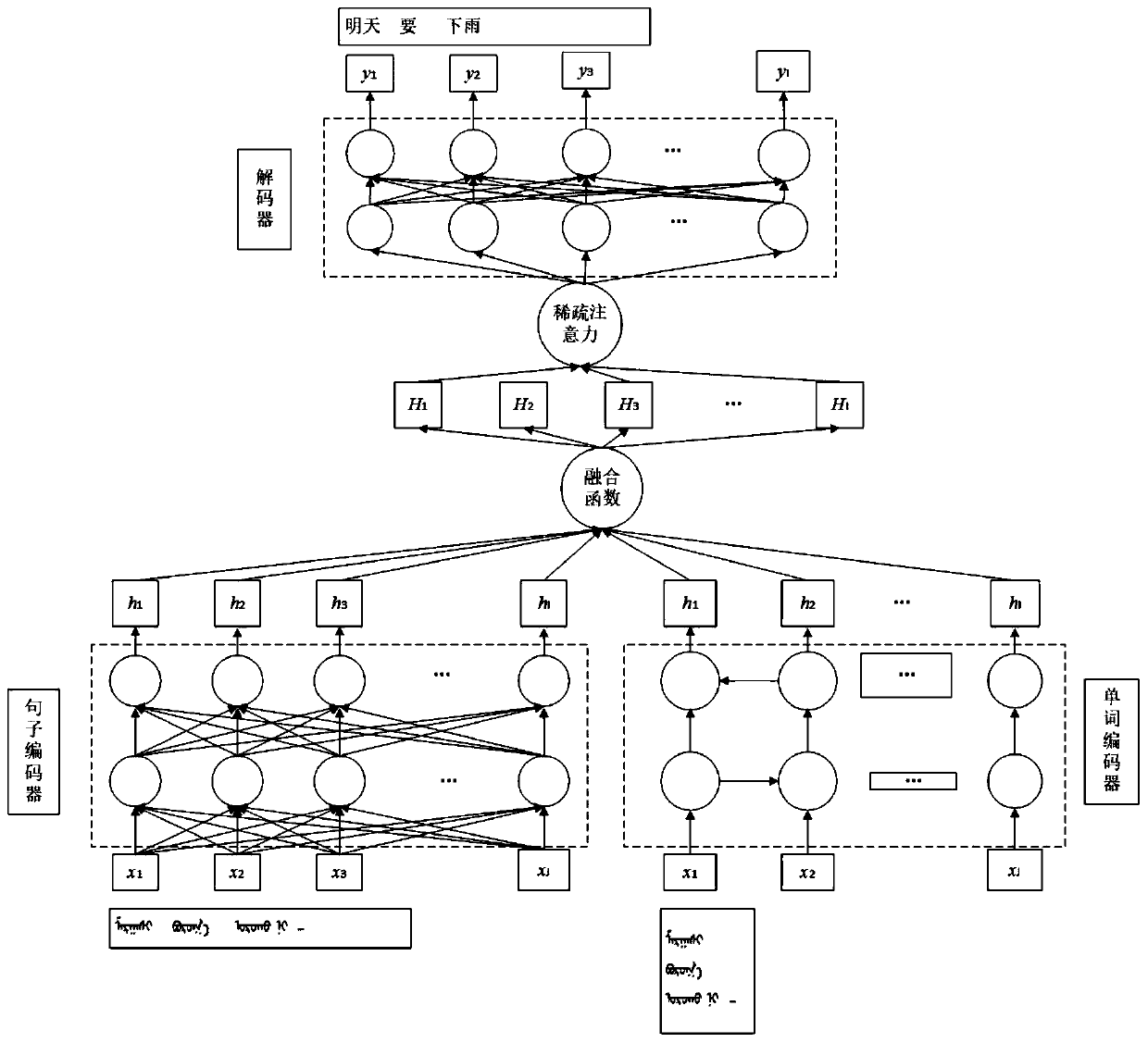

Method for constructing Mongolian-Chinese parallel corpora by utilizing generative adversarial network to improve Mongolian-Chinese translation quality

Active · CN110598221A · Neural architectures · Special data processing applications · Natural language processing · Discriminator

The invention discloses a method for constructing Mongolian-Chinese parallel corpora by utilizing a generative adversarial network to improve Mongolian-Chinese translation quality. The generative adversarial network comprises a generator and a discriminator. The generator uses a hybrid encoder to encode a Mongolian source language sentence into a vector representation, and converts the representation into a Chinese target language sentence by using a decoder based on a bidirectional Transformer in combination with a sparse attention mechanism. In this way, Mongolian sentences and more Mongolian-Chinese parallel corpora that are closer to human translation are generated. The discriminator judges the difference between the Chinese sentence generated by the generator and the human translation. Adversarial training is performed on the generator and the discriminator until the discriminator considers the Chinese sentence generated by the generator to be very similar to the human translation; a high-quality Mongolian-Chinese machine translation system and a large number of Mongolian-Chinese parallel data sets are thereby obtained, and Mongolian-Chinese translation is performed by using the Mongolian-Chinese machine translation system. The method addresses the problems that Mongolian-Chinese parallel data sets are severely deficient and that NMT cannot guarantee the naturalness, sufficiency and accuracy of translation results.

Owner:INNER MONGOLIA UNIV OF TECH

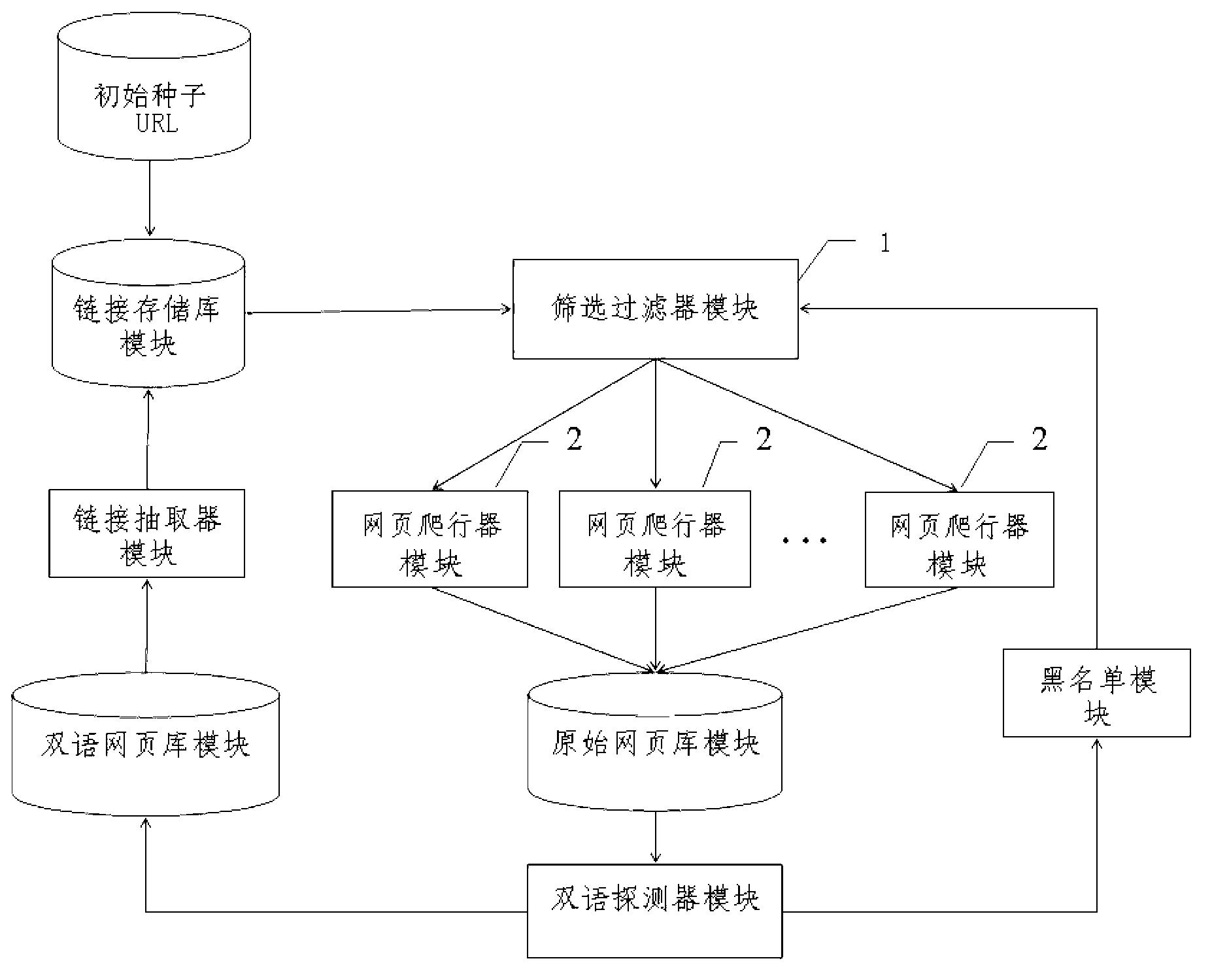

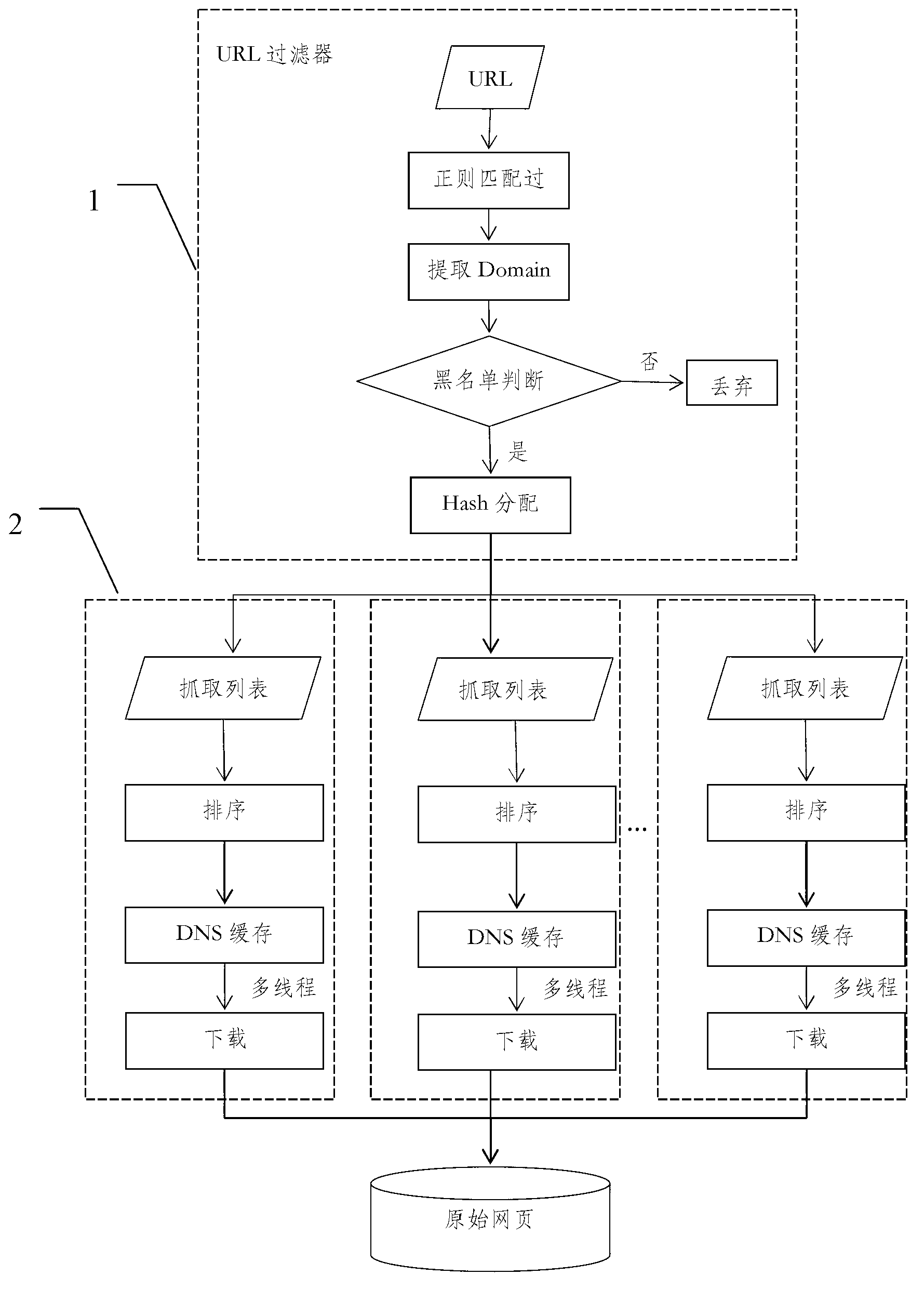

Distributed acquisition system facing web bilingual parallel corpora resources

Inactive · CN103020043A · Improve crawling efficiency · Save computing resources · Transmission · Special data processing applications · The Internet · Web page

A distributed acquisition system facing web bilingual parallel corpora resources relates to the technical field of corpora acquisition, and solves the problems that the conventional system is low in crawling scale, less in corpora acquiring ways, and lower in crawling efficiency. The system comprises an interlinking memory pool module, a screening filter module, a webpage crawl device module, an original webpage library module, a bilingual detection module, a blacklist module, a bilingual webpage library module and an interlinking withdrawal device module. The invention overcomes the technical defects in the conventional technical field, adopts the Internet as a corpora acquisition target, can effectively solve the resource occupation conflicting problem of a distributed system, can provide a universal design framework for a bilingual parallel corpora acquisition system, can dynamically add non-bilingual sites into a blacklist unceasingly, can effectively grab parallel corpora in the Internet, and can greatly improve the bilingual corpora grabbing efficiency.

Owner:HARBIN INST OF TECH

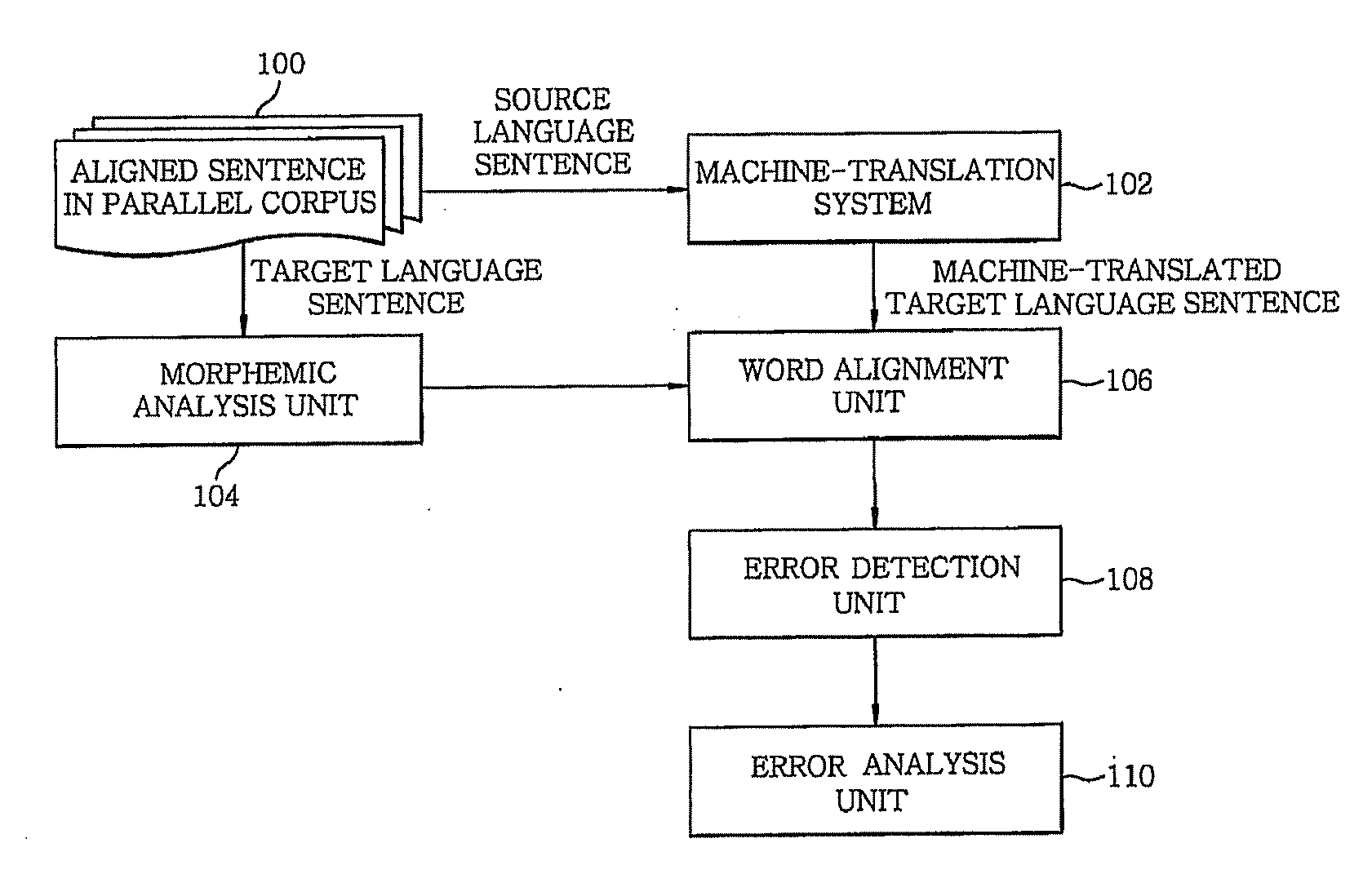

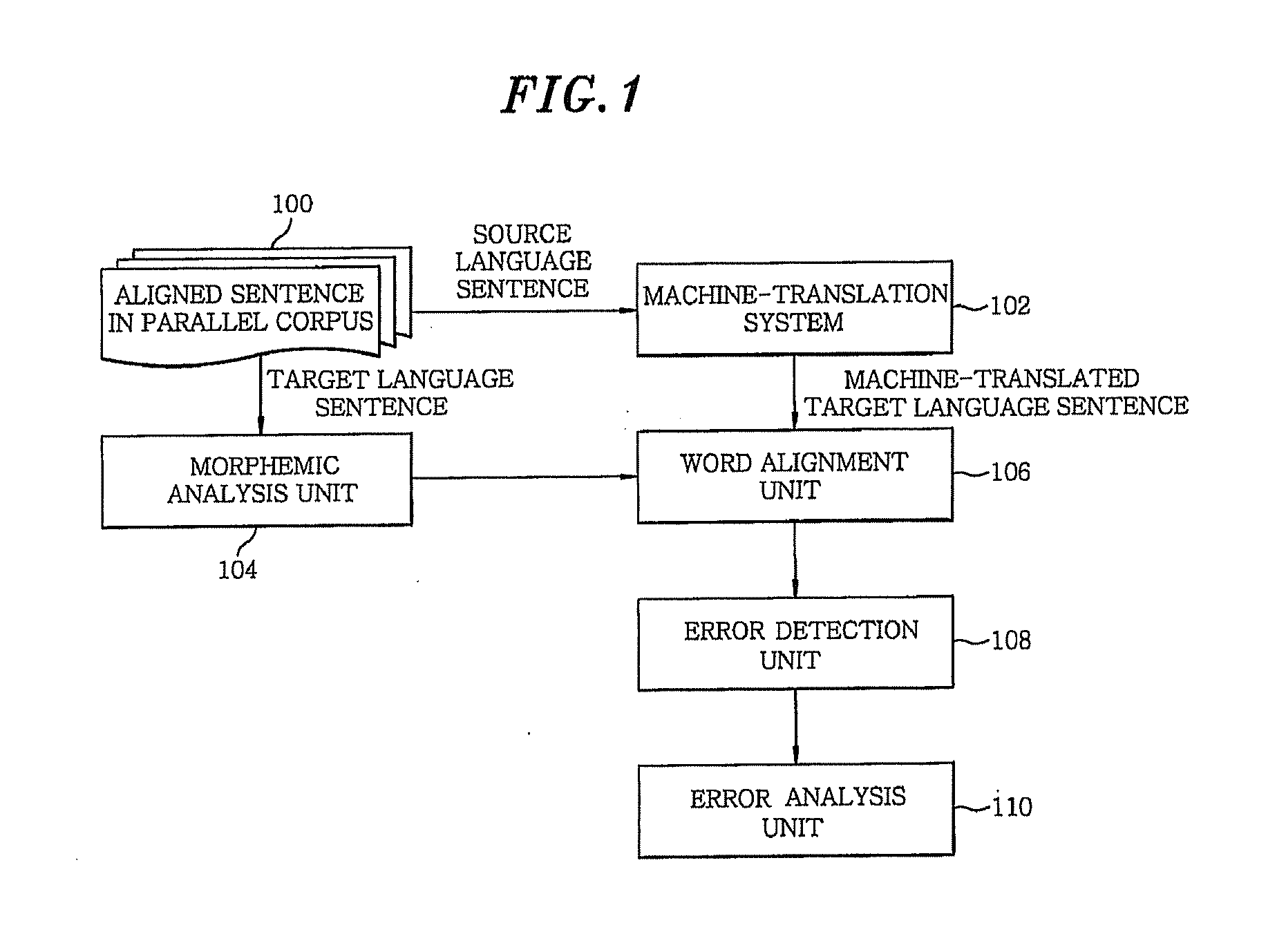

Method and apparatus for detecting errors in machine translation using parallel corpus

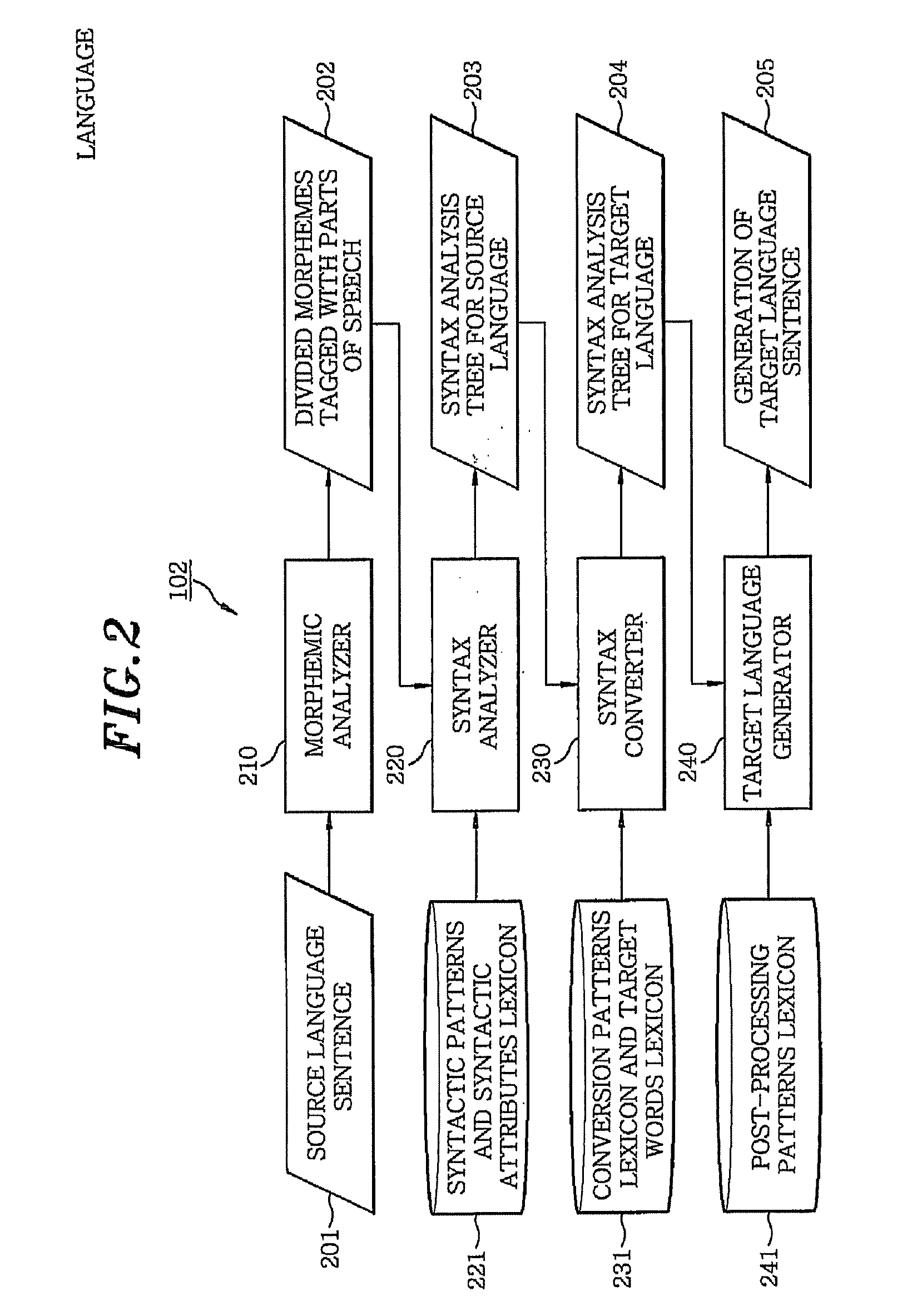

Active · US20100070261A1 · Improve performance · Natural language translation · Special data processing applications · Type error · Morpheme

A method for automatically detecting errors in machine translation using a parallel corpus includes analyzing morphemes of a target language sentence in the parallel corpus and a machine-translated target language sentence, corresponding to a source language sentence, to classify the morphemes into words; aligning by words and decoding, respectively, a group of the source language sentence and the machine-translated target language sentence, and a group of the source language sentence and the target language sentence in the parallel corpus; classifying by types errors in the machine-translated target language sentence by making a comparison, word by word, between the decoded target language sentence in the parallel corpus and the decoded machine-translated target language sentence; and computing error information in the machine-translated target language sentence by examining a frequency of occurrence of the classified error types.

Owner:ELECTRONICS & TELECOMM RES INST
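
The comparison and counting steps in the abstract above can be illustrated with a small sketch that aligns a reference sentence from the parallel corpus with a machine-translated sentence and tallies error types. It substitutes Python's `difflib.SequenceMatcher` for the word alignment and decoding described in the patent, skips morpheme analysis entirely, and uses three hypothetical error labels.

```python
from collections import Counter
from difflib import SequenceMatcher


def error_profile(reference, hypothesis):
    """Count word-level error types of `hypothesis` relative to `reference`."""
    ref, hyp = reference.split(), hypothesis.split()
    counts = Counter()
    matcher = SequenceMatcher(a=ref, b=hyp, autojunk=False)
    for tag, i1, i2, j1, j2 in matcher.get_opcodes():
        if tag == "replace":      # words that differ between the sentences
            counts["substitution"] += max(i2 - i1, j2 - j1)
        elif tag == "delete":     # reference words missing from the output
            counts["omission"] += i2 - i1
        elif tag == "insert":     # extra words in the machine output
            counts["addition"] += j2 - j1
    return counts


# Hypothetical reference / machine translation pair.
profile = error_profile("the cat sat on the mat", "the cat sit on mat today")
```

Summing such profiles over a whole corpus gives the frequency of each error type, which is the kind of aggregate error information the abstract refers to.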

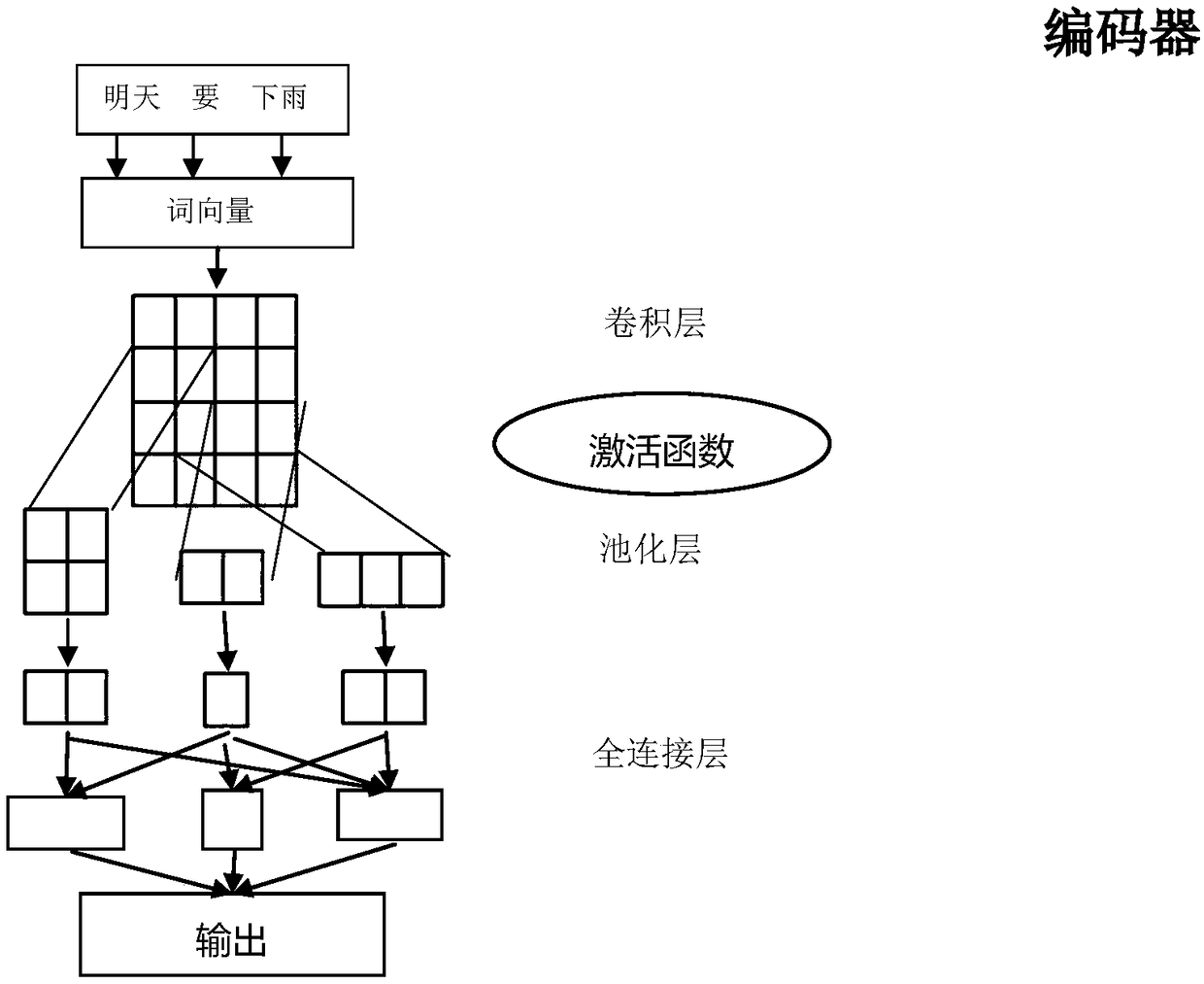

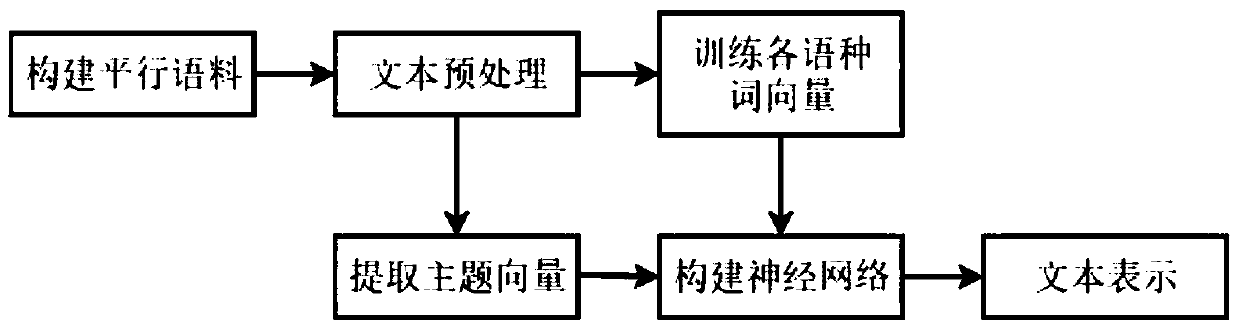

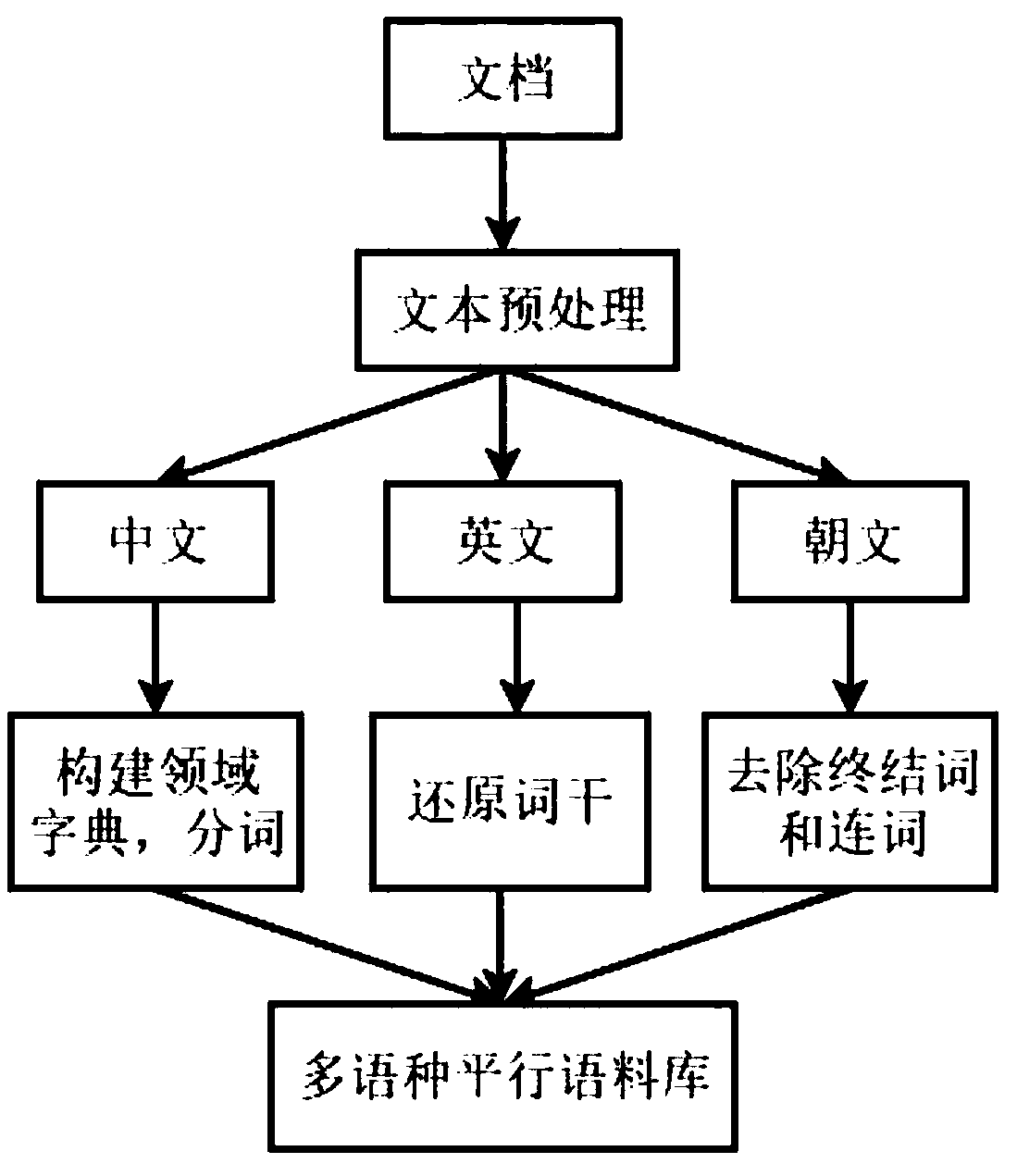

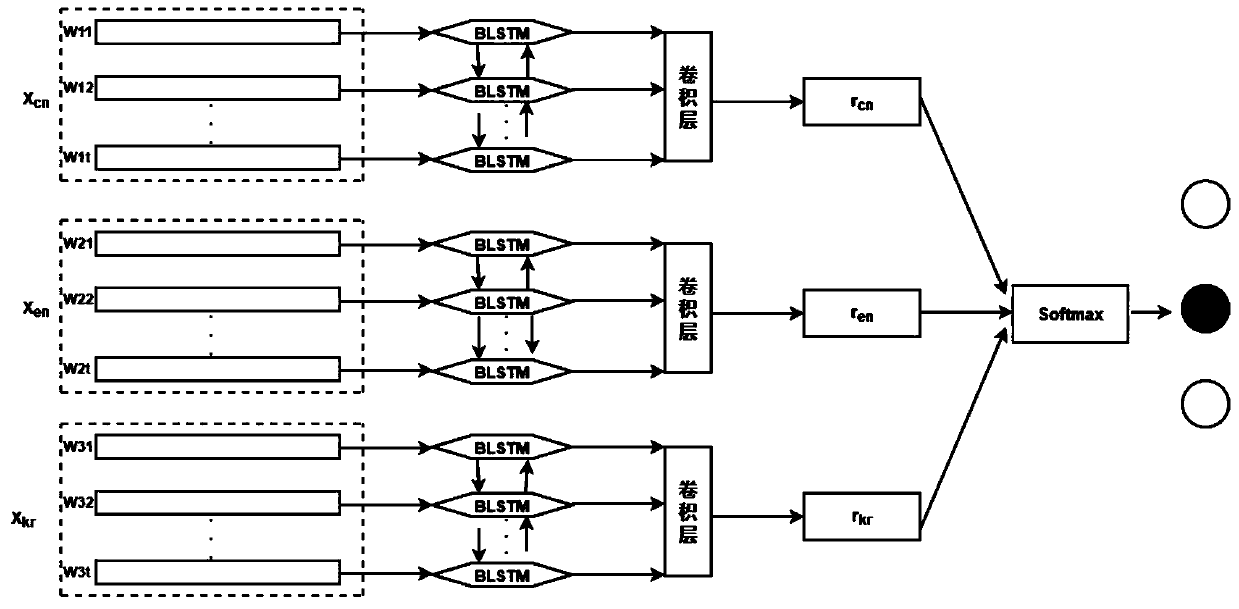

A multilingual text classification method fusing theme information and BiLSTM-CNN

Pending · CN109885686A · Solve language problems · Efficient use of · Character and pattern recognition · Special data processing applications · Multi language · Text categorization

The invention relates to the technical field of text classification in natural language processing, in particular to a multilingual text classification method fusing topic information and BiLSTM-CNN. The method is implemented as follows: first, multilingual parallel corpora of Chinese and English are collected to construct a parallel corpus; the text of each language in the corpus is preprocessed; word vectors of each language are trained by using a word embedding technology; text topic vectors of each language are extracted by using a topic model; and a neural network model suitable for multiple languages is established, which fuses the topic information to produce a multilingual text representation. The text classification method overcomes language barriers, is highly adaptable, can meet the requirements of multi-language text classification, and is highly practical.

Owner:YANBIAN UNIV

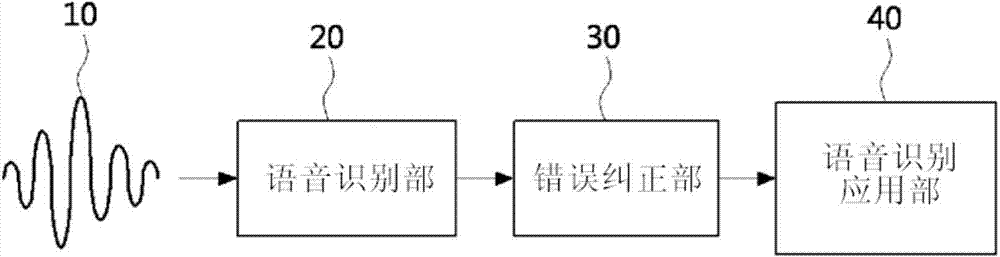

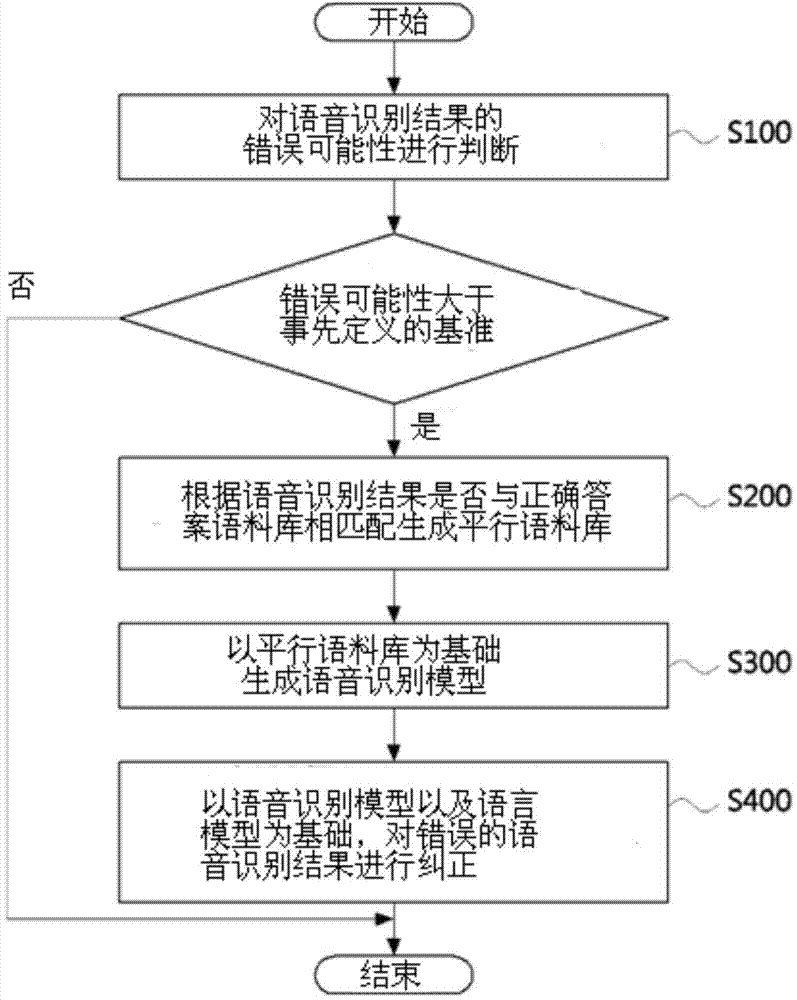

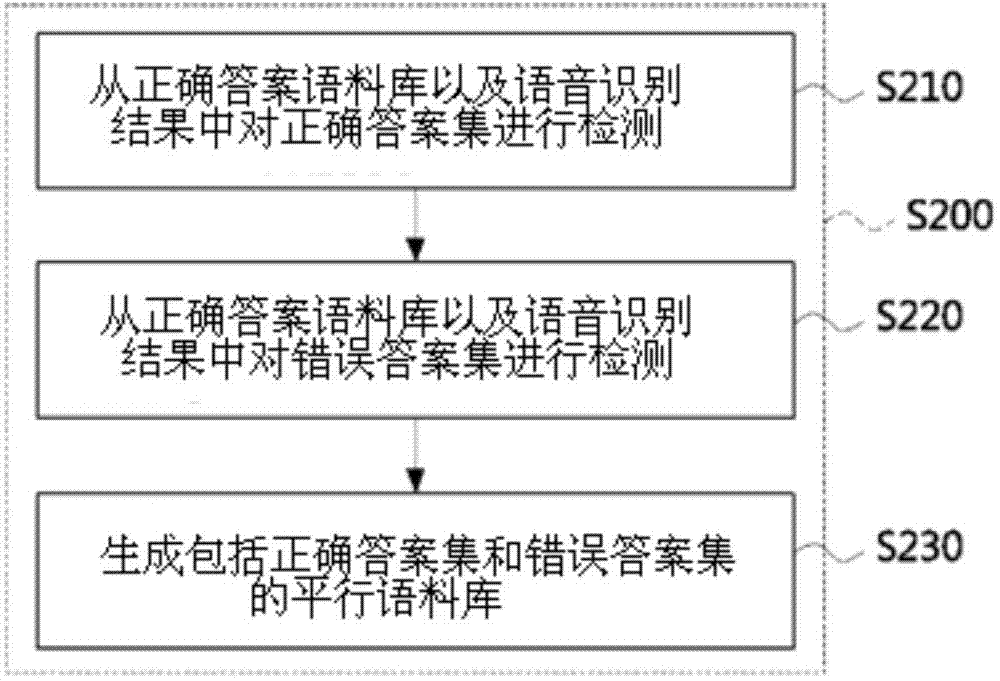

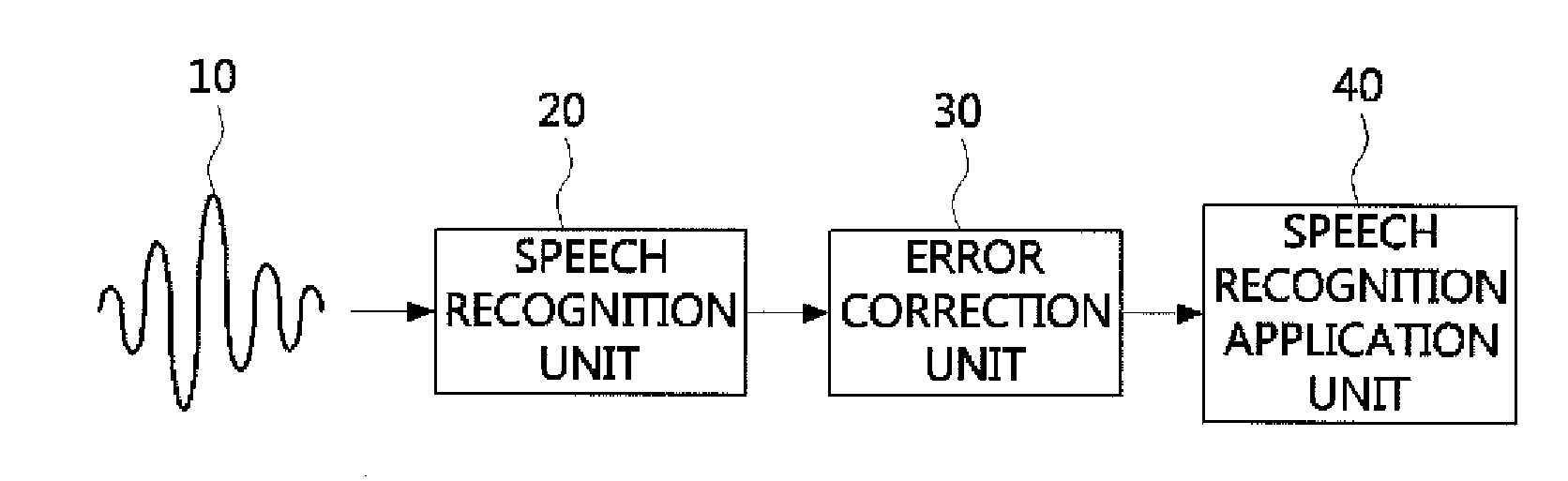

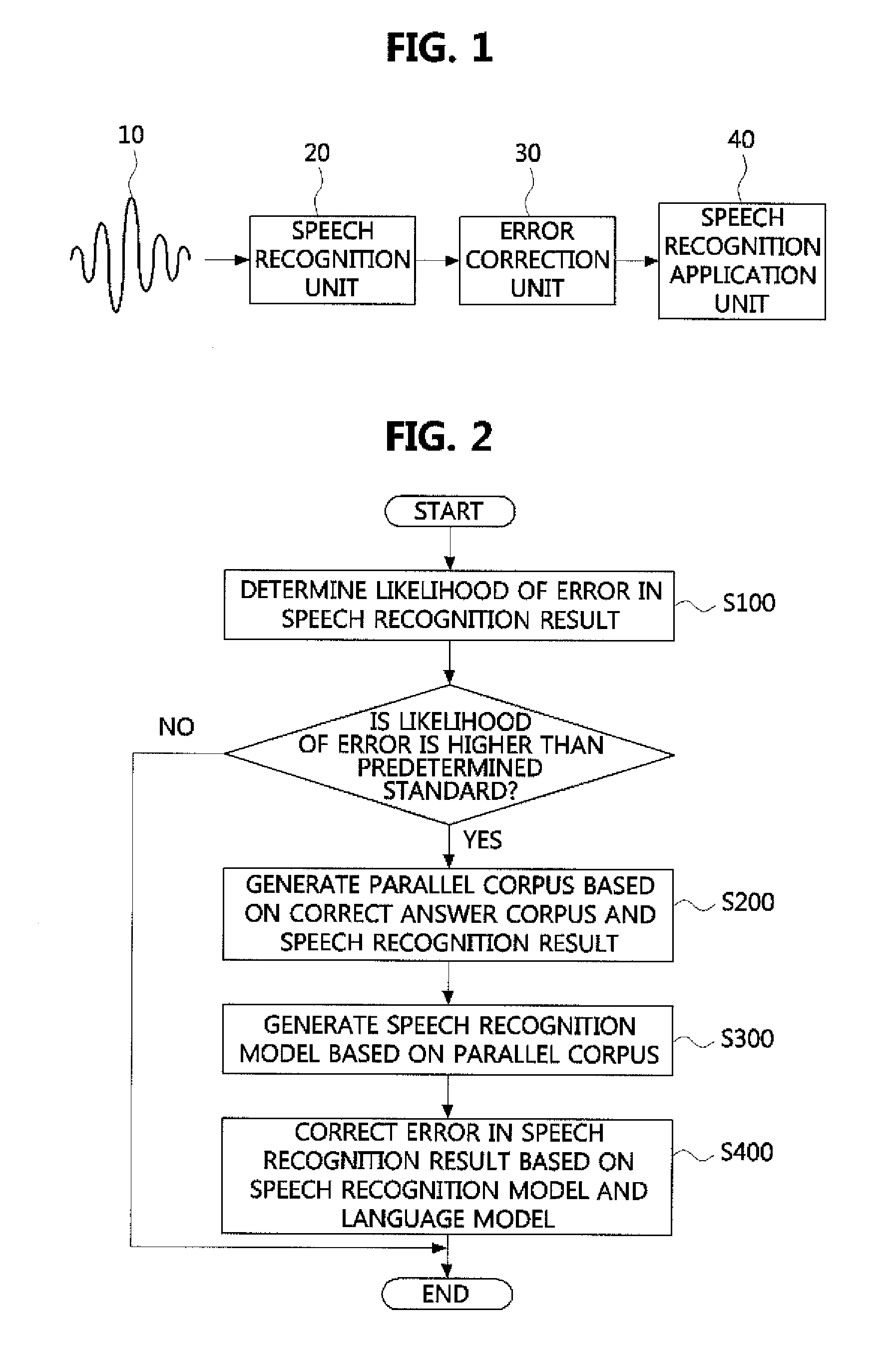

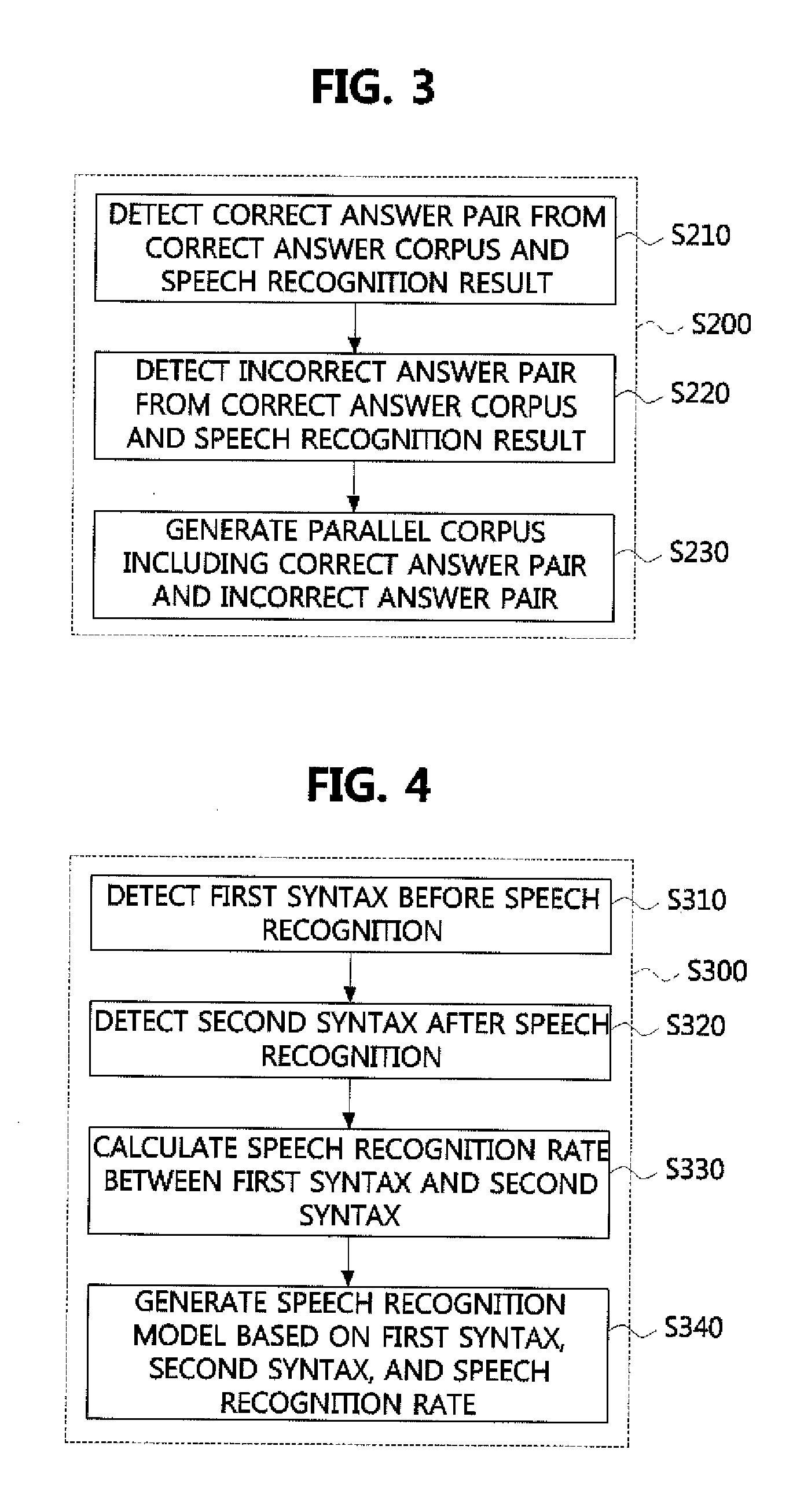

Method and apparatus for correcting speech recognition error

Disclosed are a method and an apparatus for correcting a speech recognition error. The speech recognition error correction method includes determining a likelihood that a speech recognition result is erroneous, and if the likelihood that the speech recognition result is erroneous is higher than a predetermined standard, generating a parallel corpus according to whether the speech recognition result matches the correct answer corpus, generating a speech recognition model based on the parallel corpus, and correcting an erroneous speech recognition result based on the speech recognition model and the language model. Accordingly, speech recognition errors are corrected.

Owner:POSTECH ACAD IND FOUND

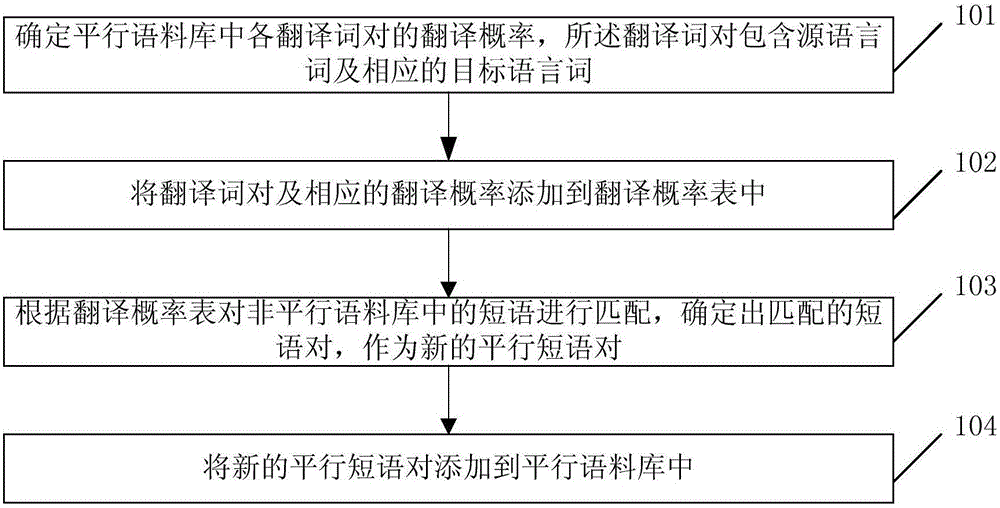

Parallel corpus construction method and device

The present invention discloses a parallel corpus construction method and device, wherein the method includes: determining a translation probability for each translation word pair in the parallel corpus, each pair comprising a source language word and a corresponding target language word; adding the translation word pairs and the corresponding translation probabilities to a translation probability table; matching phrases in a non-parallel corpus according to the translation probabilities, and determining the matched phrases as new parallel phrase pairs; and adding the new parallel phrase pairs to the parallel corpus. According to the scheme of the present invention, parallel phrase pairs can be obtained from the non-parallel corpus, and the scale of the parallel corpus can be increased.

Owner:TSINGHUA UNIV
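
The two stages in the abstract above, building a translation probability table and using it to match phrases from a non-parallel corpus, can be sketched as follows. The probability estimate here is a plain relative co-occurrence frequency rather than a trained alignment model, and the averaging score, the 0.5 threshold, and all function names are assumptions made for the example.

```python
from collections import Counter, defaultdict


def translation_table(parallel_pairs):
    """Estimate p(target word | source word) from co-occurrence counts."""
    cooc = defaultdict(Counter)
    for src, tgt in parallel_pairs:
        for s in src.split():
            for t in tgt.split():
                cooc[s][t] += 1
    return {s: {t: n / sum(c.values()) for t, n in c.items()}
            for s, c in cooc.items()}


def phrase_score(src_phrase, tgt_phrase, table):
    """Average, over source words, of the best translation probability."""
    tgt_words = tgt_phrase.split()
    best = [max((table.get(s, {}).get(t, 0.0) for t in tgt_words), default=0.0)
            for s in src_phrase.split()]
    return sum(best) / len(best) if best else 0.0


def mine_pairs(candidate_pairs, table, threshold=0.5):
    """Keep candidate phrase pairs whose score reaches the threshold."""
    return [(s, t) for s, t in candidate_pairs
            if phrase_score(s, t, table) >= threshold]


# Hypothetical seed corpus and candidate pairs from a non-parallel corpus.
table = translation_table([("casa roja", "red house"), ("casa", "house")])
new_pairs = mine_pairs([("casa roja", "red house"), ("casa roja", "blue car")],
                       table)
```

Pairs that pass the threshold would be appended to the parallel corpus, after which the table can be re-estimated on the enlarged corpus.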

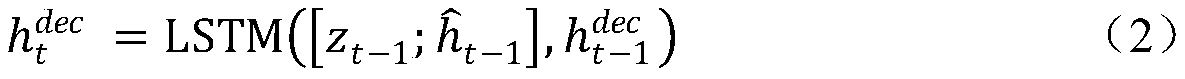

Neural machine translation decoding acceleration method based on non-autoregression

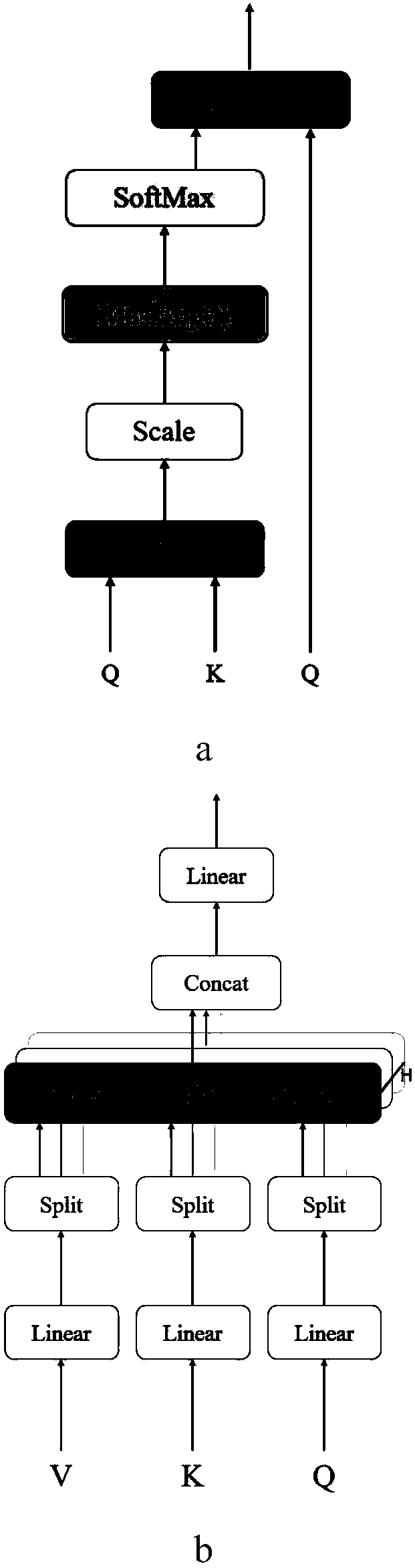

Active · CN111382582A · Alleviate the multimodal problem · Does not slow down inference · Natural language translation · Energy efficient computing · Pattern recognition · Hidden layer

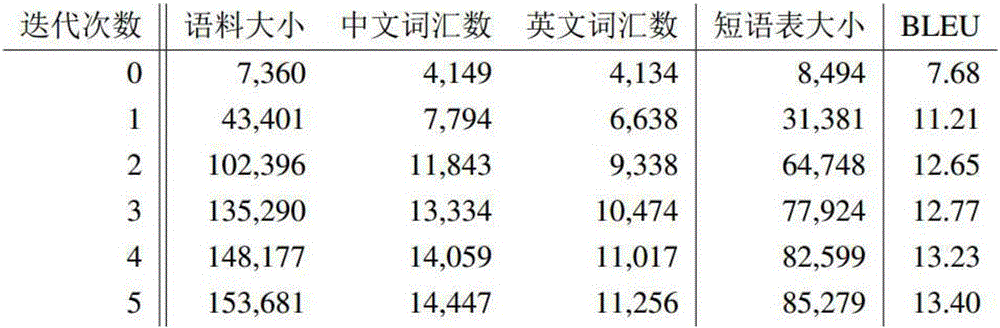

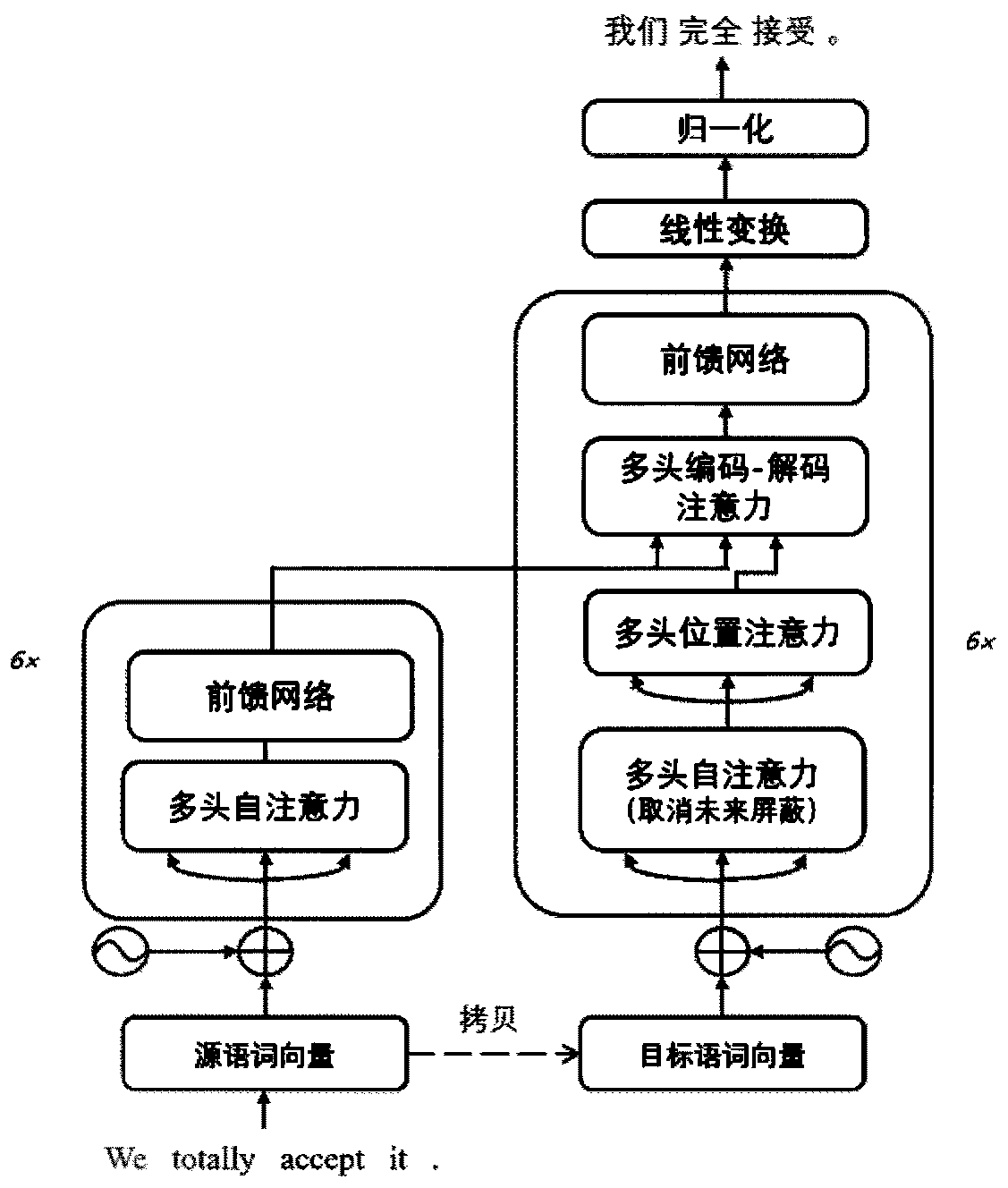

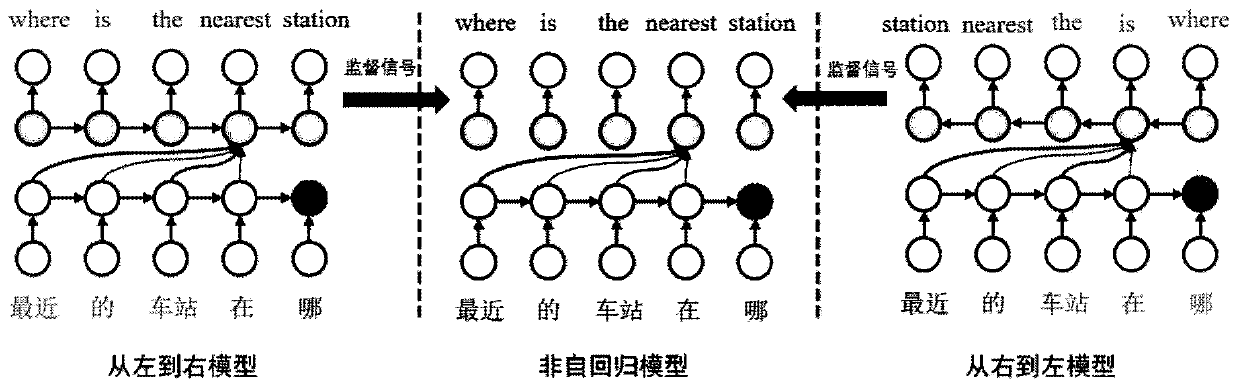

The invention discloses a neural machine translation decoding acceleration method based on non-autoregression. The method comprises the steps of: constructing autoregressive neural machine translation models by employing a Transformer model based on the self-attention mechanism; constructing training parallel corpora, generating a machine translation word list, and training two models, one decoding from left to right and one from right to left, until convergence; constructing a non-autoregressive machine translation model; acquiring the encoder-decoder attention and hidden layer states of the left-to-right and right-to-left autoregressive translation models; calculating the difference between the output of the non-autoregressive model and the corresponding output of the autoregressive models, and taking the difference as an additional loss for model training; extracting source language sentence information, and predicting the corresponding target language sentence with a decoder; and calculating the loss between the predicted distribution and the real data distribution, decoding translation results of different lengths, and then selecting an optimal translation result. The method makes full use of the knowledge in the autoregressive models, and an 8.6-fold speedup can be obtained with little loss in performance.

Owner:沈阳雅译网络技术有限公司

Systems and methods for using anchor text as parallel corpora for cross-language information retrieval

Inactive · US7814103B1 · Quality improvement · Less translation · Data processing applications · Web data indexing · Ambiguity · Cross-language information retrieval

Owner:GOOGLE LLC

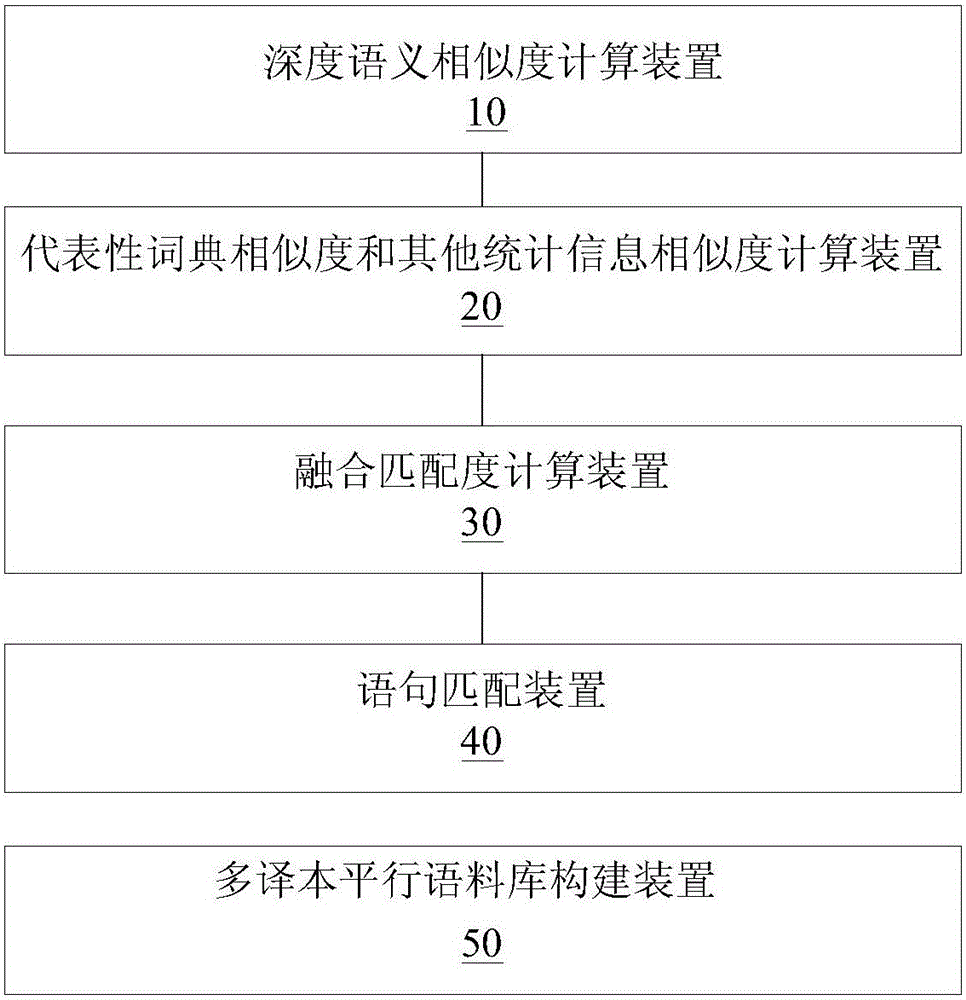

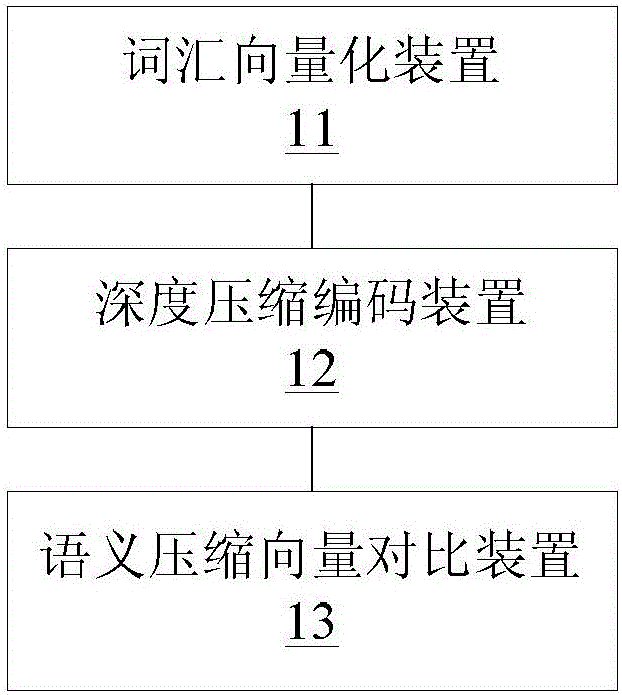

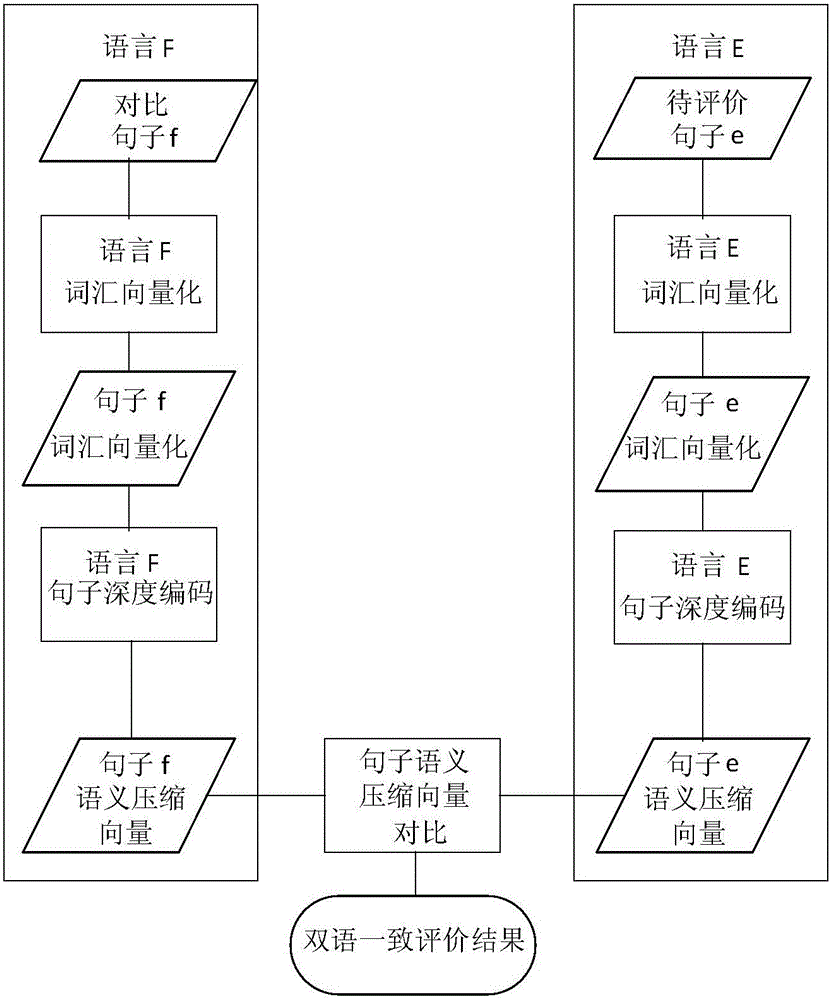

Multi-translation parallel corpus construction system

Active · CN105843801A · High precision · Achieve alignment · Natural language translation · Special data processing applications · Parallel corpora · Calculator

The present invention provides a multi-translation parallel corpus construction system. The system comprises: a depth semantic similarity-degree calculator, used for separately calculating a depth semantic similarity degree between a source language text sentence and a to-be-matched sentence of each translation among multiple translations; a representative dictionary similarity-degree and other statistical information similarity-degree calculator; a fusion matching-degree calculator, used for calculating a fusion matching degree between the source language text sentence and the to-be-matched sentence of each translation among the multiple translations; a sentence matching apparatus, used for performing sentence matching on a source language text and each translation according to the fusion matching degree, wherein fusion matching degrees between the source language text and other translations among the multiple translations are referred to during matching; and a multi-translation parallel corpus construction apparatus, used for constructing a multi-translation parallel corpus according to a matching result. The technical scheme above implements construction of the multi-translation parallel corpus and improves precision of corpus alignment, and the multi-translation parallel corpus constructed by the scheme has robustness.

Owner:BEIJING LANGUAGE AND CULTURE UNIVERSITY
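
One simple way to picture the fusion matching degree described above is as a weighted combination of the individual similarity scores, followed by choosing the best-scoring candidate sentence. The abstract does not say how the scores are fused, so the normalized weighted average below, the feature names, and the weights are purely illustrative assumptions.

```python
def fusion_score(similarities, weights):
    """Normalized weighted average of the per-feature similarity scores."""
    total = sum(weights.values())
    return sum(weights[name] * similarities[name] for name in weights) / total


def best_match(candidates, weights):
    """Return the id of the candidate sentence with the highest fused score."""
    return max(candidates, key=lambda cid: fusion_score(candidates[cid], weights))


# Hypothetical similarity scores for two candidate sentences of one translation.
weights = {"semantic": 0.5, "dictionary": 0.3, "length": 0.2}
candidates = {
    "s1": {"semantic": 0.9, "dictionary": 0.2, "length": 0.5},
    "s2": {"semantic": 0.6, "dictionary": 0.8, "length": 0.9},
}
chosen = best_match(candidates, weights)
```

In this example the candidate with the higher semantic similarity alone is not chosen, which shows why combining several signals can change the alignment decision.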

Method and apparatus for correcting speech recognition error

Inactive · US20140163975A1 · Improve speech recognition accuracy · Speech recognition · Parallel corpora · Correction method

Disclosed are a speech recognition error correction method and an apparatus thereof. The speech recognition error correction method includes determining a likelihood that a speech recognition result is erroneous, and if the likelihood that the speech recognition result is erroneous is higher than a predetermined standard, generating a parallel corpus according to whether the speech recognition result matches the correct answer corpus, generating a speech recognition model based on the parallel corpus, and correcting an erroneous speech recognition result based on the speech recognition model and the language model. Accordingly, speech recognition errors are corrected.

Owner:POSTECH ACAD IND FOUND

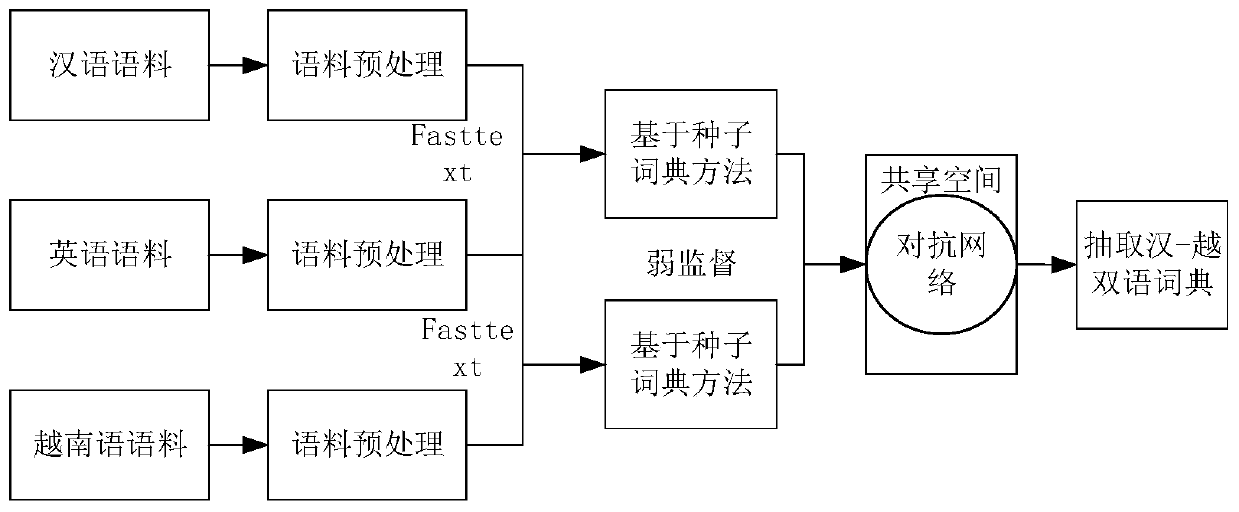

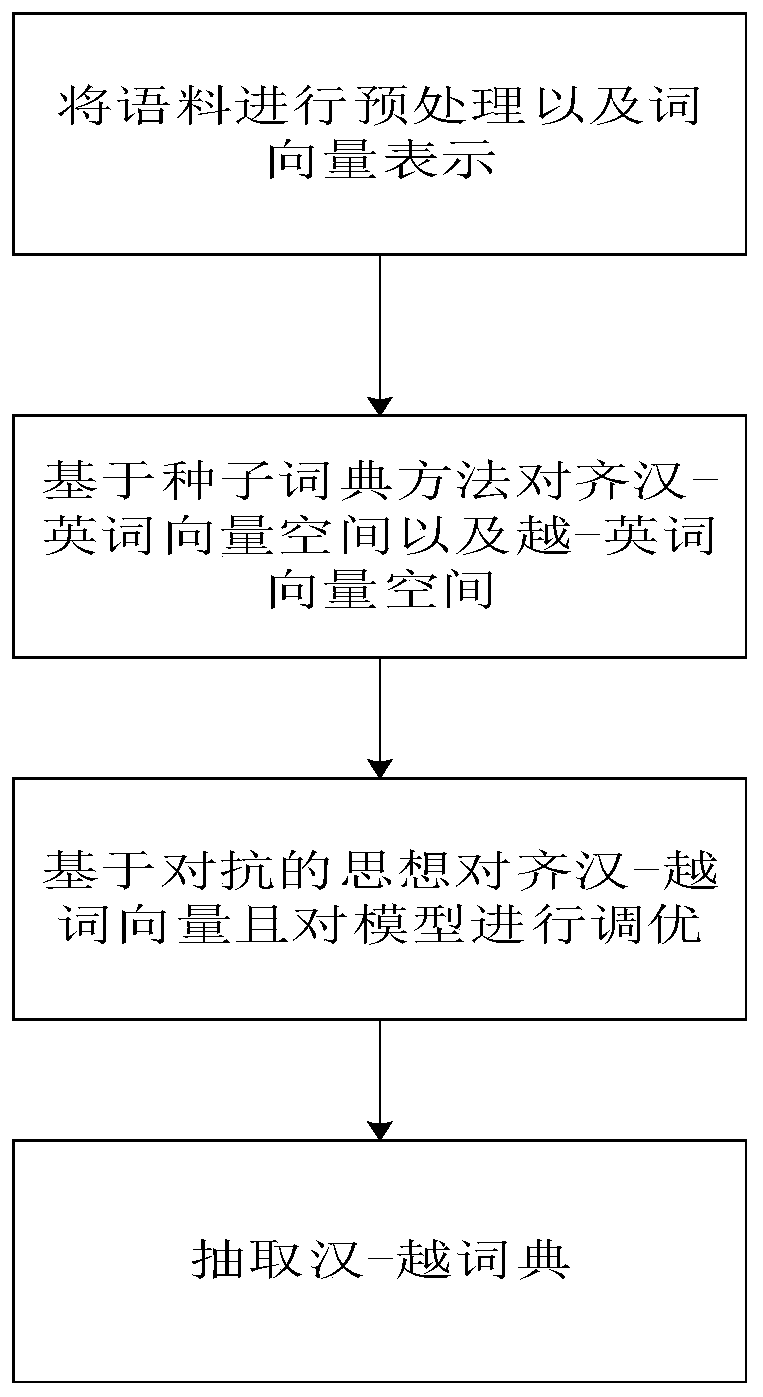

Weak supervision Chinese-Vietnamese bilingual dictionary construction method based on English pivot

Active · CN111310480A · Reduce language differences · Avoid dependence · Natural language translation · Web data indexing · Bilingual dictionary · Chinese word

The invention relates to a weak supervision Chinese-Vietnamese bilingual dictionary construction method based on an English pivot, and belongs to the technical field of natural language processing. The method comprises the following steps: collecting monolingual corpora of Chinese, English and Vietnamese, respectively, and preprocessing the corpora; aligning the Chinese word vectors to an English word vector sharing space based on a seed dictionary method; learning a mapping relationship between the Chinese word vectors through an adversarial network in the English word vector sharing space; and extracting the Chinese-Vietnamese dictionary by adopting different extraction strategies. The method greatly improves the accuracy of automatically constructing the Chinese-Vietnamese dictionary. It addresses the problems that, in existing Chinese-Vietnamese bilingual dictionary construction methods, parallel corpora, seed dictionaries and the like are very scarce and difficult to label, and that existing methods construct dictionaries poorly.

Owner:KUNMING UNIV OF SCI & TECH

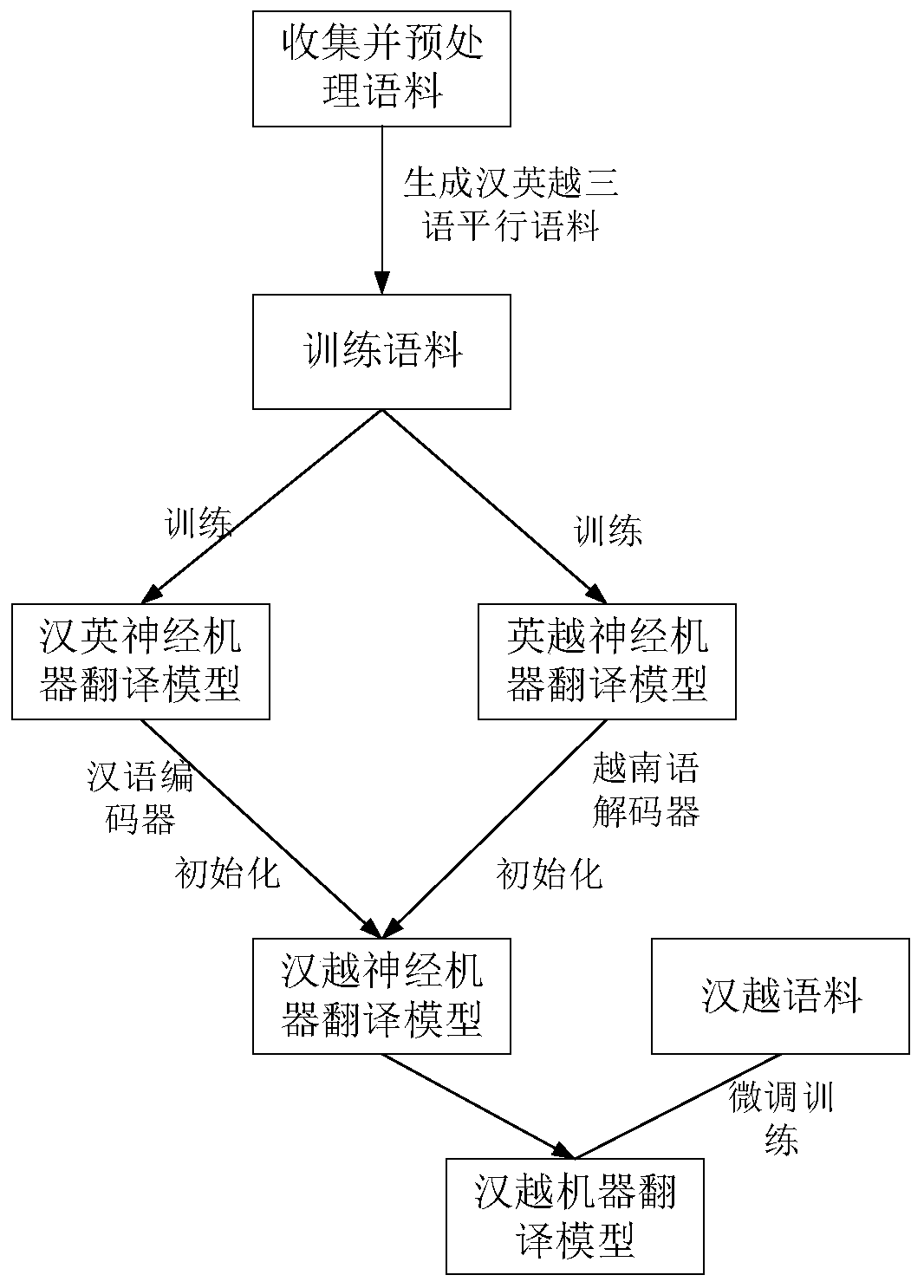

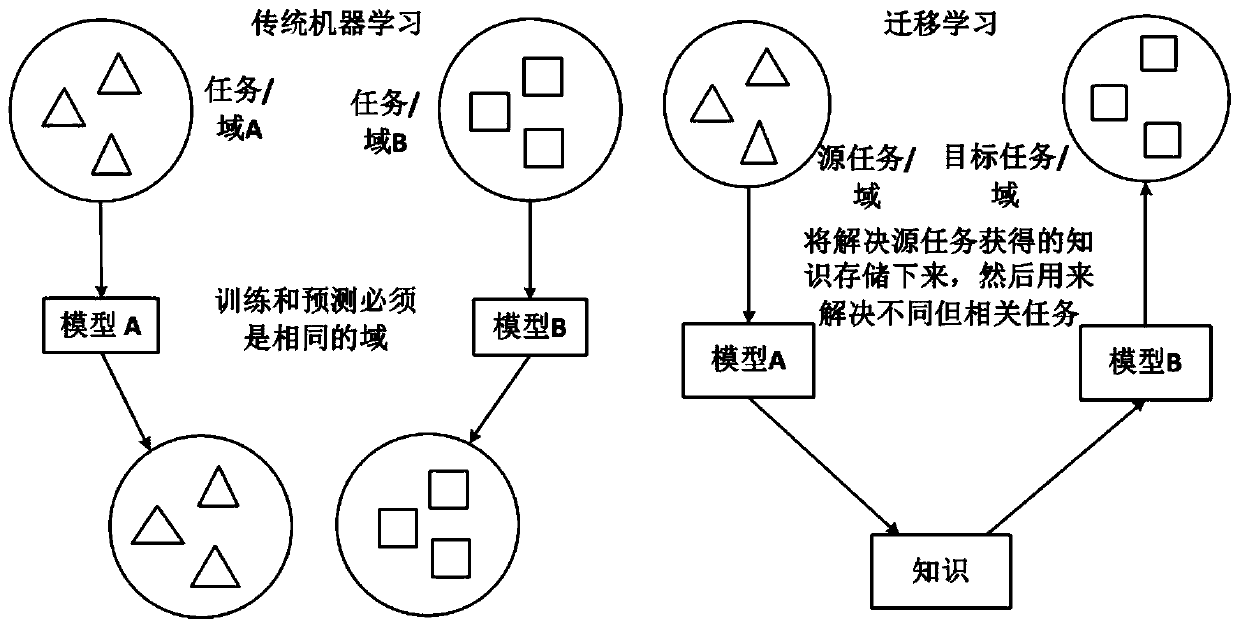

Transfer learning-based Chinese-Vietnamese neural machine translation method

Active · CN110472252A · Relevant · Improve performance · Neural learning methods · Special data processing applications · Learning based · Sentence pair

The invention relates to a transfer learning-based Chinese-Vietnamese neural machine translation method, and belongs to the technical field of natural language processing. The method comprises the following steps: corpus collection and preprocessing, in which parallel corpora of Chinese-Vietnamese sentence pairs, English-Vietnamese sentence pairs and Chinese-English sentence pairs are collected and preprocessed; generating Chinese-English-Vietnamese trilingual parallel corpora by using the Chinese-English and English-Vietnamese parallel corpora; training a Chinese-English neural machine translation model and an English-Vietnamese neural machine translation model, and initializing the parameters of the Chinese-Vietnamese neural machine translation model by using the parameters of the pre-trained models; and fine-tuning the initialized Chinese-Vietnamese neural machine translation model on the Chinese-Vietnamese parallel corpus to obtain the Chinese-Vietnamese neural machine translation model used for translation. The method can effectively improve the performance of Chinese-Vietnamese neural machine translation.

Owner:KUNMING UNIV OF SCI & TECH
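One plausible way to generate the trilingual corpus is to join the two bilingual corpora on their shared English side. The sketch below assumes matching on a lightly normalized English sentence; the abstract does not specify how the patent matches sentences, and `build_trilingual` and the sample sentences are hypothetical.

```python
def build_trilingual(zh_en_pairs, en_vi_pairs):
    """Join Chinese-English and English-Vietnamese pairs on the English side."""
    def key(sentence):
        # Light normalization so trivial casing/spacing differences still match.
        return " ".join(sentence.lower().split())

    en_to_vi = {}
    for en, vi in en_vi_pairs:
        en_to_vi.setdefault(key(en), vi)  # keep the first translation seen

    triples = []
    for zh, en in zh_en_pairs:
        vi = en_to_vi.get(key(en))
        if vi is not None:
            triples.append((zh, en, vi))
    return triples

# Toy data: only the first Chinese-English pair has an English match.
zh_en = [("我喜欢茶。", "I like tea."), ("你好。", "Hello.")]
en_vi = [("i like  tea.", "Tôi thích trà.")]
triples = build_trilingual(zh_en, en_vi)
```

Exact matching keeps only sentences present in both corpora, so the trilingual corpus is usually much smaller than either input.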

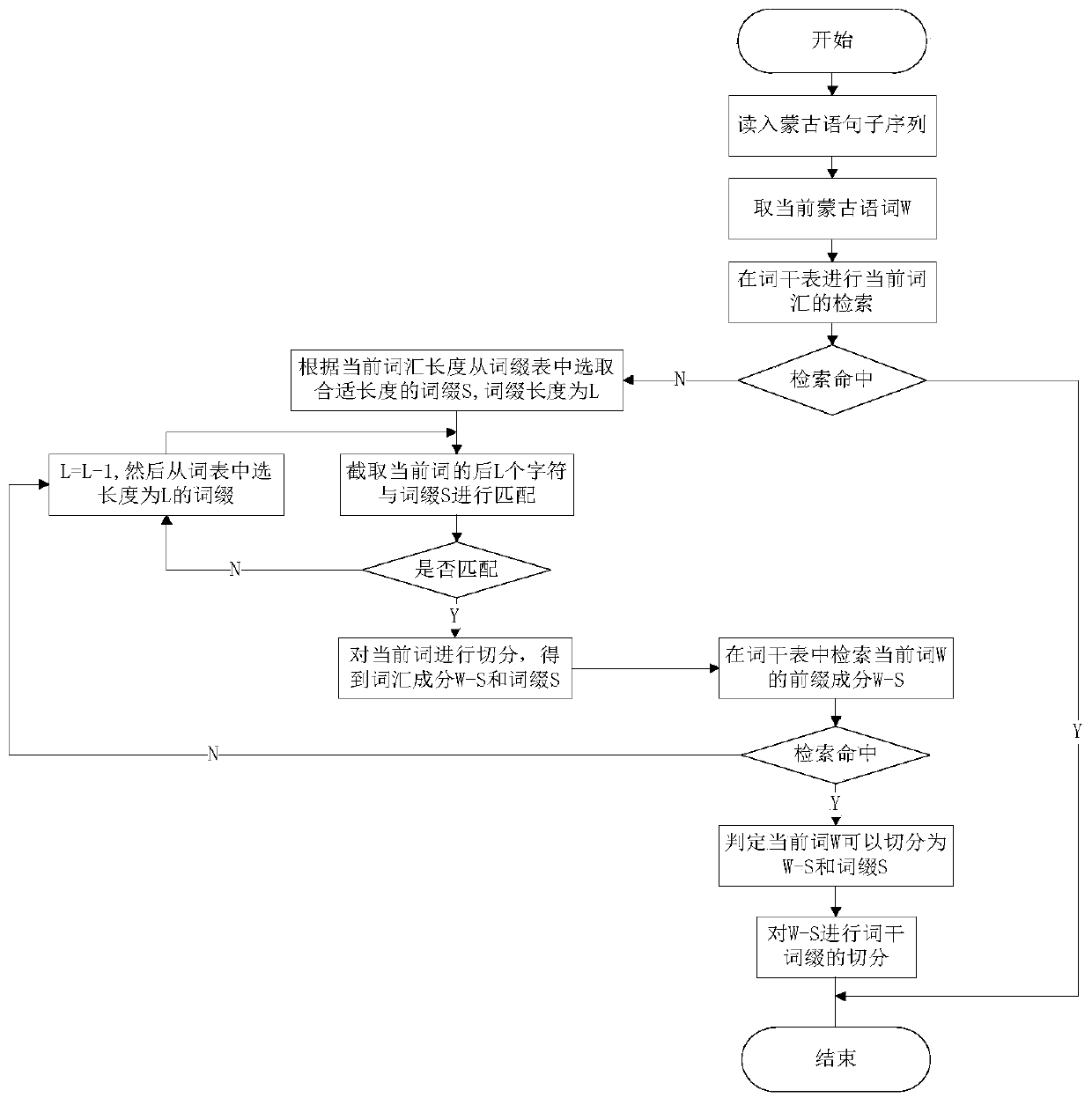

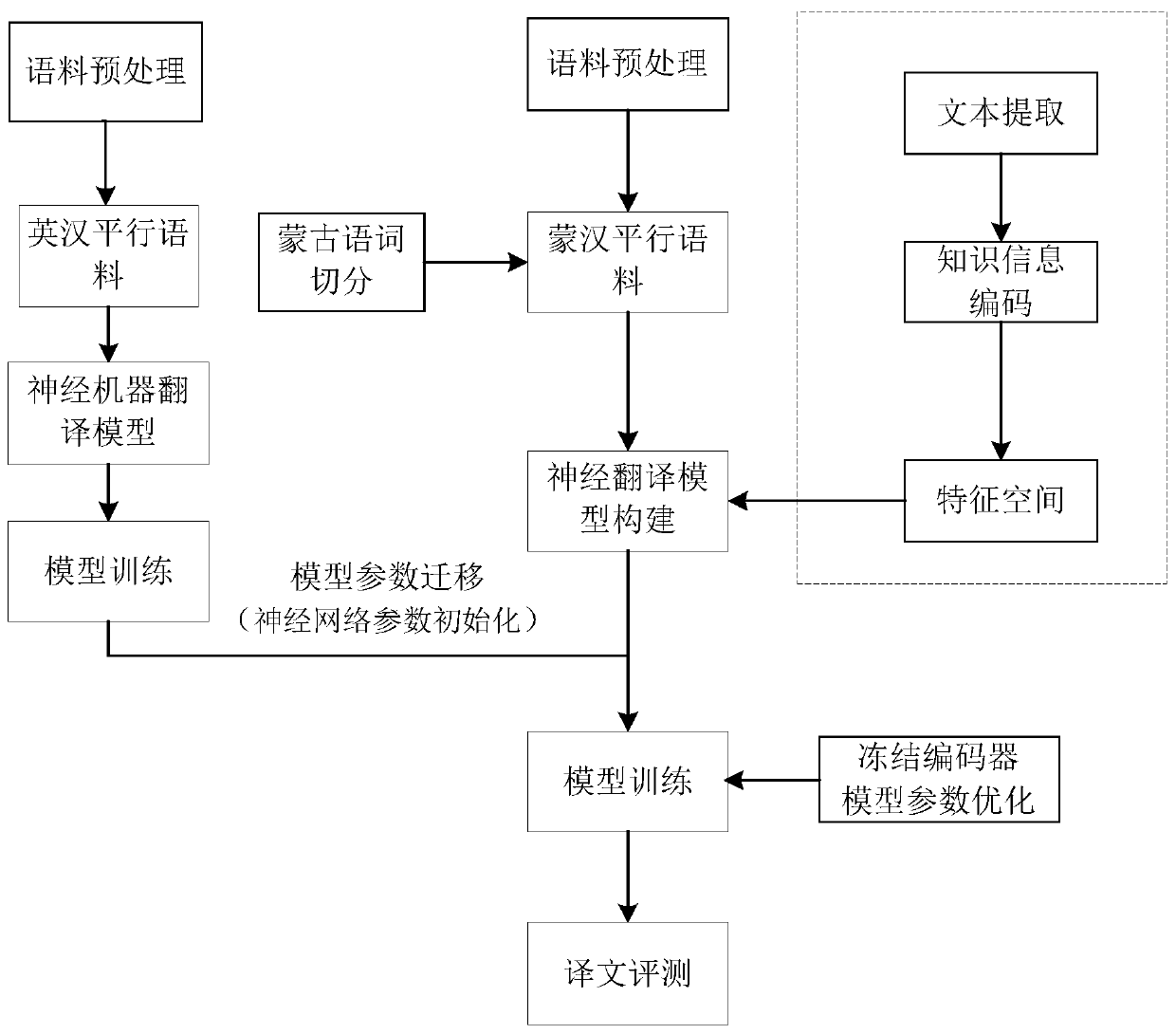

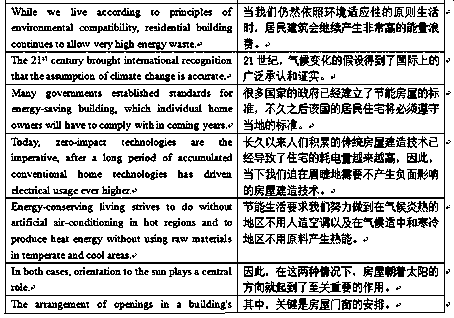

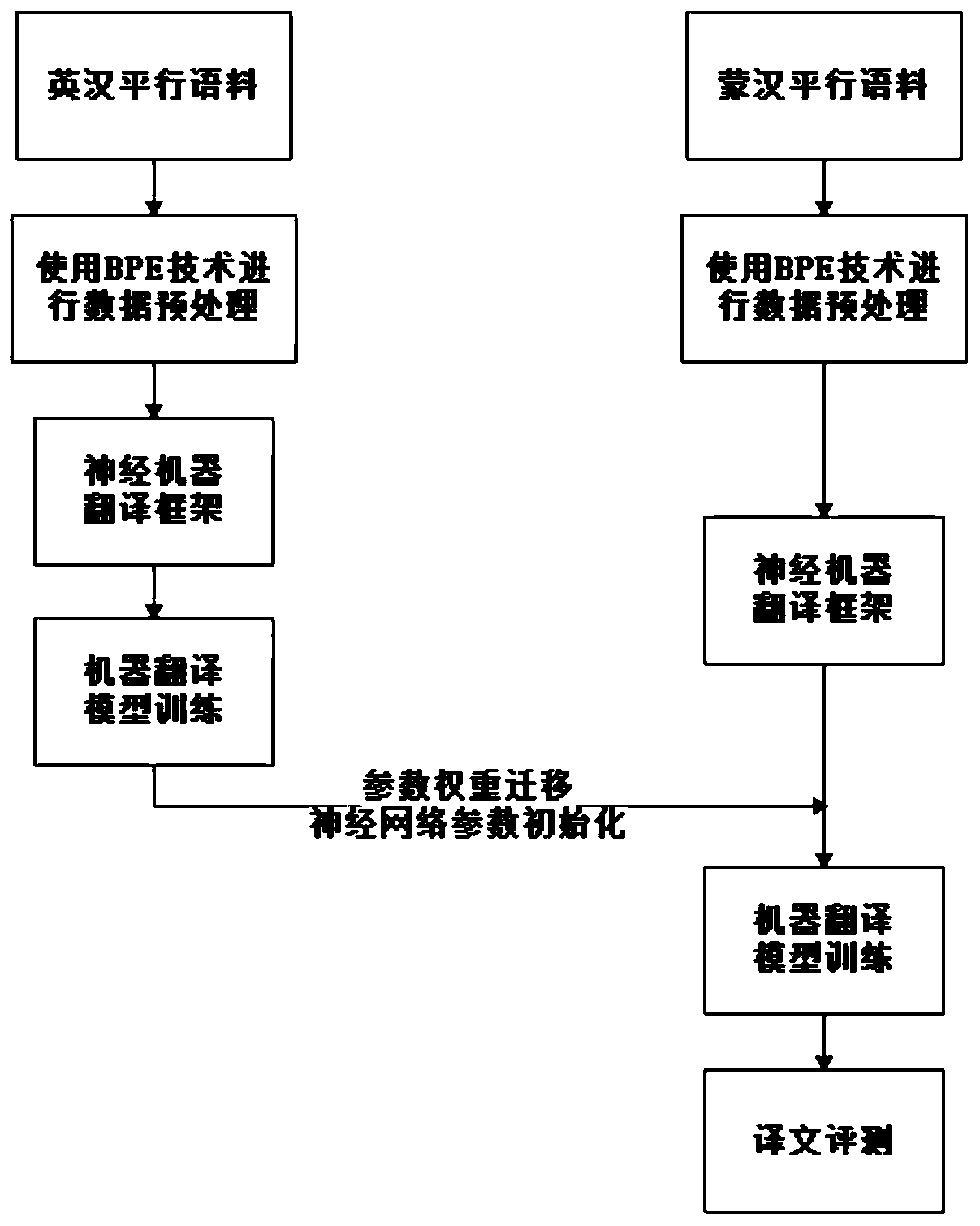

Mongolian-Chinese translation method based on transfer learning

Inactive | CN110688862A | Quality improvement | Reduce unregistered words | Natural language translation | Neural architectures | Parallel corpora | Syntax

The method addresses the low translation quality and poor translation effect of existing Mongolian-Chinese machine translation. Mongolian is a low-resource language, and collecting large Mongolian-Chinese parallel corpora is extremely difficult; the method tackles this problem by combining transfer learning with prior knowledge. Transfer learning uses existing knowledge to solve problems in different but related fields. The method comprises the following steps: first, a neural machine translation model is trained on large-scale English-Chinese parallel corpora; second, the parameter weights of that model are migrated into a Mongolian-Chinese neural machine translation framework; third, the vocabulary, syntax, and other knowledge representations learned from the large-scale corpus are fused into the Mongolian-Chinese neural machine translation model; and finally, the model is trained on the existing Mongolian-Chinese parallel corpus.

Owner:INNER MONGOLIA UNIV OF TECH
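The parameter migration step can be sketched with plain dictionaries standing in for model weights. This is an illustration under stated assumptions, not the patent's procedure: the rule "copy a parameter when its name and size match" and the layer names are hypothetical, and a real system would operate on framework tensors.

```python
def transfer_parameters(parent, child, skip_prefixes=()):
    """Copy parent weights into child wherever name and size agree.

    Both models are dicts mapping a parameter name to a list of floats.
    `skip_prefixes` is a tuple or list of name prefixes that should keep
    the child's own initialization. Returns the names that were copied.
    """
    prefixes = tuple(skip_prefixes)
    copied = []
    for name, value in parent.items():
        if prefixes and name.startswith(prefixes):
            continue
        if name in child and len(child[name]) == len(value):
            child[name] = list(value)  # copy so later updates do not alias the parent
            copied.append(name)
    return copied

# Toy English-Chinese parent and Mongolian-Chinese child (hypothetical names).
parent = {
    "encoder.embed": [0.1, 0.2, 0.3],   # English vocabulary, size differs
    "encoder.layer0": [0.5, 0.6],
    "decoder.layer0": [0.7, 0.8],
}
child = {
    "encoder.embed": [0.0, 0.0],        # Mongolian vocabulary
    "encoder.layer0": [0.0, 0.0],
    "decoder.layer0": [0.0, 0.0],
}
copied = transfer_parameters(parent, child)
```

Here the source-side embedding is left at its own initialization because the vocabularies differ, while the remaining layers start from the parent's weights before training continues on the Mongolian-Chinese corpus.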

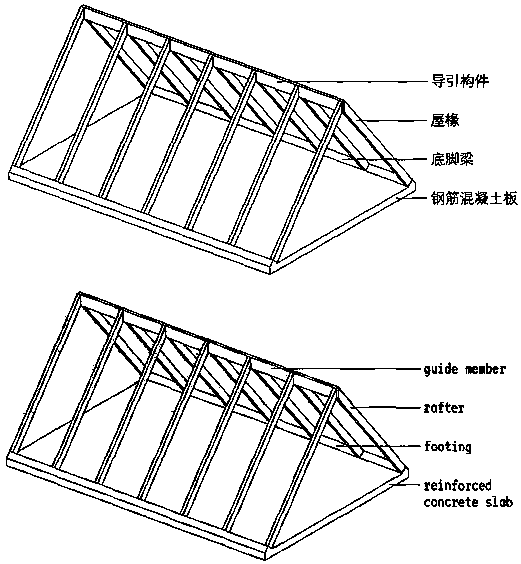

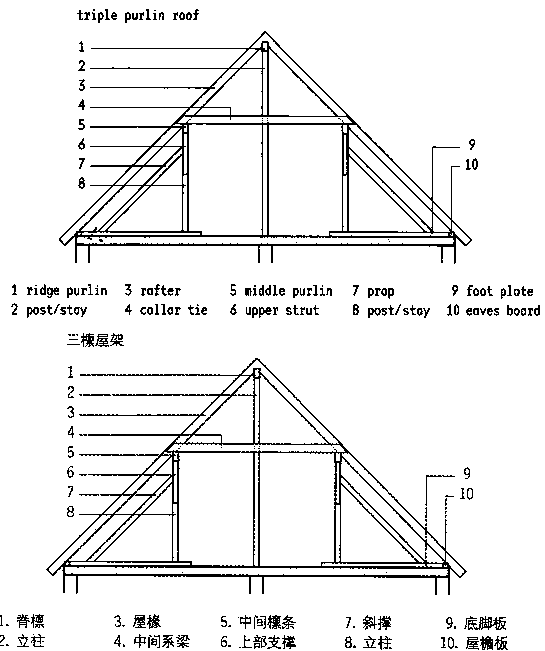

Construction method of constructional engineering multi-mode bilingual parallel corpus

Active | CN110046261A | Exact match | Reasonable design | Special data processing applications | Web data querying | Parallel corpora | Syntax

The invention belongs to the technical field of data processing, and particularly relates to a method for constructing a multi-mode bilingual parallel corpus for construction engineering. The method comprises corpus screening, corpus extraction, proofreading, corpus segmentation, alignment, and denoising to obtain a parallel corpus, followed by updating the corpus and expanding its capacity. The resulting corpus provides users with a large number of finely segmented, high-precision bilingual translation samples for construction vocabulary; the meanings of the retrieved words or syntax are all related to construction, and unrelated meanings are excluded.

Owner:SHANDONG JIANZHU UNIV
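The denoising step is not detailed in the abstract. A minimal sketch of one common approach filters aligned sentence pairs with simple heuristics: drop empty sides, badly mismatched lengths, and duplicates. The `denoise` function, the threshold, the English-Chinese language pair, and the choice of counting English in words and Chinese in characters are all assumptions made for illustration.

```python
def denoise(pairs, src_len=lambda s: len(s.split()), tgt_len=len, max_ratio=3.0):
    """Drop empty, length-mismatched, and duplicate sentence pairs.

    src_len / tgt_len measure sentence length; by default the source is
    counted in whitespace tokens and the target in characters.
    """
    seen = set()
    clean = []
    for src, tgt in pairs:
        src, tgt = src.strip(), tgt.strip()
        n_src, n_tgt = src_len(src), tgt_len(tgt)
        if n_src == 0 or n_tgt == 0:
            continue  # one side is empty
        if max(n_src, n_tgt) / min(n_src, n_tgt) > max_ratio:
            continue  # lengths too different to be a likely translation
        key = (src.lower(), tgt)
        if key in seen:
            continue  # duplicate up to casing on the source side
        seen.add(key)
        clean.append((src, tgt))
    return clean

raw = [
    ("The beam supports the floor slab.", "梁支撑楼板。"),
    ("the beam supports the floor slab.", "梁支撑楼板。"),   # duplicate
    ("Concrete", ""),                                        # empty target
    ("Click here to download the full report for free today", "柱"),  # mismatch
    ("Reinforced concrete column", "钢筋混凝土柱"),
]
clean = denoise(raw)
```

With these inputs only the first and last pairs survive. The length-ratio threshold would need tuning for the actual language pair.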

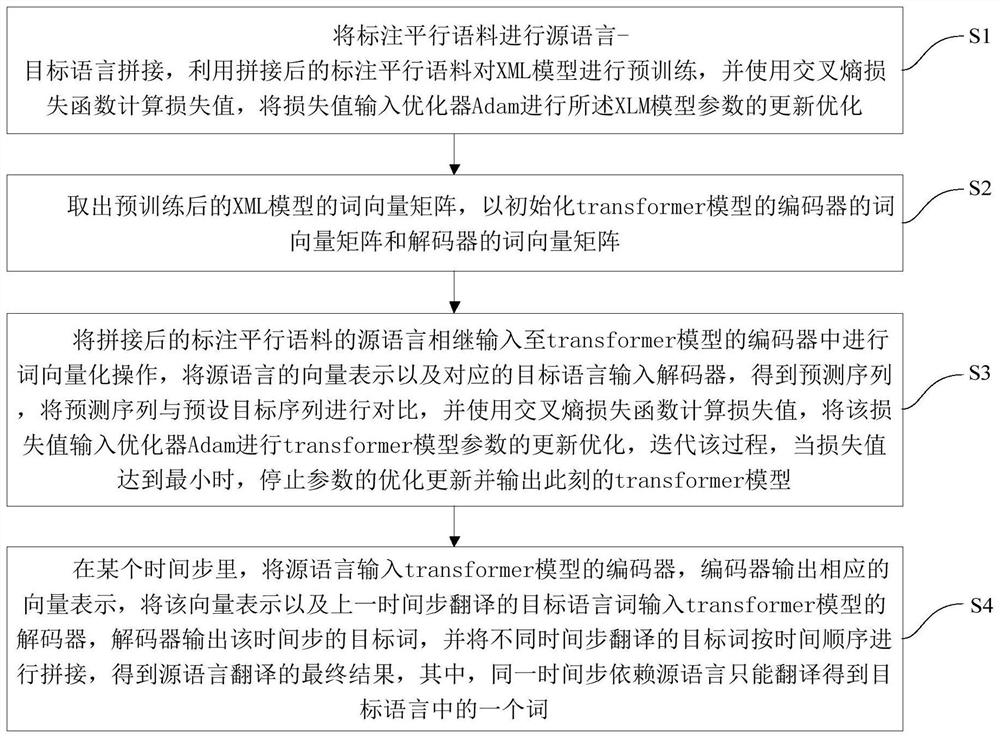

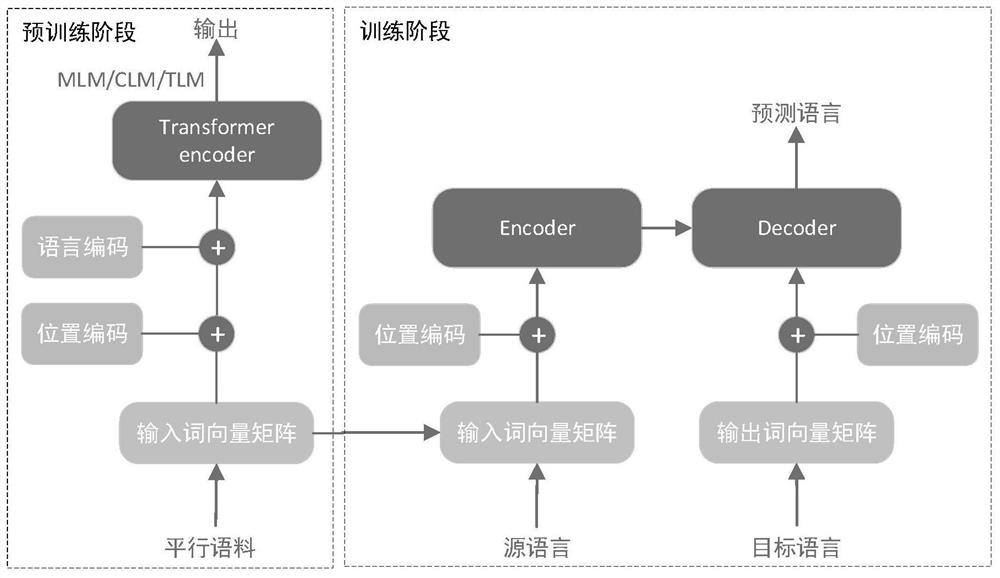

Neural machine translation method based on pre-training bilingual word vector

Inactive | CN113297841A | Improve machine translation | Natural language translation | Neural architectures | Pattern recognition | Algorithm

The invention discloses a neural machine translation method based on pre-trained bilingual word vectors. The method comprises the following steps. Pre-training: labeled and aligned parallel corpora are spliced as "source language-target language" pairs and used as input to pre-train a cross-lingual language model (XLM). Training: the bilingual word vector matrix obtained by pre-training is used to initialize the translation model; the source sentence is input into the encoder; the encoder's vector representation of the source sentence and the corresponding target sentence are input into the decoder, which outputs a predicted sequence; the predicted sequence is compared with the corresponding target sequence to calculate a loss value; and the loss value is passed to an optimizer that updates the translation model parameters. Prediction: at each time step, the source sentence is input into the optimized encoder, which outputs the corresponding vector representation; this representation and the target word translated at the previous time step are input into the decoder, which outputs the target word for the current time step; and the target words from successive time steps are concatenated in order to obtain the translation of the source sentence. The method improves machine translation of low-resource languages.

Owner:HARBIN INST OF TECH
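The "source language-target language" splicing can be sketched as building one input sequence from an aligned sentence pair. The layout below follows the translation language modeling setup described for XLM (separator tokens around each sentence, a language ID per token, positions restarted for the target sentence). Whether the patent uses exactly this layout is an assumption, and `build_tlm_input` and the sample tokens are hypothetical.

```python
def build_tlm_input(src_tokens, tgt_tokens, src_lang, tgt_lang, sep="</s>"):
    """Concatenate a sentence pair into one sequence with language and position ids."""
    src = [sep] + list(src_tokens) + [sep]
    tgt = [sep] + list(tgt_tokens) + [sep]
    tokens = src + tgt
    langs = [src_lang] * len(src) + [tgt_lang] * len(tgt)
    # Positions restart at 0 for the target sentence.
    positions = list(range(len(src))) + list(range(len(tgt)))
    return tokens, langs, positions

tokens, langs, positions = build_tlm_input(
    ["good", "morning"], ["bonjour"], src_lang="en", tgt_lang="fr"
)
```

Masking a token in one half of such a sequence lets the model use the other language as context, which is how the spliced input encourages aligned bilingual representations.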

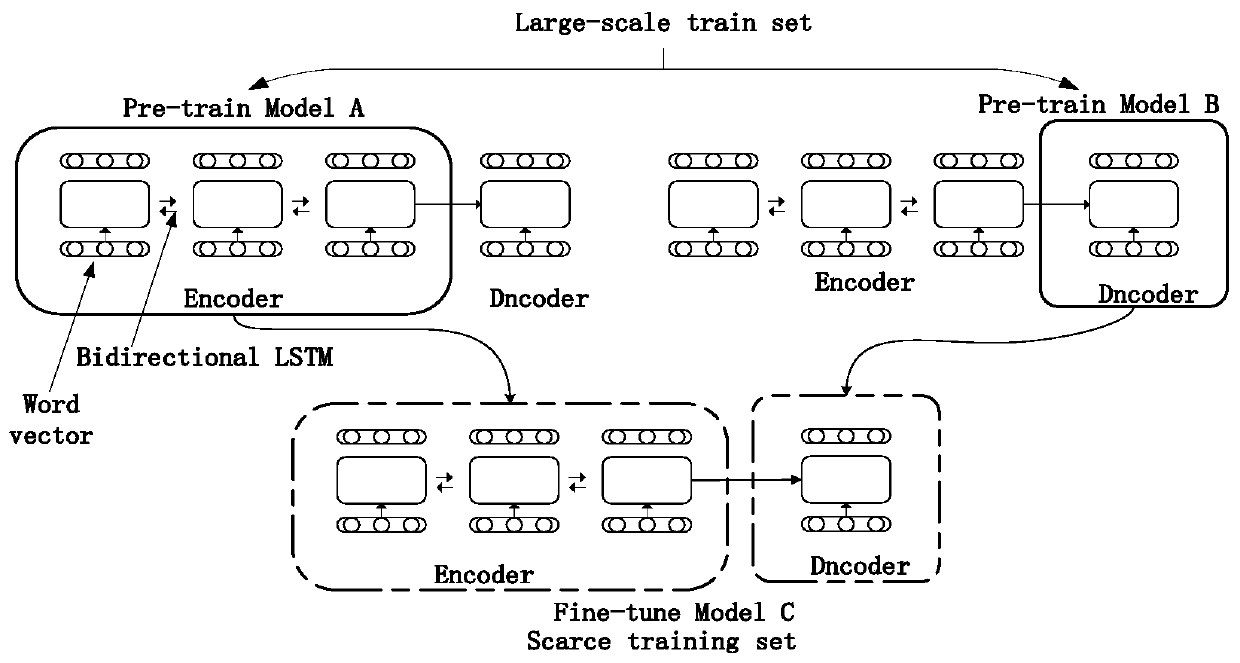

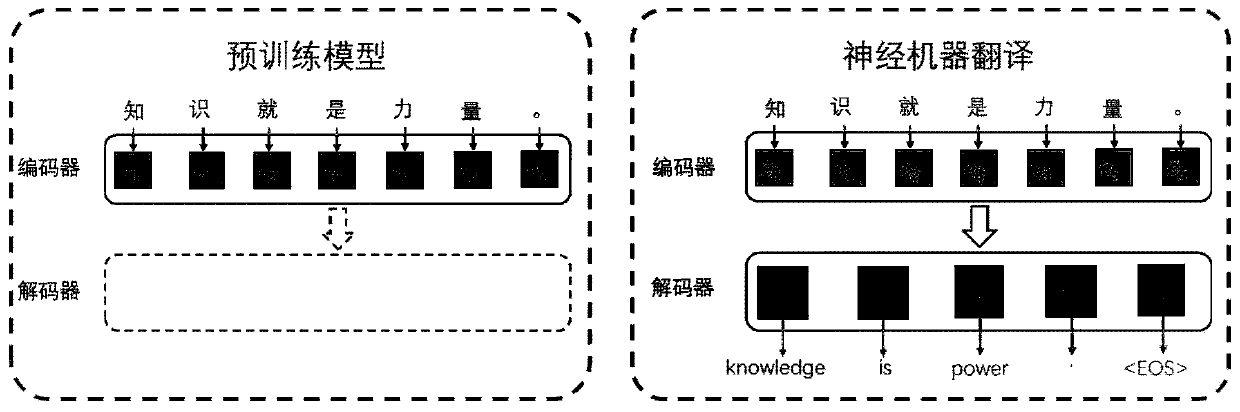

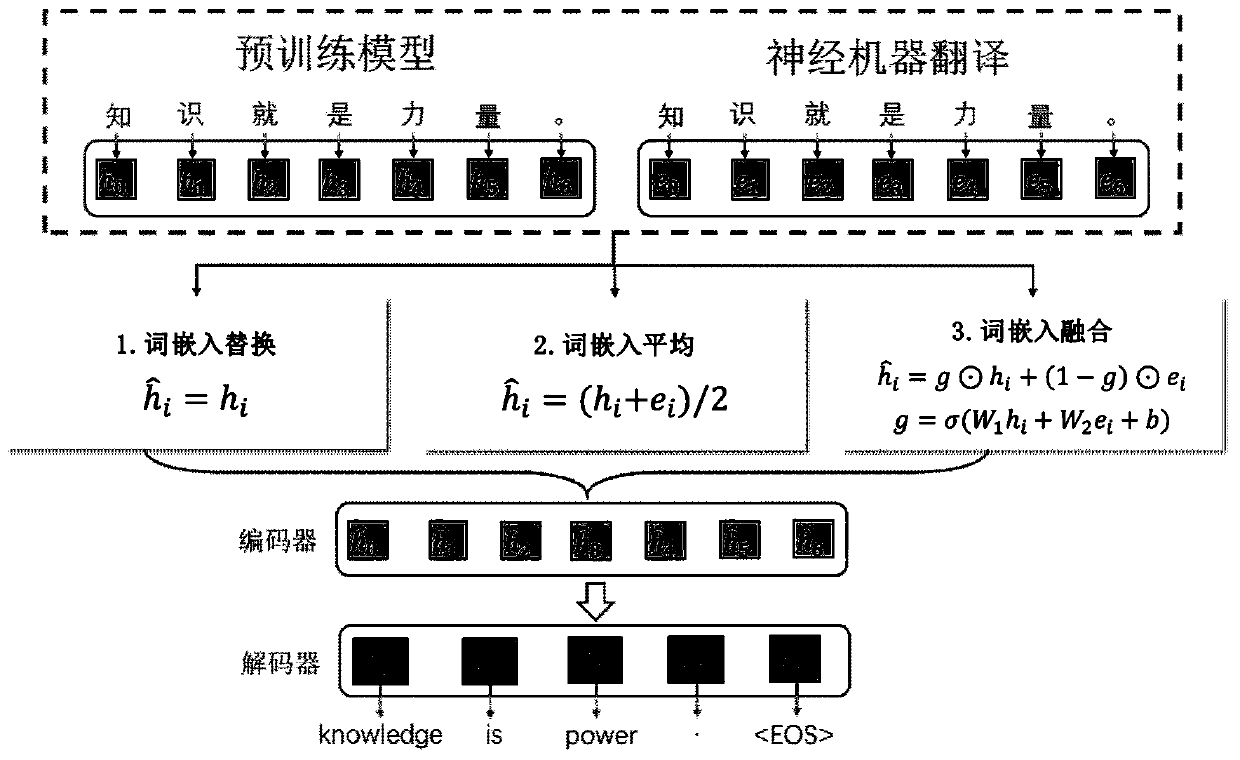

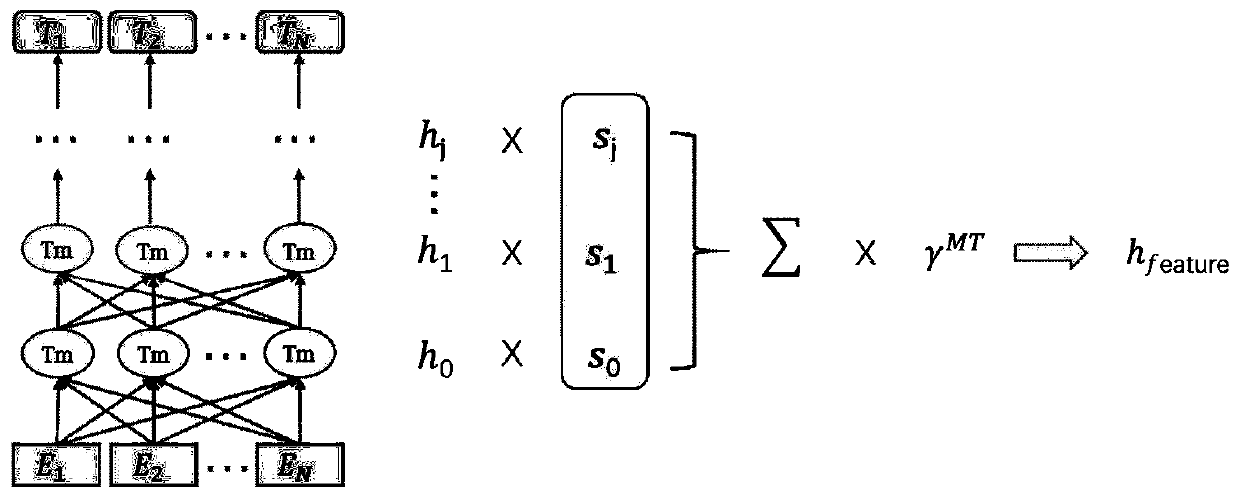

Scarce resource neural machine translation training method based on pre-training

Active | CN111178094A | Avoid polysemy problems that embedding cannot resolve | Simplify the training process | Natural language translation | Neural architectures | Hidden layer | Algorithm

The invention discloses a pre-training-based neural machine translation training method for scarce-resource languages, which comprises the following steps: constructing massive monolingual corpora, applying word segmentation and subword segmentation, and pre-training a language model on them to obtain converged model parameters; constructing parallel corpora, randomly initializing the parameters of a neural machine translation model, and setting the sizes of its word embedding layer and hidden layer to match those of the pre-trained language model; integrating the pre-trained model into the neural machine translation model; training the neural machine translation model on the parallel corpora so that the generated target sentences become more similar to the real translations, which completes the training process; and feeding the source sentence input by the user into the neural machine translation model, which generates the translation by greedy search or beam search. According to the method, knowledge in the monolingual data is fully utilized, and translation performance is markedly better than that of a randomly initialized neural machine translation model.

Owner:沈阳雅译网络技术有限公司
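The difference between greedy search and beam search at inference time can be shown with a small sketch. The `next_probs` callback stands in for the trained translation model and is purely hypothetical, as is the toy probability table; the sketch applies no length normalization.

```python
import math

def beam_search(next_probs, beam_size=2, max_len=5, eos="</s>"):
    """Return (tokens, log_prob) of the best finished sequence.

    next_probs(prefix) maps a tuple of tokens to {token: probability}.
    beam_size=1 reduces to greedy search.
    """
    beams = [((), 0.0)]  # (prefix, accumulated log-probability)
    finished = []
    for _ in range(max_len):
        candidates = []
        for prefix, score in beams:
            for token, p in next_probs(prefix).items():
                if p <= 0:
                    continue
                cand = (prefix + (token,), score + math.log(p))
                if token == eos:
                    finished.append(cand)
                else:
                    candidates.append(cand)
        if not candidates:
            break
        candidates.sort(key=lambda c: c[1], reverse=True)
        beams = candidates[:beam_size]  # keep only the best partial hypotheses
    pool = finished or beams
    return max(pool, key=lambda c: c[1])

def toy_model(prefix):
    """Toy next-token distribution where the greedy first choice is a trap."""
    table = {
        (): {"a": 0.6, "b": 0.4},
        ("a",): {"x": 0.5, "y": 0.5},
        ("b",): {"z": 0.9, "x": 0.1},
    }
    return table.get(prefix, {"</s>": 1.0})

greedy = beam_search(toy_model, beam_size=1)
beam = beam_search(toy_model, beam_size=2)
```

Greedy search commits to `a` (probability 0.6) and ends with a sequence of total probability 0.30, while a beam of two keeps `b` alive and finds `b z` with probability 0.36.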

Mongolian-Chinese machine translation system based on byte pair coding technology

Inactive | CN110674646A | Reduce the number of unregistered words | Solve the problem of unregistered words | Natural language translation | Neural architectures | Algorithm | Machine translation system

The invention discloses a Mongolian-Chinese machine translation system based on byte pair encoding (BPE). First, the English-Chinese and Mongolian-Chinese parallel corpora are preprocessed with BPE: English, Mongolian, and Chinese words are all split into single characters, the frequency of adjacent character pairs is counted within word boundaries, and the most frequent pair is recorded as a merge in each iteration until the set number of iterations is reached. Second, a neural machine translation model is trained on the preprocessed English-Chinese parallel corpus. Then, the parameter weights of that model are migrated into a Mongolian-Chinese neural machine translation framework, and a model is trained on the preprocessed Mongolian-Chinese parallel corpus to obtain a prototype Mongolian-Chinese neural machine translation system based on BPE. Finally, the BLEU score of the system's translations is compared with that of statistical machine translation to evaluate the improvement in Mongolian-Chinese machine translation performance.

Owner:INNER MONGOLIA UNIV OF TECH
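The BPE preprocessing loop described above (split words into characters, count adjacent pairs within words, merge the most frequent pair, repeat for a fixed number of iterations) can be sketched as follows. This is a minimal illustration of the standard BPE learning procedure, not the patent's implementation; `learn_bpe`, the end-of-word marker `</w>`, and the toy word frequencies are assumptions.

```python
from collections import Counter

def learn_bpe(word_freqs, num_merges):
    """Learn BPE merge operations from a {word: frequency} dict."""
    # Each word starts as a tuple of characters plus an end-of-word marker.
    vocab = {tuple(word) + ("</w>",): freq for word, freq in word_freqs.items()}
    merges = []
    for _ in range(num_merges):
        # Count adjacent symbol pairs, never crossing word boundaries.
        pairs = Counter()
        for symbols, freq in vocab.items():
            for pair in zip(symbols, symbols[1:]):
                pairs[pair] += freq
        if not pairs:
            break
        best = max(pairs, key=pairs.get)
        merges.append(best)
        # Rewrite every word with the best pair fused into one symbol.
        new_vocab = {}
        for symbols, freq in vocab.items():
            merged, i = [], 0
            while i < len(symbols):
                if i + 1 < len(symbols) and (symbols[i], symbols[i + 1]) == best:
                    merged.append(symbols[i] + symbols[i + 1])
                    i += 2
                else:
                    merged.append(symbols[i])
                    i += 1
            key = tuple(merged)
            new_vocab[key] = new_vocab.get(key, 0) + freq
        vocab = new_vocab
    return merges, vocab

merges, vocab = learn_bpe({"low": 5, "lower": 2, "newest": 6, "widest": 3}, 3)
```

On this toy vocabulary the first three merges build the suffix `est</w>`, so rare words ending in that suffix can be represented by known subword units instead of an unregistered-word token.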
