Pre-training-based training method for scarce-resource neural machine translation

A training method for machine translation, applied in the field of neural machine translation training, that addresses the poor translation quality of neural machine translation models, avoids polysemy problems, simplifies the training process, and improves robustness.

Embodiment Construction

[0052] The present invention is described in further detail below with reference to the accompanying drawings.

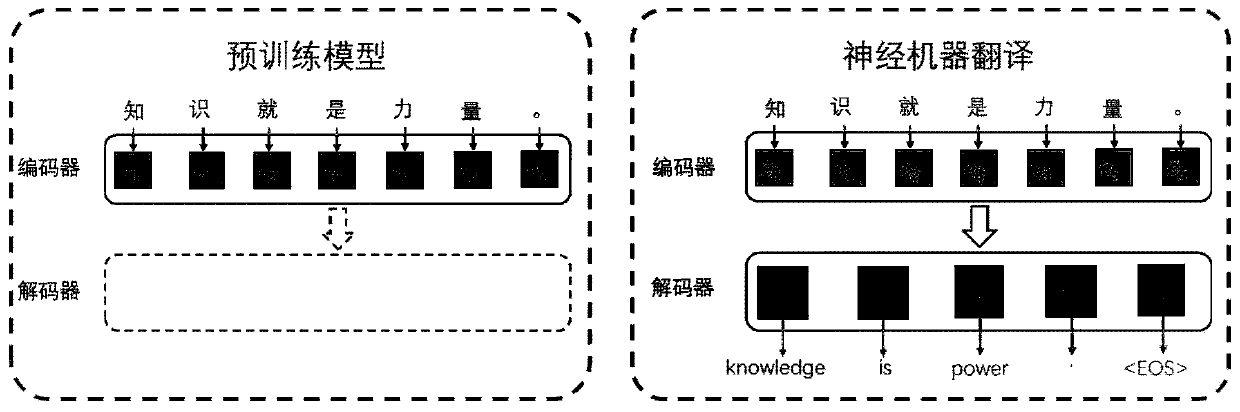

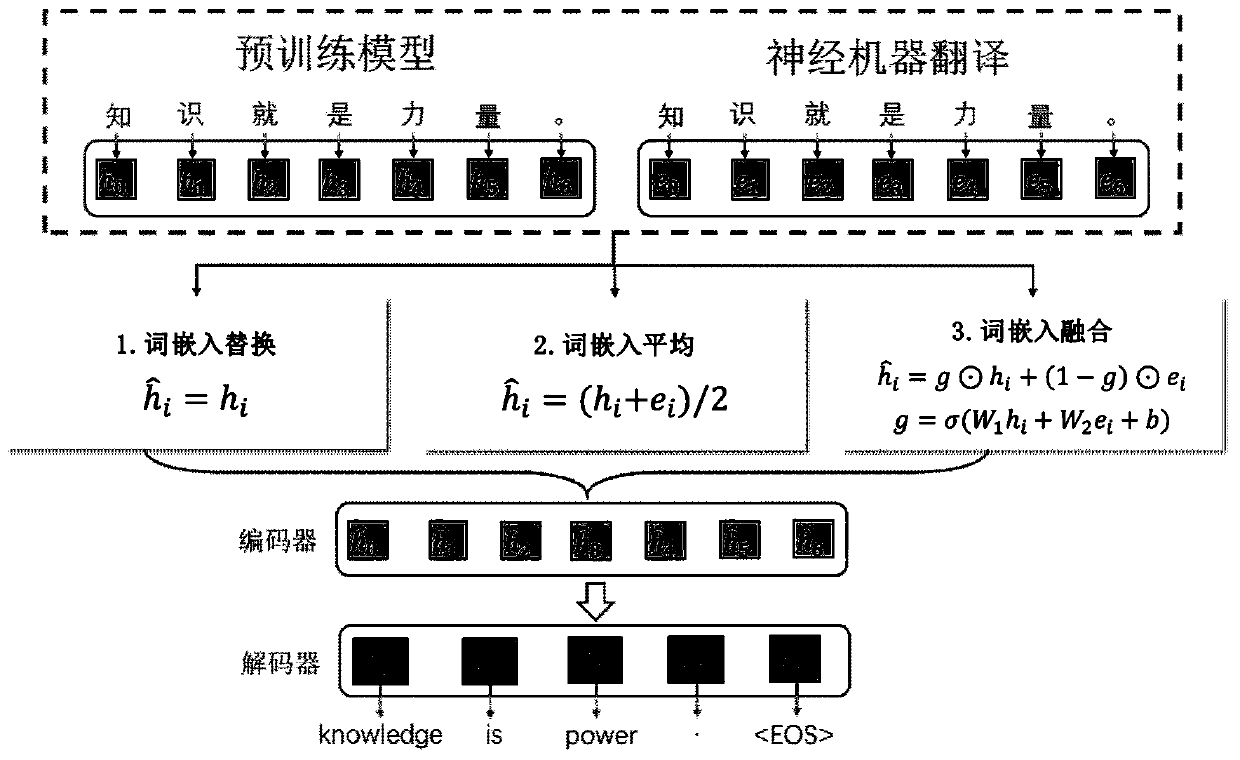

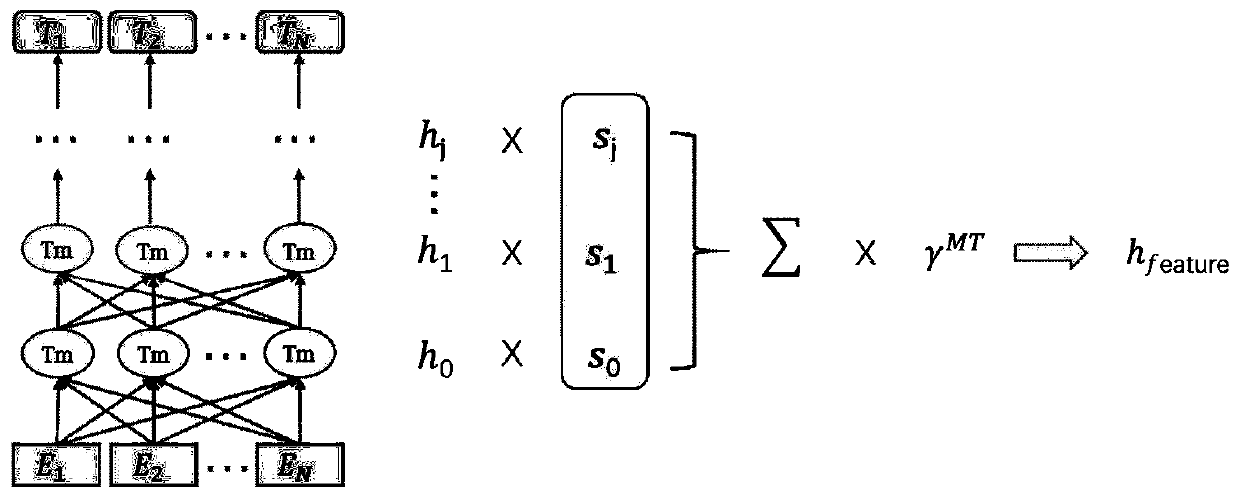

[0053] The method of the invention optimizes the training of scarce-resource machine translation by integrating knowledge from a pre-trained model. Without adding bilingual data, it pre-trains a language model on massive monolingual data and integrates the information of the pre-trained model into the neural machine translation model, aiming to reduce the dependence of machine translation on bilingual corpora and to achieve high-quality translation in scarce-resource scenarios.

[0054] The present invention proposes a pre-training-based training method for scarce-resource machine translation, comprising the following steps:

[0055] 1) Construct a massive monolingual corpus, perform word segmentation and sub-word segmentation as preprocessing, and use the monolingual corpus to pre-train a language model base...
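The sub-word segmentation named in step 1) can be illustrated with a small sketch. The text does not state which sub-word algorithm is used, so byte-pair encoding (BPE) is assumed here purely for illustration. The function names `learn_bpe`, `merge_pair`, and `segment` are hypothetical, and the input is assumed to be already word-segmented.

```python
from collections import Counter


def merge_pair(symbols, pair):
    """Replace every adjacent occurrence of `pair` in `symbols` with one merged symbol."""
    out = []
    i = 0
    while i < len(symbols):
        if i + 1 < len(symbols) and (symbols[i], symbols[i + 1]) == pair:
            out.append(symbols[i] + symbols[i + 1])
            i += 2
        else:
            out.append(symbols[i])
            i += 1
    return tuple(out)


def learn_bpe(words, num_merges):
    """Learn up to `num_merges` BPE merges from a list of word-segmented tokens.

    Assumption: BPE stands in for the unspecified sub-word method of step 1).
    """
    # Each word starts as a sequence of characters plus an end-of-word marker.
    vocab = Counter(tuple(w) + ("</w>",) for w in words)
    merges = []
    for _ in range(num_merges):
        # Count how often each adjacent symbol pair occurs, weighted by word frequency.
        pairs = Counter()
        for symbols, freq in vocab.items():
            for a, b in zip(symbols, symbols[1:]):
                pairs[(a, b)] += freq
        if not pairs:
            break  # every word is already a single symbol
        # Pick the most frequent pair; ties are broken by the pair itself for determinism.
        best = max(pairs, key=lambda p: (pairs[p], p))
        merges.append(best)
        new_vocab = Counter()
        for symbols, freq in vocab.items():
            new_vocab[merge_pair(symbols, best)] += freq
        vocab = new_vocab
    return merges


def segment(word, merges):
    """Split one word into sub-word units by replaying the learned merges in order."""
    symbols = tuple(word) + ("</w>",)
    for pair in merges:
        symbols = merge_pair(symbols, pair)
    return list(symbols)


if __name__ == "__main__":
    corpus = "low low lower lowest newer newest".split()
    merges = learn_bpe(corpus, num_merges=10)
    print(segment("lowest", merges))
    print(segment("newer", merges))
```

In a pipeline of this kind, the merges would be learned once on the monolingual corpus and then applied identically to the pre-training data and to the bilingual data, so that the language model and the translation model share one sub-word vocabulary.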
