Information processing method and device and storage medium

An information processing method, an information processing device, and a storage medium, applied in the fields of electrical digital data processing, special-purpose data processing applications, natural language data processing, and related areas. The technology addresses two problems: word embedding initialization schemes are highly random, and word embedding training takes a long time.


AI Technical Summary

Problems solved by technology

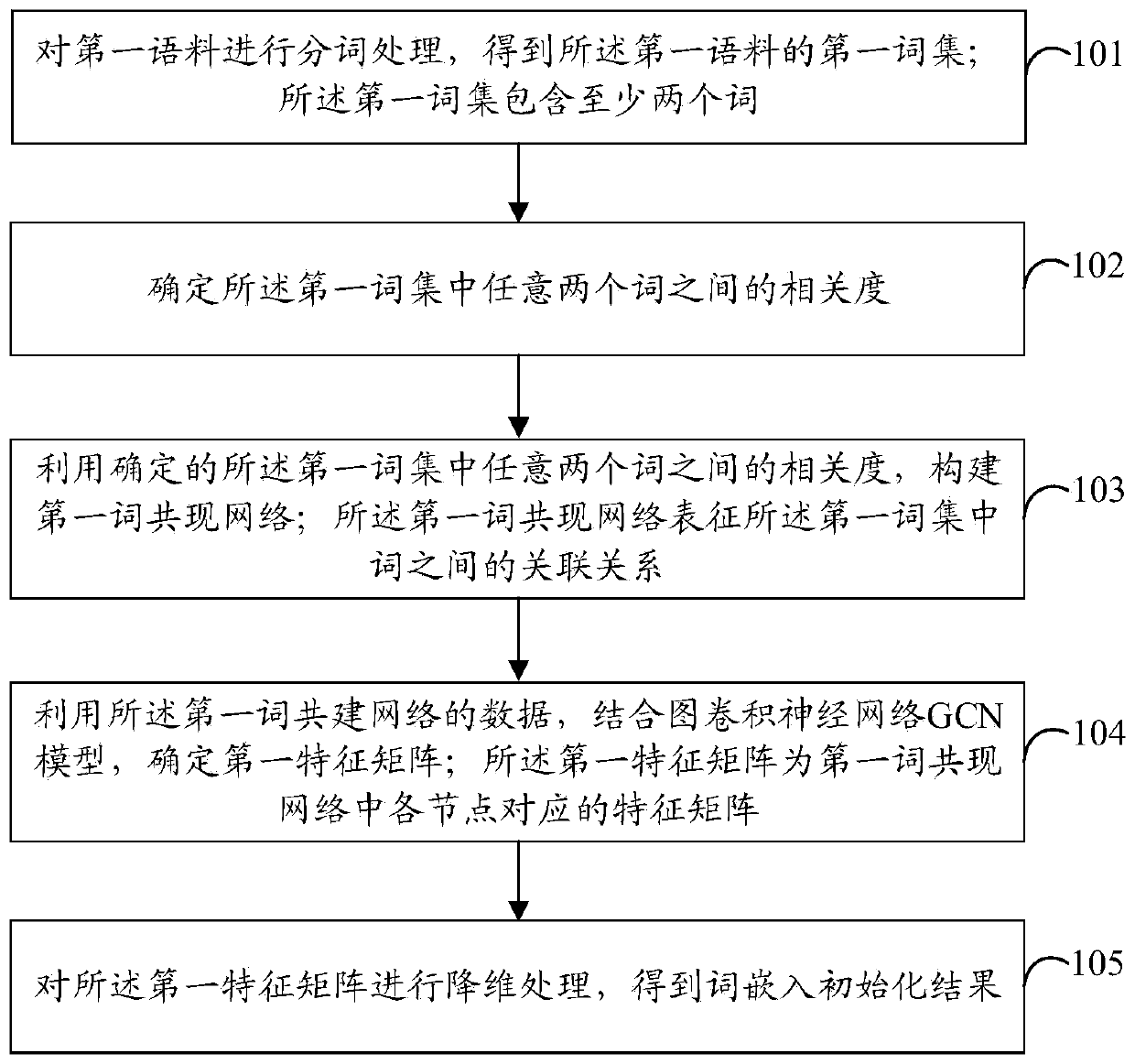

Method used

Image

Examples

Embodiment Construction

[0041] To make the objectives, technical solutions, and advantages of the embodiments of the present invention clearer, the technical solutions in these embodiments are described clearly and completely below with reference to the accompanying drawings. Obviously, the described embodiments are only some, rather than all, of the embodiments of the present invention.

[0042] In related technologies, work on word embedding models and their training focuses on designing the network structure and loss function that, starting from randomly initialized word embeddings, yield satisfactory embedding-layer parameters. For example, the network structure in Word2Vec either uses the context vectors to predict the center-word vector or uses the center-word vector to predict the context vectors, while the network structure in BERT or XLNet uses the visible words to predict hidden words or predict the n...
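The related-art training setups described above can be illustrated with a minimal sketch. It is not the method claimed in this patent. It shows (1) a random initialization of an embedding table, where different seeds give different starting points, (2) Word2Vec-style training pairs in both directions (context predicts center word, and center word predicts context), and (3) a simplified masking step in which visible words are kept and hidden words become prediction targets. The function names, the `window` parameter, the `[MASK]` token, and the uniform range `[-0.5/dim, 0.5/dim)` are illustrative assumptions, not details taken from the source.

```python
import random
from typing import Dict, List, Tuple


def init_embeddings(vocab_size: int, dim: int, seed: int) -> List[List[float]]:
    """Randomly initialize an embedding table, uniform in [-0.5/dim, 0.5/dim).

    Hypothetical helper: the range is an assumed convention. A different
    seed yields a different table, which is the randomness of the
    initialization that the text refers to.
    """
    rng = random.Random(seed)
    return [[(rng.random() - 0.5) / dim for _ in range(dim)] for _ in range(vocab_size)]


def skipgram_pairs(tokens: List[str], window: int) -> List[Tuple[str, str]]:
    """Center word -> each context word within `window` positions (skip-gram direction)."""
    pairs = []
    for i, center in enumerate(tokens):
        lo, hi = max(0, i - window), min(len(tokens), i + window + 1)
        for j in range(lo, hi):
            if j != i:
                pairs.append((center, tokens[j]))
    return pairs


def cbow_pairs(tokens: List[str], window: int) -> List[Tuple[List[str], str]]:
    """All context words within `window` positions -> center word (CBOW direction)."""
    pairs = []
    for i, center in enumerate(tokens):
        lo, hi = max(0, i - window), min(len(tokens), i + window + 1)
        context = [tokens[j] for j in range(lo, hi) if j != i]
        pairs.append((context, center))
    return pairs


def mask_tokens(
    tokens: List[str], positions: List[int], mask_token: str = "[MASK]"
) -> Tuple[List[str], Dict[int, str]]:
    """Hide the words at `positions`; a model would predict them from the visible words.

    Simplified, deterministic masking (hypothetical helper): real masked
    language models choose positions at random and may apply extra rules.
    """
    masked = list(tokens)
    targets: Dict[int, str] = {}
    for p in positions:
        targets[p] = masked[p]
        masked[p] = mask_token
    return masked, targets


if __name__ == "__main__":
    sentence = ["the", "cat", "sat"]
    print(skipgram_pairs(sentence, window=1))
    print(cbow_pairs(sentence, window=1))
    print(mask_tokens(sentence, positions=[1]))
```

For `["the", "cat", "sat"]` with `window=1`, the skip-gram direction yields `("cat", "the")` and `("cat", "sat")` for the middle word, while the CBOW direction yields the single example `(["the", "sat"], "cat")`. In every case the embedding table those pairs train starts from a seed-dependent random state, which is the starting point the patent identifies as a problem.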

