Method and system for concurrent I/O scheduling within partitions on the data server side

A scheduling method for a data server, applied in the computer field, that addresses problems such as the poor performance of request execution units.

Embodiment Construction

[0054] The specific embodiments of the present invention will be described in detail below with reference to the accompanying drawings.

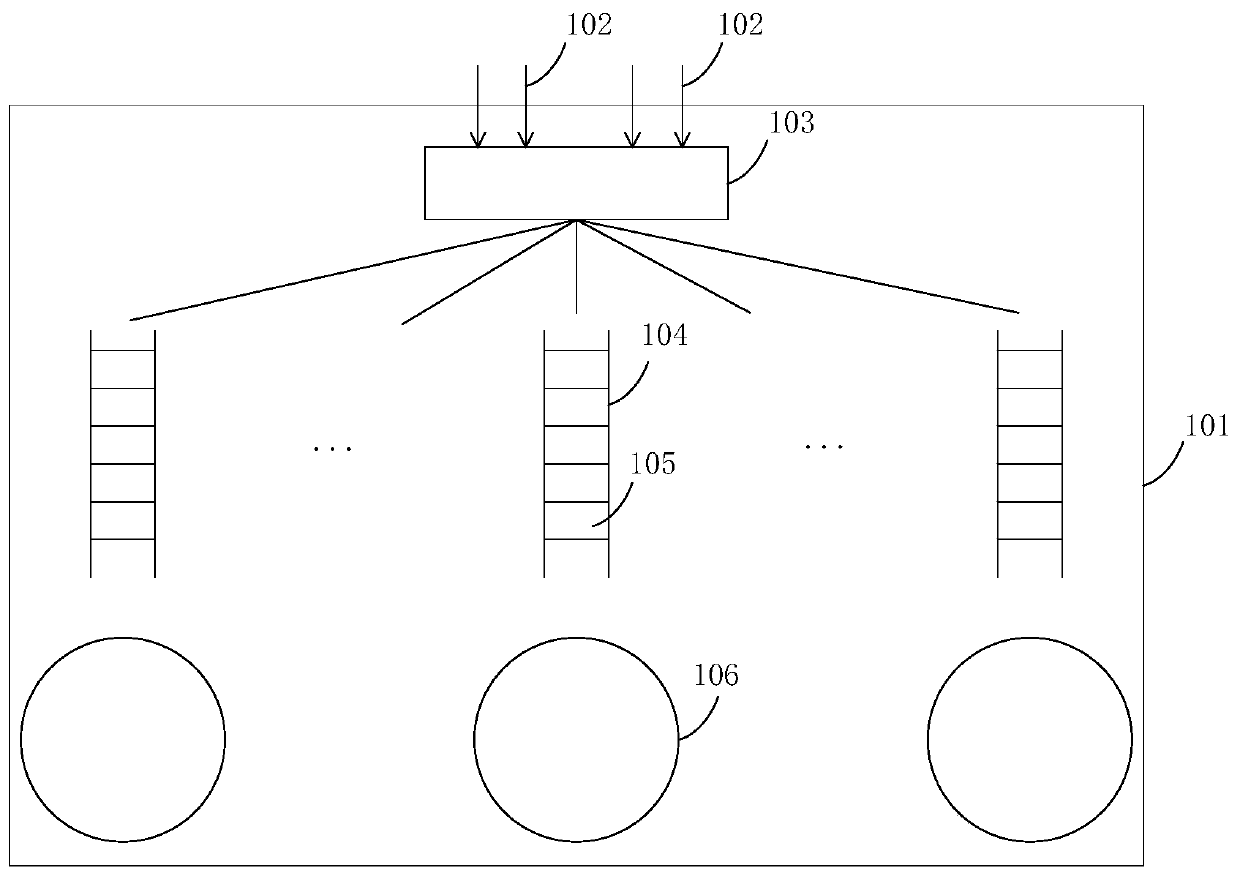

[0055] Figure 1 is a schematic diagram of how a data server in a prior-art storage system processes IO requests received from the network. The data server 101 receives a network IO request 102, which the IO request distributor 103 dispatches into the corresponding request queue 104. To ensure data consistency, the IO request distributor 103 guarantees that IO requests for the same file, or for the same fragment of the same file, enter the same request queue. The requests 105 in each request queue are organized in FIFO order. Each request queue corresponds to one request execution unit 106, which processes the requests in its queue one by one in FIFO order.
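The prior-art arrangement described in [0055] can be illustrated with a minimal sketch. It is not taken from the patent: the names `IORequest`, `Distributor`, `queue_index`, and `drain` are hypothetical, and routing by a CRC32 hash of the file and fragment is only an assumption about how "same file or same fragment goes to the same queue" might be achieved. The execution unit is modeled as a plain function rather than a thread, to keep the example deterministic.

```python
from collections import deque
from dataclasses import dataclass
import zlib


@dataclass(frozen=True)
class IORequest:
    # Hypothetical request shape: the patent only speaks of "IO requests".
    file_id: str
    fragment: int
    payload: str


class Distributor:
    """Stands in for the IO request distributor 103 (illustrative only)."""

    def __init__(self, num_queues: int) -> None:
        # One FIFO request queue (104) per execution unit (106).
        self.queues = [deque() for _ in range(num_queues)]

    def queue_index(self, req: IORequest) -> int:
        # Assumption: a stable hash of (file, fragment) selects the queue,
        # so requests for the same fragment always share one queue.
        key = f"{req.file_id}:{req.fragment}".encode()
        return zlib.crc32(key) % len(self.queues)

    def dispatch(self, req: IORequest) -> None:
        # Append at the tail to preserve arrival order within the queue.
        self.queues[self.queue_index(req)].append(req)


def drain(queue: deque) -> list:
    """Stands in for an execution unit: handle requests strictly in FIFO order."""
    processed = []
    while queue:
        processed.append(queue.popleft())
    return processed


if __name__ == "__main__":
    d = Distributor(num_queues=4)
    for r in (IORequest("a", 0, "w1"), IORequest("b", 1, "w2"), IORequest("a", 0, "w3")):
        d.dispatch(r)
    for i, q in enumerate(d.queues):
        print(i, [r.payload for r in drain(q)])
```

In this sketch, the two requests for fragment 0 of file `a` always land in the same queue and are processed in arrival order, which is the consistency property the paragraph describes. It also makes the serialization visible: a single execution unit handles everything in its queue one request at a time.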

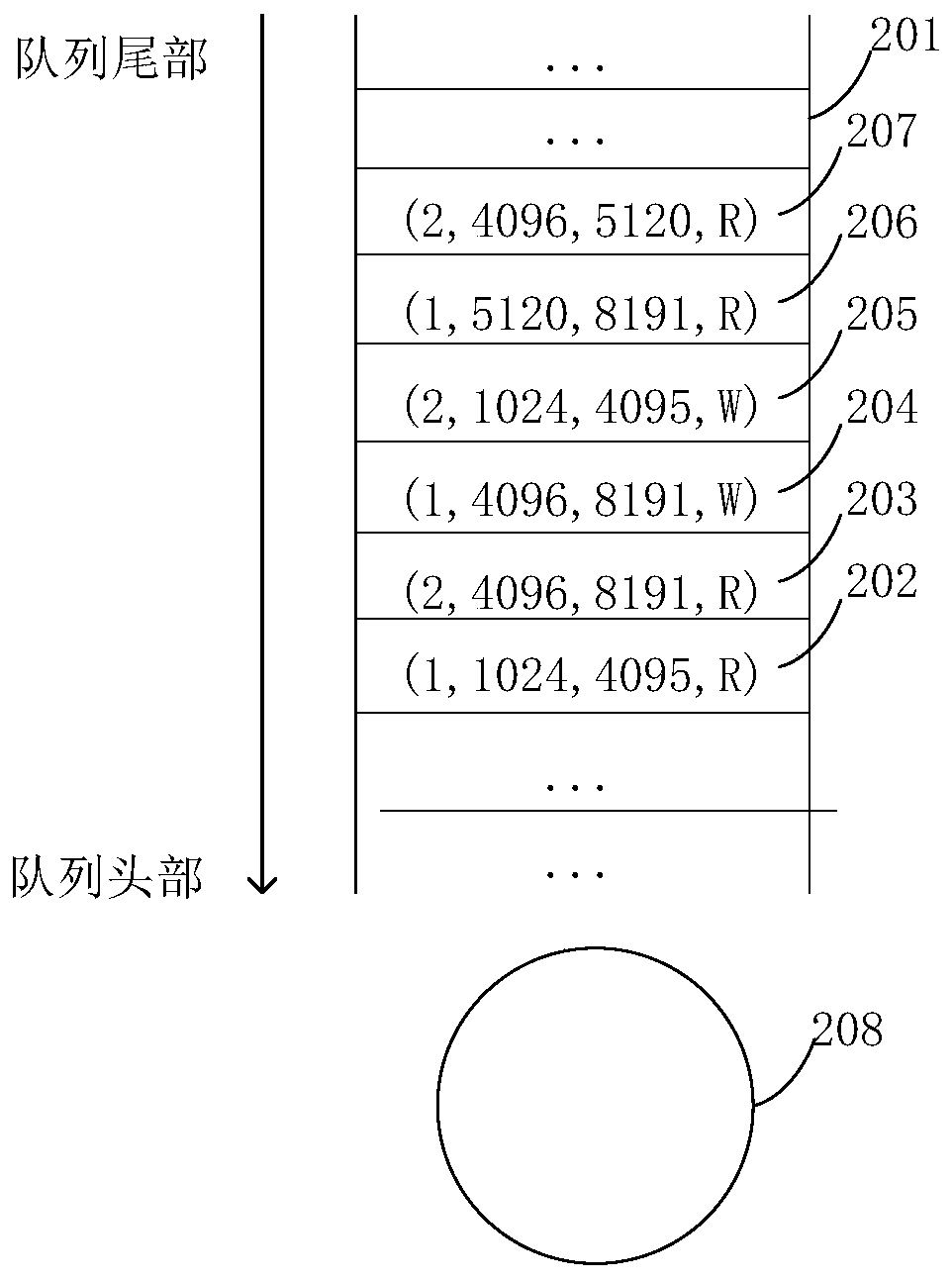

[0056] Figure 2 is a schematic diagram showing the performance problems of the existing storag...
