Parallel Access to Volatile Memory by Processing Device for Machine Learning

Processing device and machine learning technology, applied in the field of memory systems

Embodiment Construction

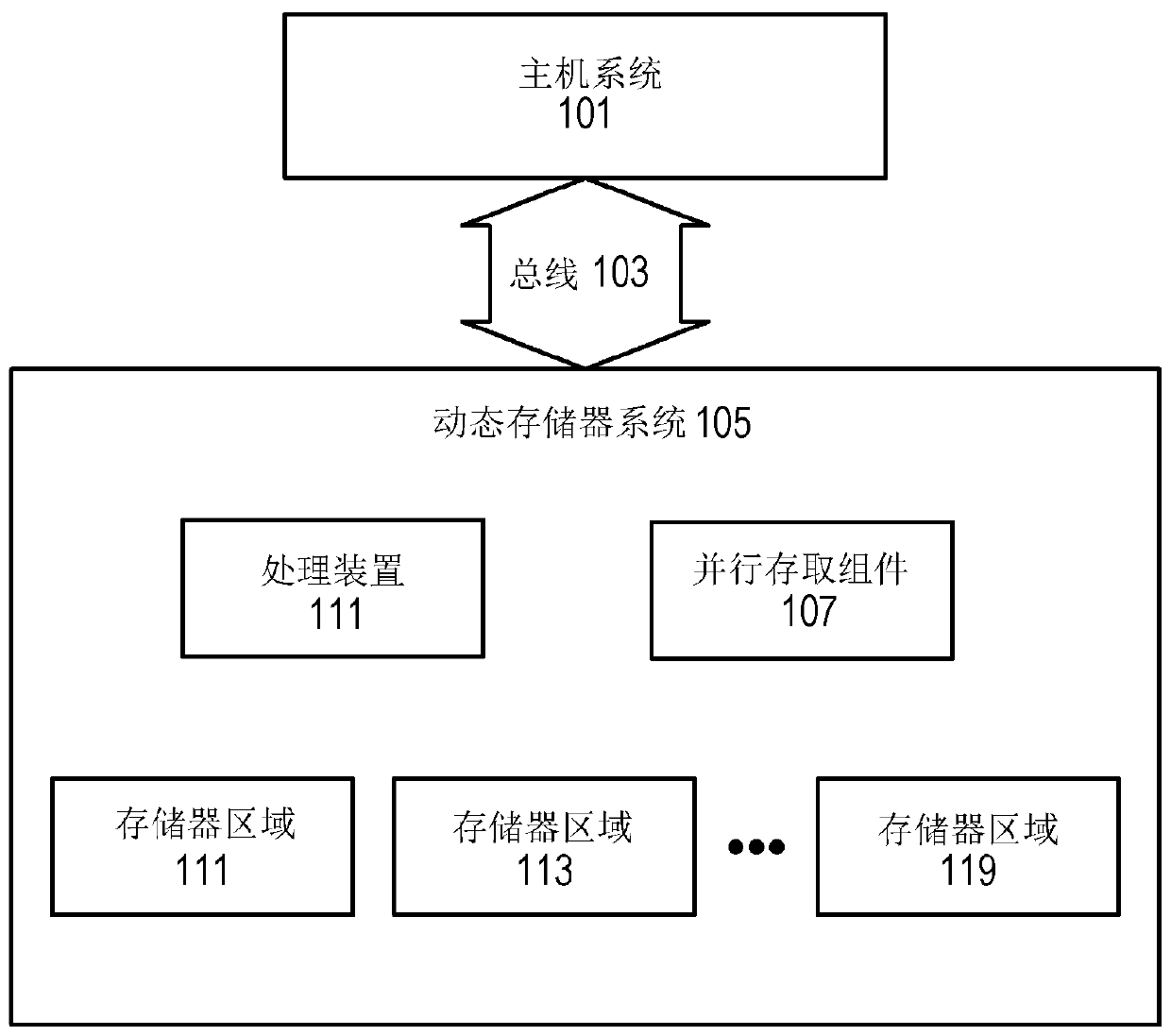

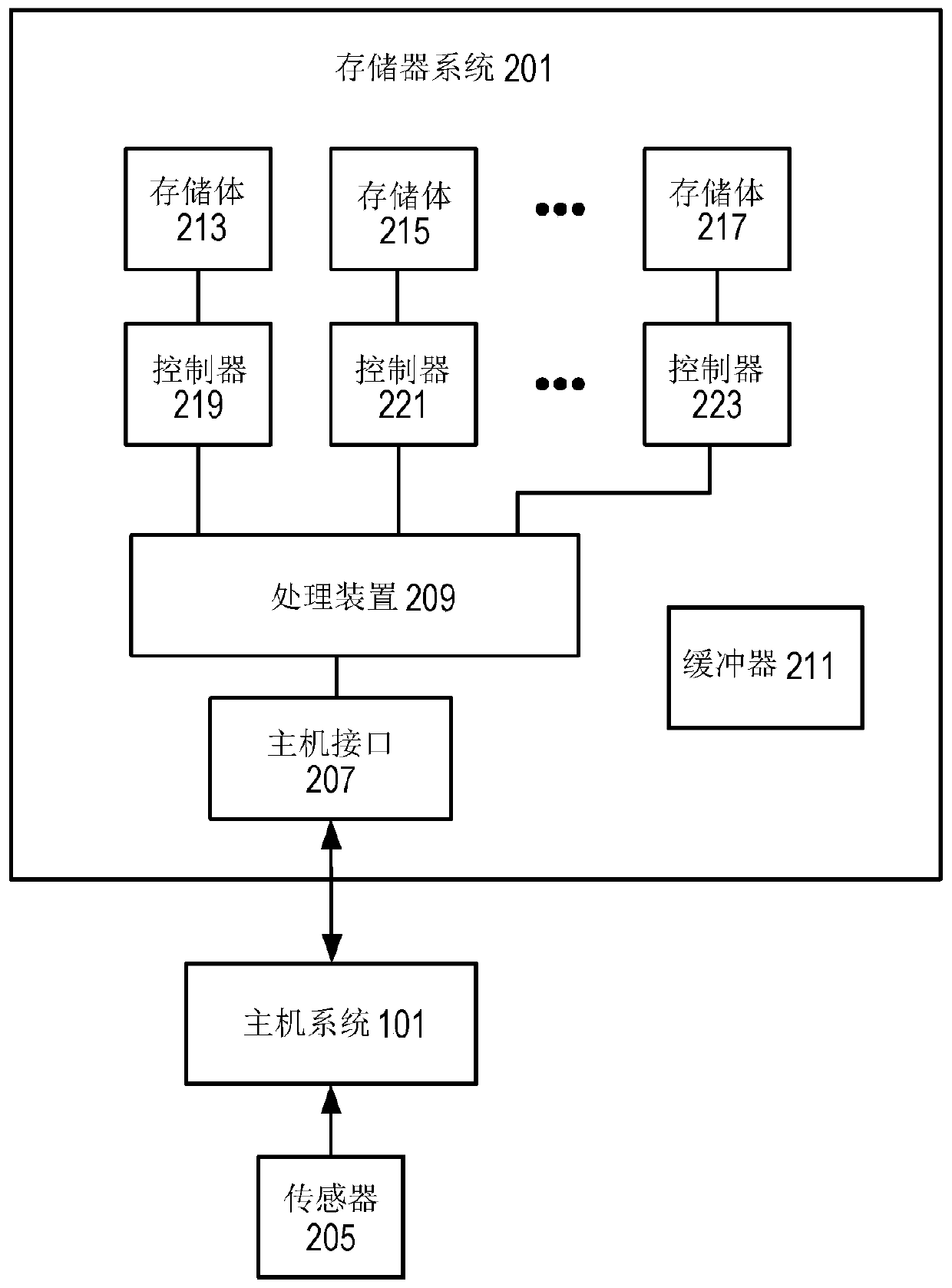

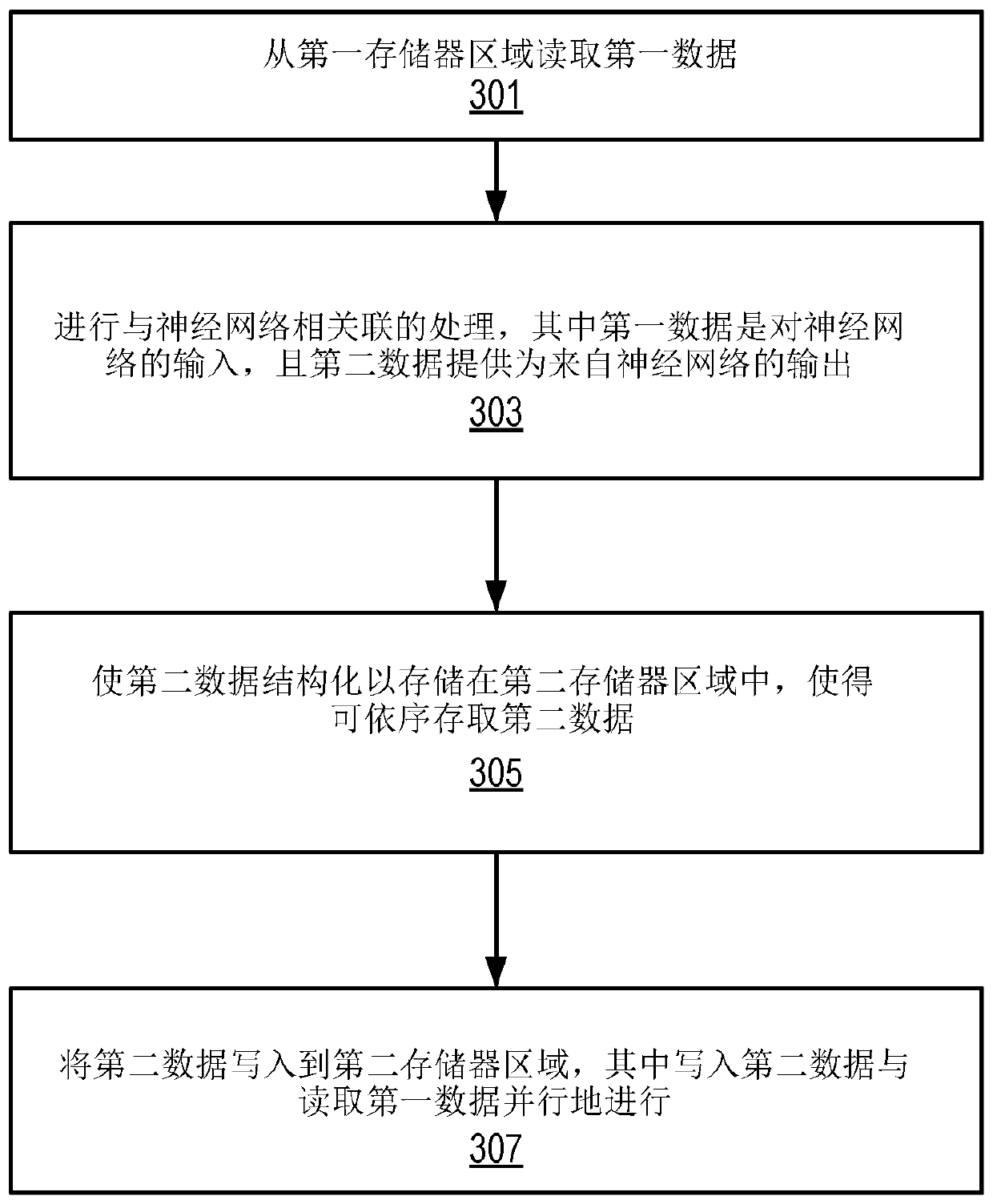

[0017] At least some aspects of the present disclosure relate to parallel access to volatile memory by a processing device that supports machine learning processing (e.g., neural networks).

[0018] Deep learning machines, such as those that support the processing of Convolutional Neural Networks (CNNs), perform an extremely large number of operations per second. For example, input/output data, deep learning network training parameters, and intermediate results are continuously fetched from and stored in one or more memory devices (e.g., DRAM). DRAM-type memories are typically used because of their cost advantage when large storage densities are involved (e.g., storage densities greater than 100 MB). In one example of a deep learning hardware system, a computing unit (e.g., a system on a chip (SoC), FPGA, CPU, or GPU) is attached to a memory device (e.g., a DRAM device).

[0019] It has been recognized that existing machine learning architectures (e.g., as used in deep learni...
