Model training method based on federated learning

A model training and federation technology, applied in the information field, can solve problems such as the inability to obtain the gradient of a single node

Specific embodiments

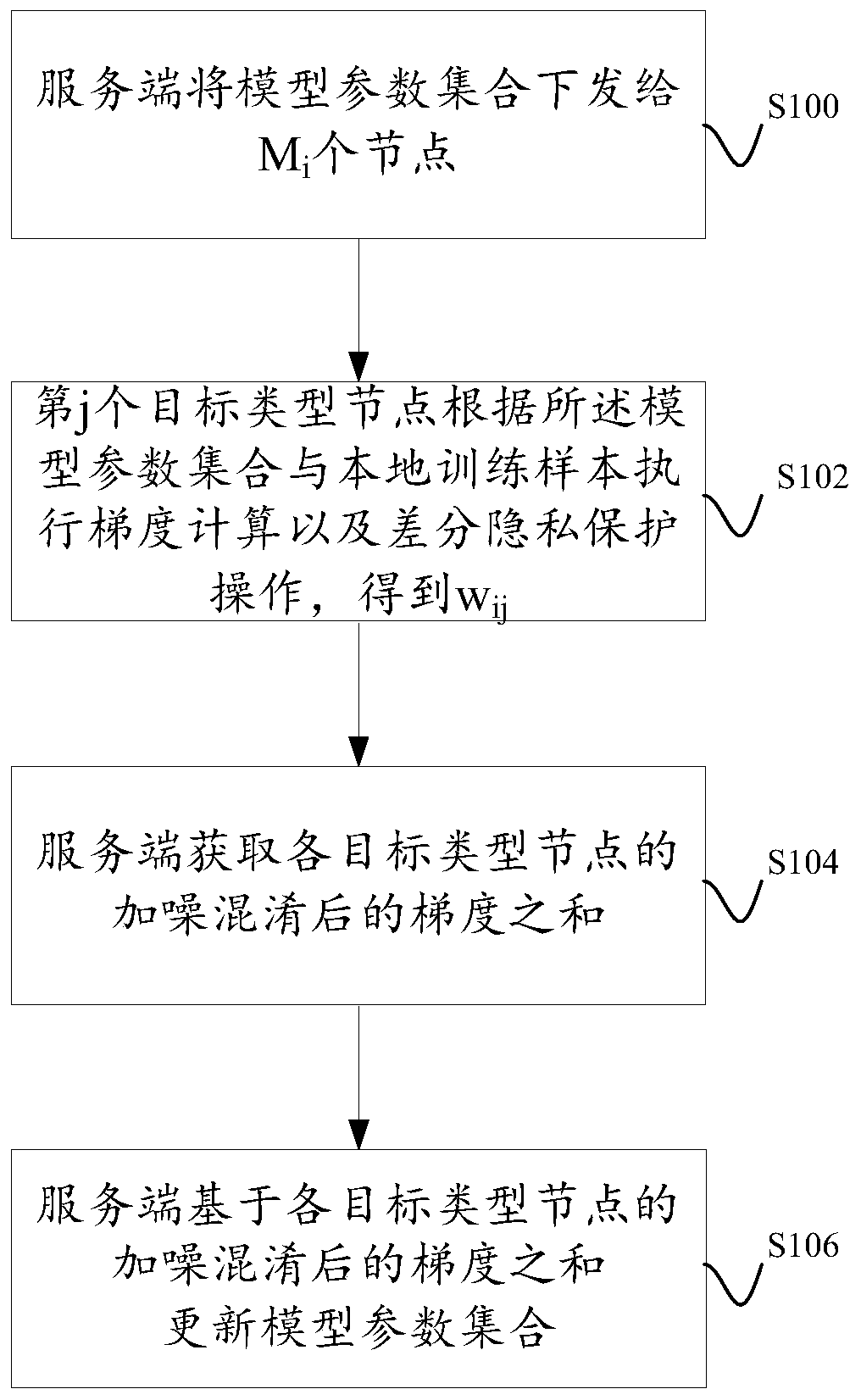

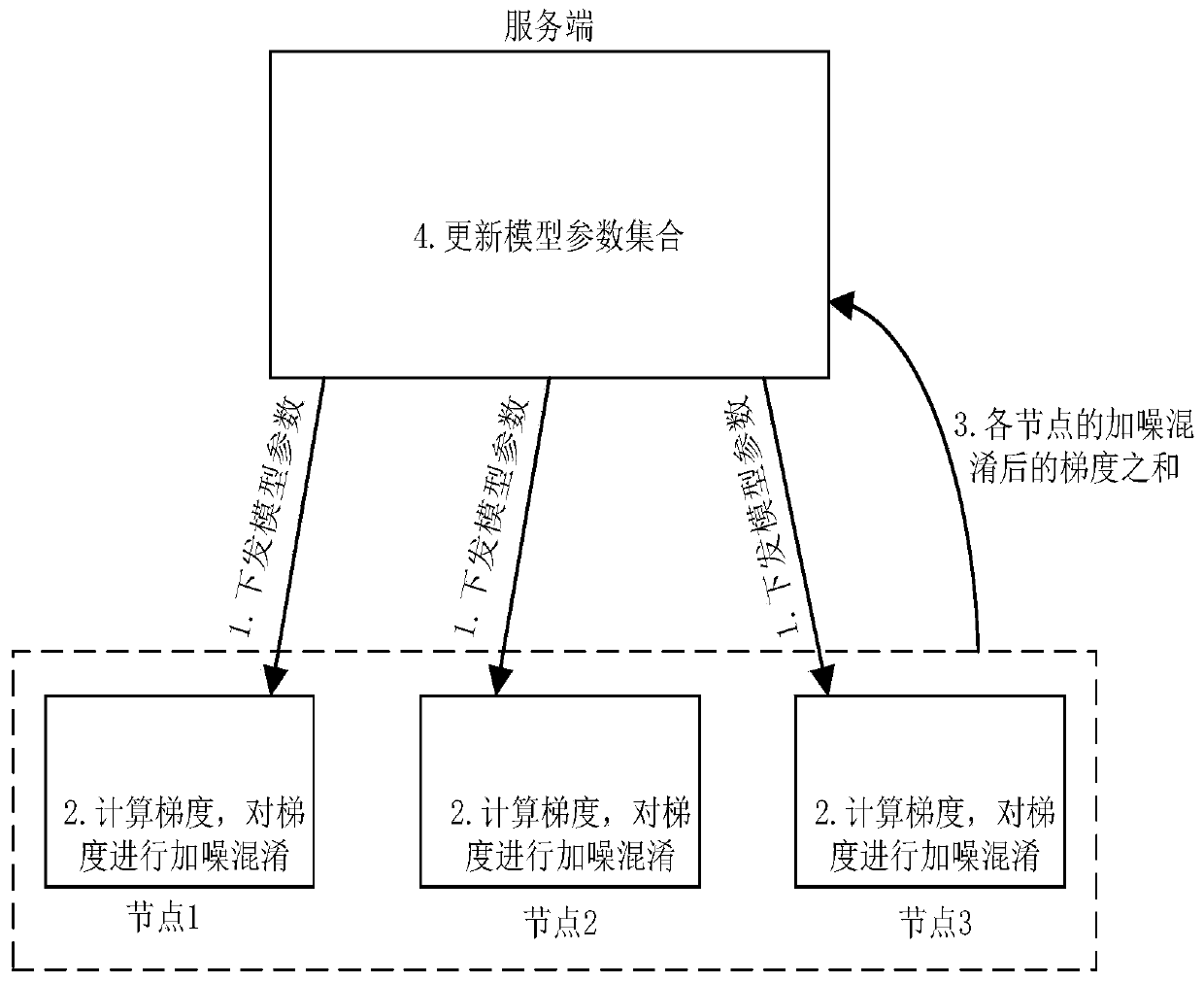

[0055] 1. The j-th target type node computes a gradient $w_{ij}^{*}$ from the model parameter set and its local training samples, then applies a differential privacy protection operation that adds a data interference term $k_{ij}$ to $w_{ij}^{*}$, yielding $w_{ij}$.

[0056] 2. The j-th target type node adds interference to the model parameter set through a differential privacy protection operation, then computes the gradient from the perturbed model parameter set and its local training samples, yielding $w_{ij}$.

[0057] 3. The j-th target type node adds interference to its local training samples through a differential privacy protection operation, then computes the gradient from the perturbed local training samples and the model parameter set, yielding $w_{ij}$.
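The three options above differ only in where the interference is injected: into the computed gradient, into the model parameter set, or into the local training samples. A minimal sketch of that difference follows. It assumes a linear model with squared-error loss and Laplace-distributed noise, neither of which the text specifies; the function names and the `scale` parameter are hypothetical.

```python
import random

def gradient(theta, samples):
    """Summed squared-error gradient of a linear model over (x, y) samples."""
    grad = [0.0] * len(theta)
    for x, y in samples:
        err = sum(t * xi for t, xi in zip(theta, x)) - y
        for n, xi in enumerate(x):
            grad[n] += err * xi
    return grad

def laplace(rng, scale):
    """Laplace(0, scale) noise as the difference of two exponential draws.

    Assumption: the source does not name a noise distribution. scale must be > 0.
    """
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)

def perturb(values, rng, scale):
    """Add independent noise to every entry of a vector."""
    return [v + laplace(rng, scale) for v in values]

def option_1(theta, samples, rng, scale):
    # Compute the exact gradient first, then add the interference term to it.
    return perturb(gradient(theta, samples), rng, scale)

def option_2(theta, samples, rng, scale):
    # Perturb the model parameter set, then compute the gradient from it.
    return gradient(perturb(theta, rng, scale), samples)

def option_3(theta, samples, rng, scale):
    # Perturb the local training samples (features and label), then compute the gradient.
    noisy = [(perturb(x, rng, scale), y + laplace(rng, scale)) for x, y in samples]
    return gradient(theta, noisy)
```

In all three cases the node uploads only the perturbed result, never the exact gradient.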

[0058] S104: The server acquires

[0059] In the embodiment of this specification, the server can obtain the Moreover, due to the restriction of the SA protocol, the ...

Embodiment approach

[0066] 2.1. From the $Q_i$ target type nodes, the server collects the sub-private key sets of at least $T_i$ target type nodes, assembles the collected sub-private key sets into a private key, and decrypts

[0067] 2.2. The server issues to at least $T_i$ target type nodes; each of these at least $T_i$ target type nodes uses its own sub-private key set to decrypt, obtains a decryption result, and uploads it to the server; the server aggregates the decryption results uploaded by the at least $T_i$ target type nodes and gets
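Step 2.1 describes a threshold property: the sub-private keys of any T_i of the Q_i nodes are enough to rebuild the private key. The text does not name the underlying scheme. The sketch below assumes Shamir secret sharing over a prime field as one well-known way to obtain that property; `PRIME`, `split_key`, and `assemble_key` are hypothetical names, and a single integer stands in for the private key.

```python
import random

# Field modulus (assumption: any prime larger than the secret would do).
PRIME = 2**127 - 1

def split_key(secret, threshold, num_nodes, rng):
    """Split `secret` into `num_nodes` shares; any `threshold` of them recover it."""
    # Random polynomial of degree threshold - 1 whose constant term is the secret.
    coeffs = [secret] + [rng.randrange(PRIME) for _ in range(threshold - 1)]
    shares = []
    for x in range(1, num_nodes + 1):
        y = 0
        for c in reversed(coeffs):  # Horner evaluation of the polynomial at x
            y = (y * x + c) % PRIME
        shares.append((x, y))
    return shares

def assemble_key(shares):
    """Recover the secret by Lagrange interpolation at x = 0."""
    secret = 0
    for i, (xi, yi) in enumerate(shares):
        num, den = 1, 1
        for j, (xj, _) in enumerate(shares):
            if i != j:
                num = (num * -xj) % PRIME
                den = (den * (xi - xj)) % PRIME
        # pow(den, -1, PRIME) is the modular inverse (Python 3.8+).
        secret = (secret + yi * num * pow(den, -1, PRIME)) % PRIME
    return secret

# Usage: 5 nodes, any 3 of which can rebuild the key.
shares = split_key(123456789, threshold=3, num_nodes=5, rng=random.Random(0))
recovered = assemble_key(shares[:3])
```

The `random` module is used here only for reproducibility; a real deployment would draw coefficients from a cryptographically secure source such as `secrets`. Step 2.2 avoids reassembling the key on the server at all, which this sketch does not cover.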

[0068] S106: The server updates the model parameter set based on

[0069] Assuming that in the embodiment of this specification, the learning rate specified for the gradient descent method is α, the total number of samples used in the i-th iteration is d, and the model parameter set is denoted as θ, then the following formula can be used to update θ to obta...
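The formula itself is cut off in this text. As an illustration only, the sketch below shows the conventional averaged gradient-descent step using the symbols named above (learning rate α, sample count d, parameter set θ). It assumes the server holds the summed gradient for the iteration, and it is not necessarily the exact expression the specification uses; `update_parameters` and its argument names are hypothetical.

```python
def update_parameters(theta, aggregated_gradient, learning_rate, num_samples):
    """One gradient-descent step: theta <- theta - alpha * (summed gradient / d).

    Assumption: `aggregated_gradient` is the sum of per-node gradients for this
    iteration, so dividing by `num_samples` gives the average over samples.
    """
    return [
        t - learning_rate * g / num_samples
        for t, g in zip(theta, aggregated_gradient)
    ]

# Usage: alpha = 0.5, d = 4 samples in this iteration.
new_theta = update_parameters([1.0, 2.0], [4.0, -8.0], learning_rate=0.5, num_samples=4)
```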
