Video super-resolution reconstruction method based on multi-frame fusion optical flow

A video super-resolution reconstruction technology in the field of video super-resolution reconstruction based on multi-frame fusion optical flow and spatio-temporal residual dense blocks. It addresses rising computing cost, limited performance, and the neglect of temporal correlation between video frames, and achieves good results.


Examples

Embodiment 1

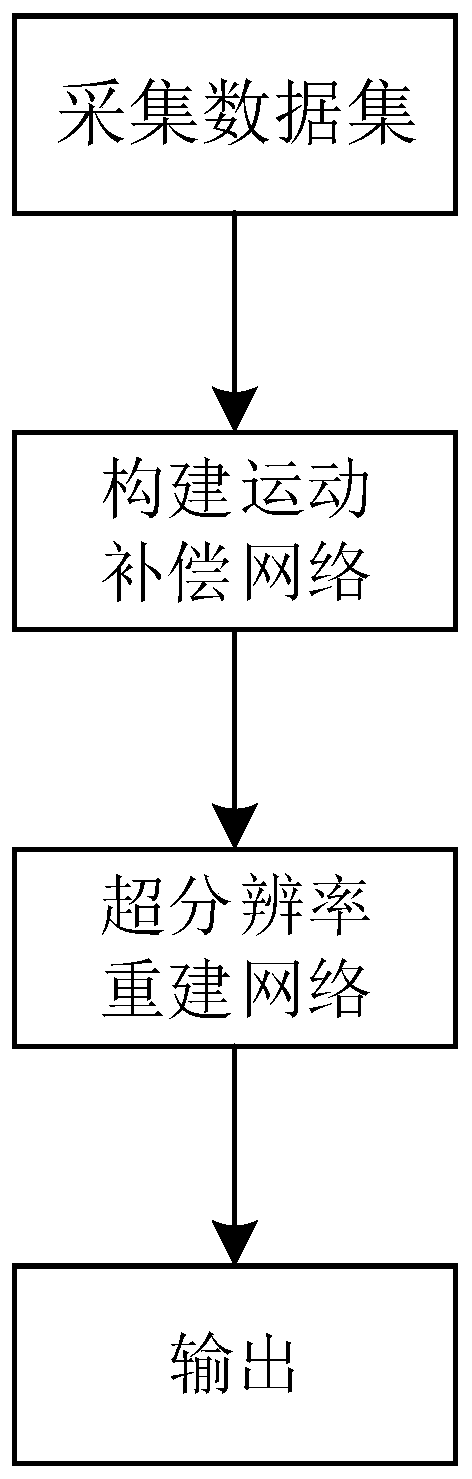

[0031] Taking 30 randomly selected scenes from the CDVL dataset as the high-resolution dataset, the video super-resolution reconstruction method based on multi-frame fusion optical flow of this embodiment consists of the following steps (see Figure 1):

[0032] (1) Dataset preprocessing

[0033] For each of the 30 scenes in the high-resolution dataset, 20 frames are retained, and each frame is converted from RGB space to the Y channel according to the following formula, giving a single-channel high-resolution video frame:

[0034] Y=0.257R+0.504G+0.098B+16

[0035] where R, G, and B are the red, green, and blue channels of the frame.
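The coefficients in [0034] match the ITU-R BT.601 studio-range luma conversion for 8-bit RGB, so black maps to Y = 16 and white to roughly 235. The sketch below applies the formula per pixel in plain Python. The frame layout (rows of (R, G, B) tuples) and the helper `keep_frames`, which keeps the *first* 20 frames, are hypothetical; the source does not say which 20 frames are retained.

```python
def rgb_to_y(r: float, g: float, b: float) -> float:
    """Y = 0.257R + 0.504G + 0.098B + 16, for 8-bit R, G, B in [0, 255]."""
    return 0.257 * r + 0.504 * g + 0.098 * b + 16


def frame_to_y(frame):
    """Convert a frame given as rows of (R, G, B) tuples into a single-channel Y frame."""
    return [[rgb_to_y(r, g, b) for (r, g, b) in row] for row in frame]


def keep_frames(scene_frames, n=20):
    """Hypothetical selection: keep the first n frames of a scene (the source does not specify which)."""
    return scene_frames[:n]


# Usage: a 1 x 2 frame with one black and one white pixel.
y = frame_to_y([[(0, 0, 0), (255, 255, 255)]])
print(y[0][0])  # 16.0
```

Working on the Y channel alone reduces each frame to one channel, which is why the text calls the result a single-channel high-resolution frame.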

[0036] A patch with a height of 540 and a width of 960 is cropped from the same position in each high-resolution video frame as the learning target, and is downsampled by a factor of 4 to obtain a low-resolution video frame with a height of 135 and a width of 240, which serves as the network input and is normaliz...
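The crop-and-downsample step can be sketched as follows, showing that a 540 × 960 target divided by 4 gives the 135 × 240 input (540 / 4 = 135, 960 / 4 = 240). The source does not name its downsampling kernel, so `box_downsample` (averaging each non-overlapping 4 × 4 block) is an assumption standing in for it; the crop offsets `top` and `left` are hypothetical parameters. The truncated normalization step is not modeled.

```python
HR_H, HR_W = 540, 960  # crop size from the text (height x width)
SCALE = 4              # downsampling factor from the text


def crop(frame, top, left, h=HR_H, w=HR_W):
    """Crop an h x w patch at (top, left); reuse the same offsets for every frame of a scene."""
    if top + h > len(frame) or left + w > len(frame[0]):
        raise ValueError("crop window exceeds frame bounds")
    return [row[left:left + w] for row in frame[top:top + h]]


def box_downsample(frame, s=SCALE):
    """Average each non-overlapping s x s block (assumed box filter; the source's kernel is unspecified)."""
    h, w = len(frame), len(frame[0])
    if h % s or w % s:
        raise ValueError("frame size must be divisible by the scale factor")
    return [
        [
            sum(frame[i * s + di][j * s + dj] for di in range(s) for dj in range(s)) / (s * s)
            for j in range(w // s)
        ]
        for i in range(h // s)
    ]


# Usage: crop a 540 x 960 target from a larger Y frame, then build the 135 x 240 input.
y_frame = [[0.5] * 1000 for _ in range(600)]
target = crop(y_frame, top=10, left=20)
lr_input = box_downsample(target)
print(len(lr_input), len(lr_input[0]))  # 135 240
```

Cropping every frame of a scene at the same position keeps the frames spatially aligned, which the later optical-flow fusion relies on.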
