A dynamic decoding method and system for neural machine translation based on entropy

A machine translation and decoding technology, applied in the fields of natural language processing and neural machine translation, that can solve problems such as error accumulation.

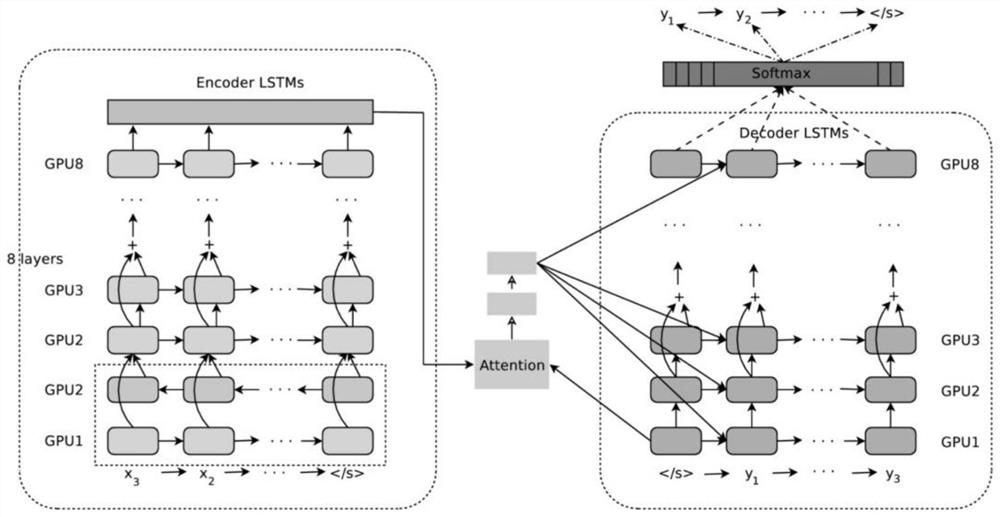

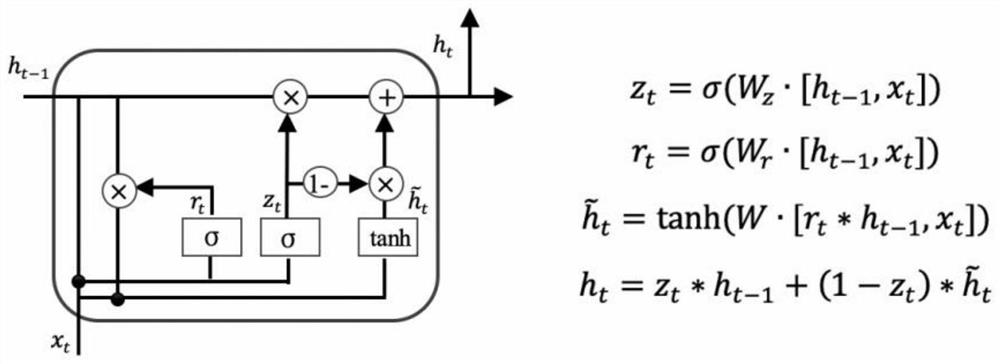

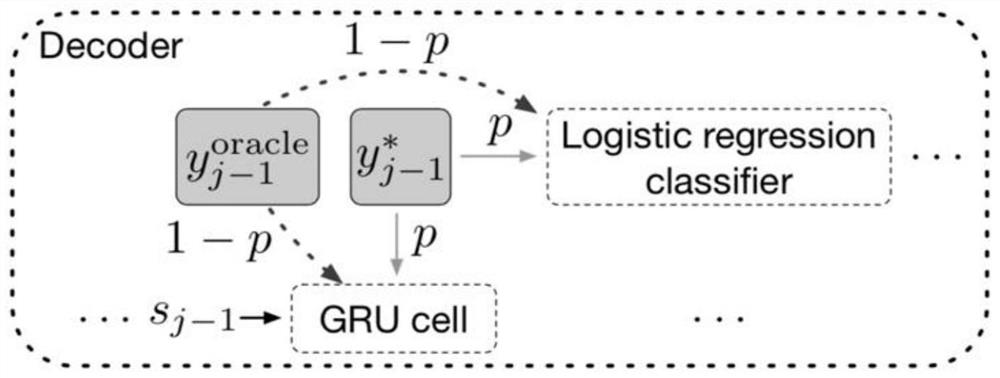

Embodiment Construction

[0071] While researching neural machine translation, the inventor analyzed the relationship between the entropy value of a sentence and its BLEU value. The average entropy value of the words in a sentence with a high BLEU value is smaller than the average entropy value of the words in a sentence with a low BLEU value, and a sentence with a low entropy value has a higher BLEU value than a sentence with a high entropy value. By calculating the Pearson coefficient, the inventor found that the entropy value of a sentence and its BLEU value are correlated. The present invention therefore proposes that, at each time step of the decoding phase of the training process, the decoder not only samples with a certain probability whether to use the real word or the predicted word as context information, but also calculates an entropy value from the prediction result of the previous time step, and then according to the entropy The...
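The quantities named in paragraph [0071] can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the patented implementation: the function names (`token_entropy`, `sentence_entropy`, `pearson`, `choose_context_word`) and the parameter `p_real` are hypothetical, entropy is taken to be Shannon entropy over the predicted word distribution, and the sentence entropy is taken to be the plain average over decoding steps. How the entropy value is used after it is computed is cut off in the source and is deliberately not shown.

```python
import math
import random


def token_entropy(probs):
    """Shannon entropy (in nats) of one predicted distribution over the vocabulary.

    Assumption: the "entropy value" in the text is Shannon entropy.
    """
    return -sum(p * math.log(p) for p in probs if p > 0.0)


def sentence_entropy(step_distributions):
    """Average per-word entropy over all decoding steps of one sentence."""
    return sum(token_entropy(d) for d in step_distributions) / len(step_distributions)


def pearson(xs, ys):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    norm_x = math.sqrt(sum((x - mean_x) ** 2 for x in xs))
    norm_y = math.sqrt(sum((y - mean_y) ** 2 for y in ys))
    return cov / (norm_x * norm_y)


def choose_context_word(real_word, predicted_word, p_real, rng=random):
    """Pick the real (reference) word with probability p_real, else the predicted word.

    Hypothetical helper: p_real stands in for the "certain probability" in the text.
    """
    return real_word if rng.random() < p_real else predicted_word
```

A peaked distribution such as `[1.0, 0.0]` has zero entropy, while a uniform one has the maximum entropy for its size, so confident predictions lower the sentence average. Feeding per-sentence entropies and BLEU values into `pearson` gives the kind of correlation check the paragraph describes.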
