Collaborative training method based on domain adaptation

A domain-adaptive collaborative training technique in the field of model training. It addresses problems such as performance degradation, and its stated effects are reduced dependence on human resources, lower demand, and improved object detection capability.

Embodiment

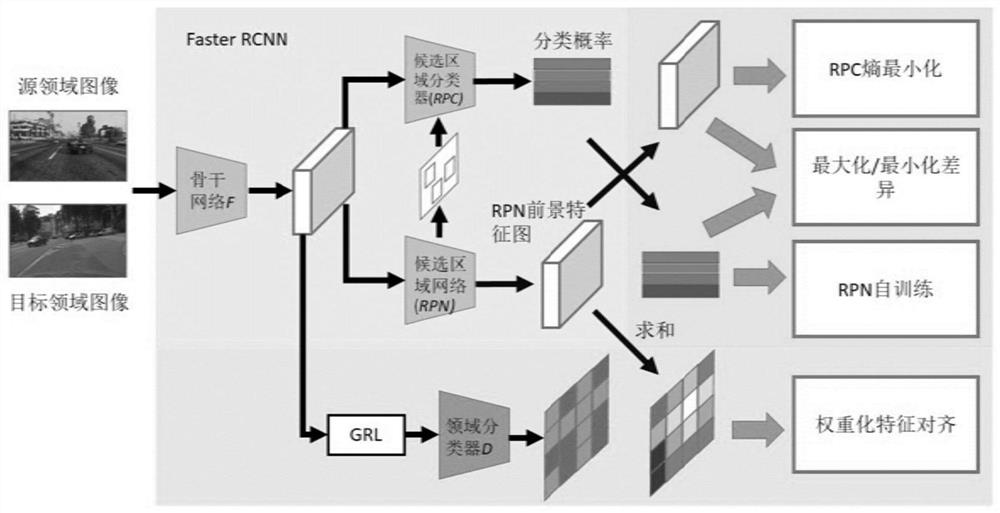

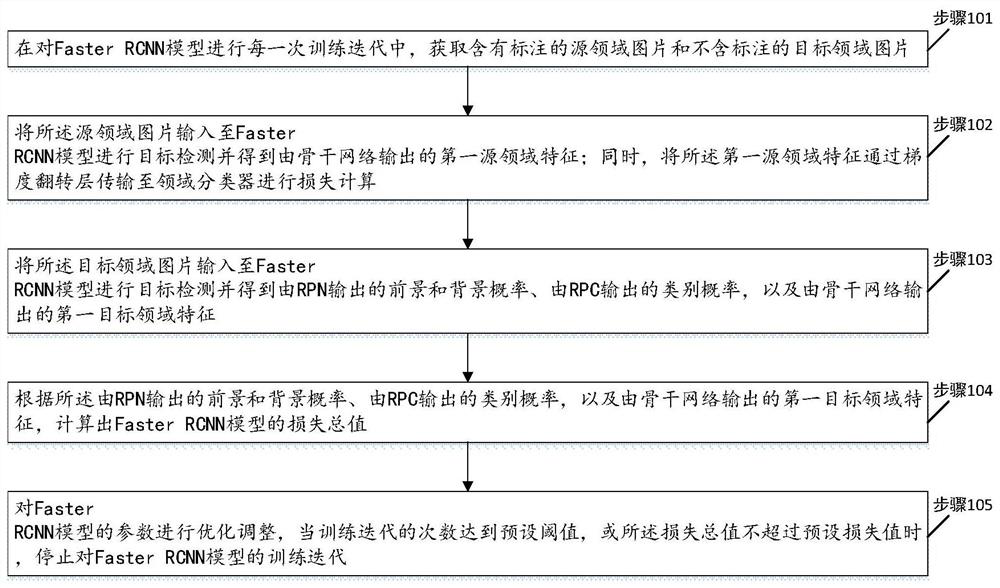

[0046] Refer to Figure 2, a flow chart of the steps of a domain-adaptation-based collaborative training method according to an embodiment of the present invention. The method comprises steps 101 to 105, as follows:

[0047] Step 101: In each training iteration of the Faster R-CNN model, obtain a labeled source-domain image and an unlabeled target-domain image.
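The per-iteration pairing in Step 101 can be sketched as a generator that draws one labeled source-domain sample and one unlabeled target-domain image per iteration. This is a minimal illustration under assumptions not stated in the source: one pass over the source set defines an epoch, and the target set is cycled when it is shorter. The name `paired_batches` and the `(image, annotation)` tuple layout are hypothetical.

```python
import itertools
from typing import Any, Iterable, Iterator, Tuple


def paired_batches(
    source: Iterable[Tuple[Any, Any]],
    target: Iterable[Any],
) -> Iterator[Tuple[Any, Any, Any]]:
    """Yield (source_image, source_annotation, target_image) once per iteration.

    Hypothetical helper: the labeled source set drives the loop, and the
    unlabeled target set is cycled so every source sample gets a partner.
    """
    targets = list(target)
    if not targets:
        raise ValueError("the unlabeled target-domain set must not be empty")
    target_cycle = itertools.cycle(targets)
    for image, annotation in source:
        # Target images carry no annotation; only the image is returned.
        yield image, annotation, next(target_cycle)


if __name__ == "__main__":
    src = [("s0", {"boxes": []}), ("s1", {"boxes": []}), ("s2", {"boxes": []})]
    tgt = ["t0", "t1"]
    for s_img, _, t_img in paired_batches(src, tgt):
        print(s_img, t_img)  # prints "s0 t0", then "s1 t1", then "s2 t0"
```

Cycling the smaller set is only one option; a sampler that draws target images at random would fit Step 101 equally well.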

[0048] Step 102: Input the source-domain image into the Faster R-CNN model for object detection and obtain the first source-domain features output by the backbone network. At the same time, pass the first source-domain features through the gradient reversal layer to the domain classifier for loss computation.
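The gradient reversal layer in Step 102 acts as the identity in the forward pass and multiplies the incoming gradient by a negative factor in the backward pass. The domain classifier is therefore trained normally, while the backbone receives a reversed gradient that pushes it toward domain-confusing features. The sketch below shows this on a single scalar feature with a toy logistic domain classifier and a hand-written backward pass. The coefficient `lam`, the label convention (0 = source, 1 = target), and the scalar classifier are illustrative assumptions, not details from the source. In PyTorch, such a layer is commonly written as a custom `torch.autograd.Function`.

```python
import math
from dataclasses import dataclass


@dataclass
class GradientReversal:
    """Identity in the forward pass; scales gradients by -lam in the backward pass."""
    lam: float = 1.0  # assumed reversal coefficient

    def forward(self, x: float) -> float:
        return x

    def backward(self, grad_output: float) -> float:
        return -self.lam * grad_output


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def domain_step(feature: float, domain_label: int, w: float, b: float,
                grl: GradientReversal):
    """One forward/backward pass: feature -> GRL -> logistic domain classifier.

    Returns (loss, grad_w, grad_b, grad_feature). grad_feature is the
    (reversed) gradient that would flow back into the backbone.
    """
    x = grl.forward(feature)
    p = sigmoid(w * x + b)  # predicted probability that the sample is target-domain
    eps = 1e-12             # guards log(0) only; gradients below are exact
    loss = -(domain_label * math.log(p + eps)
             + (1 - domain_label) * math.log(1 - p + eps))
    dz = p - domain_label   # dLoss/dz for sigmoid + binary cross-entropy
    grad_w, grad_b = dz * x, dz  # classifier parameters get ordinary gradients
    grad_x = dz * w              # gradient arriving at the GRL output
    return loss, grad_w, grad_b, grl.backward(grad_x)
```

For example, `domain_step(2.0, 0, 0.5, 0.0, GradientReversal(1.0))` gives the classifier a positive gradient on `w`, while the feature receives a gradient of the opposite sign, scaled by `lam`.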

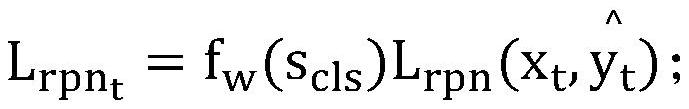

[0049] Specifically, the source-domain images and their annotations are input into Faster R-CNN, and the loss is computed and the model is trained in the same way as in the original Faster R-CNN. At the same time, the features extracted from the source-domain images by the backbone network are s...
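A hedged sketch of how the per-iteration objective might be assembled: the standard Faster R-CNN losses on the labeled source image (RPN and ROI-head classification and box regression) are summed, and a weighted domain-classifier loss is added. The text above shows only the source-domain features reaching the domain classifier. The target-domain term and the weight `domain_weight` are assumptions about the later steps (103 to 105), which are not reproduced here. The function name and arguments are hypothetical.

```python
from typing import Mapping


def total_loss(detection_losses: Mapping[str, float],
               source_domain_loss: float,
               target_domain_loss: float,
               domain_weight: float = 1.0) -> float:
    """Supervised detection loss (source only) plus weighted domain loss.

    detection_losses: individual Faster R-CNN terms computed on the labeled
    source image, e.g. RPN/ROI classification and box-regression losses.
    source_domain_loss / target_domain_loss: domain-classifier losses for
    features routed through the gradient reversal layer (target term assumed).
    """
    detection = sum(detection_losses.values())
    domain = source_domain_loss + target_domain_loss
    return detection + domain_weight * domain
```

Because the reversal happens inside the gradient reversal layer, the domain term is simply added here. Minimizing this sum trains the domain classifier, while the reversed gradient drives the backbone adversarially.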
