1621 patent results related to "Classifier (UML)"

A classifier is a category of Unified Modeling Language (UML) elements that have some common features, such as attributes or methods.

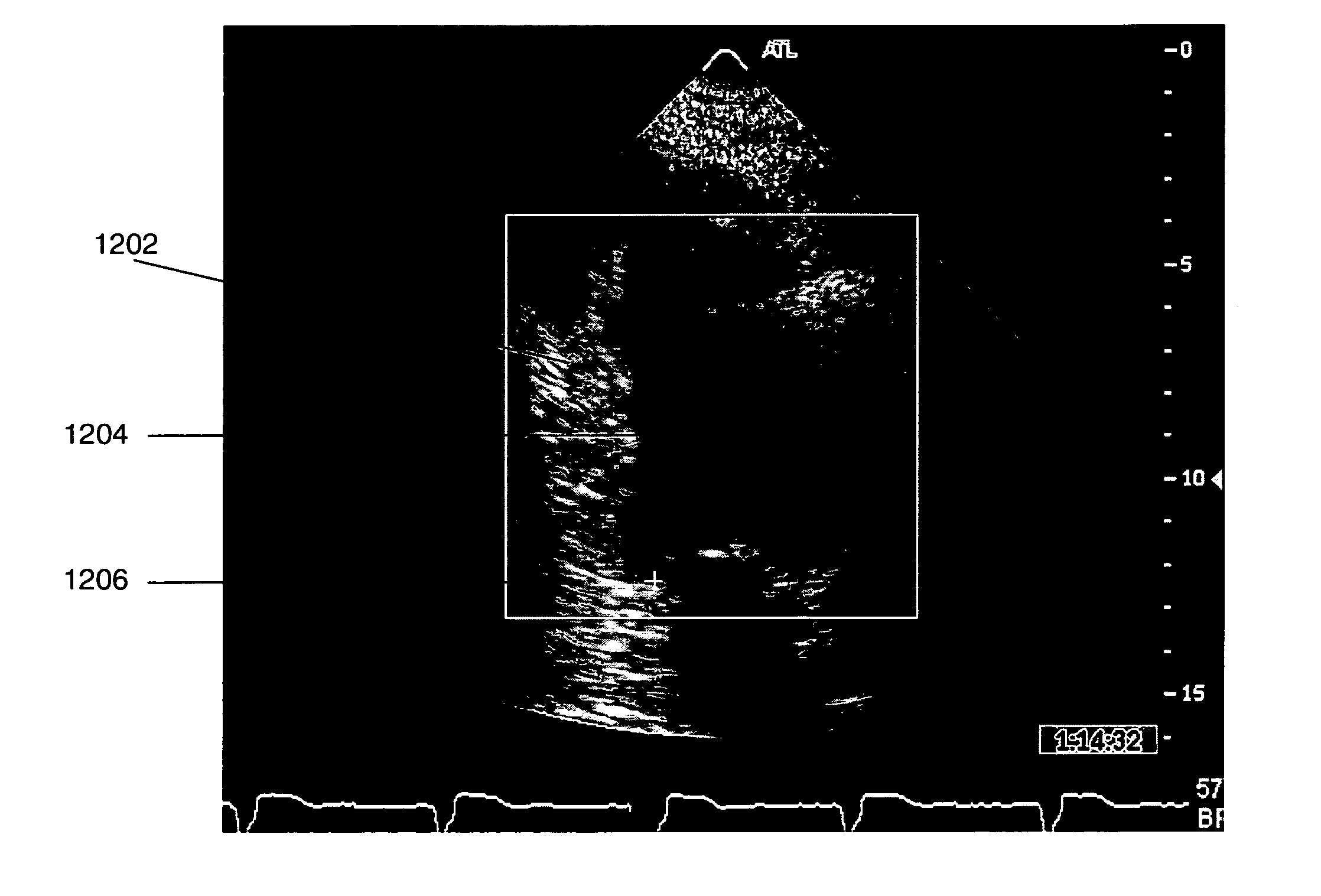

Medical Premonitory Event Estimation

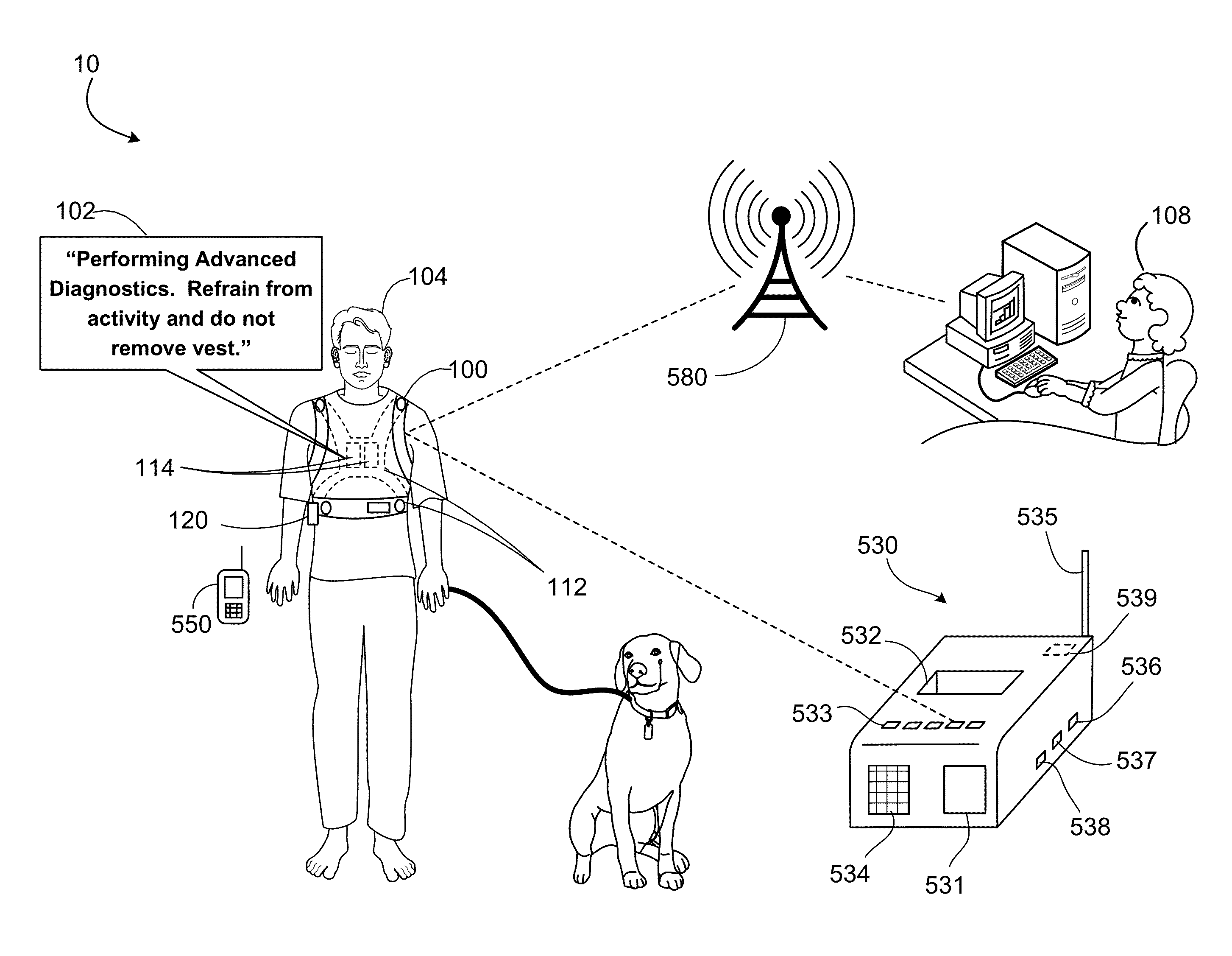

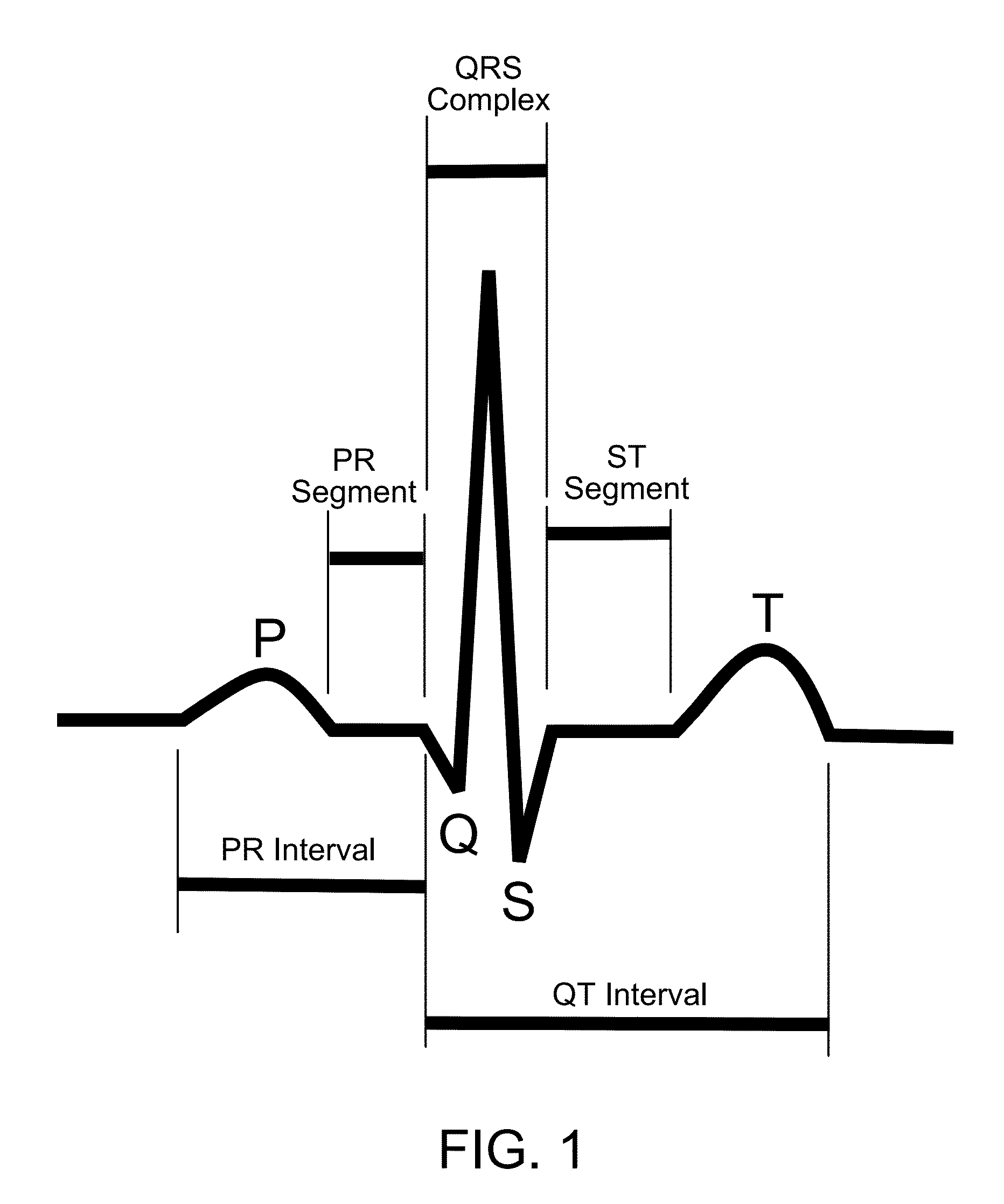

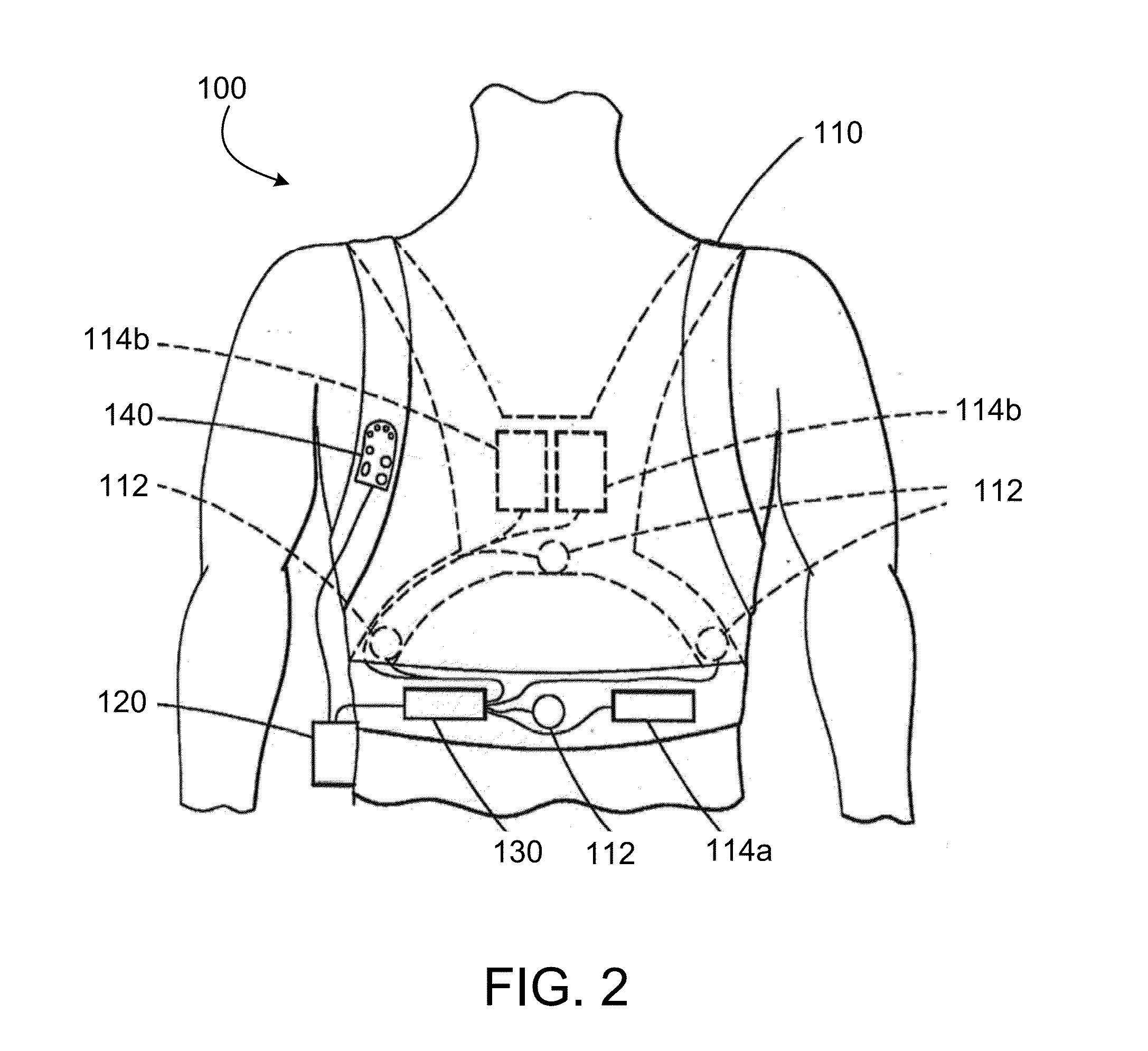

A system and method for medical premonitory event estimation includes one or more processors to perform operations comprising: acquiring a first set of physiological information of a subject, and a second set of physiological information of the subject received during a second period of time; calculating first and second risk scores associated with estimating a risk of a potential cardiac arrhythmia event for the subject based on applying the first and second sets of physiological information to one or more machine learning classifier models; providing at least the first and second risk scores associated with the potential cardiac arrhythmia event as a time-changing series of risk scores; and classifying the first and second risk scores associated with estimating the risk of the potential cardiac arrhythmia event for the subject based on one or more thresholds.

Owner: ZOLL MEDICAL CORPORATION
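
The abstract does not say how many thresholds there are or how they are chosen. As a minimal sketch of only the final classification step, assuming each classifier model already returns a risk score in [0, 1] and using two hypothetical thresholds (0.3 and 0.7), a time-changing series of risk scores could be banded and scanned for escalation like this:

```python
from bisect import bisect_right
from typing import List, Sequence, Tuple

# Hypothetical band boundaries; the abstract only says "one or more thresholds".
THRESHOLDS = [0.3, 0.7]
LABELS = ["low", "elevated", "high"]


def risk_band(score: float) -> int:
    """Index of the band a score falls into (a score equal to a threshold moves up a band)."""
    return bisect_right(THRESHOLDS, score)


def classify_series(scores: Sequence[float]) -> List[Tuple[int, float, str]]:
    """Label every score in a time-ordered series as (time index, score, band label)."""
    return [(t, s, LABELS[risk_band(s)]) for t, s in enumerate(scores)]


def escalations(scores: Sequence[float]) -> List[int]:
    """Time indices at which the risk band rises relative to the previous score."""
    bands = [risk_band(s) for s in scores]
    return [t for t in range(1, len(bands)) if bands[t] > bands[t - 1]]
```

For example, `classify_series([0.12, 0.45, 0.81])` labels the three scores low, elevated and high, and `escalations` on the same series reports rises at indices 1 and 2. Using `bisect_right` keeps the banding correct for any number of sorted thresholds.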

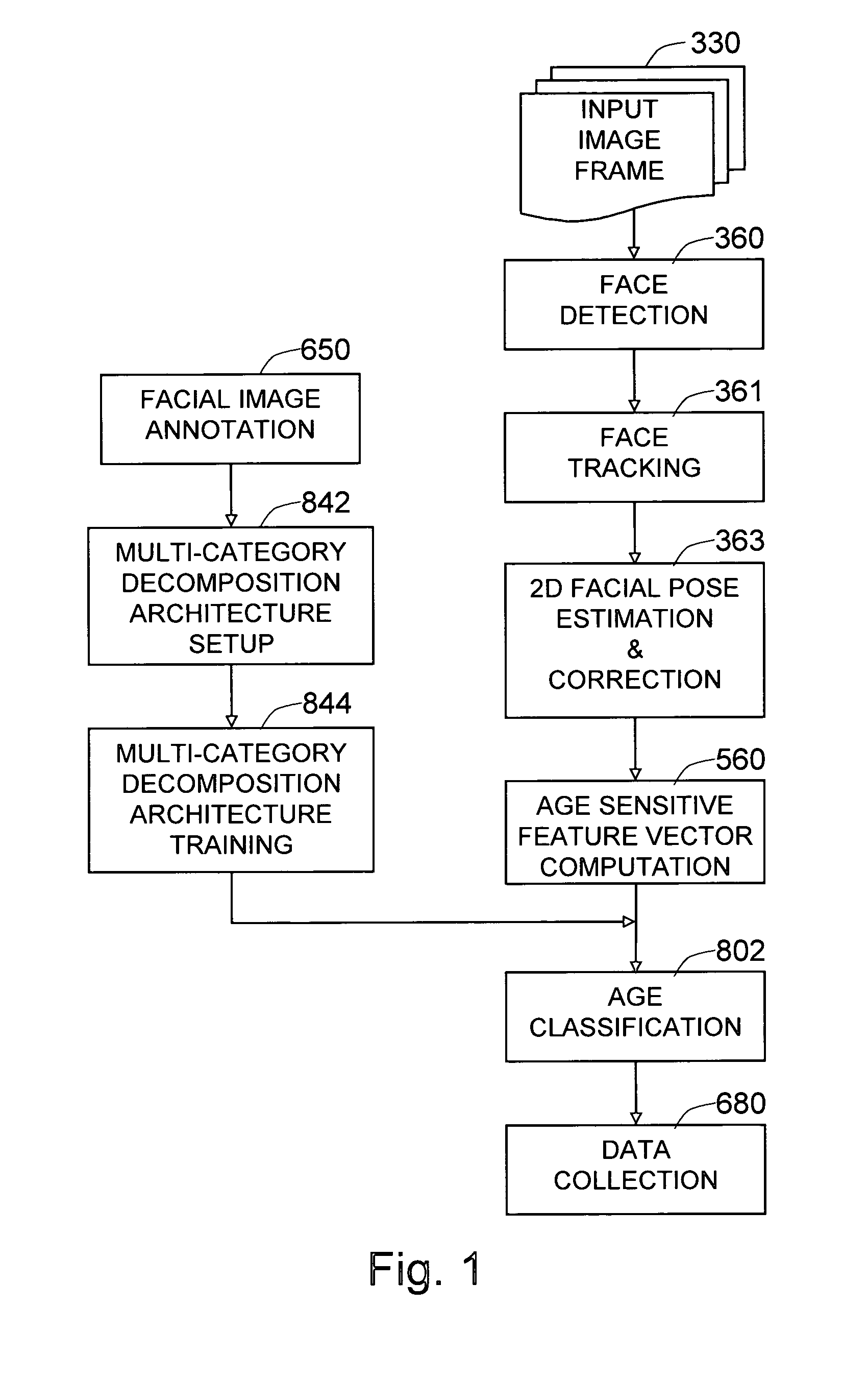

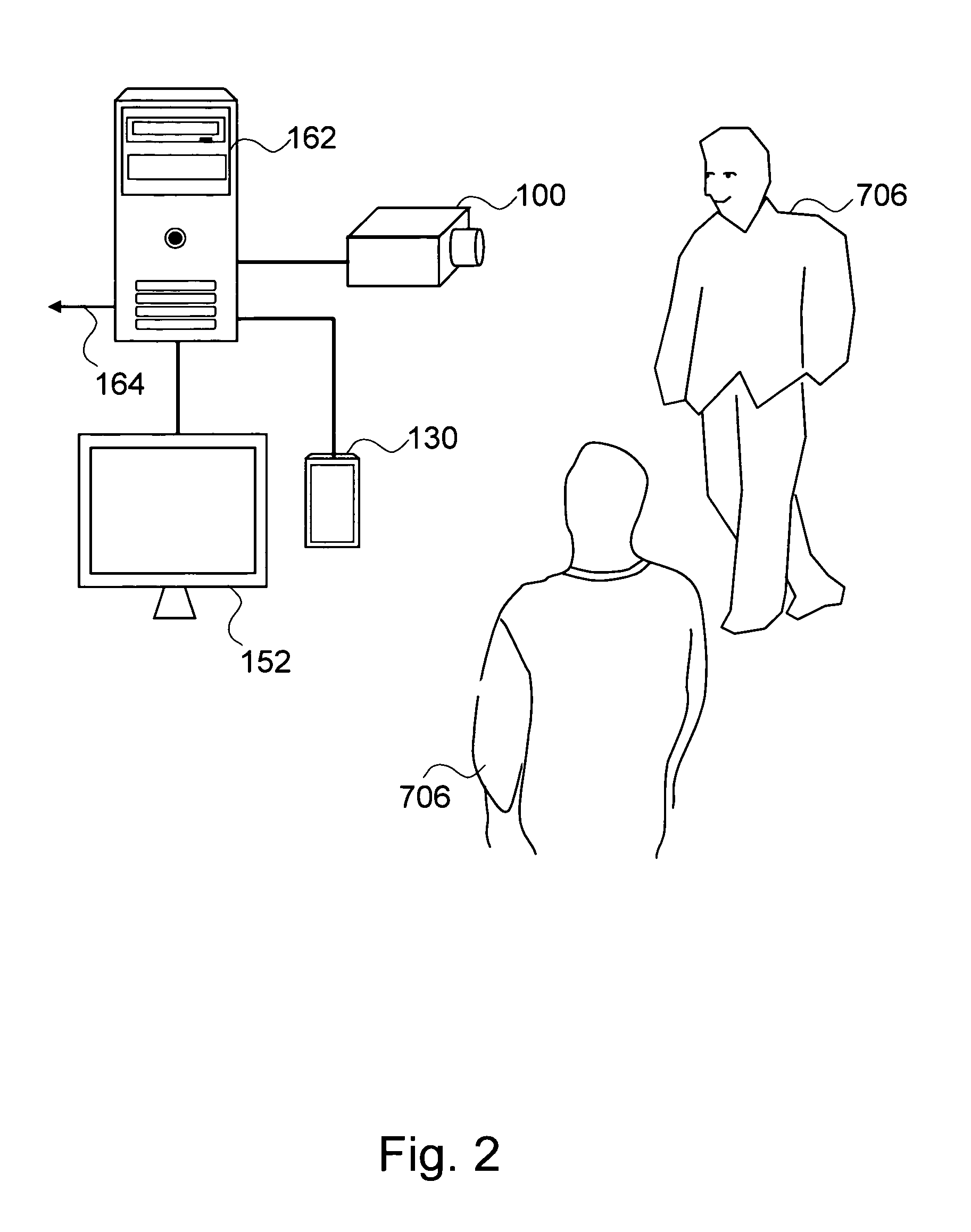

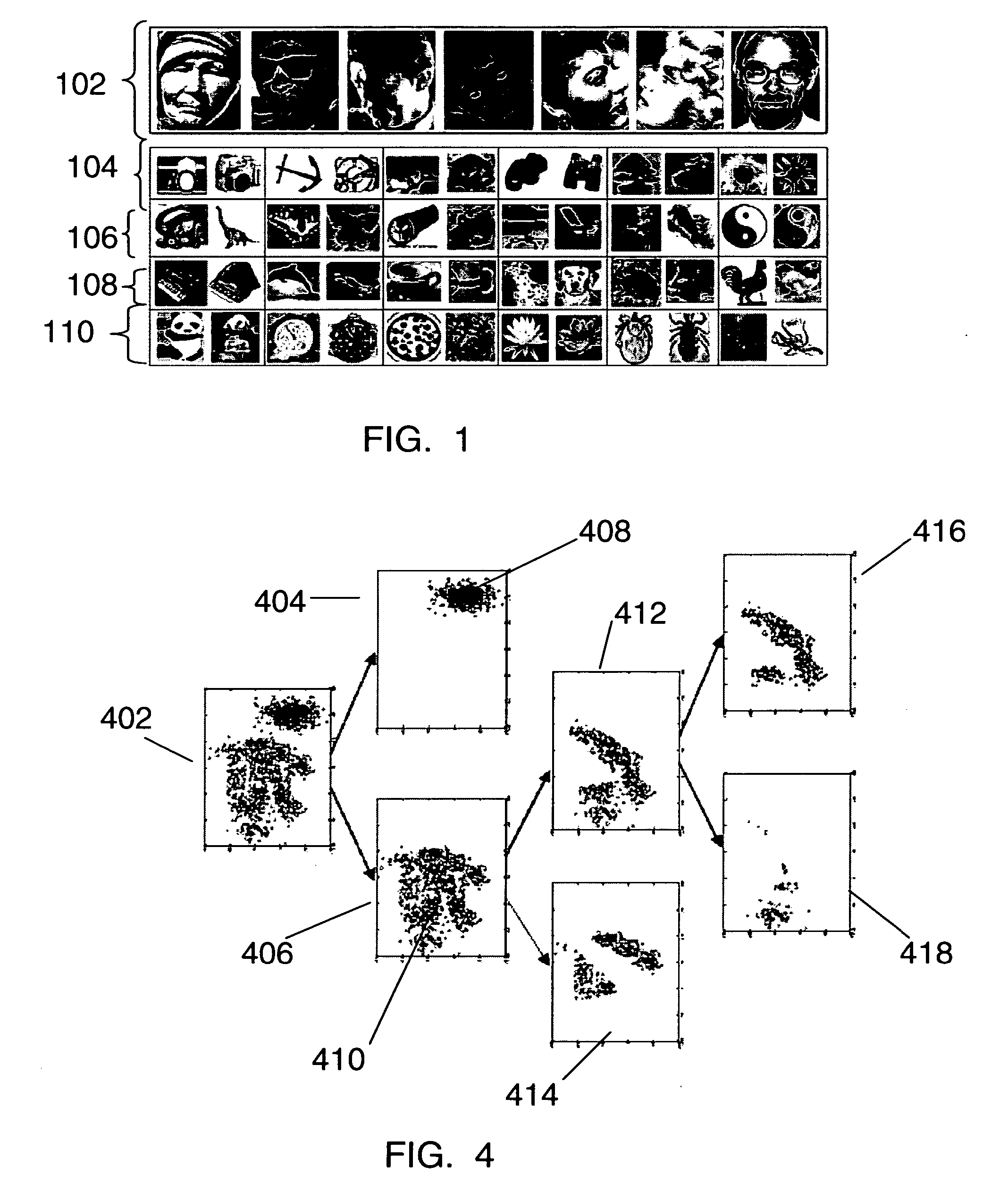

Method and system for determining the age category of people based on facial images

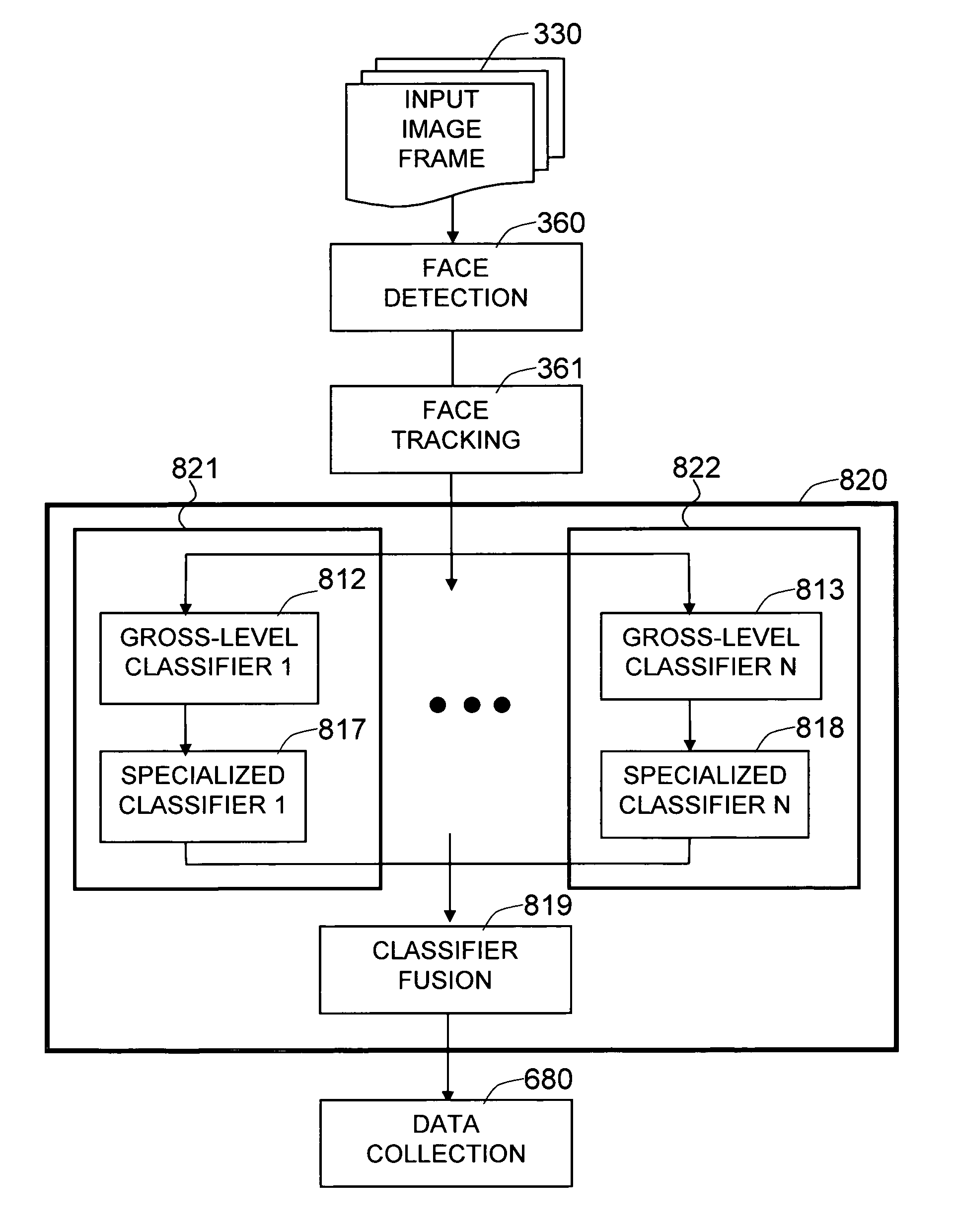

The present invention is a system and method for performing age classification or age estimation based on the facial images of people, using a multi-category decomposition architecture of classifiers. In the multi-category decomposition architecture, a hybrid multi-classifier architecture specialized for age classification, the task of learning the concept of age in the presence of significant within-class variations is handled by decomposing the set of facial images into auxiliary demographic classes, and age classification is performed by an array of classifiers, each of which, called an auxiliary class machine, is specialized to its auxiliary class. The facial image data is annotated with gender and ethnicity labels as well as age labels. Each auxiliary class machine is trained to output both the given auxiliary class membership likelihood and the age group likelihoods. Faces are detected in the input image and individually tracked. Age-sensitive feature vectors are extracted from the tracked faces and fed to all of the auxiliary class machines to compute the desired likelihood outputs. The outputs from all of the auxiliary class machines are combined to make a final decision on the age of the given face.

Owner: VIDEOMINING CORP

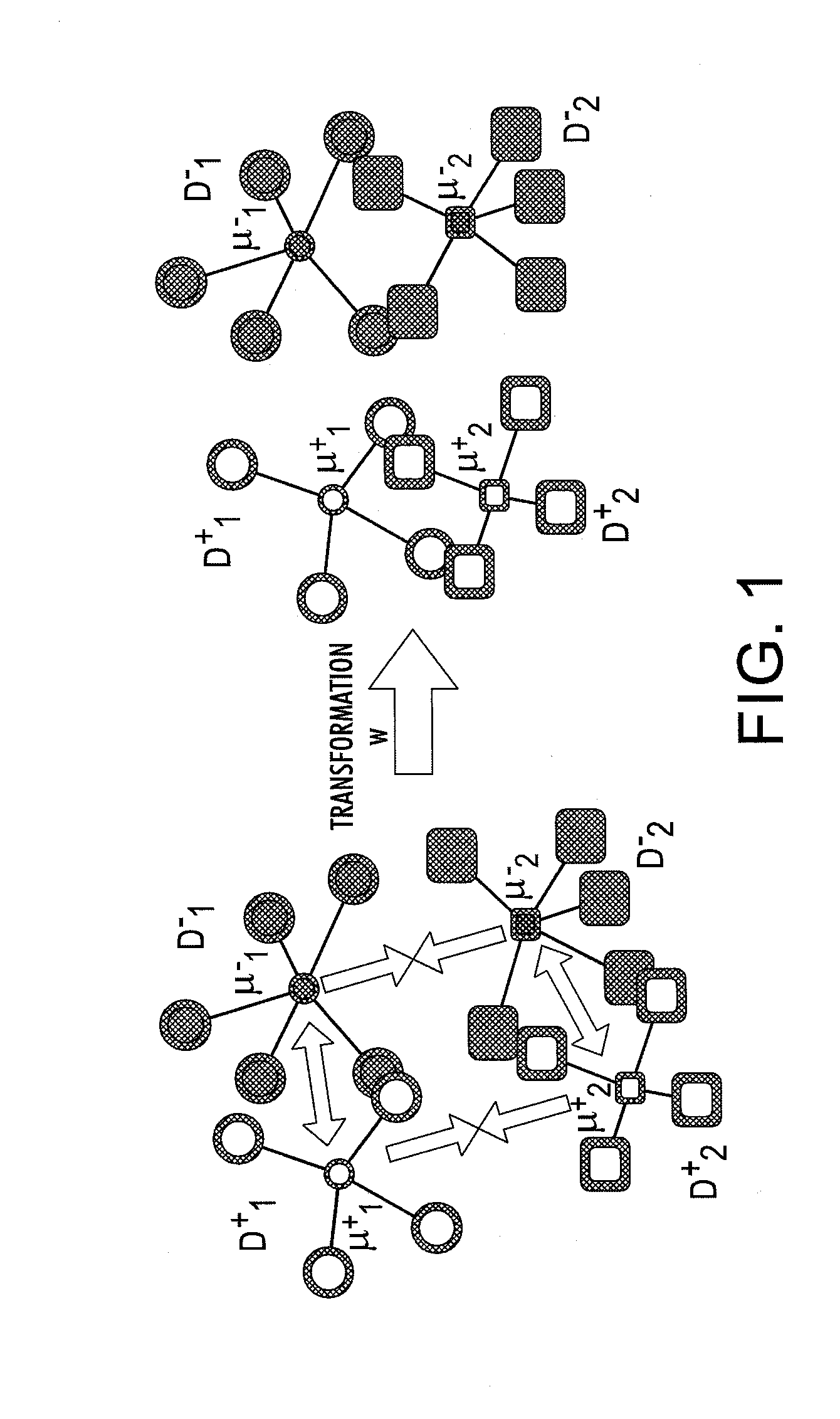

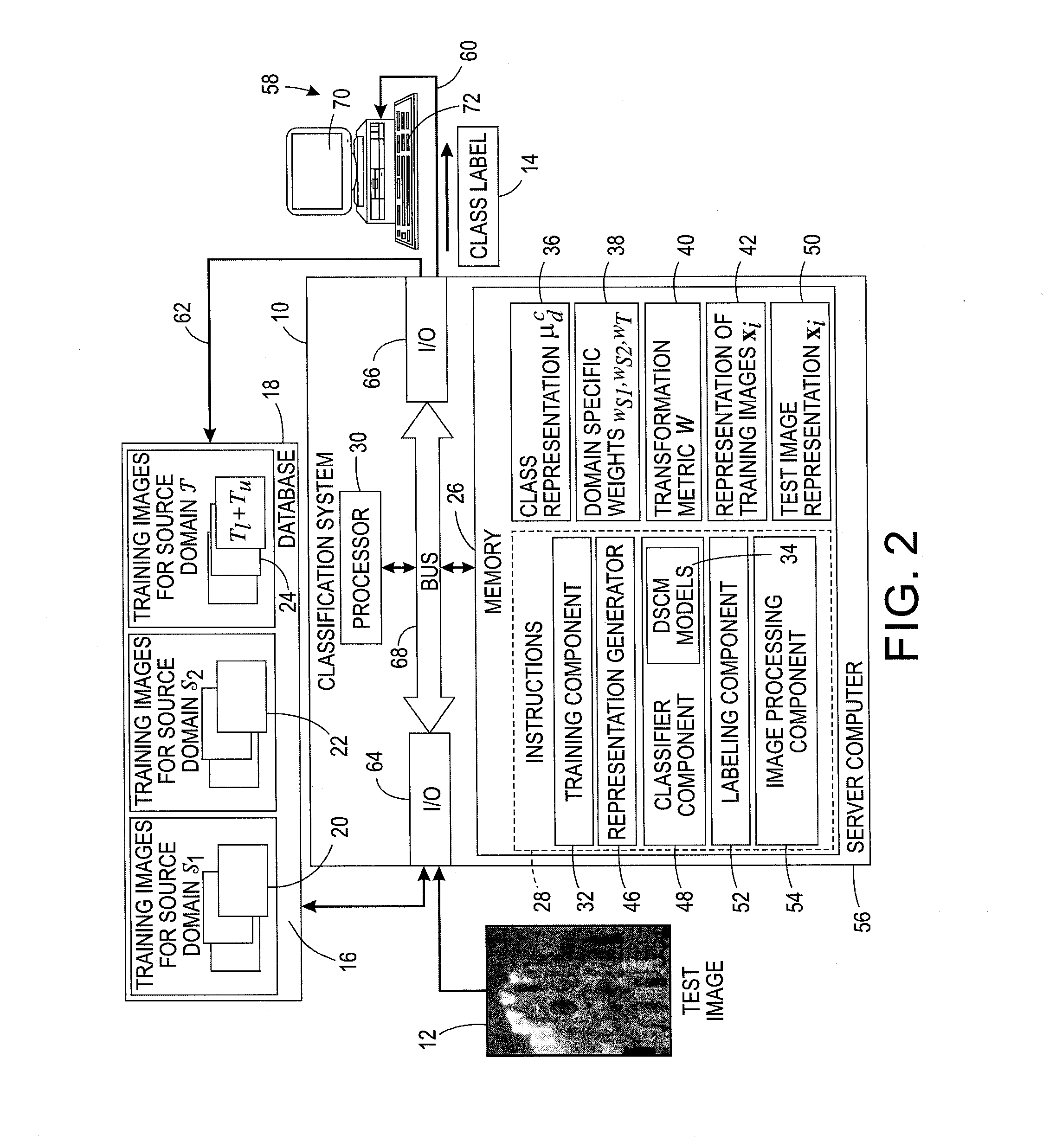

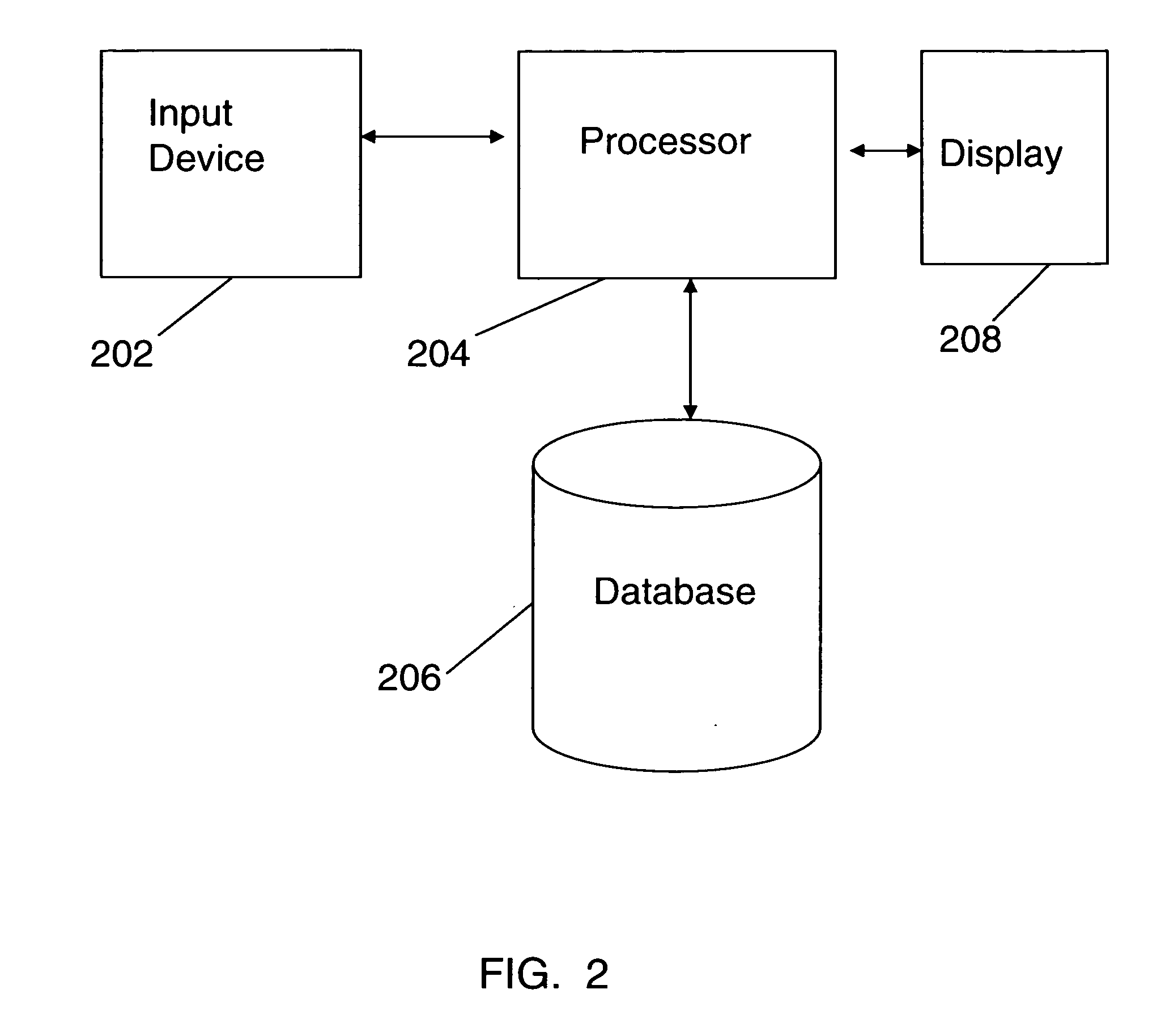

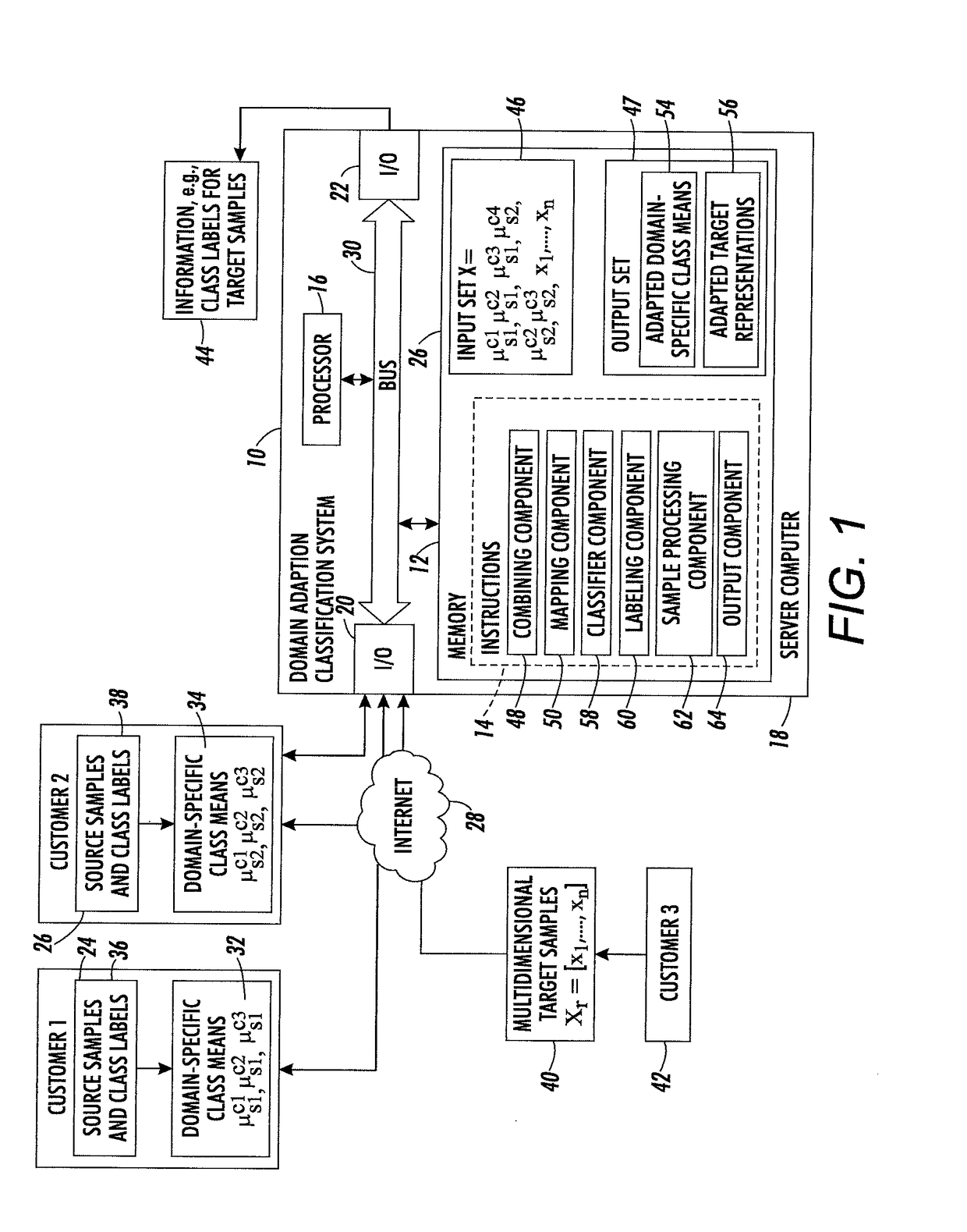

System for domain adaptation with a domain-specific class means classifier

A classification system includes memory which stores, for each of a set of classes, a classifier model for assigning a class probability to a test sample from a target domain. The classifier model has been learned with training samples from the target domain and from at least one source domain. Each classifier model models the respective class as a mixture of components, the component mixture including a component for each source domain and a component for the target domain. Each component is a function of a distance between the test sample and a domain-specific class representation which is derived from the training samples of the respective domain that are labeled with the class, each of the components in the mixture being weighted by a respective mixture weight. Instructions, implemented by a processor, are provided for labeling the test sample based on the class probabilities assigned by the classifier models.

Owner: XEROX CORP
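
The per-class mixture described above can be sketched as follows. This is a minimal illustration, not Xerox's implementation: the Gaussian-style kernel `exp(-d^2 / (2 * sigma^2))`, the parameter `sigma`, and fixed mixture weights are assumptions, since the abstract only says each component is "a function of a distance" to a domain-specific class mean.

```python
import math
from typing import Dict, Sequence

Vector = Sequence[float]


def class_probabilities(
    x: Vector,
    class_means: Dict[str, Dict[str, Vector]],
    mixture_weights: Dict[str, float],
    sigma: float = 1.0,
) -> Dict[str, float]:
    """class_means[label][domain] is the mean of that domain's training samples labeled
    `label` (one entry per source domain plus one for the target domain).
    Each class score is a weighted mixture of per-domain components; each component
    decays with the squared Euclidean distance to that domain's class mean (assumed kernel)."""
    scores = {
        label: sum(
            mixture_weights[domain] * math.exp(-math.dist(x, mean) ** 2 / (2 * sigma ** 2))
            for domain, mean in by_domain.items()
        )
        for label, by_domain in class_means.items()
    }
    # Assumes x is not so far from every mean that all components underflow to 0.
    total = sum(scores.values())
    return {label: s / total for label, s in scores.items()}


def predict(x: Vector, class_means, mixture_weights, sigma: float = 1.0) -> str:
    """Label the test sample with the class of highest probability."""
    probs = class_probabilities(x, class_means, mixture_weights, sigma)
    return max(probs, key=probs.get)
```

Because each class keeps a separate mean per domain, a target sample close to the target-domain mean of a class scores highly even when the source-domain means of that class lie elsewhere; the mixture weights control how much each domain is trusted.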

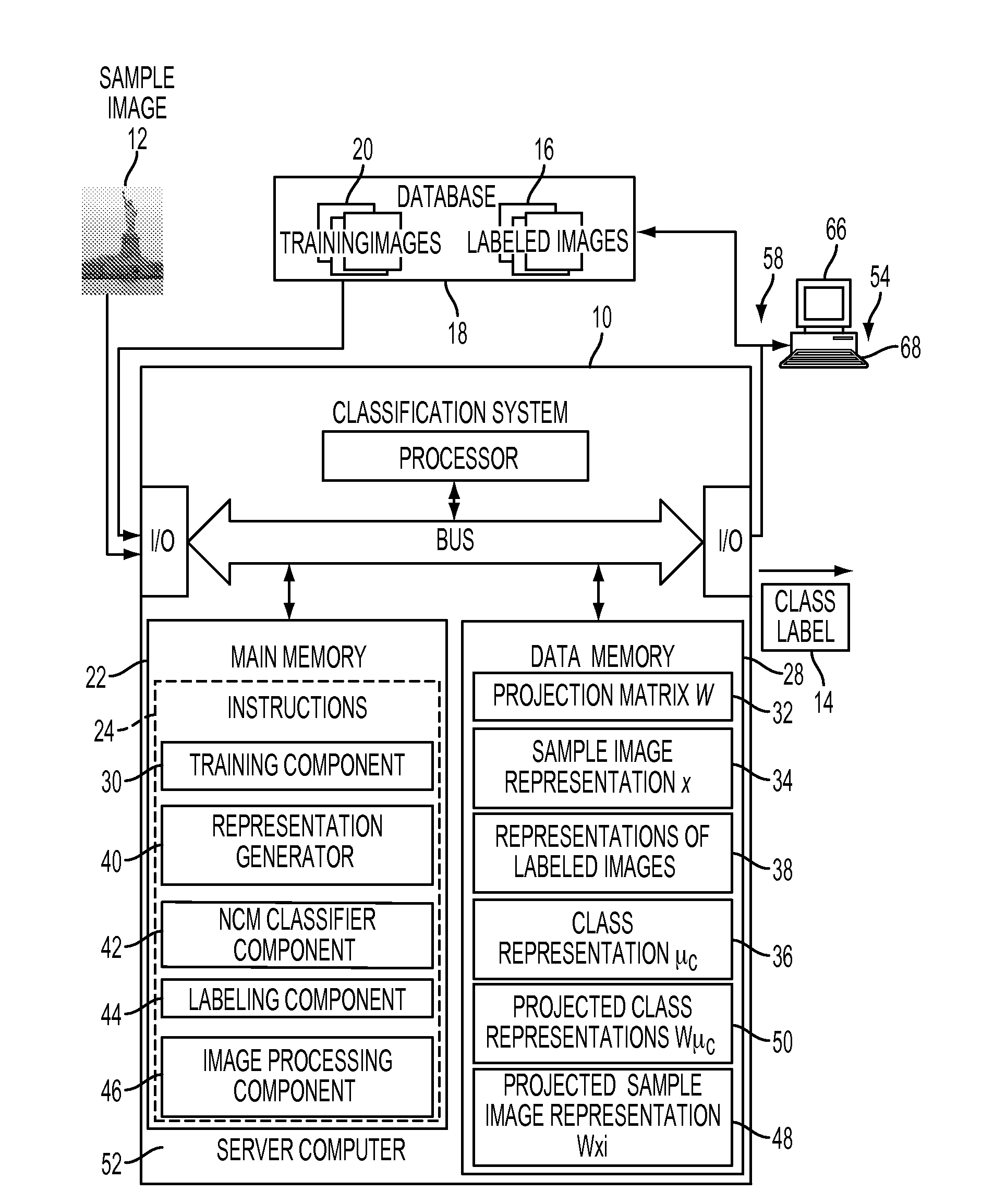

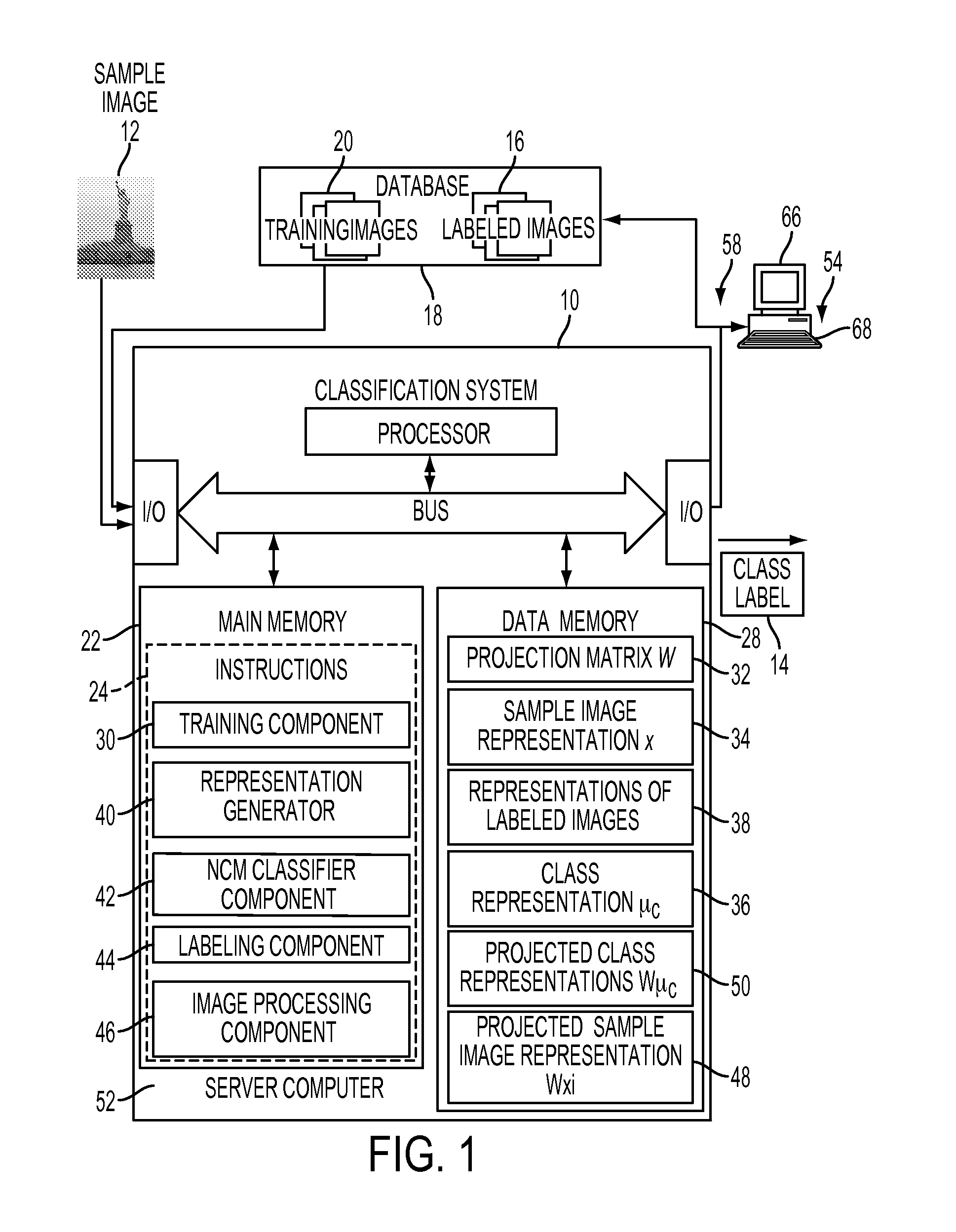

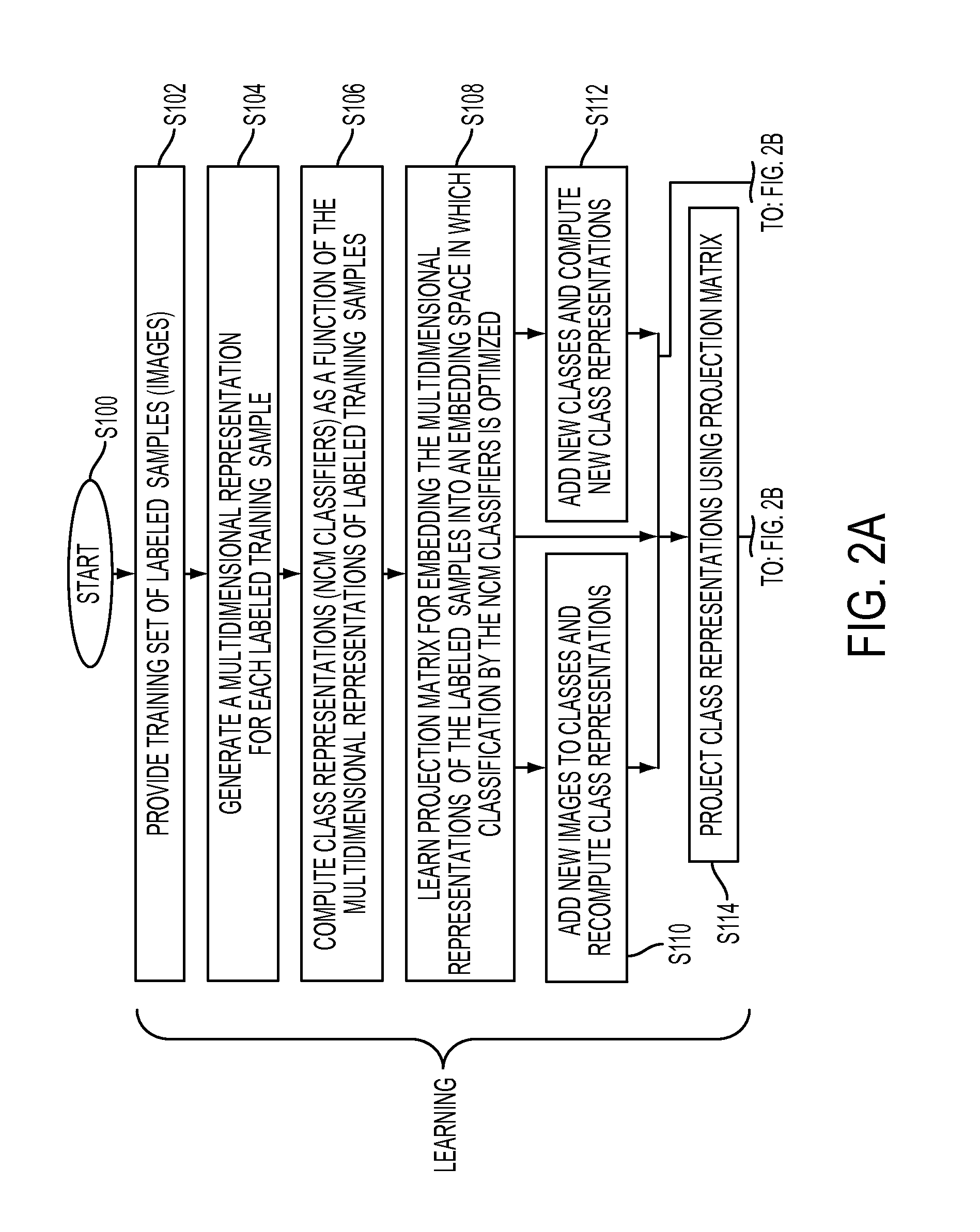

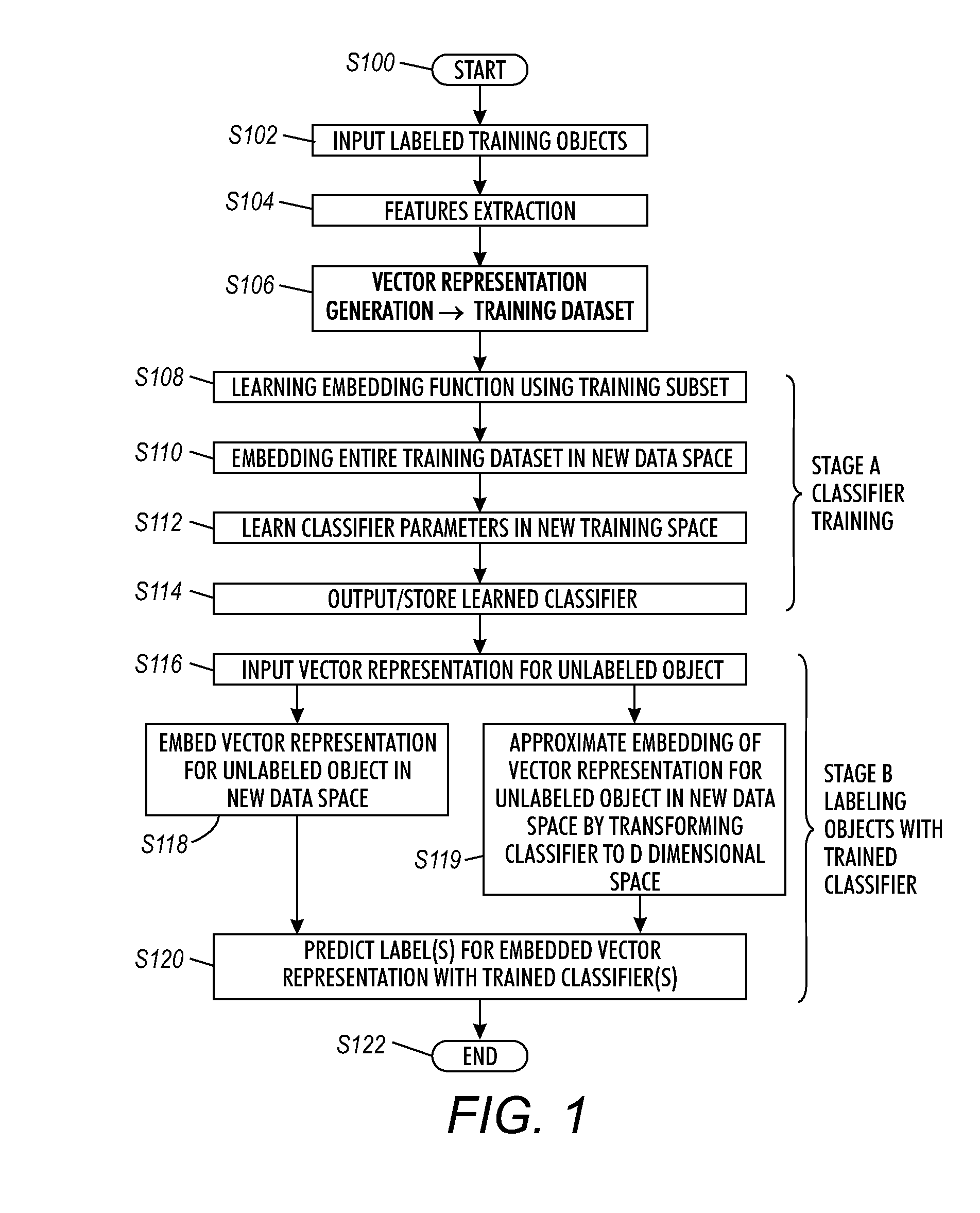

Metric learning for nearest class mean classifiers

A classification system and method enable improvements to classification with nearest class mean classifiers by computing a comparison measure between a multidimensional representation of a new sample and a respective multidimensional class representation embedded into a space of lower dimensionality than that of the multidimensional representations. The embedding is performed with a projection that has been learned on labeled samples to optimize classification with respect to multidimensional class representations for classes which may be the same or different from those used subsequently for classification. Each multidimensional class representation is computed as a function of a set of multidimensional representations of labeled samples, each labeled with the respective class. A class is assigned to the new sample based on the computed comparison measures.

Owner: XEROX CORP
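
A nearest class mean classifier that compares samples after a projection can be sketched in a few lines. The patent learns the projection `W` from labeled data; this sketch assumes `W` is already given (any matrix passed in is a stand-in for the learned one) and uses squared Euclidean distance as the comparison measure.

```python
from typing import Dict, List, Sequence

Vector = Sequence[float]
Matrix = List[List[float]]  # k x d projection with k < d


def project(W: Matrix, v: Vector) -> List[float]:
    """Embed a d-dimensional vector into the k-dimensional space (W @ v)."""
    return [sum(w * x for w, x in zip(row, v)) for row in W]


def class_means(samples: Dict[str, List[Vector]]) -> Dict[str, List[float]]:
    """Class representation = mean of the labeled samples of that class."""
    return {label: [sum(col) / len(vecs) for col in zip(*vecs)] for label, vecs in samples.items()}


def ncm_predict(x: Vector, means: Dict[str, List[float]], W: Matrix) -> str:
    """Assign the class whose projected mean is closest to the projected sample."""
    px = project(W, x)

    def sq_dist(label: str) -> float:
        return sum((a - b) ** 2 for a, b in zip(px, project(W, means[label])))

    return min(means, key=sq_dist)
```

Class means are computed in the original space and only compared after projection, so a new class can be added by computing its mean without relearning `W`.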

Text categorization toolkit

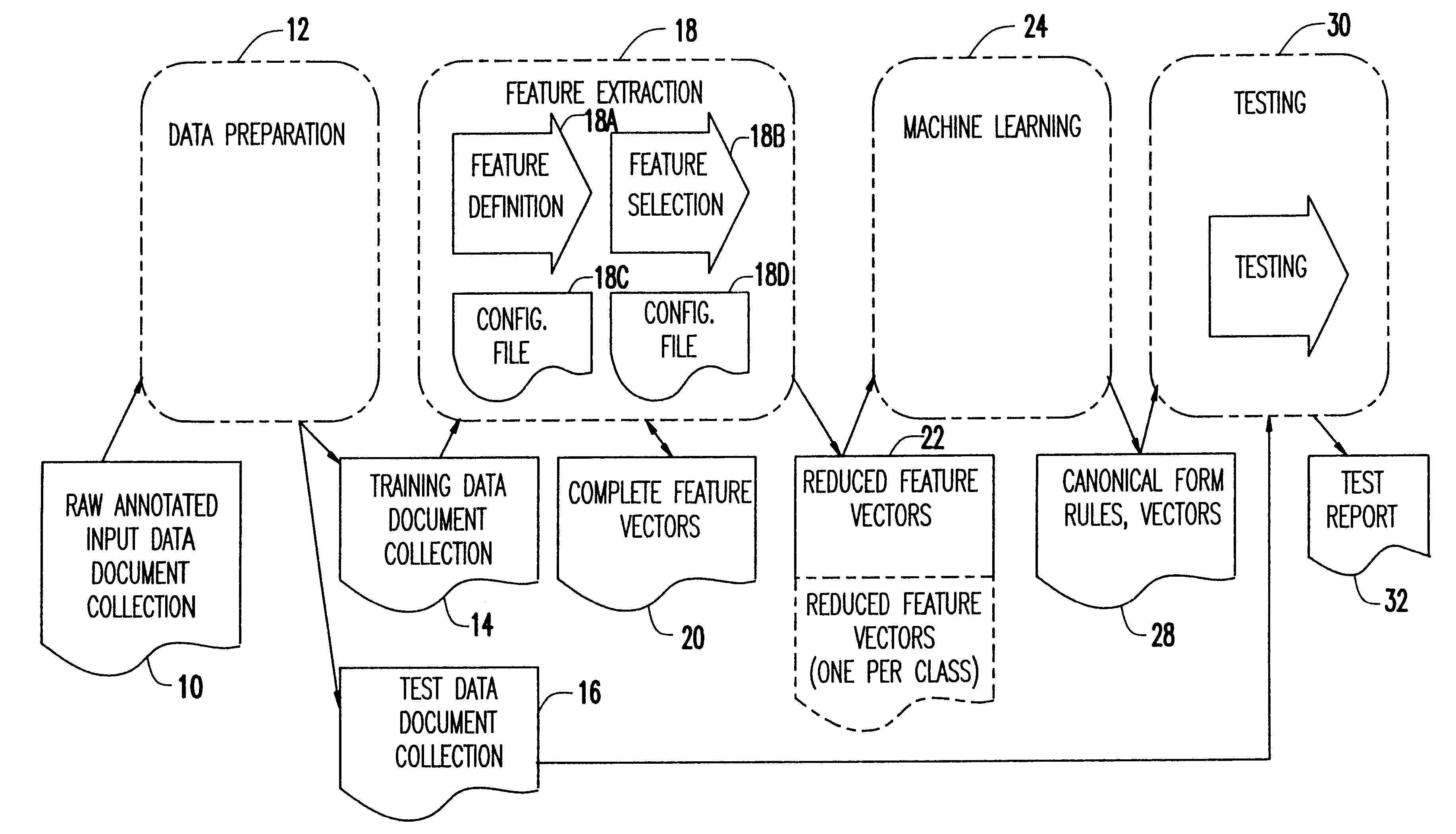

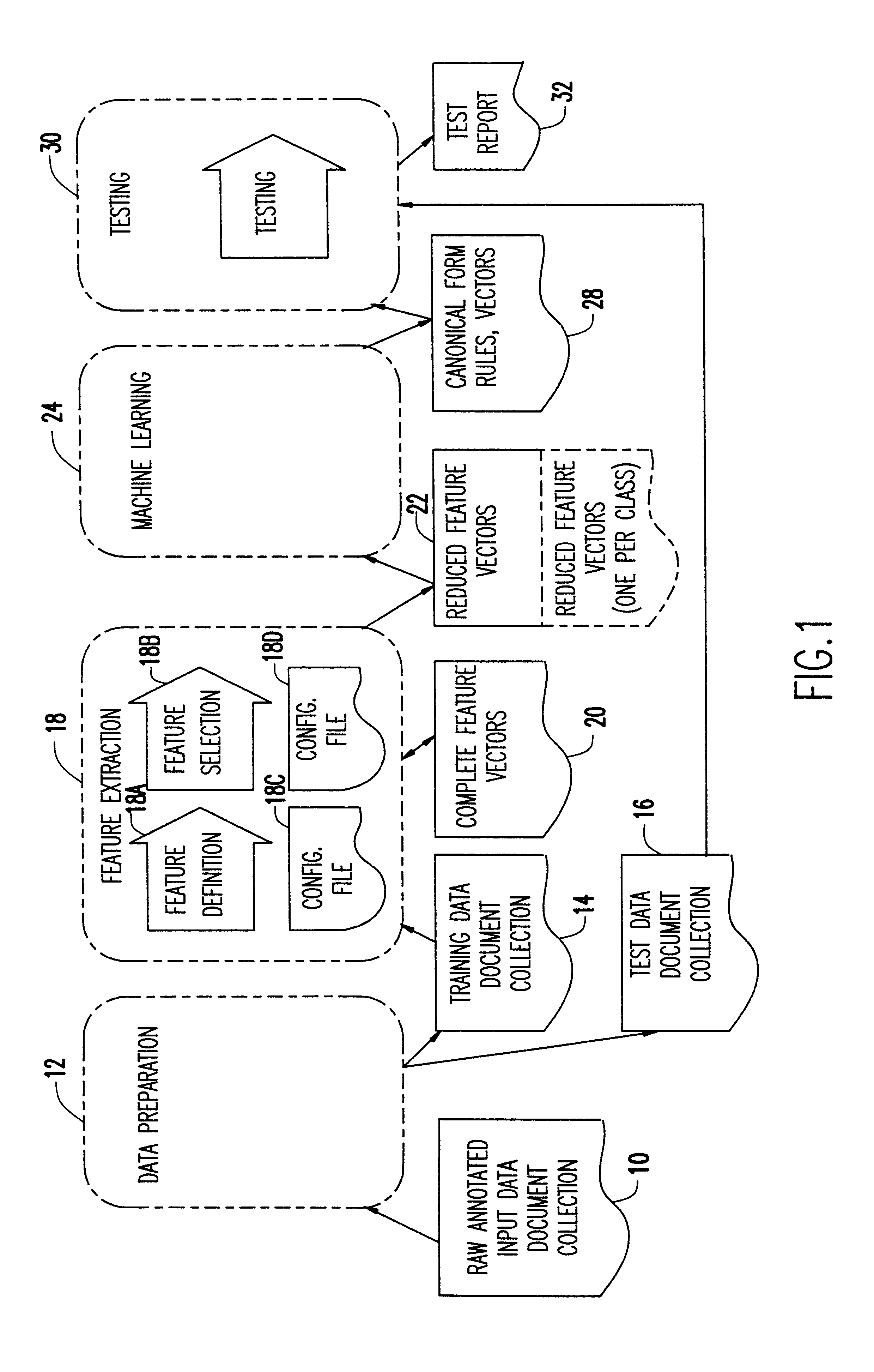

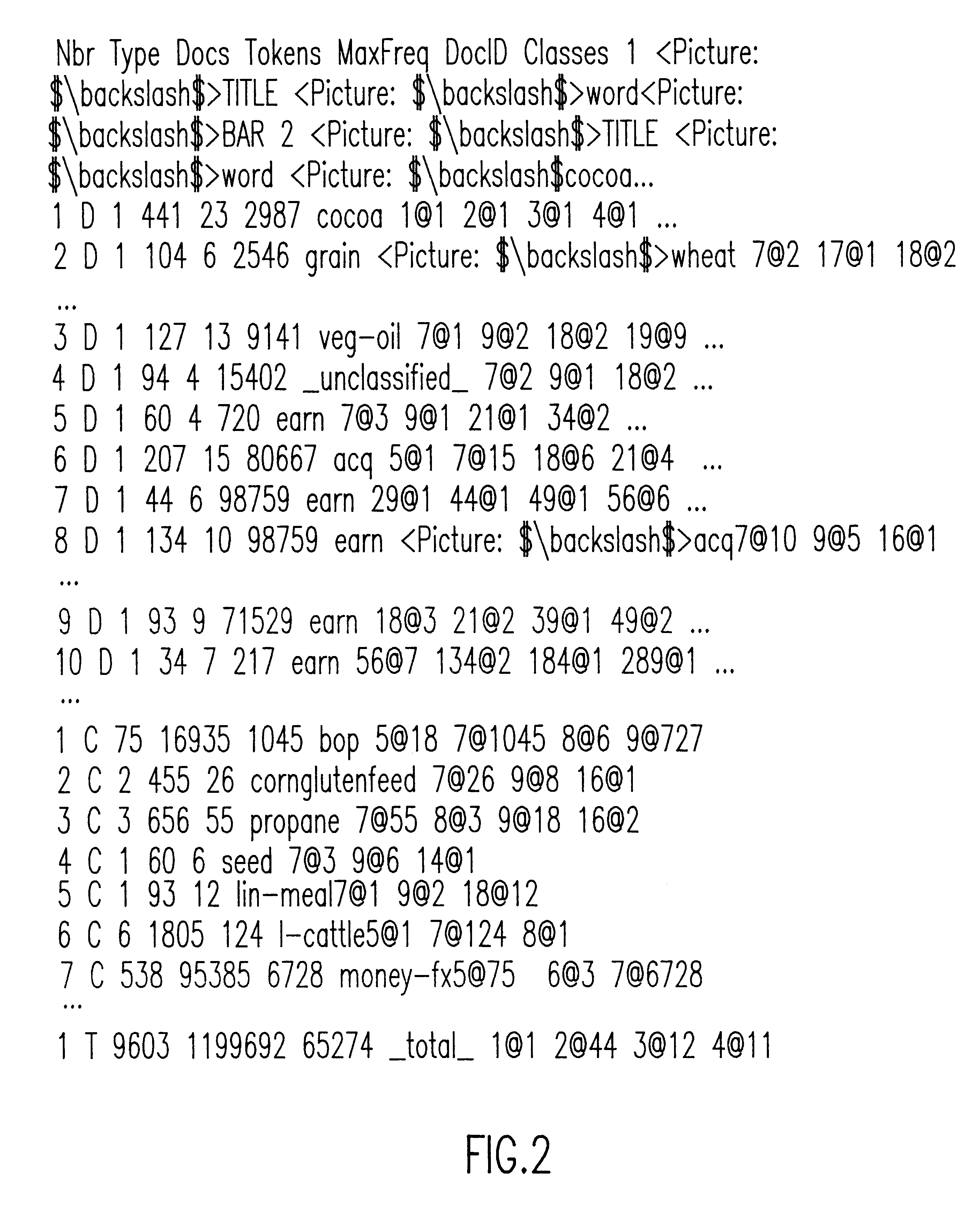

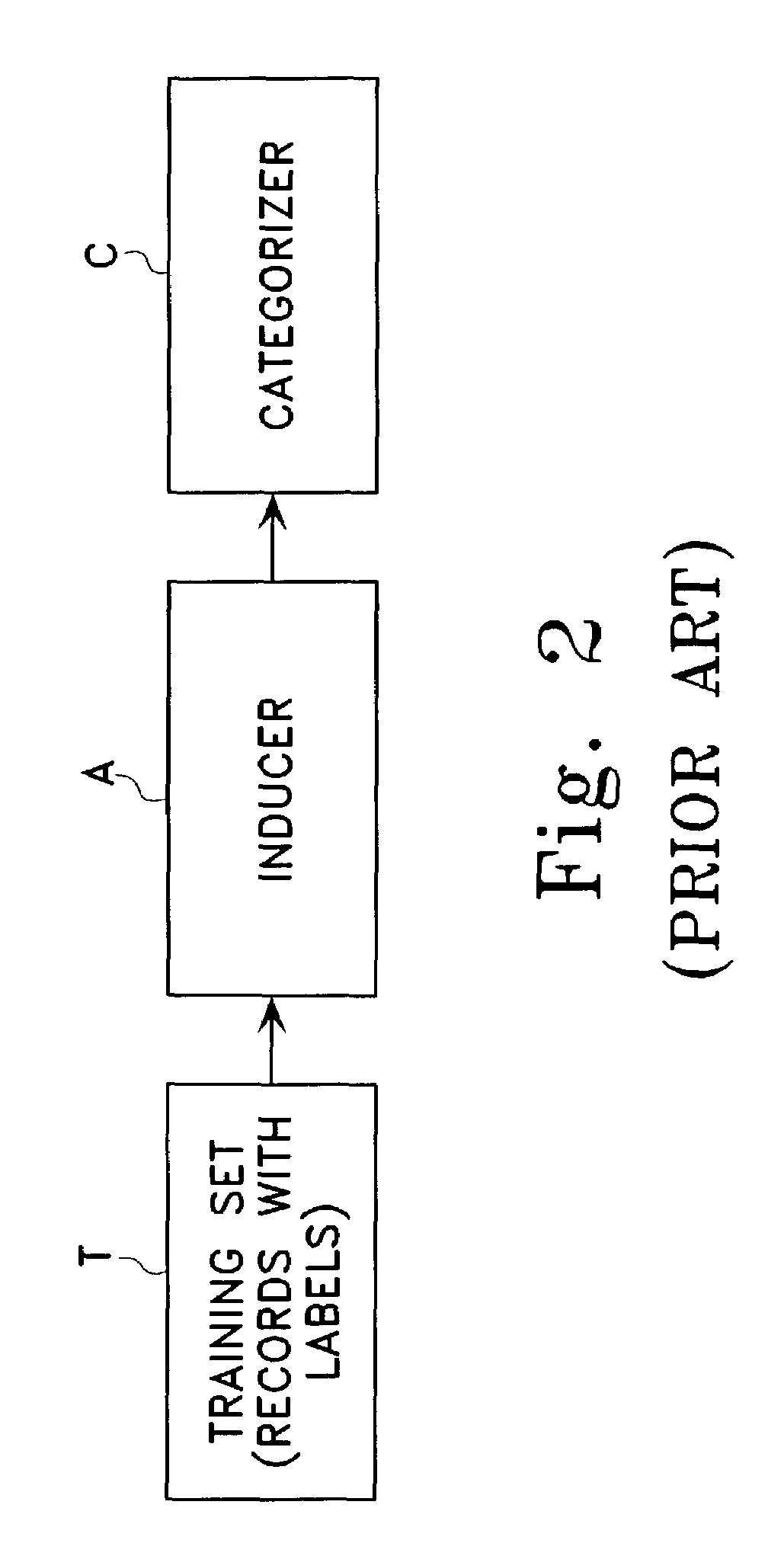

Inactive · US6212532B1 · Data processing applications, Digital data information retrieval, Feature extraction, Text categorization

A modular information extraction system capable of extracting information from natural language documents. The system includes a plurality of interchangeable modules, including a data preparation module for preparing a first set of raw data having class labels to be tested, the data preparation module being selected from a first type of the interchangeable modules. The system further includes a feature extraction module for extracting features from the raw data received from the data preparation module and storing the features in a vector format, the feature extraction module being selected from a second type of the interchangeable modules. A core classification module is also provided for applying a learning algorithm to the stored vector format and producing from it a resulting classifier, the core classification module being selected from a third type of the interchangeable modules. A testing module, selected from a fourth type of the interchangeable modules, compares the resulting classifier to a set of preassigned classes: it tests a second set of raw data having class labels, received by the data preparation module, to determine the degree to which the class labels of the second set of raw data approximately correspond to the resulting classifier.

Owner: IBM CORP

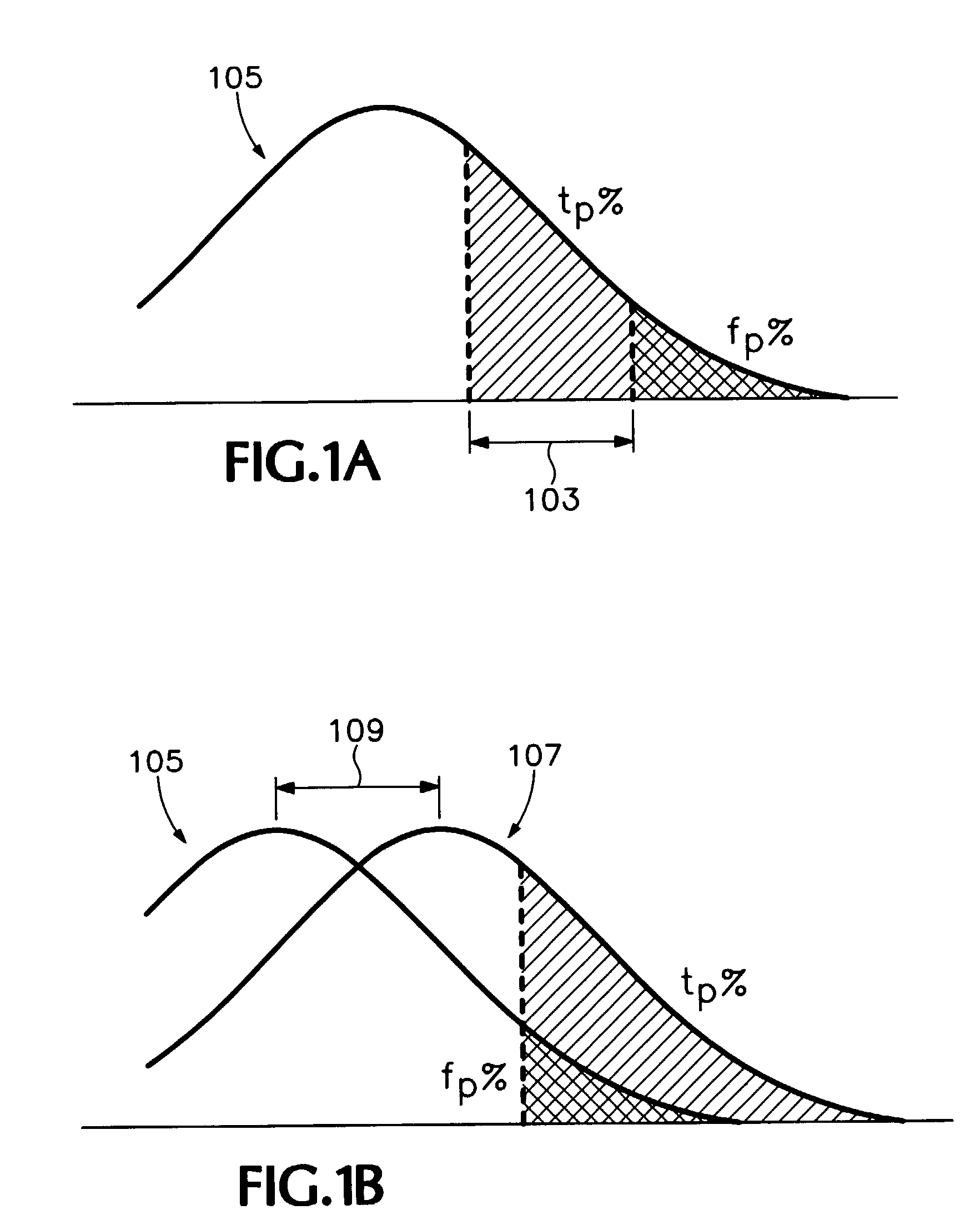

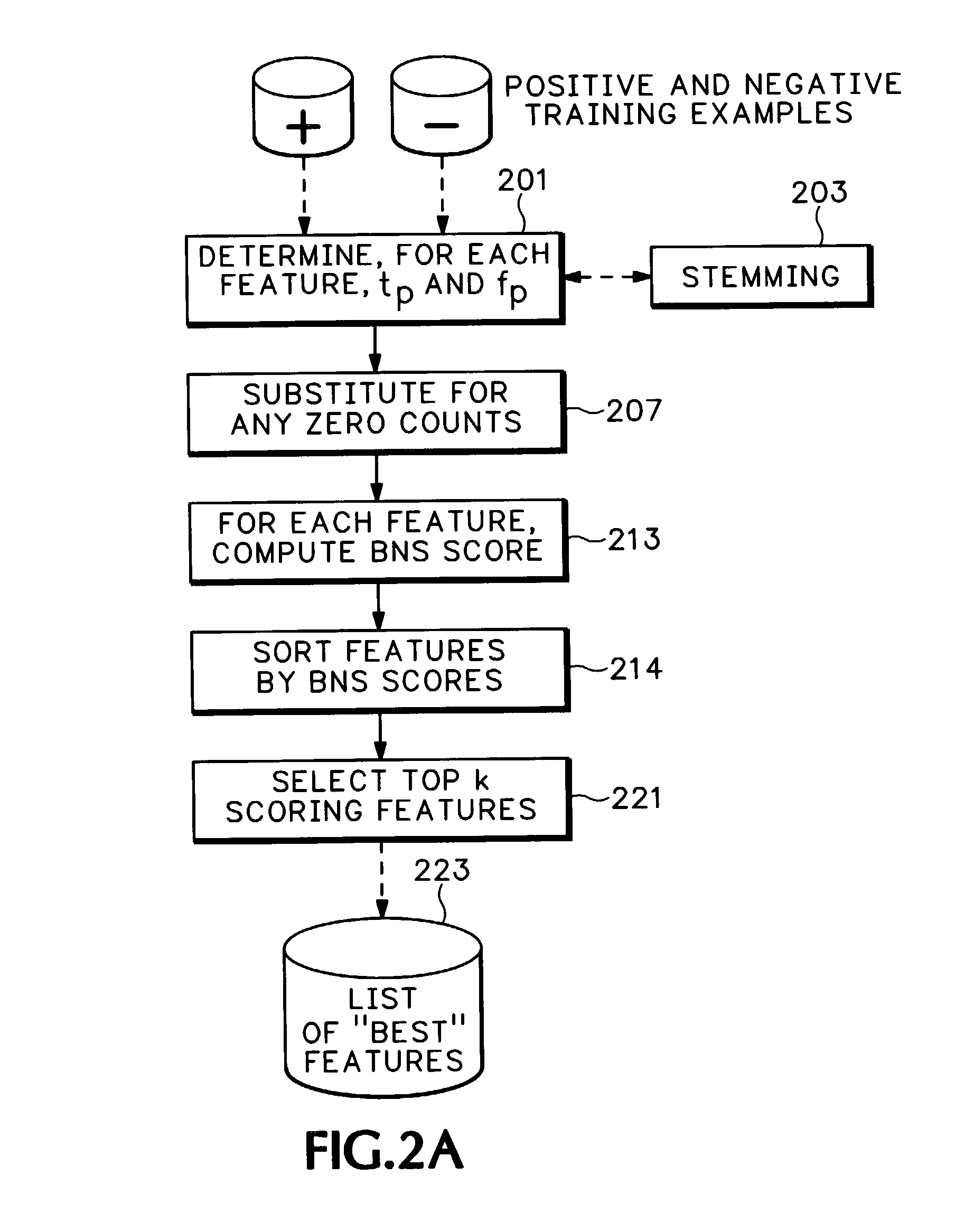

Feature selection for two-class classification systems

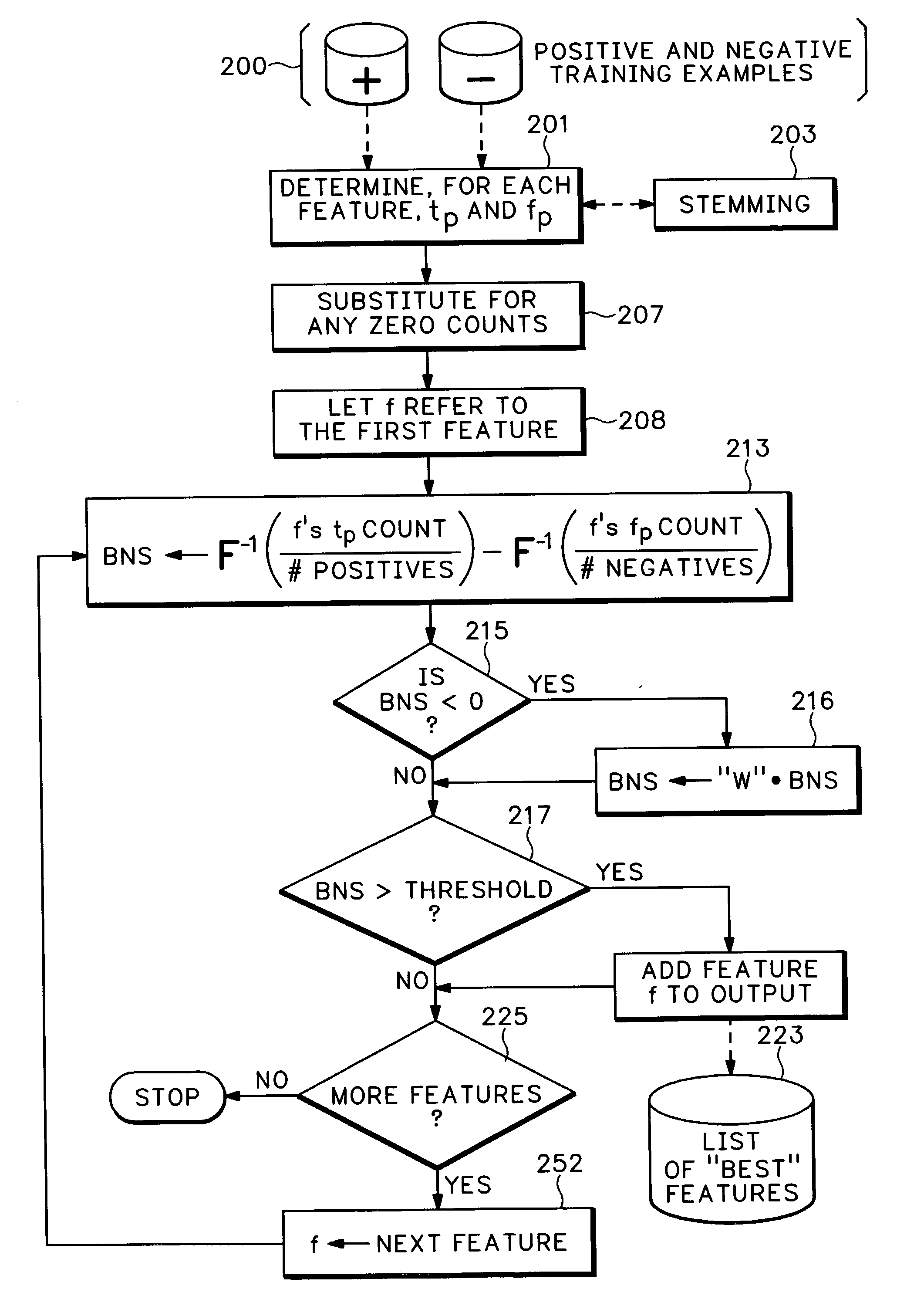

Active · US20040059697A1 · Improve performance, Digital data processing details, Digital computer details, Text categorization, Feature selection

A two-class analysis system summarizes features and determines which features are appropriate for training a classifier in a data mining operation. Exemplary embodiments describe how to select features suited to training a classifier for a two-class text classification problem. Bi-Normal Separation (BNS) methods are defined, in which each feature is scored with a measure based on the inverse cumulative distribution function of a standard probability distribution, representing the difference between the feature's occurrence in each class. In addition to supporting classifier training, the system provides a means of summarizing differences between classes.

Owner: MICRO FOCUS LLC
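
Bi-Normal Separation is commonly written as |F^-1(tpr) - F^-1(fpr)|, where F^-1 is the inverse cumulative distribution function of the standard normal distribution, tpr is the fraction of positive documents containing the feature, and fpr is the fraction of negative documents containing it. A minimal sketch using Python's `statistics.NormalDist`; the clipping value `eps = 0.0005`, which keeps the inverse CDF finite when a rate is 0 or 1, is an assumption rather than something stated in this abstract.

```python
from statistics import NormalDist
from typing import Dict, List, Tuple

_STANDARD_NORMAL = NormalDist()  # mean 0, standard deviation 1


def bns_score(tp: int, pos: int, fp: int, neg: int, eps: float = 0.0005) -> float:
    """Bi-Normal Separation of one feature.

    tp: positive documents containing the feature, pos: all positive documents
    fp: negative documents containing the feature, neg: all negative documents
    """
    tpr = min(max(tp / pos, eps), 1 - eps)  # clip so inv_cdf stays finite
    fpr = min(max(fp / neg, eps), 1 - eps)
    return abs(_STANDARD_NORMAL.inv_cdf(tpr) - _STANDARD_NORMAL.inv_cdf(fpr))


def select_features(counts: Dict[str, Tuple[int, int]], pos: int, neg: int, k: int) -> List[str]:
    """Keep the k features with the highest BNS score; counts maps feature -> (tp, fp)."""
    ranked = sorted(counts, key=lambda f: bns_score(counts[f][0], pos, counts[f][1], neg), reverse=True)
    return ranked[:k]
```

Ranking the toy counts `{"refund": (80, 5), "the": (95, 96), "invoice": (30, 2)}` over 100 positive and 100 negative documents with `k=2` keeps `refund` and `invoice` and drops `the`, which occurs at nearly the same rate in both classes.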

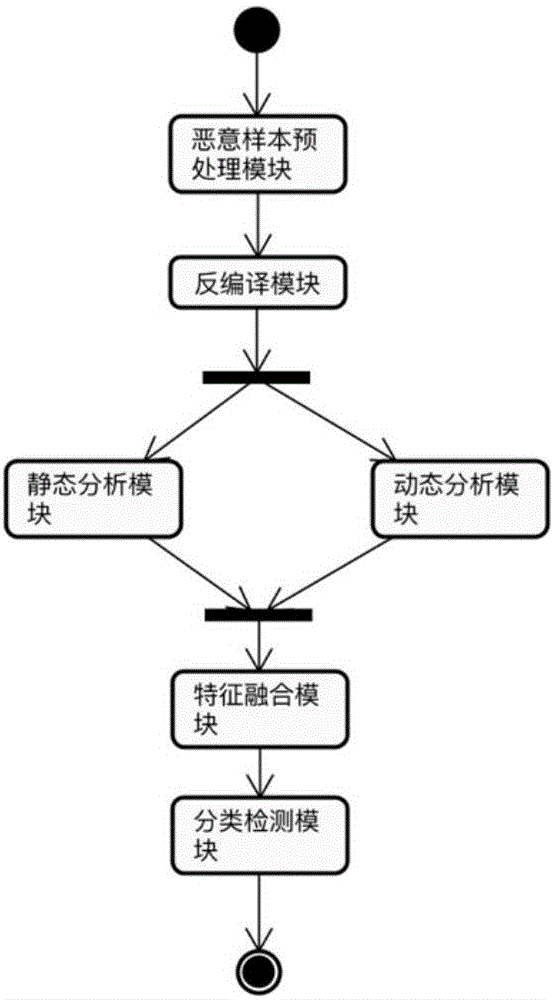

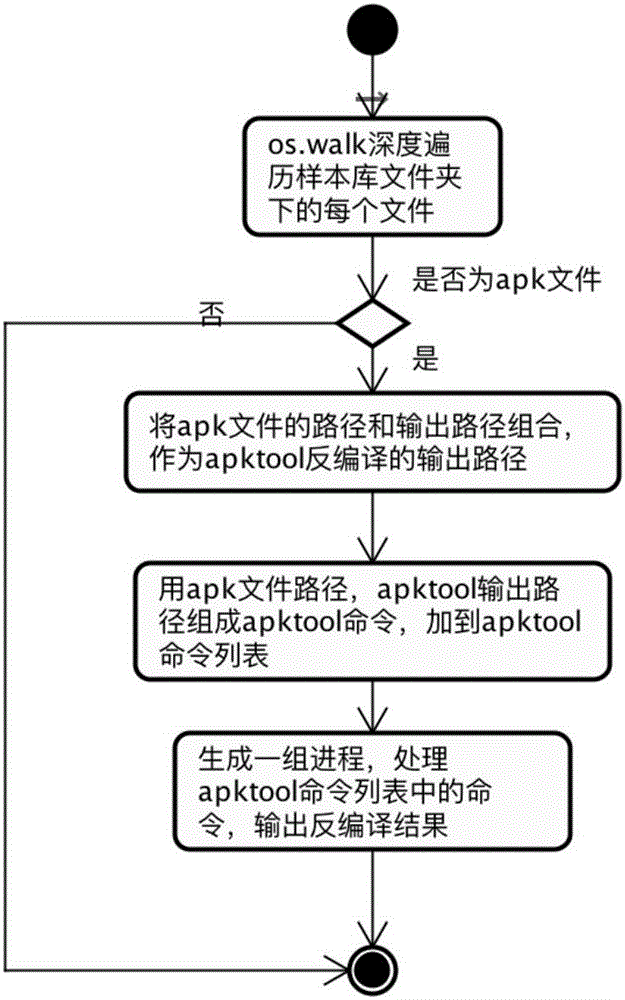

Android malicious application detection method and system based on multi-feature fusion

Active · CN107180192A · Improve detection accuracy, Reflect malicious apps, Character and pattern recognition, Platform integrity maintenance, Feature vector, Analytical problem

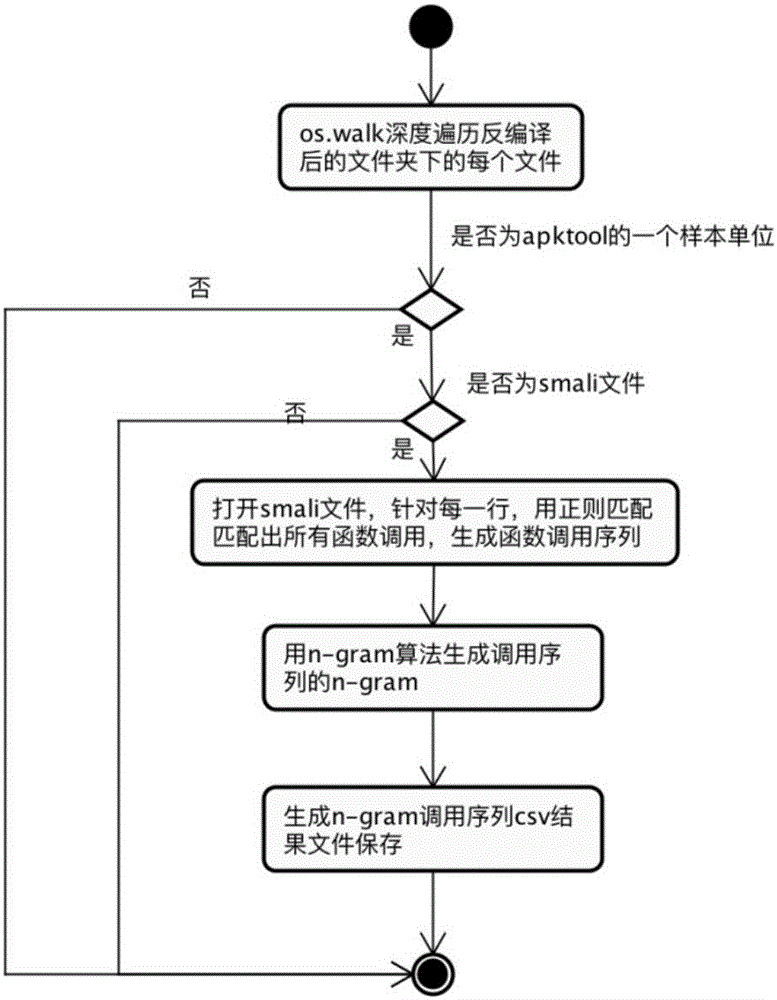

The invention discloses an Android malicious application detection method and system based on multi-feature fusion. The method comprises the following steps: decompiling an Android application sample to obtain a decompiled file; extracting static features from the decompiled file; running the Android application sample in an Android emulator to extract dynamic features; mapping the static and dynamic features with the text-hashing component of a locality-sensitive hashing algorithm into a low-dimensional feature space to obtain a fused feature vector; and training a classifier on the fused feature vectors with a machine learning classification algorithm, then using the classifier for classification and detection. The method addresses the problem of analyzing high-dimensional features for malicious code families with few samples, and improves detection accuracy.

Owner: BEIJING INSTITUTE OF TECHNOLOGY

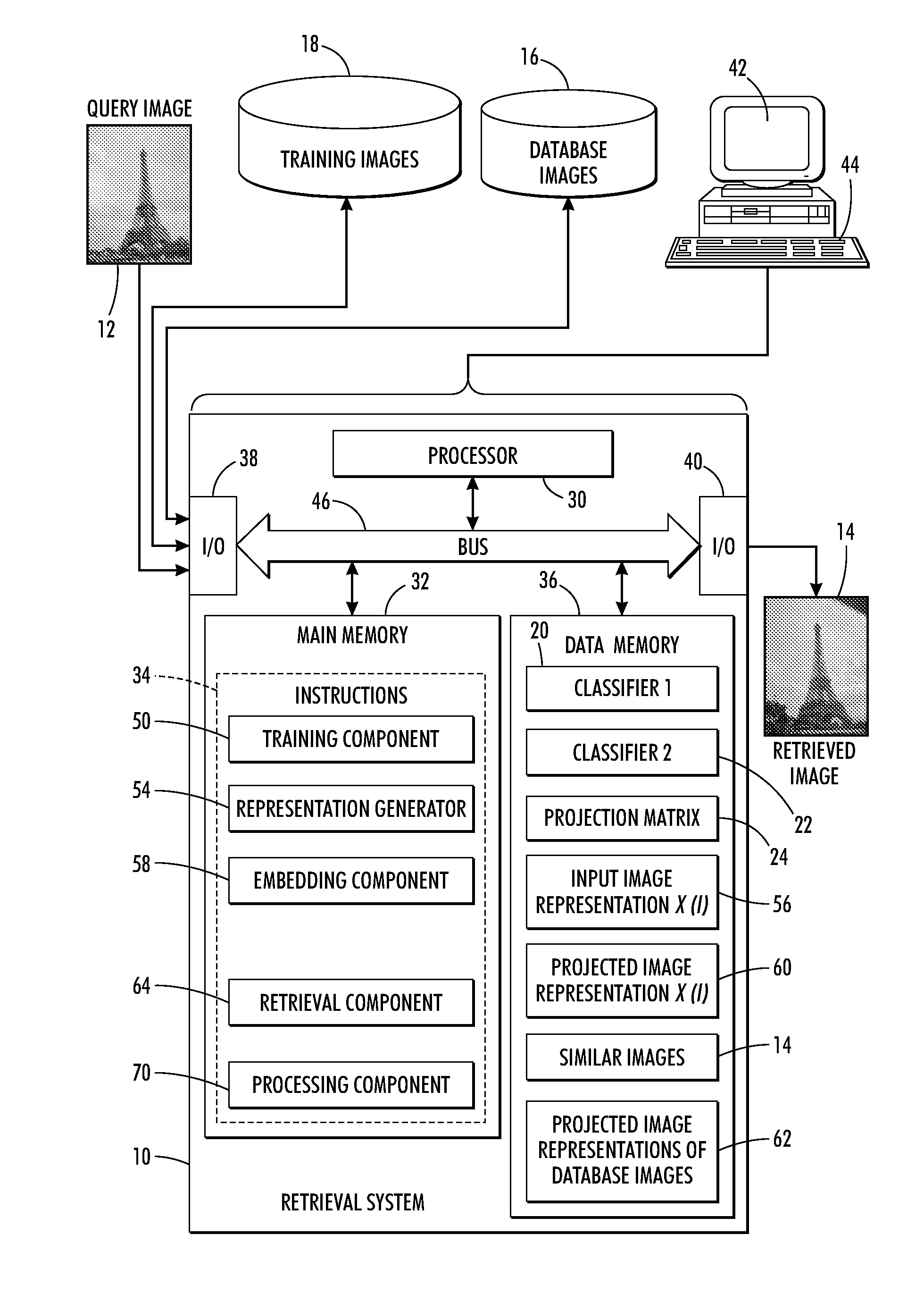

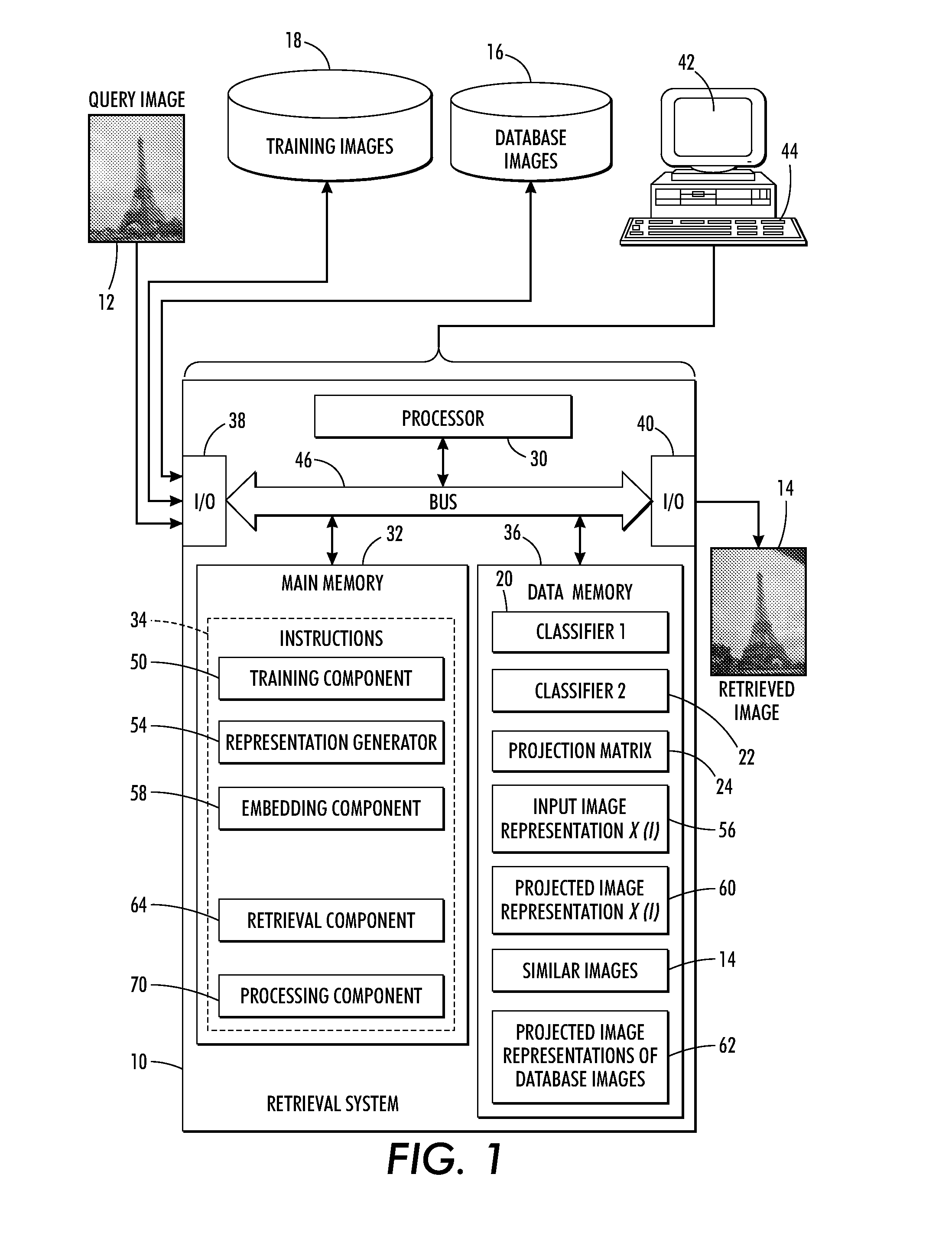

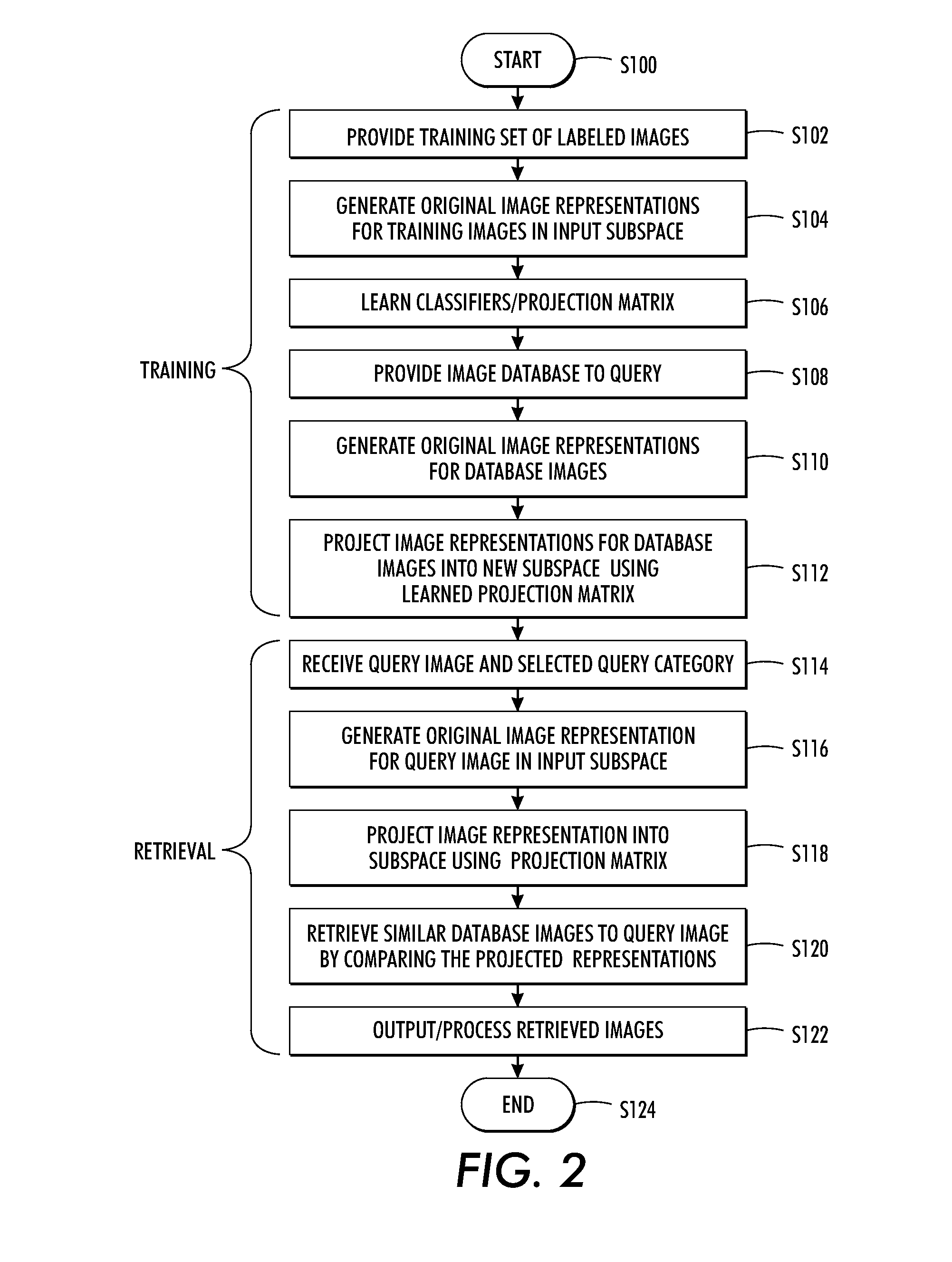

Retrieval system and method leveraging category-level labels

Inactive · US20130290222A1 · Digital data processing details, Digital computer details, Computer vision, Machine learning

An instance-level retrieval method and system are provided. A representation of a query image is embedded in a multi-dimensional space using a learned projection. The projection is learned using category-labeled training data to optimize a classification rate on the training data. The joint learning of the projection and the classifiers improves the computation of similarity / distance between images by embedding them in a subspace where the similarity computation outputs more accurate results. An input query image can thus be used to retrieve similar instances in a database by computing the comparison measure in the embedding space.

Owner: XEROX CORP

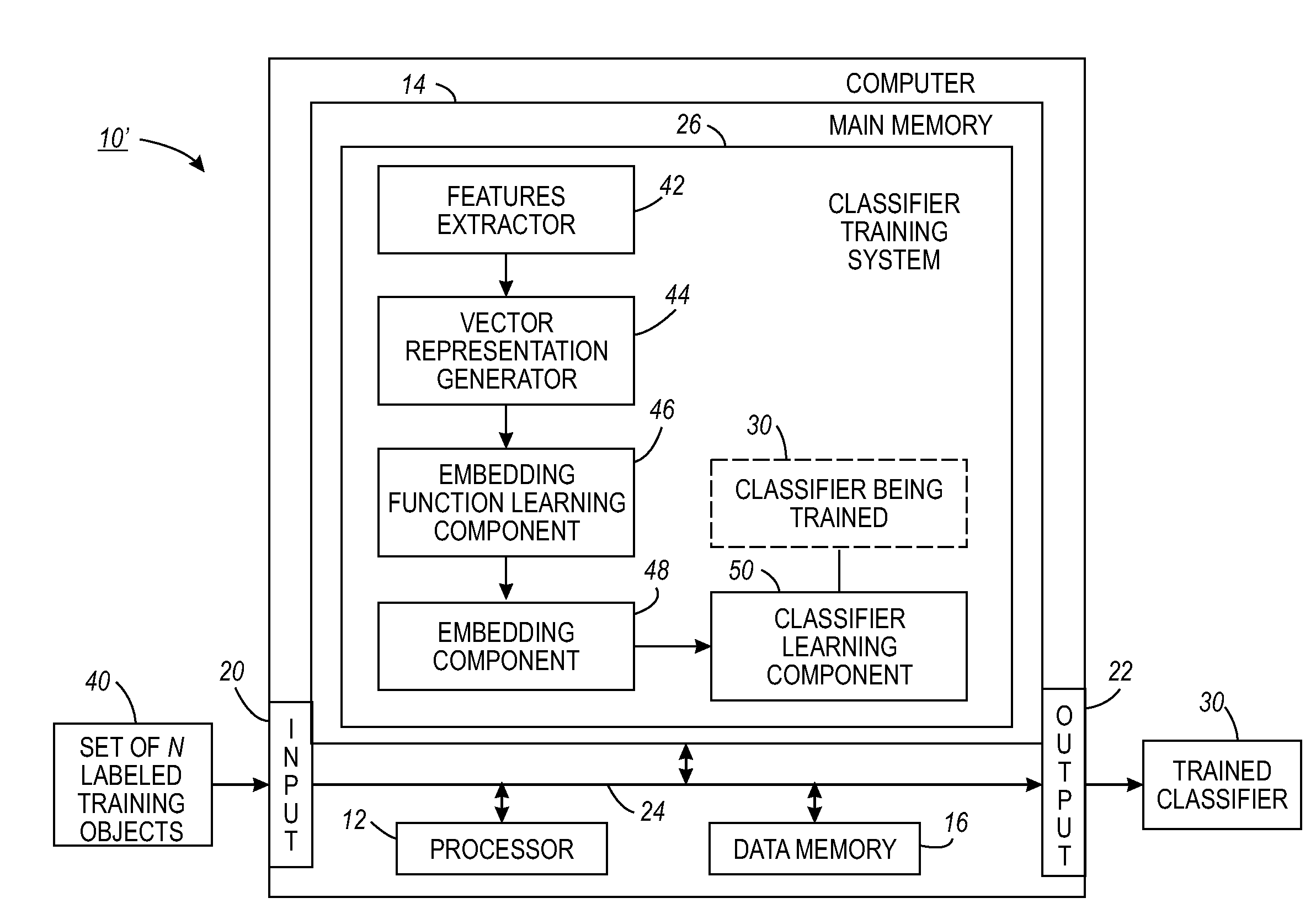

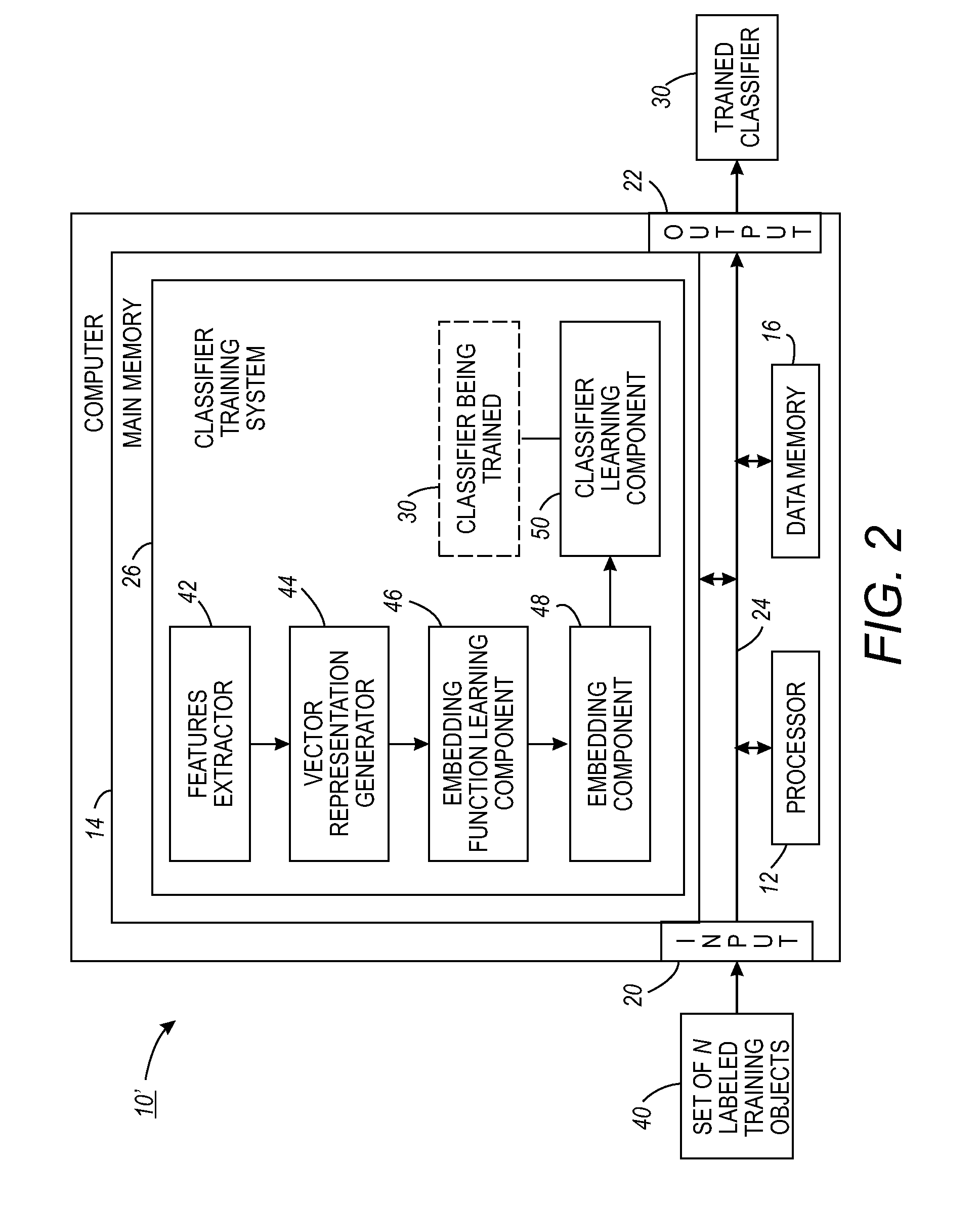

Training a classifier by dimension-wise embedding of training data

A classifier training method and apparatus for training, a linear classifier trained by the method, and its use, are disclosed. In training the linear classifier, signatures for a set of training samples, such as images, in the form of multi-dimension vectors in a first multi-dimensional space, are converted to a second multi-dimension space, of the same or higher dimensionality than the first multi-dimension space, by applying a set of embedding functions, one for each dimension of the vector space. A linear classifier is trained in the second multi-dimension space. The linear classifier can approximate the accuracy of a non-linear classifier in the original space when predicting labels for new samples, but with lower computation cost in the learning phase.

Owner: XEROX CORP
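
A minimal sketch of dimension-wise embedding, assuming piecewise-linear soft binning as the per-dimension embedding function and a plain perceptron as the linear classifier (the abstract prescribes neither). A one-dimensional problem whose positive class is |x| < 1 is not linearly separable in the original space, but becomes separable once each value is embedded into bin weights.

```python
from typing import List, Sequence, Tuple


def embed_dim(value: float, lo: float, hi: float, n_bins: int) -> List[float]:
    """Piecewise-linear soft binning: spread one scalar over two neighboring bins (weights sum to 1)."""
    v = min(max(value, lo), hi)                  # clip to the assumed value range
    pos = (v - lo) / (hi - lo) * (n_bins - 1)    # fractional bin position
    left = int(pos)
    frac = pos - left
    out = [0.0] * n_bins
    out[left] = 1.0 - frac
    if left + 1 < n_bins:
        out[left + 1] = frac
    return out


def embed(x: Sequence[float], ranges: Sequence[Tuple[float, float]], n_bins: int) -> List[float]:
    """Apply one embedding function per input dimension and concatenate the results."""
    z: List[float] = []
    for value, (lo, hi) in zip(x, ranges):
        z.extend(embed_dim(value, lo, hi, n_bins))
    return z


def train_perceptron(Z, y, epochs: int = 100):
    """Linear classifier trained in the embedded space; labels are -1 or +1."""
    w = [0.0] * len(Z[0])
    b = 0.0
    for _ in range(epochs):
        mistakes = 0
        for z, label in zip(Z, y):
            if label * (sum(wi * zi for wi, zi in zip(w, z)) + b) <= 0:
                w = [wi + label * zi for wi, zi in zip(w, z)]
                b += label
                mistakes += 1
        if mistakes == 0:  # stop once every training point is on the correct side
            break
    return w, b


def predict(w, b, z) -> int:
    return 1 if sum(wi * zi for wi, zi in zip(w, z)) + b > 0 else -1
```

The embedding is applied to each dimension independently, so its cost grows linearly with the input dimensionality, while the classifier trained on top of it stays linear.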

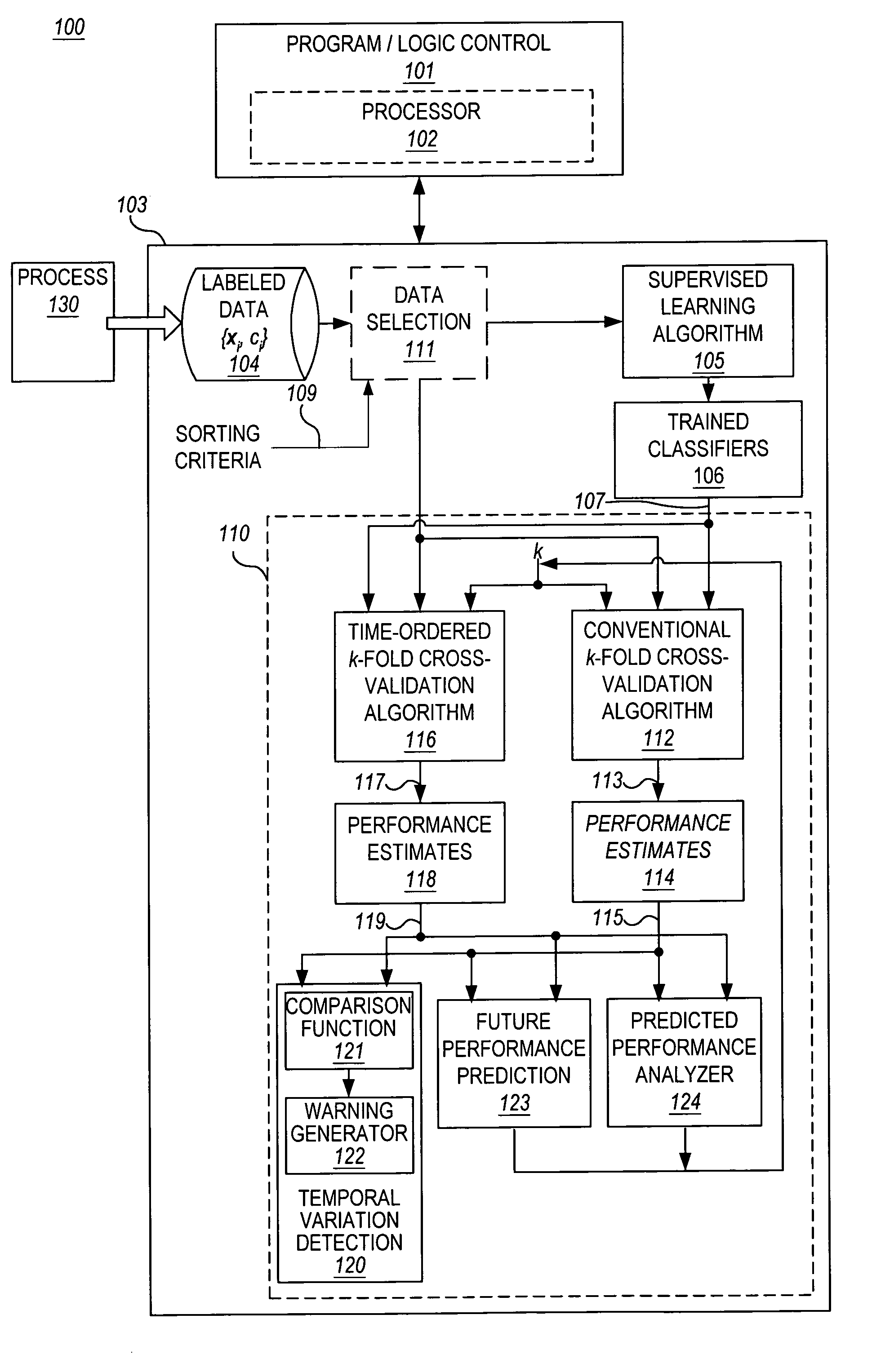

Methods and apparatus for detecting temporal process variation and for managing and predicting performance of automatic classifiers

Inactive · US20060074828A1 · Digital computer details, Character and pattern recognition, Data mining, Predicting performance

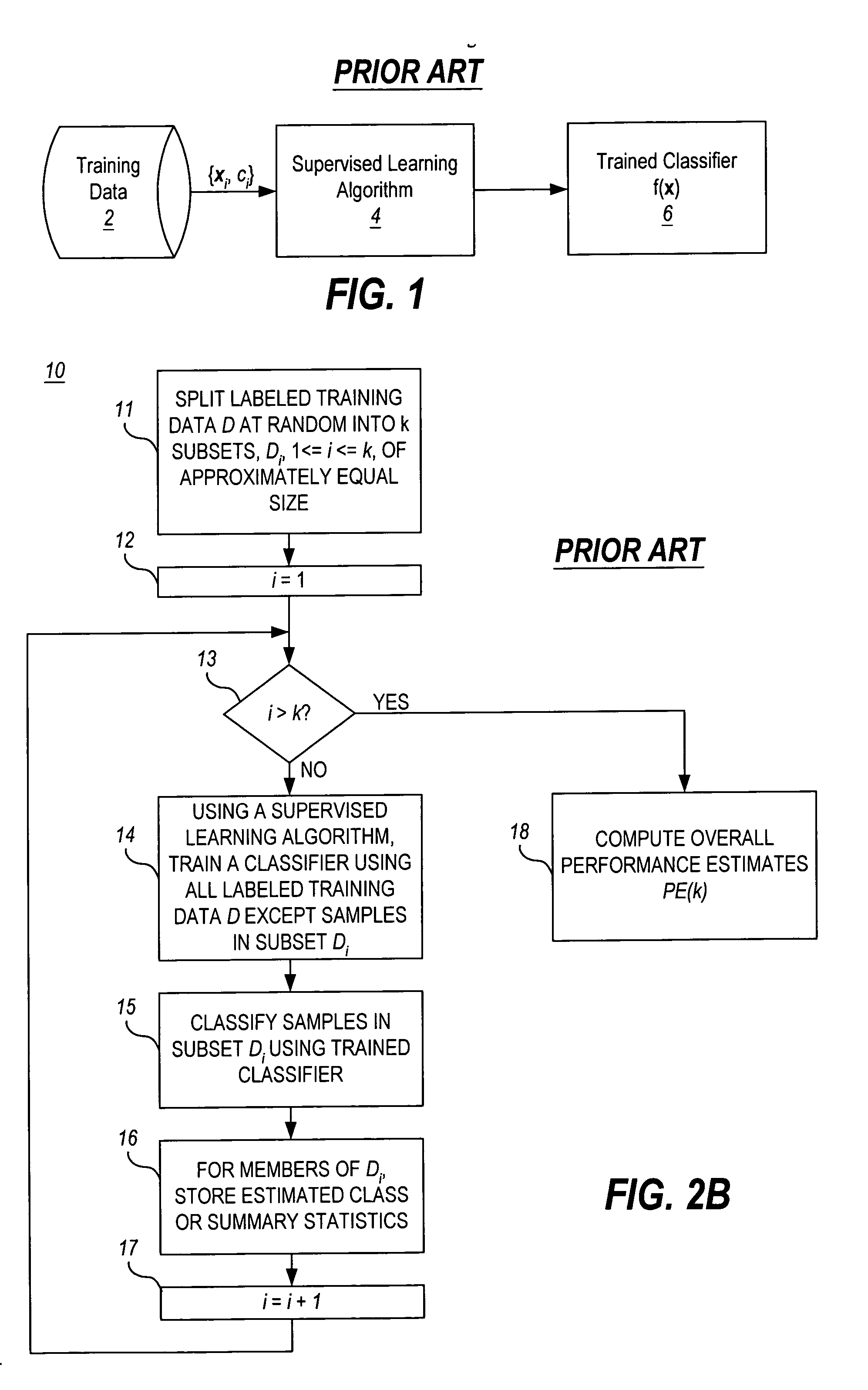

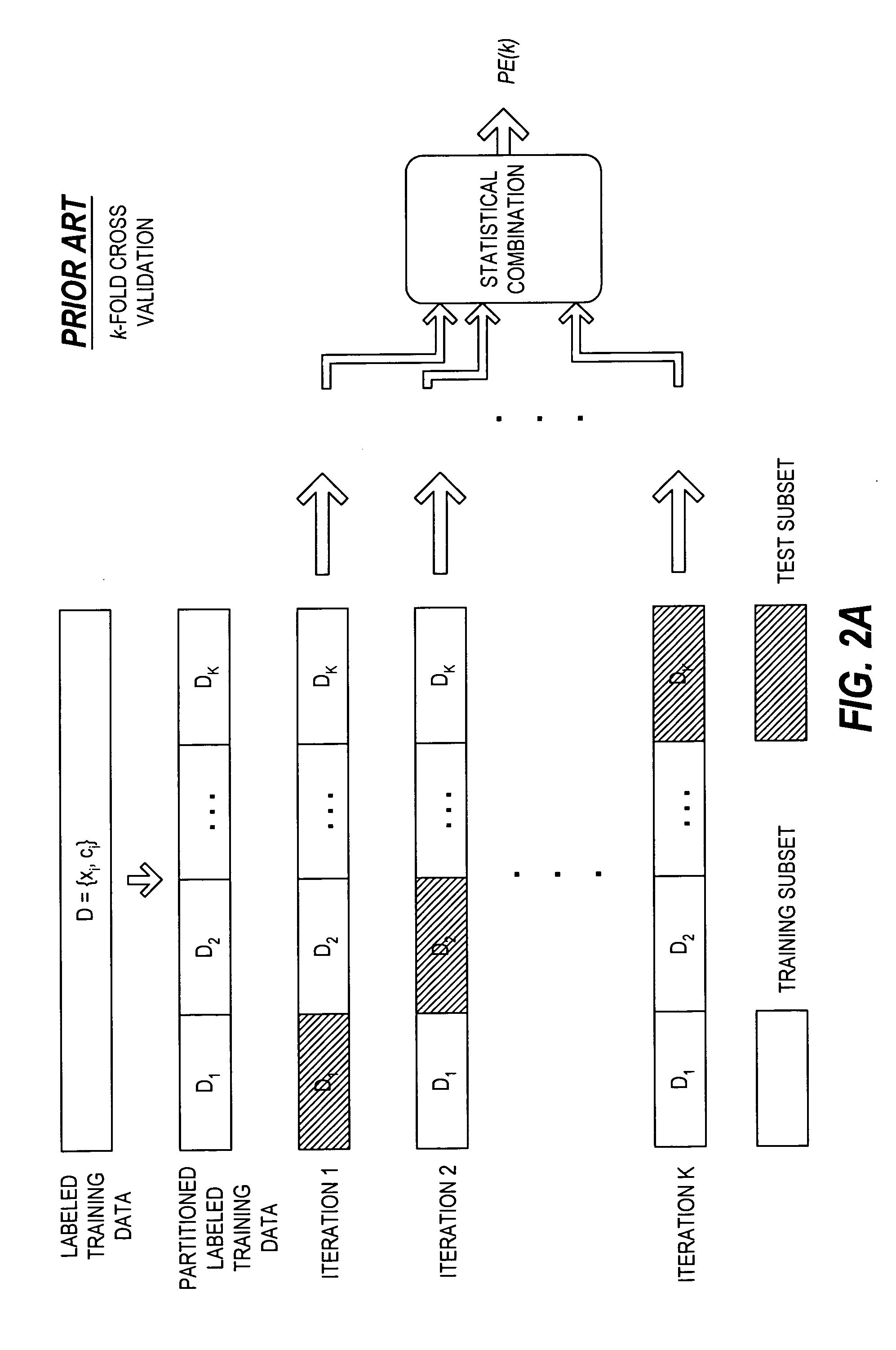

Techniques for detecting temporal process variation and for managing and predicting performance of automatic classifiers applied to such processes using performance estimates based on temporal ordering of the samples are presented.

Owner: AGILENT TECH INC

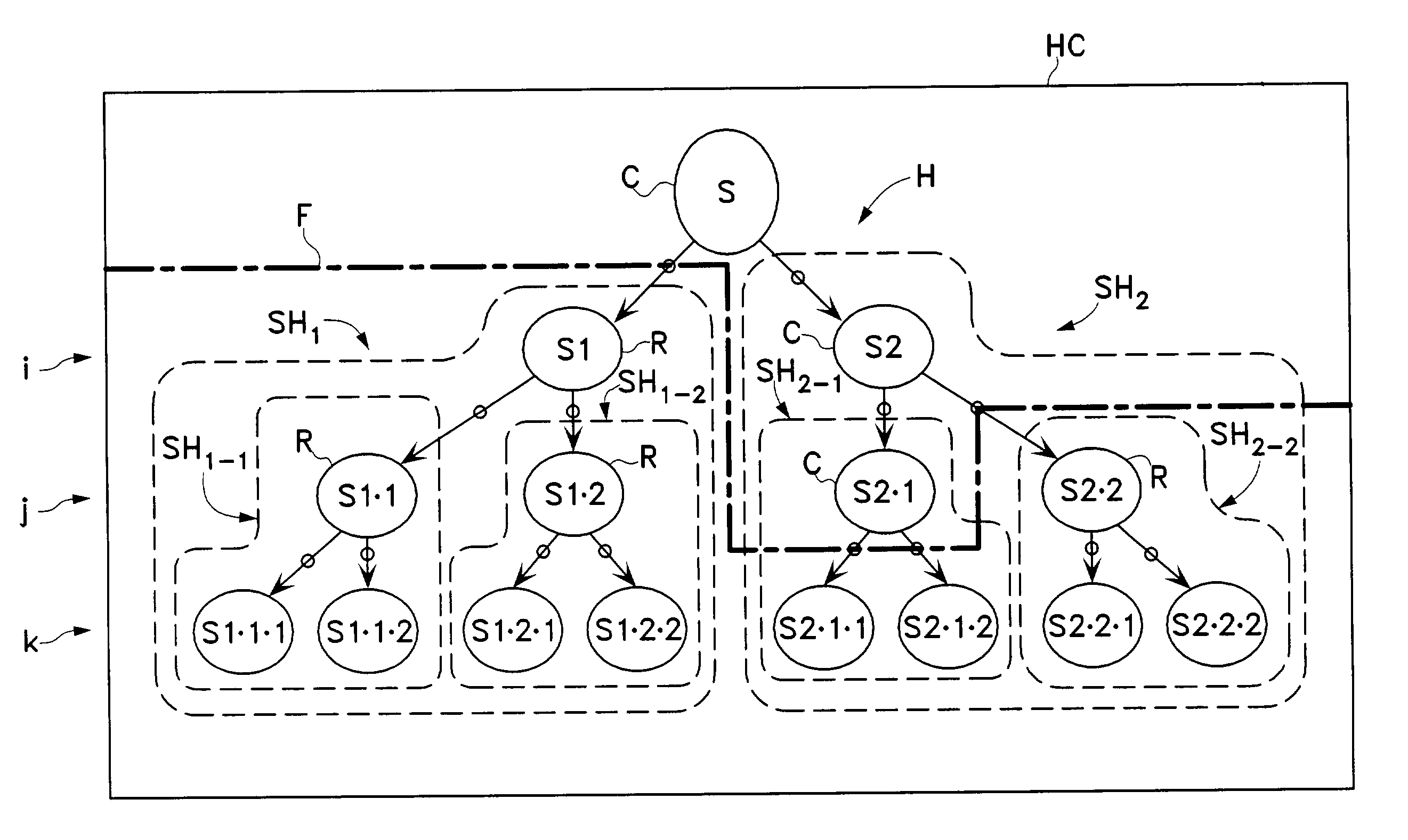

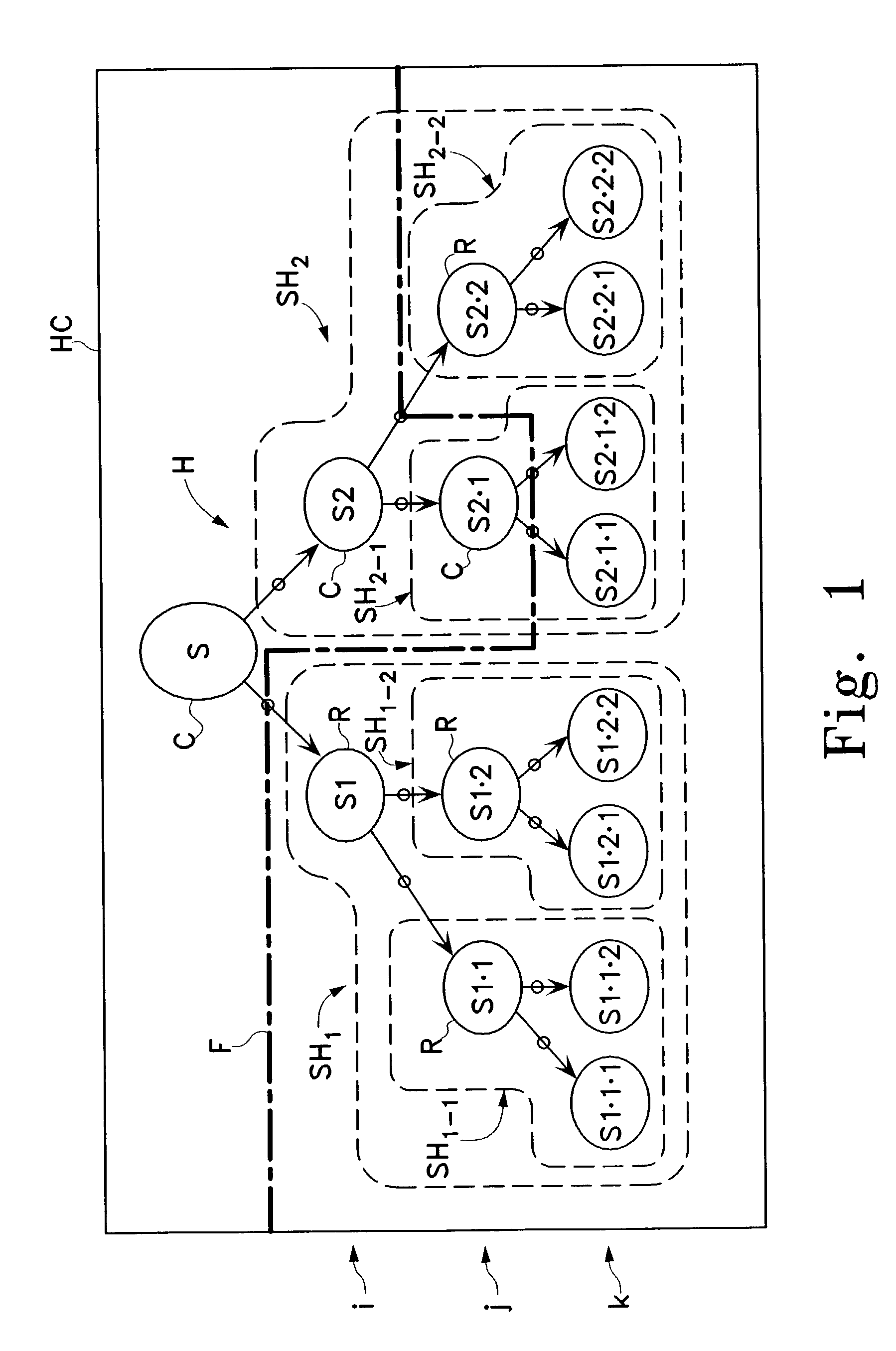

Hierarchical categorization method and system with automatic local selection of classifiers

Inactive · US7349917B2 · Sufficient data, Sufficient performance, Data processing applications, Digital data processing details, Classification rule, Local selection

Owner: HEWLETT-PACKARD ENTERPRISE DEV LP

Hierarchical categorization method and system with automatic local selection of classifiers

Inactive · US20040064464A1 · Solve the lack of training data, Sufficient performance, Data processing applications, Digital data processing details, Classification rule, Local selection

The present invention relates generally to the classification of items into categories, and more specifically to the automatic selection of different classifiers at different places within a hierarchy of categories. An exemplary hierarchical categorization method uses a hybrid of different classification technologies: training-data-based machine learning classifiers are preferably used in the portions of the hierarchy above a dynamically defined boundary, where adequate training data is available, and a priori classification rules that do not require any training data are used below that boundary. The resulting hybrid categorization technology can leverage the strengths of its components. In particular, it enables the use of human-authored rules in the finely divided portions toward the bottom of the hierarchy, which involve relatively close decisions for which it is not practical to create enough training data in advance to ensure accurate classification by known machine learning algorithms, while still allowing an eventual change-over to machine learning algorithms within the hierarchy as sufficient training data becomes available to ensure acceptable performance in a particular sub-portion of the hierarchy.

Owner: HEWLETT-PACKARD ENTERPRISE DEV LP
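
The local selection of classifiers can be sketched as a per-node choice between a learned classifier and a human-authored rule. The threshold `MIN_TRAINING_EXAMPLES` and the `Node` structure are hypothetical; the abstract describes a dynamically defined boundary but not how it is computed. The lambdas in the usage lines stand in for real classifiers and rules.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Optional

MIN_TRAINING_EXAMPLES = 50  # hypothetical boundary: below this, trust the rule instead


@dataclass
class Node:
    name: str
    children: List["Node"] = field(default_factory=list)
    n_training_examples: int = 0
    learned: Optional[Callable[[str], str]] = None  # trained classifier: item -> child name
    rule: Optional[Callable[[str], str]] = None     # human-authored rule: item -> child name

    def choose_child(self, item: str) -> str:
        """Local selection: use the learned classifier only where enough training data exists."""
        if self.learned is not None and self.n_training_examples >= MIN_TRAINING_EXAMPLES:
            return self.learned(item)
        if self.rule is None:
            raise ValueError(f"node {self.name!r} has neither enough training data nor a rule")
        return self.rule(item)


def categorize(root: Node, item: str) -> List[str]:
    """Walk from the root to a leaf, letting each node pick the next child."""
    path, node = [root.name], root
    while node.children:
        chosen = node.choose_child(item)
        node = next(child for child in node.children if child.name == chosen)
        path.append(node.name)
    return path


# Usage with stand-in decision functions (hypothetical categories and items).
electronics = Node(
    "electronics",
    [Node("cables"), Node("chargers")],
    n_training_examples=3,  # too little data: the rule below decides
    learned=lambda item: "cables",
    rule=lambda item: "chargers" if "charger" in item else "cables",
)
root = Node(
    "root",
    [electronics, Node("books")],
    n_training_examples=500,  # enough data: the learned classifier decides
    learned=lambda item: "books" if "novel" in item else "electronics",
)
print(categorize(root, "usb-c charger 65W"))  # ['root', 'electronics', 'chargers']
```

Raising `n_training_examples` for `electronics` past the boundary would switch that node to its learned classifier without touching the rest of the hierarchy.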

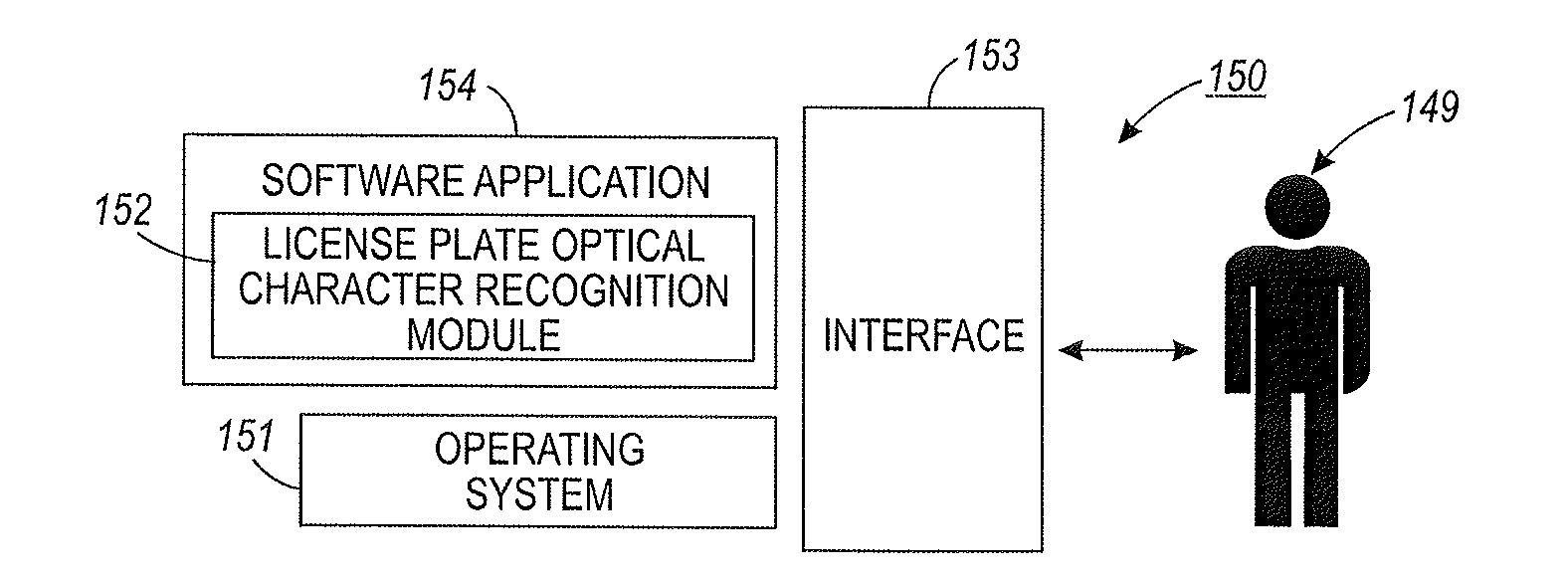

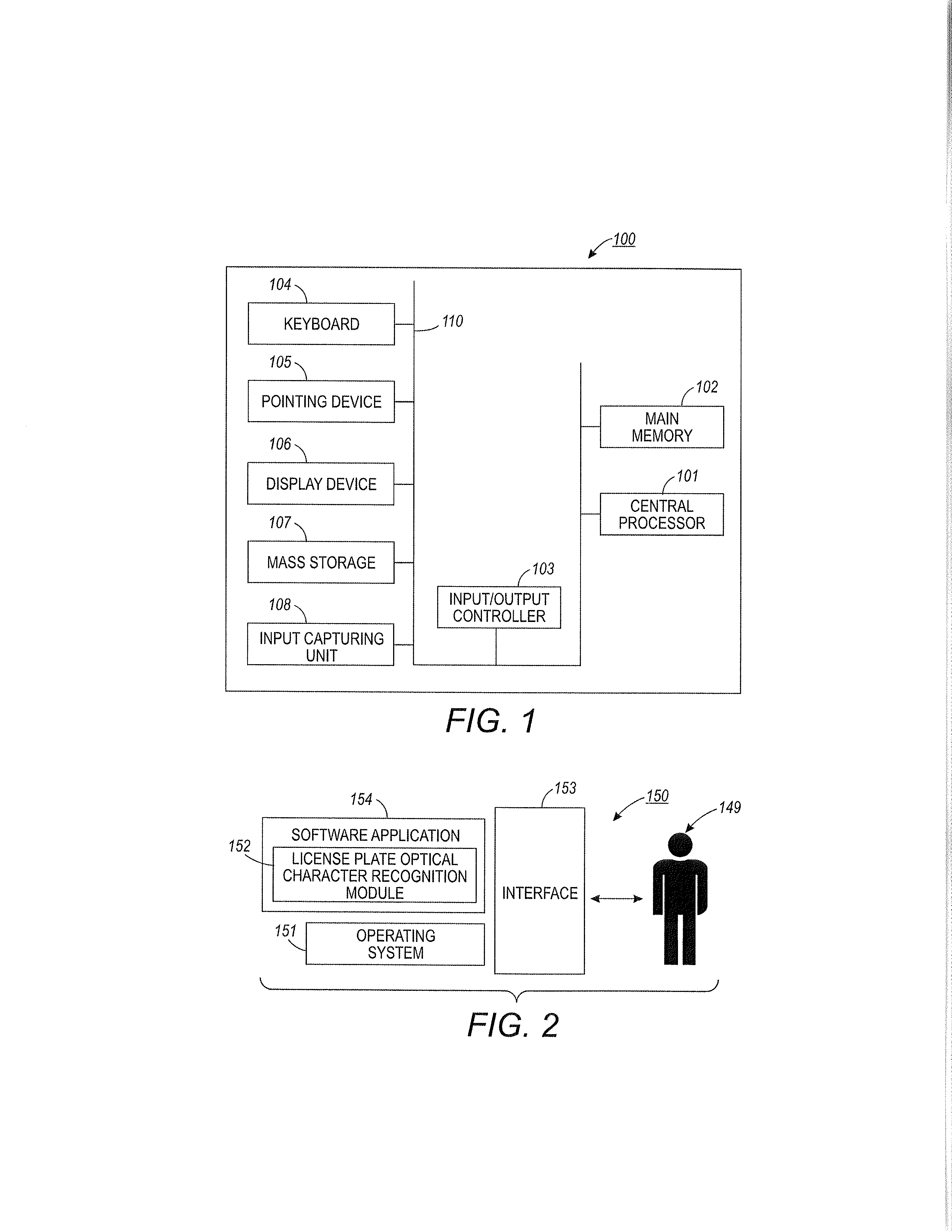

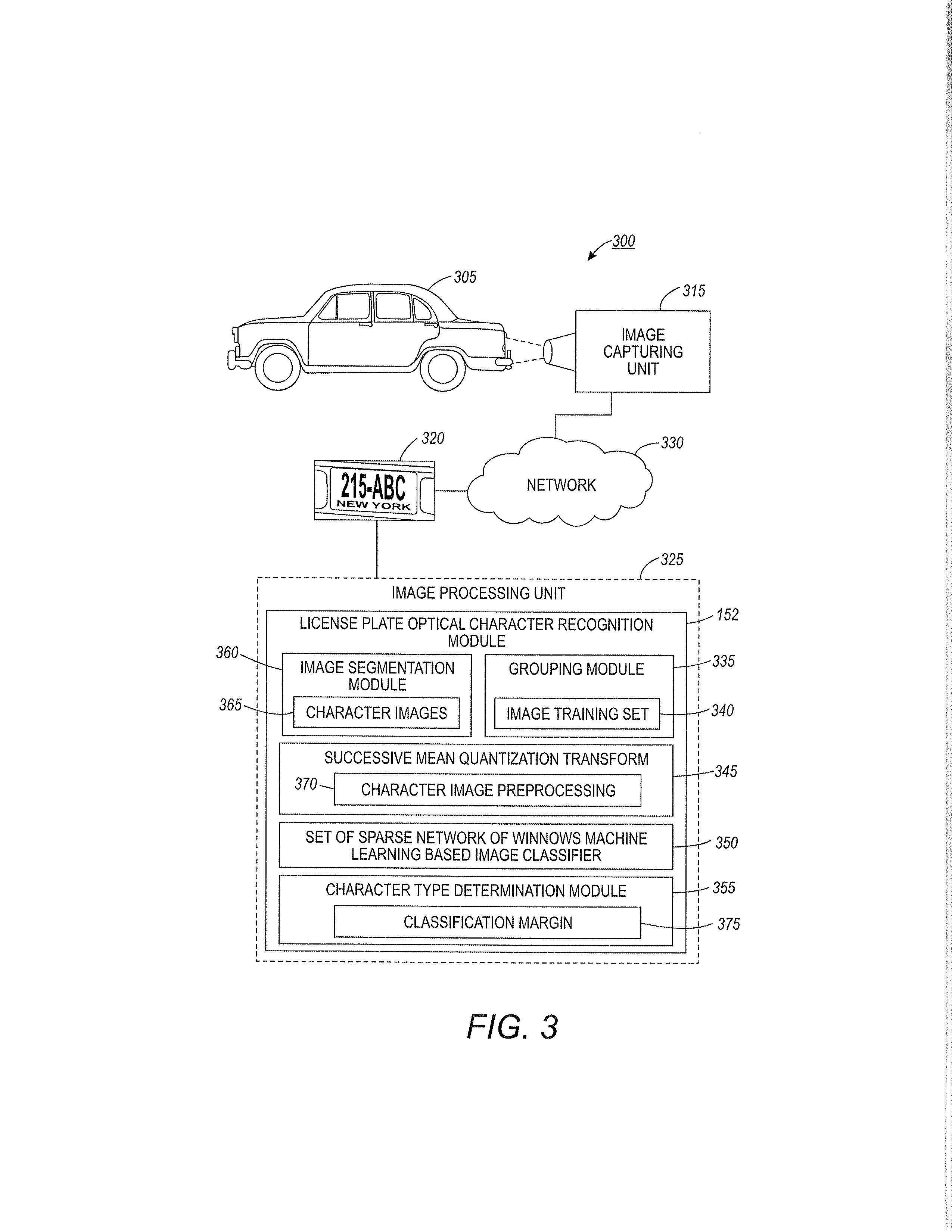

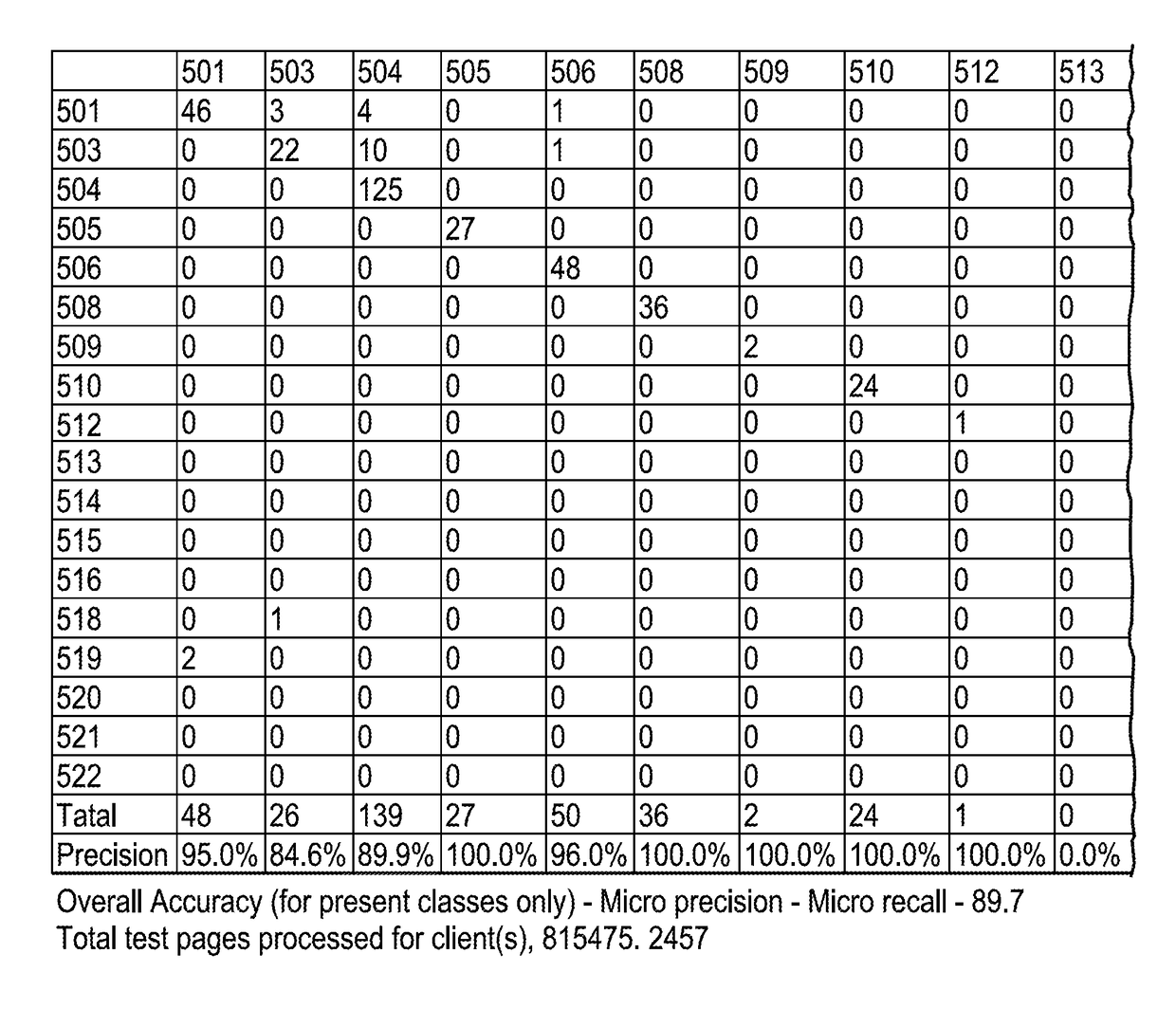

License plate optical character recognition method and system

Active · US20130182910A1 · Efficient management, Improve performance, Character recognition, Image segmentation, Optical character recognition

A method and system for recognizing a license plate character using a machine learning classifier. A license plate image of a vehicle can be captured by an image capturing unit and segmented into license plate character images. Each character image can be preprocessed to remove local background variation and to define a local feature using a quantization transformation. A classification margin can then be computed for the character image with each of a set of binary machine learning classifiers. Each binary classifier can be trained using one character's samples as the positive class and all other characters, as well as non-character images, as the negative class. The character type associated with the classifier having the largest classification margin is determined and declared as the OCR result.

Owner: CONDUENT BUSINESS SERVICES LLC
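
The one-versus-rest decision described above reduces to an arg-max over the margins of the binary classifiers. A minimal sketch, assuming linear classifiers whose margin is `w·x + b`; the quantized character features and the trained weights themselves are not shown and would come from the preprocessing and training steps.

```python
from typing import Dict, List, Optional, Sequence, Tuple

# (weights, bias) of one binary, linear "this character vs. everything else" classifier
Classifier = Tuple[Sequence[float], float]


def margin(x: Sequence[float], clf: Classifier) -> float:
    w, b = clf
    return sum(wi * xi for wi, xi in zip(w, x)) + b


def recognize(x: Sequence[float], classifiers: Dict[str, Classifier]) -> Tuple[str, float]:
    """Return the character whose binary classifier gives the largest margin, and that margin."""
    margins = {char: margin(x, clf) for char, clf in classifiers.items()}
    best = max(margins, key=margins.get)
    return best, margins[best]


def one_vs_rest_labels(labels: List[Optional[str]], target: str) -> List[int]:
    """Training labels for `target`'s classifier: +1 for that character, -1 for other
    characters and for non-character images (label None)."""
    return [1 if lab == target else -1 for lab in labels]
```

`one_vs_rest_labels` shows how each binary classifier's training set is labeled, with `None` marking a non-character image, as the abstract describes.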

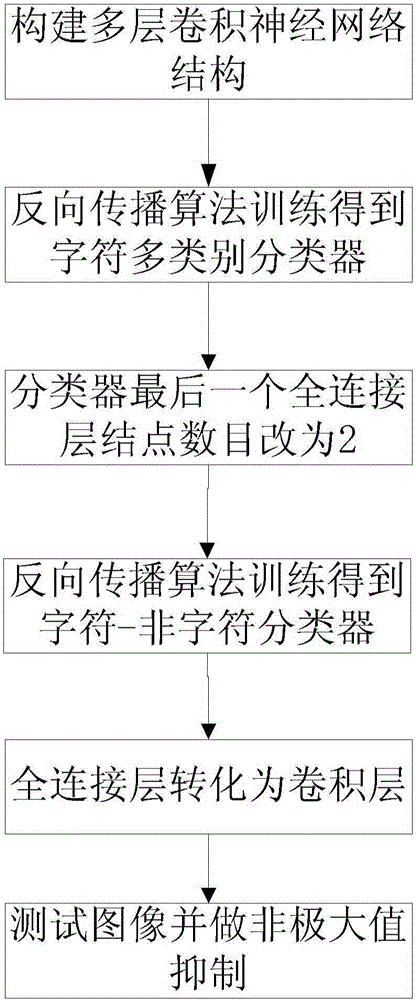

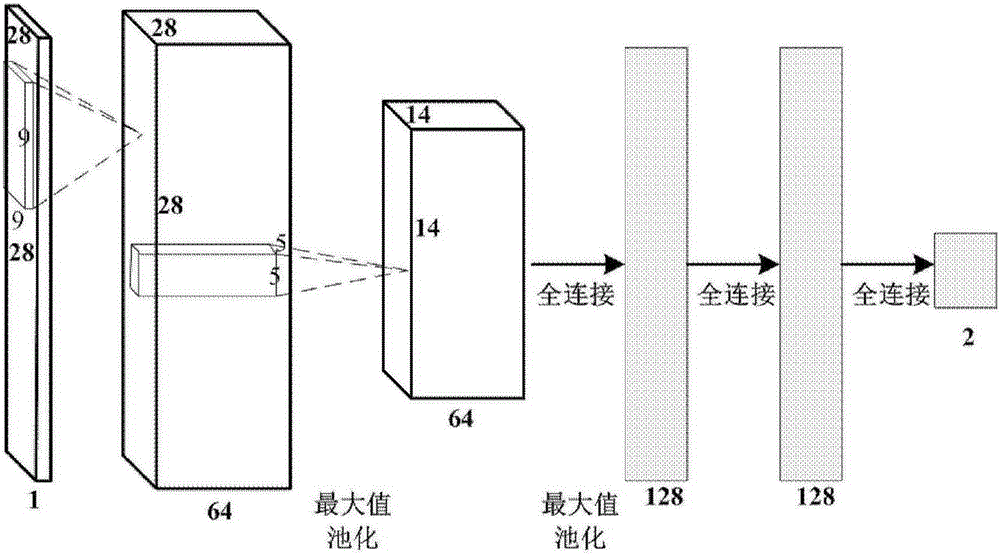

Character detection method and device based on deep learning

Active · CN105184312A · Find out quickly and efficiently, Robust, Character and pattern recognition, Neural learning methods, Feature extraction, Algorithm

The invention discloses a character detection method and device based on deep learning. The method comprises the following steps: designing a multilayer convolutional neural network structure in which each character is a class, thereby forming a multi-class classification problem; training the convolutional neural network with the back-propagation algorithm to recognize single characters; minimizing the network's objective function in a supervised manner to obtain a character recognition model; and finally, initializing the weights from the front-end feature extraction layers, changing the number of nodes in the last fully connected layer to two so that the network becomes a two-class classification model, and training it with character and non-character samples. Through these steps, a single character-detection classifier performs the whole task. During testing, the fully connected layers are converted into convolutional layers, the input image is scanned with a multi-scale sliding window to obtain a character probability map, and the final character regions are obtained through non-maximum suppression.

Owner: INST OF AUTOMATION CHINESE ACAD OF SCI +1
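
The final step, non-maximum suppression, keeps the highest-scoring window and discards overlapping windows with lower scores. A minimal greedy sketch, assuming windows are axis-aligned boxes `(x1, y1, x2, y2)` scored from the character probability map, and an intersection-over-union threshold of 0.5 (a common default, not a value from the patent):

```python
from typing import List, Sequence, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2) with x1 < x2 and y1 < y2


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def nms(boxes: Sequence[Box], scores: Sequence[float], iou_threshold: float = 0.5) -> List[int]:
    """Greedy NMS: visit boxes by descending score and keep a box only if it does not
    overlap any already-kept box by more than iou_threshold. Returns kept indices."""
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep: List[int] = []
    for i in order:
        if all(iou(boxes[i], boxes[j]) <= iou_threshold for j in keep):
            keep.append(i)
    return keep
```

For two heavily overlapping windows `(0, 0, 10, 10)` and `(1, 1, 11, 11)` (IoU about 0.68) plus a separate window `(20, 20, 30, 30)`, scored 0.9, 0.8 and 0.7, `nms` keeps indices 0 and 2.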

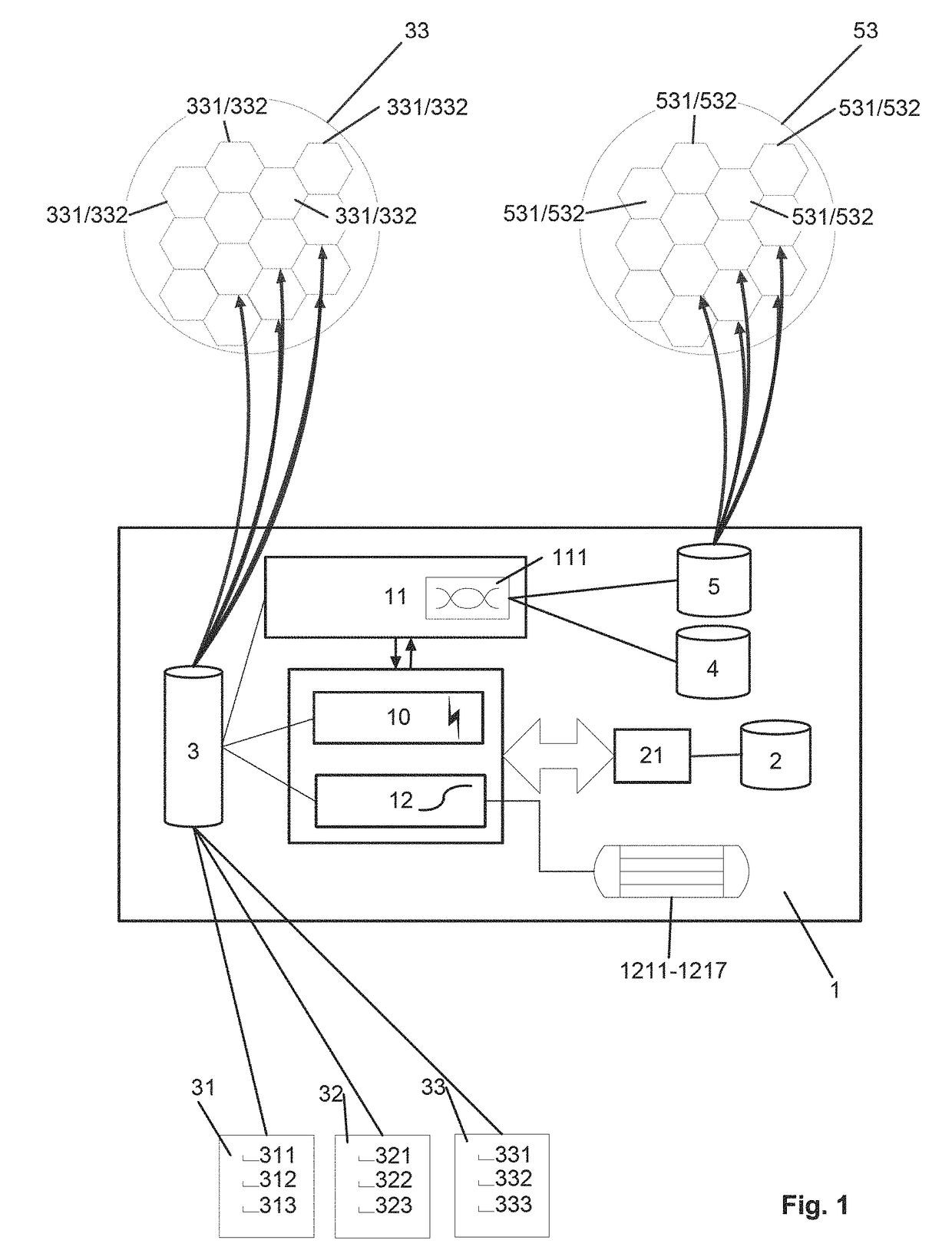

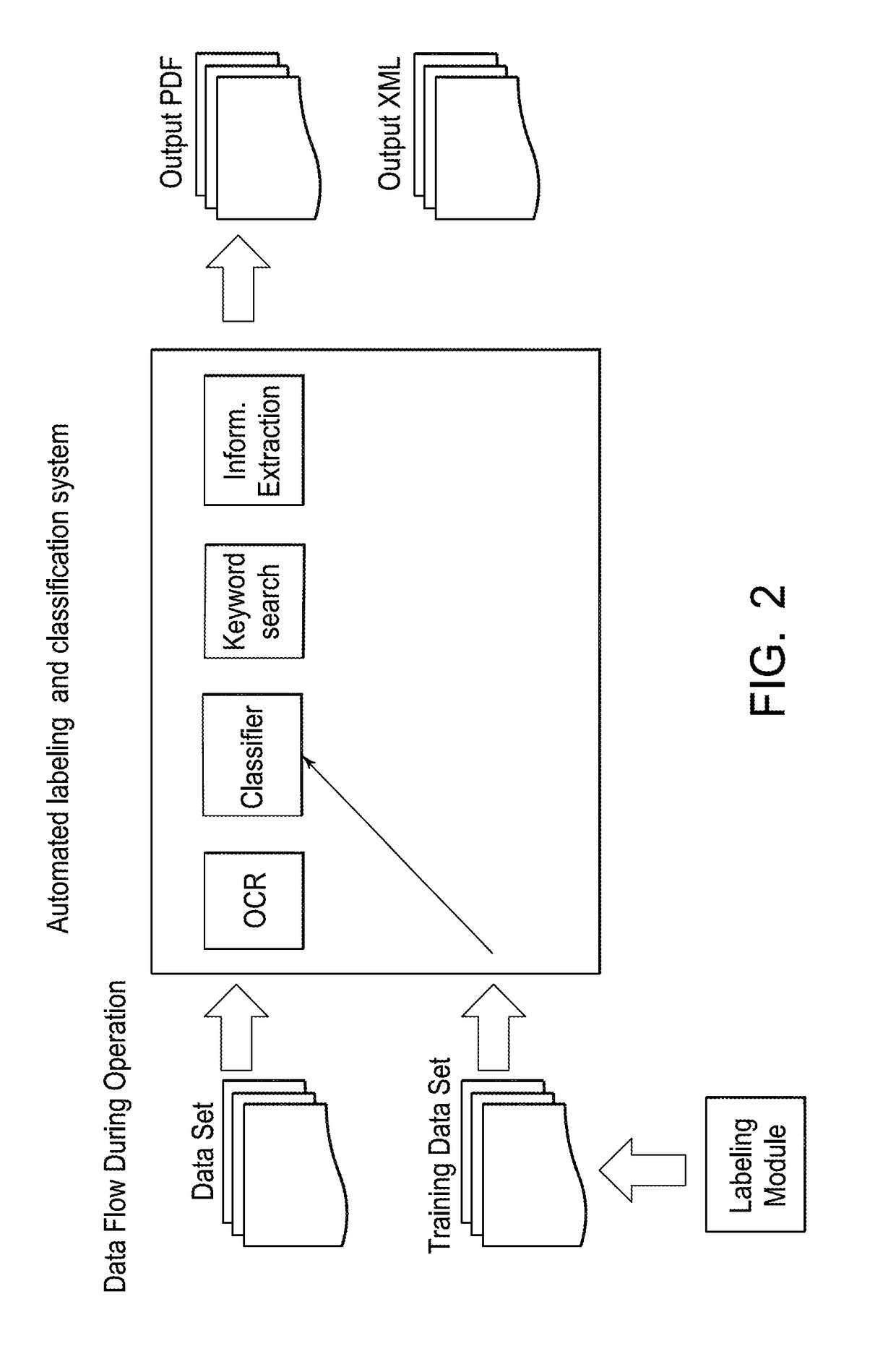

Data extraction engine for structured, semi-structured and unstructured data with automated labeling and classification of data patterns or data elements therein, and corresponding method thereof

Active · US20180114142A1 · Easy to implement, Novel level of efficiency, Kernel methods, Character and pattern recognition, Data ingestion, Feature vector

A fully or semi-automated, integrated learning, labeling and classification system and method provide a closed, self-sustaining pattern recognition, labeling and classification operation, in which unclassified data sets are selected and converted into an assembly of graphic and text data forming compound data sets to be classified. Using feature vectors, which can be generated automatically, a machine learning classifier is trained to improve the classification performance of the automated system when it is applied to unlabeled and unclassified data sets. Unclassified data sets are then classified automatically by applying the system's machine learning classifier to their compound data sets.

Owner: SWISS REINSURANCE CO LTD

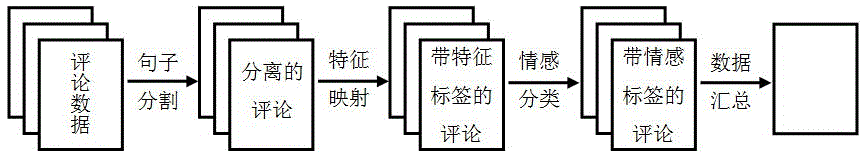

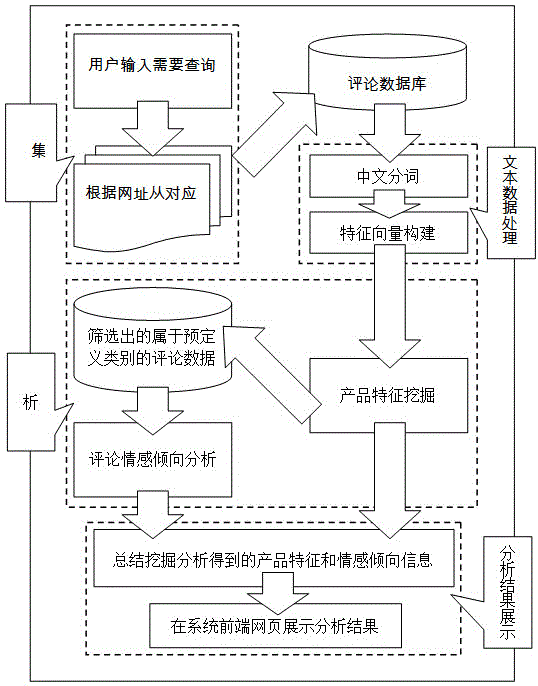

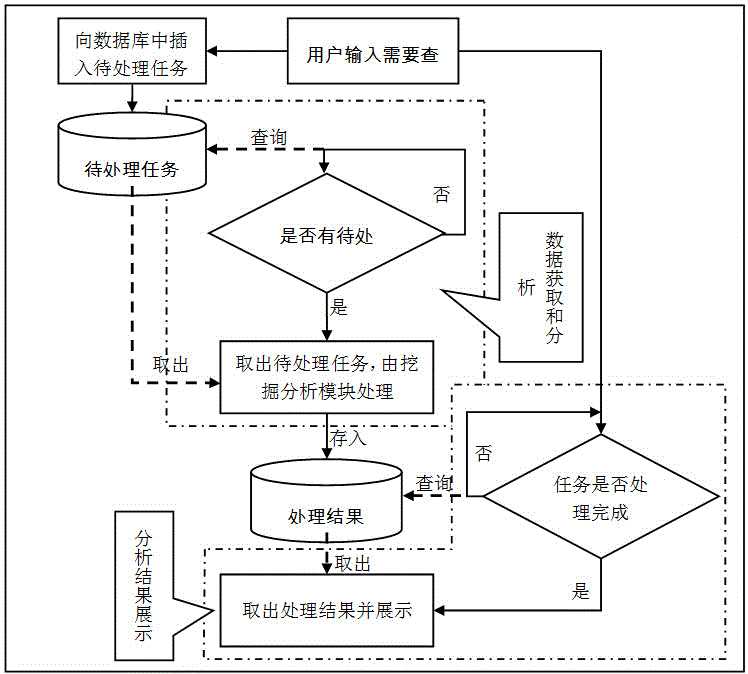

Product comment analyzing method and system with learning supervising function

Inactive · CN105550269A · Reduce precision, Meet the needs, Data mining, Special data processing applications, Data dredging, Multiple category

The invention belongs to the technical field of data mining and natural language processing, and specifically relates to a product review analysis method and system with a supervised learning function. In the method, multiple categories (product features) are defined manually for a specific product. First, collected user reviews are classified by product-feature aspect using a trained machine learning classifier; then sentiment analysis is performed on the classified review texts with a trained classifier; finally, by aggregating the product features and corresponding sentiment tendencies across a large number of reviews, a quantitative assessment of each product feature is summarized for the user. The method and system are efficient, fast, simple and convenient; the classified content is all of concern to users; the analysis results are presented to the user clearly and visually; and users no longer need to read through large numbers of reviews.

Owner: FUDAN UNIV

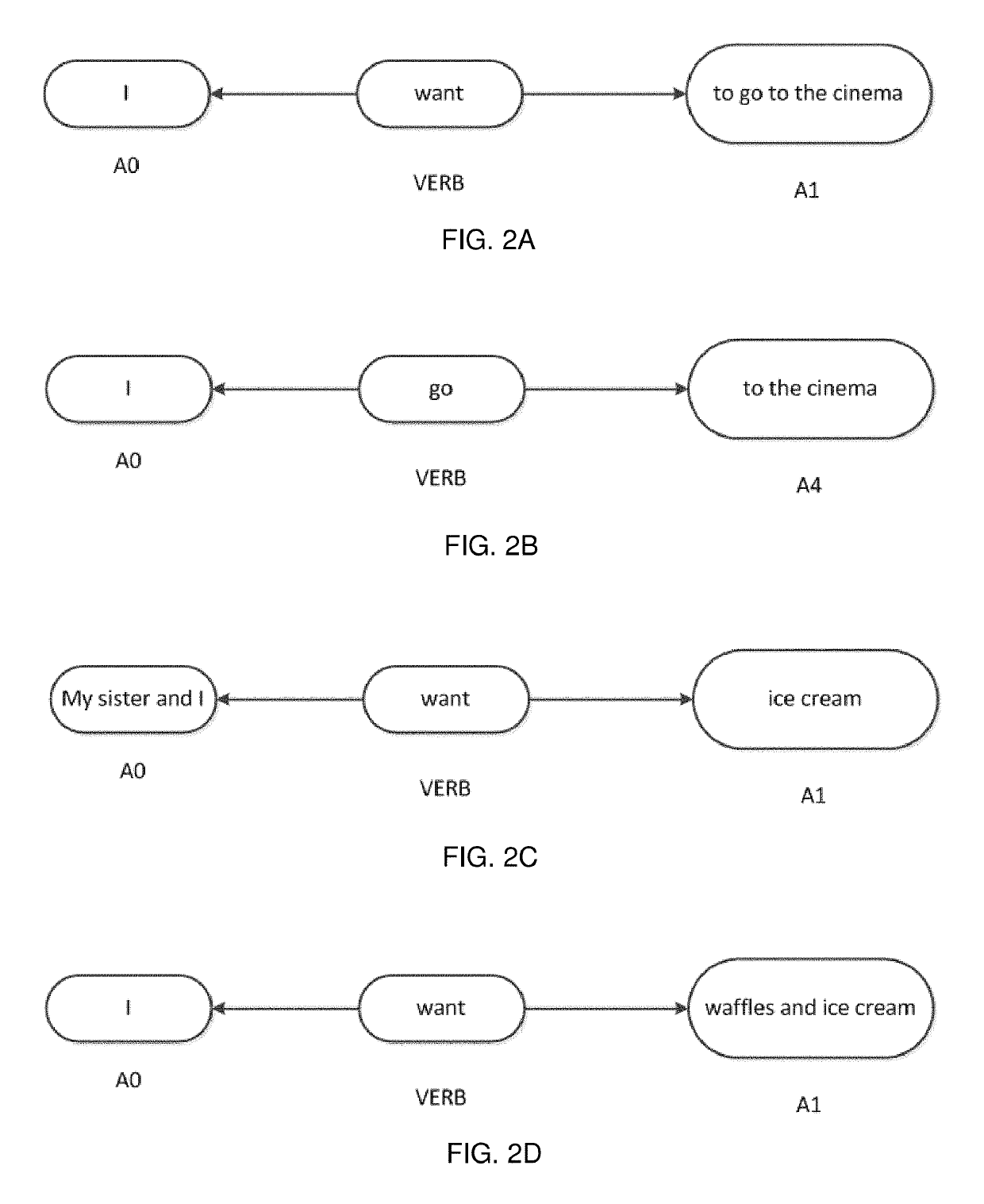

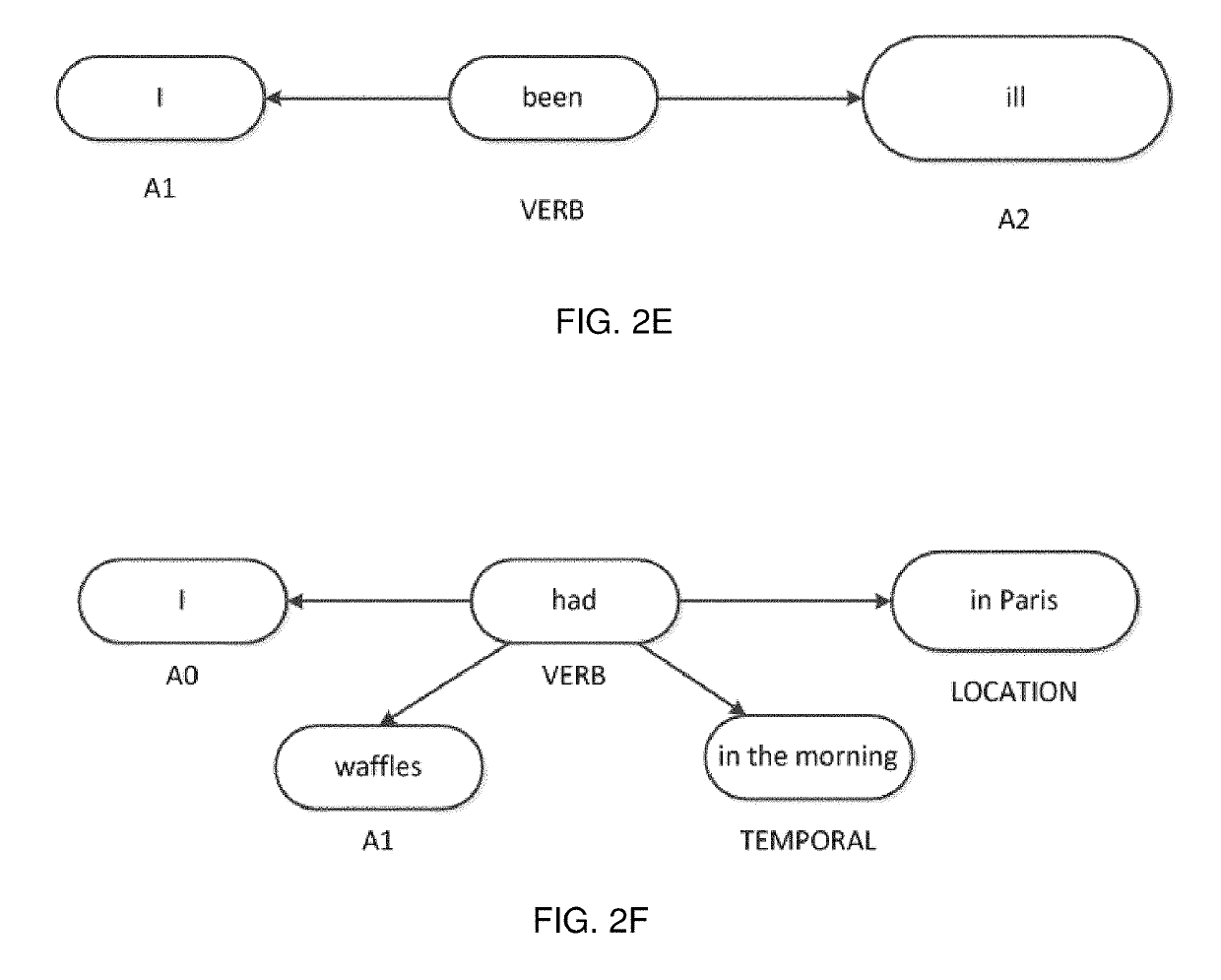

Semantic graph traversal for recognition of inferred clauses within natural language inputs

Active · US10387575B1 · Flexible and effective and computationally efficient, Efficient work, Semantic analysis, Special data processing applications, Graph traversal, Algorithm

Embodiments described herein provide a more flexible, effective, and computationally efficient means for determining multiple intents within a natural language input. Some methods rely on specifically trained machine learning classifiers to determine multiple intents within a natural language input. These classifiers require a large amount of labelled training data in order to work effectively, and are generally only applicable to determining specific types of intents (e.g., a specifically selected set of potential inputs). In contrast, the embodiments described herein avoid the use of specifically trained classifiers by determining inferred clauses from a semantic graph of the input. This allows the methods described herein to function more efficiently and over a wider variety of potential inputs.

Owner: BABYLON PARTNERS

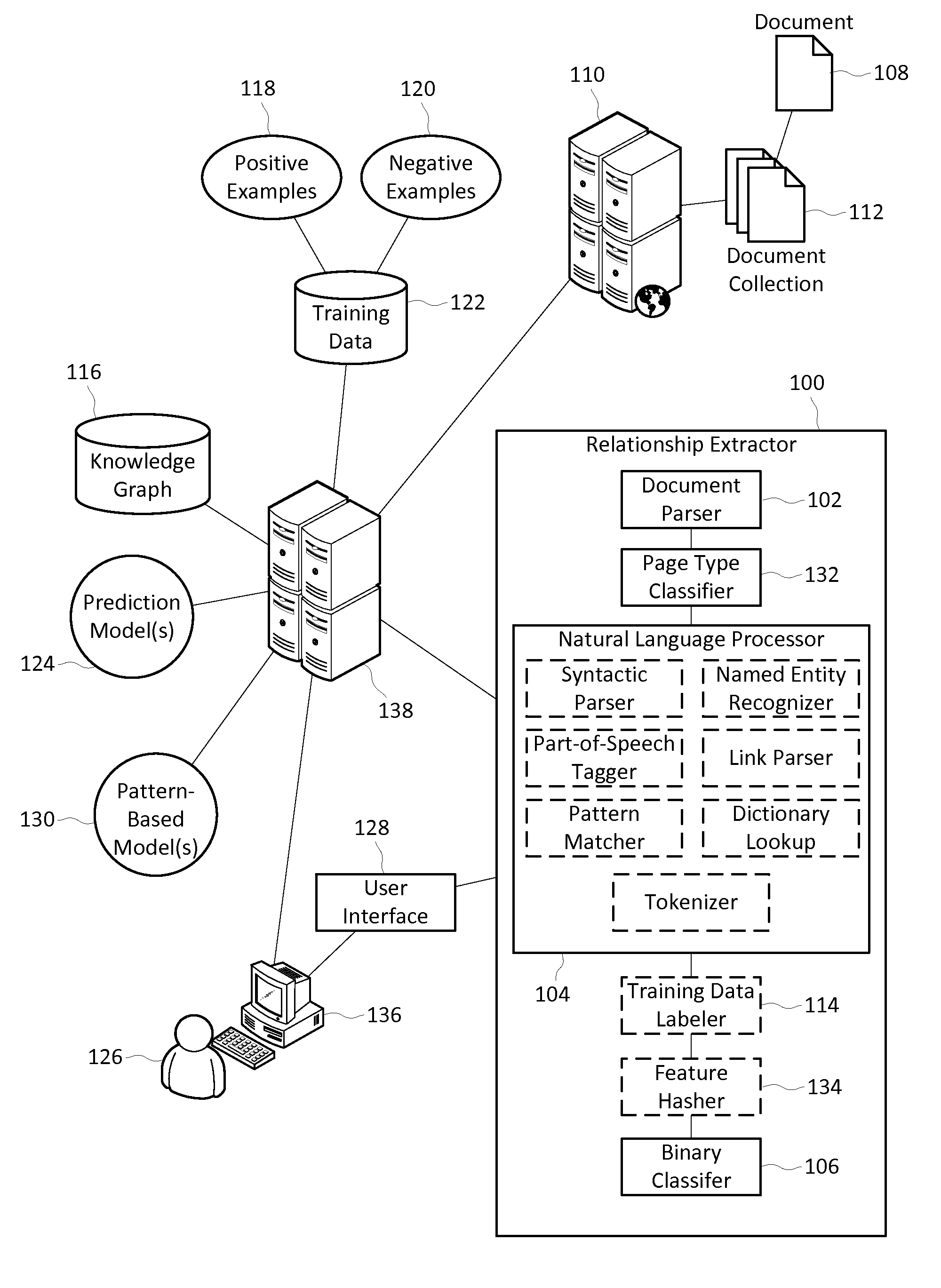

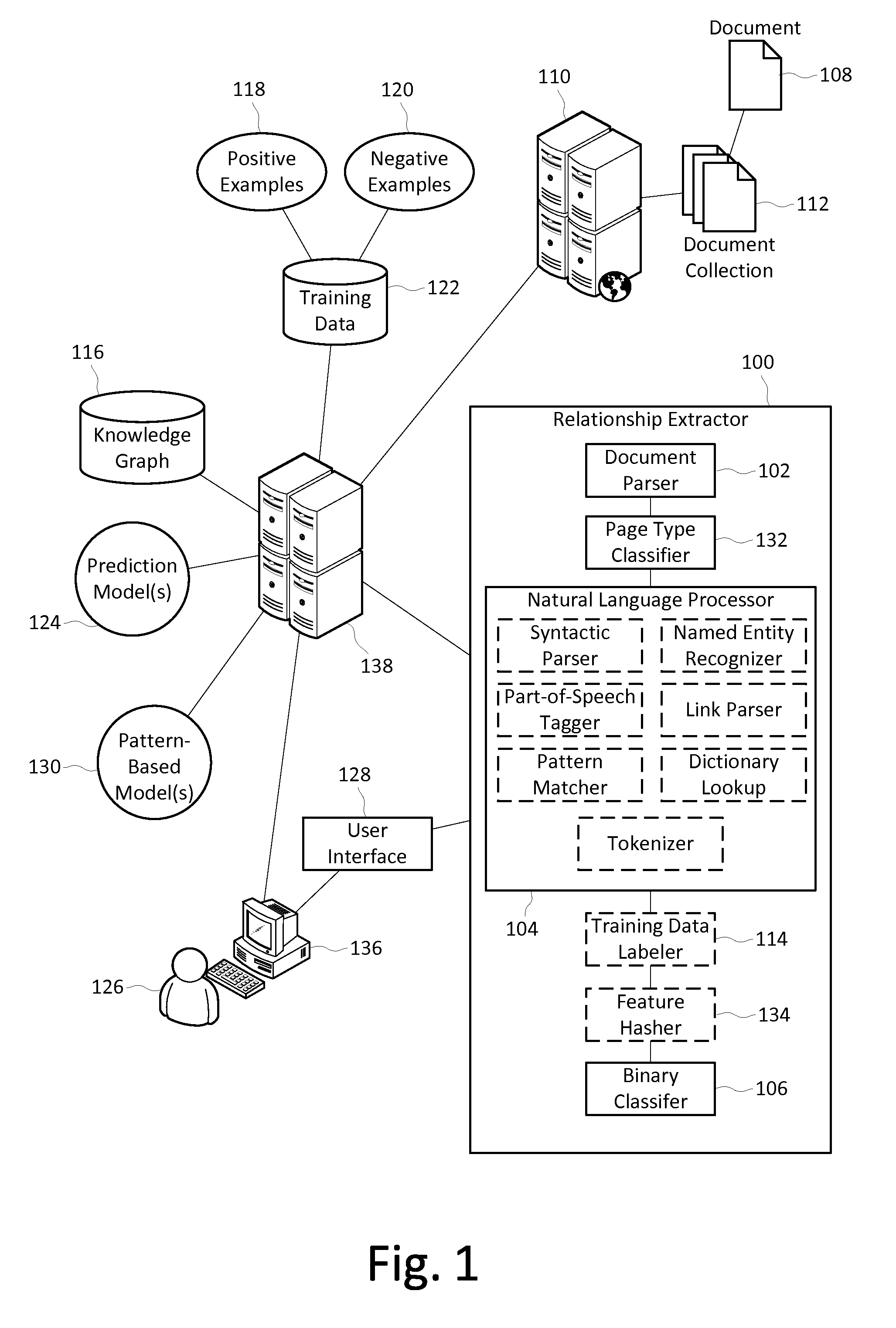

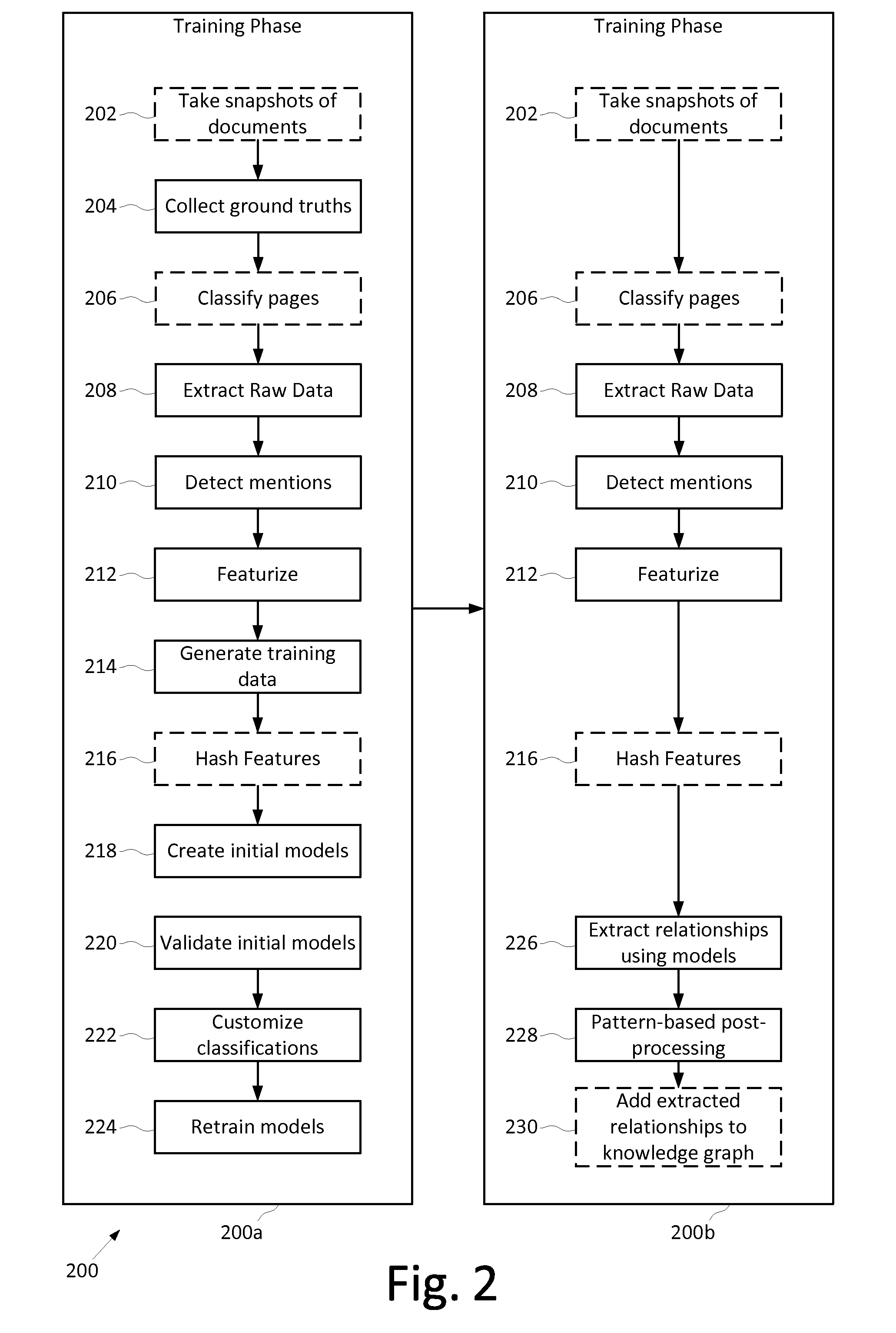

High-precision limited supervision relationship extractor

Inactive · US20160098645A1 · Improve recall, Improve accuracy, Digital computer details, Natural language data processing, Ground truth, Entity type

Owner: MICROSOFT TECH LICENSING LLC

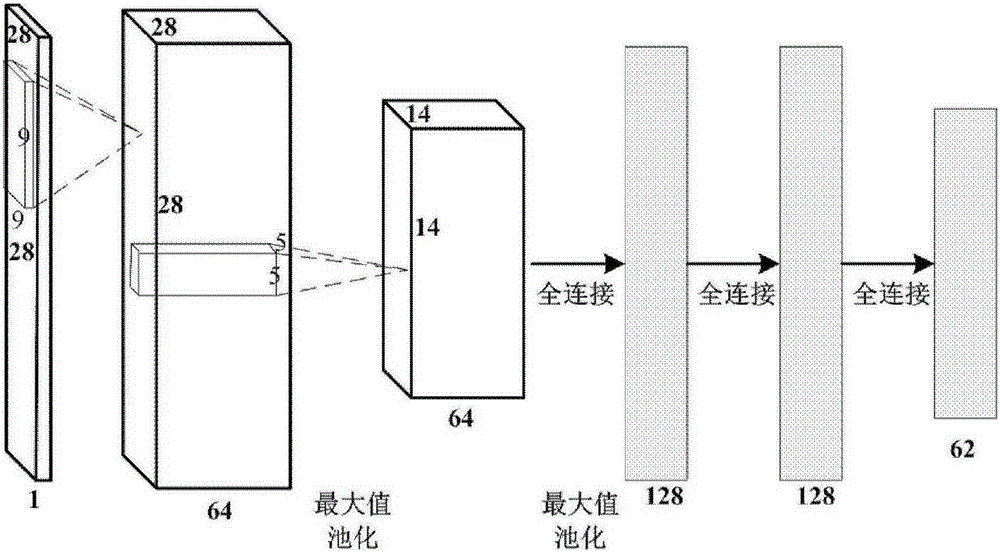

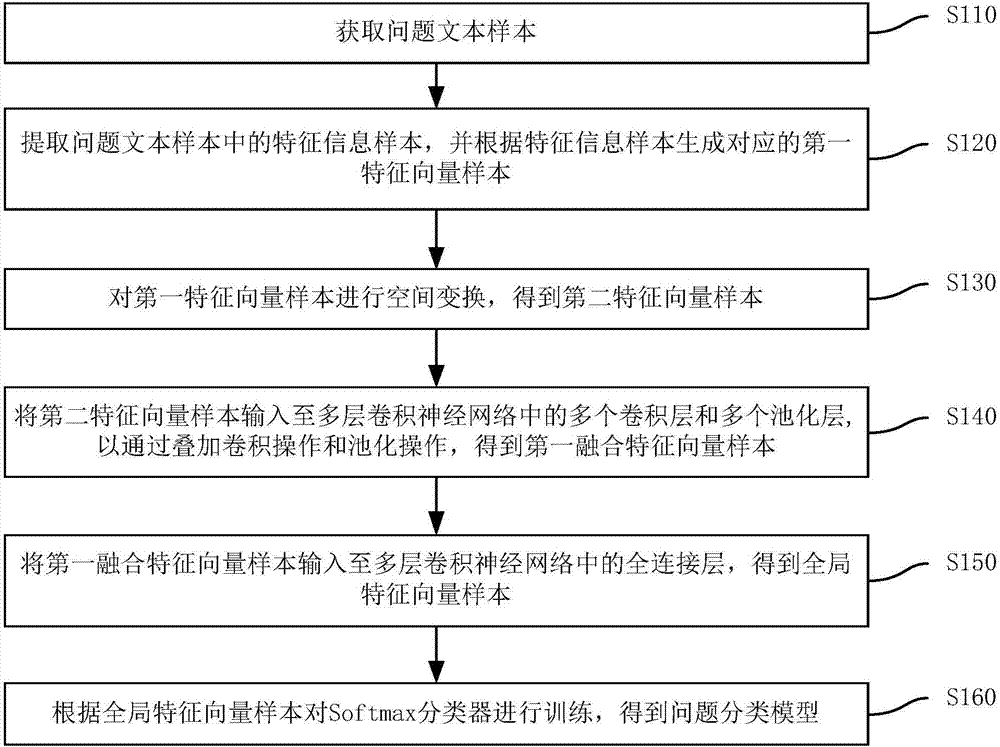

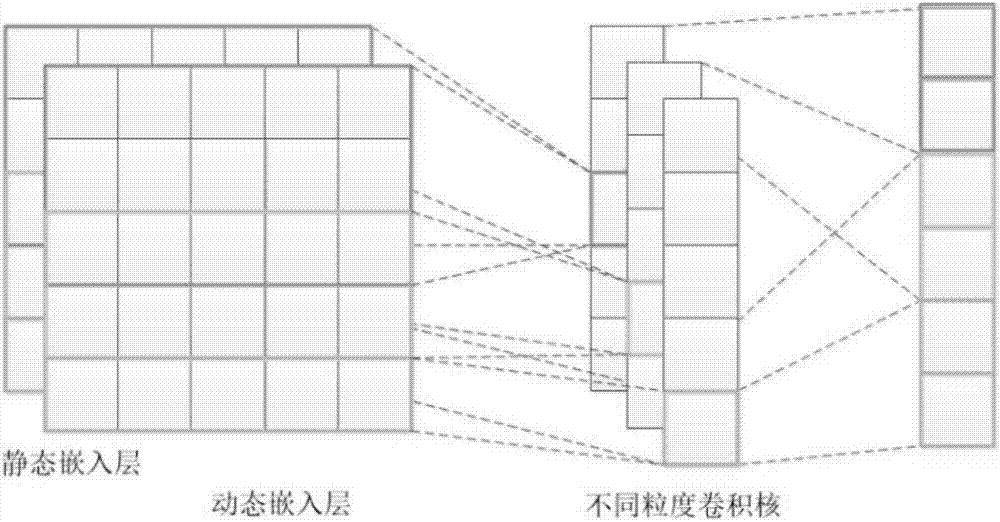

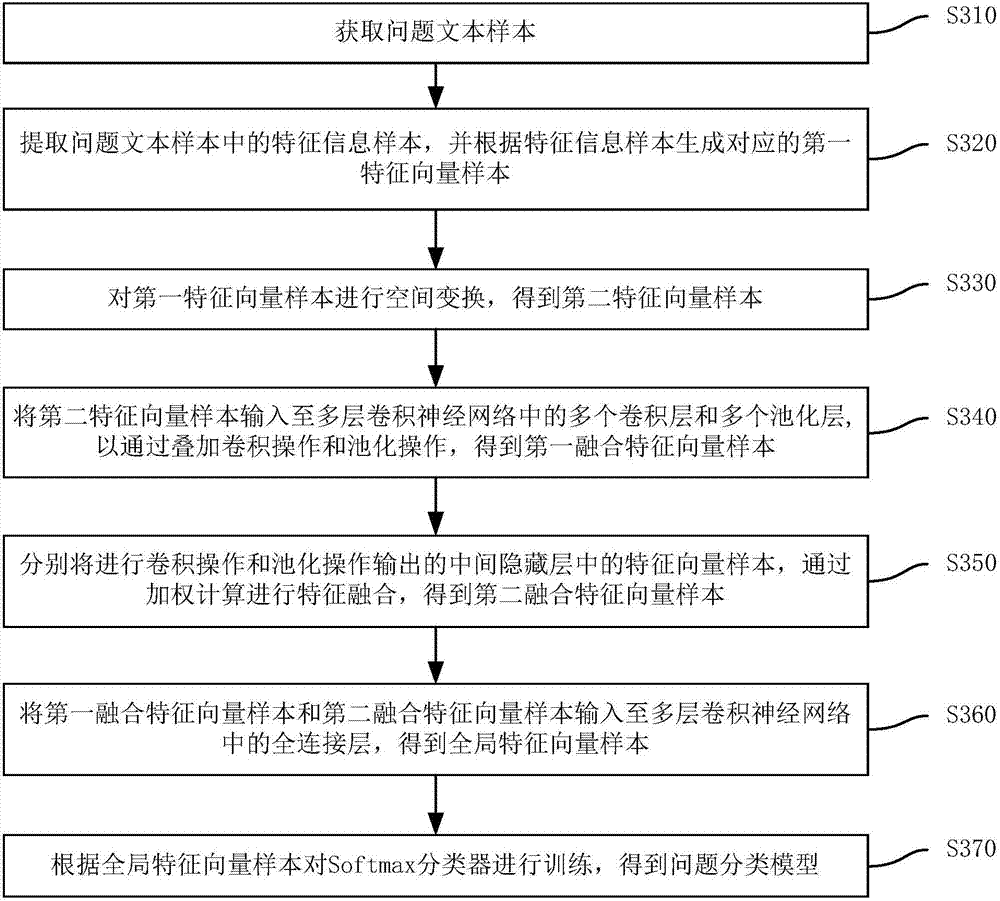

Deep learning-based question classification model training method and apparatus, and question classification method and apparatus

Active · CN107291822A · Improve experience, Rapid positioning, Machine learning, Special data processing applications, Feature vector, Classification methods

The invention discloses a deep learning-based question classification model training method and apparatus, and a question classification method and apparatus. The training method comprises the steps of: extracting feature information samples from question text samples and generating corresponding first feature vector samples; performing a spatial transformation on the first feature vector samples to obtain second feature vector samples; inputting the second feature vector samples to a plurality of convolutional layers and pooling layers in a multilayer convolutional neural network and, by stacking convolution and pooling operations, obtaining first fused feature vector samples; inputting the first fused feature vector samples to a fully connected layer of the network to obtain global feature vector samples; and training a Softmax classifier on the global feature vector samples to obtain a question classification model. The method avoids much of the overhead of manually designing features, and the question classification model yields more accurate classification results, so standard questions and answers can be located more accurately.

Owner: BEIJING UNIV OF POSTS & TELECOMM
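
The last stage, training a Softmax classifier on the global feature vectors, is ordinary multinomial logistic regression. A minimal sketch with stochastic gradient descent on the cross-entropy loss; the learning rate and epoch count are assumptions, and in the patent the inputs would be the network's global feature vectors rather than hand-made vectors.

```python
import math
from typing import List, Sequence, Tuple


def softmax(logits: Sequence[float]) -> List[float]:
    m = max(logits)  # shift by the maximum for numerical stability
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def class_logits(W, b, x) -> List[float]:
    """One linear score per class: W[k] . x + b[k]."""
    return [sum(wkj * xj for wkj, xj in zip(wk, x)) + bk for wk, bk in zip(W, b)]


def train_softmax(X: Sequence[Sequence[float]], y: Sequence[int], n_classes: int,
                  lr: float = 0.5, epochs: int = 200) -> Tuple[List[List[float]], List[float]]:
    """SGD on the cross-entropy loss; the gradient w.r.t. logit k is p_k - [k == label]."""
    d = len(X[0])
    W = [[0.0] * d for _ in range(n_classes)]
    b = [0.0] * n_classes
    for _ in range(epochs):
        for x, label in zip(X, y):
            p = softmax(class_logits(W, b, x))
            for k in range(n_classes):
                g = p[k] - (1.0 if k == label else 0.0)
                b[k] -= lr * g
                for j in range(d):
                    W[k][j] -= lr * g * x[j]
    return W, b


def predict(W, b, x) -> int:
    """Index of the most probable class."""
    p = softmax(class_logits(W, b, x))
    return max(range(len(p)), key=p.__getitem__)
```

Subtracting the largest logit before exponentiating leaves the probabilities unchanged but prevents overflow, so `softmax([1000.0, 1000.0])` returns `[0.5, 0.5]`.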

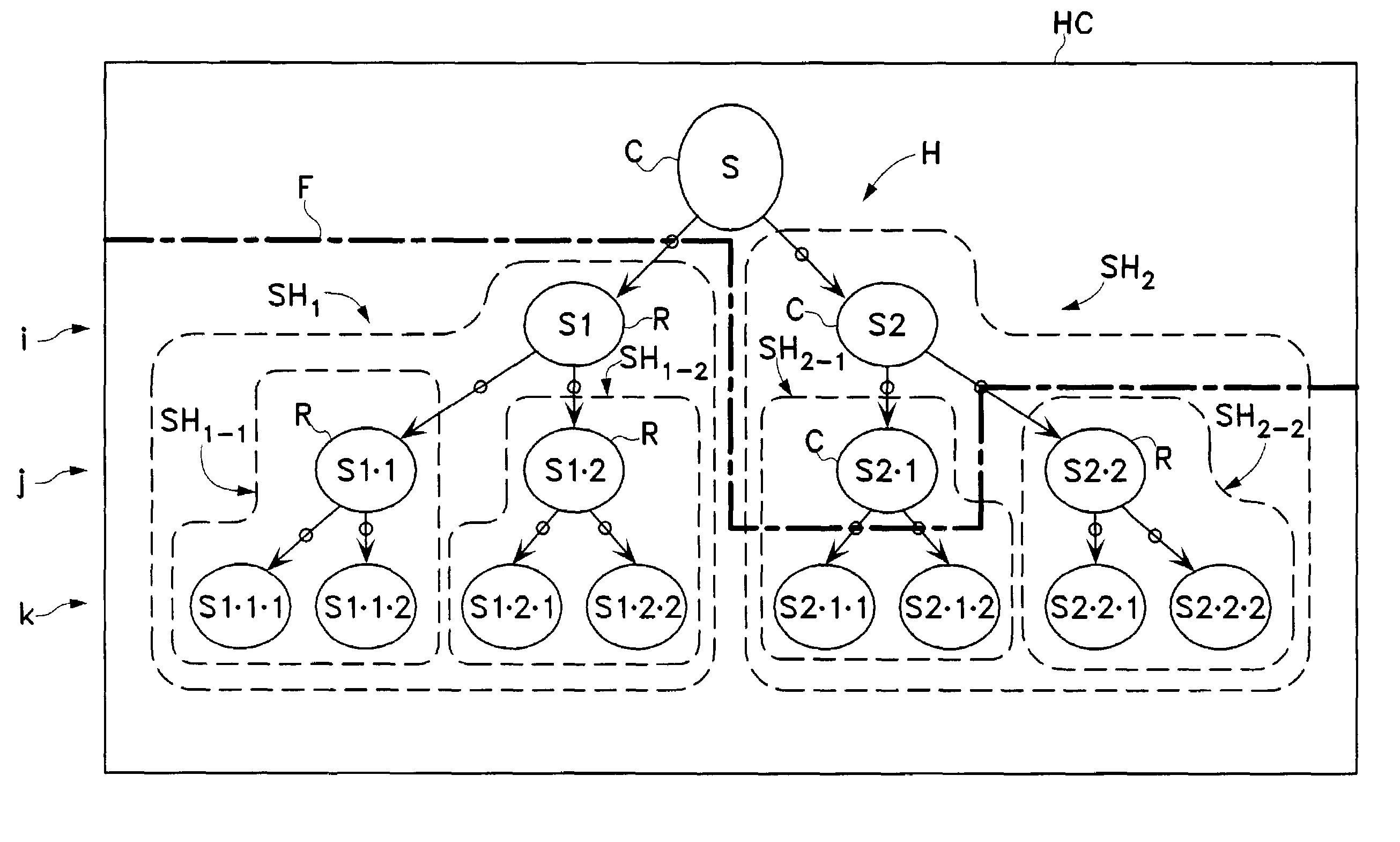

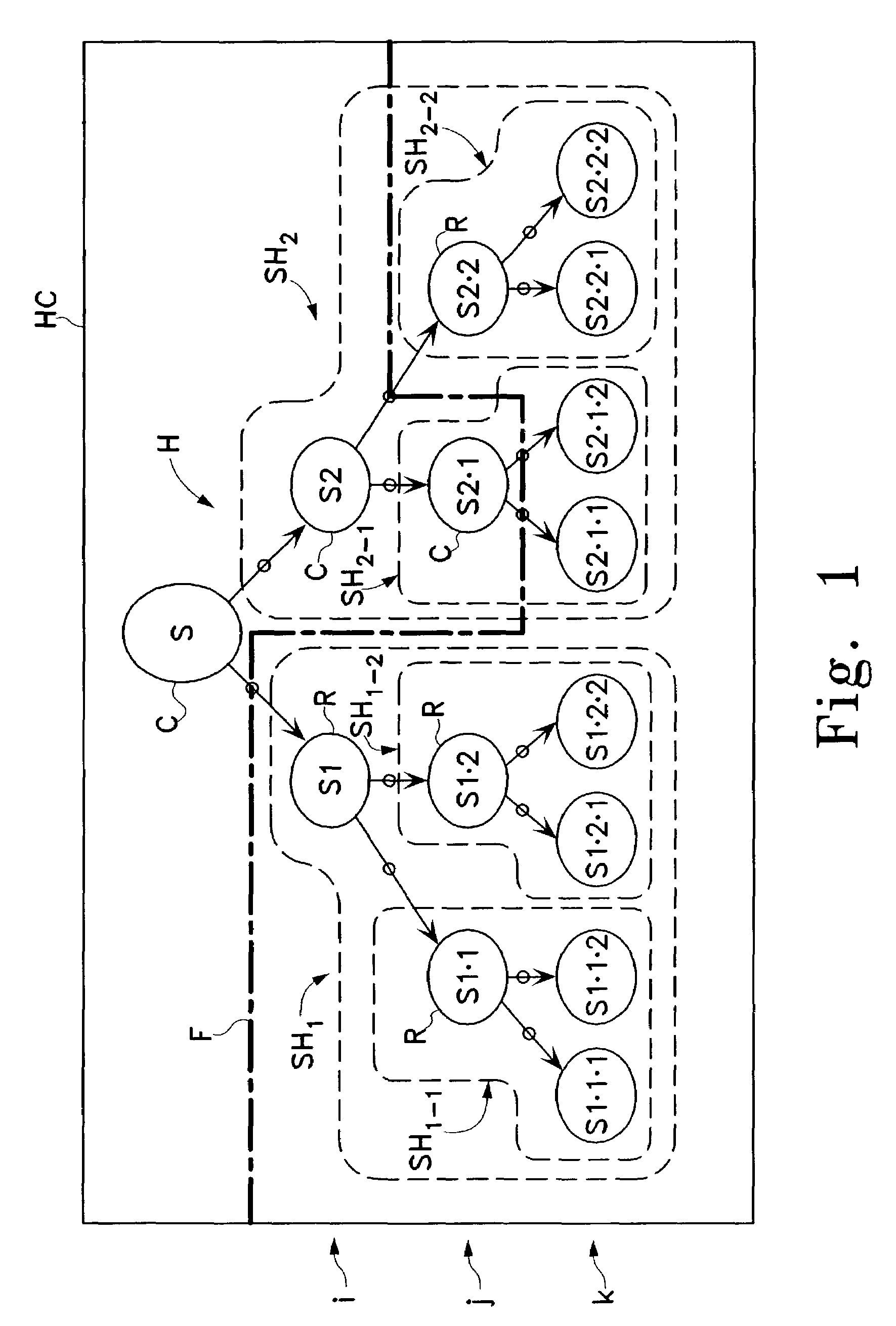

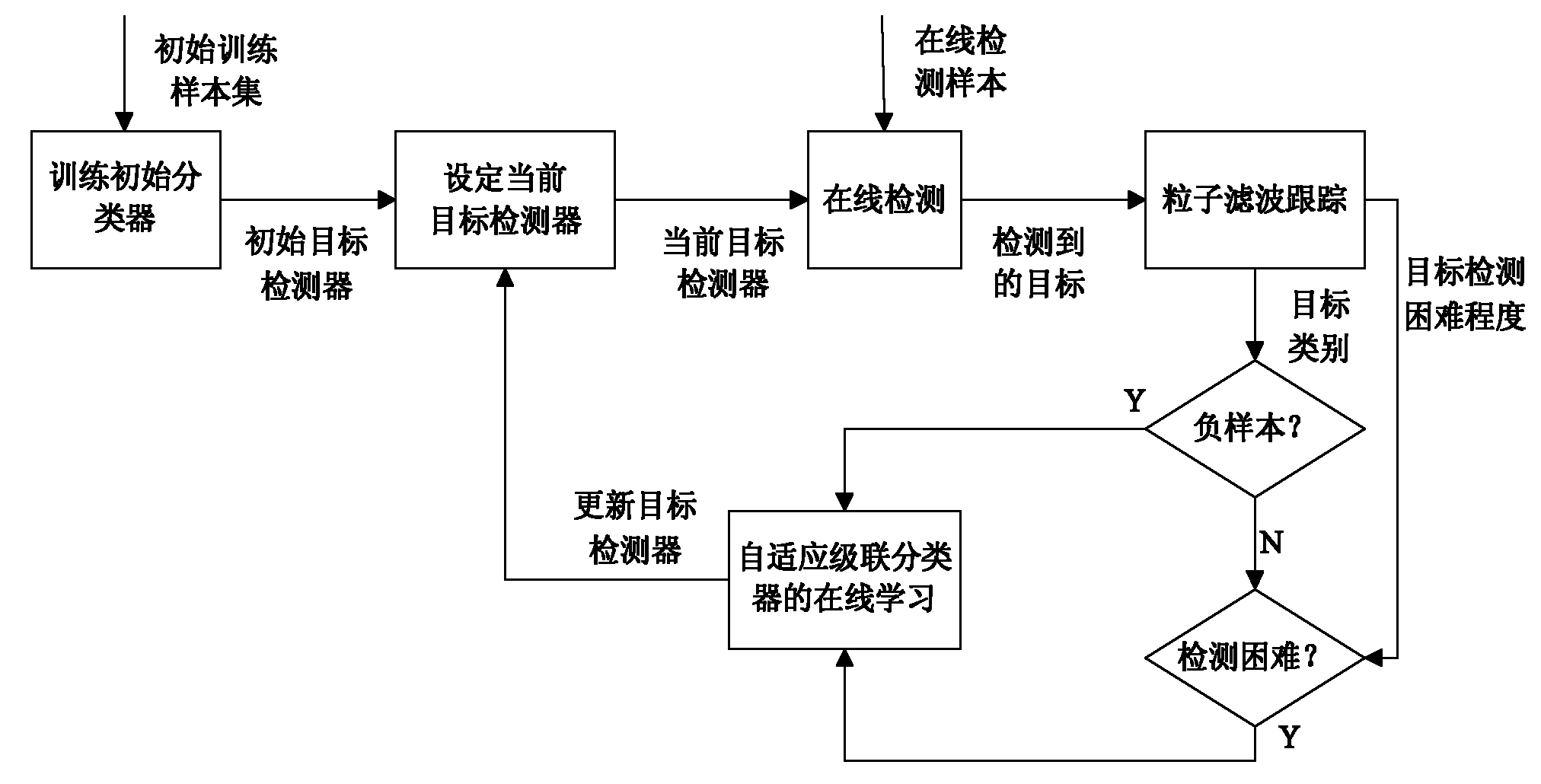

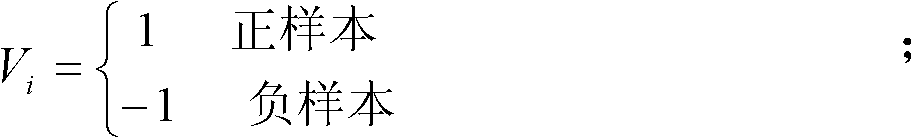

Self-adaptive cascade classifier training method based on online learning

Inactive · CN101814149A · High precision, High degree of intelligence, Character and pattern recognition, Positive sample, Value set

The invention discloses a self-adaptive cascade classifier training method based on online learning, which comprises the following steps: (1) preparing a training sample set with a small number of samples and training an initial cascade classifier HC(x) with a cascade classifier algorithm; (2) using HC(x) to traverse the image frames to be detected, extracting regions the same size as the training samples one by one, calculating a feature value set, classifying each region with the initial cascade classifier, and judging whether it is a target region, thereby completing target detection; (3) tracking the detected targets with a particle filtering algorithm, verifying the detection results through tracking, marking erroneous detections as negative samples for online learning, and obtaining different poses of real targets through tracking to extract positive samples for online learning; and (4) training and updating the initial cascade classifier HC(x) online with a self-adaptive cascade classifier algorithm whenever online learning samples are obtained, thereby gradually improving the classifier's target detection accuracy.

Owner: HUAZHONG UNIV OF SCI & TECH
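
Steps (2) and (3) can be sketched as a cascade that rejects a window at the first failing stage, plus a rule that turns tracker feedback into online training samples. The stage score functions, thresholds and detection IDs are hypothetical; the abstract does not specify the cascade algorithm or the particle-filter interface, confirmed detections here stand in for the tracked target poses, and the online update of the stages in step (4) is not shown.

```python
from typing import Callable, Hashable, List, Sequence, Set, Tuple

# One stage = (score function over a window's feature values, acceptance threshold).
Stage = Tuple[Callable[[Sequence[float]], float], float]


def cascade_classify(features: Sequence[float], stages: List[Stage]) -> bool:
    """Accept a window only if every stage's score reaches its threshold;
    reject at the first failing stage so later stages are never evaluated."""
    for score_fn, threshold in stages:
        if score_fn(features) < threshold:
            return False
    return True


def collect_online_samples(detections, confirmed_ids: Set[Hashable]):
    """Split (detection_id, features) pairs using tracker feedback: detections the
    tracker confirms become positive samples, the rest become negative samples."""
    positives = [feat for det_id, feat in detections if det_id in confirmed_ids]
    negatives = [feat for det_id, feat in detections if det_id not in confirmed_ids]
    return positives, negatives
```

Early rejection is what makes cascades fast: most background windows fail the cheap first stages, so the later stages run on only a small fraction of windows.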

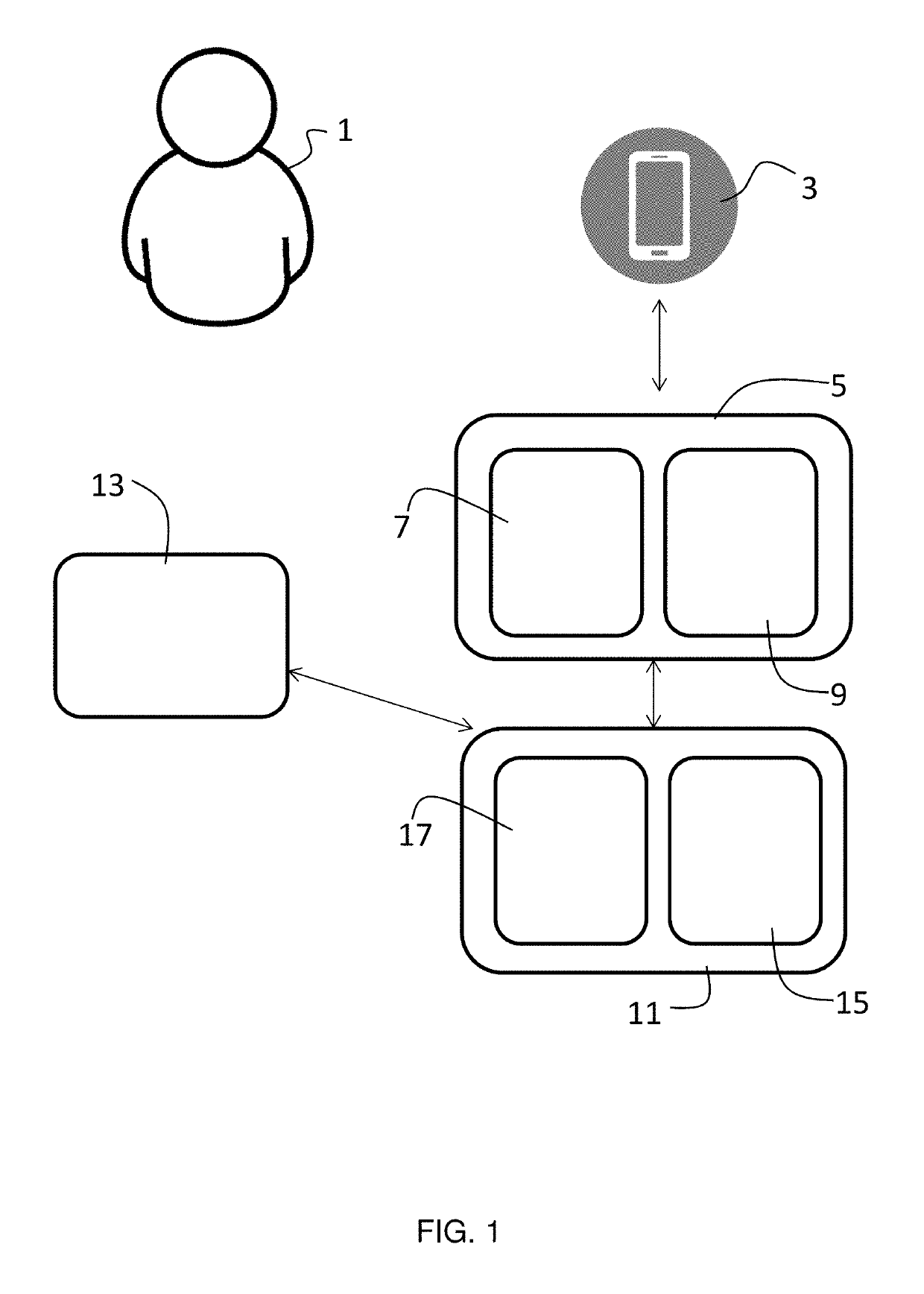

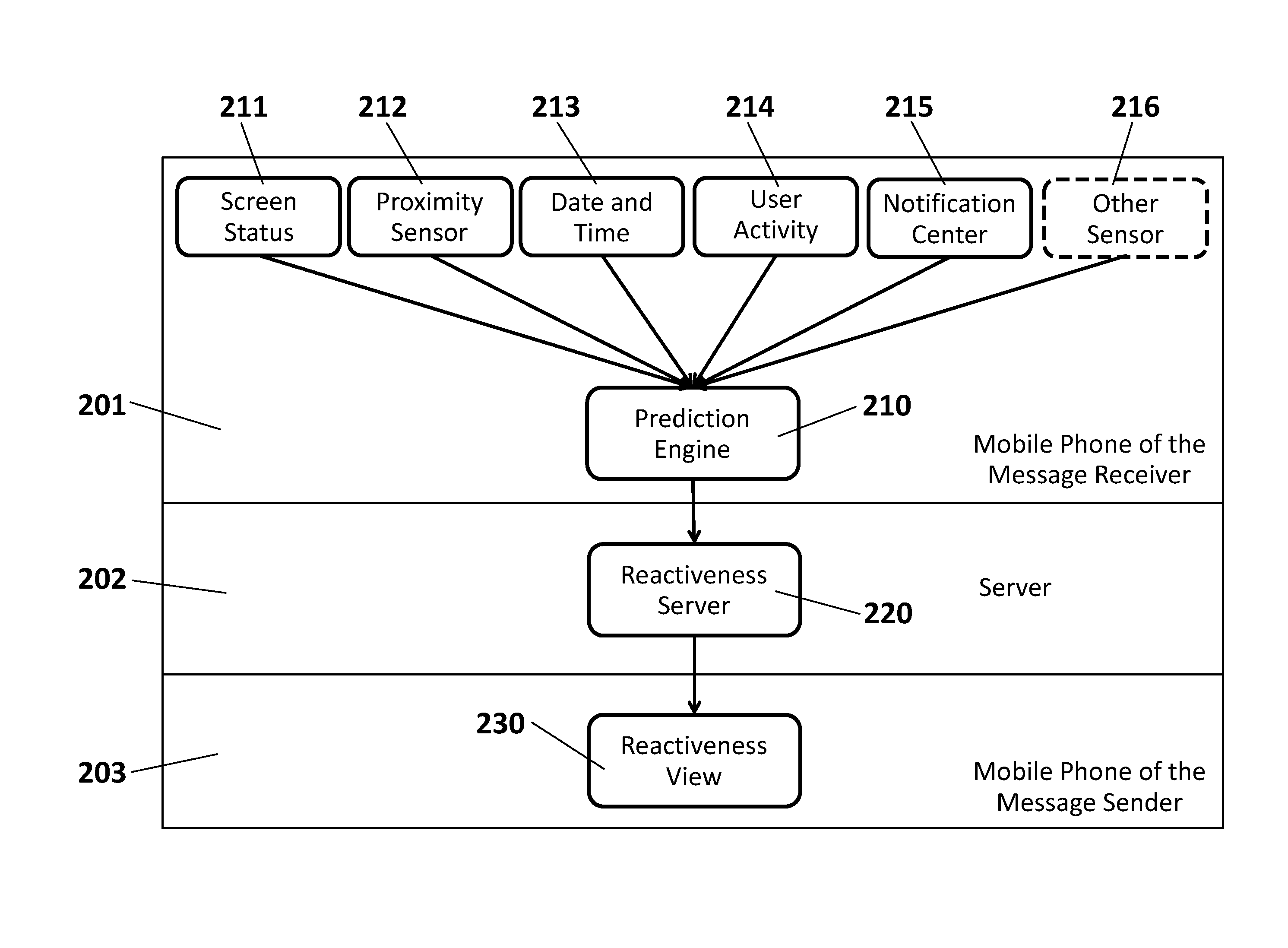

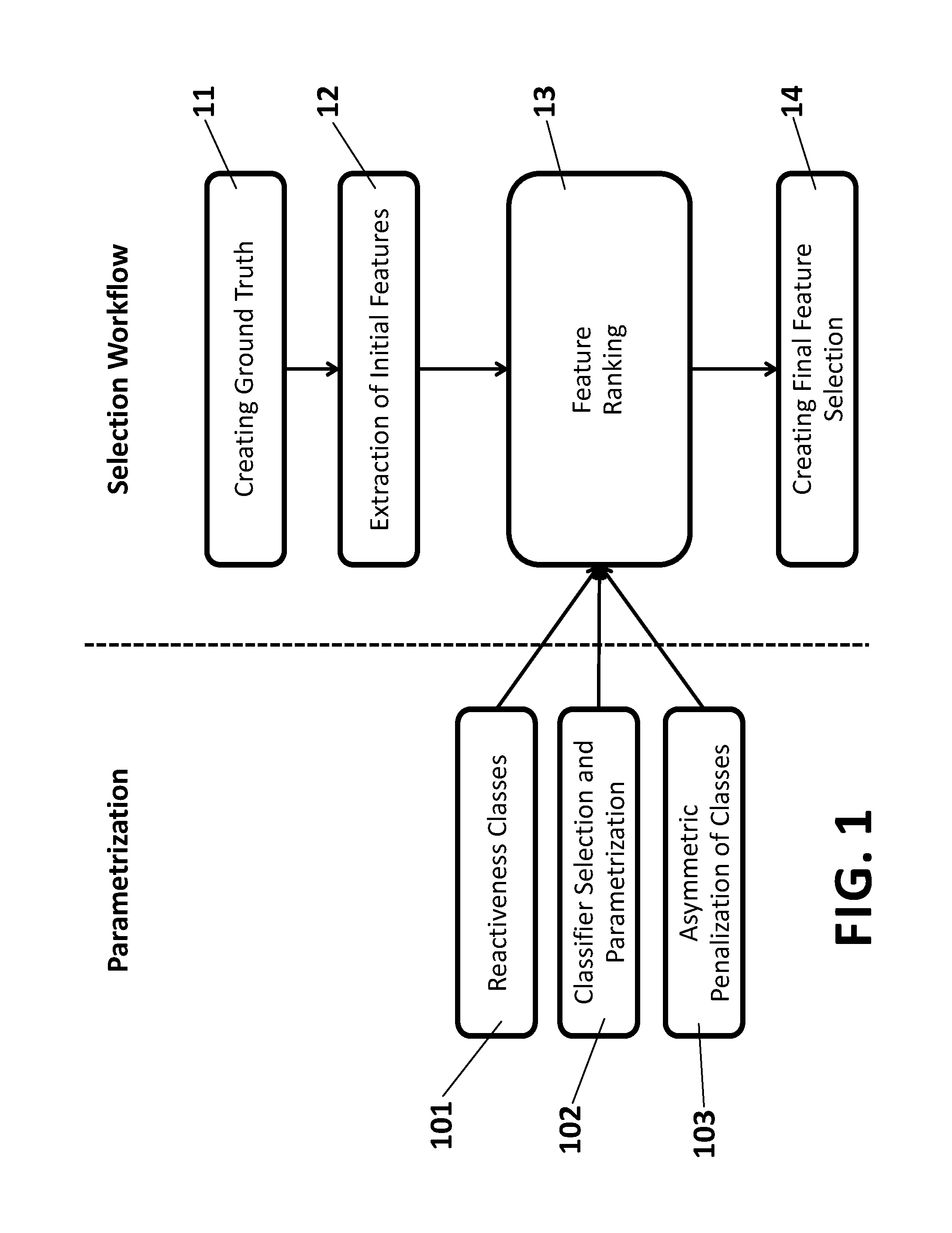

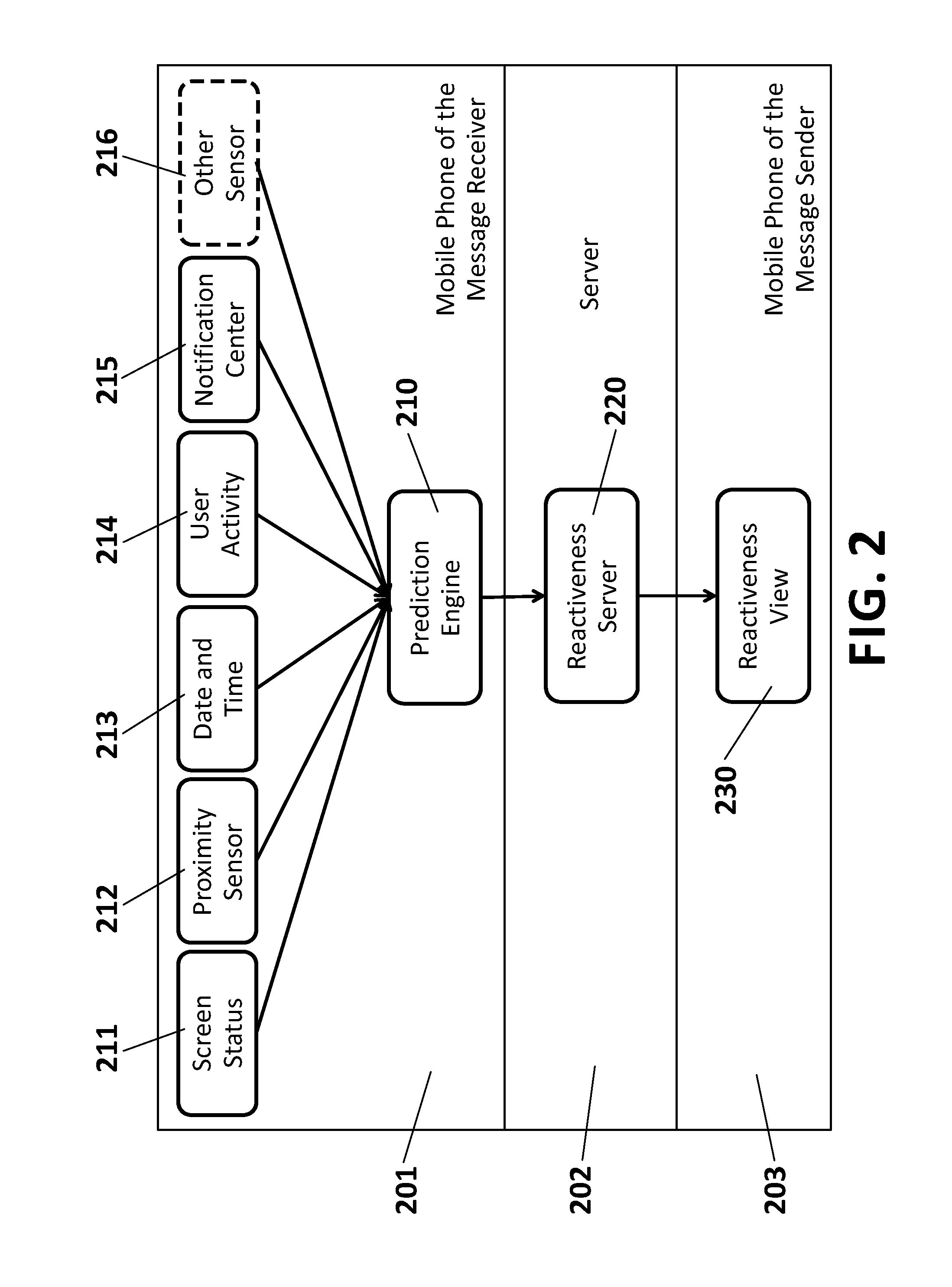

Method for predicting reactiveness of users of mobile devices for mobile messaging

Inactive · US20150178626A1 · Solve the real problem, Work has been limited, Ensemble learning, Digital computer details, Ground truth, User device

A method for predicting the reactiveness of MMI users comprises: reacting to a message with a mobile user device that is the receiver of the message; collecting ground-truth data (11) for a machine-learning classifier; extracting from the collected ground-truth data (11) a list of features (12) that determines a current or past context of the user, each feature having a prediction strength calculated as the fraction of classes misclassified when the feature is removed; selecting the list of features (12) based on each feature's prediction strength; defining a plurality of reactiveness classes (101), both the extracted list of features (12) and the reactiveness classes (101) being input to the machine-learning classifier; classifying (102) the user according to the defined reactiveness classes (101); and predicting the user's reactiveness for the given current or past context of the user by determining the most likely reactiveness class via the machine-learning classifier.

Owner: TELEFONICA DIGITAL ESPANA S L U

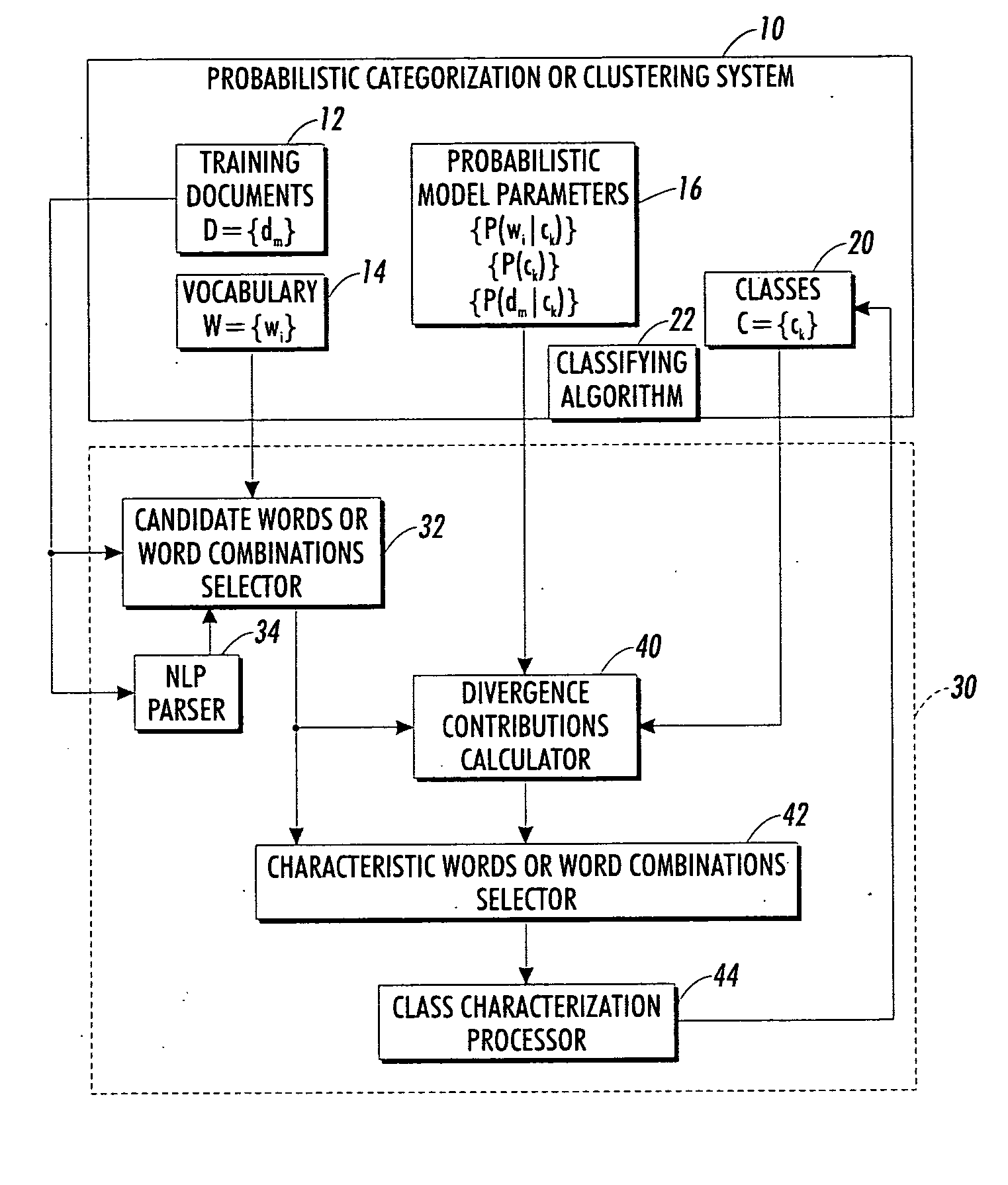

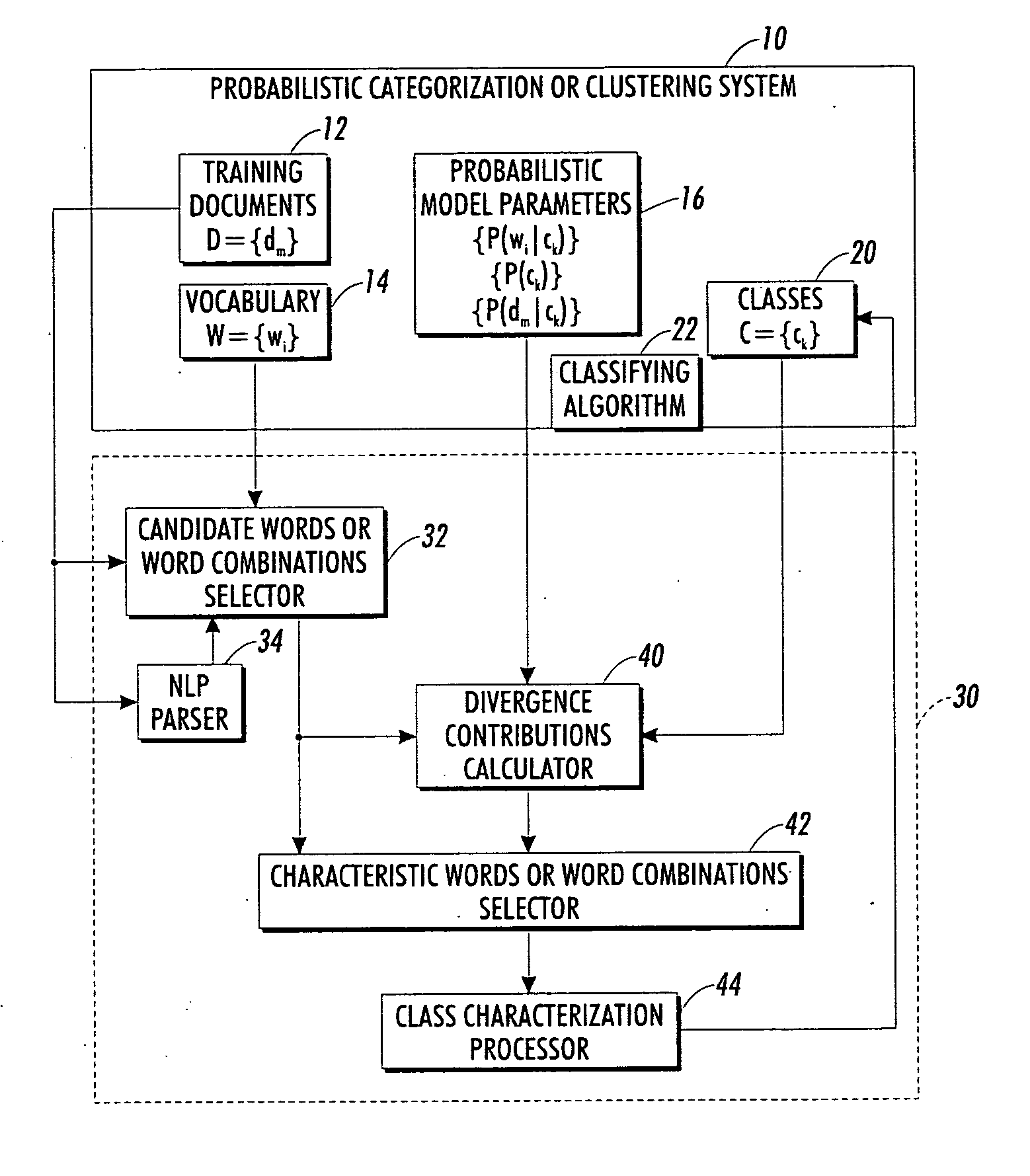

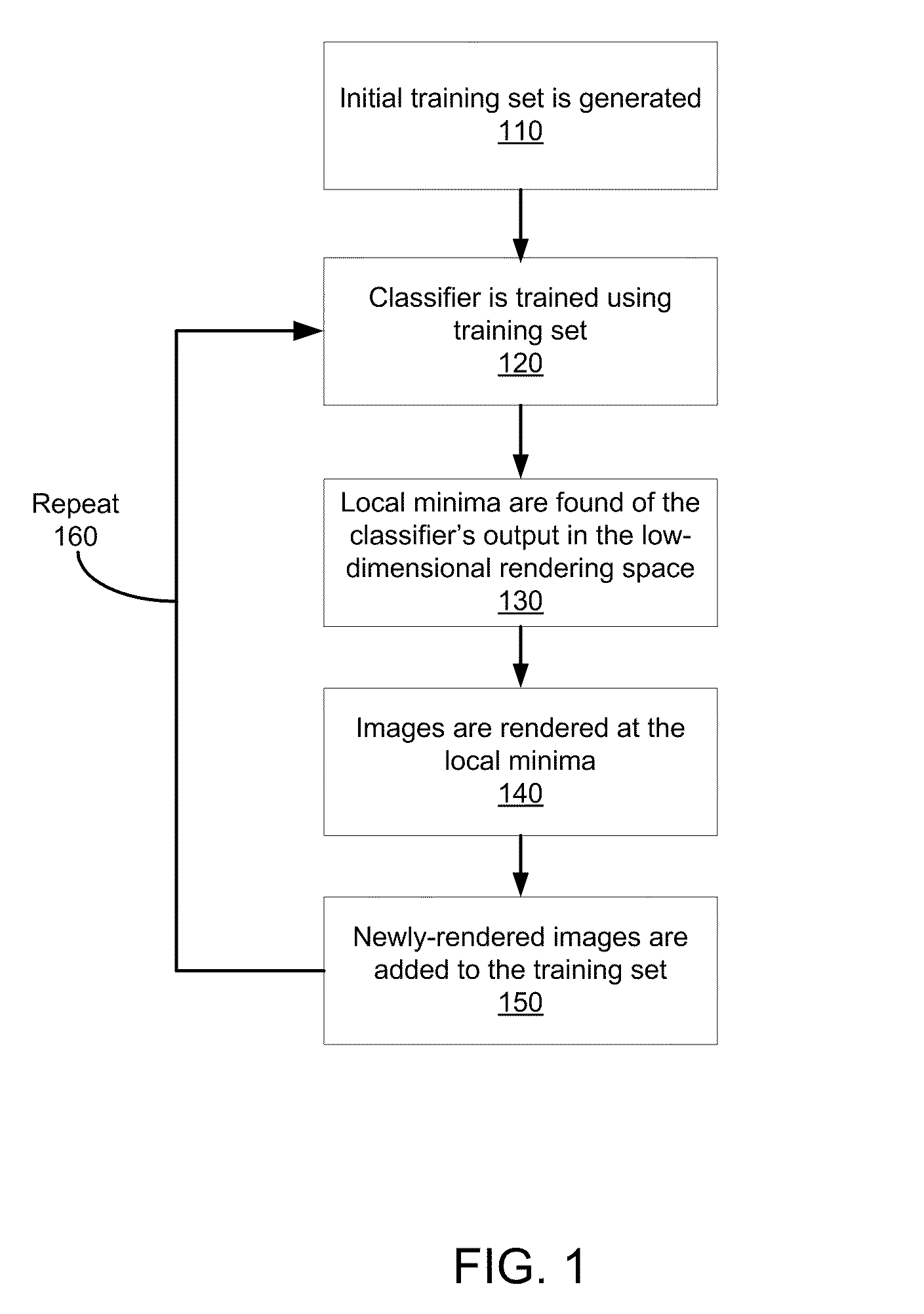

Class description generation for clustering and categorization

Inactive · US20070143101A1 · Digital data processing details, Natural language data processing, Pattern recognition, Machine learning

A class of a probabilistic classifier or clustering system that includes probabilistic model parameters is to be characterized. For each of a plurality of candidate words or word combinations, the divergence of the class from the other classes is computed based on one or more probabilistic model parameters profiling the candidate word or word combination. One or more words or word combinations are selected to characterize the class: those candidates for which the class has a substantial computed divergence from the other classes.

Owner: XEROX CORP
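
The abstract leaves the divergence measure open. One plausible choice, assumed here, scores each word by its contribution to the Kullback-Leibler divergence between the class's word distribution and the average distribution of the other classes, p(w|c) log(p(w|c) / q(w)); the words with the largest contributions describe the class. The probability floor of 1e-12 for words unseen in other classes is also an assumption.

```python
import math
from typing import Dict, List


def describe_class(word_probs: Dict[str, Dict[str, float]], target: str, top_k: int = 3) -> List[str]:
    """Rank the target class's words by their contribution to
    KL(P(w | target) || average P(w | other classes)); needs at least two classes.

    word_probs[c][w] is P(w | c), taken from the classifier's or clustering model's parameters.
    """
    others = [c for c in word_probs if c != target]
    scores = {}
    for word, p in word_probs[target].items():
        q = sum(word_probs[c].get(word, 0.0) for c in others) / len(others)
        q = max(q, 1e-12)  # floor for words unseen in other classes, avoiding log(p / 0)
        scores[word] = p * math.log(p / q) if p > 0 else 0.0
    return sorted(scores, key=scores.get, reverse=True)[:top_k]
```

Words that are equally likely in every class, such as function words, score zero and drop to the bottom of the ranking.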

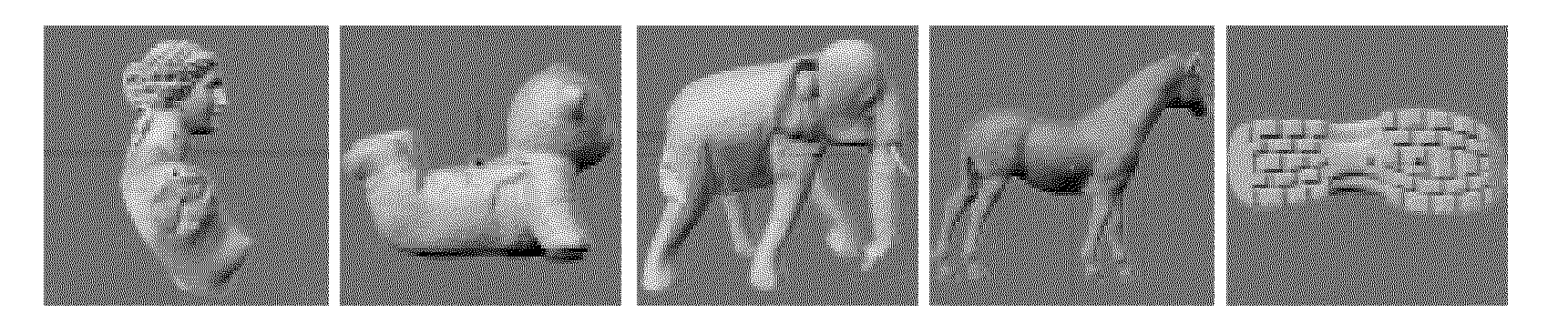

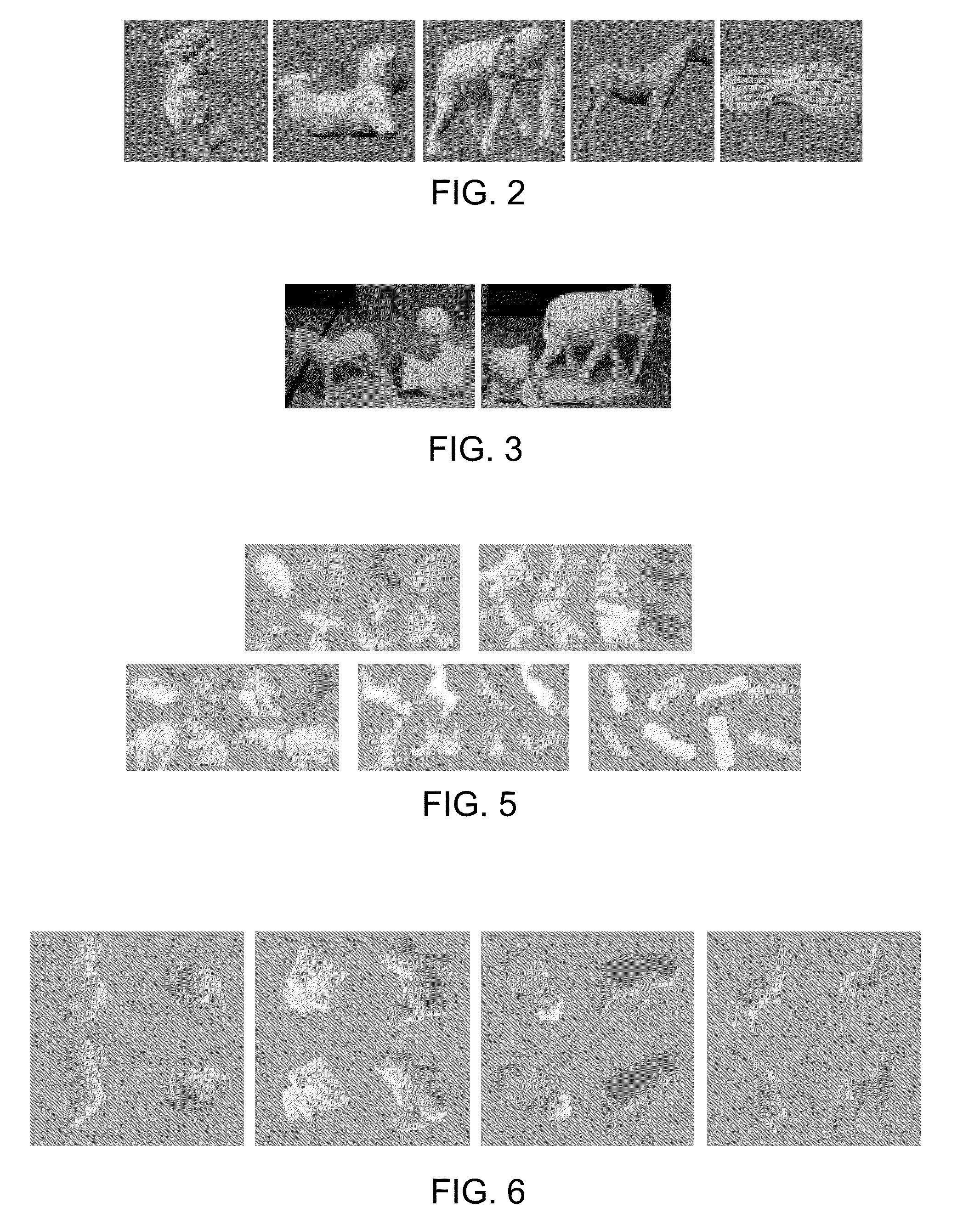

Object Recognition with 3D Models

An “active learning” method trains a compact classifier for view-based object recognition. The method actively generates its own training data. Specifically, the generation of synthetic training images is controlled within an iterative training process. Valuable and/or informative object views are found in a low-dimensional rendering space and then added iteratively to the training set. In each iteration, new views are generated. A sparse training set is iteratively generated by searching for local minima of a classifier's output in a low-dimensional space of rendering parameters. An initial training set is generated. The classifier is trained using the training set. Local minima of the classifier's output in the low-dimensional rendering space are found. Images are rendered at the local minima. The newly rendered images are added to the training set. The procedure is repeated so that the classifier is retrained using the modified training set.

Owner: HONDA MOTOR CO LTD

Probabilistic boosting tree framework for learning discriminative models

A probabilistic boosting tree framework for computing two-class and multi-class discriminative models is disclosed. In the learning stage, the probabilistic boosting tree (PBT) automatically constructs a tree in which each node combines a number of weak classifiers (e.g., evidence, knowledge) into a strong classifier or conditional posterior probability. The PBT approaches the target posterior distribution by data augmentation (e.g., tree expansion) through a divide-and-conquer strategy. In the testing stage, the conditional probability is computed at each tree node based on the learned classifier which guides the probability propagation in its sub-trees. The top node of the tree therefore outputs the overall posterior probability by integrating the probabilities gathered from its sub-trees. In the training stage, a tree is recursively constructed in which each tree node is a strong classifier. The input training set is divided into two new sets, left and right ones, according to the learned classifier. Each set is then used to train the left and right sub-trees recursively.

Owner: SIEMENS MEDICAL SOLUTIONS USA INC

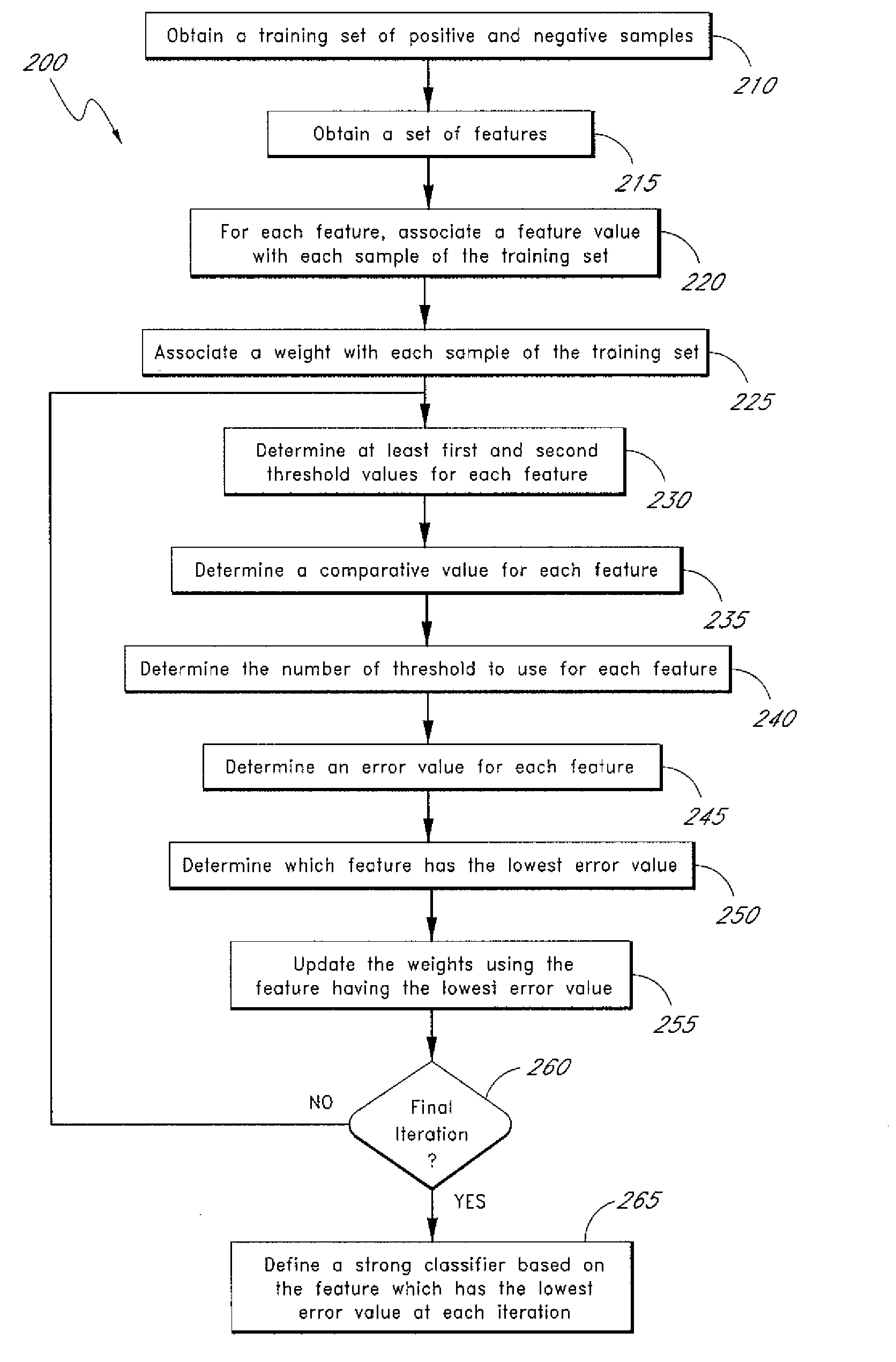

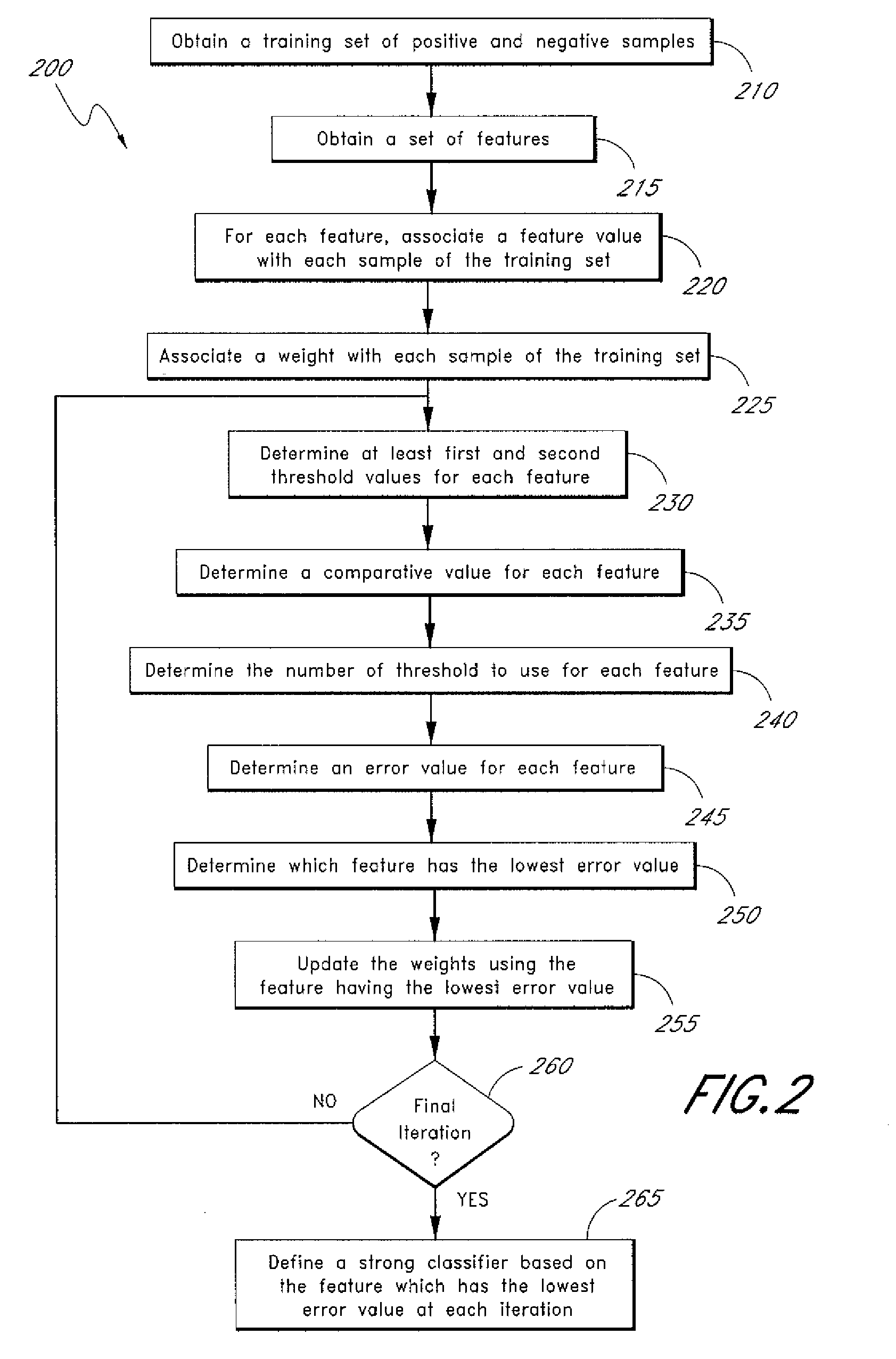

System and method for object detection and classification with multiple threshold adaptive boosting

Systems and methods for classifying an object as belonging to an object class or not belonging to an object class using a boosting method with a plurality of thresholds are disclosed. One embodiment is a method of defining a strong classifier, the method comprising receiving a training set of positive and negative samples, receiving a set of features, associating, for each of a first subset of the set of features, a corresponding feature value with each of a first subset of the training set, associating a corresponding weight with each of a second subset of the training set, iteratively i) determining, for each of a second subset of the set of features, a first threshold value at which a first metric is minimized, ii) determining, for each of a third subset of the set of features, a second threshold value at which a second metric is minimized, iii) determining, for each of a fourth subset of the set of features, a number of thresholds, iv) determining, for each of a fifth subset of the set of features, an error value based on the determined number of thresholds, v) determining the feature having the lowest associated error value, and vi) updating the weights, defining a strong classifier based on the features having the lowest error value at a plurality of iterations, and classifying a sample as either belonging to an object class or not belonging to an object class based on the strong classifier.

Owner: SAMSUNG ELECTRONICS CO LTD
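
A minimal sketch of giving a weak classifier more than one threshold, restricted here to two thresholds per feature (an interval stump) inside a standard AdaBoost round. The patent determines the number of thresholds per feature and uses its own metrics; the fixed two-threshold search, the exhaustive candidate scan and the AdaBoost weight update below are assumptions chosen for illustration.

```python
import math
from typing import List, Sequence, Tuple


def best_interval_stump(values: Sequence[float], labels: Sequence[int],
                        weights: Sequence[float]) -> Tuple[float, float, float, int]:
    """Exhaustively search two thresholds (lo, hi) on one feature.

    The weak classifier predicts `polarity` when lo <= v < hi and -polarity otherwise;
    hi = inf recovers an ordinary single-threshold stump.
    Returns (weighted error, lo, hi, polarity); labels are -1 or +1.
    """
    candidates = sorted(set(values)) + [math.inf]
    best = (math.inf, 0.0, 0.0, 1)
    for i, lo in enumerate(candidates):
        for hi in candidates[i + 1:]:
            for polarity in (1, -1):
                err = sum(w for v, y, w in zip(values, labels, weights)
                          if (polarity if lo <= v < hi else -polarity) != y)
                if err < best[0]:
                    best = (err, lo, hi, polarity)
    return best


def adaboost_round(values, labels, weights):
    """One boosting round: pick the best interval stump, weight it, and re-weight the samples."""
    err, lo, hi, polarity = best_interval_stump(values, labels, weights)
    err = min(max(err, 1e-10), 1 - 1e-10)  # keep alpha finite for perfect stumps
    alpha = 0.5 * math.log((1 - err) / err)
    new_weights: List[float] = [
        w * math.exp(-alpha * y * (polarity if lo <= v < hi else -polarity))
        for v, y, w in zip(values, labels, weights)
    ]
    total = sum(new_weights)
    return (alpha, lo, hi, polarity), [w / total for w in new_weights]
```

On a feature where positives lie between two values, such as labels `[-1, -1, 1, 1, -1, -1]` at values 1 to 6, no single threshold gives zero error, but the interval `3 <= v < 5` does.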

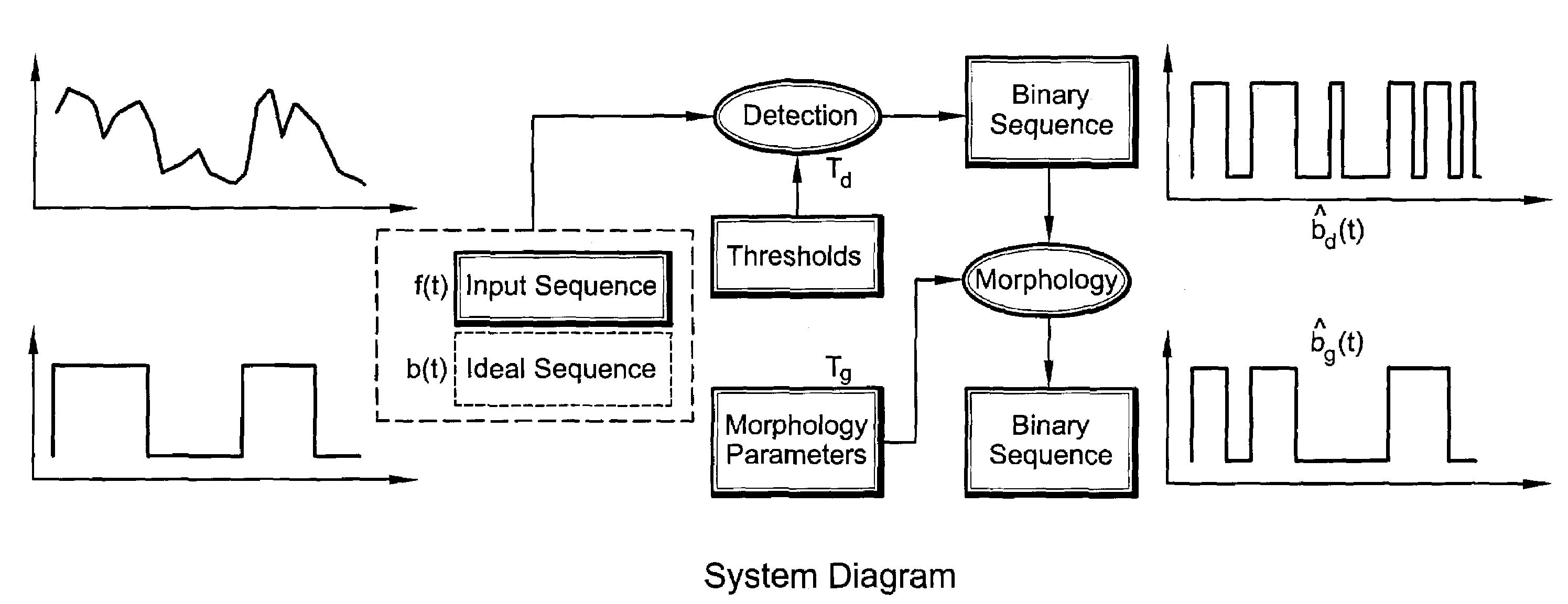

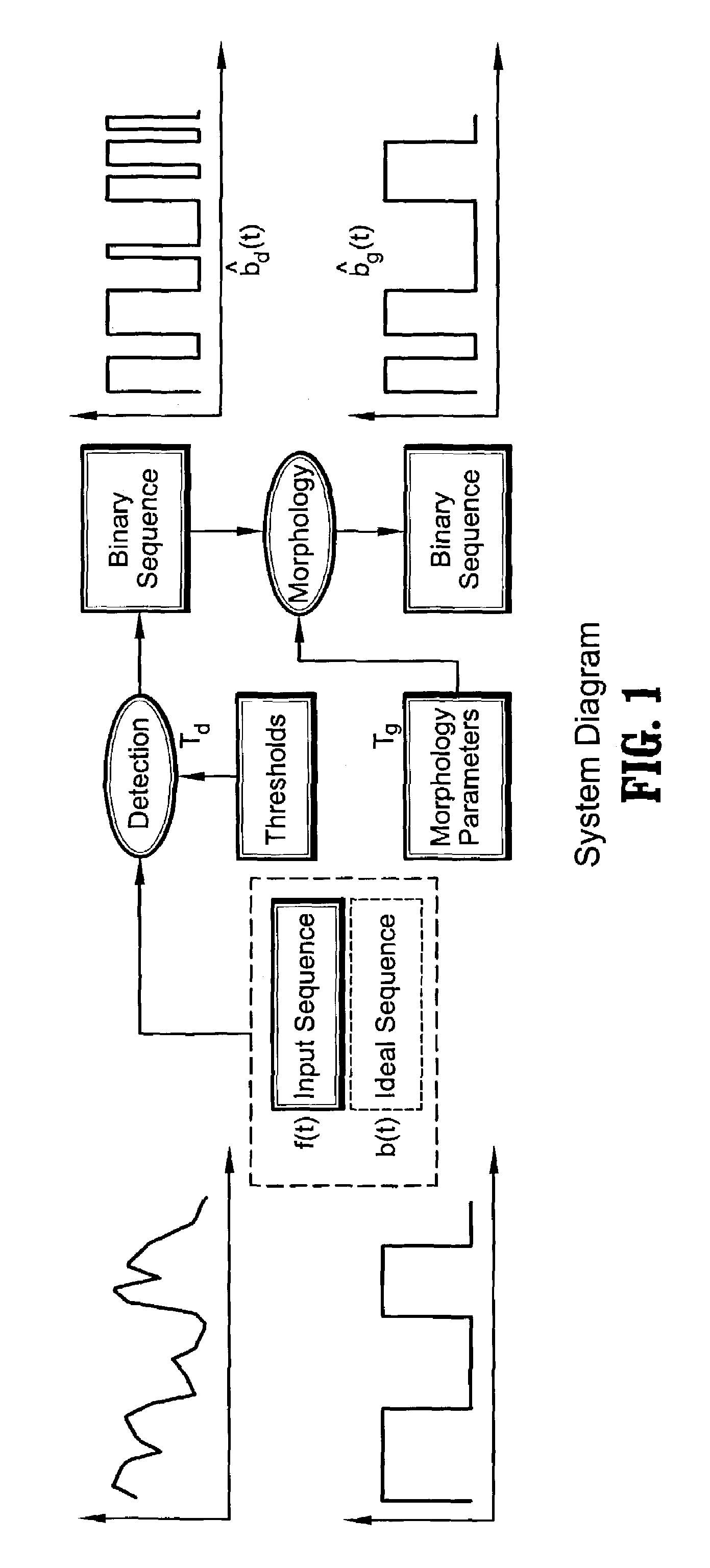

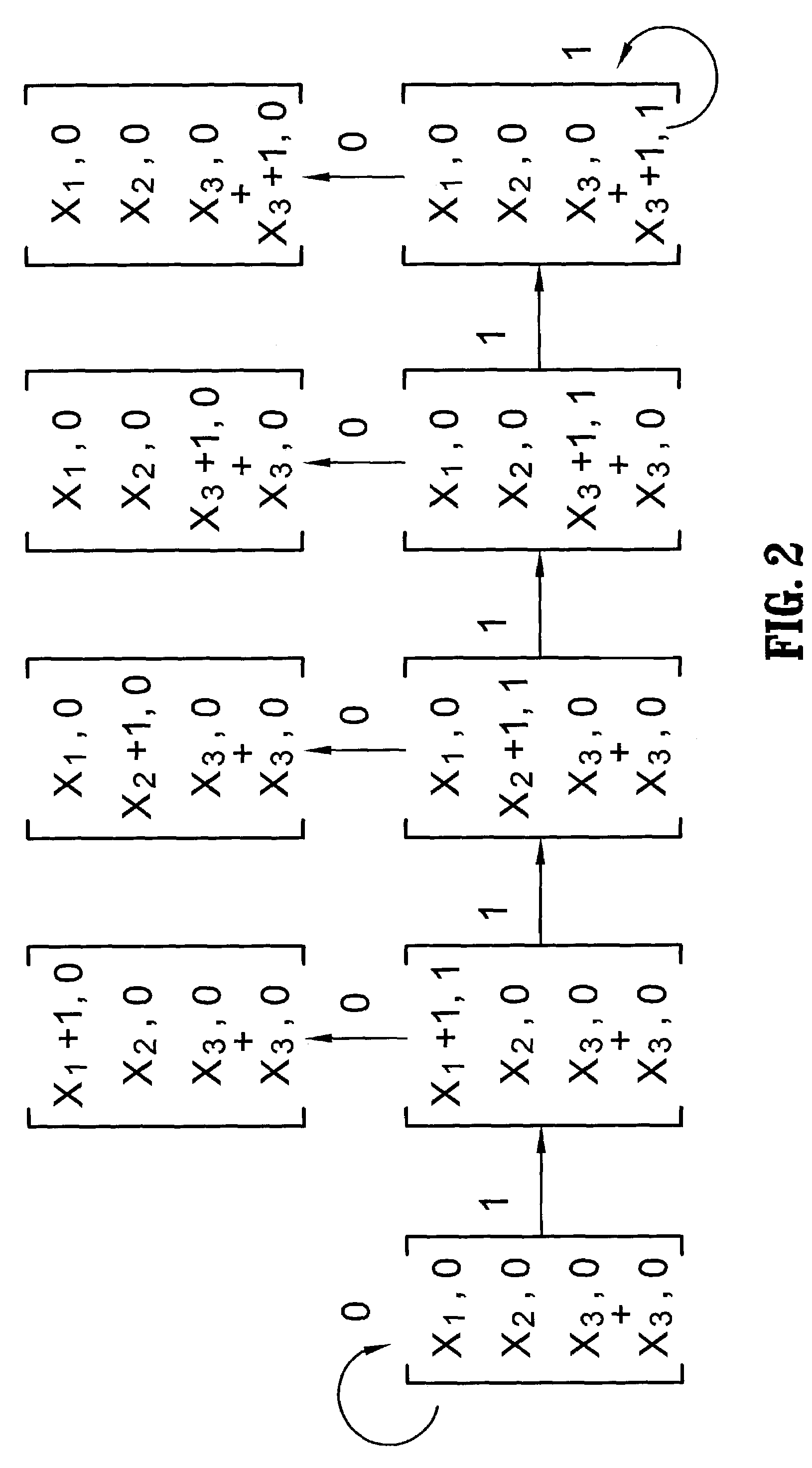

Automatic design of morphological algorithms for machine vision

Active · US7428337B2 · Maximizing expected classifier performance, Improve performance, Character and pattern recognition, Machine vision, Algorithm

The present invention provides a technique for automated selection of a parameterized operator sequence to achieve a pattern classification task. A collection of labeled data patterns is input, and statistical descriptions of the input labeled data patterns are then derived. Classifier performance for each of a plurality of candidate operator/parameter sequences is determined. The optimal classifier performance among the candidate classifier performances is then identified. Performance metric information, including, for example, the selected operator sequence/parameter combination, is output. The operator sequences can be chosen from a default set of operators or from a user-defined set. The operator sequences may include any morphological operators, such as erosion, dilation, closing, opening, close-open, and open-close.

Owner: SIEMENS CORP

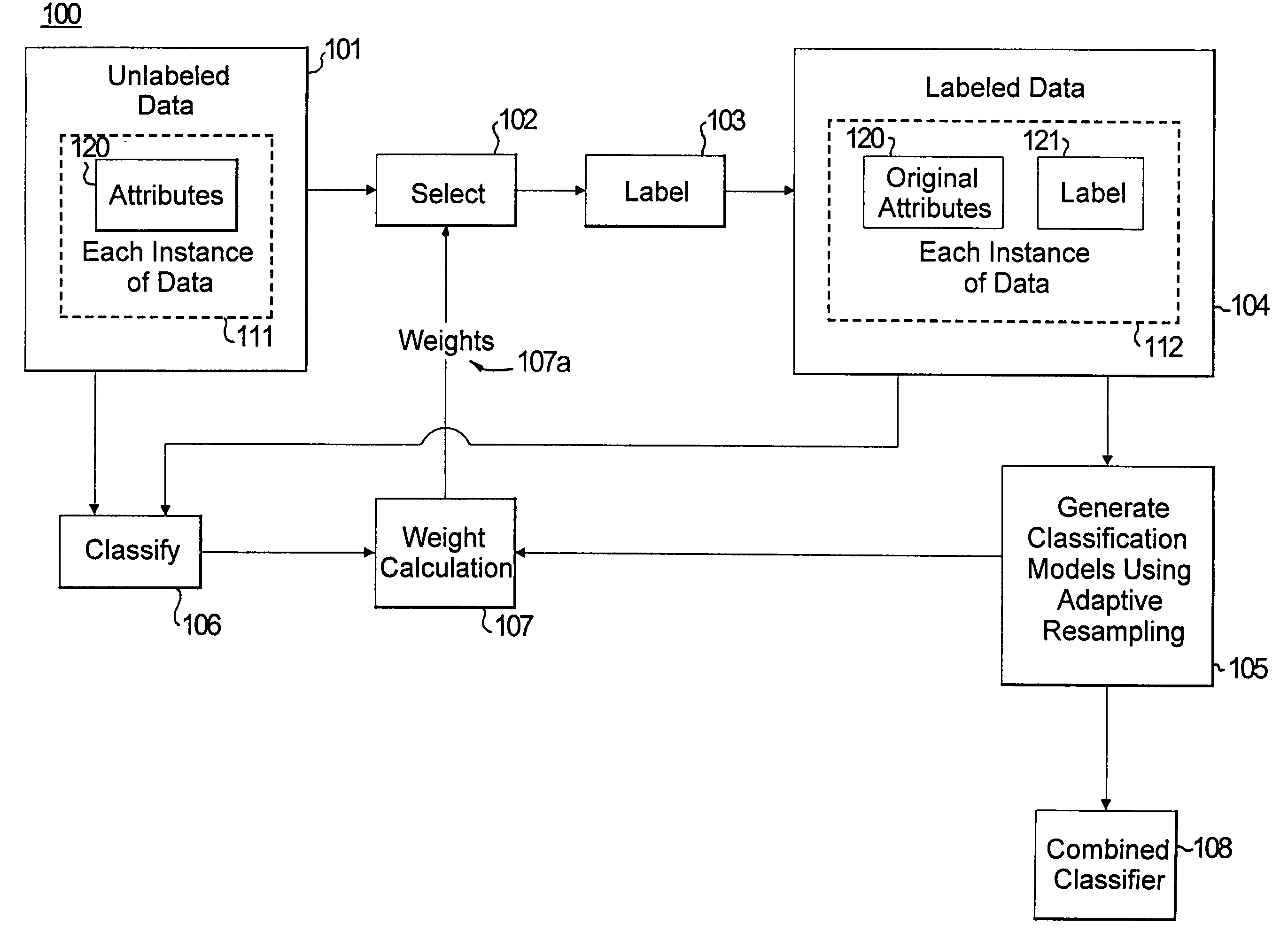

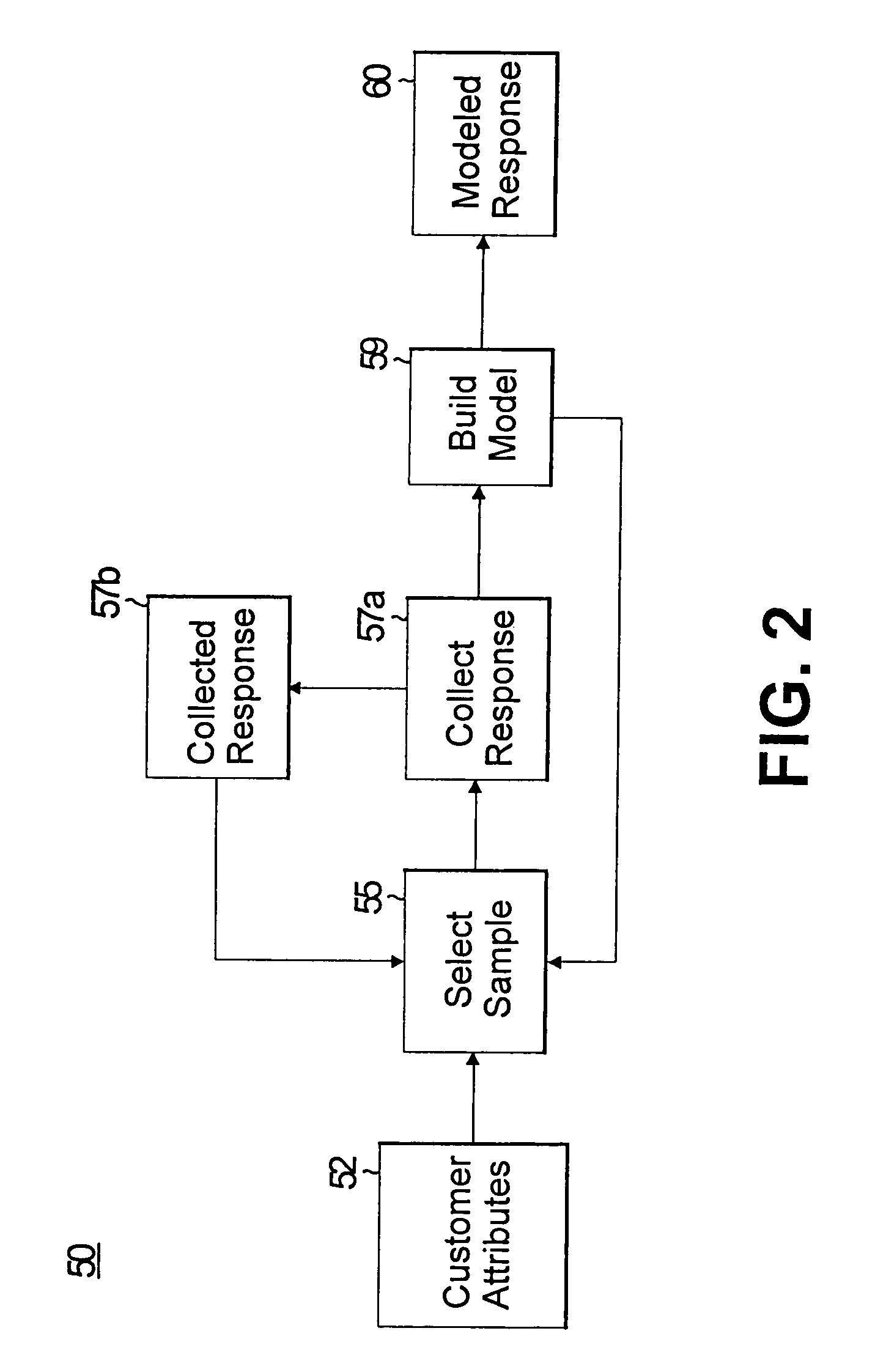

System and method for efficiently generating models for targeting products and promotions using classification method by choosing points to be labeled

Inactive · US6937994B1 · Improve classification accuracy, Small set, Special data processing applications, Market data gathering, Algorithm, Categorical models

A closed-loop system is presented for selecting samples for labeling so that they can be used to generate classifiers. The sampling is done in phases. In each phase, a subset of samples is chosen using information collected in previous phases and the classification model that has been generated up to that point. The total number of samples and the number of phases can be chosen by the user.

Owner: IBM CORP

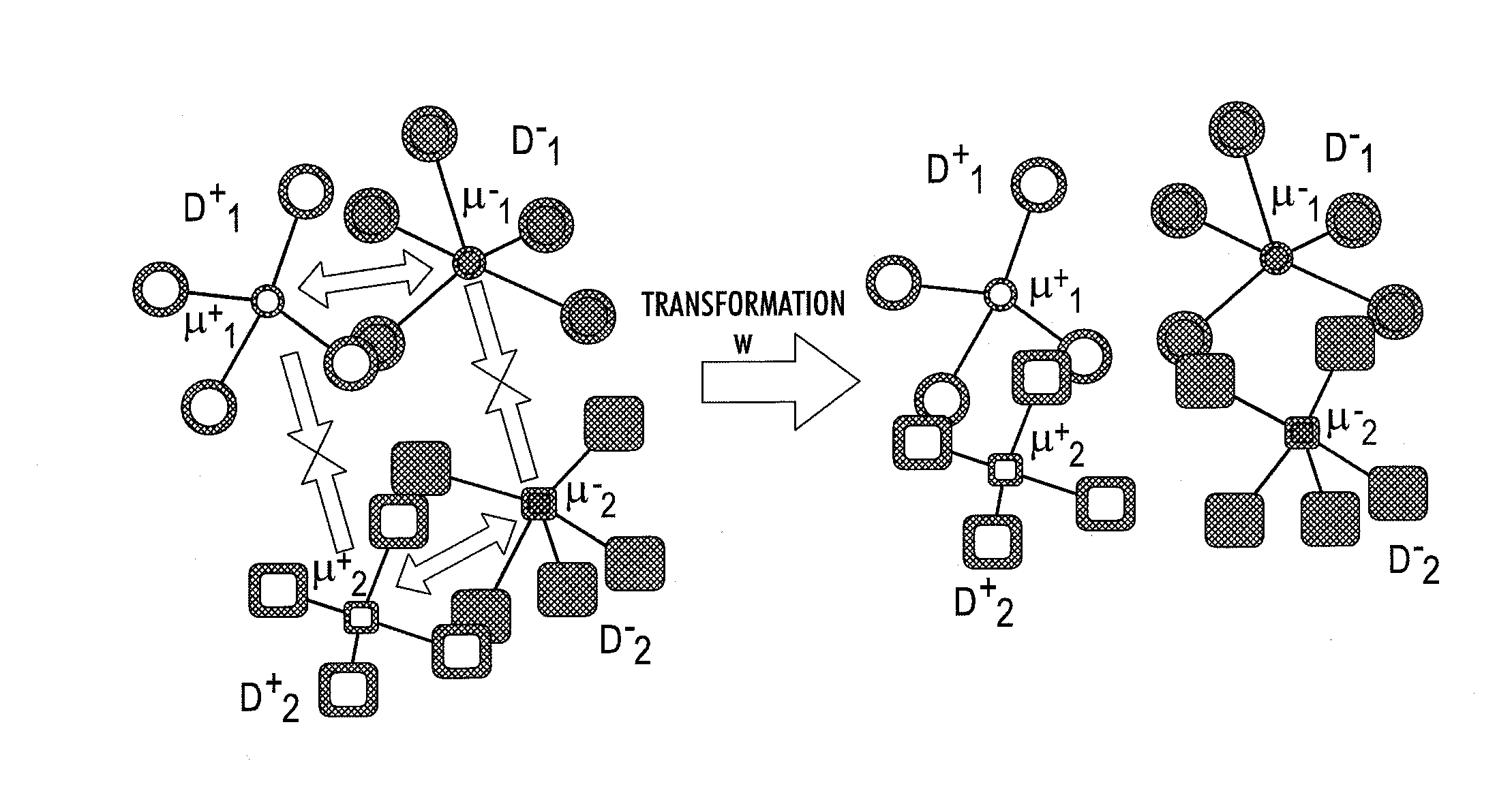

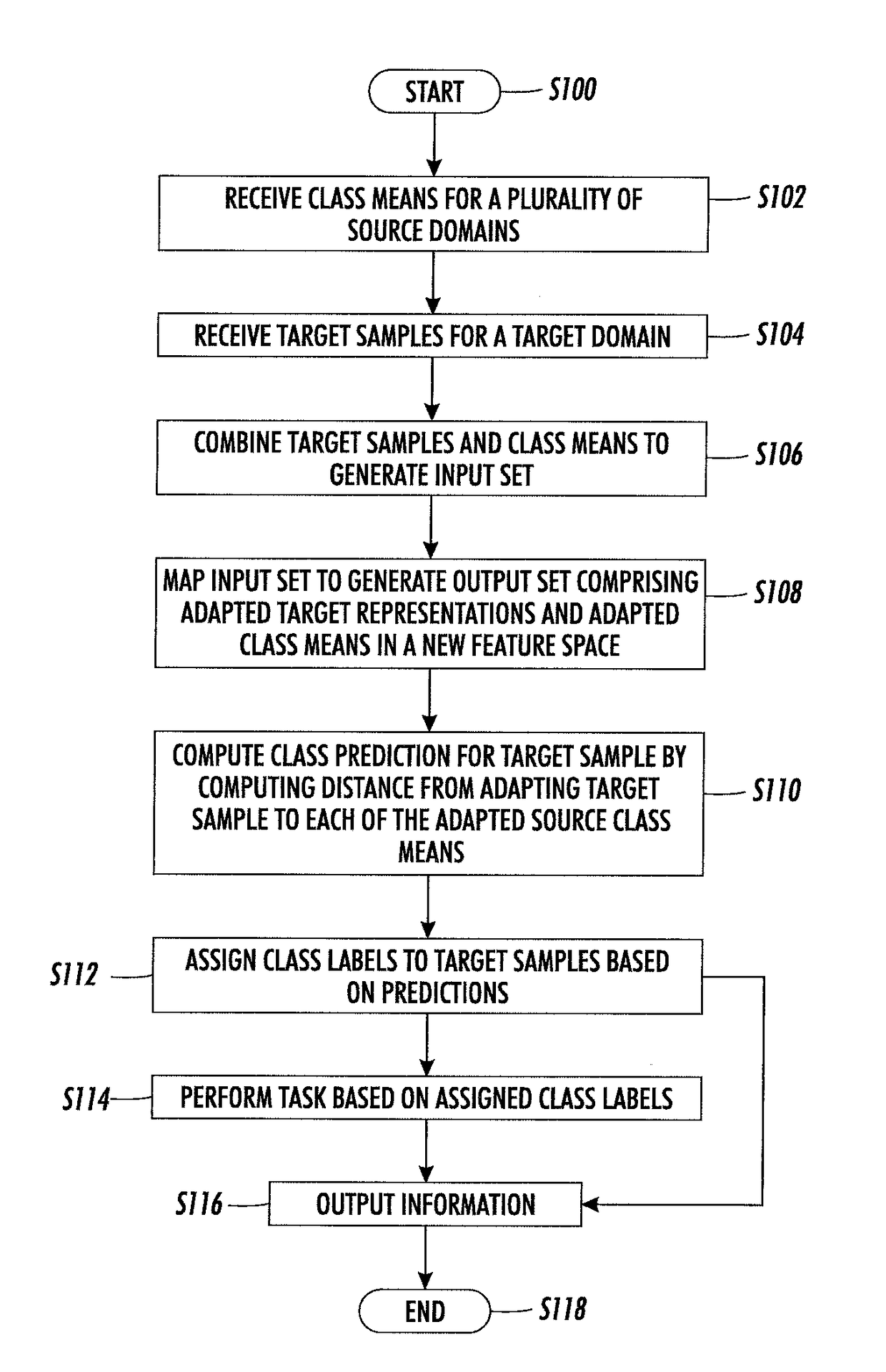

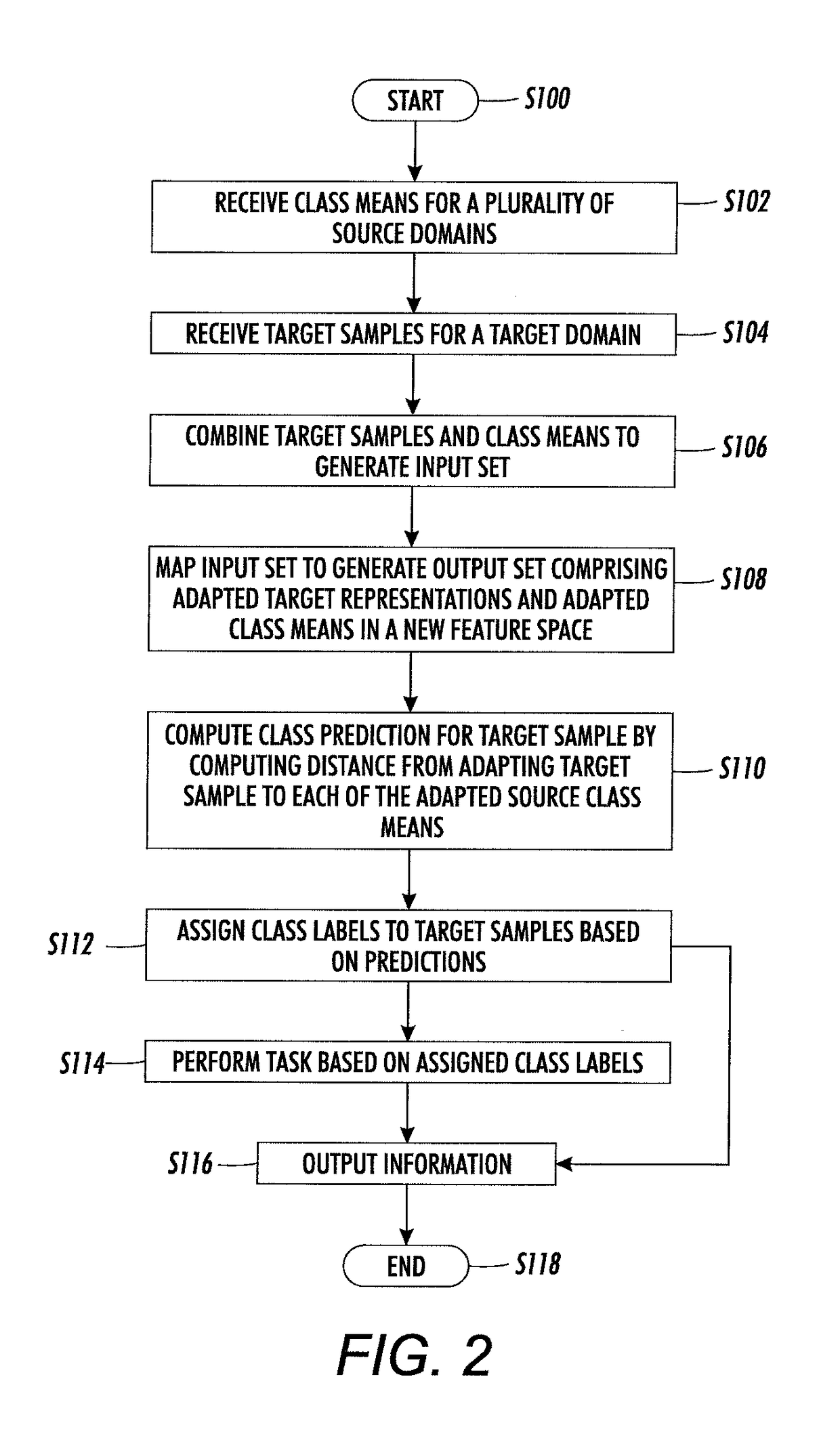

Adapted domain specific class means classifier

A domain-adapted classification system and method are disclosed. The method includes mapping an input set of representations to generate an output set of representations, using a learned transformation. The input set of representations includes a set of target samples from a target domain. The input set also includes, for each of a plurality of source domains, a class representation for each of a plurality of classes. The class representations are representative of a respective set of source samples from the respective source domain labeled with a respective class. The output set of representations includes an adapted representation of each of the target samples and an adapted class representation for each of the classes for each of the source domains. A class label is predicted for at least one of the target samples based on the output set of representations and information based on the predicted class label is output.

Owner: XEROX CORP

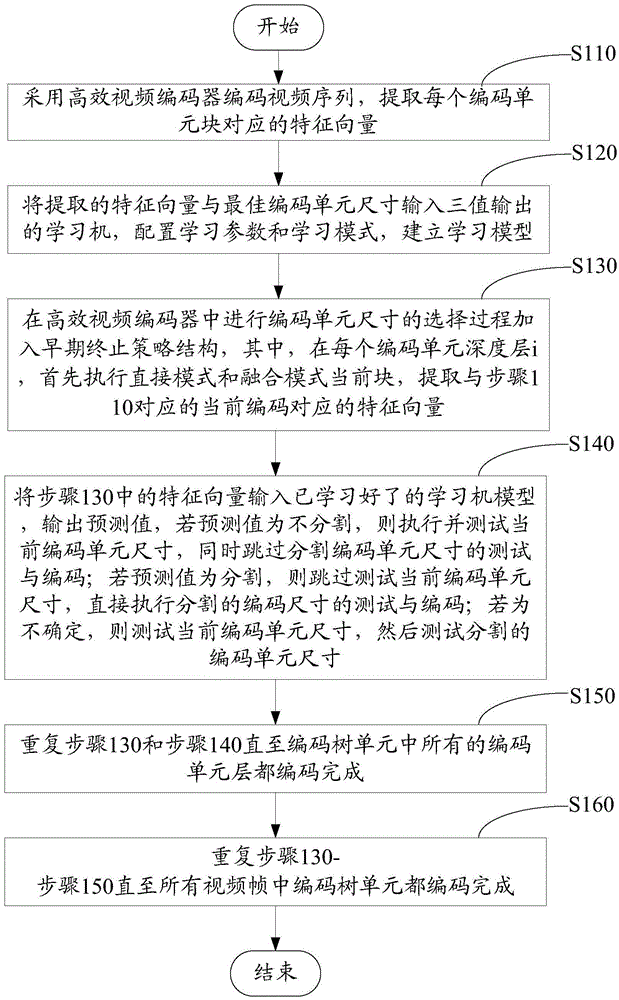

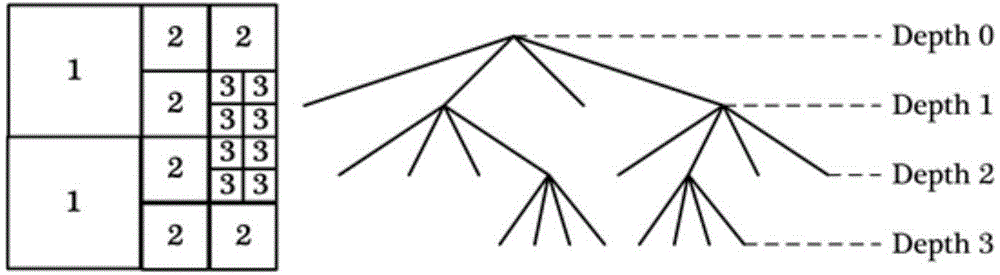

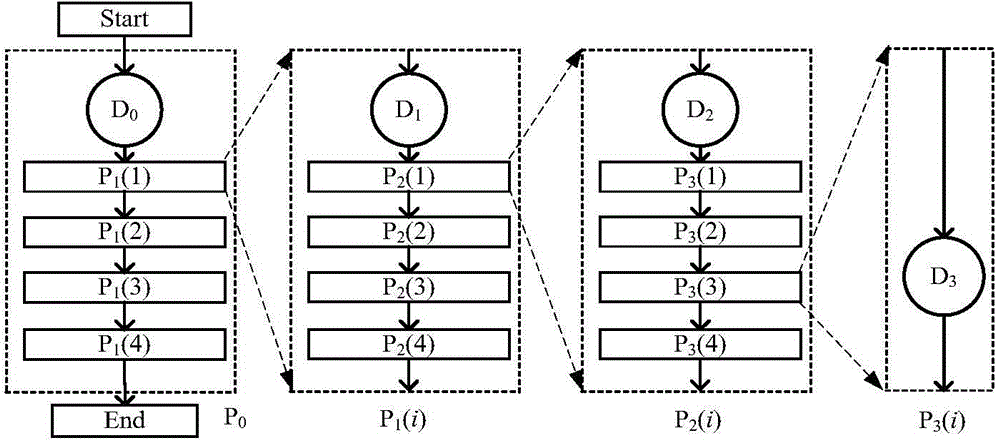

Learning-based high efficiency video coding method

Active · CN106162167A · Improve coding efficiency, Easy to learn, Digital video signal modification, Unit size, Feature vector

The invention discloses a learning-based high efficiency video coding method. The method comprises the following steps: coding a video sequence with a high efficiency video coder and extracting the feature vectors corresponding to coding unit blocks; inputting the extracted feature vectors and the optimal coding unit sizes into a learning machine with three-valued output to build a learning model; adding an early-abort strategy structure to the coding-unit-size selection process of the high efficiency video coder, first executing the skip mode and merge mode for the current block, and extracting the feature vectors for the current coding unit; inputting these feature vectors into the trained learning model, which outputs a predicted value, and processing the current coding unit size according to the corresponding early-abort strategy structure until all coding unit layers in the coding tree unit are coded; and repeating this until the coding tree units in all video frames are coded. With this method, an optimal coding process can be output according to the rate-distortion cost and computational complexity; the learning and classification performance of the classifier is improved; and video coding efficiency is increased.

Owner: SHENZHEN INST OF ADVANCED TECH CHINESE ACAD OF SCI

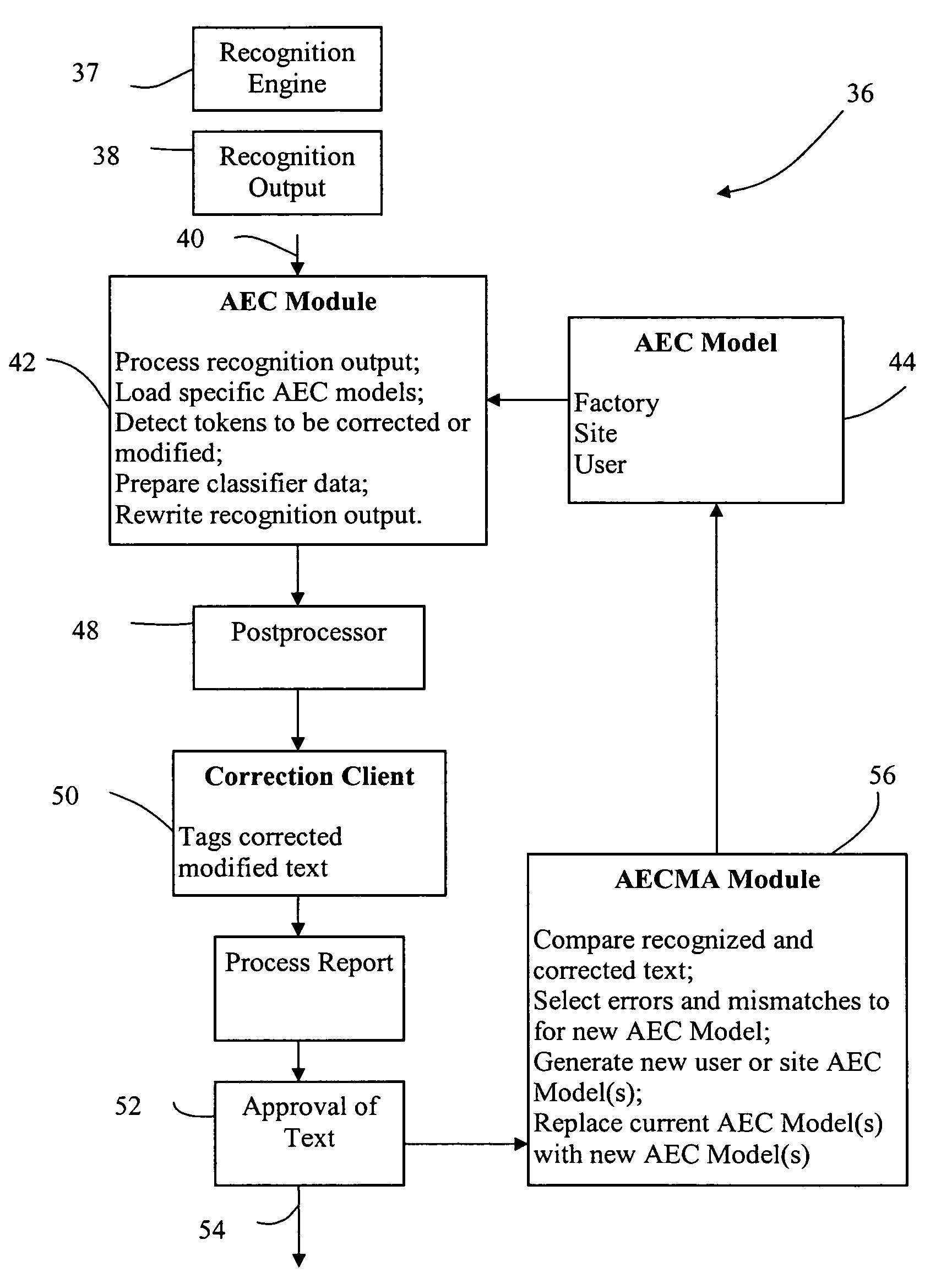

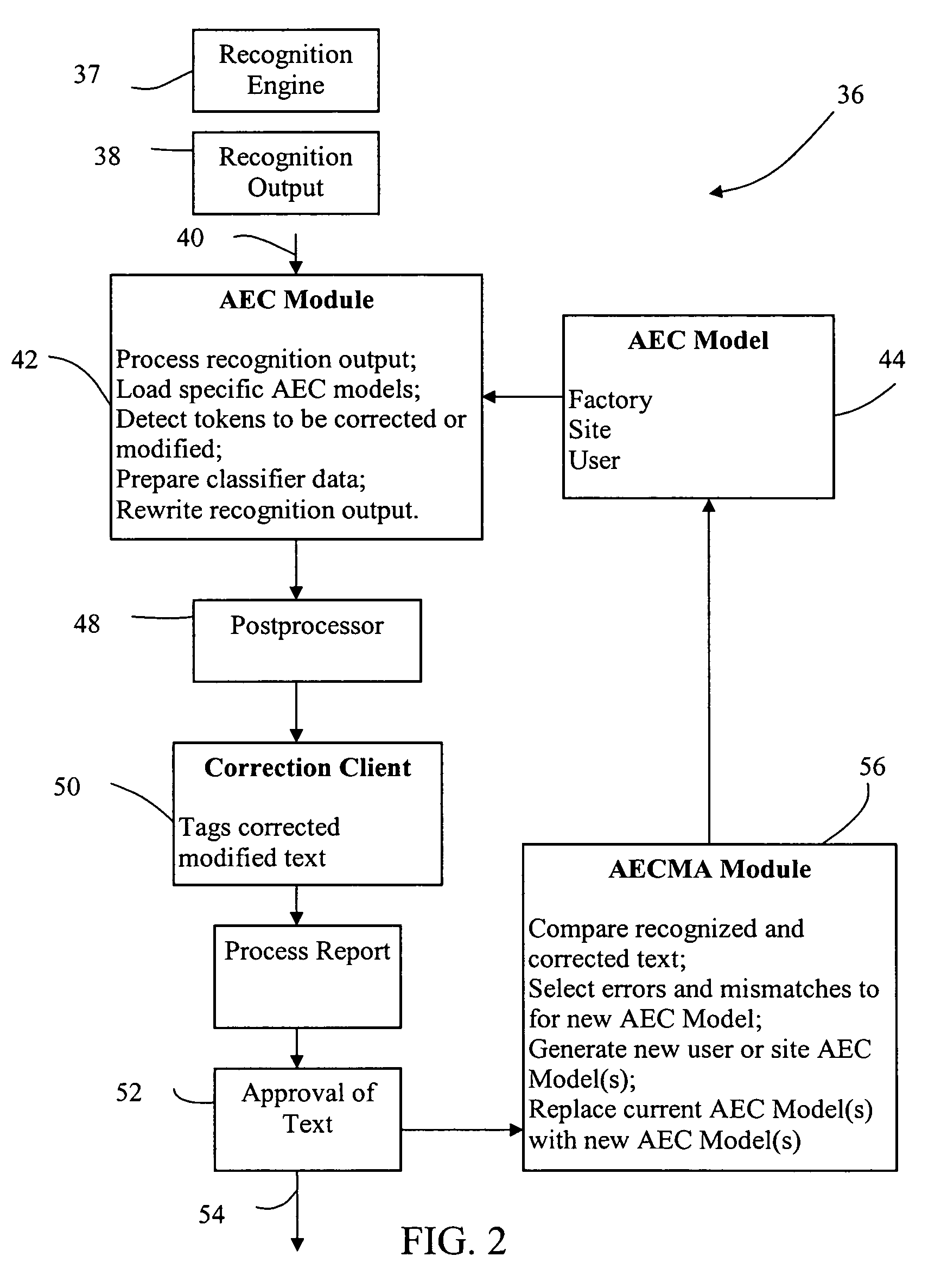

System and method for adaptive automatic error correction

Inactive · US7565282B2 · Speech recognition, Special data processing applications, Pattern recognition, Engineering

A method for adaptive automatic error and mismatch correction is disclosed for use with a system having an automatic error and mismatch correction learning module, an automatic error and mismatch correction model, and a classifier module. The learning module operates by receiving pairs of documents, identifying and selecting effective candidate errors and mismatches, and generating classifiers corresponding to these selected errors and mismatches. The correction model operates by receiving a string of interpreted speech into the automatic error and mismatch correction module, identifying target tokens in the string of interpreted speech, creating a set of classifier features according to requirements of the automatic error and mismatch correction model, comparing the target tokens against the classifier features to detect errors and mismatches in the string of interpreted speech, and modifying the string of interpreted speech based upon the classifier features.

Owner: NUANCE COMM INC

© 2025 PatSnap. All rights reserved.