Learning-based high efficiency video coding method

A learning-based high-efficiency video coding technique, applied in the field of high-efficiency video coding. It addresses problems such as reduced compression efficiency, poor coding efficiency, high computational complexity, and difficulty adapting to the coding requirements of different video systems.

Embodiment Construction

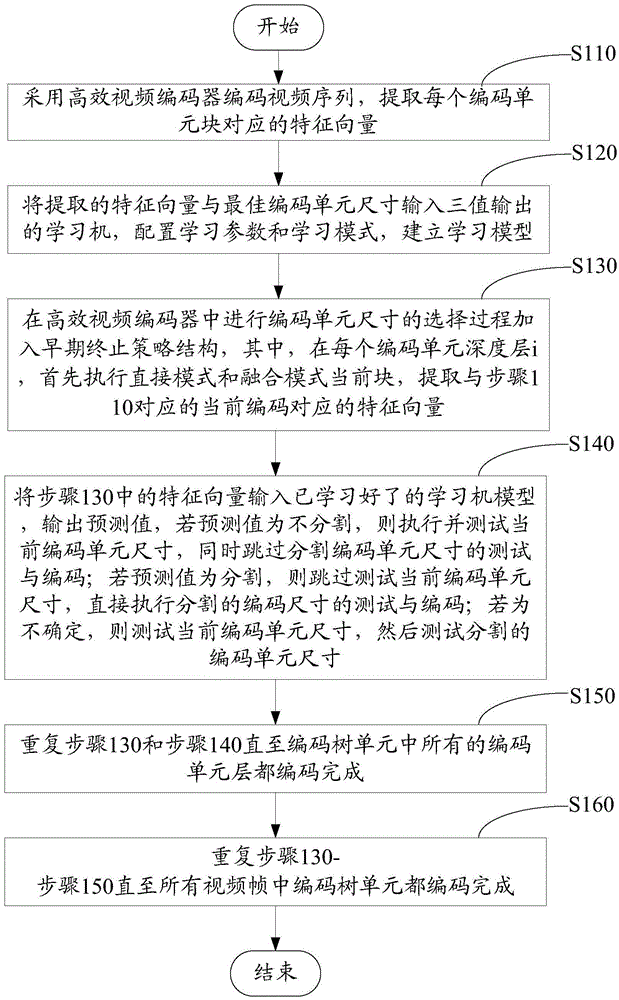

[0067] Figure 1 shows a flow chart of a learning-based high-efficiency video coding method.

[0068] A high-efficiency video coding method based on learning, comprising the following steps:

[0069] Step 110: Use a high-efficiency video encoder to encode a video sequence, and extract a feature vector corresponding to each coding unit block.

[0070] The feature vector includes features of the current coding unit block, motion information, context information, quantization parameters, etc., and an optimal coding unit size.

[0071] The features of the current coding unit block include the coded block flag $x_{CBF\_Meg}(i)$, the rate-distortion cost $x_{RD\_Meg}(i)$, the distortion $x_{D\_Meg}(i)$, and the number of coded bits $x_{Bit\_Meg}(i)$, where $i$ is the depth of the current coding unit.
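As a minimal sketch, the four current-block features at a given depth could be held in a small record like the one below. The class name `CuBlockFeatures`, its field names, and the `as_vector` helper are hypothetical and are not taken from the patent; only the four quantities and the depth index come from the text above.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class CuBlockFeatures:
    """Hypothetical container for the current-block features at depth i."""
    depth: int         # i, depth of the current coding unit
    cbf: int           # coded block flag, x_CBF_Meg(i)
    rd_cost: float     # rate-distortion cost, x_RD_Meg(i)
    distortion: float  # distortion, x_D_Meg(i)
    bits: int          # number of coded bits, x_Bit_Meg(i)

    def as_vector(self) -> List[float]:
        """Return the four feature values as floats, in the order listed in the text."""
        return [float(self.cbf), float(self.rd_cost),
                float(self.distortion), float(self.bits)]


# Illustrative values only; they are not results from the patent.
features = CuBlockFeatures(depth=1, cbf=1, rd_cost=1520.5, distortion=900.0, bits=48)
vector = features.as_vector()
```

Keeping the depth alongside the values makes it straightforward to collect one record per coding unit depth before concatenating them with the other feature groups.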

[0072] The motion information is calculated as $x_{MV\_Meg}(i) = |MV_x| + |MV_y|$, where $MV_x$ and $MV_y$ are the horizontal and vertical motion magnitudes, respectively...
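The motion feature is the sum of the absolute motion vector components. A short sketch of that arithmetic follows; the function name `motion_feature` and the sample values are assumptions made for illustration, and the units of the components (for example, quarter-pel) are not stated in the text.

```python
def motion_feature(mv_x: float, mv_y: float) -> float:
    """Sum of absolute motion vector components, |MVx| + |MVy|."""
    return abs(mv_x) + abs(mv_y)


# Illustrative motion vector, not taken from the patent.
x_mv = motion_feature(-3, 4)  # |-3| + |4| = 7
```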
