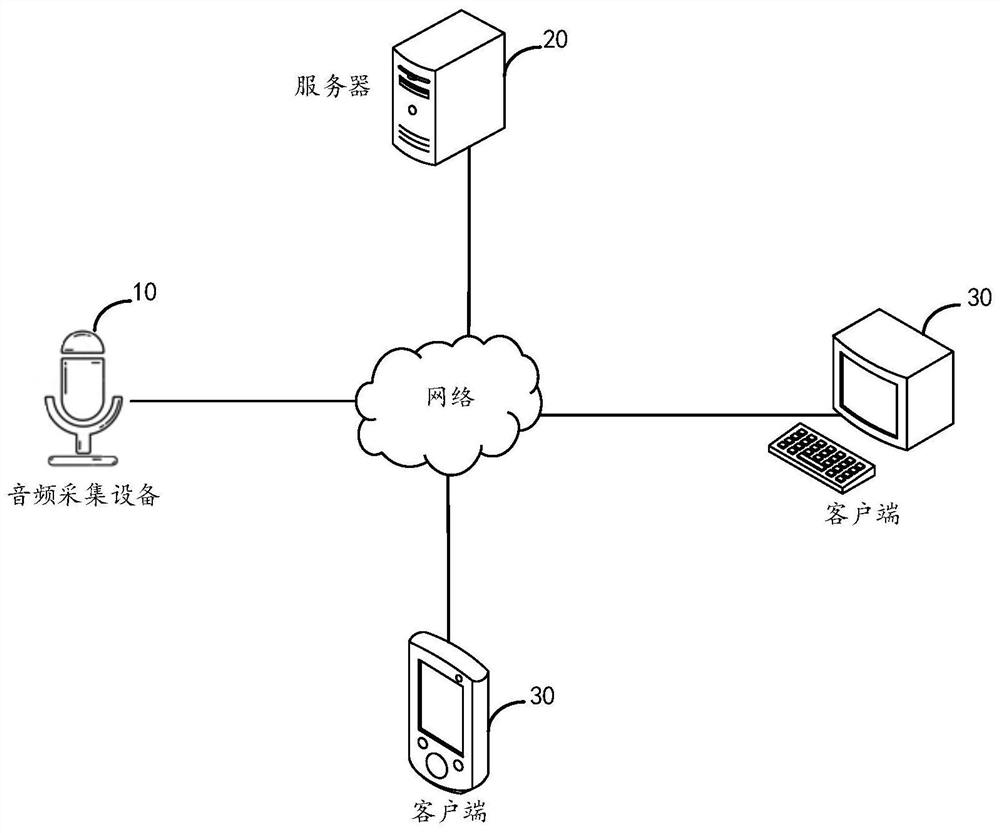

Neural network model training method, audio generation method, device, and electronic equipment

A neural network model training method applied in the field of sound synthesis. It addresses problems such as poor sound quality, with the effect of improving sound quality and enhancing deep modeling ability.

AI Technical Summary

Problems solved by technology

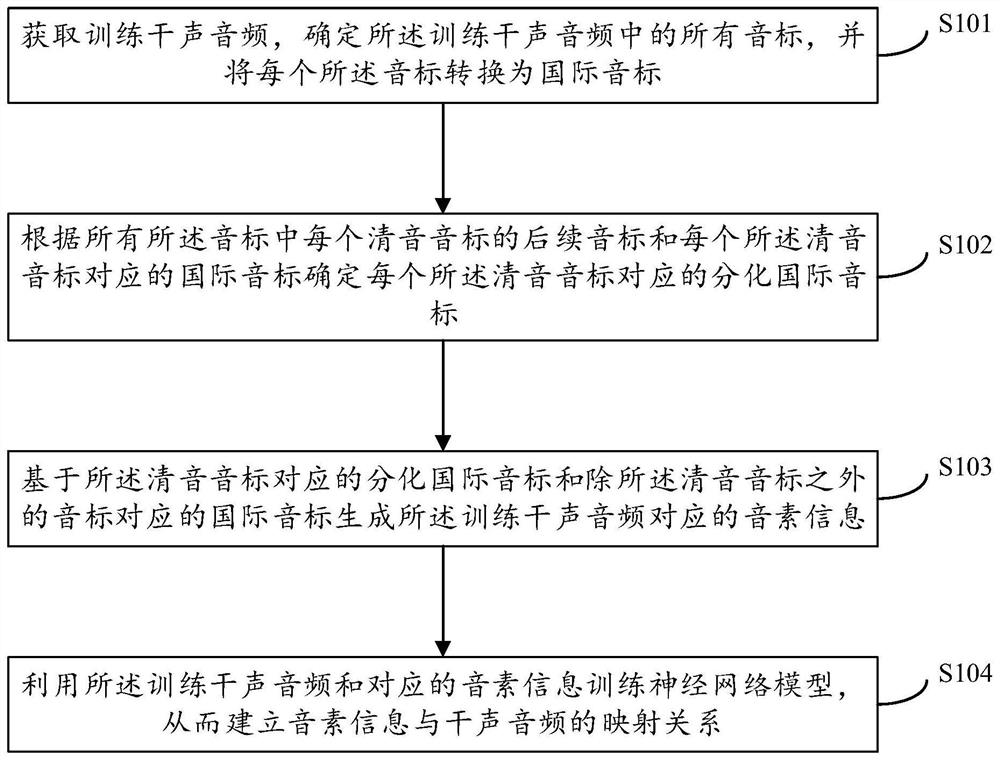

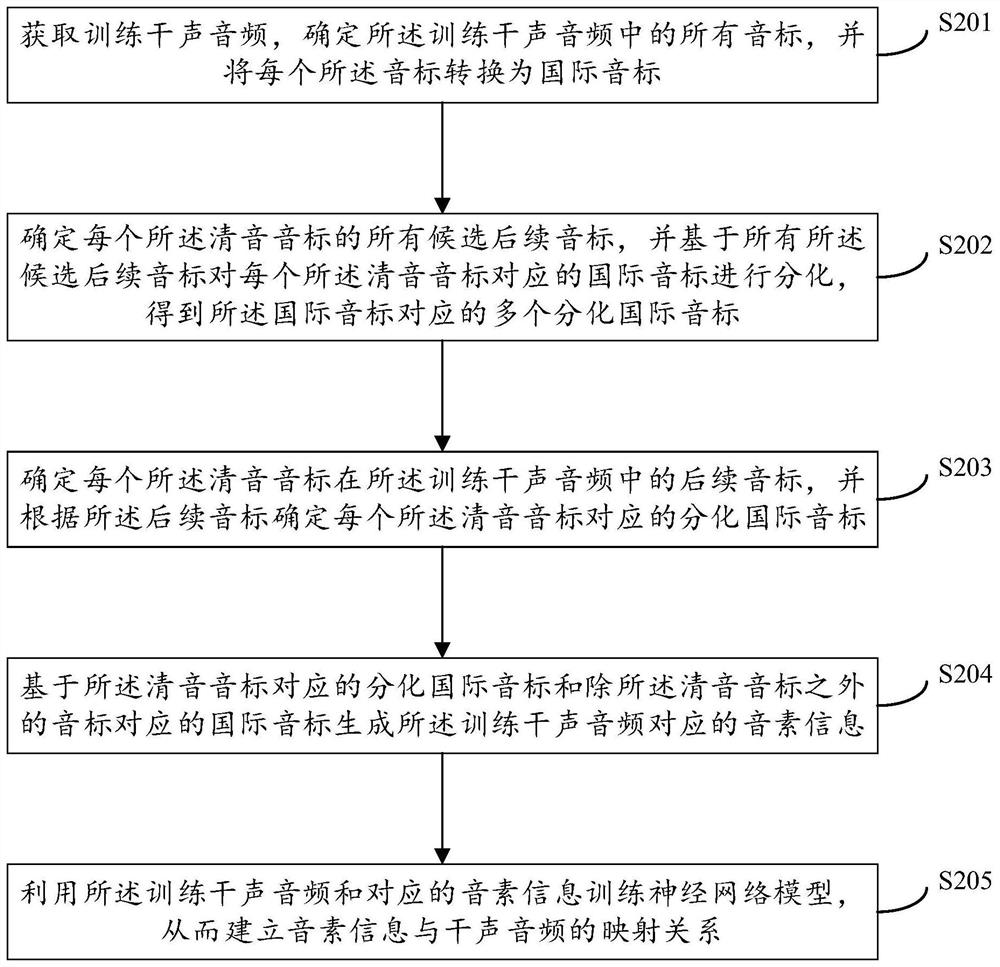

Embodiment Construction

[0043] Through research, the applicant of the present application found that unvoiced phonetic symbols are uttered without vibration of the vocal cords, and that the same unvoiced phonetic symbol is pronounced differently depending on the phonetic symbols that follow it. For example, the Chinese characters "春" and "茶" have the pinyin "chun" and "cha", respectively, which share the same unvoiced phonetic symbol "ch". When "chun" and "cha" are pronounced, the unvoiced sound is the same, but the voiced sounds that follow it differ ("un" versus "a"), so the mouth shape used for the unvoiced "ch" also differs. That is, the same unvoiced sound "ch" is produced in different ways in different Chinese characters.
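The observation in [0043] can be illustrated with a small Python sketch. It assumes, purely for illustration, that a toneless pinyin syllable is split into an initial and a final, and that an unvoiced initial is then labeled together with the final that follows it, so the "ch" of "chun" and the "ch" of "cha" become distinct units. The names `split_syllable`, `tokenize`, `UNVOICED`, and the `initial|final` label format are hypothetical and are not taken from the patent.

```python
# Hypothetical sketch: context-dependent labels for unvoiced pinyin initials.
# Two-letter initials come first so that "ch" is matched before "c".
INITIALS = ["zh", "ch", "sh", "b", "p", "m", "f", "d", "t", "n", "l",
            "g", "k", "h", "j", "q", "x", "r", "z", "c", "s"]

# Initials produced without vocal-cord vibration (m, n, l, r are voiced).
UNVOICED = {"b", "p", "f", "d", "t", "g", "k", "h", "j", "q", "x",
            "zh", "ch", "sh", "z", "c", "s"}


def split_syllable(syllable: str) -> tuple[str, str]:
    """Split a toneless pinyin syllable into (initial, final)."""
    for initial in INITIALS:
        if syllable.startswith(initial):
            return initial, syllable[len(initial):]
    return "", syllable  # zero-initial syllable such as "an"


def tokenize(syllable: str) -> list[str]:
    """Return units for one syllable; an unvoiced initial carries its right context."""
    initial, final = split_syllable(syllable)
    if initial in UNVOICED:
        # The unvoiced initial is labeled with the voiced final that follows it.
        return [f"{initial}|{final}", final]
    return [initial, final] if initial else [final]


if __name__ == "__main__":
    print(tokenize("chun"), tokenize("cha"))
```

Under this assumed scheme, `tokenize("chun")` yields `["ch|un", "un"]` and `tokenize("cha")` yields `["ch|a", "a"]`, so a model trained on these units could learn a separate realization of "ch" for each following vowel context.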

[0044] In the related art, each unvoiced phonetic symbol corresponds to a single IPA (International Phonetic Alphabet) symbol. In the above example, the IPA corresponding to the unvoiced phone...
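For contrast, the one-to-one mapping stated in the first sentence of [0044] can be sketched as a plain lookup table. The table is a hypothetical excerpt covering only six pinyin initials with their standard IPA values, and the function name `initial_ipa` is likewise an illustration rather than part of the source. Because the lookup ignores whatever follows the initial, "chun" and "cha" receive the same symbol.

```python
# Hypothetical excerpt of a related-art lookup: one IPA symbol per unvoiced initial.
INITIAL_TO_IPA = {"zh": "tʂ", "ch": "tʂʰ", "sh": "ʂ", "z": "ts", "c": "tsʰ", "s": "s"}


def initial_ipa(syllable: str) -> str:
    """Map the unvoiced initial of a pinyin syllable to one fixed IPA symbol."""
    # Try longer initials first so that "ch" is not read as "c".
    for initial in sorted(INITIAL_TO_IPA, key=len, reverse=True):
        if syllable.startswith(initial):
            return INITIAL_TO_IPA[initial]
    raise KeyError(f"no unvoiced initial listed for {syllable!r}")


if __name__ == "__main__":
    # The following final plays no role, so both syllables map to the same symbol.
    print(initial_ipa("chun"), initial_ipa("cha"))
```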
