Human body posture estimation method based on relation analysis network

A human pose estimation technology based on a relation analysis network, applied in the field of human pose estimation. It addresses the problem of missing detailed information and improves detection accuracy.


Embodiment

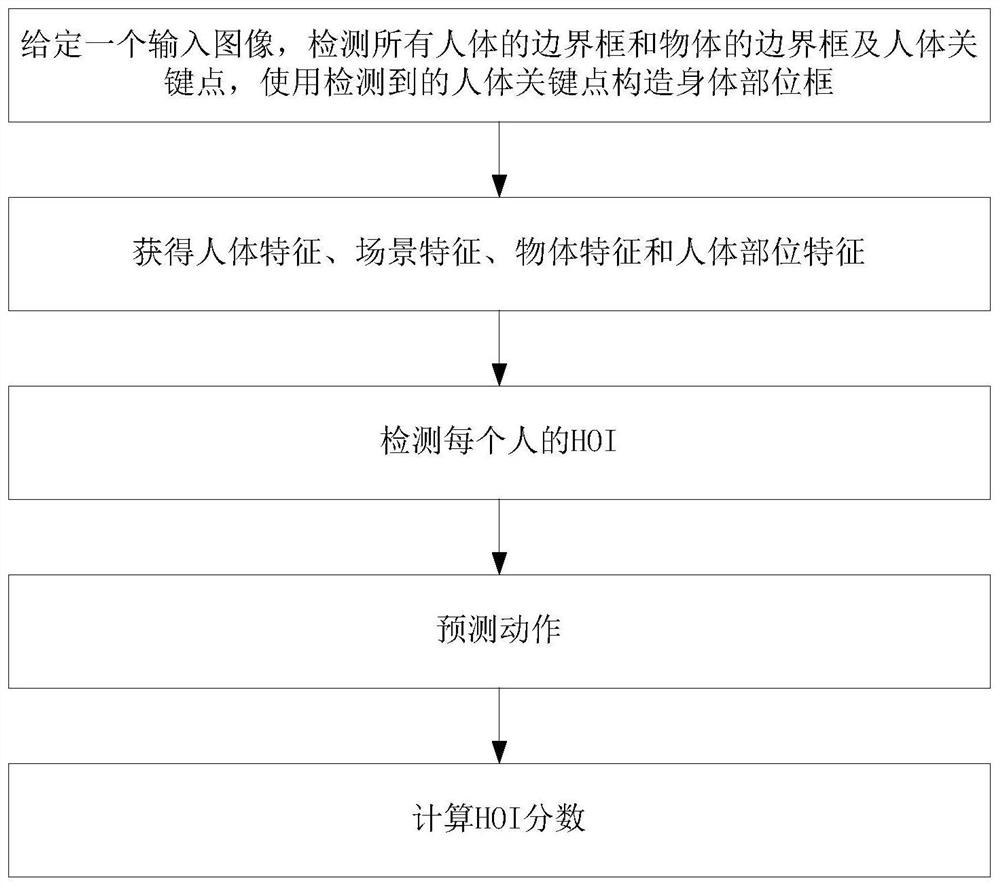

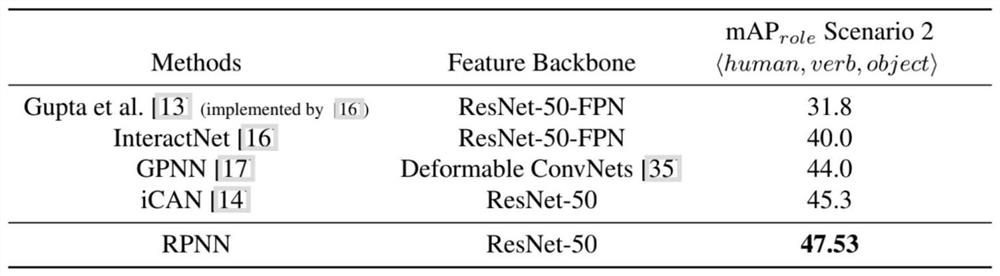

[0100] The human body posture estimation method based on the relation analysis network of this embodiment specifically comprises the following steps:

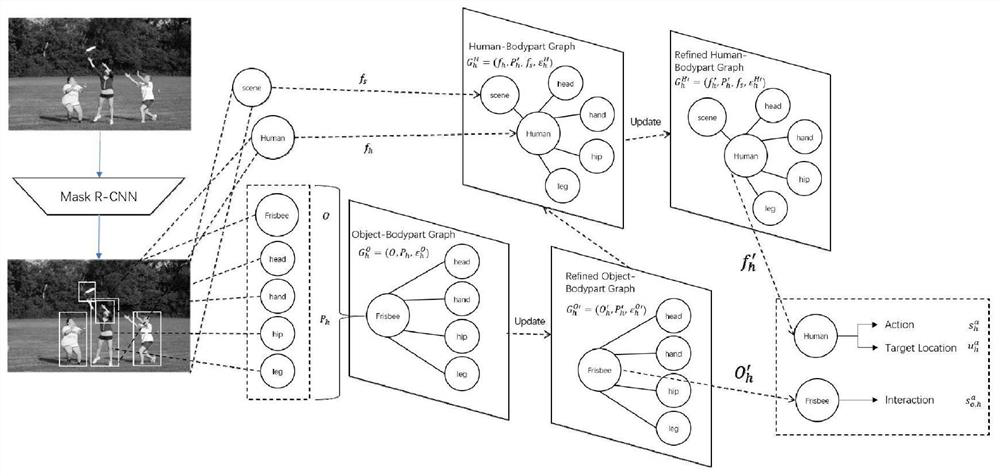

[0101] Step 1. Given an input image, use Mask R-CNN to detect the bounding boxes $b_h$ of all humans $h$, the bounding boxes $b_o$ of objects, and the human keypoints $kp_h$; then use the detected keypoints $kp_h$ to construct the body part boxes $b_{p,h}$.
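The text does not say how a body part box is derived from a keypoint. A minimal sketch, assuming one square box per keypoint centred on that keypoint, with a side length proportional to the person box and clipped to it; the function name `body_part_boxes` and the `scale` parameter are hypothetical, and keypoints are assumed to be plain `(x, y)` pixel coordinates.

```python
from typing import List, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2)
Point = Tuple[float, float]              # (x, y) keypoint location

def body_part_boxes(human_box: Box, keypoints: List[Point],
                    scale: float = 0.25) -> List[Box]:
    """Build one box b_{p,h} per keypoint in kp_h (hypothetical rule).

    Each box is a square centred on its keypoint whose side is `scale`
    times the longer side of the human box b_h; the square is then
    clipped to b_h so that no part box extends outside the person.
    """
    x1, y1, x2, y2 = human_box
    half = 0.5 * scale * max(x2 - x1, y2 - y1)
    boxes = []
    for kx, ky in keypoints:
        boxes.append((max(x1, kx - half), max(y1, ky - half),
                      min(x2, kx + half), min(y2, ky + half)))
    return boxes
```

For a person box `(0, 0, 100, 200)` and `scale=0.25`, each part box is 50 pixels on a side before clipping, so a keypoint at `(50, 20)` yields `(25.0, 0, 75.0, 45.0)`. Tying the box size to the person box keeps part regions roughly scale-invariant across near and far people.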

[0102] Step 2. Extract features from the shared ResNet-50 C4 feature map through the ROI Align operation and feed them into ResNet-50 C5 to obtain the human features $f_h$, the scene feature $f_s$, the object features $f_o$, and the body part features $f_{p,h}$. The scene feature $f_s$ is obtained by adaptive average pooling: it is likewise extracted from the shared ResNet-50 C4 feature map and fed into ResNet-50 C5.
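Adaptive average pooling reduces a feature map of any spatial size to a fixed output size, which is what lets a whole-image map become a fixed-length scene feature. The pure-Python sketch below stands in for a framework pooling layer; it uses the same bin rule as PyTorch's `nn.AdaptiveAvgPool2d`. The output size is not given in the text, so `scene_feature` assumes a global 1×1 pool per channel; both function names are hypothetical.

```python
from typing import List

Grid = List[List[float]]

def adaptive_avg_pool2d(fmap: Grid, out_h: int, out_w: int) -> Grid:
    """Average-pool an H x W map to out_h x out_w.

    Bin i along an axis of length n covers [floor(i*n/out), ceil((i+1)*n/out)),
    so every input cell is used and bins may overlap when n % out != 0.
    """
    h, w = len(fmap), len(fmap[0])
    pooled = []
    for i in range(out_h):
        r0 = (i * h) // out_h
        r1 = -(-((i + 1) * h) // out_h)  # integer ceiling division
        row = []
        for j in range(out_w):
            c0 = (j * w) // out_w
            c1 = -(-((j + 1) * w) // out_w)
            cells = [fmap[r][c] for r in range(r0, r1) for c in range(c0, c1)]
            row.append(sum(cells) / len(cells))
        pooled.append(row)
    return pooled

def scene_feature(channels: List[Grid]) -> List[float]:
    """Hypothetical f_s: a global (1 x 1) adaptive average pool per channel,
    turning a C x H x W map into a C-dimensional vector."""
    return [adaptive_avg_pool2d(ch, 1, 1)[0][0] for ch in channels]
```

Pooling a 4×4 map holding 0 to 15 down to 2×2 averages each 2×2 quadrant, giving `[[2.5, 4.5], [10.5, 12.5]]`; pooling it to 1×1 gives its overall mean, 7.5.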

[0103] Step 3. For the HOI (human-object interaction) detection of each person, an Object-Bodypart graph is composed of the body parts and the objects. In most cases, a...
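The paragraph is cut off before it defines the graph's edges or how they are used, so the following is only a hypothetical reading. It assumes body part nodes (features $f_{p,h}$) and object nodes (features $f_o$) joined by a complete bipartite set of edges, with each edge weighted by a softmax over body parts of the dot product $\langle f_o, f_{p,h} \rangle$. Each object then receives the weighted sum of part features as a message. The function `object_bodypart_graph` and this attention rule are assumptions, not the patent's stated method.

```python
import math
from typing import Dict, List, Tuple

Vec = List[float]

def dot(a: Vec, b: Vec) -> float:
    return sum(x * y for x, y in zip(a, b))

def softmax(xs: List[float]) -> List[float]:
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def object_bodypart_graph(part_feats: List[Vec], obj_feats: List[Vec]):
    """Hypothetical Object-Bodypart graph for one person.

    Nodes: body parts p (features f_{p,h}) and objects o (features f_o).
    Edges: every (object, body part) pair, i.e. a complete bipartite graph.
    Edge weight: softmax over body parts of <f_o, f_{p,h}>.
    Returns the edge weights keyed by (object index, part index) and, per
    object, the attention-weighted sum of body-part features (the message).
    """
    edges: Dict[Tuple[int, int], float] = {}
    messages: List[Vec] = []
    dim = len(part_feats[0])
    for o, fo in enumerate(obj_feats):
        weights = softmax([dot(fo, fp) for fp in part_feats])
        msg = [0.0] * dim
        for p, (w, fp) in enumerate(zip(weights, part_feats)):
            edges[(o, p)] = w
            msg = [m + w * x for m, x in zip(msg, fp)]
        messages.append(msg)
    return edges, messages
```

Under this reading, an object whose feature aligns with one body part (for example, a cup and a hand) concentrates almost all of its edge weight on that part, which is one way such a graph could recover the detailed part-level cues the summary says are otherwise missing.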
