Emotion recognition method based on context interaction relationship

An emotion recognition technology based on context, applied in biometric recognition, character and pattern recognition, and the acquisition/recognition of facial features. It can solve problems such as ignoring contextual emotional interaction, increased uncertainty of body or scene emotion, and reduced model prediction ability.

Examples

Embodiment 1

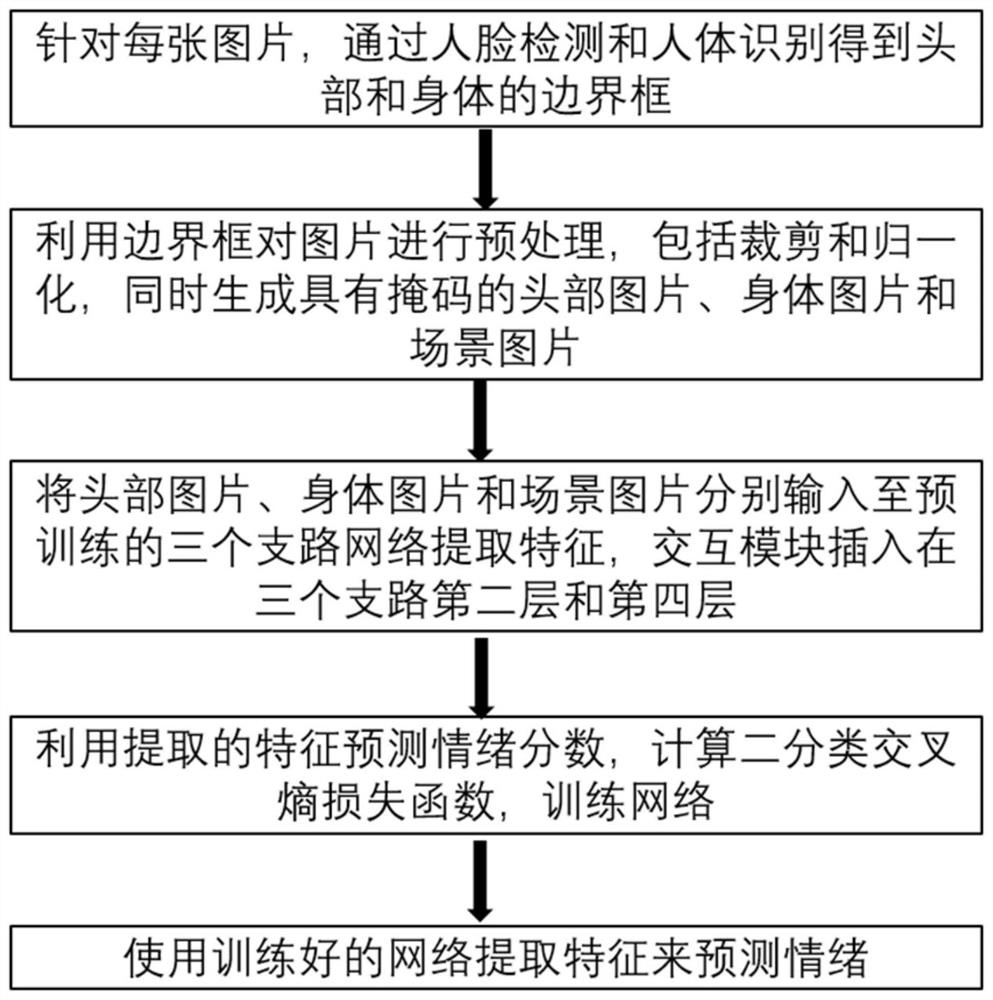

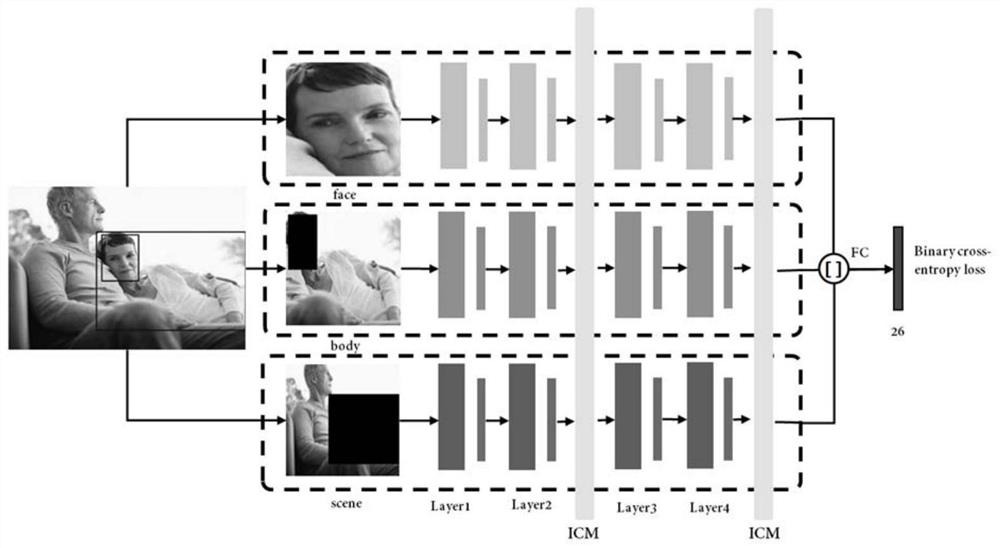

[0072] As shown in Figure 1, this embodiment provides an emotion recognition method based on contextual interaction, comprising the following steps:

[0073] S1: Perform face detection and human body detection on each picture in the collected data set to obtain a face bounding box and a human body bounding box;

[0074] In this embodiment, OpenPose is used for human body bounding box detection and key point detection, and OpenFace is used for face bounding box detection and key point detection;
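OpenPose itself reports per-person key points rather than a ready-made box, so one common way to obtain a body bounding box is to enclose every confidently detected key point. The sketch below assumes that approach; the patent text shown here does not say how the box is derived. The `min_conf` threshold and the function names are hypothetical. OpenPose's JSON output stores each person's key points as a flat `pose_keypoints_2d` list of `x, y, confidence` values, which the helper regroups into triples.

```python
from typing import List, Optional, Sequence, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2): upper-left, lower-right


def flat_to_triples(flat: Sequence[float]) -> List[Tuple[float, float, float]]:
    """Regroup a flat [x1, y1, c1, x2, y2, c2, ...] list into (x, y, c) triples."""
    return list(zip(flat[0::3], flat[1::3], flat[2::3]))


def keypoints_to_box(keypoints: Sequence[Tuple[float, float, float]],
                     min_conf: float = 0.1) -> Optional[Box]:
    """Enclose all key points whose confidence is >= min_conf (hypothetical threshold).

    Returns None when no key point passes, i.e. the body counts as undetected.
    """
    pts = [(x, y) for x, y, c in keypoints if c >= min_conf]
    if not pts:
        return None
    xs = [x for x, _ in pts]
    ys = [y for _, y in pts]
    return (min(xs), min(ys), max(xs), max(ys))
```

Undetected OpenPose key points are reported with zero confidence, so filtering on confidence keeps them from dragging the box toward the image origin.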

[0075] If no human body bounding box or face bounding box is detected, the coordinates of the human body bounding box [upper-left abscissa, upper-left ordinate, lower-right abscissa, lower-right ordinate] are set to [0.25 times the image width, 0.25 times the image height, 0.75 times the image width, 0.75 times the image height], and the coordinates of the face bounding box [upper-left abscissa, upper-left ordinate, lower-right abscissa, lower-right ordi...
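The fallback rule for a missing body box amounts to using the central half of the image in each dimension. A minimal sketch of that rule follows; the function name is hypothetical, and only the body-box default is covered because the face-box default values are cut off in the text above.

```python
from typing import Optional, Tuple

Box = Tuple[float, float, float, float]  # (upper-left x, upper-left y, lower-right x, lower-right y)


def body_box_or_default(detected: Optional[Box], width: int, height: int) -> Box:
    """Return the detected body box, or the central default box from [0075] if none was found."""
    if detected is not None:
        return detected
    return (0.25 * width, 0.25 * height, 0.75 * width, 0.75 * height)


# Usage: a 640x480 picture with no detected body
print(body_box_or_default(None, 640, 480))  # (160.0, 120.0, 480.0, 360.0)
```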

Embodiment 2

[0116] This embodiment provides an emotion recognition system based on context interaction relationships, comprising: a bounding box extraction module, a picture preprocessing module, a training image tuple construction module, a benchmark neural network construction module, a benchmark neural network initialization module, an interaction module construction module, an interaction module initialization module, a feature splicing and fusion module, a training module, and a testing module;
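In this kind of patent, "feature splicing" usually means concatenating feature vectors end to end. The sketch below assumes that reading: it concatenates hypothetical face, body, and scene feature vectors and then scores them with a plain linear layer, standing in for the fusion step. The actual fusion and interaction modules are not described in the excerpt, so the weights, bias, and function names here are illustrative placeholders only.

```python
from typing import List, Sequence


def splice_features(face: Sequence[float], body: Sequence[float],
                    scene: Sequence[float]) -> List[float]:
    """Concatenate the three branch features into one vector (assumed meaning of 'splicing')."""
    return list(face) + list(body) + list(scene)


def linear_scores(features: Sequence[float], weights: Sequence[Sequence[float]],
                  bias: Sequence[float]) -> List[float]:
    """Hypothetical fusion layer: one weight row and one bias per emotion class."""
    if len(weights) != len(bias):
        raise ValueError("need one bias per weight row")
    scores = []
    for row, b in zip(weights, bias):
        if len(row) != len(features):
            raise ValueError("weight row length must match feature length")
        scores.append(sum(w * f for w, f in zip(row, features)) + b)
    return scores


def predict(scores: Sequence[float]) -> int:
    """Index of the highest-scoring emotion class."""
    return max(range(len(scores)), key=lambda i: scores[i])
```

Concatenation keeps each branch's features intact and leaves it to the fusion layer to learn how much weight the face, body, and scene branches should receive.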

[0117] In this embodiment, the bounding box extraction module is used to perform face detection and human body detection on the pictures in the data set to obtain a human face bounding box and a human body bounding box;

[0118] In this embodiment, the picture preprocessing module is used to preprocess the pictures according to the face bounding box and the human body bounding box, dividing each real picture into a face picture, a masked body picture, and a masked scene picture;
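The excerpt does not say how the masks are applied. One plausible reading, assumed here, is that the body picture has the face region blanked out and the scene picture has the body region blanked out, so each branch sees information the others do not. The sketch uses NumPy; the function names and the zero fill value are hypothetical choices.

```python
import numpy as np


def _to_pixels(box, width, height):
    """Round a float (x1, y1, x2, y2) box to ints and clip it to the image."""
    x1, y1, x2, y2 = (int(round(v)) for v in box)
    return max(x1, 0), max(y1, 0), min(x2, width), min(y2, height)


def split_picture(image: np.ndarray, face_box, body_box):
    """Return (face crop, body crop with face zeroed, full scene with body zeroed).

    Works for grayscale (H, W) and color (H, W, C) arrays; the input is not modified.
    """
    h, w = image.shape[:2]
    fx1, fy1, fx2, fy2 = _to_pixels(face_box, w, h)
    bx1, by1, bx2, by2 = _to_pixels(body_box, w, h)

    face = image[fy1:fy2, fx1:fx2].copy()

    # Body crop, with the face region (shifted into crop coordinates) masked out.
    body = image[by1:by2, bx1:bx2].copy()
    body[max(fy1 - by1, 0):max(fy2 - by1, 0), max(fx1 - bx1, 0):max(fx2 - bx1, 0)] = 0

    # Whole picture, with the body region masked out.
    scene = image.copy()
    scene[by1:by2, bx1:bx2] = 0
    return face, body, scene
```

Masking with zeros rather than cropping keeps the scene picture at full resolution, so the spatial layout of the surroundings is preserved for the scene branch.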

[0119] In this e...

Embodiment 3

[0128] This embodiment provides a storage medium, such as a ROM, RAM, magnetic disk, or optical disk, that stores one or more programs for implementing the emotion recognition method based on context interaction.
