Three-dimensional scene semantic analysis method based on HoloLens space mapping

A technology for 3D scene semantic analysis in the field of computer vision. It addresses problems such as the inability to analyze the semantics of 3D scenes, and improves spatial mapping capabilities.


Embodiment 1

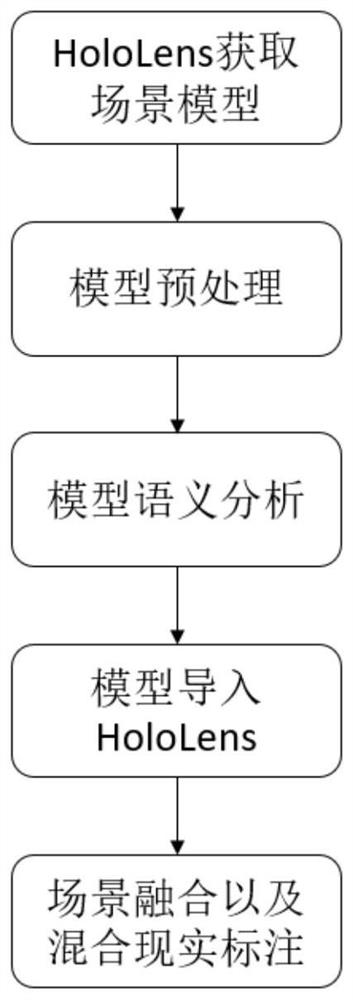

[0057] The three-dimensional scene semantic analysis method based on HoloLens spatial mapping of the present invention is shown in Figure 1. The specific steps are as follows:

[0058] Step 1: Scan and reconstruct the real indoor scene with HoloLens to obtain mesh data a of the scene's three-dimensional spatial mapping;
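The method does not say how the HoloLens mesh is exported. As a minimal sketch, assuming the spatial-mapping mesh has been saved as a Wavefront OBJ file (vertex `v` and face `f` records), mesh data a can be loaded into a plain vertex/triangle structure. The `Mesh` class and `parse_obj` function are hypothetical names used only for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class Mesh:
    """Triangle mesh standing in for 'mesh data a' (hypothetical layout)."""
    vertices: list = field(default_factory=list)   # [(x, y, z), ...]
    triangles: list = field(default_factory=list)  # [(i0, i1, i2), ...], zero-based


def parse_obj(text: str) -> Mesh:
    """Parse vertex ('v') and face ('f') records from Wavefront OBJ text.

    Polygons with more than three vertices are fan-triangulated; texture and
    normal indices in tokens such as '1/1/1' are ignored.
    """
    mesh = Mesh()
    for line in text.splitlines():
        parts = line.split()
        if not parts or parts[0].startswith("#"):
            continue  # skip blank lines and comments
        if parts[0] == "v":
            x, y, z = (float(p) for p in parts[1:4])
            mesh.vertices.append((x, y, z))
        elif parts[0] == "f":
            # OBJ indices are 1-based; negative indices count back from the last vertex.
            idx = []
            for token in parts[1:]:
                i = int(token.split("/")[0])
                idx.append(i - 1 if i > 0 else len(mesh.vertices) + i)
            for k in range(1, len(idx) - 1):
                mesh.triangles.append((idx[0], idx[k], idx[k + 1]))
    return mesh


if __name__ == "__main__":
    sample = "v 0 0 0\nv 1 0 0\nv 1 1 0\nv 0 1 0\nf 1 2 3 4\n"
    mesh = parse_obj(sample)
    print(len(mesh.vertices), mesh.triangles)  # 4 [(0, 1, 2), (0, 2, 3)]
```

Fan triangulation is enough for the convex faces typical of reconstruction meshes; a general polygon would need a proper triangulation routine.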

[0059] Step 2: Convert mesh data a into point cloud data b, then preprocess and label point cloud data b;
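The source does not specify how the mesh is converted or which preprocessing is applied. One common approach, shown here as an assumption rather than the patent's procedure, is area-weighted surface sampling: pick triangles with probability proportional to their area, then place a point uniformly inside each with barycentric sampling. The centering and unit-sphere scaling step is only an example of preprocessing. All function names are hypothetical.

```python
import math
import random


def triangle_area(a, b, c):
    """Area of triangle abc: half the norm of the cross product (b - a) x (c - a)."""
    u = [b[i] - a[i] for i in range(3)]
    v = [c[i] - a[i] for i in range(3)]
    cross = (u[1] * v[2] - u[2] * v[1],
             u[2] * v[0] - u[0] * v[2],
             u[0] * v[1] - u[1] * v[0])
    return 0.5 * math.sqrt(sum(x * x for x in cross))


def sample_point_cloud(vertices, triangles, n_points, seed=0):
    """Draw n_points uniformly distributed over the mesh surface."""
    rng = random.Random(seed)
    tris = [(vertices[i], vertices[j], vertices[k]) for i, j, k in triangles]
    areas = [triangle_area(*t) for t in tris]
    # Larger triangles receive proportionally more samples.
    chosen = rng.choices(tris, weights=areas, k=n_points)
    points = []
    for a, b, c in chosen:
        r1, r2 = rng.random(), rng.random()
        s = math.sqrt(r1)
        # Uniform point in a triangle: (1 - sqrt(r1)) a + sqrt(r1)(1 - r2) b + sqrt(r1) r2 c
        w0, w1, w2 = 1 - s, s * (1 - r2), s * r2
        points.append(tuple(w0 * a[i] + w1 * b[i] + w2 * c[i] for i in range(3)))
    return points


def center_and_scale(points):
    """Example preprocessing: move the centroid to the origin and fit inside the unit sphere."""
    n = len(points)
    centroid = [sum(p[i] for p in points) / n for i in range(3)]
    shifted = [tuple(p[i] - centroid[i] for i in range(3)) for p in points]
    radius = max(math.sqrt(sum(x * x for x in p)) for p in shifted) or 1.0
    return [tuple(x / radius for x in p) for p in shifted]
```

Sampling by area avoids over-representing regions where the reconstruction happens to produce many small triangles; labeling of the resulting points is a separate manual or tool-assisted step not shown here.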

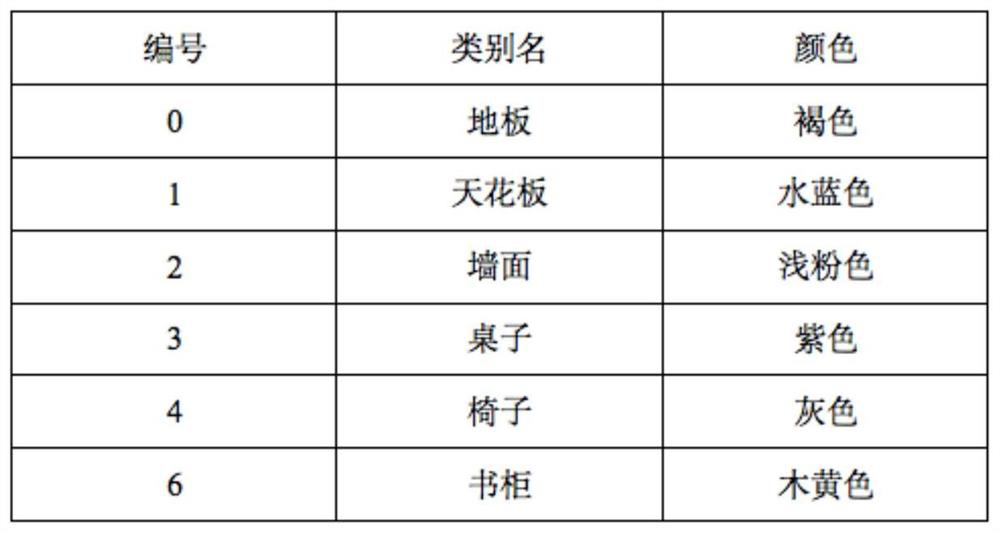

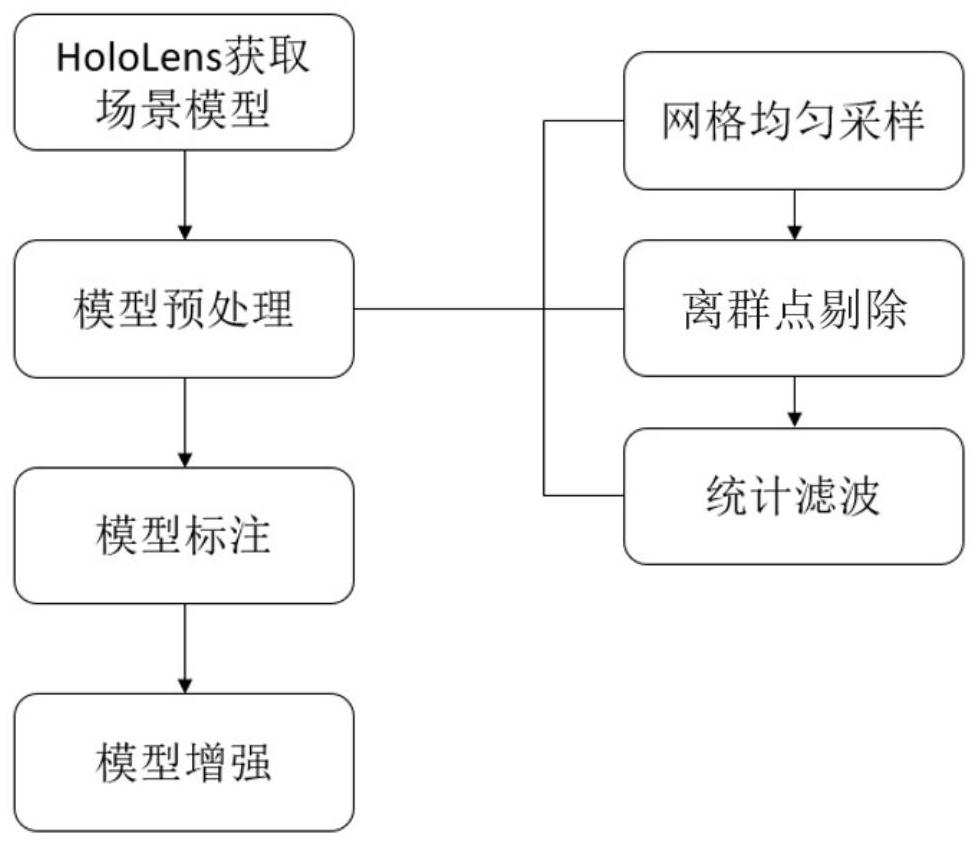

[0060] Step 3: Repeat Steps 1 and 2 until all required indoor data have been collected and labeled, then build an indoor point cloud dataset and a category information lookup table. The category information lookup table is shown in Figure 2, and the dataset production process is shown in Figure 3;
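To make Step 3 concrete, the sketch below shows one possible shape for the category information lookup table and for the stored dataset. The category names and colors are placeholders (the actual table is the one in Figure 2), and the plain-text `x y z label_id` file layout is an assumption, not a format the patent specifies.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Category:
    label_id: int
    name: str
    color: tuple  # RGB used when visualizing this category


# Hypothetical entries; the real categories are those listed in Figure 2.
CATEGORY_TABLE = [
    Category(0, "floor", (128, 128, 128)),
    Category(1, "wall", (200, 200, 0)),
    Category(2, "table", (0, 128, 255)),
    Category(3, "chair", (255, 64, 64)),
]
NAME_TO_ID = {c.name: c.label_id for c in CATEGORY_TABLE}
ID_TO_CATEGORY = {c.label_id: c for c in CATEGORY_TABLE}


def to_dataset_lines(points, names):
    """Serialize one labeled scan as 'x y z label_id' text lines."""
    if len(points) != len(names):
        raise ValueError("every point needs exactly one category name")
    return [f"{x:.4f} {y:.4f} {z:.4f} {NAME_TO_ID[n]}"
            for (x, y, z), n in zip(points, names)]


def parse_dataset_lines(lines):
    """Inverse of to_dataset_lines: returns (points, label_ids)."""
    points, labels = [], []
    for line in lines:
        x, y, z, label = line.split()
        points.append((float(x), float(y), float(z)))
        labels.append(int(label))
    return points, labels


if __name__ == "__main__":
    lines = to_dataset_lines([(0.0, 0.0, 0.0), (1.0, 0.5, 1.2)], ["floor", "table"])
    print(lines)  # ['0.0000 0.0000 0.0000 0', '1.0000 0.5000 1.2000 2']
```

Storing integer IDs in the dataset and resolving names and colors through the lookup table keeps the per-point files compact and lets the table change without rewriting every scan.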

[0061] Step 4: Train the 3D scene semantic neural network and save the trained model M;
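The network architecture is not given in this excerpt, and a real 3D semantic segmentation network would require a deep-learning framework. The sketch below is therefore a deliberately tiny stand-in that shows only the train-then-save shape of Step 4: a multinomial logistic-regression classifier over raw per-point coordinates, trained with stochastic gradient descent and serialized to JSON as "model M". It is not the patent's network, and every name in it is hypothetical.

```python
import json
import math
import random


def softmax(scores):
    """Numerically stable softmax over a list of class scores."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def train_point_classifier(points, labels, n_classes, epochs=200, lr=0.5, seed=0):
    """Multinomial logistic regression on (x, y, z) features, trained by SGD."""
    rng = random.Random(seed)
    # weights[c] = [w_x, w_y, w_z, bias] for class c
    weights = [[0.0] * 4 for _ in range(n_classes)]
    order = list(range(len(points)))
    for _ in range(epochs):
        rng.shuffle(order)
        for idx in order:
            feat = list(points[idx]) + [1.0]
            probs = softmax([sum(w * f for w, f in zip(wc, feat)) for wc in weights])
            for c in range(n_classes):
                # Cross-entropy gradient w.r.t. the class-c score is p_c - 1[c == y].
                grad = probs[c] - (1.0 if c == labels[idx] else 0.0)
                for k in range(4):
                    weights[c][k] -= lr * grad * feat[k]
    return {"n_classes": n_classes, "weights": weights}


def predict(model, point):
    """Return the label ID with the highest score for one point."""
    feat = list(point) + [1.0]
    scores = [sum(w * f for w, f in zip(wc, feat)) for wc in model["weights"]]
    return max(range(model["n_classes"]), key=scores.__getitem__)


def save_model(model, path):
    """Persist the trained model M as JSON so a later step can reload it."""
    with open(path, "w", encoding="utf-8") as fh:
        json.dump(model, fh)


def load_model(path):
    """Reload a model written by save_model."""
    with open(path, encoding="utf-8") as fh:
        return json.load(fh)
```

In practice the stand-in classifier would be replaced by a point-cloud segmentation network and a framework's own checkpoint format; the part that carries over is training on the Step 3 dataset and persisting the result for later use on the device.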

[0062] Step 5: Create the HoloLens scene semantic...
