Video face fat and thin editing method

A video face fat and thin editing technique, applied in the field of video face editing, that solves the problem of not being able to obtain face shape parameters and achieves efficient, stable 3D face reconstruction.


Embodiment Construction

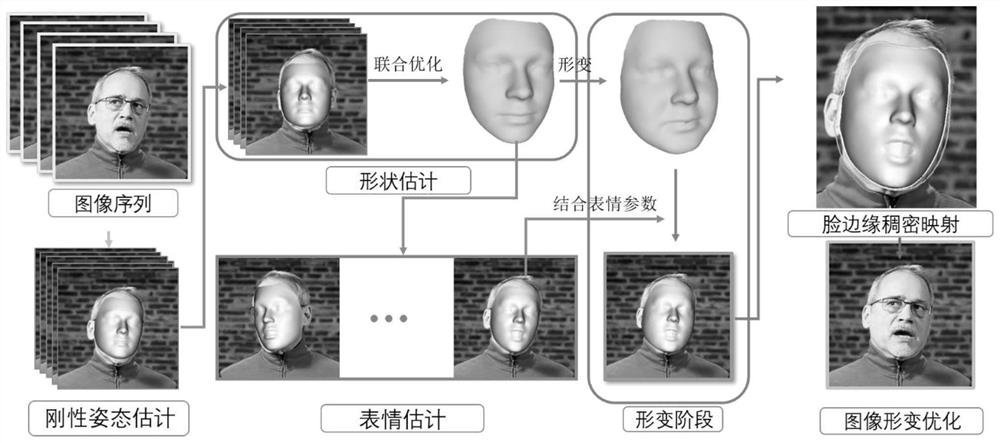

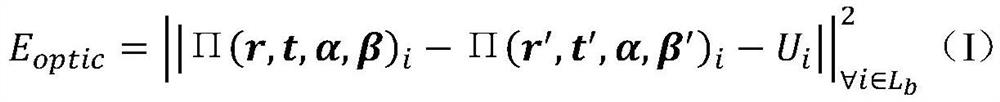

[0073] As shown in Figure 1, the video face fat and thin editing method includes the following steps:

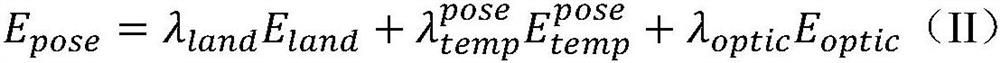

[0074] (1) Reconstruct a 3D face model from the face video, generating the 3D face shape parameters of the face in the video together with the facial expression parameters and face pose parameters of each video frame.
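Step (1) separates one set of shape parameters for the whole video from the per-frame expression and pose parameters. The sketch below illustrates that split under several assumptions not stated in the patent excerpt: a linear 3D morphable model of the form `mean + B_id @ alpha + B_exp @ beta` (identity and expression bases), a scaled orthographic camera, and per-frame poses `(s, R, t)` already known, for example from the rigid pose estimation of step (1-1). It fits a single identity vector `alpha` shared by all frames and one expression vector `beta` per frame by Tikhonov-regularized linear least squares on 2D landmarks. The function names, the weights `lam_id`/`lam_exp`, and the flattened `[x0, y0, z0, x1, ...]` vertex layout are hypothetical; this is an illustration, not the patent's own solver.

```python
import numpy as np


def compose_mesh(mean, B_id, B_exp, alpha, beta):
    """Linear 3DMM: mean + B_id @ alpha + B_exp @ beta, returned as (N, 3) vertices."""
    return (mean + B_id @ alpha + B_exp @ beta).reshape(-1, 3)


def project(points, s, R, t):
    """Scaled orthographic projection of (N, 3) points: s * (R @ X)[:2] + t."""
    return s * (points @ R.T)[:, :2] + t


def fit_video_coefficients(landmarks, poses, mean, B_id, B_exp,
                           lam_id=1.0, lam_exp=1.0):
    """Fit one identity vector shared by all frames and one expression vector per frame.

    landmarks: list of (N, 2) arrays, one per frame.
    poses:     list of (s, R, t) tuples, held fixed during the fit.
    Bases are flattened as [x0, y0, z0, x1, ...] with shape (3N, K).
    """
    F = len(landmarks)
    n = B_id.shape[0] // 3
    k_id, k_exp = B_id.shape[1], B_exp.shape[1]
    n_unknowns = k_id + F * k_exp
    blocks, rhs = [], []
    for f, ((s, R, t), y) in enumerate(zip(poses, landmarks)):
        # Apply the 2x3 camera s * R[:2] to every vertex at once.
        P_all = np.kron(np.eye(n), s * R[:2])  # (2N, 3N)
        A = np.zeros((2 * n, n_unknowns))
        A[:, :k_id] = P_all @ B_id  # identity columns, shared by every frame
        cols = slice(k_id + f * k_exp, k_id + (f + 1) * k_exp)
        A[:, cols] = P_all @ B_exp  # this frame's own expression columns
        blocks.append(A)
        # Target: observed landmarks minus the projected mean shape and translation.
        rhs.append(y.reshape(-1) - P_all @ mean - np.tile(t, n))
    # Tikhonov regularization pulls coefficients toward zero, i.e. the mean face.
    weights = np.concatenate([np.full(k_id, np.sqrt(lam_id)),
                              np.full(F * k_exp, np.sqrt(lam_exp))])
    blocks.append(np.diag(weights))
    rhs.append(np.zeros(n_unknowns))
    x, *_ = np.linalg.lstsq(np.vstack(blocks), np.concatenate(rhs), rcond=None)
    return x[:k_id], x[k_id:].reshape(F, k_exp)
```

Sharing `alpha` across all frames is what makes the shape a property of the video rather than of a single frame, while each `beta` row is free to follow that frame's expression; the regularization rows keep the system well posed when landmarks are few or noisy.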

[0075] (1-1) Reconstruct the 3D face model with a monocular 3D face reconstruction algorithm and compute the face pose parameters of each video frame; this step performs rigid pose estimation on the face video.
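The rigid pose estimation of step (1-1) can be sketched as follows, assuming that 2D facial landmarks detected in a frame correspond to known vertices of the model (for example, of the mean shape) and that the camera is scaled orthographic. A 2×4 affine camera is first fitted by linear least squares, and its left 2×3 block is then projected onto the nearest scaled rotation via SVD. This follows the general idea of Hartley and Zisserman's Gold Standard algorithm for affine cameras but omits its point-normalization step; it is an illustrative stand-in, not necessarily the exact procedure of the cited framework, and all function names are hypothetical.

```python
import numpy as np


def estimate_affine_camera(model_points, image_points):
    """Least-squares 2x4 affine camera P such that x_i ~= P @ [X_i, 1].

    model_points: (N, 3) model vertices; image_points: (N, 2) landmarks.
    Needs N >= 4 points that are not all coplanar.
    """
    n = model_points.shape[0]
    Xh = np.hstack([model_points, np.ones((n, 1))])  # homogeneous (N, 4)
    # Both image coordinates share the design matrix Xh, so one lstsq call solves both rows.
    P_T, *_ = np.linalg.lstsq(Xh, image_points, rcond=None)  # (4, 2)
    return P_T.T


def affine_to_scaled_orthographic(P):
    """Project an affine camera onto the nearest scaled orthographic camera (s, R, t)."""
    A, t = P[:, :3], P[:, 3]
    U, S, Vt = np.linalg.svd(A, full_matrices=False)
    R2 = U @ Vt  # closest 2x3 matrix with orthonormal rows (polar factor)
    R = np.vstack([R2, np.cross(R2[0], R2[1])])  # third row completes a proper rotation
    s = S.mean()  # least-squares optimal scale for that rotation
    return s, R, t


def estimate_rigid_pose(model_points, image_points):
    """Per-frame rigid pose (s, R, t) from 2D-3D landmark correspondences."""
    return affine_to_scaled_orthographic(estimate_affine_camera(model_points, image_points))
```

In a video, `estimate_rigid_pose` would be called once per frame with the same model vertices, giving one pose per frame. Completing the third row with a cross product guarantees det(R) = +1, and the mean of the two singular values is the scale that best matches the affine block once the rotation is fixed.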

[0076] The monocular 3D face reconstruction algorithm adopts the method disclosed in "A Multiresolution 3D Morphable Face Model and Fitting Framework"

[0077] (In the 11th Joint Conference on Computer Vision, Imaging and Computer Graphics Theory and Applications (VISIGRAPP), Vol. 4, pp. 79-86), using the lowest-resolution level of the multiresolution 3D face model. Its specific steps are as follows:

[0078] (1-1-1) Reconst...
