534 results about "Wears glasses" patented technology

Extended wear ophthalmic lens

Status: Inactive | Publication: US5760100A | Tags: Excellent ion permeability, Good water permeability, Liquid surface applicators, Eye implants, Extended wear contact lenses, Ion permeation

Owner:NOVARTIS AG

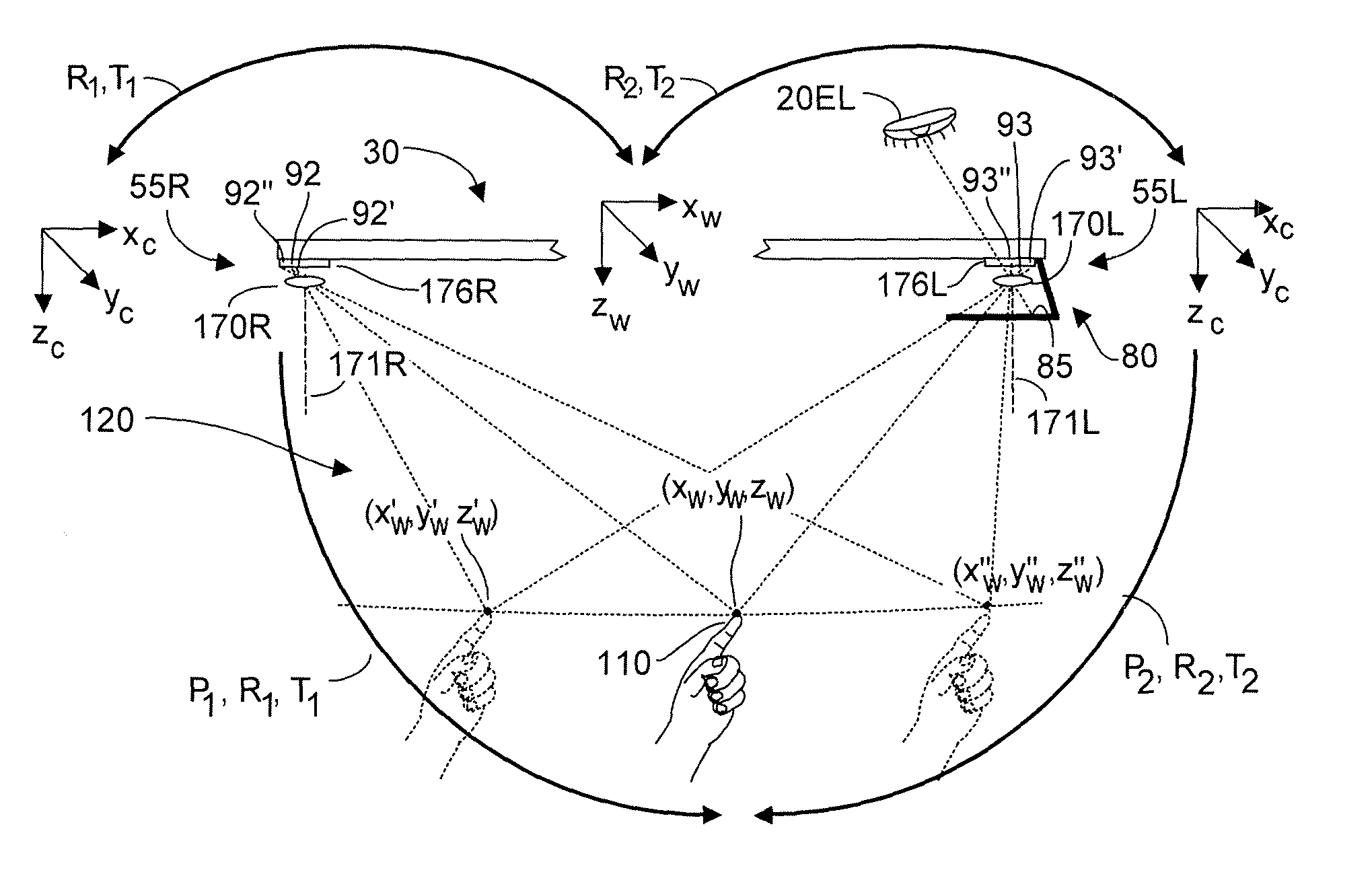

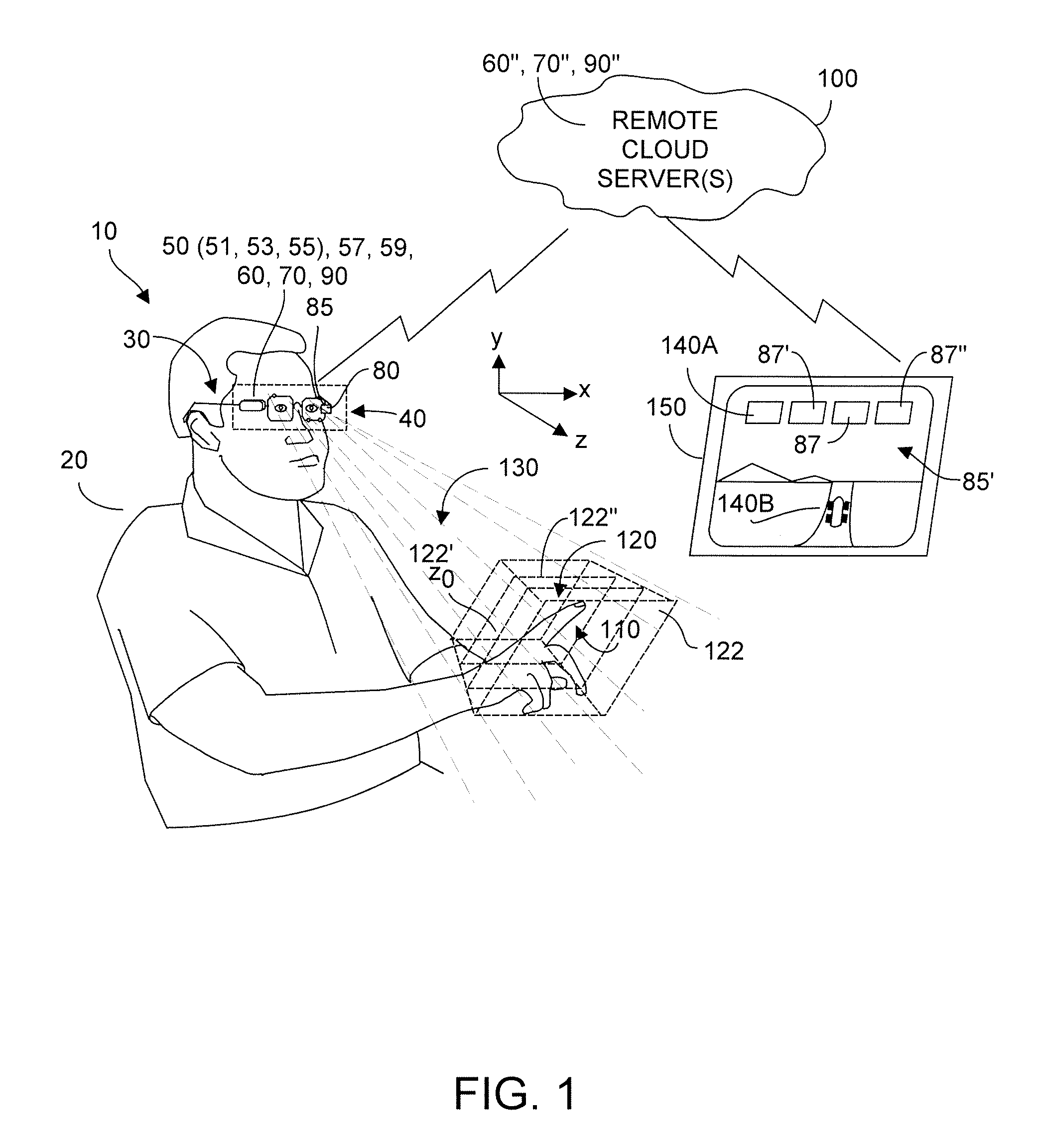

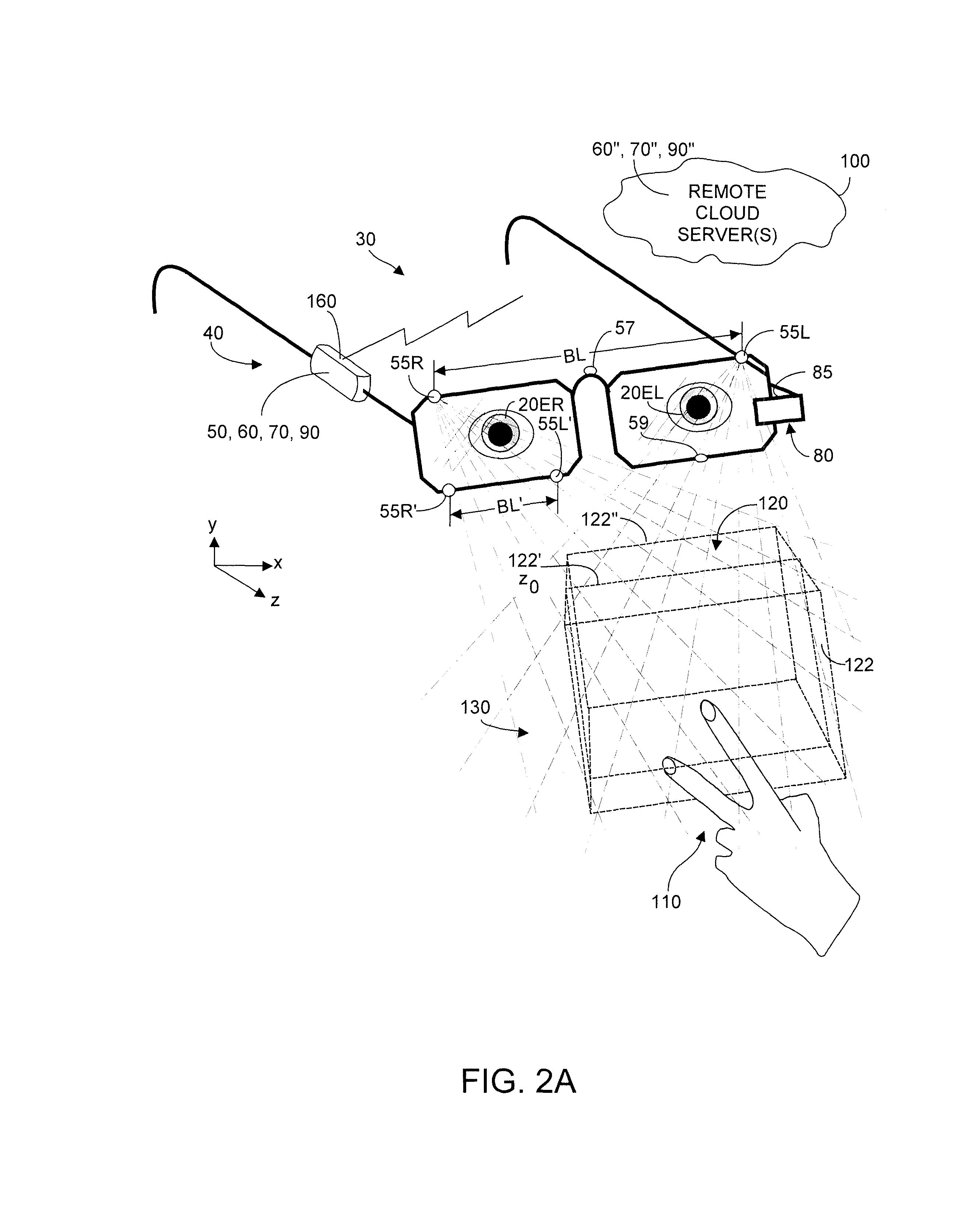

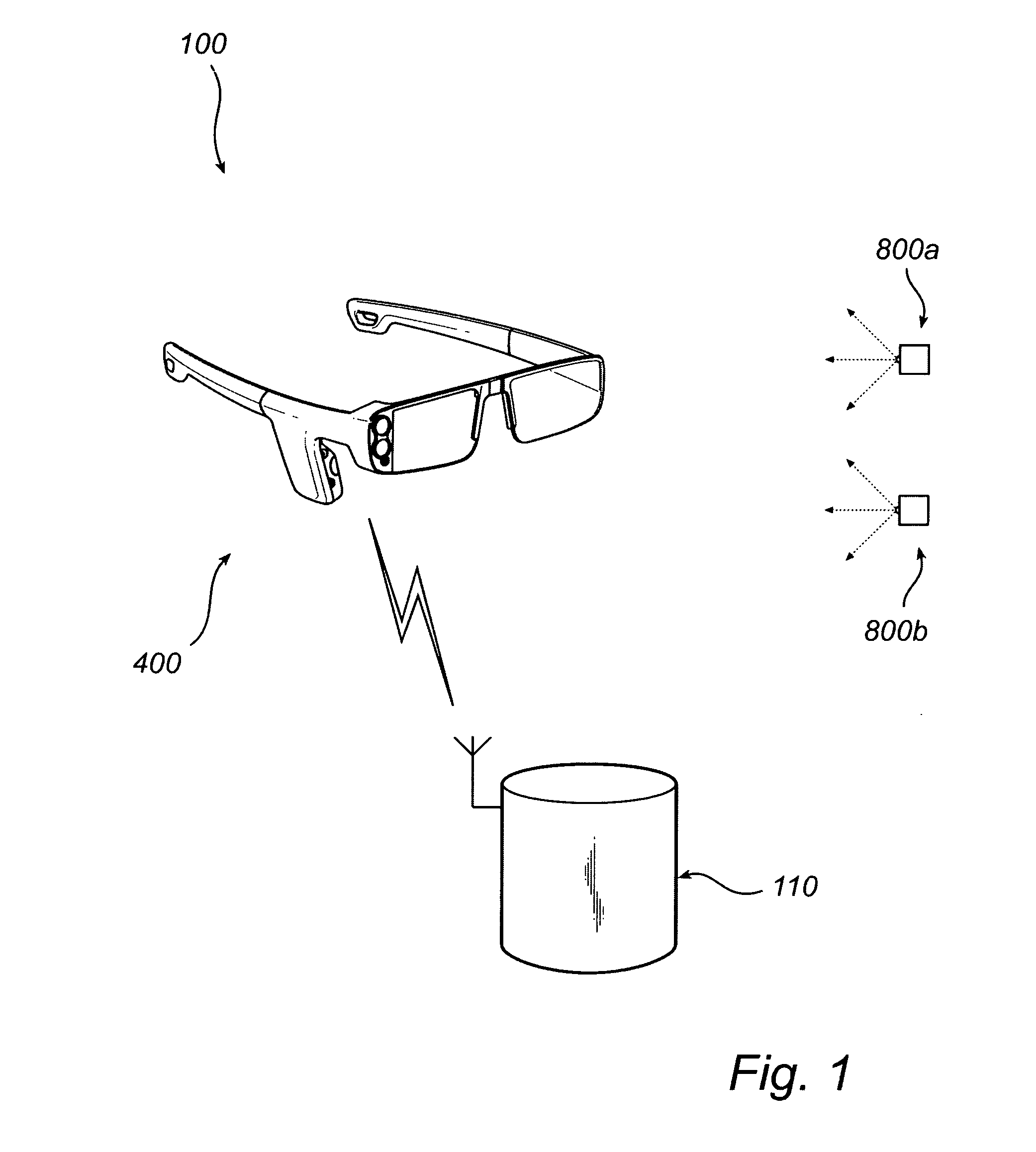

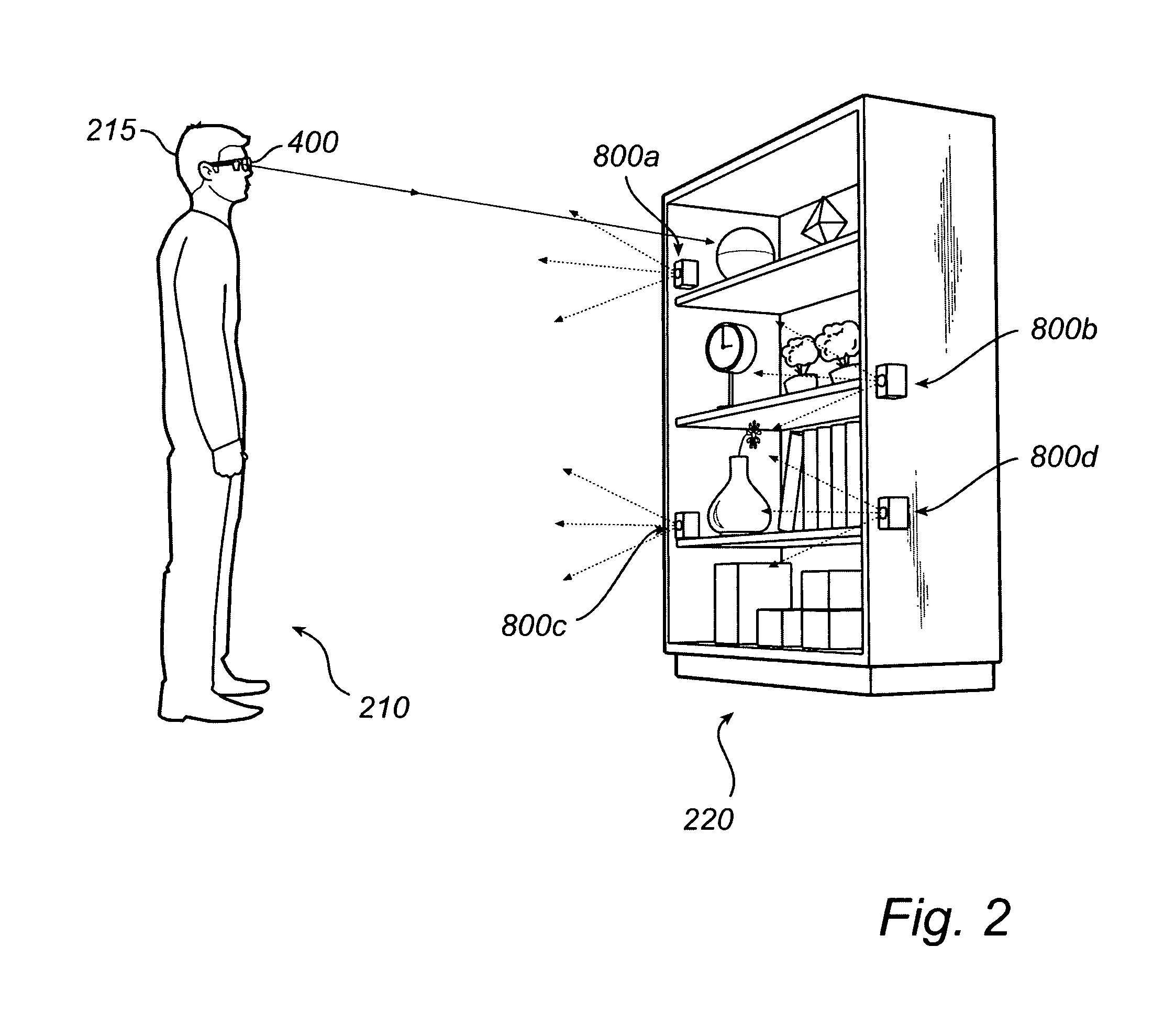

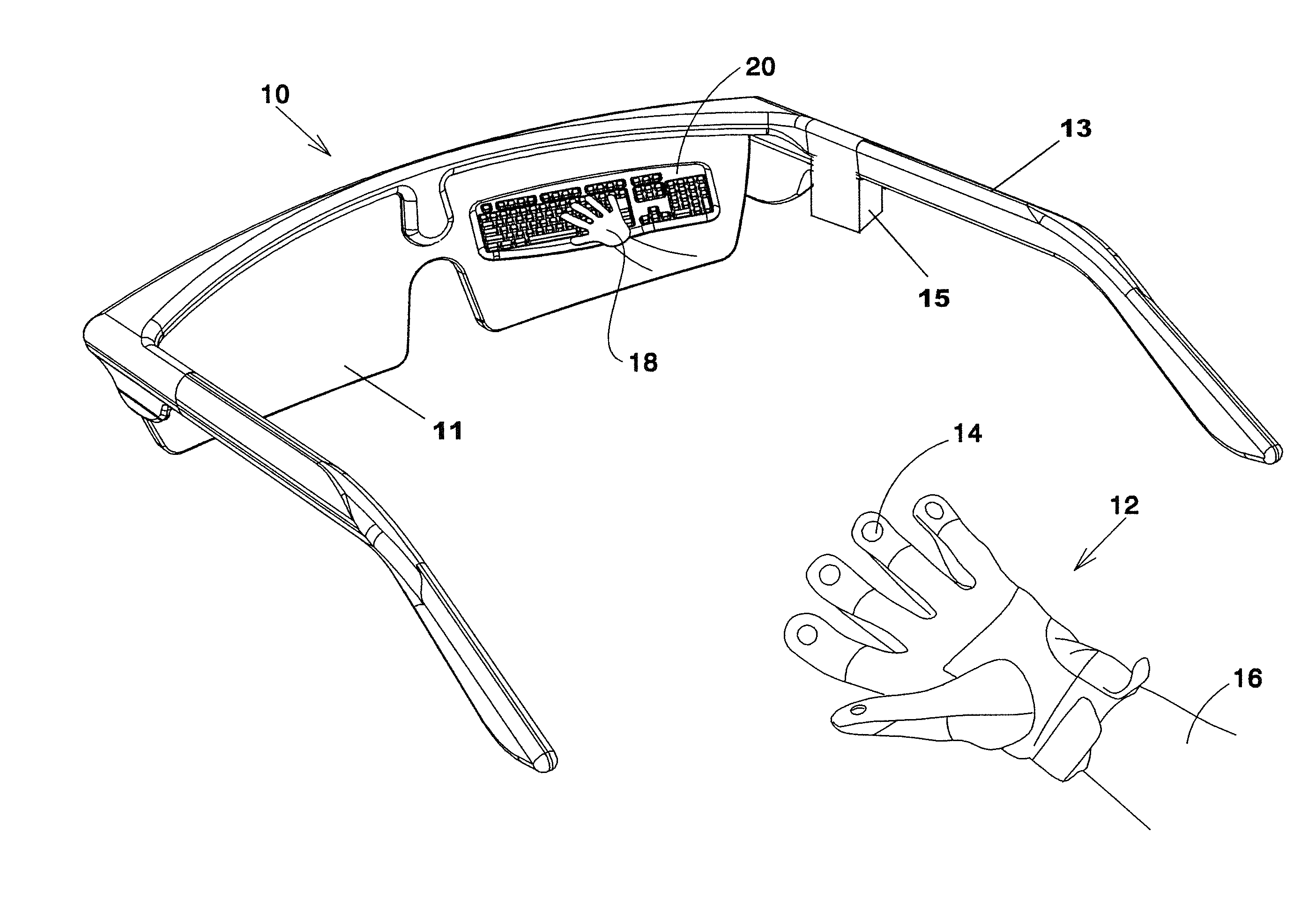

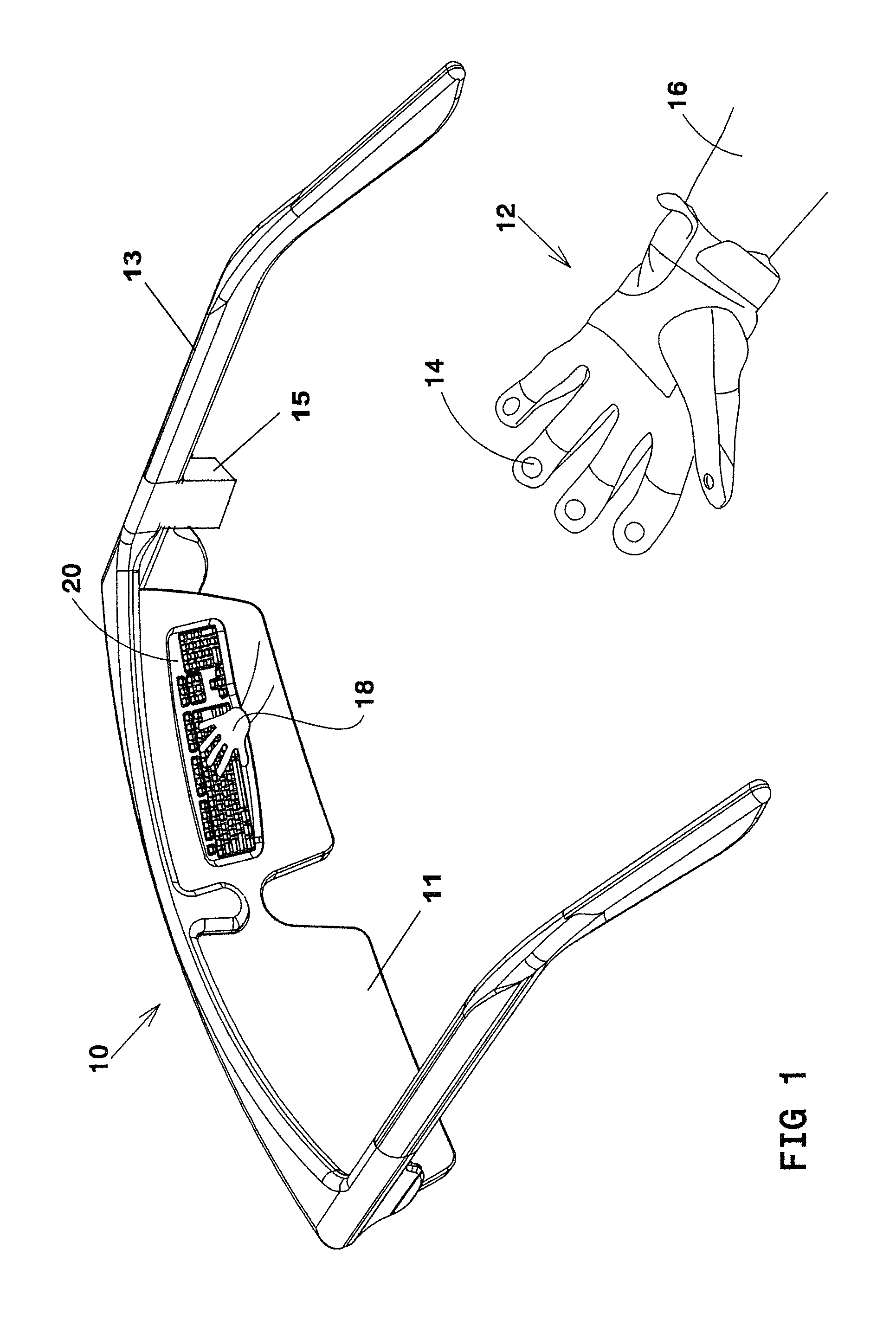

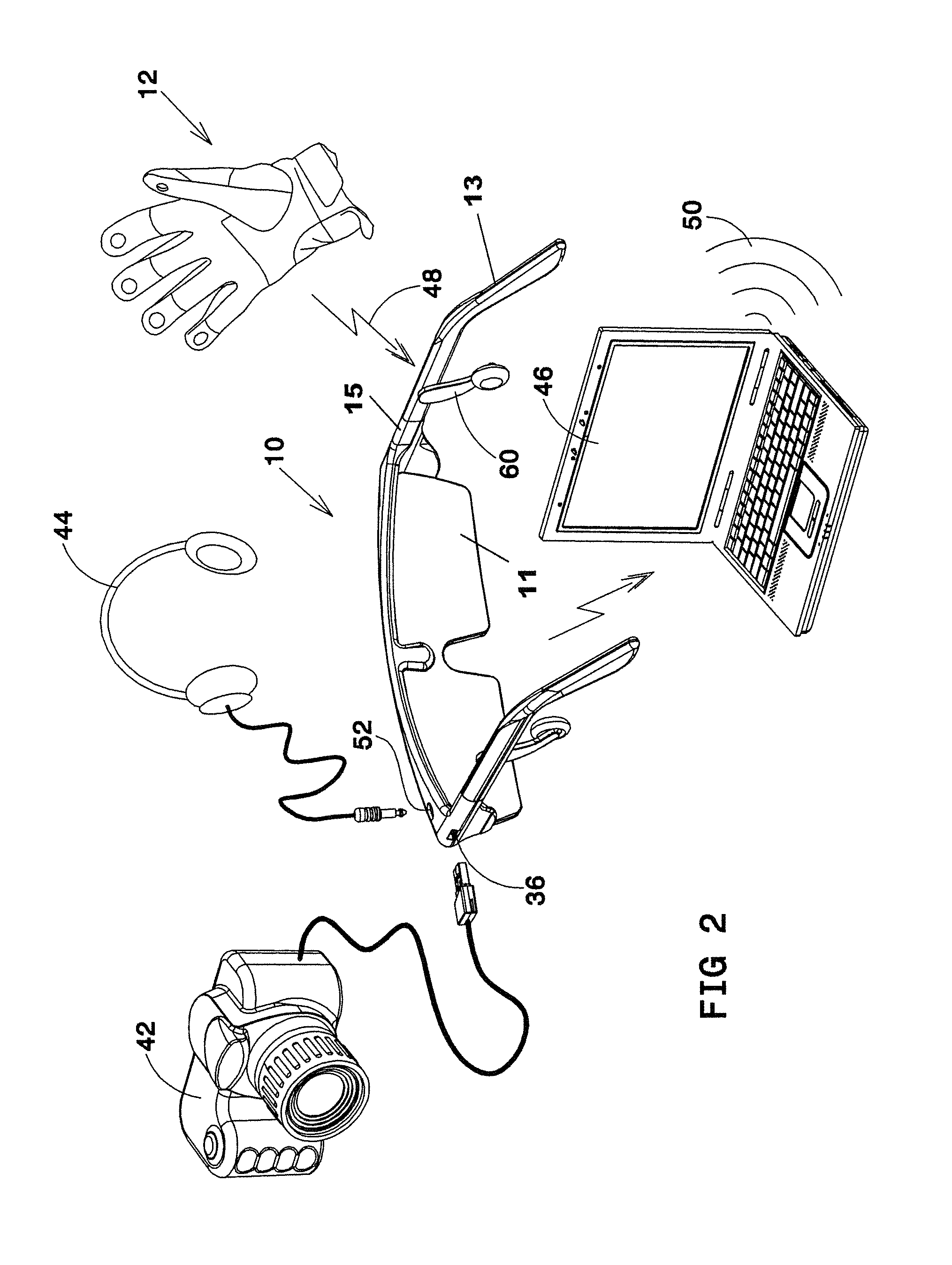

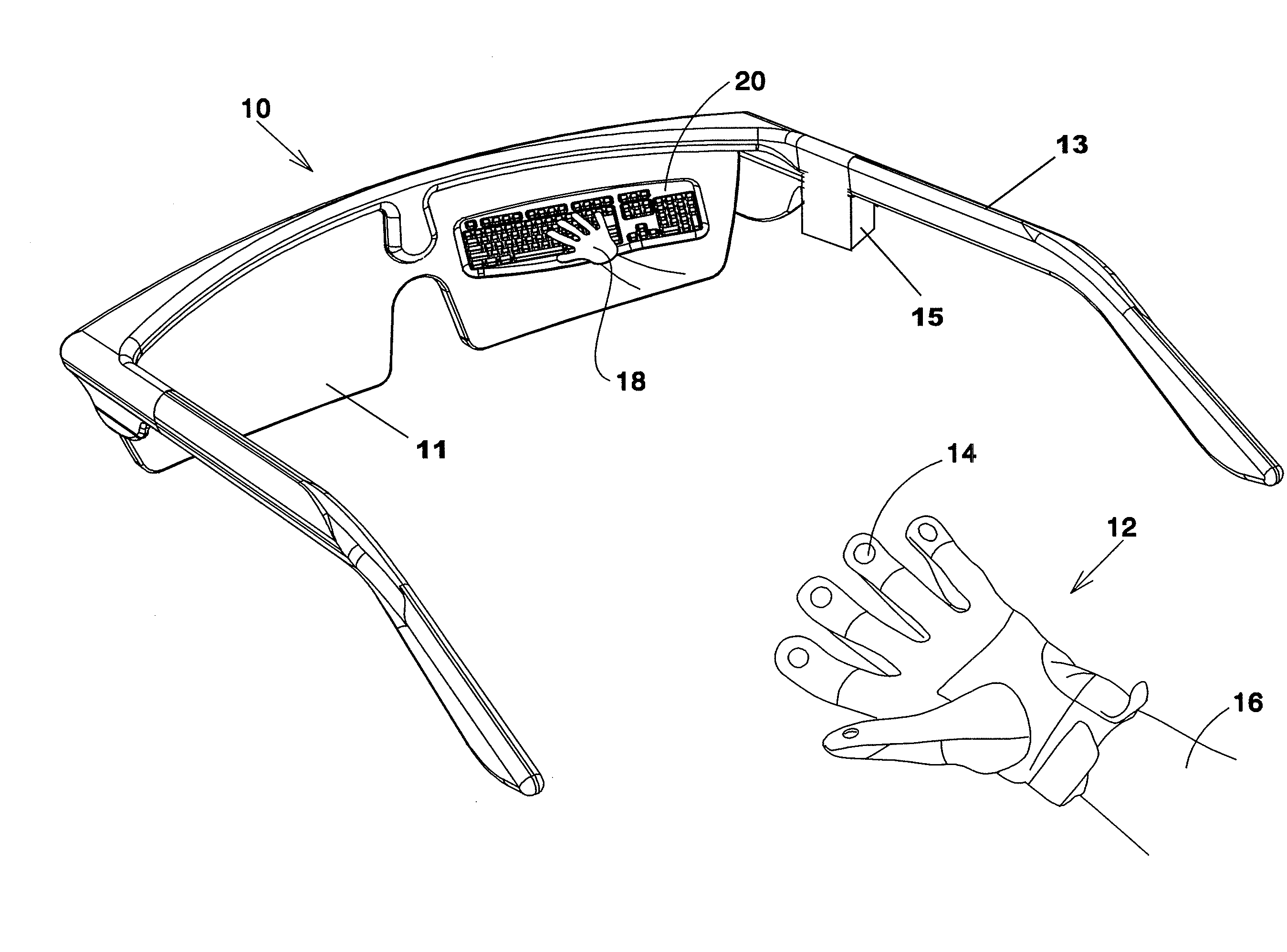

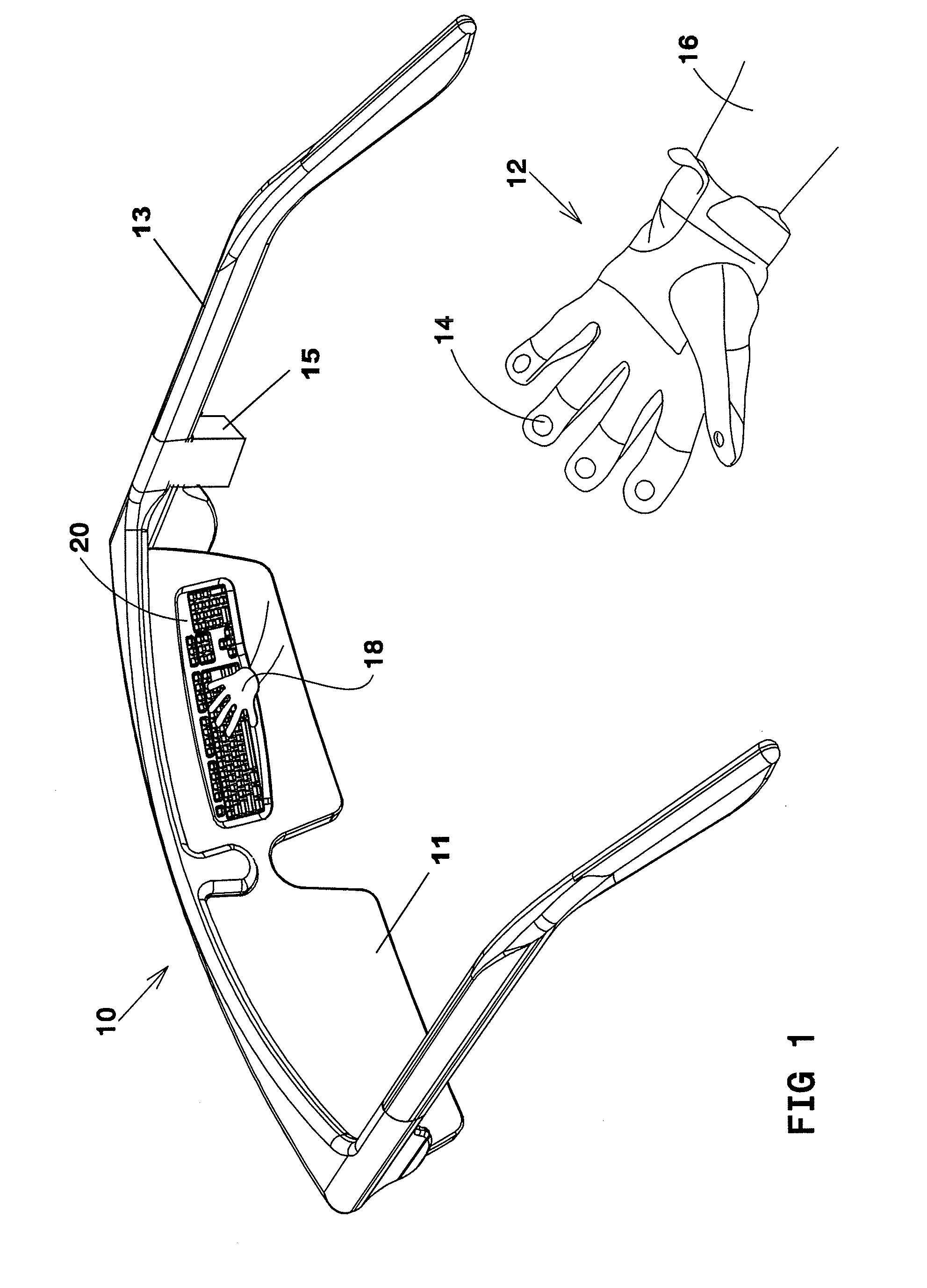

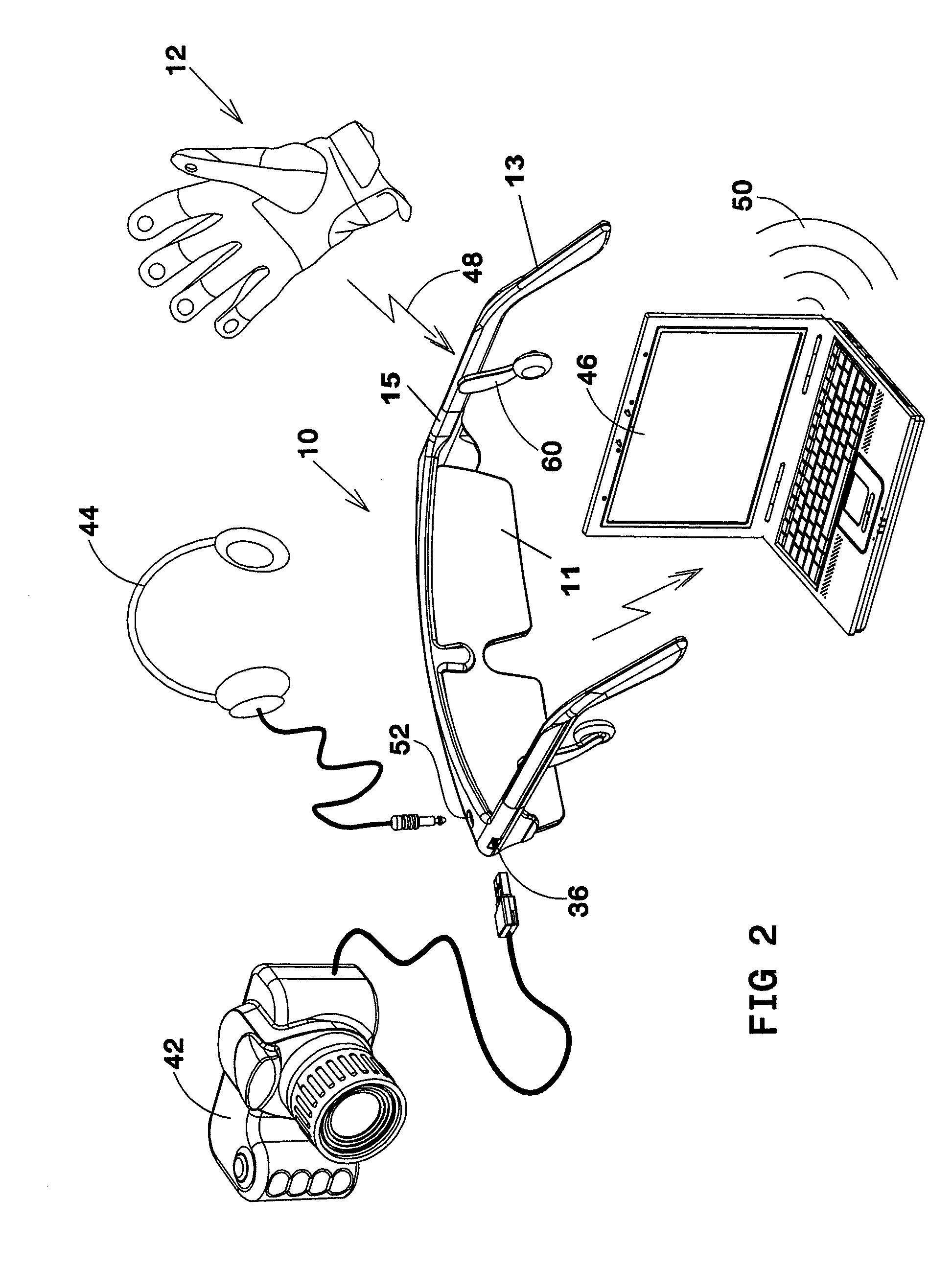

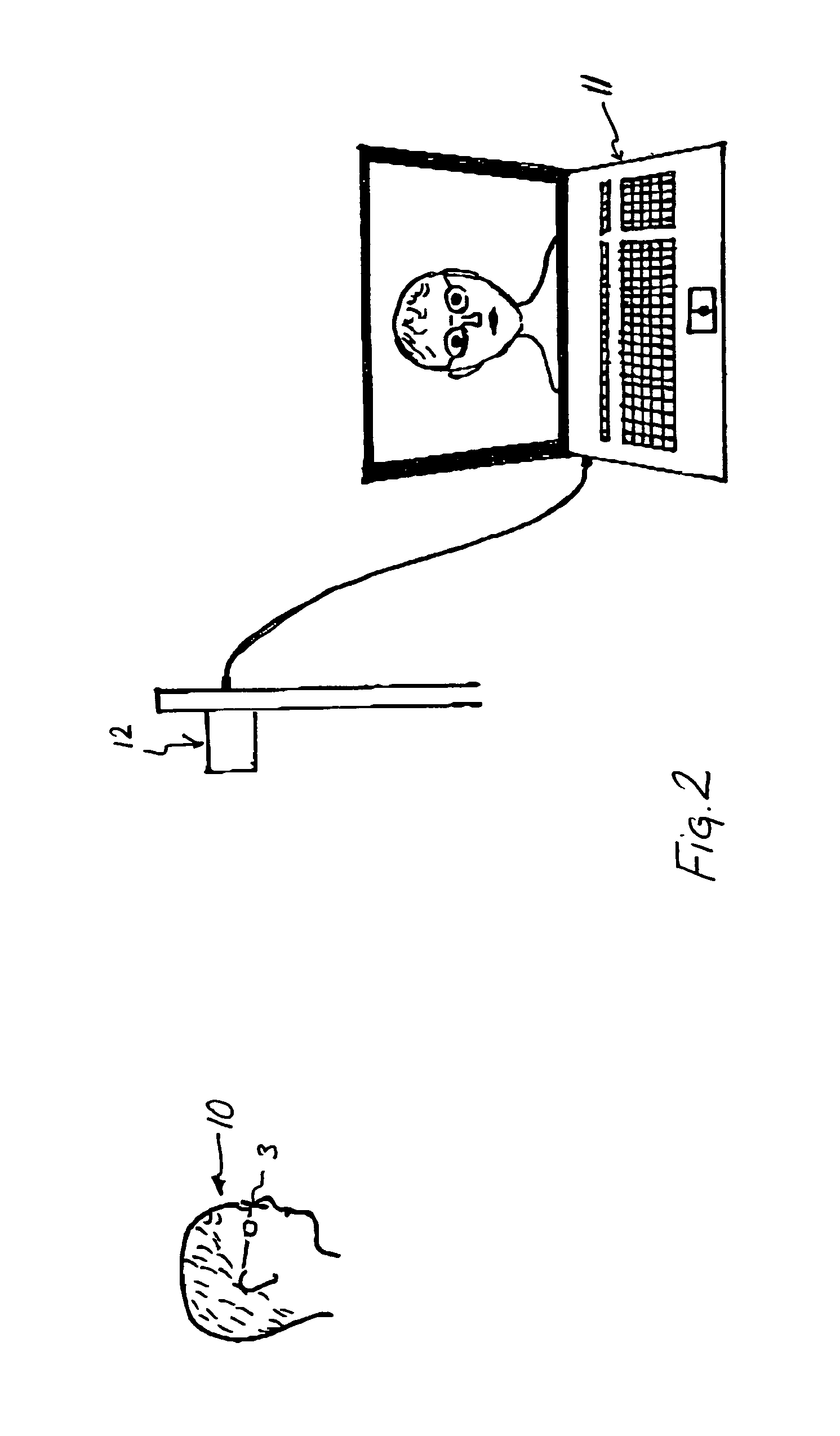

Method and system enabling natural user interface gestures with user wearable glasses

Status: Active | Publication: US8836768B1 | Tags: Reduce power consumption, Input/output for user-computer interaction, Cathode-ray tube indicators, Uses eyeglasses, Eyewear

User-wearable eyeglasses include a pair of two-dimensional cameras that optically acquire information on user gestures made with an unadorned user object in an interaction zone, in response to viewing displayed imagery with which the user can interact. Glasses systems intelligently signal-process and map the acquired optical information to rapidly ascertain a sparse (x,y,z) set of locations adequate to identify user gestures. The displayed imagery can be created by the glasses systems and presented on a virtual on-glasses display, or can be created and/or viewed off-glasses. In some embodiments the user can see local views directly, augmented with imagery showing internet-provided tags that identify and/or provide information about viewed objects. On-glasses systems can communicate wirelessly with cloud servers and with off-glasses systems that the user can carry in a pocket or purse.

Owner:KAYA DYNAMICS LLC
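
The abstract states that the two 2D cameras on the glasses are used to ascertain a sparse (x,y,z) set of locations, but does not say how depth is computed. A common technique for a camera pair is stereo triangulation, Z = f·B/d, where d is the horizontal disparity of a matched point. The sketch below assumes a calibrated, rectified pair; `StereoRig`, its parameters and the pixel values are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class StereoRig:
    """Hypothetical rectified two-camera rig mounted on the glasses frame."""
    focal_px: float    # focal length in pixels, assumed equal for both cameras
    baseline_m: float  # distance between the two camera centres, in metres
    cx: float          # principal point, x (pixels)
    cy: float          # principal point, y (pixels)


def triangulate(rig, left_px, right_px):
    """Return (x, y, z) in metres for one landmark matched in both rectified images."""
    (xl, yl), (xr, _yr) = left_px, right_px
    disparity = xl - xr  # rectified images: a match lies on the same pixel row
    if disparity <= 0:
        raise ValueError("landmark must have positive disparity")
    z = rig.focal_px * rig.baseline_m / disparity  # depth from similar triangles
    x = (xl - rig.cx) * z / rig.focal_px
    y = (yl - rig.cy) * z / rig.focal_px
    return (x, y, z)


def sparse_cloud(rig, matches):
    """Triangulate only a few matched landmarks (e.g. fingertips), not a dense depth map."""
    return [triangulate(rig, left, right) for left, right in matches]
```

With a 500 px focal length and a 6 cm baseline, a fingertip with 50 px of disparity lies about 0.6 m from the cameras. Working with a handful of landmarks rather than every pixel is one way to keep the computation light, in the spirit of the "sparse" set the abstract describes.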

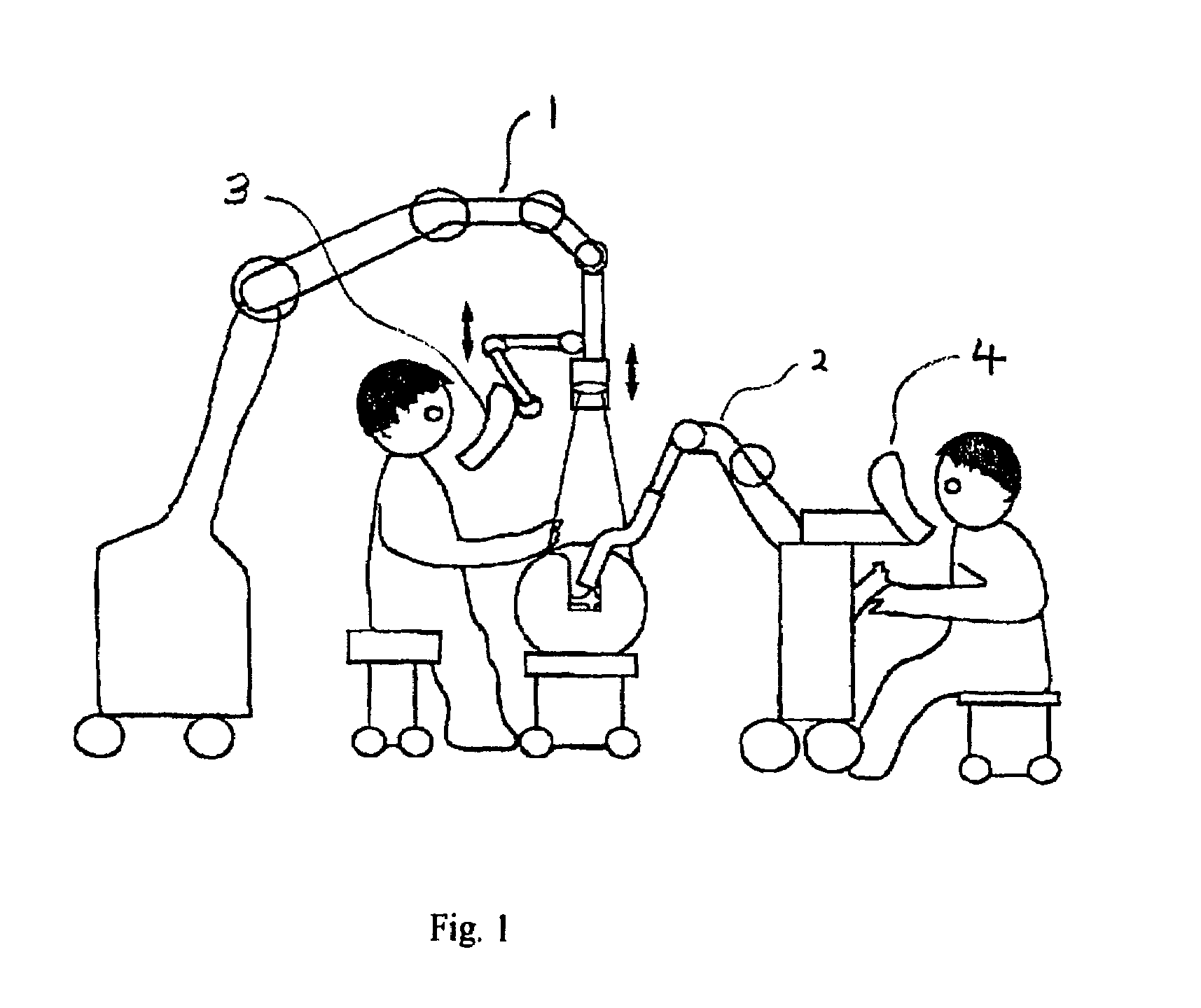

Augmented reality glasses for medical applications and corresponding augmented reality system

Owner:BADIALI GIOVANNI +3

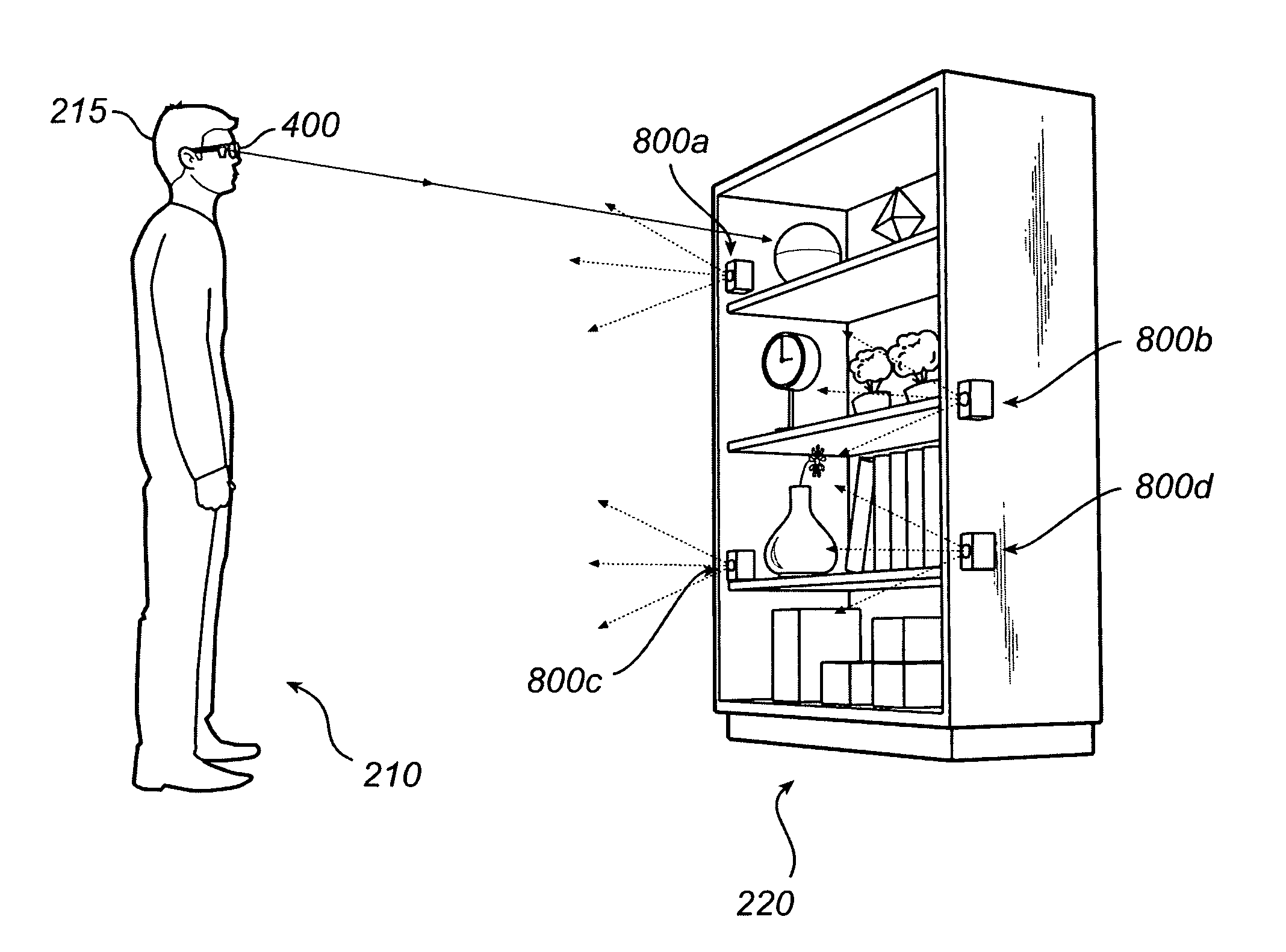

Detection of gaze point assisted by optical reference signal

Status: Active | Publication: US20110279666A1 | Tags: Extension of time, Reduce energy consumption, Acquiring/recognising eyes, Color television details, Uses eyeglasses, Eyewear

A gaze-point detection system includes at least one infrared (IR) signal source to be placed in a test scene as a reference point, a pair of eyeglasses to be worn by a person, and a data processing and storage unit for calculating the gaze point of the person wearing the eyeglasses. The eyeglasses include an image sensor, an eye-tracking unit and a camera. The image sensor detects IR signals from the at least one IR signal source and generates an IR signal source tracking signal. The eye-tracking unit determines the gaze direction of the person and generates an eye-tracking signal, and the camera acquires a test scene picture. The data processing and storage unit communicates with the eyeglasses and calculates the gaze point relative to the test scene picture.

Owner:TOBII TECH AB

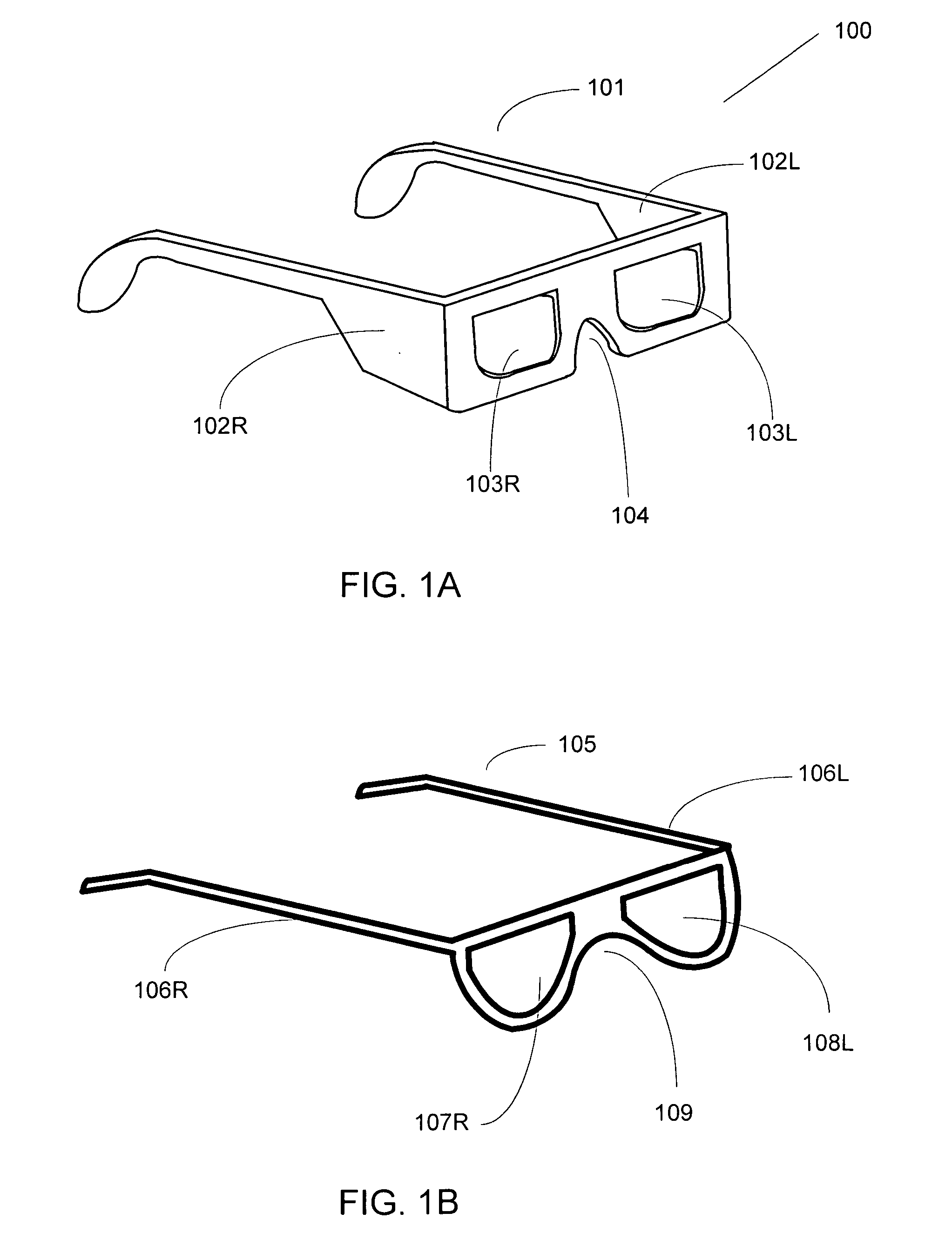

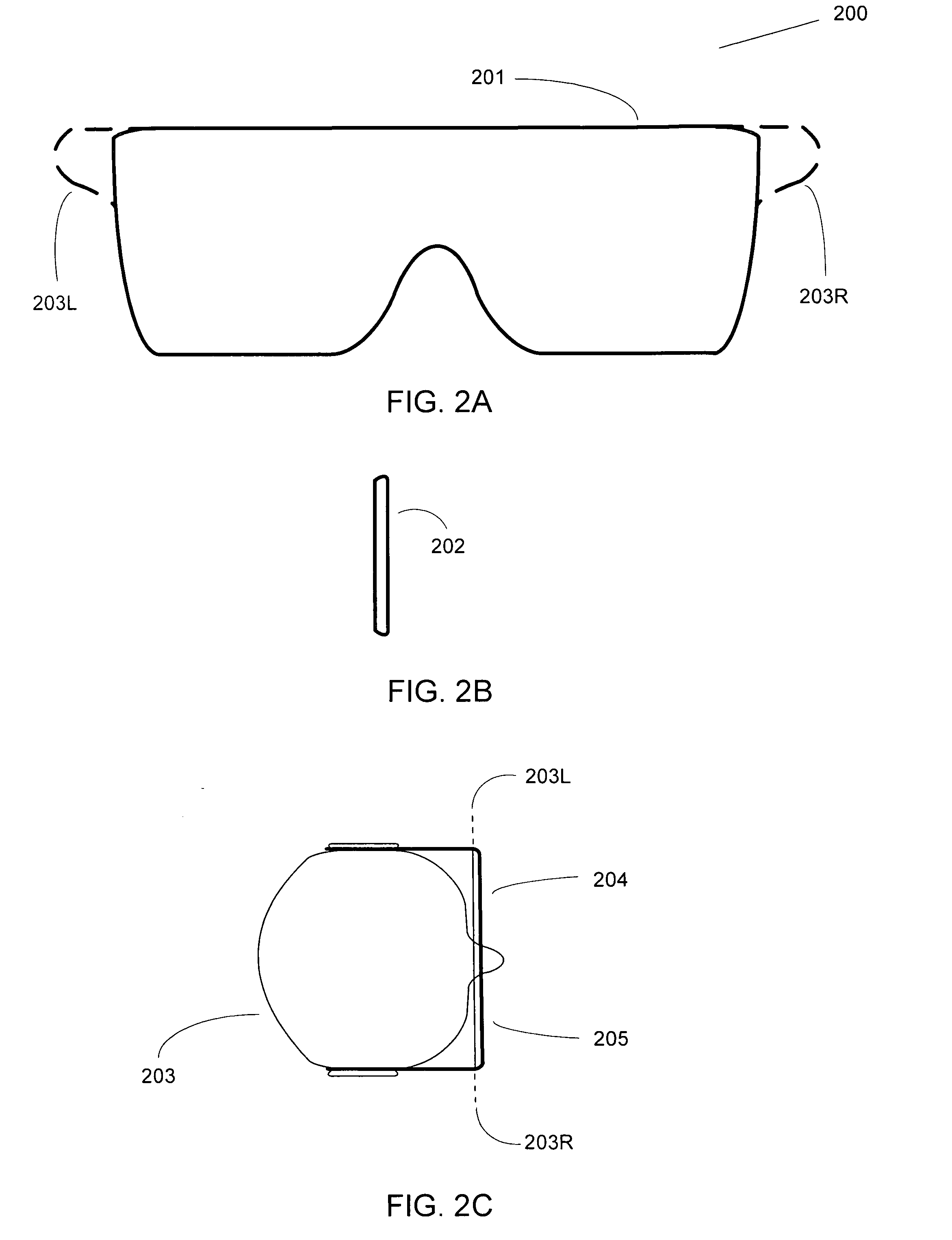

3-D eyewear

A set of eyewear is provided for use with prescription spectacles. The design comprises a plurality of selecting devices formed and configured to be attached to the spectacles to provide stereoscopic viewing of images when worn by a user wearing the spectacles. The eyewear comprises a substrate forming a first selector device and a second selector device, and optical materials provided on the substrate. The optical materials comprise first optical material associated with the first selector device and providing a first orientation along a first axis and second optical material associated with the second selector device and providing a second orientation along a second axis substantially orthogonal to the first axis. The substrate and optical materials are configured to be fixedly mountable to the spectacles.

Owner:REALD INC
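
The two selector devices use optical materials oriented along orthogonal axes. If those orientations are read as linear polarizer axes (an assumption; the abstract does not name the polarization type), Malus's law, I = I0·cos²θ, shows why orthogonality matters: the image meant for the other eye arrives at θ = 90° and is blocked. The matching projector-side axes in `eye_signals` are hypothetical.

```python
import math


def malus_transmission(light_axis_deg: float, filter_axis_deg: float) -> float:
    """Fraction of linearly polarized light passed by an ideal linear polarizer (Malus's law)."""
    theta = math.radians(light_axis_deg - filter_axis_deg)
    return math.cos(theta) ** 2


def eye_signals(left_axis_deg: float, right_axis_deg: float) -> dict:
    """Intensity each eye receives from the left and right images.

    Hypothetically assumes the projector polarizes each image along the same
    axis as the matching selector device clipped onto the spectacles."""
    return {
        "left_eye_wanted": malus_transmission(left_axis_deg, left_axis_deg),
        "left_eye_crosstalk": malus_transmission(right_axis_deg, left_axis_deg),
        "right_eye_wanted": malus_transmission(right_axis_deg, right_axis_deg),
        "right_eye_crosstalk": malus_transmission(left_axis_deg, right_axis_deg),
    }
```

With axes at 45° and 135° the crosstalk terms vanish; tilting one axis by 10° (so they are only "substantially" orthogonal) lets about 3% of the wrong image through.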

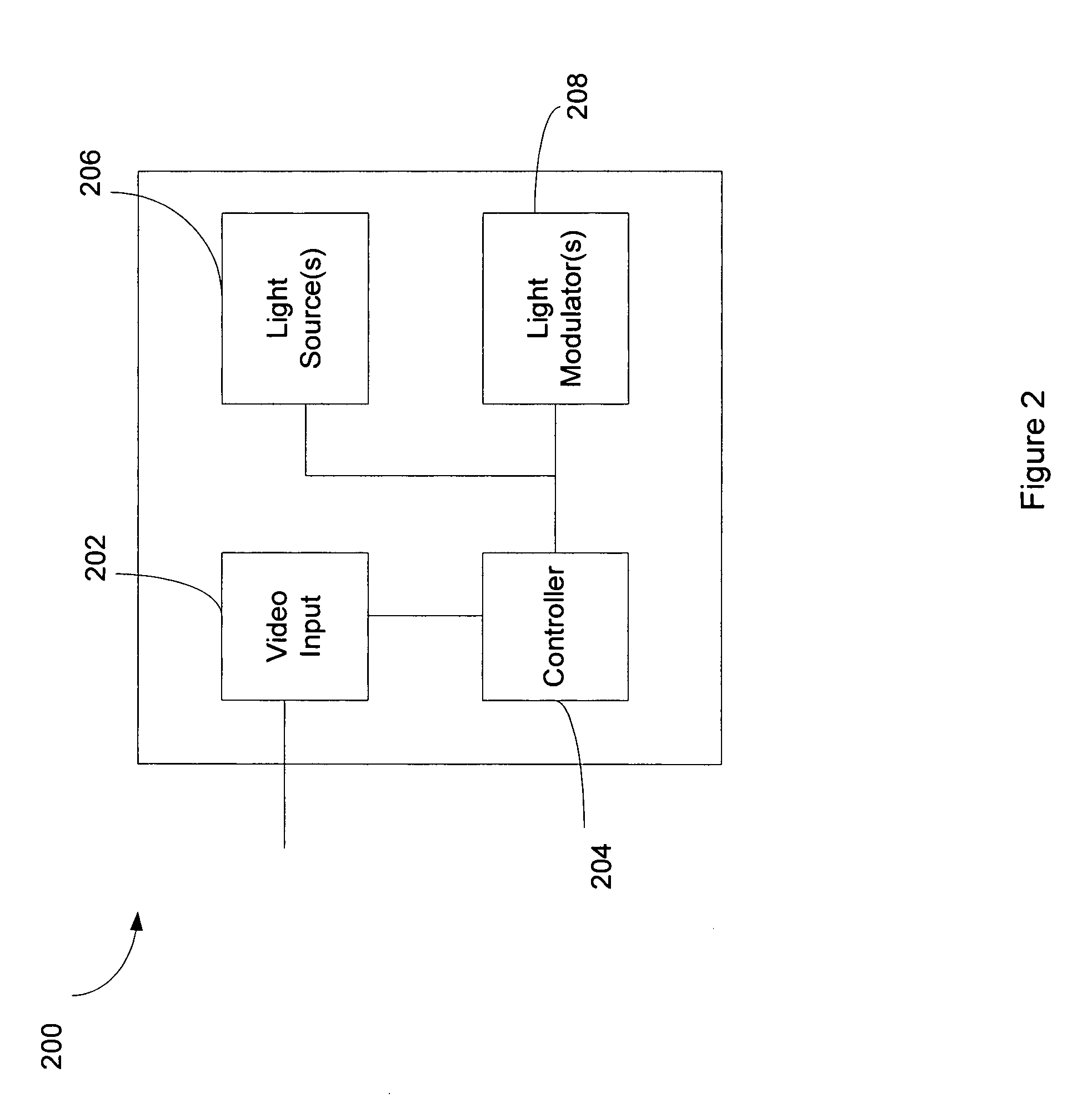

3-D projection full color multimedia display

Methods and systems are described which produce polarization-independent full-color images suitable for rear-projection television sets and other multimedia displays. The system illuminates with R, G, B light from two different light sources for each color. A viewer wears glasses with narrowband optical filters, preferably holographic filters. The R, G, B light from the two sources is slightly offset at each of the three emission wavelengths: one set of R, G, B light is filtered by the holographic filter in front of the viewer's left eye, and the other set by the holographic filter in front of the viewer's right eye.

Owner:CHRISTIE DIGITAL SYST USA INC +1
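
Here the left/right separation is spectral rather than polarization-based: each eye's narrowband filter passes only one of the two slightly offset R, G, B sets. A sketch with idealized rectangular passbands; the wavelengths and the 5 nm half-width are hypothetical values chosen only so that the two sets do not overlap.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Passband:
    """Idealized rectangular passband of one narrowband filter."""
    center_nm: float
    half_width_nm: float

    def passes(self, wavelength_nm: float) -> bool:
        return abs(wavelength_nm - self.center_nm) <= self.half_width_nm


# Hypothetical emission wavelengths: set A for the left eye, set B offset by 14 nm for the right eye.
SET_A = {"R": 629.0, "G": 532.0, "B": 446.0}
SET_B = {"R": 615.0, "G": 518.0, "B": 432.0}

# Hypothetical filters: each eye's filter has one passband per primary of its own set.
LEFT_FILTER = [Passband(w, 5.0) for w in SET_A.values()]
RIGHT_FILTER = [Passband(w, 5.0) for w in SET_B.values()]


def transmitted(filter_bands, emission):
    """Colour channels of an emission set that reach the eye behind `filter_bands`."""
    return sorted(ch for ch, w in emission.items() if any(b.passes(w) for b in filter_bands))
```

Each eye receives a full R, G, B image from its own set and nothing from the other, which is why the scheme does not depend on polarization.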

Perspective altering display system

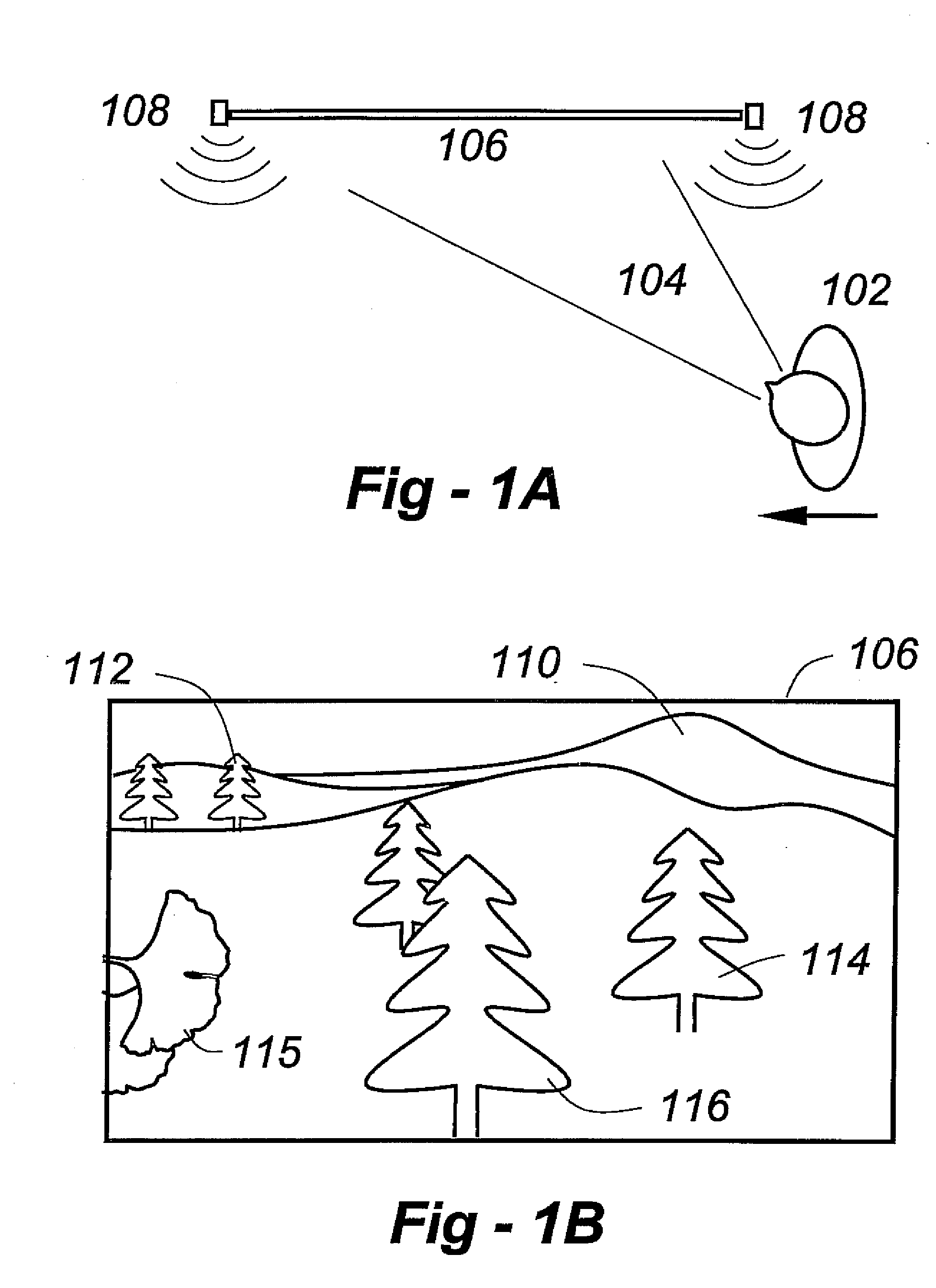

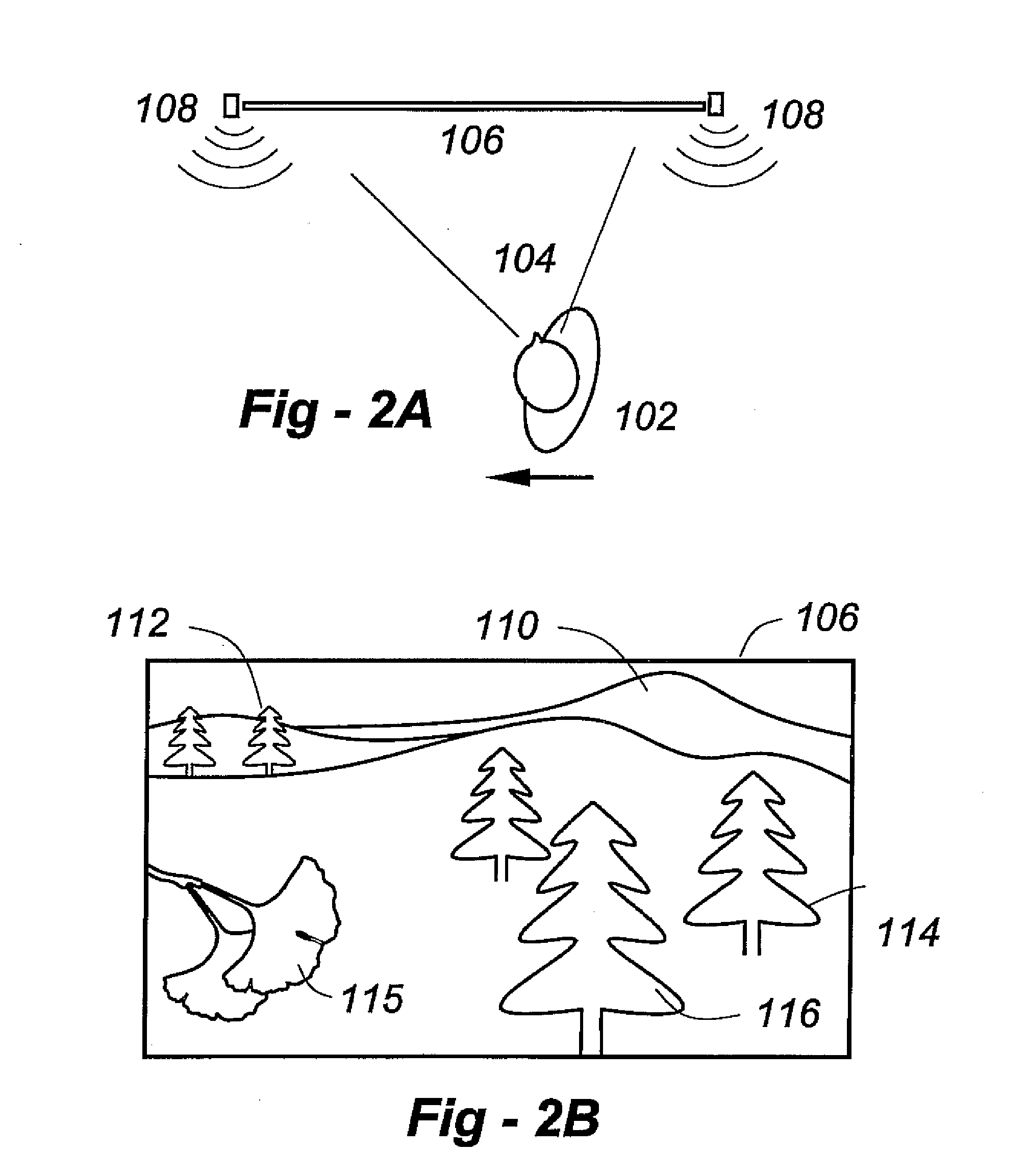

Status: Active | Publication: US20090051699A1 | Tags: High resolution, Reduce resolution, Television system details, Cathode-ray tube indicators, Virtual mirror, Wears glasses

The perception of a displayed image is altered for viewers moving relative to the display screen, imparting a sense of three-dimensional immersion in the displayed scene. A display generator generates a scene having foreground and background elements, and a display screen displays the scene. A sensor detects the position of a viewer relative to the display screen, and a processor shifts the relative position of the foreground and background elements in the displayed scene as a function of viewer position, so that the viewer's perspective of the scene changes as the viewer moves relative to the screen. The foreground and background elements may be presented as multiple superimposed graphics planes, and/or a camera may record the scene by panning at sequential angles. The system may be used to implement virtual windows, virtual mirrors and other effects without requiring viewers to change their behavior or wear glasses, beacons, etc.

Owner:VIDEA
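
The processor shifts the foreground and background planes as a function of the sensed viewer position. One geometric way to compute that shift (an assumption, not a formula stated in the abstract) is central projection from the viewer's eye: a point at lateral position p and virtual depth z behind the screen, seen from lateral offset v at distance D, is drawn at v + (p - v)·D/(D + z). `Layer` and the distances are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Layer:
    name: str
    depth_m: float  # virtual depth behind the screen plane (negative = in front of it)


def on_screen_offset(viewer_x_m: float, viewing_distance_m: float, layer: Layer,
                     anchor_x_m: float = 0.0) -> float:
    """Screen x (m) at which a point of `layer` at lateral position `anchor_x_m` is drawn
    so that it lines up for a viewer at lateral offset `viewer_x_m`.

    The ray from the eye to the virtual point crosses the screen at v + (p - v) * D / (D + z)."""
    d, z = viewing_distance_m, layer.depth_m
    if d + z <= 0:
        raise ValueError("layer must lie in front of the viewer")
    return viewer_x_m + (anchor_x_m - viewer_x_m) * d / (d + z)


def compose(viewer_x_m, viewing_distance_m, layers):
    """Per-layer horizontal offsets for the current sensed viewer position."""
    return {layer.name: on_screen_offset(viewer_x_m, viewing_distance_m, layer) for layer in layers}
```

For a viewer 2 m away who steps 0.5 m to the right, a background plane 8 m behind the screen is drawn 0.4 m to the right, a plane at the screen stays put, and a foreground plane 1 m in front of the screen moves 0.5 m to the left; this relative motion is what produces the parallax.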

Computer device in form of wearable glasses and user interface thereof

Status: Inactive | Publication: US20130265300A1 | Tags: Input/output for user-computer interaction, Cathode-ray tube indicators, Camera lens, Eyewear

A computer device that is configured as wearable glasses and a user interface thereof, which comprises a transparent optical lens adapted to display, whenever desired, visual content on at least a portion of the lens, for enabling a user wearing the glasses to see the visual content, wherein the lens enables a user to see there through, in an optical manner, also a real-world view; a wearable frame for holding the lens and a portable computerized unit for generating the visual content and displaying or projecting the visual content on the portion, wherein the computerized unit is embedded within the frame or mounted thereon.

Owner:VARDI NEORAI

Computer device in form of wearable glasses and user interface thereof

Status: Inactive | Publication: US20130241927A1 | Tags: Input/output for user-computer interaction, Cathode-ray tube indicators, Computer graphics (images), Ophthalmology

A computer device that is configured as wearable glasses and a user interface thereof, which comprises a transparent optical lens adapted to display, whenever desired, visual content on at least a portion of the lens, for enabling a user wearing the glasses to see the visual content, wherein the lens enables a user to see there through, in an optical manner, also a real-world view; a wearable frame for holding the lens and a portable computerized unit for generating the visual content and displaying or projecting the visual content on the portion, wherein the computerized unit is embedded within the frame or mounted thereon.

Owner:VARDI NEORAI

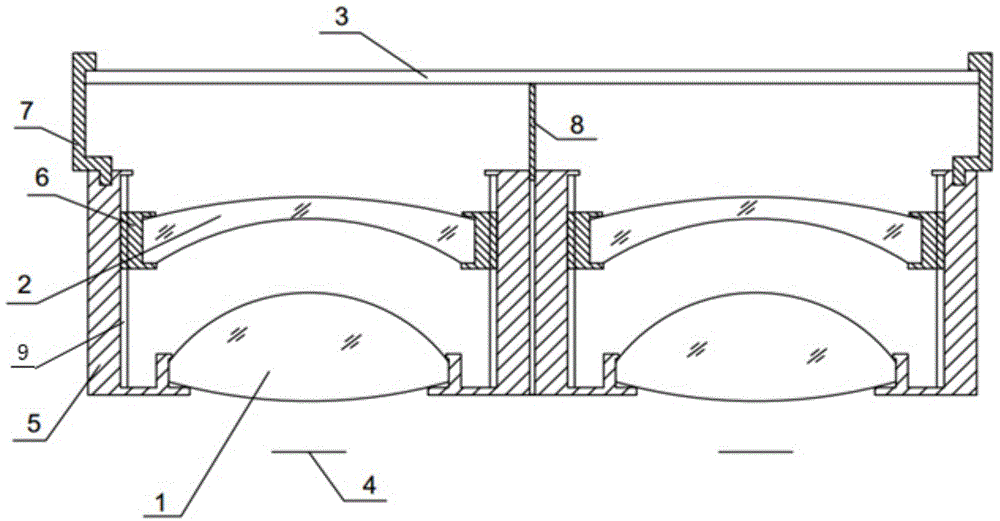

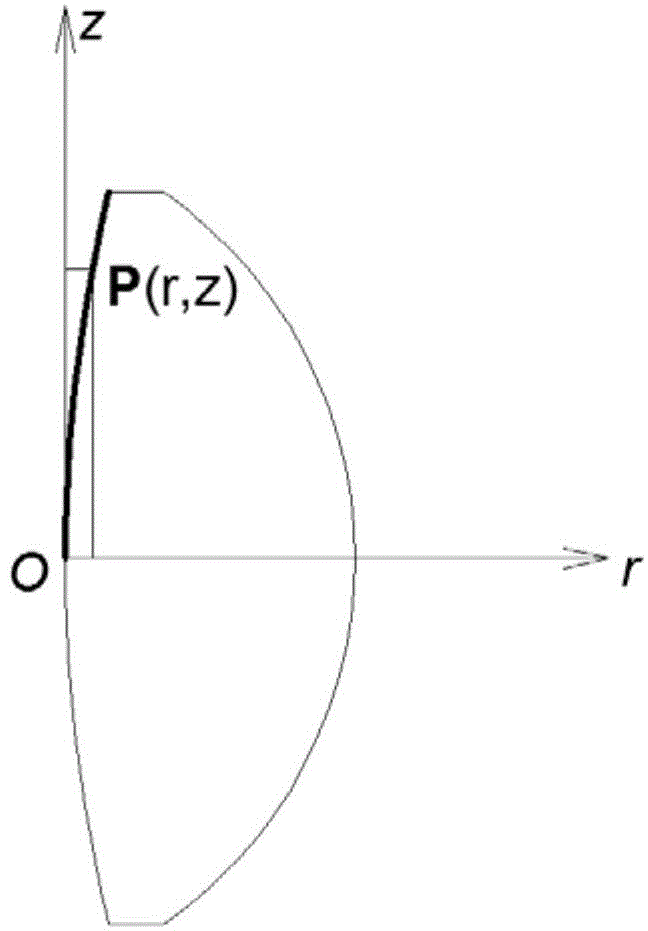

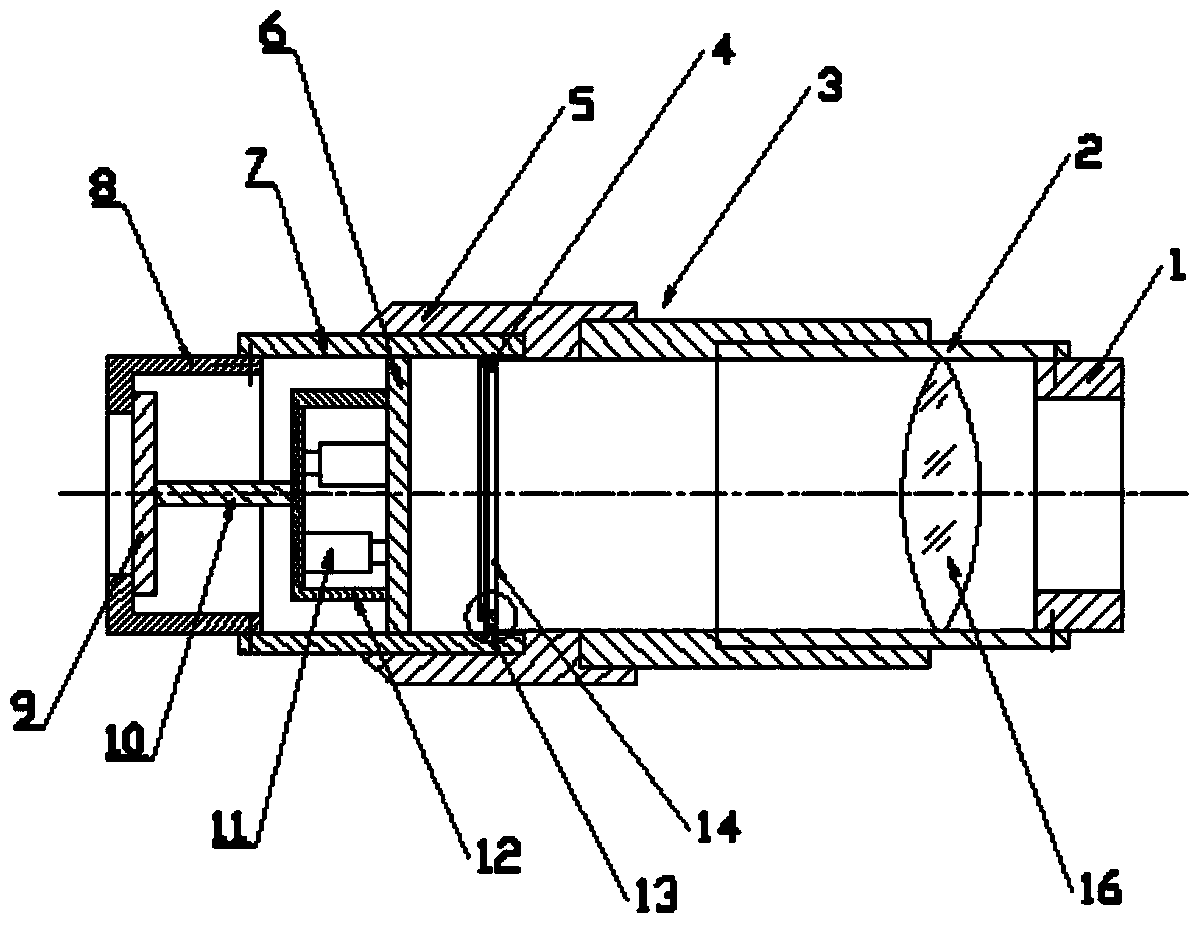

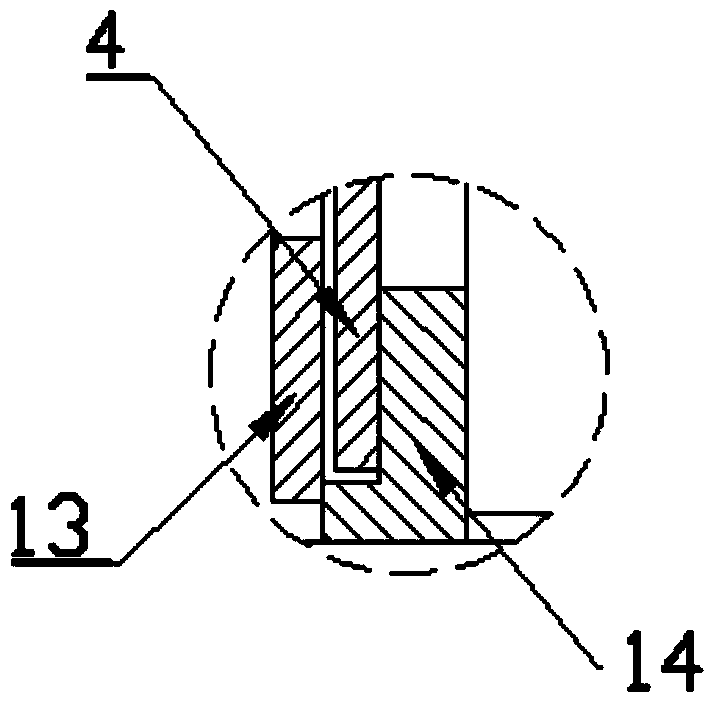

Optical lens structure of wearable virtual-reality headset capable of displaying three-dimensional scene

Status: Inactive | Publication: CN104808342A | Tags: Increase room for optimization, Improve clarity, Lens, Magnifying glasses, Eyewear, Engineering

The invention discloses an optical lens structure for a wearable virtual-reality headset capable of displaying three-dimensional scenes. The left-eye and right-eye lens assemblies have the same structure, each comprising a biconvex positive lens and a meniscus (crescent) negative lens mounted coaxially with a gap between them. The biconvex positive lens, nearer the eye, is mounted on a fixed temple; the meniscus negative lens is mounted on a movable temple; a guide rail is arranged on the inner wall of the fixed temple, and the movable temple slides along the guide rail. A display screen in front of the meniscus negative lens is connected to the front of the fixed temple by a connecting frame, and the two lens assemblies are separated by an intermediate partition. The two lenses are made of different optical plastics, and both their front and rear optical surfaces are aspheric. The structure allows the diopter to be adjusted, so a user can see the screen content without wearing glasses; it eliminates the chromatic aberration and distortion of a single lens, so the images fed to the left and right screens need no preprocessing; image quality is improved; and the user can watch ordinary left-right split-screen stereoscopic movies.

Owner:杭州映墨科技有限公司 (Hangzhou Yingmo Technology Co., Ltd.)
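
The abstract says that sliding the negative lens along the guide rail lets the user adjust the diopter. One way to see why is Gullstrand's equation for two thin lenses in air, P = P1 + P2 - d·P1·P2: changing the spacing d changes the combined power. This sketch treats the aspheric plastic lenses as ideal thin lenses, and the +50 D and -20 D powers used with it are hypothetical.

```python
def equivalent_power(p1_d: float, p2_d: float, separation_m: float) -> float:
    """Equivalent power (diopters) of two thin lenses in air, `separation_m` apart (Gullstrand's equation)."""
    return p1_d + p2_d - separation_m * p1_d * p2_d


def separation_for_power(p1_d: float, p2_d: float, target_d: float) -> float:
    """Invert Gullstrand's equation: the lens spacing (m) that yields `target_d` diopters."""
    if p1_d * p2_d == 0:
        raise ValueError("both lenses need non-zero power")
    return (p1_d + p2_d - target_d) / (p1_d * p2_d)
```

With +50 D and -20 D lenses, a 10 mm spacing gives +40 D and a 20 mm spacing gives +50 D, so moving the negative lens on its rail sweeps the combined power over a useful range.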

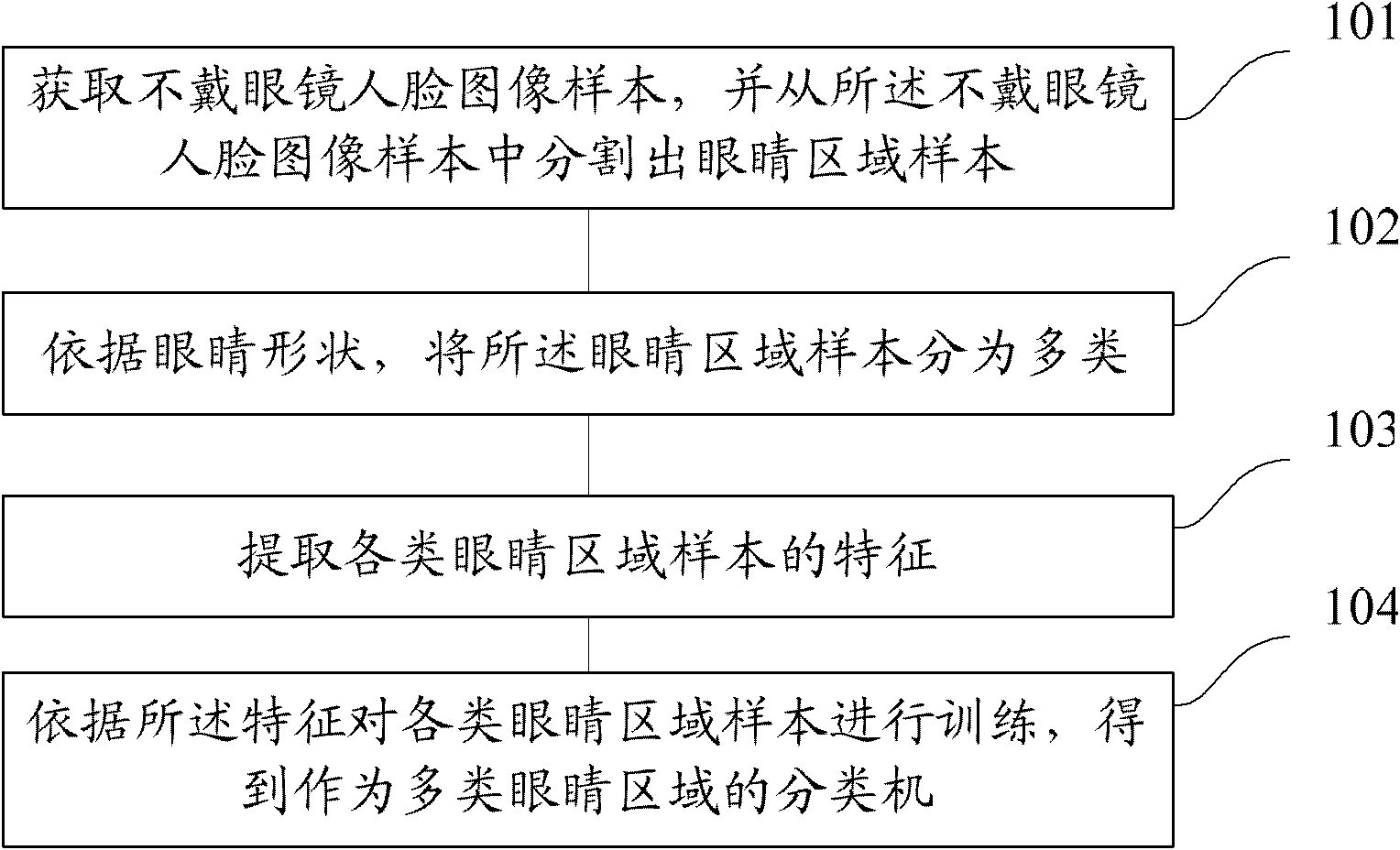

Method and device for removing glasses from human face image, and method and device for wearing glasses in human face image

Status: Active | Publication: CN102163289A | Tags: Reduce variance, Realize natural splicing, Character and pattern recognition, Uses eyeglasses, Principal component analysis

The invention provides a method and a device for removing glasses from a human face image, and a method and a device for adding glasses to a human face image. The removal method comprises the following steps: acquiring a glasses-worn eye-area image and a non-eye-area image from a face image in which glasses are worn; matching the glasses-worn eye-area image with a classifier to obtain the corresponding eye-area type, where the classifier covers multiple eye-area types that are constructed from glasses-free face image samples and classified by eye shape; acquiring the base vectors for that eye-area type, obtained by principal component analysis (PCA) learning on the samples of that type; projecting the glasses-worn eye-area image onto the base vectors and reconstructing a glasses-free eye-area image; and splicing the non-eye-area image with the glasses-free eye-area image to obtain a face image with the glasses removed. The method removes glasses from face images relatively effectively.

Owner:重庆中星微人工智能芯片技术有限公司 (Chongqing Zhongxingwei Artificial Intelligence Chip Technology Co., Ltd.)
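
The removal steps (classify the eye area, project onto that class's PCA base vectors, reconstruct, splice back) can be sketched in plain Python. The class means and orthonormal bases are assumed to have been learned offline from glasses-free samples, and the nearest-mean `nearest_class` is a hypothetical stand-in for the patent's classifier. Projection keeps only what the glasses-free basis can express, which is why frame pixels tend to disappear.

```python
from typing import Sequence

Vector = list[float]


def dot(a: Sequence[float], b: Sequence[float]) -> float:
    return sum(x * y for x, y in zip(a, b))


def nearest_class(patch: Vector, class_means: dict[str, Vector]) -> str:
    """Hypothetical classifier: pick the eye-area type whose mean patch is closest."""
    return min(class_means,
               key=lambda k: sum((p - m) ** 2 for p, m in zip(patch, class_means[k])))


def pca_reconstruct(patch: Vector, mean: Vector, basis: list[Vector]) -> Vector:
    """Project onto an orthonormal PCA basis (learned from glasses-free eyes) and reconstruct."""
    centred = [p - m for p, m in zip(patch, mean)]
    coeffs = [dot(centred, b) for b in basis]  # coordinates in the glasses-free subspace
    return [m + sum(c * b[i] for c, b in zip(coeffs, basis)) for i, m in enumerate(mean)]


def remove_glasses(patch: Vector, class_means: dict[str, Vector],
                   class_bases: dict[str, list[Vector]]) -> tuple[str, Vector]:
    kind = nearest_class(patch, class_means)
    return kind, pca_reconstruct(patch, class_means[kind], class_bases[kind])


def splice(face: Vector, eye_indices: list[int], eye_patch: Vector) -> Vector:
    """Write the reconstructed eye area back into the untouched non-eye pixels."""
    out = list(face)
    for idx, value in zip(eye_indices, eye_patch):
        out[idx] = value
    return out
```

In a toy 4-pixel patch whose basis spans only the first two pixels, a "frame" value in the third pixel is projected away and the patch is reconstructed from the class mean plus the two retained components.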

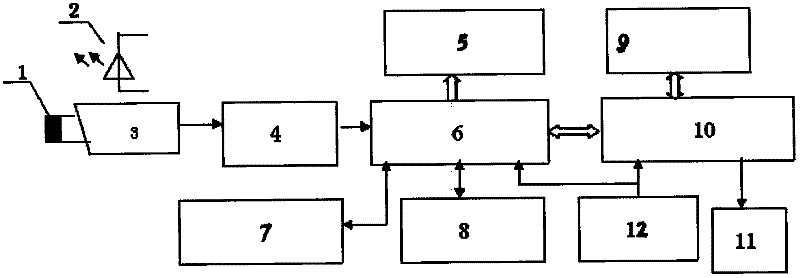

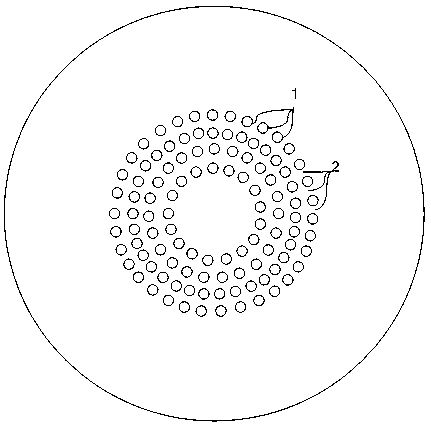

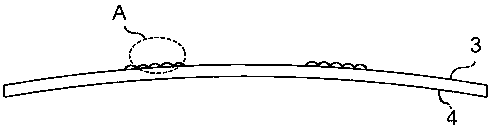

Real-time monitoring system of driver working state

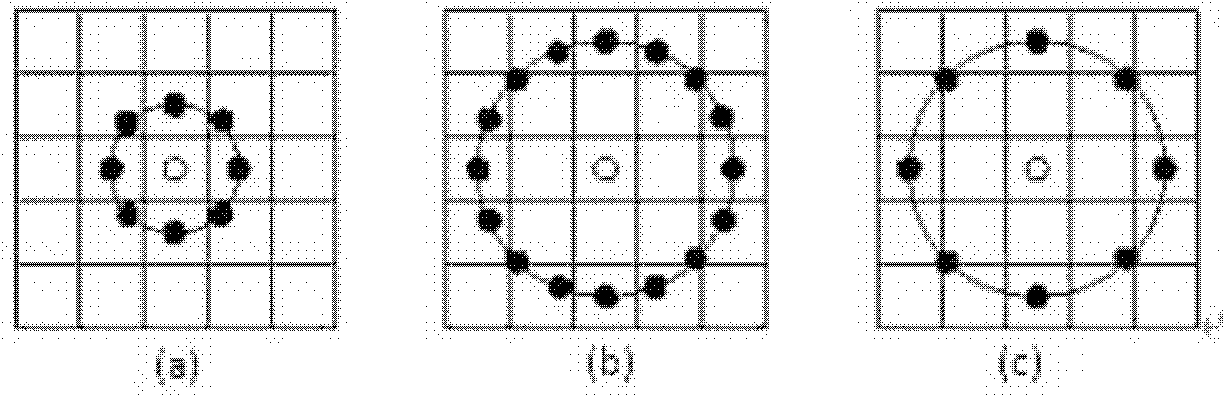

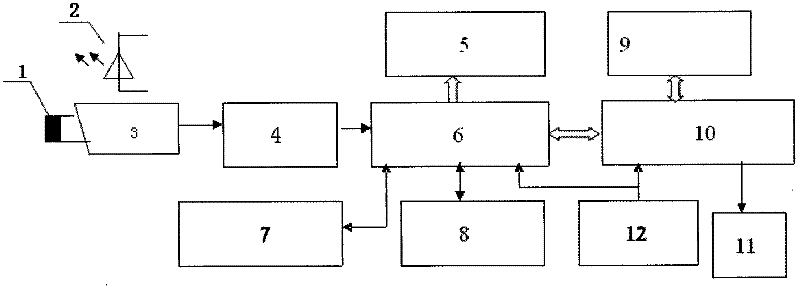

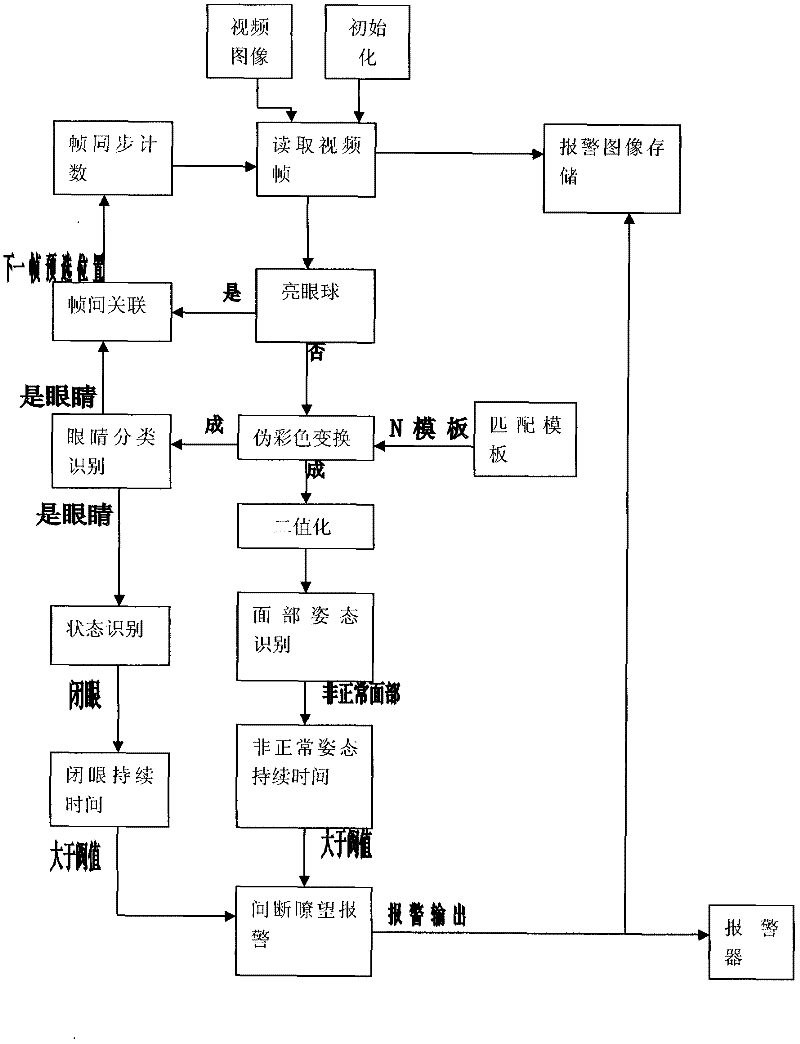

Status: Active | Publication: CN102393989A | Tags: Does not interfere with normal working conditions, Eliminate distractions, Character and pattern recognition, Alarms, Band-pass filter, CCD camera

The invention relates to a real-time monitoring system for a driver's working state. A band-pass filter (1) is arranged on a CCD (charge-coupled device) camera (3), and an infrared active light source (2) is arranged above the CCD camera (3). The CCD camera (3) is connected to a video decoder (4), which is connected to an image pre-processor (6). The image pre-processor (6) is connected to a voice alarm (5), an image processor (7), an alarm image processor (8) and a multimedia digital memory (10). The multimedia digital memory (10) is connected to a program memory (9) and a 100M network interface (11), and a zero-speed processor is connected to both the multimedia digital memory (10) and the image pre-processor (6). The system monitors the working state of locomotive drivers in real time under different driving postures and lighting conditions, including when they wear glasses or sunglasses, and gives a voice alarm when a driver intermittently looks outside or naps.

Owner:SHANXI ZHIJI ELECTRONICS TECH

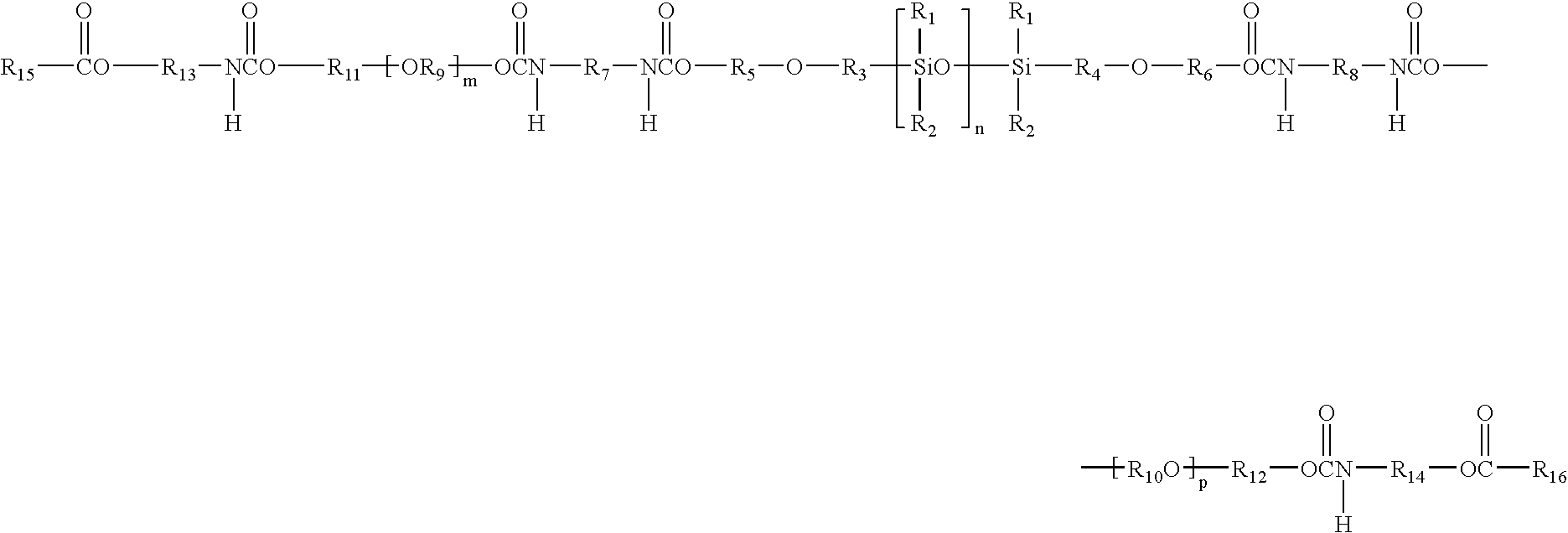

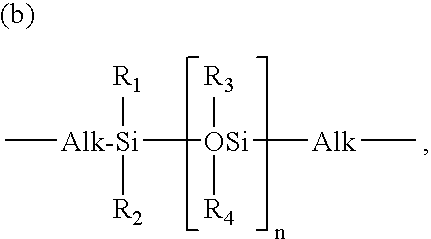

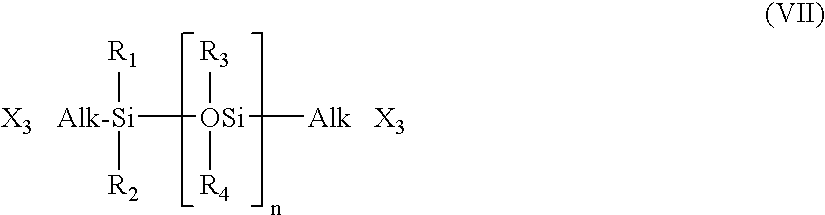

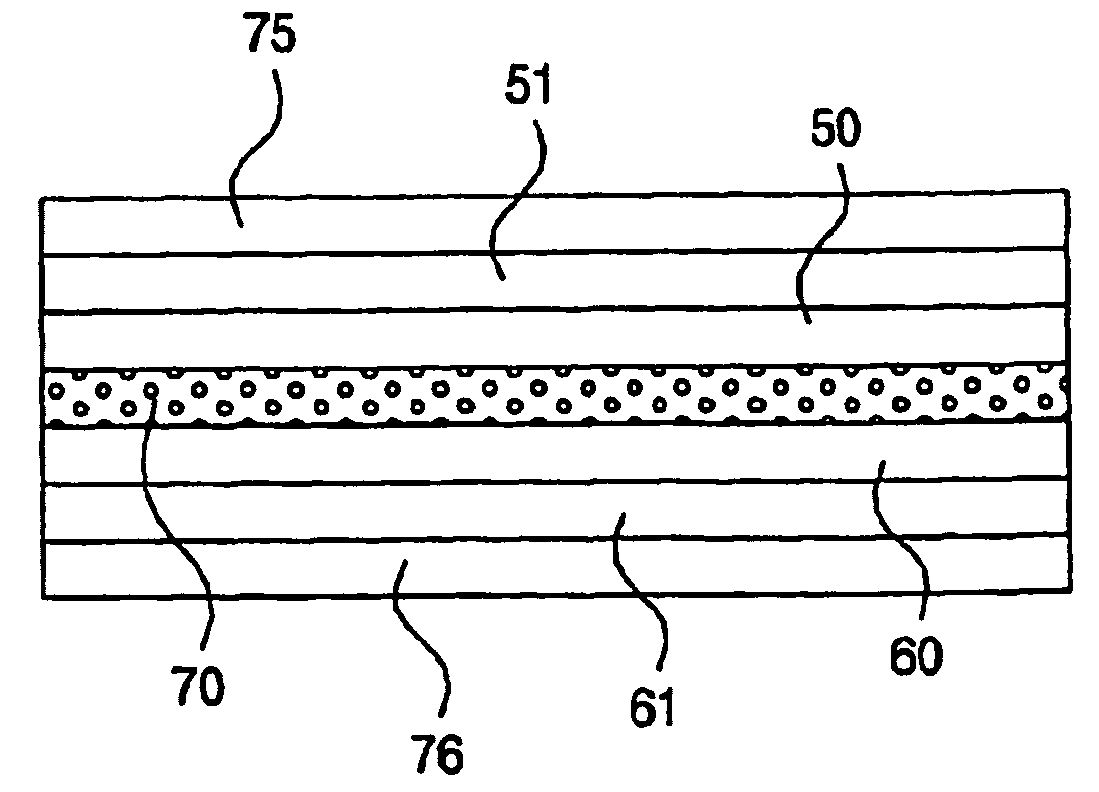

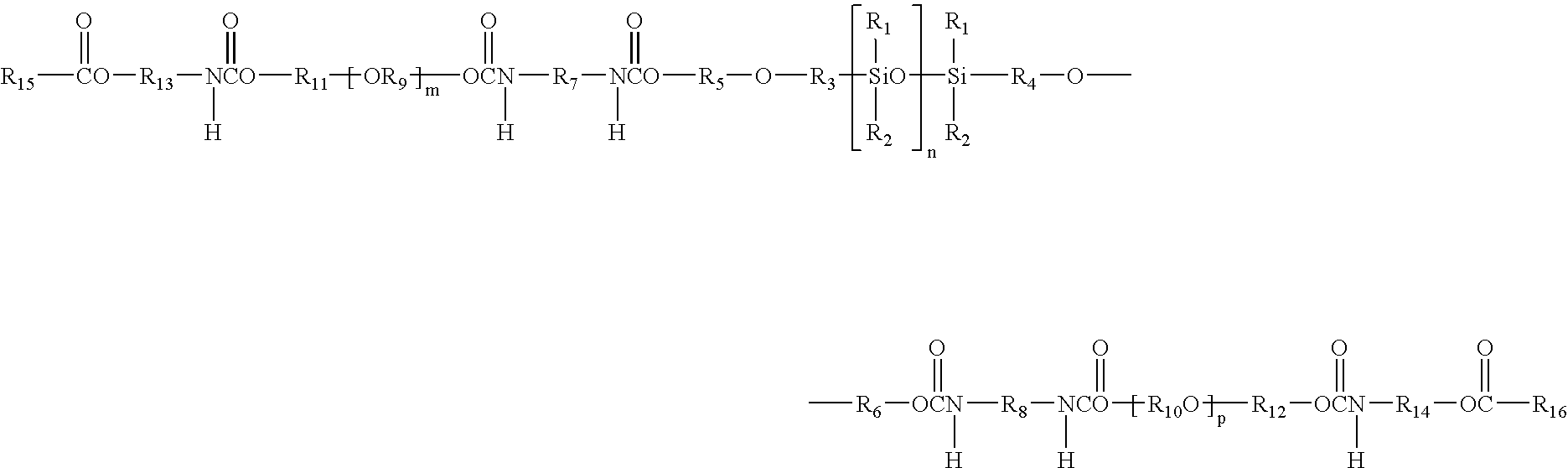

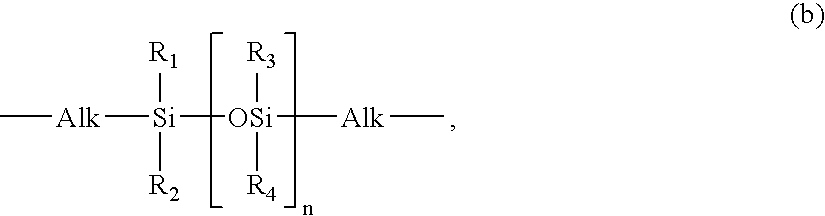

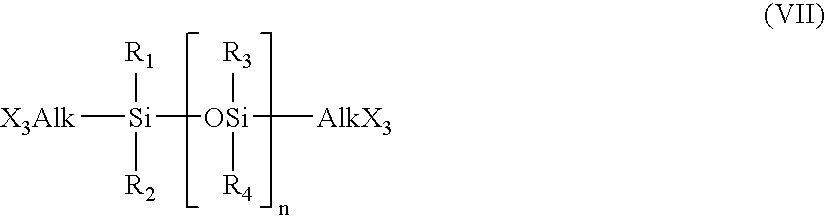

Extended Wear Ophthalmic Lens

Status: Inactive | Publication: US20070105973A1 | Tags: Sufficient for corneal health, Substantial adverse impact on ocular health or consumer, Optical articles, Prosthesis, Extended wear contact lenses, Eye movement

An ophthalmic lens suited for extended-wear periods of at least one day on the eye without a clinically significant amount of corneal swelling and without substantial wearer discomfort. The lens has a balance of oxygen permeability and ion or water permeability, with the ion or water permeability being sufficient to provide good on-eye movement, so that good tear exchange occurs between the lens and the eye. A preferred lens is a copolymerization product of an oxyperm macromer and an ionoperm monomer. The invention encompasses extended-wear contact lenses that include a core having oxygen transmission and ion transmission pathways extending from the inner surface to the outer surface.

Owner:NOVARTIS AG

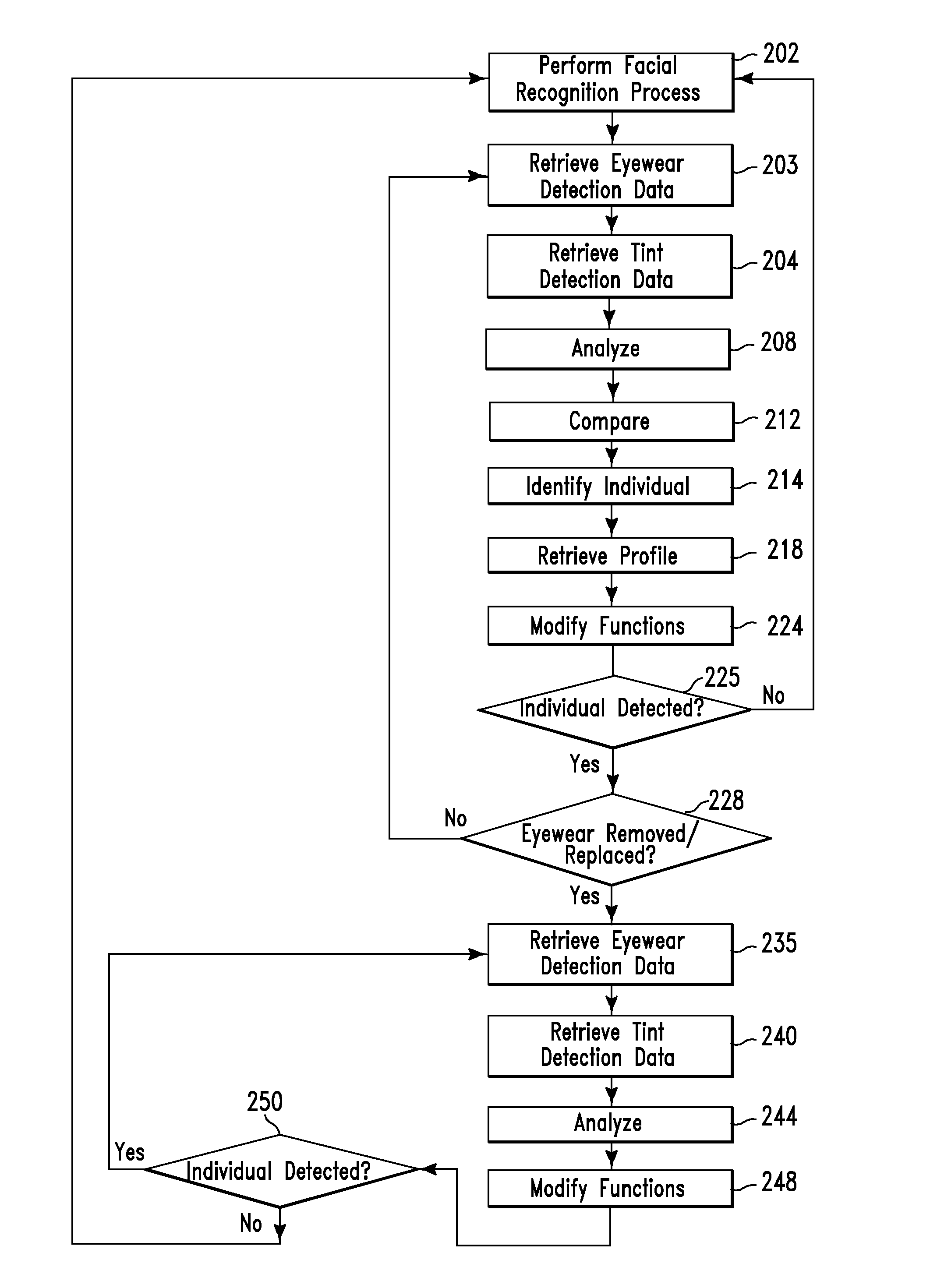

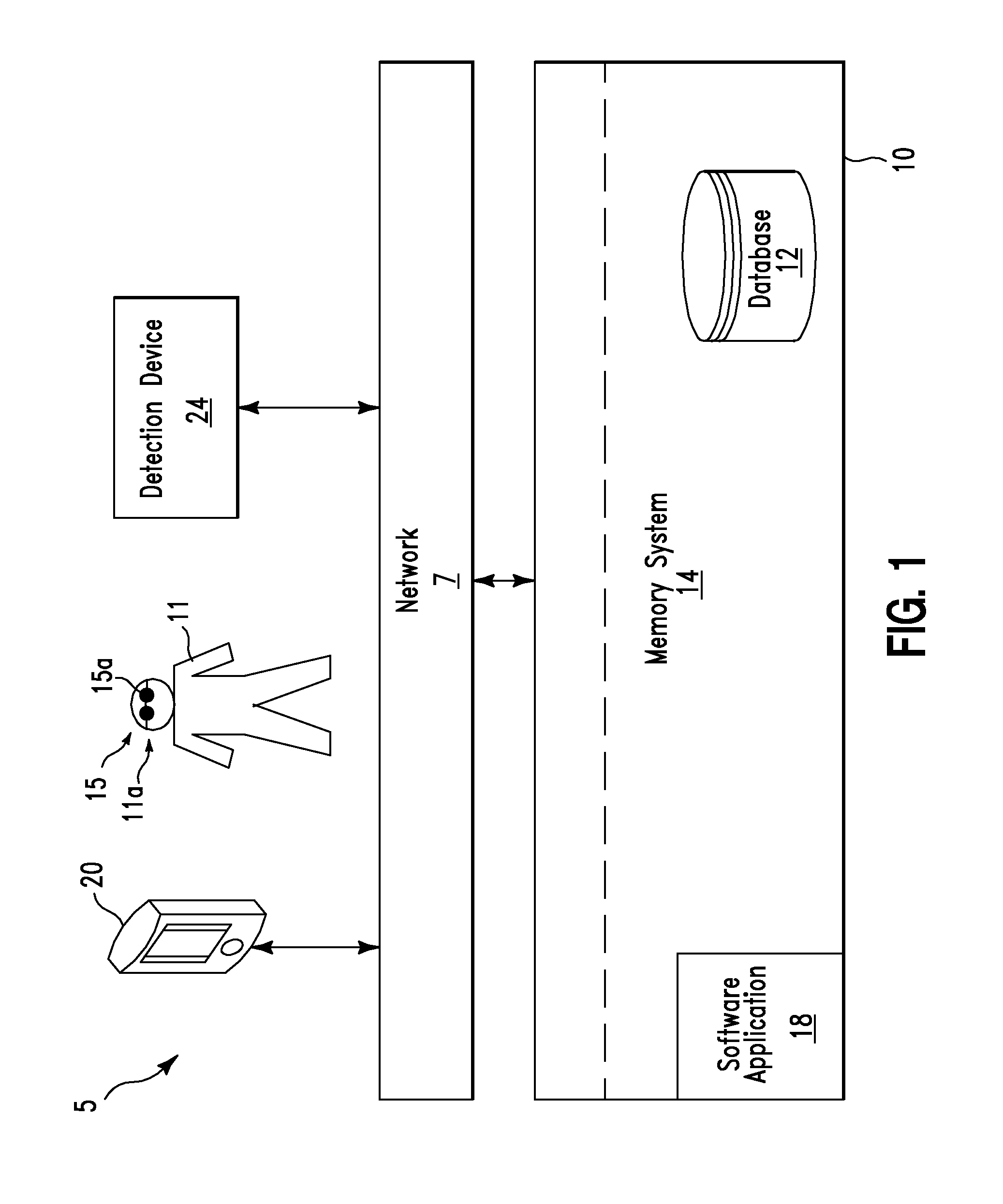

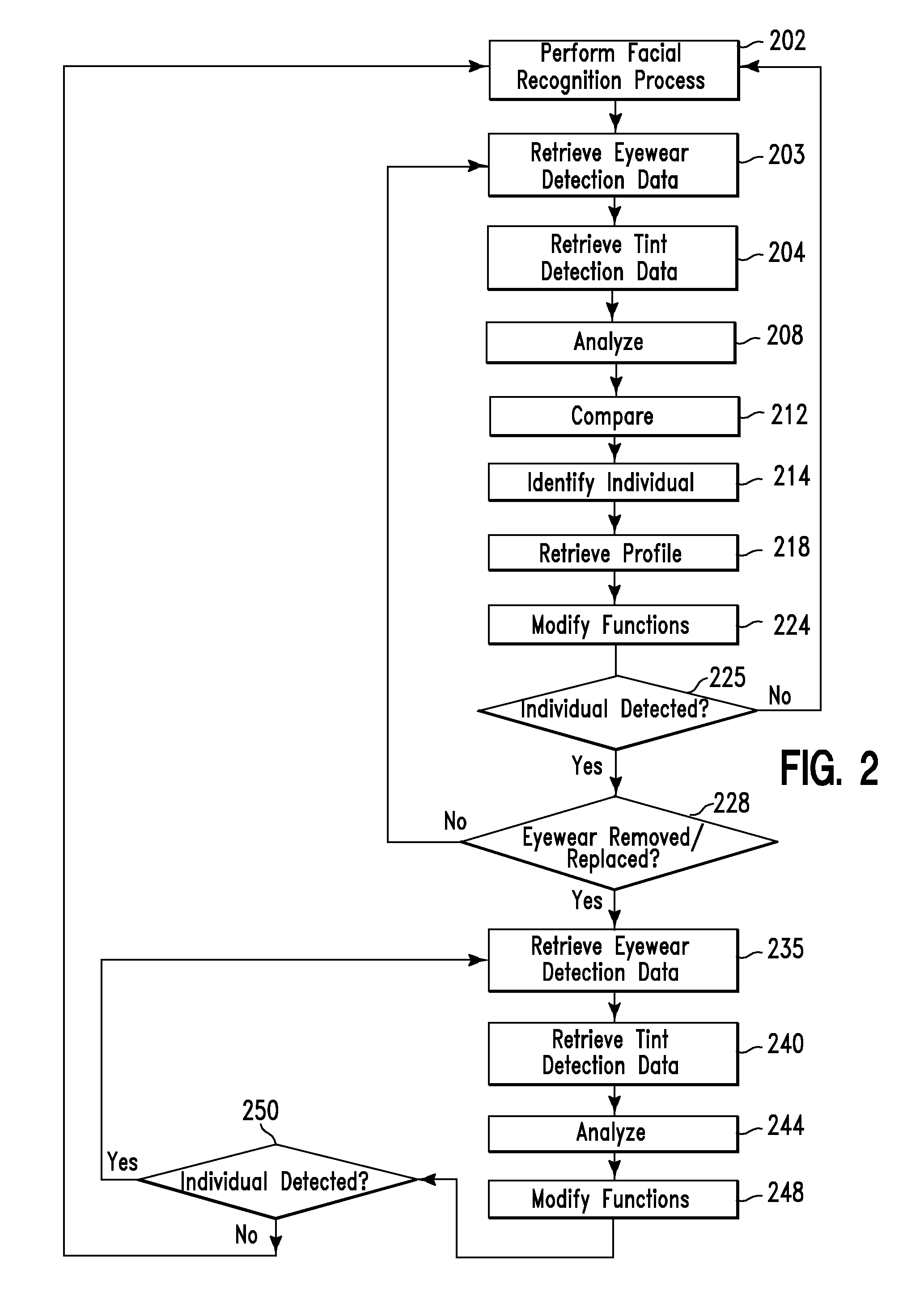

Device function modification method and system

A modification method and system. The method includes performing, by a computer processor of a computing system, a facial recognition process on an individual associated with a device. The computer processor retrieves, from a detection device, eyewear detection data indicating that the individual is correctly wearing eyewear and tint detection data indicating that the eyewear comprises tinted lenses. In response, the computer processor analyzes the results of the facial recognition process, the eyewear detection data and the tint detection data, and modifies functions associated with the device in response to the results of the analysis.

Owner:KYNDRYL INC

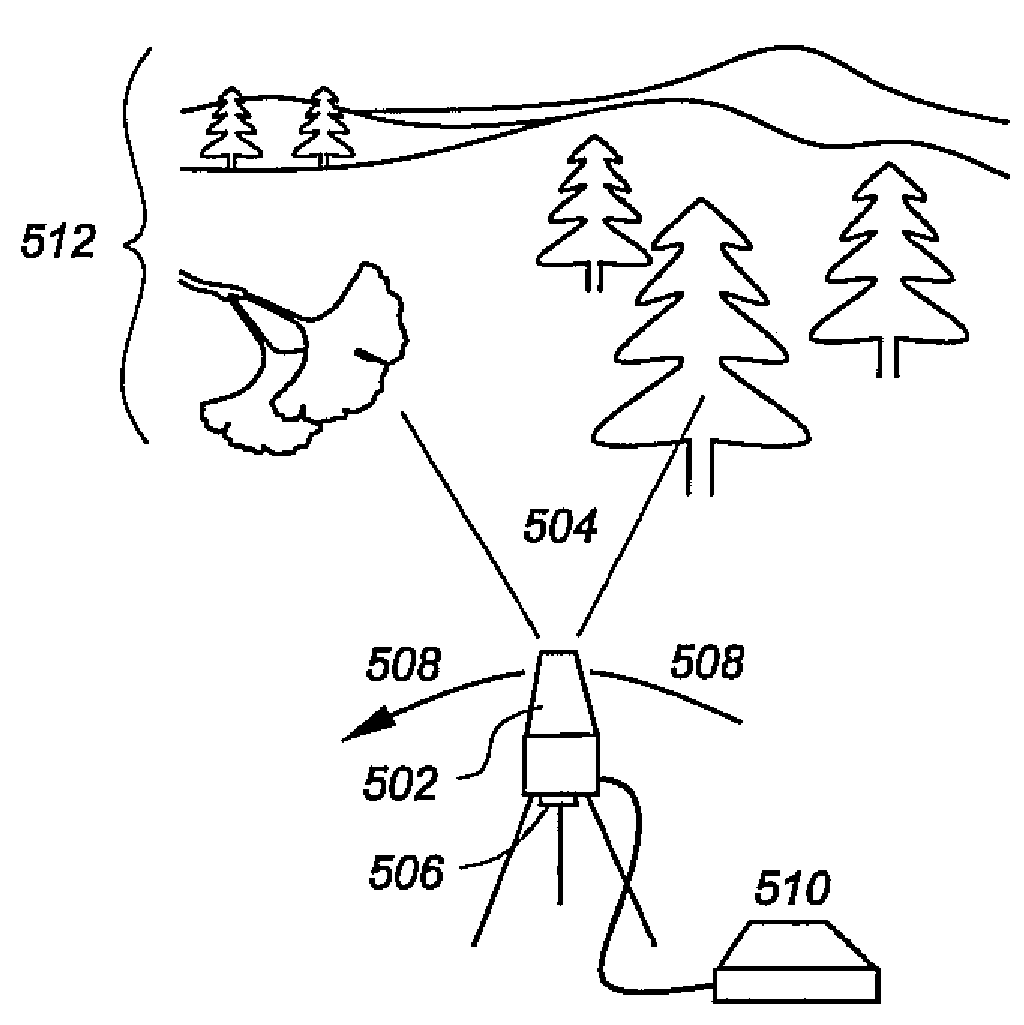

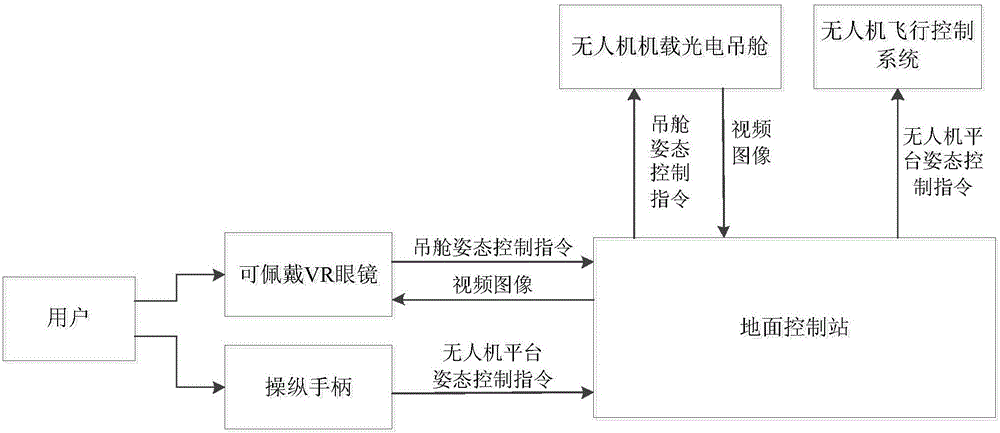

Unmanned aerial vehicle on-vehicle first visual angle follow-up nacelle system based on VR interaction

Status: Pending | Publication: CN106125747A | Tags: Realize visual manipulation interaction, Realize linkage, Attitude control, Wireless transceiver, Control system

The invention discloses a VR-interaction-based, first-person-view follow-up nacelle system carried on board an unmanned aerial vehicle (UAV). The system comprises an onboard photoelectric nacelle, a UAV flight control system, a ground control station, wearable VR glasses and a control handle. The VR glasses are worn on the user's head and connected to the ground control station through a USB bus. The control handle is operated manually by the user and connected to the ground control station through Bluetooth. The onboard photoelectric nacelle and the UAV flight control system are each connected to the ground control station through a wireless transceiver device. The system is a new type of onboard follow-up nacelle that fully integrates the technical advantages of VR into human-machine interaction, and it differs substantially from traditional third-person-view mission nacelle systems. It provides first-person visual control and gives the user a more vivid sensory experience. It also offers a flexible implementation, simple operation, high control precision, low cost and high real-time performance.

Owner:STATE GRID FUJIAN ELECTRIC POWER CO LTD +3

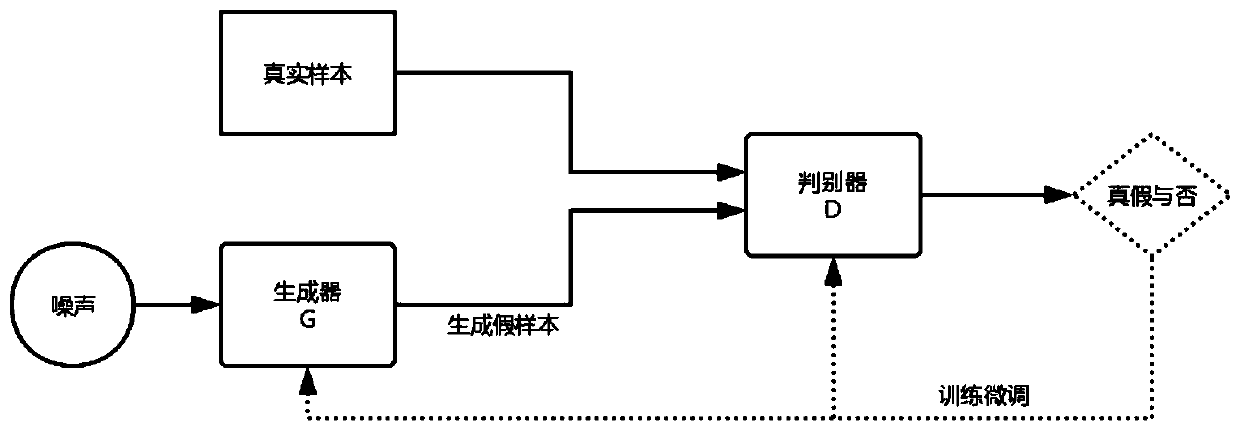

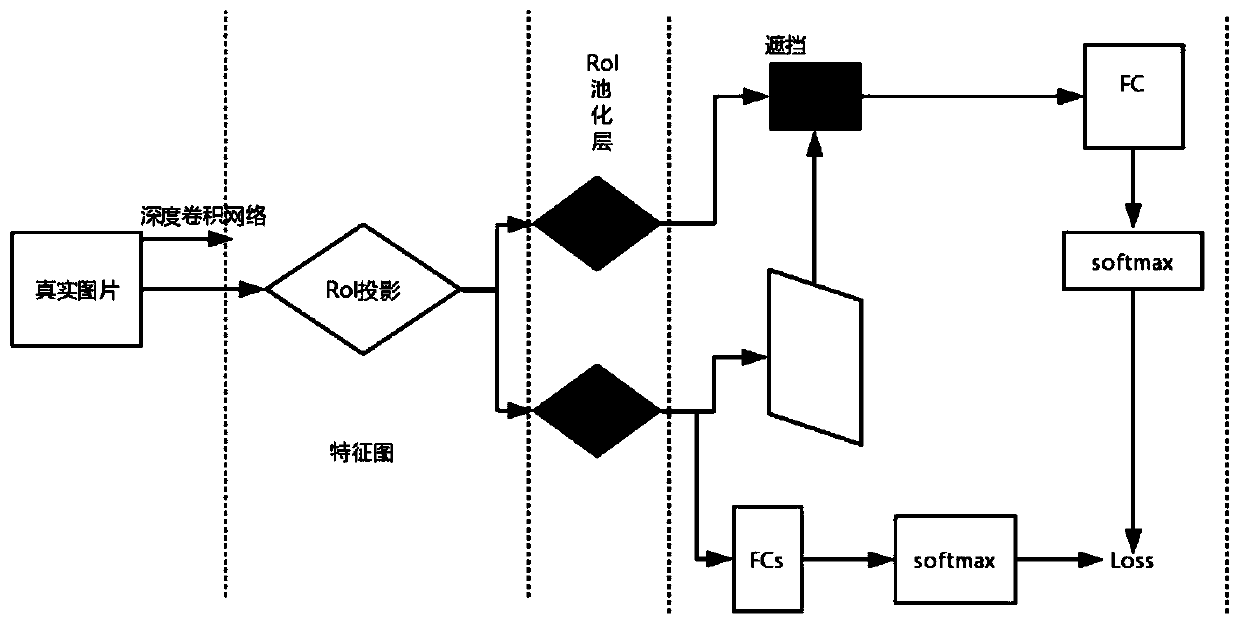

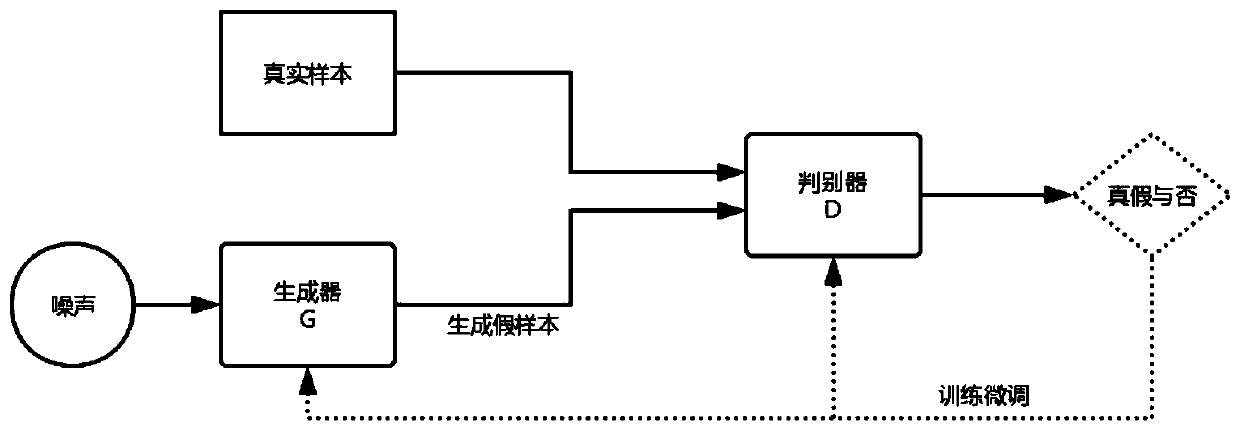

Face recognition method based on adversarial deep learning network

Status: Inactive | Publication: CN109977841A | Tags: Reduce restrictions, Improve accuracy, Character and pattern recognition, Neural architectures, Generative adversarial network, Adversarial network

The patent designs a combined framework of a CNN and a generative adversarial network (GAN) that detects human faces under occlusion. In recent years, deep convolutional neural networks (CNNs) have significantly improved the accuracy of image classification and object detection. The invention applies a combination of object detection and an adversarial network to detecting faces under occlusion. Although face recognition is used in many settings, most current face recognition systems work only in strictly controlled environments: for example, the subject must adjust their posture and cannot wear glasses or masks. In this method, the generative adversarial network is used mainly for data augmentation, learning from generated hard occlusion examples. The original detector and the adversarial network are trained jointly, which strengthens detection performance and improves precision on the test results.

Owner:CENT SOUTH UNIV

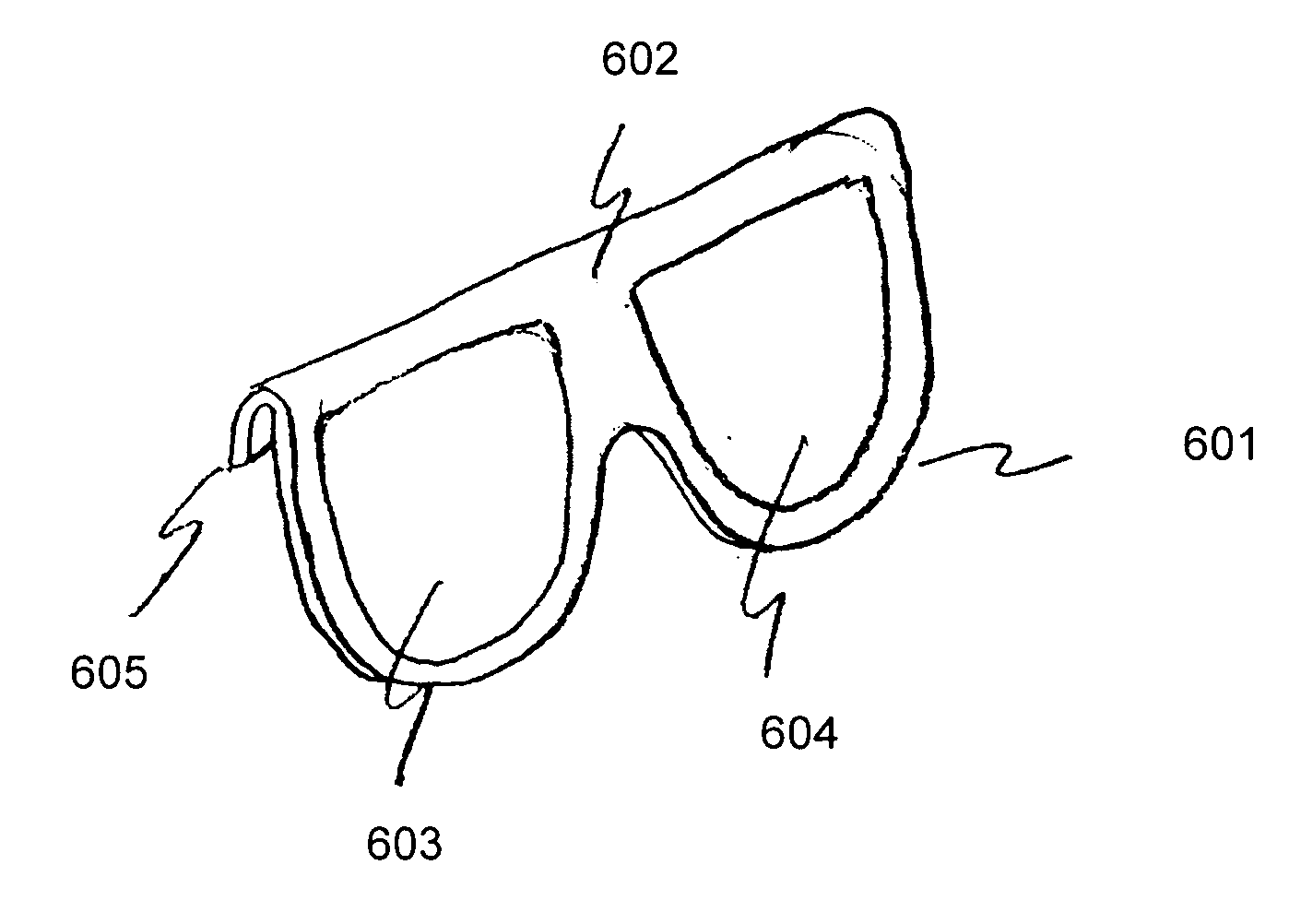

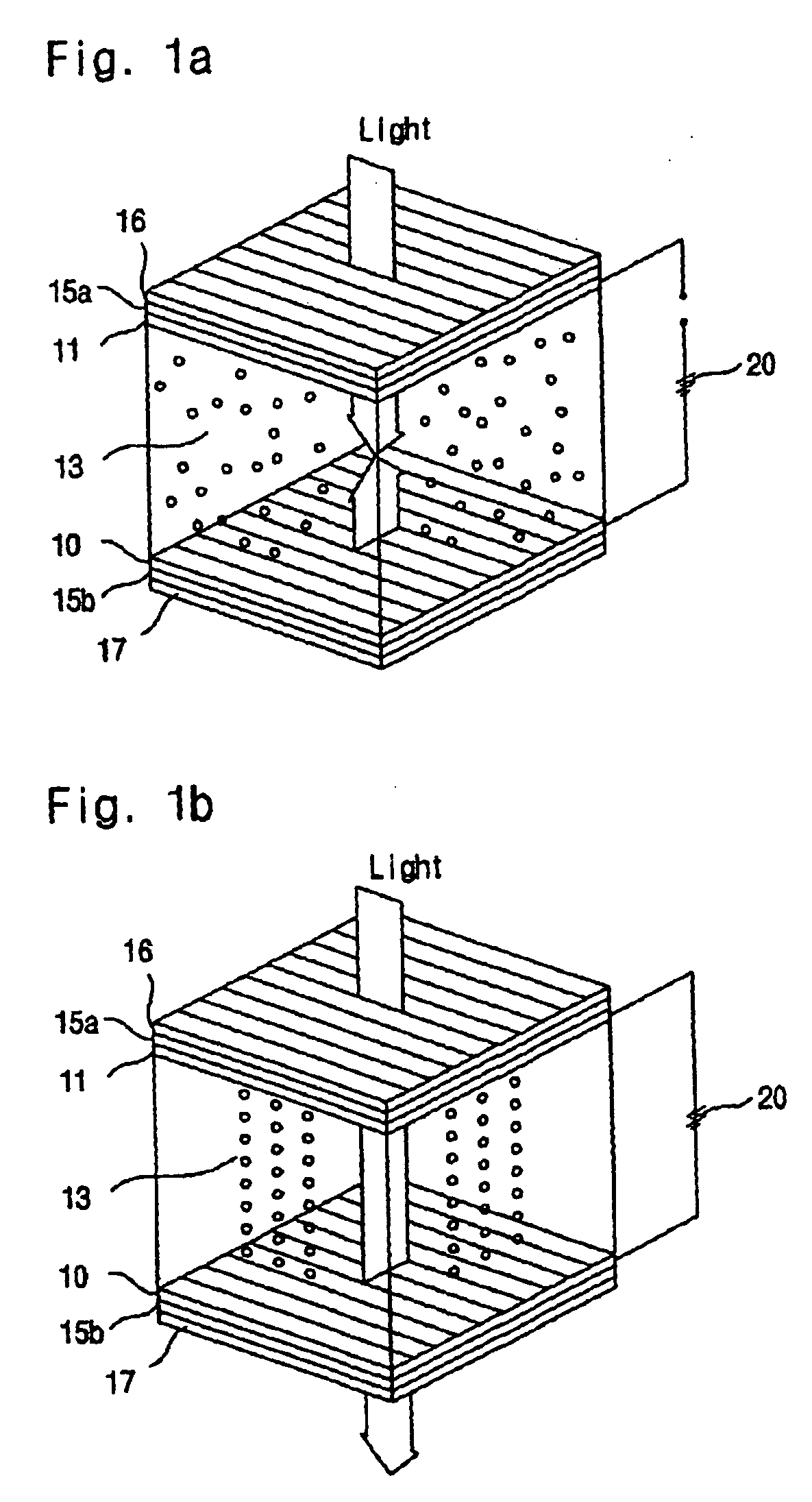

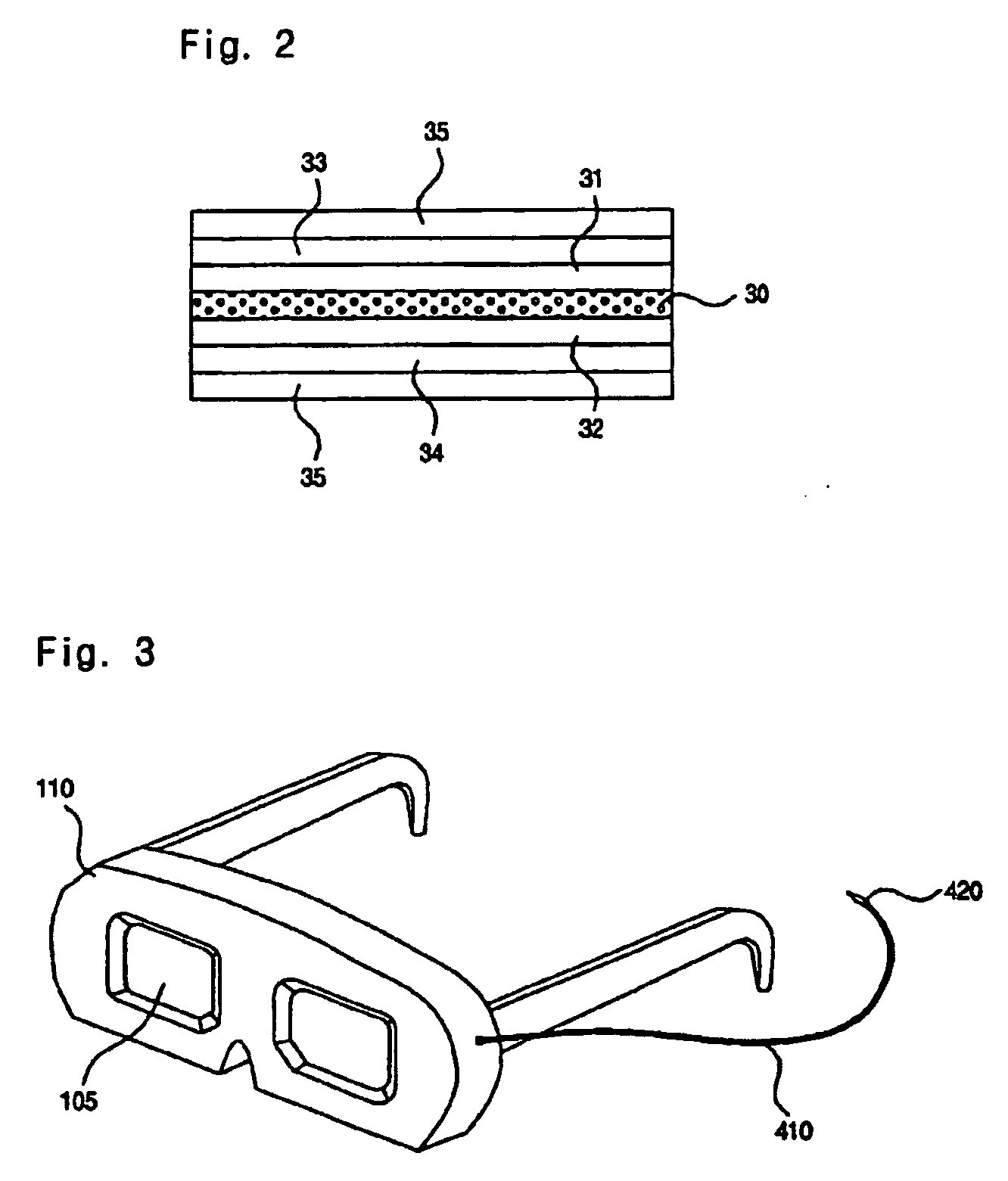

Glasses and glasses lenses for stereoscopic image and system using the same

Status: Inactive | Publication: US20050018095A1 | Tags: Thin thickness, Light weight, Stereoscopic systems, Non-linear optics, Computer graphics (images), Eyewear

Glasses lenses for stereoscopic images, and glasses using them, comprise a liquid crystal layer with flexible films formed on transparent electrodes. This makes the lenses flexible, so fashionable glasses can be made by bending the lenses. Breakage is reduced because the film material does not break on impact, and the glasses are comfortable to wear because they are thin and lightweight. The stereoscopic glasses are also equipped with a connector holder, so if the cable fails, the user only needs to replace the cable, not the whole unit.

Owner:SOFTPIXEL

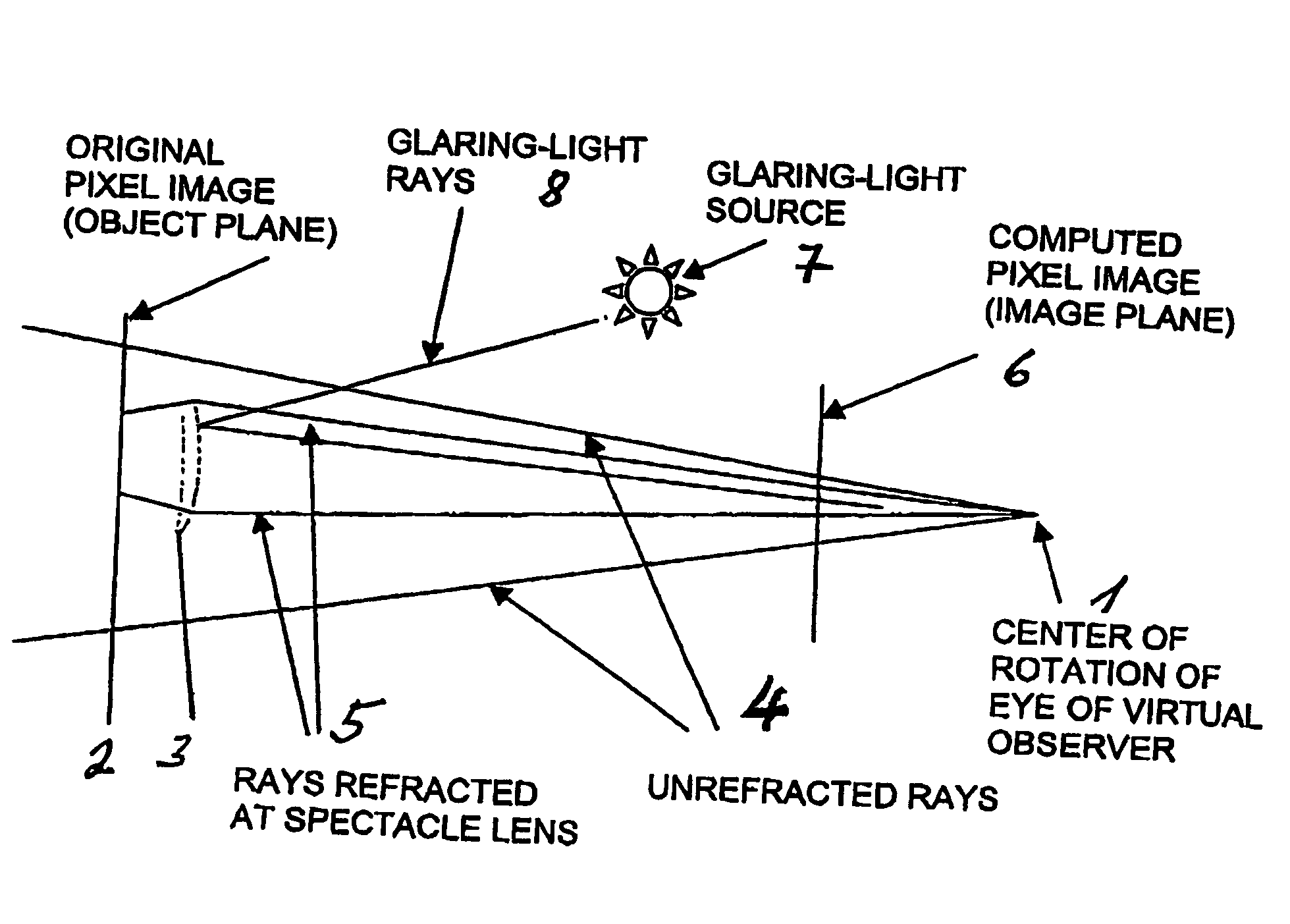

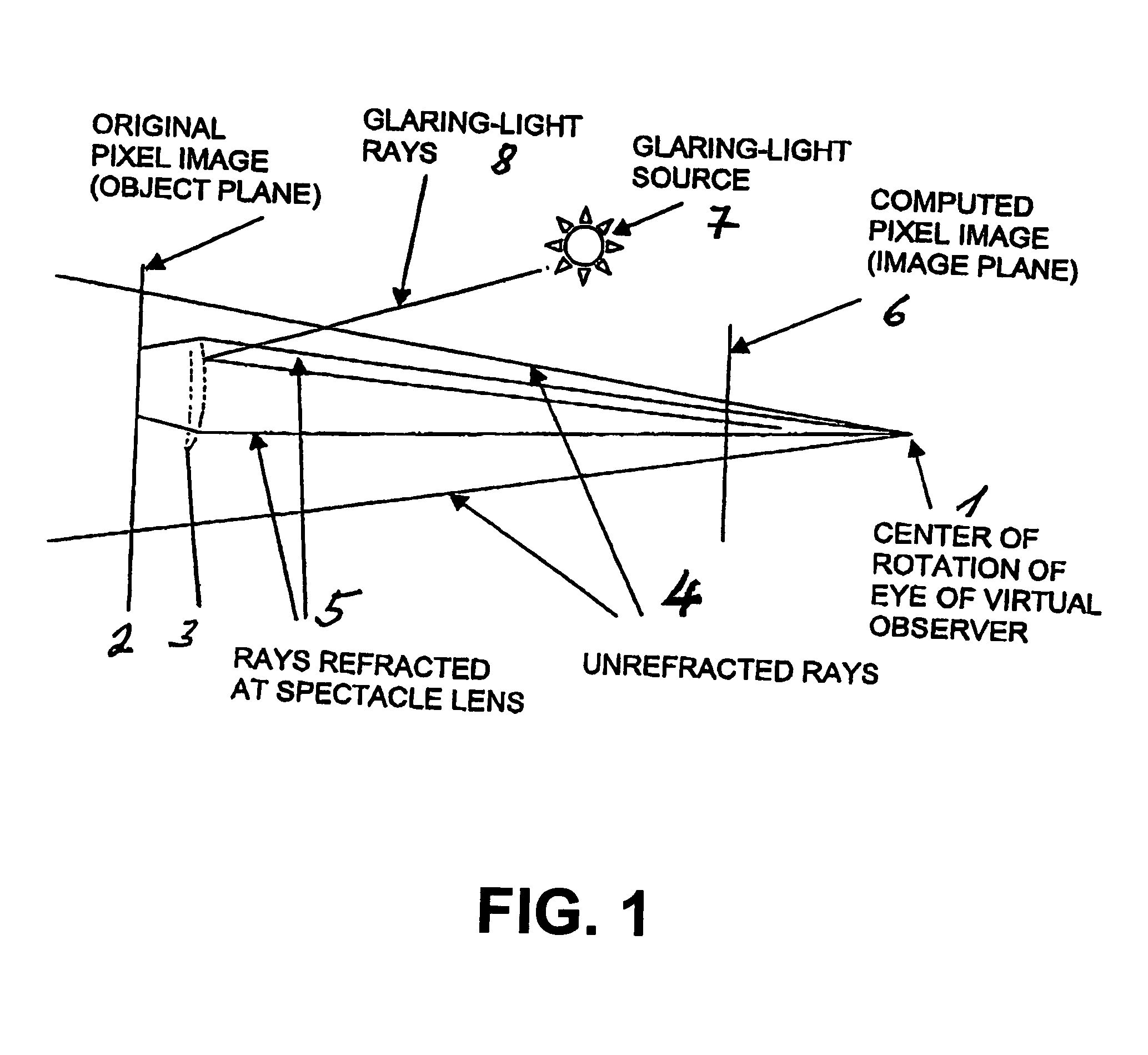

Method for simulating and demonstrating the optical effects of glasses on the human face

A method is provided for demonstrating the effect of a particular spectacle frame, and of the optical lenses fitted into it, on the appearance of a spectacles wearer as it would be perceived by another person (a virtual observer). An image of the wearer's face is prepared so that it can be processed in a computer. The arrangement of the spectacle frame in front of the eyes is determined. The face image is projected onto a plane by computing (ray-tracing) principal rays passing through the center of rotation of an eye of the (virtual) observer, producing a planar image of the face in this plane. Taking into account the optical power of the region of each spectacle lens (virtually) fitted in its lens rim through which a principal ray passes, and the lens's arrangement in front of the eye, the paths of the principal rays that lie within the lens rims of the frame or the edges of the spectacle lenses are computed, so that an observer of the resulting planar image of the face with “worn” spectacles can assess how the spectacle lenses distort the eye region of the face, and thereby the quality of the spectacle lenses.

Owner:RODENSTOCK GMBH

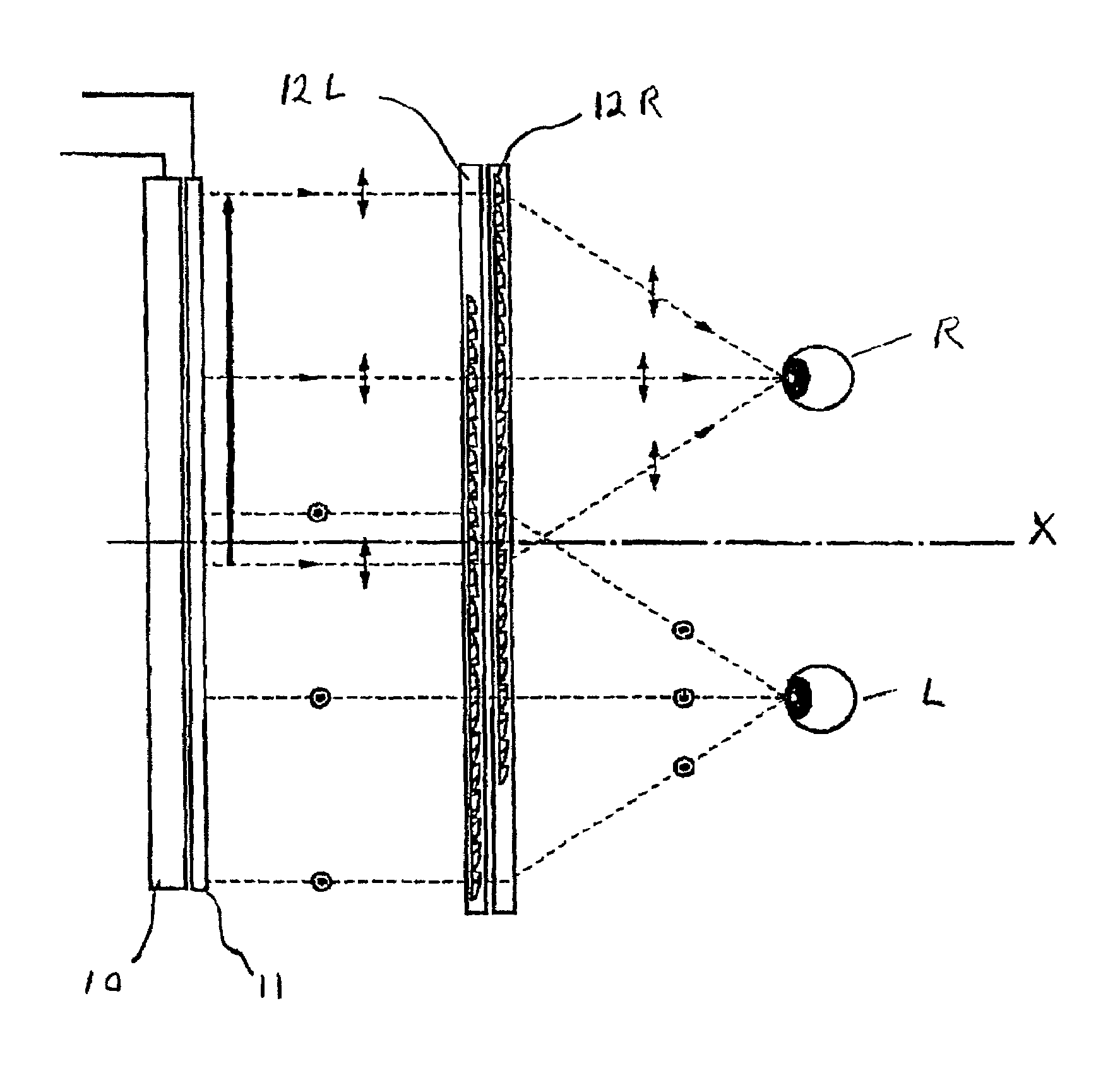

3-D display device

Various embodiments of a 3-D display device are disclosed which simultaneously provide a wide field of view and a large eye relief and yet do not require that the viewer wear glasses or the like in order to keep the images intended for only the left eye from entering the view field of the right eye and vice-versa. The light that forms left and right display images is made independent in left and right optical paths, either by having orthogonal polarizations, wavelengths that do not overlap, or by time-multiplexing the left and right images in different time periods that do not coincide.

Owner:OLYMPUS CORP
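
Of the three separation schemes listed, time multiplexing is the simplest to show in code: left and right images occupy alternating periods that never coincide. A sketch with a hypothetical refresh rate and optional blanking gap; the device's actual timing is not given in the abstract.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Slot:
    eye: str         # "L" or "R"
    start_ms: float
    end_ms: float


def time_multiplex(frame_rate_hz: float, n_frames: int, blanking_ms: float = 0.0) -> list[Slot]:
    """Assign consecutive refresh periods alternately to the left and right optical paths.

    An optional (hypothetical) blanking gap is left at the end of each period."""
    period = 1000.0 / frame_rate_hz
    if blanking_ms >= period:
        raise ValueError("blanking must be shorter than one refresh period")
    slots = []
    for k in range(n_frames):
        # Compute both ends from k so adjacent slots share exactly the same boundary value.
        slots.append(Slot("L" if k % 2 == 0 else "R", k * period, (k + 1) * period - blanking_ms))
    return slots


def periods_overlap(slots: list[Slot]) -> bool:
    """True if any left-eye period coincides with any right-eye period."""
    lefts = [s for s in slots if s.eye == "L"]
    rights = [s for s in slots if s.eye == "R"]
    return any(l.start_ms < r.end_ms and r.start_ms < l.end_ms for l in lefts for r in rights)
```

At 120 Hz each eye gets a 60 Hz image stream, and the overlap check confirms that the left and right periods only touch at their boundaries.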

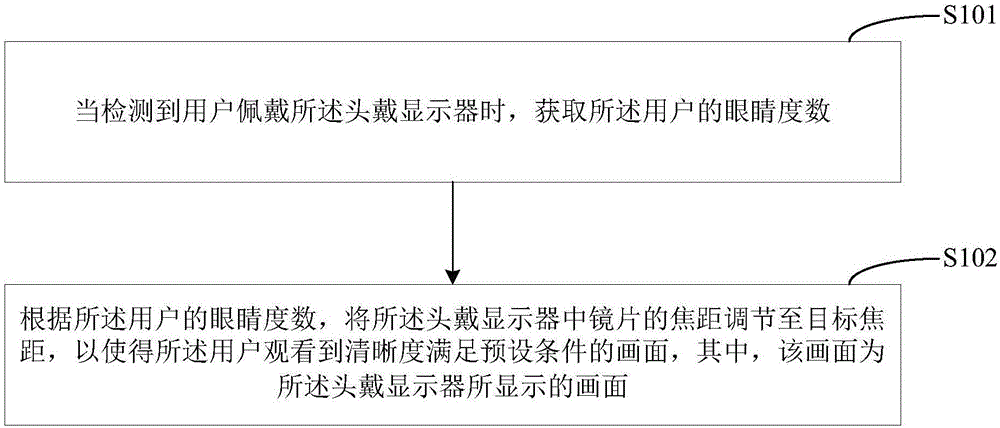

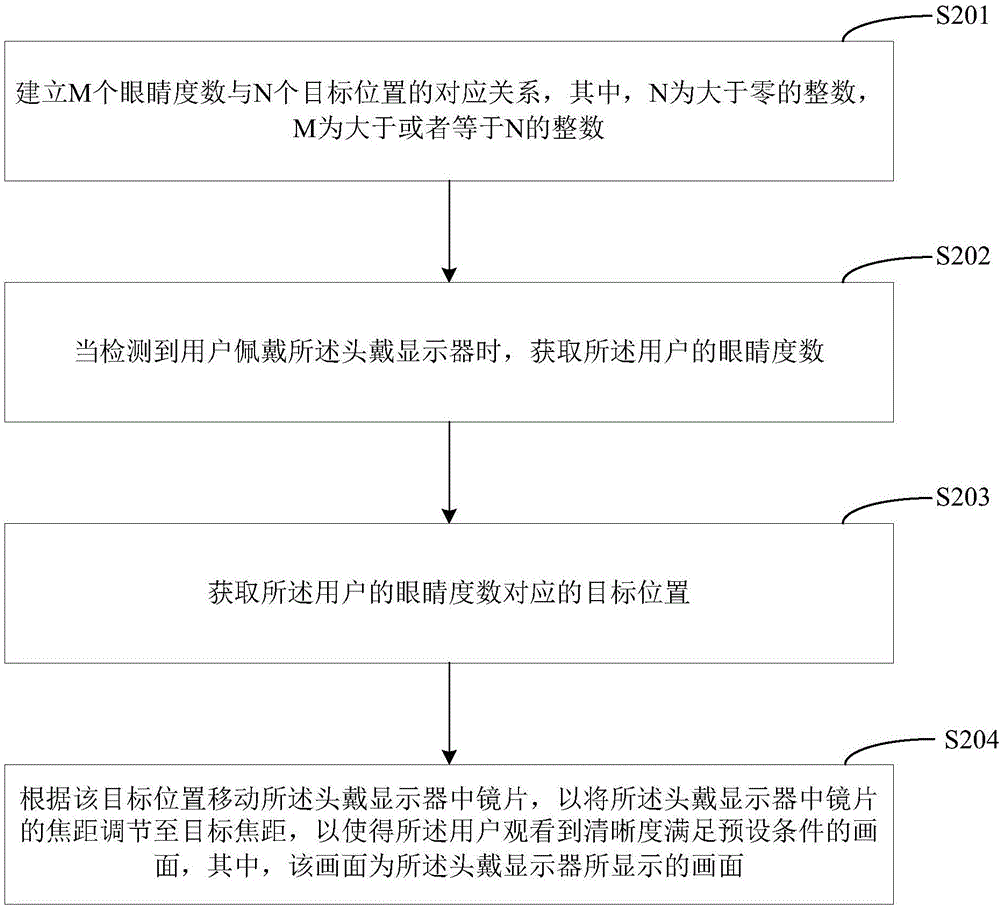

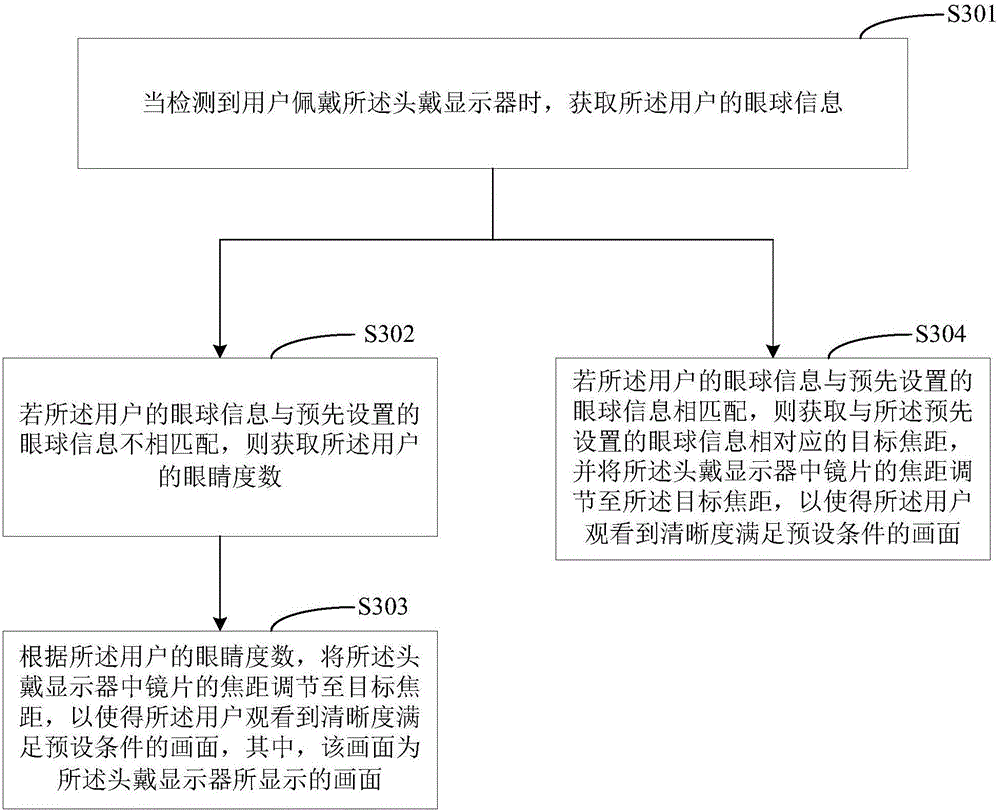

Focal length adjusting method and headset display

The invention belongs to the technical field of wearable equipment and provides a focal length adjusting method and a headset display. The method includes acquiring the user's eye prescription (degree) when it is detected that the user is wearing the headset display, and adjusting the focal length of the lens in the headset display to a target focal length according to that prescription, so that the frame displayed by the headset display is seen by the user at a resolution that satisfies a preset condition. With this invention, a user with a vision problem can view the frame displayed on the headset display clearly without wearing glasses, improving the wearing experience and the visual immersion of the headset display.

Owner:GUANGDONG XIAOTIANCAI TECH CO LTD
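
The abstract does not give the mapping from the user's prescription to the target focal length. Under a simple thin-lens assumption (screen at the focal plane of the base lens, eye at the lens, vertex distance ignored), adding the user's spherical error R to the base lens power P0 puts the virtual image at the user's far point, so f = 1/(P0 + R). The 40 D base power and the clamp limits are hypothetical.

```python
def target_focal_length_mm(base_power_d: float, spherical_error_d: float) -> float:
    """Focal length (mm) that places the virtual image of a fixed screen at the user's far point.

    Thin-lens sketch: with the screen at the base lens's focal plane the image is at infinity;
    adding the user's spherical error (negative for myopia) to the lens power makes the exit
    vergence equal that error. Eye assumed at the lens; vertex distance ignored."""
    power = base_power_d + spherical_error_d
    if power <= 0:
        raise ValueError("prescription outside the adjustable range")
    return 1000.0 / power


def clamp_to_range(f_mm: float, f_min_mm: float, f_max_mm: float) -> float:
    """Hypothetical actuator limits of the adjustable lens."""
    return max(f_min_mm, min(f_max_mm, f_mm))
```

With a 40 D base lens (25 mm), a -2.00 D myope would need the focal length lengthened to about 26.3 mm, and a +2.00 D hyperope shortened to about 23.8 mm.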

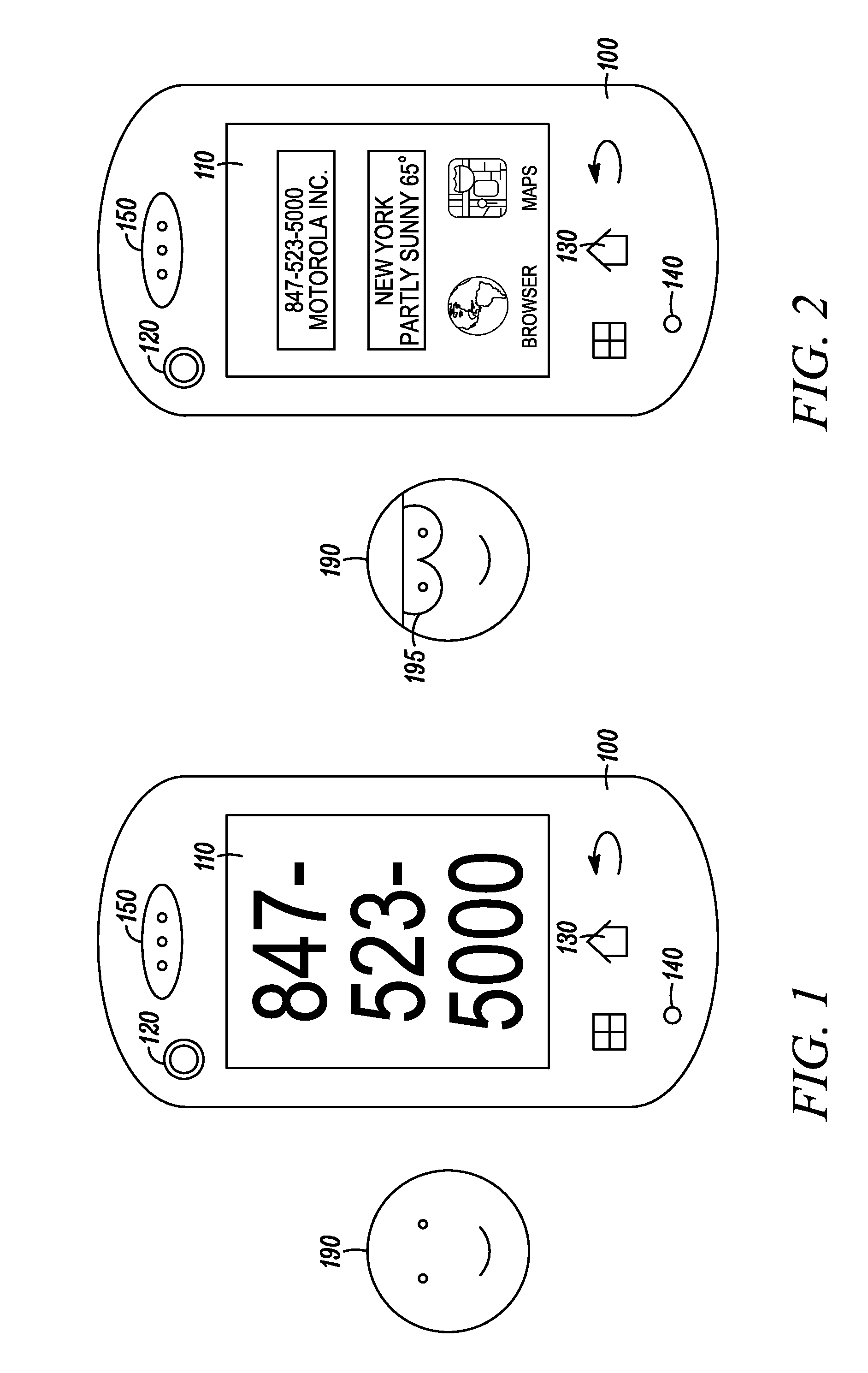

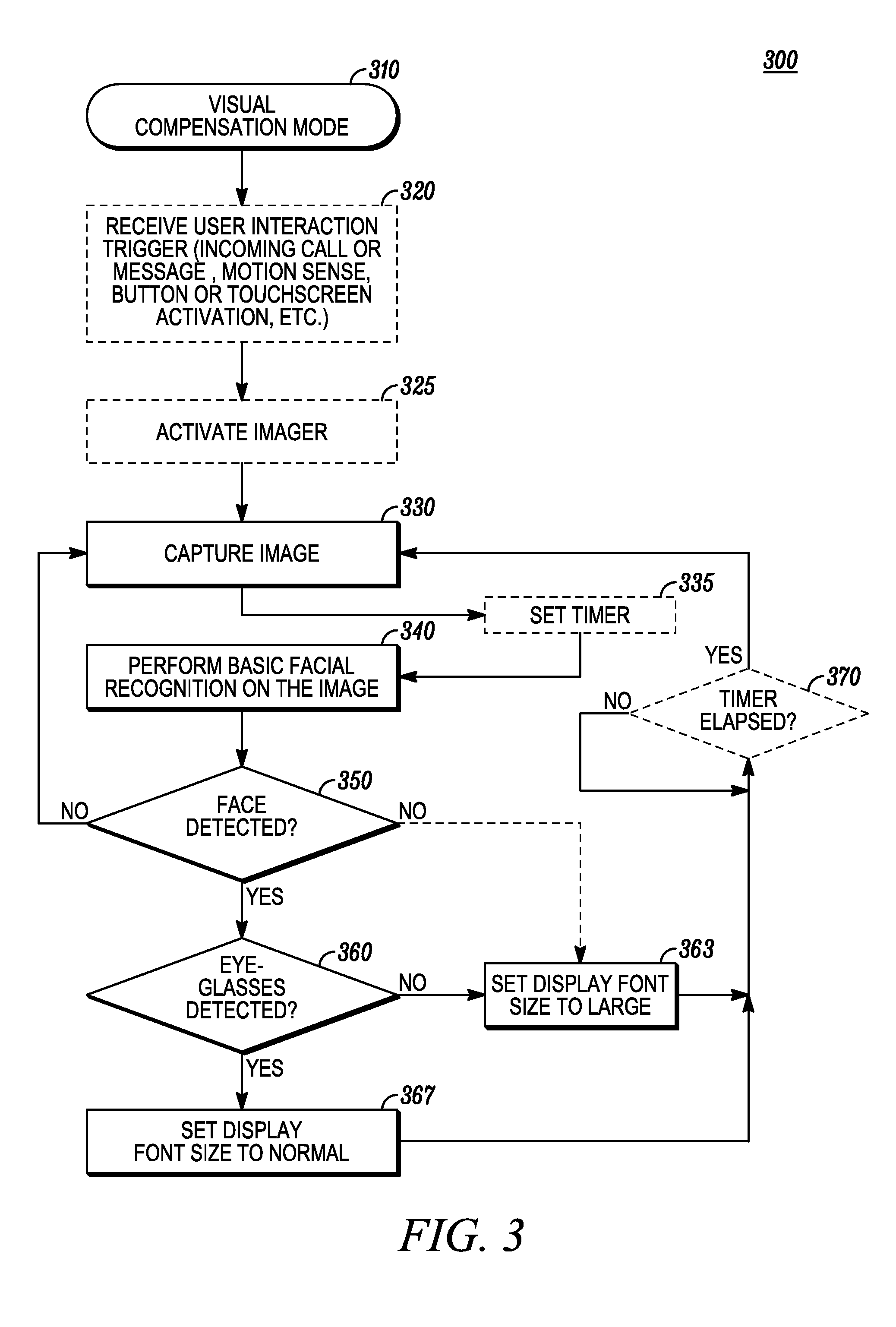

Method and Device for Visual Compensation

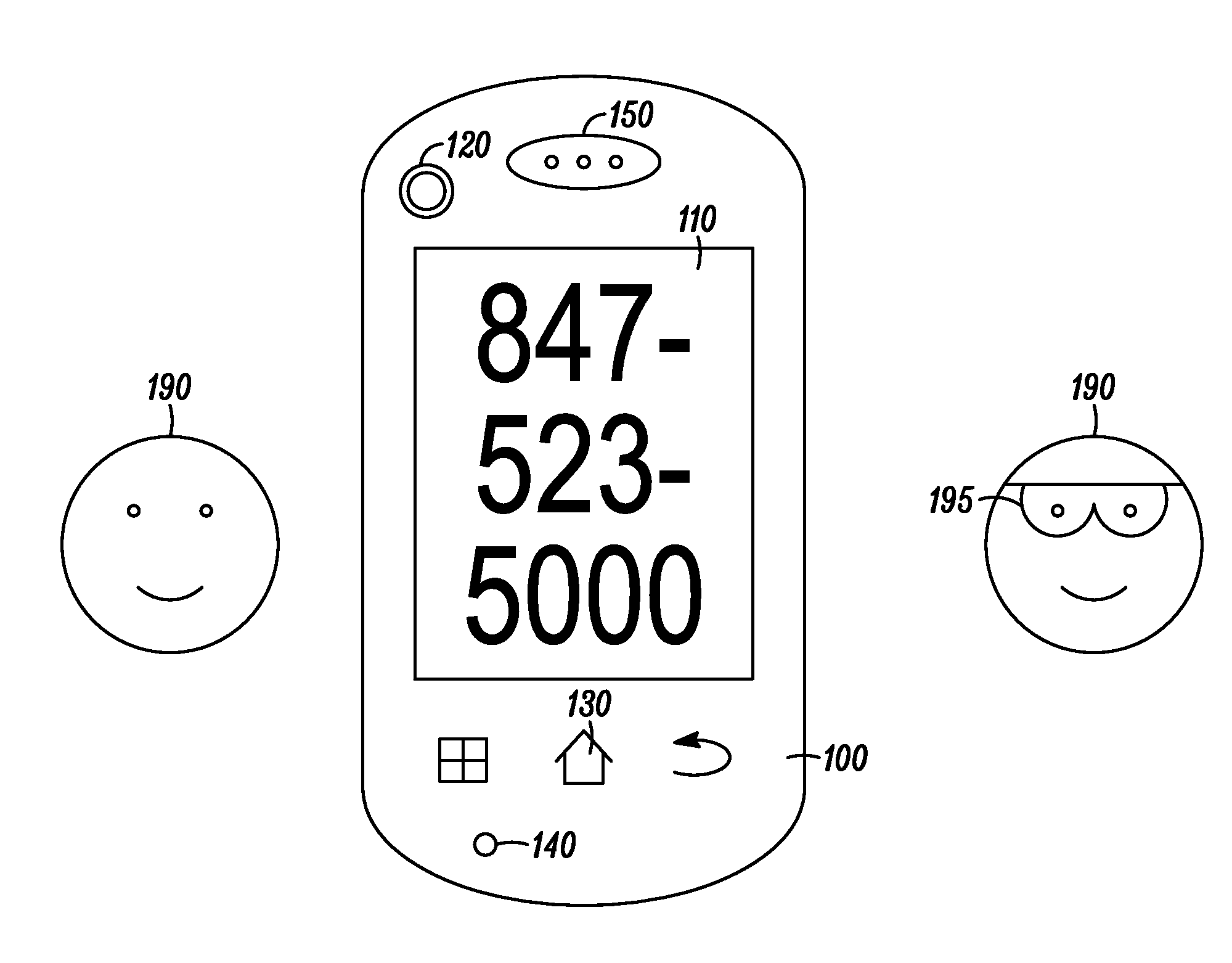

Status: Inactive | Publication: US20110149059A1 | Tags: Digital data processing details, Character and pattern recognition, Key pressing, Via device

A method and device for visual compensation captures an image using an imager, detects whether eyeglasses are present in the image, and sets an electronic visual display to a larger font size if eyeglasses are not detected. If eyeglasses are detected, the display is set to a normal font size. The method and device can be triggered, for example, by an incoming call or message, a touch-screen activation, a key press, or a sensed motion of the device. The method can be repeated from time to time to detect whether the user has put on (or taken off) eyeglasses since the first image capture. The method and device compensate for users with presbyopia (and some other types of visual impairment) who wear glasses intermittently.

Owner:GOOGLE TECH HLDG LLC
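
The decision rule in this abstract is simple enough to state directly in code. The hooks `capture_image`, `detect_eyeglasses` and `set_font`, and the 12 pt and 18 pt sizes, are hypothetical placeholders for the imager, the eyeglasses detector and the display settings.

```python
from typing import Callable

NORMAL_FONT_PT = 12  # hypothetical "normal" size
LARGE_FONT_PT = 18   # hypothetical "larger" size


def choose_font_size(eyeglasses_detected: bool) -> int:
    """Normal font when eyeglasses are seen, larger font when they are not."""
    return NORMAL_FONT_PT if eyeglasses_detected else LARGE_FONT_PT


def on_trigger(capture_image: Callable[[], object],
               detect_eyeglasses: Callable[[object], bool],
               set_font: Callable[[int], None]) -> int:
    """Run once per trigger event (incoming call or message, touch, key press, sensed motion)."""
    image = capture_image()
    size = choose_font_size(detect_eyeglasses(image))
    set_font(size)
    return size
```

Calling `on_trigger` again on later events re-checks for eyeglasses, which covers the case where the user puts glasses on or takes them off after the first capture.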

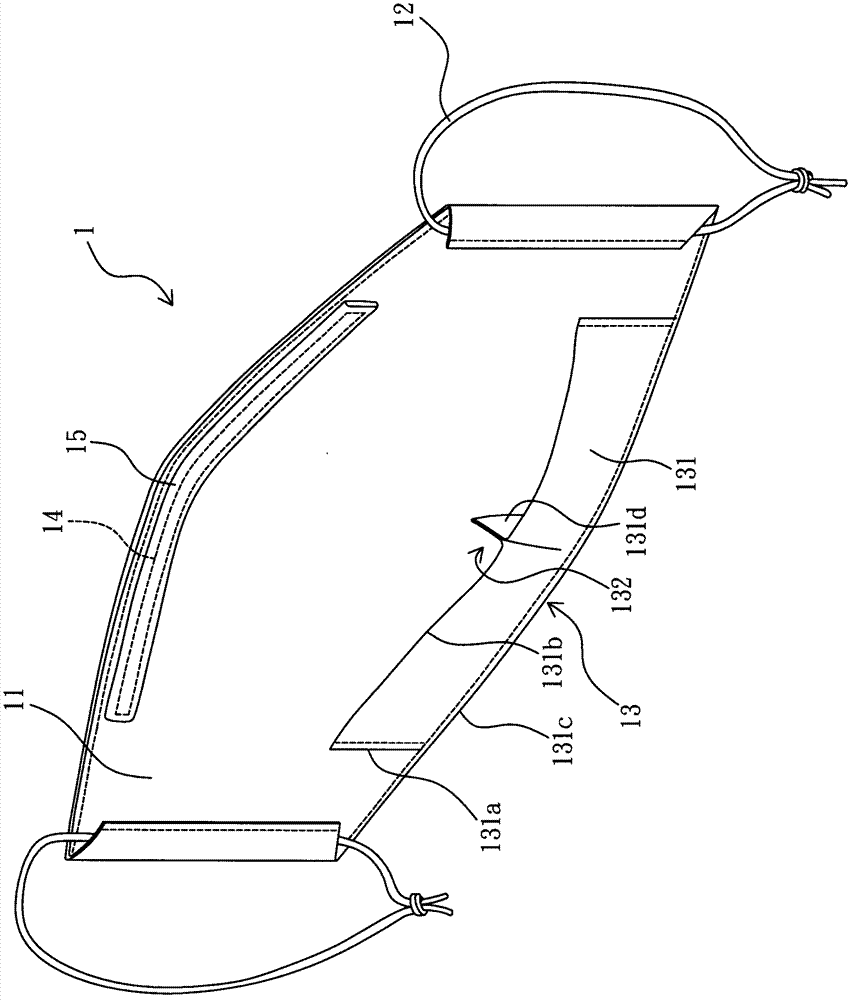

Antifog mask

Status: Inactive | Publication: CN102948934A | Tags: Improve the defect of unclear line of sight, Protective garment, Respirator, Engineering

The invention discloses an antifog mask consisting of a mask body and an exhaust structure arranged on the lower part of the inner surface of the mask body. Tying cords are provided on the two opposite side edges of the mask body. The exhaust structure is a strip-shaped pushing contact sheet whose two ends are fixed to the two lower sides or ends of the inner surface of the mask body; the top edge of the sheet has at least one bevel, so the top edge is shorter than the bottom edge and a through hole is formed between the sheet and the lower part of the inner surface of the mask body. In use, air exhaled by a user who wears glasses is vented out through the through hole at the bottom edge of the mask body's inner surface, overcoming the blurred vision caused by fogged glasses.

Owner:陈丽芬 (Chen Lifen) +1

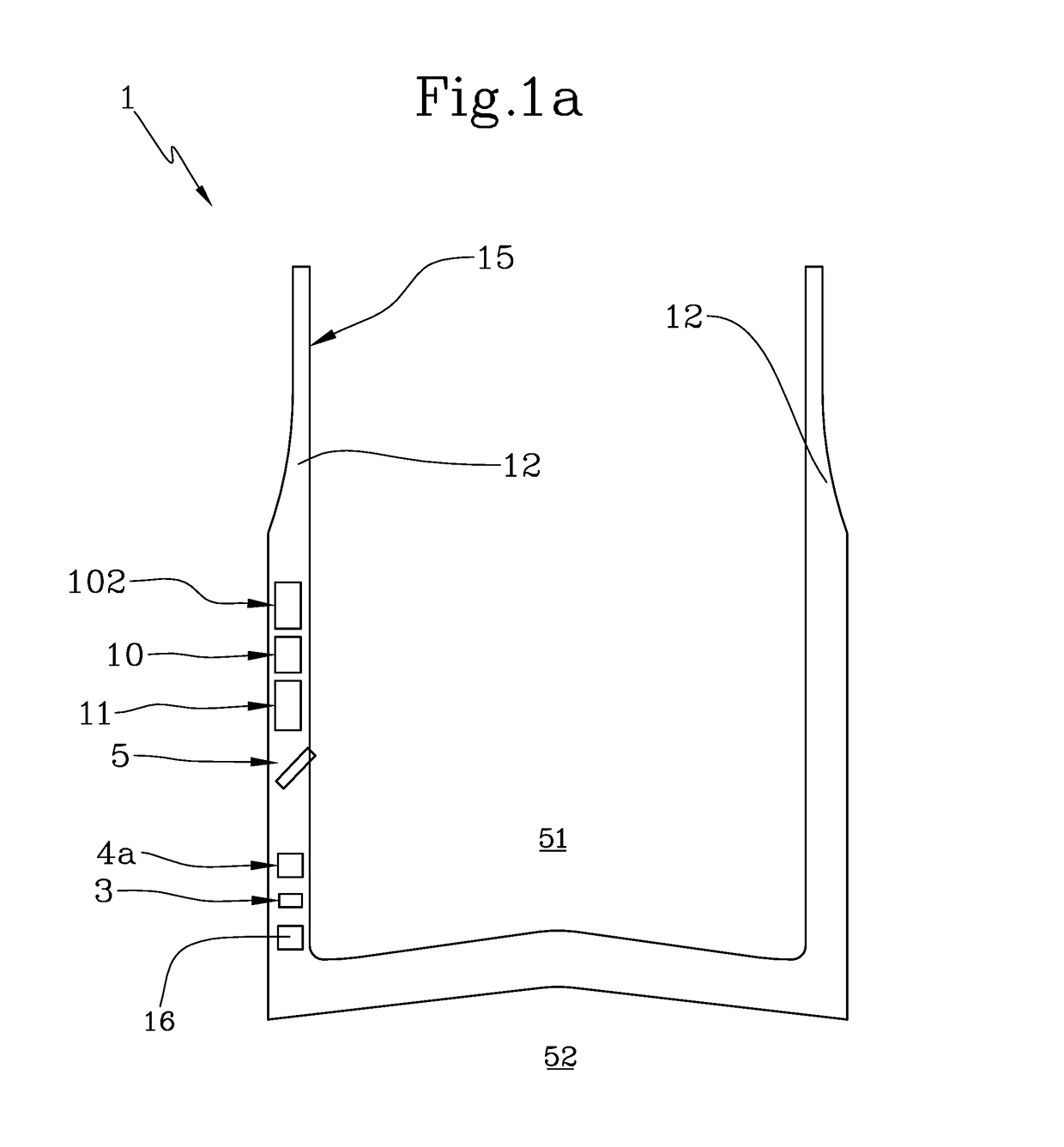

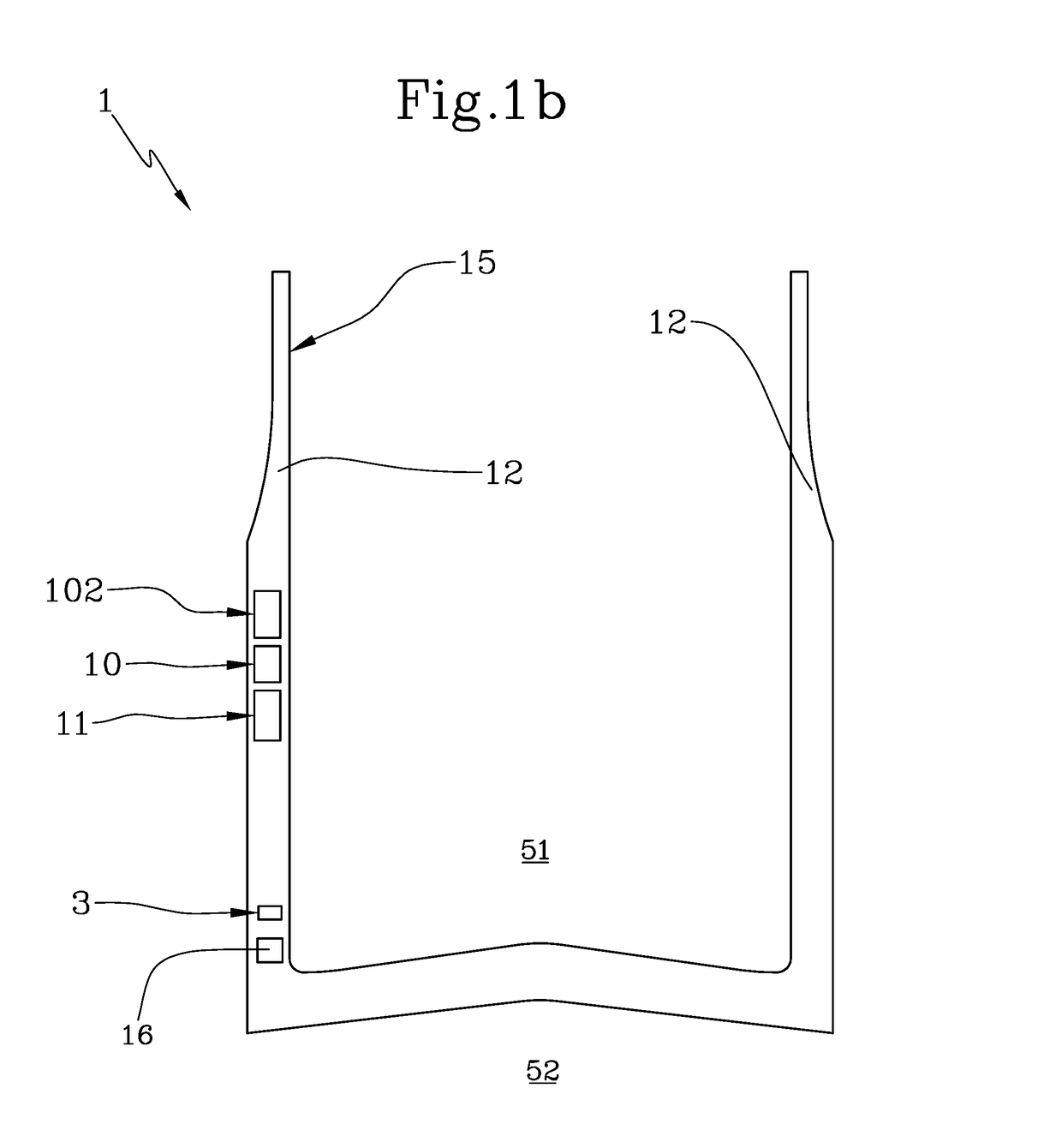

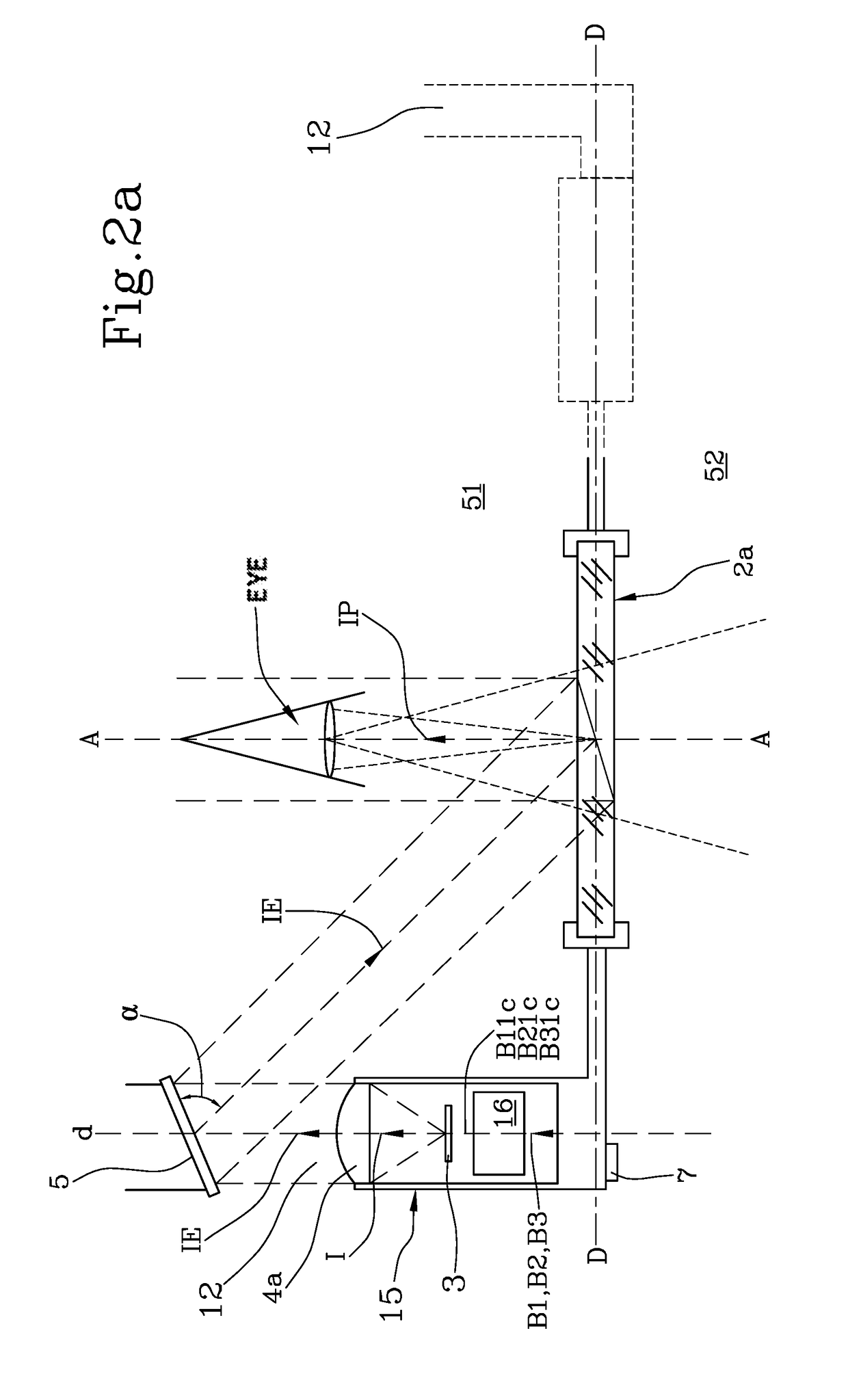

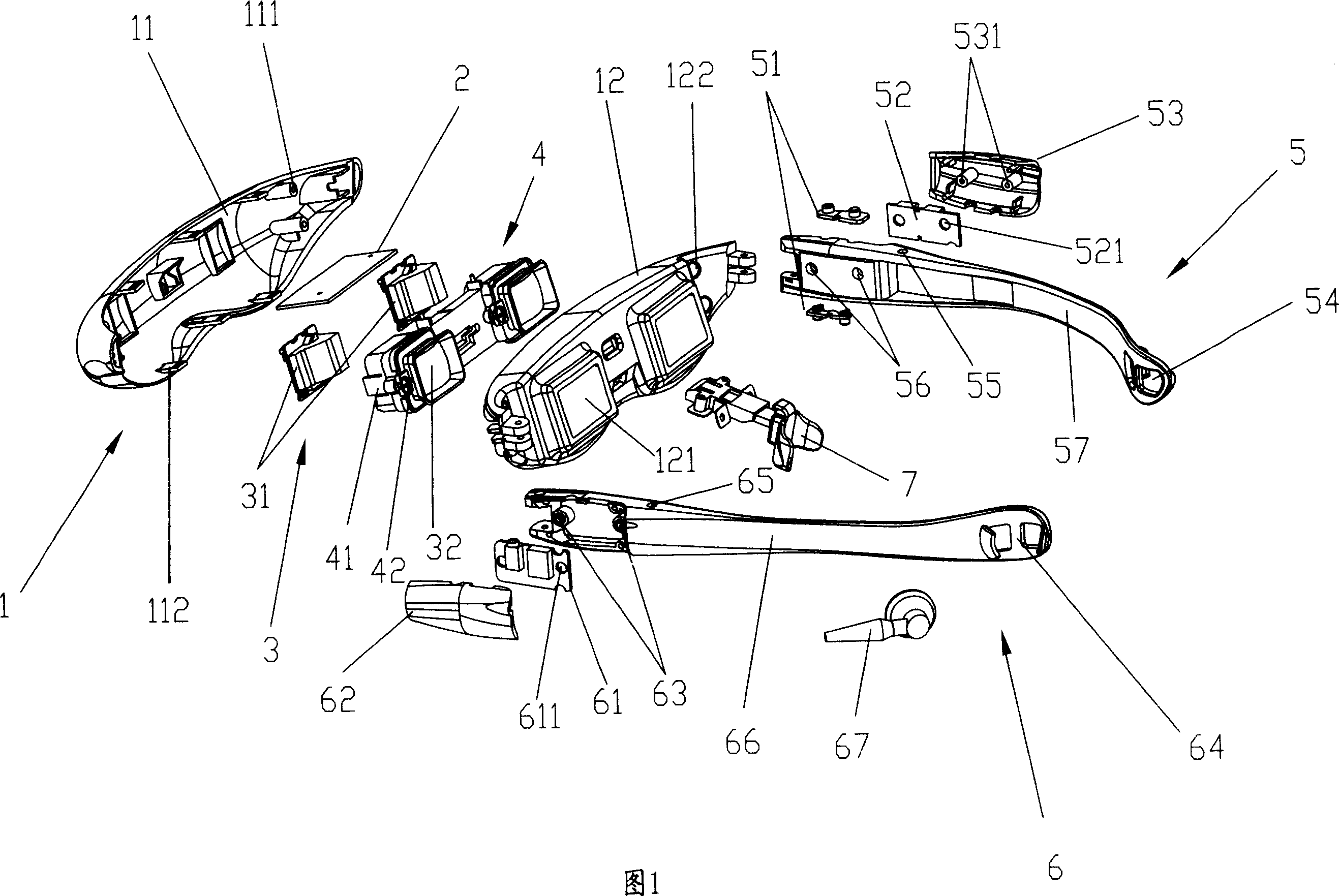

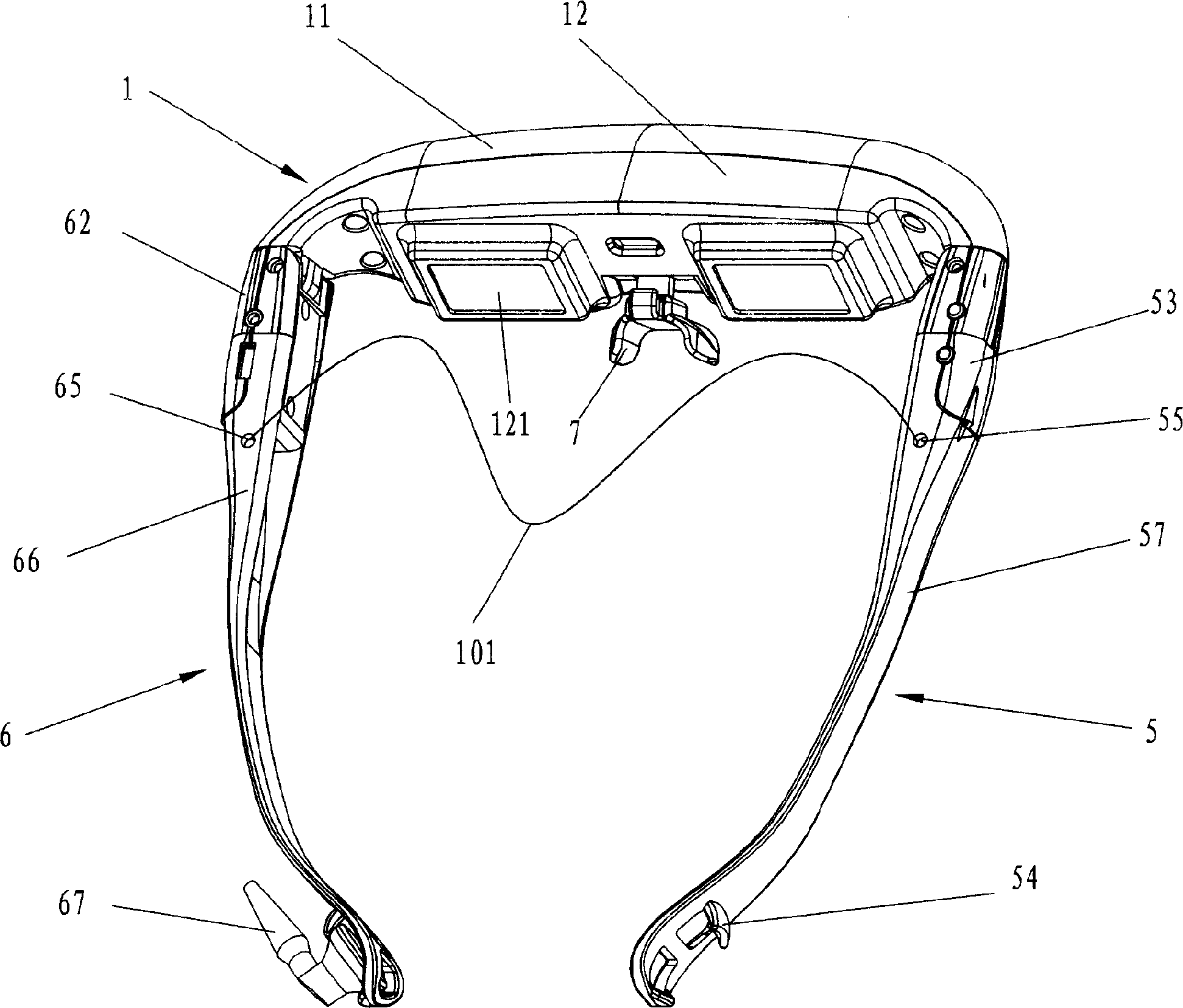

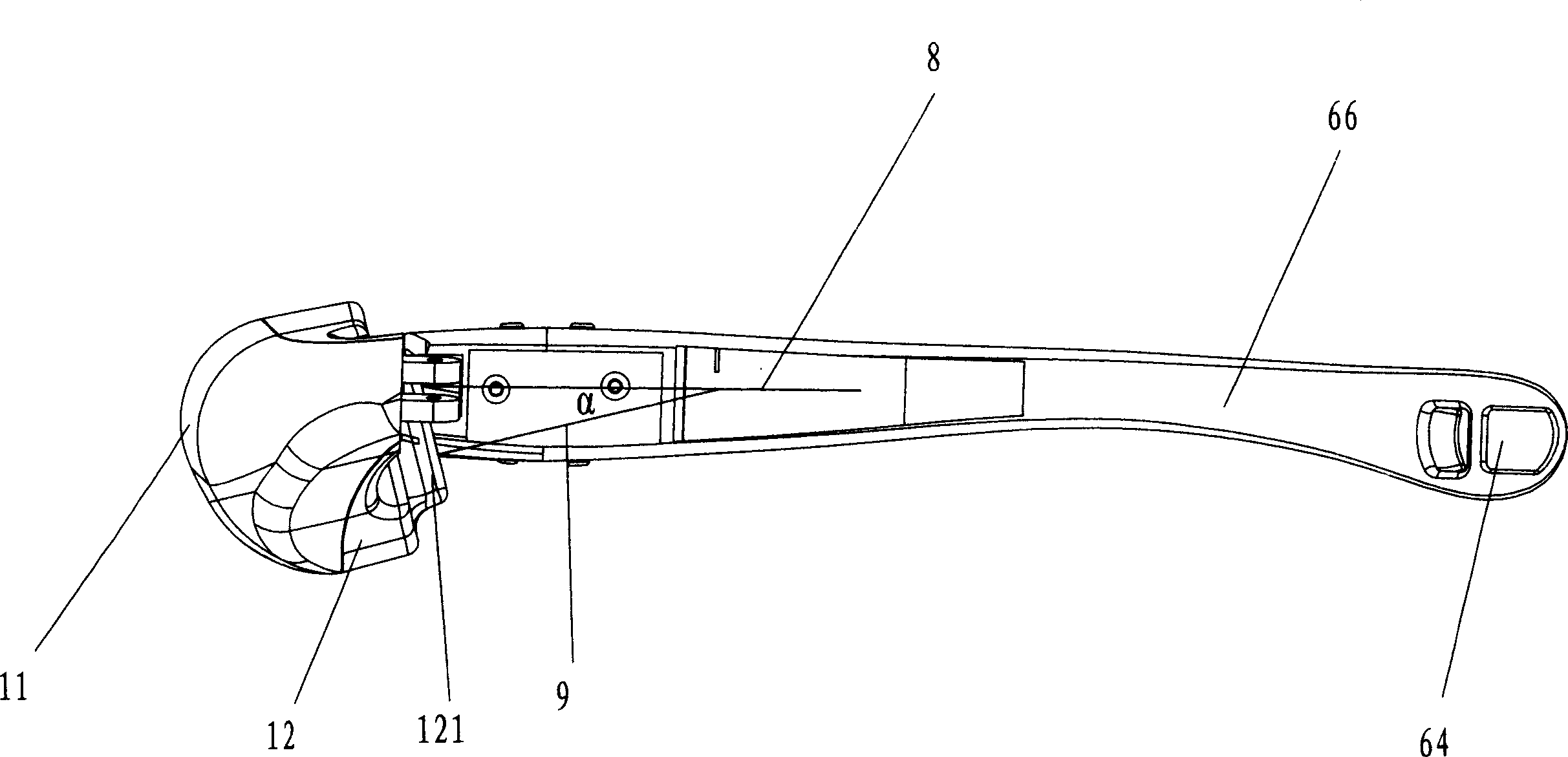

Method and device for preventing eye tiredness in wearing head-wearing glasses display

Status: Inactive | Publication: CN1908729A | Tags: Does not cause eye fatigue, Eliminate or reduce stress, Optical elements, Glasses type, Nose

The disclosed method for preventing eye fatigue when wearing a glasses-type display comprises keeping the top edge of the system image level with or below the user's horizontal line of sight, and keeping the included angle α between the horizontal line of sight and the image normal within -25° to -10°. The device comprises: a body 1 with front and back shells 11 and 12; left and right lens roots 6 and 5; a glasses frame 4; a PCB 2; an image display module 3; a glasses interface 61 connecting the input end of PCB 2 to the external signal; an image wafer 3 connected to the output of 61; a window plane 121 on the back of shell 12; and a nose frame 7. The invention benefits the user's health.

Owner:深圳市亿特联合显示技术有限公司 (Shenzhen Yite United Display Technology Co., Ltd.)
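
The two geometric conditions in this method can be expressed as a layout check. The sign convention for α (negative when the image normal tilts downward from the horizontal line of sight) and the function names are assumptions; the -25° to -10° range comes from the abstract.

```python
import math

ALPHA_MIN_DEG = -25.0
ALPHA_MAX_DEG = -10.0


def angle_deg(normal_forward: float, normal_up: float) -> float:
    """Signed angle (deg) between the horizontal sight line and the image normal,
    given the normal's forward and upward components; negative = tilted downward (assumed)."""
    return math.degrees(math.atan2(normal_up, normal_forward))


def layout_ok(top_edge_elevation_deg: float, alpha_deg: float) -> bool:
    """Both conditions: the image top edge is level with or below the horizontal
    line of sight, and alpha lies within [-25, -10] degrees."""
    return top_edge_elevation_deg <= 0.0 and ALPHA_MIN_DEG <= alpha_deg <= ALPHA_MAX_DEG
```

A display whose normal is tilted 15° downward and whose top edge sits at eye level passes; raising the top edge above the sight line, or tilting only 5°, fails.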

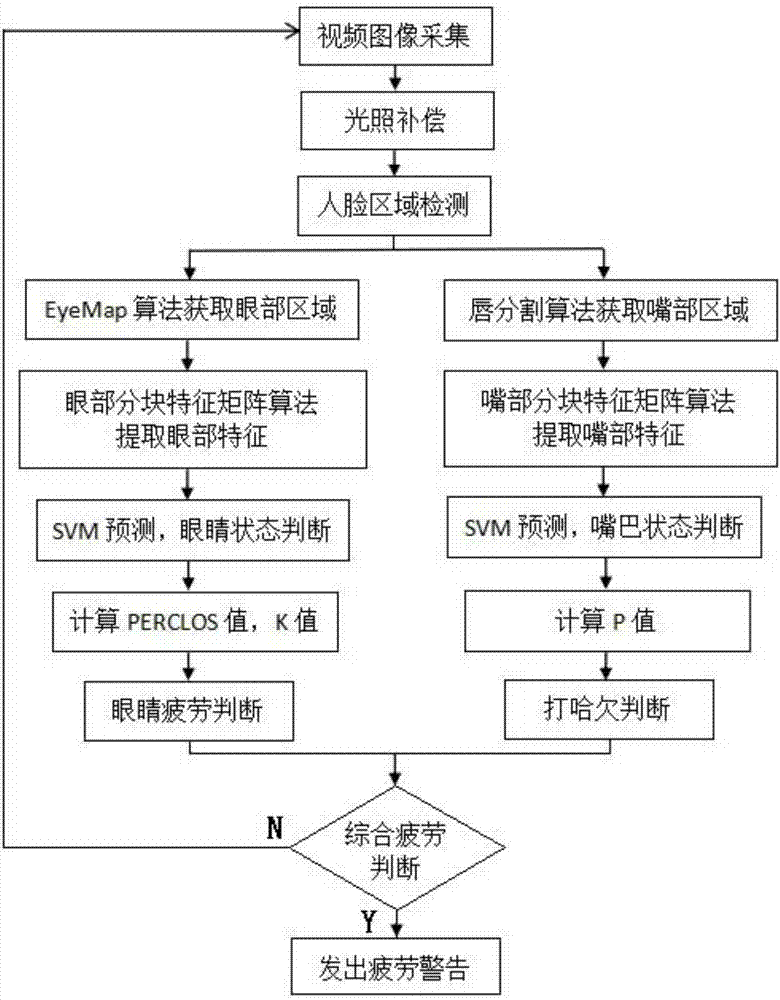

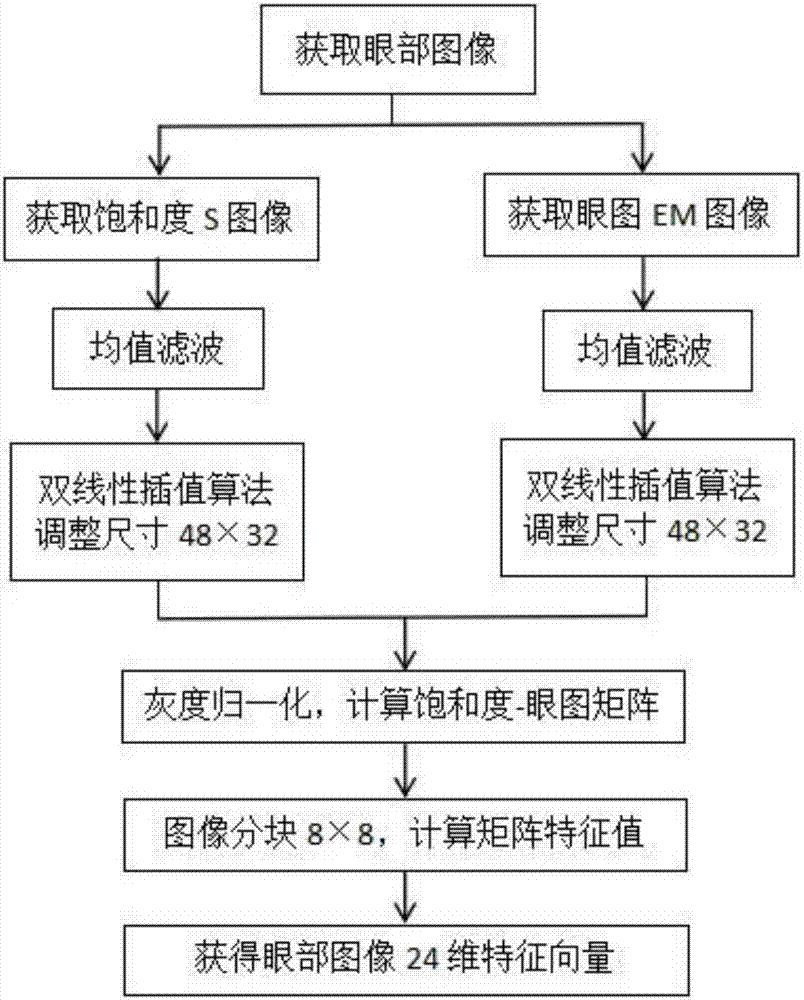

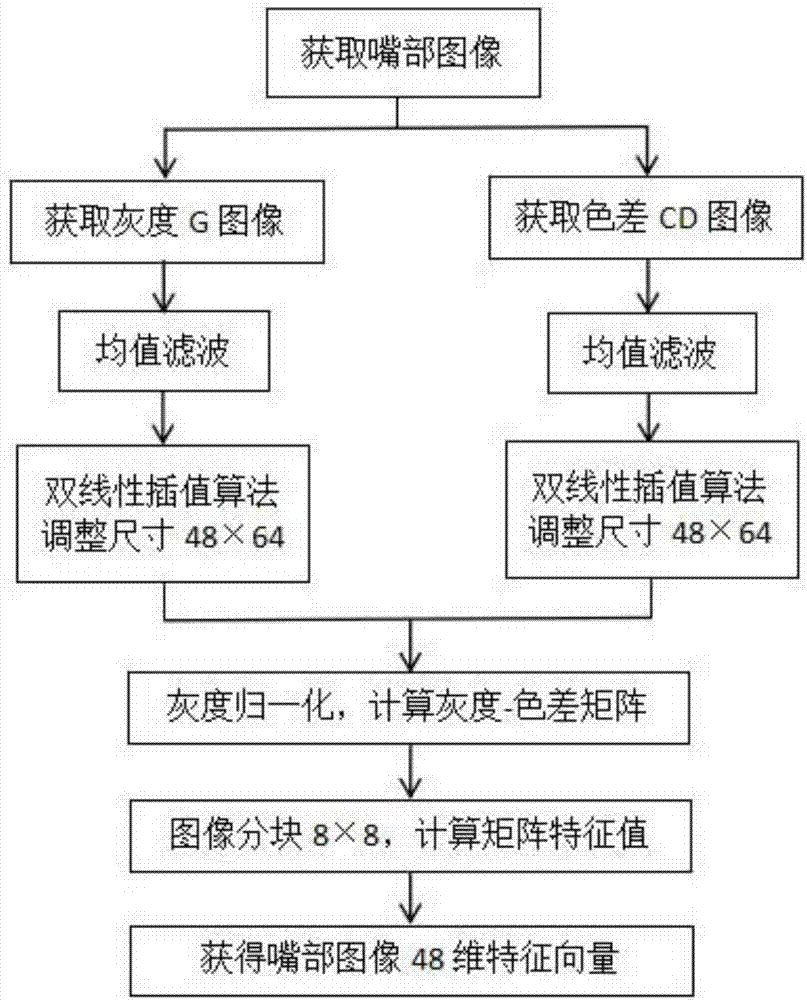

Fatigue state detection method based on sub-block characteristic matrix algorithm and SVM (support vector machine)

Status: Active | Publication: CN107578008A | Tags: High similarity, Yawning state detection is accurate, Character and pattern recognition, Support vector machine, Small sample

The invention discloses a fatigue state detection method based on a sub-block characteristic matrix algorithm and an SVM (support vector machine), in the technical field of image processing and pattern recognition. The method judges from facial features whether a driver is fatigued. First, a video image of the driver is acquired, and illumination compensation and face-area detection are performed; second, eye and mouth areas are detected within the face area. Features of the eye image are extracted with an eye sub-block characteristic matrix algorithm, which reduces the influence of lighting conditions and glasses on detection; features of the mouth image are extracted with a mouth sub-block characteristic matrix algorithm, which reduces interference from visible teeth and moustaches. The extracted features are classified by an SVM algorithm, which improves reliability when the training set is small. By analyzing fatigue characteristics of the eyes and mouth, the method issues a warning when the driver is fatigued, which can reduce traffic accidents.

Owner:JILIN UNIV

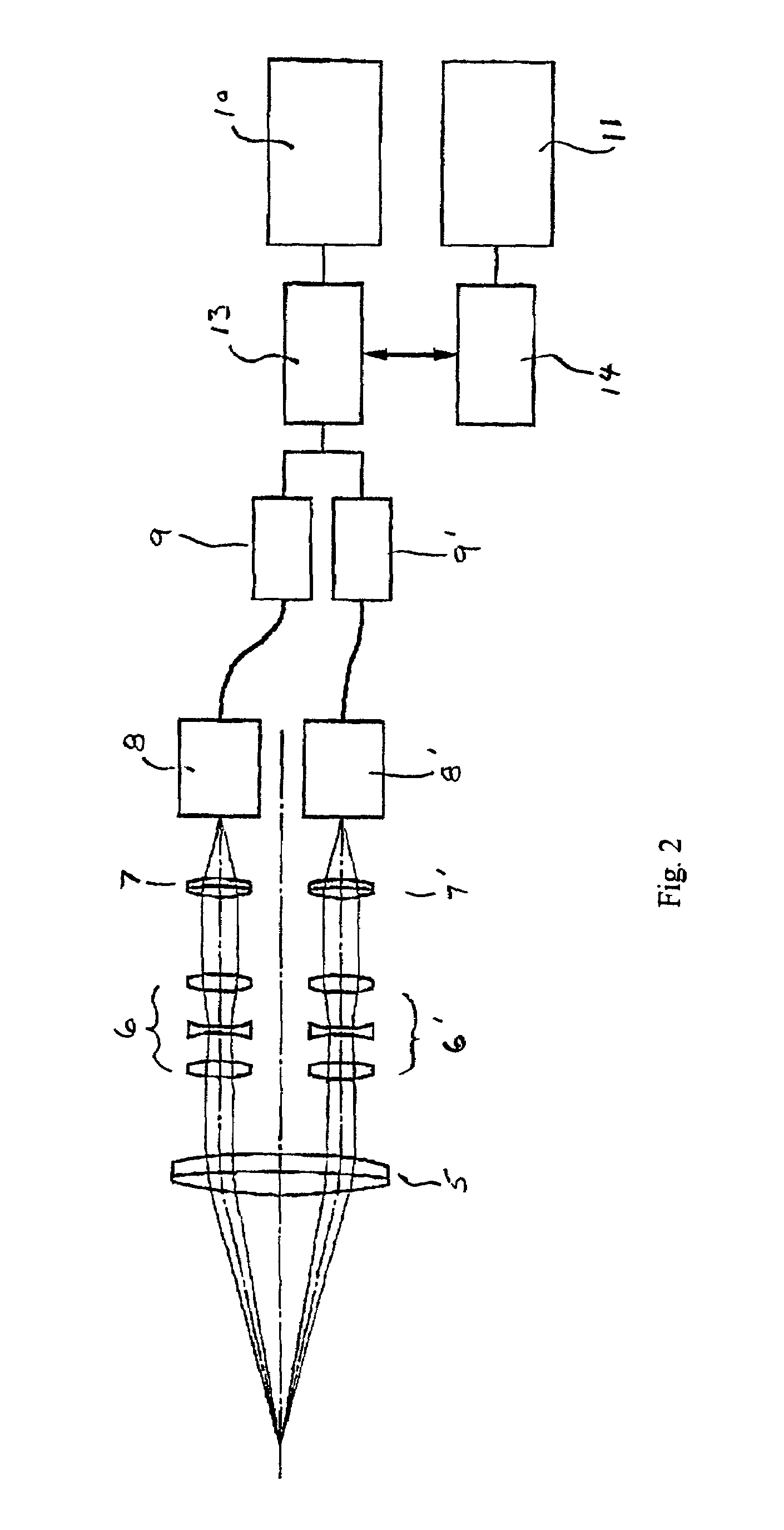

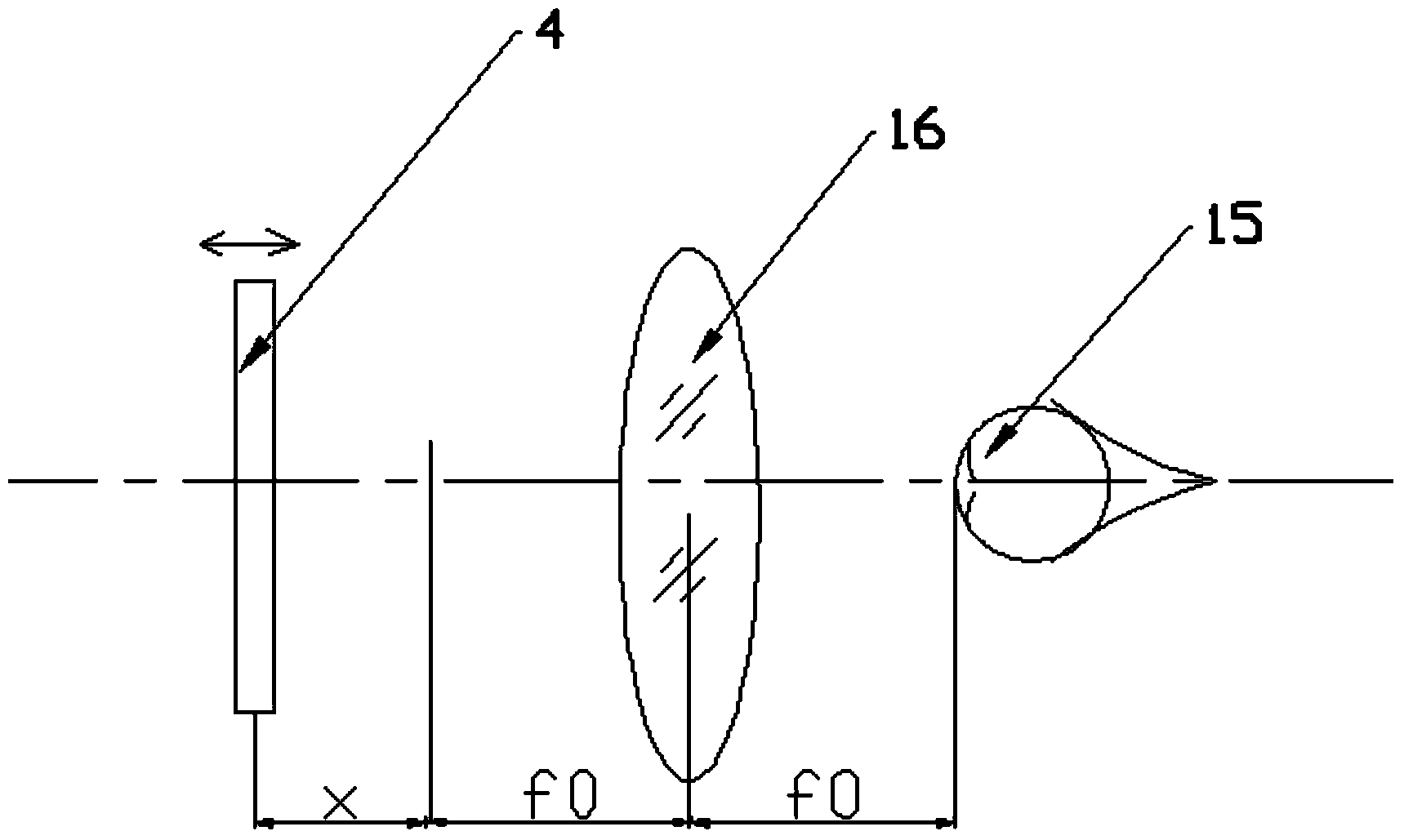

Handheld vision detecting device and vision detecting method

The invention discloses a handheld vision detecting device that comprises at least an imaging lens set, composed of at least one imaging lens, and a vision chart. The cornea of the person being tested is located at the focal point on one side of the imaging lens set, the center of the vision chart is arranged on the other side, and the chart can move back and forth along the light path. The invention further discloses a vision detecting method whose steps measure various aspects of vision with the handheld device without the person wearing glasses. The device can measure naked-eye vision, measure corrected vision, measure the prescription of the glasses needed when corrected vision reaches 1.0, detect low and weak vision, and detect astigmatism. With the device and method, users can test their vision anytime and anywhere, the results are accurate, and operation is simple and convenient.

Owner:SHENZHEN CERTAINN TECH CO LTD
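
Placing the cornea at the focal point of the imaging lens and moving the chart along the light path is the arrangement of a Badal optometer, in which the vergence reaching the eye is linear in the chart's displacement: V = -P²·Δx for a shift Δx from the front focal point toward the lens. The abstract does not state this relation; the sketch below checks it against exact thin-lens vergence, and the 10 D lens power is hypothetical.

```python
def vergence_at_eye(lens_power_d: float, chart_distance_m: float, eye_distance_m: float) -> float:
    """Thin-lens vergence (diopters) reaching an eye `eye_distance_m` behind the lens
    from a chart `chart_distance_m` in front of it (negative = diverging light)."""
    leaving = lens_power_d - 1.0 / chart_distance_m    # vergence just after the lens
    return leaving / (1.0 - eye_distance_m * leaving)  # transfer across the lens-to-eye gap


def badal_vergence_d(lens_power_d: float, chart_shift_m: float) -> float:
    """Closed form when the eye sits at the back focal point and the chart moves
    `chart_shift_m` from the front focal point toward the lens: V = -P**2 * shift."""
    return -(lens_power_d ** 2) * chart_shift_m


def shift_for_error(lens_power_d: float, refractive_error_d: float) -> float:
    """Chart shift (m) at which a relaxed eye with this spherical error sees the chart sharply;
    a myope (negative error) needs the chart moved toward the lens."""
    return -refractive_error_d / lens_power_d ** 2
```

With a 10 D lens, a -2.00 D myope sees the chart sharply when it is moved 20 mm toward the lens, and a -4.00 D myope at 40 mm. Because the scale is linear, a chart position can be read directly as a prescription.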

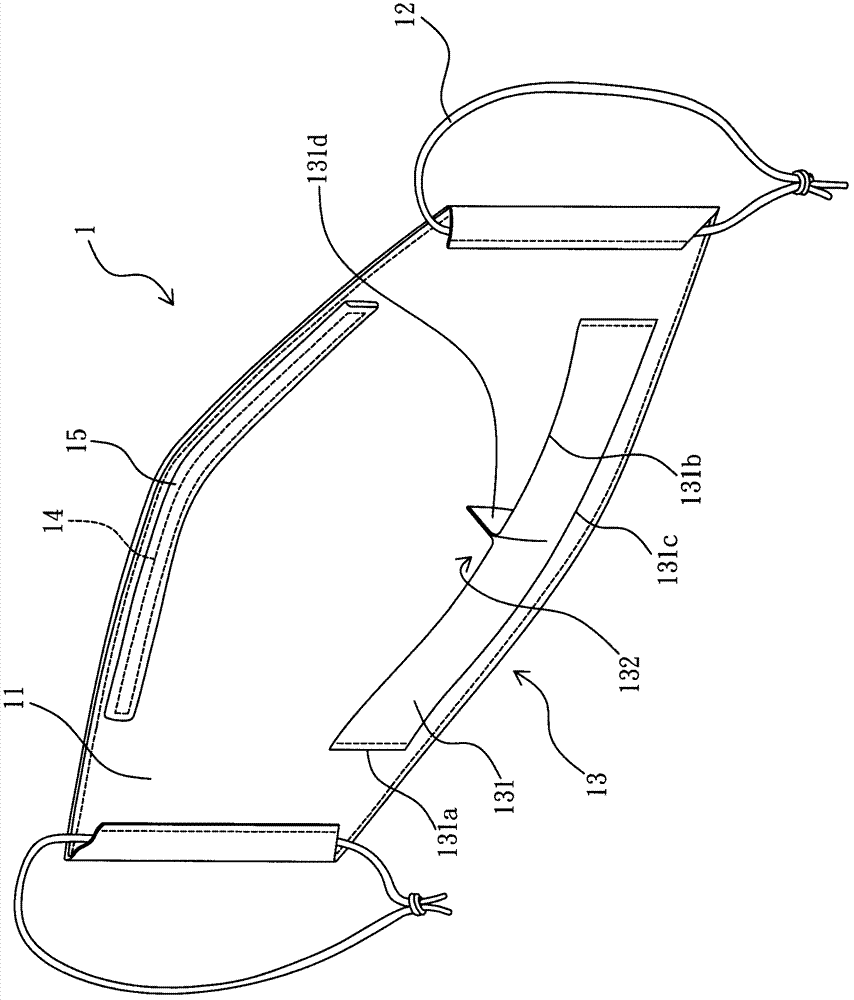

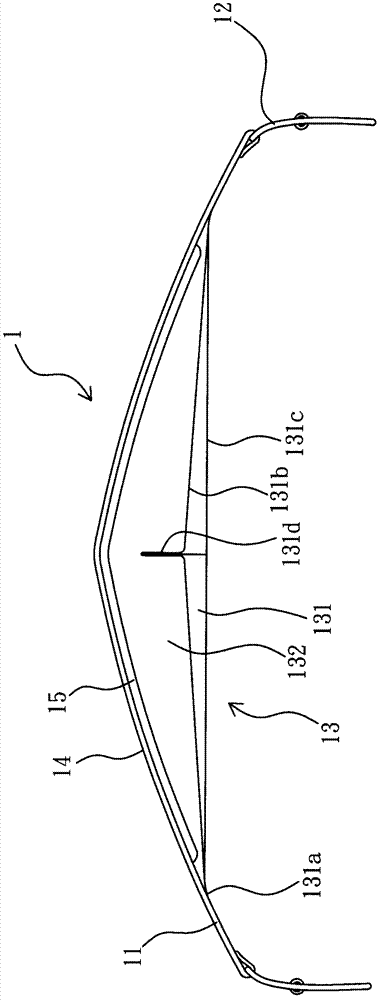

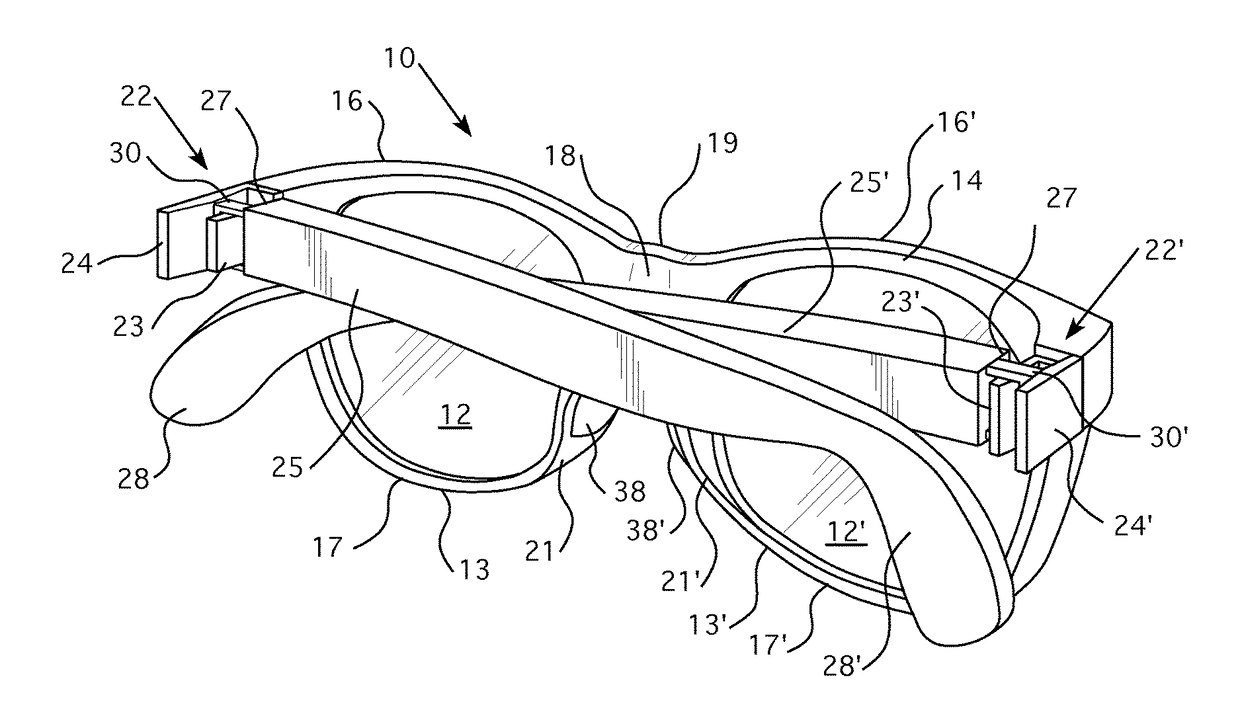

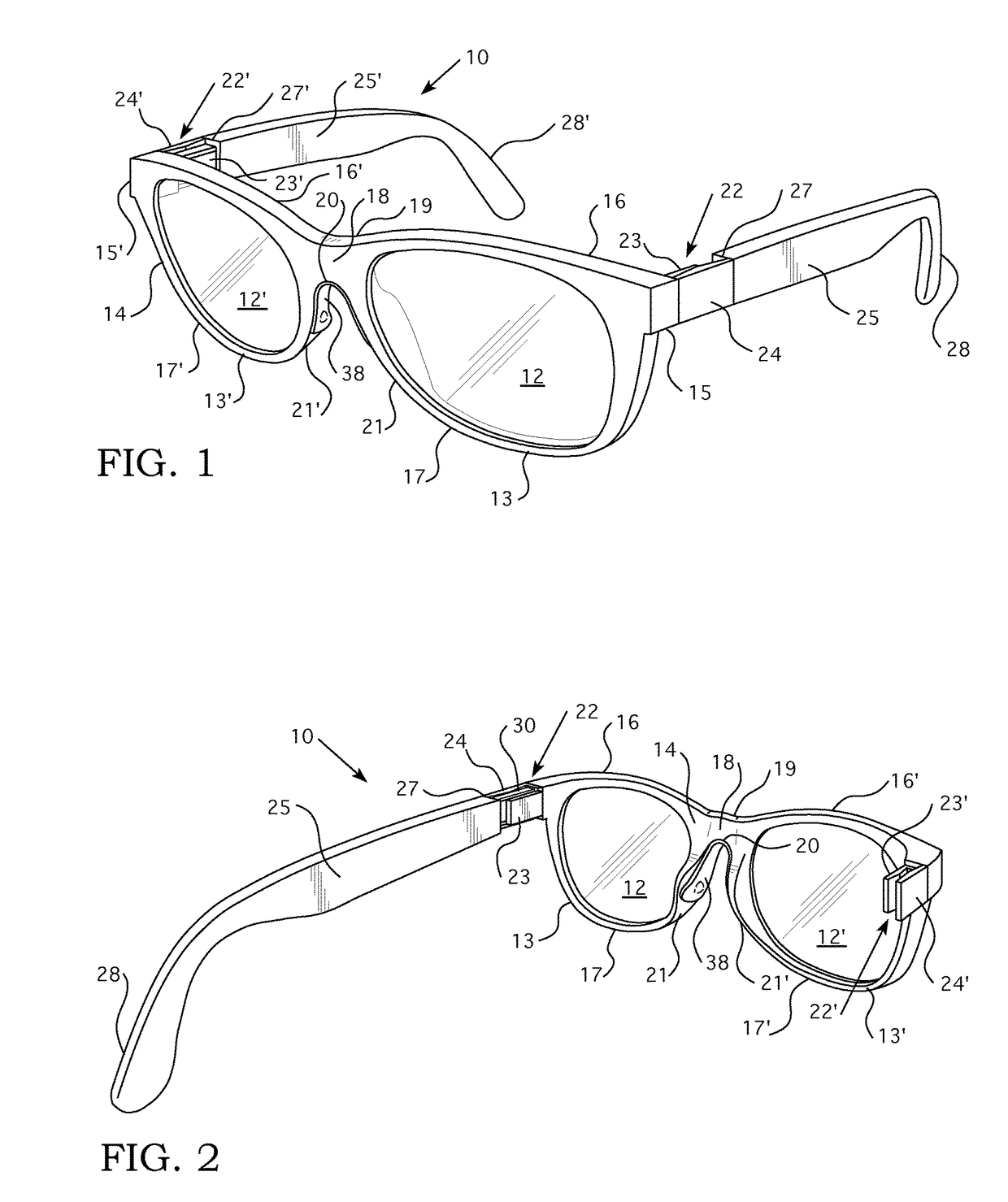

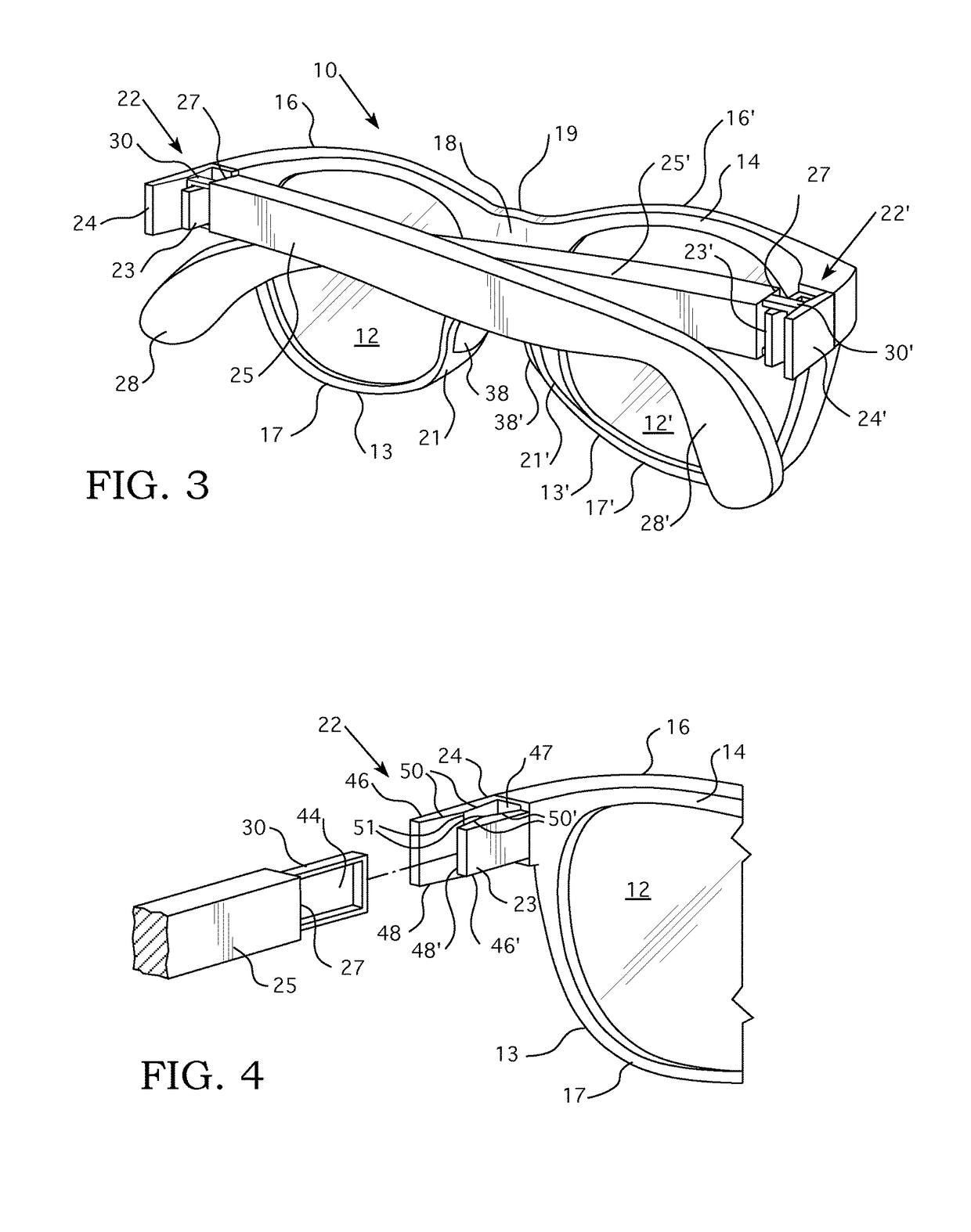

Eyeglasses with Detachable Temples and Nose Grip and Method of Use

Status: Active | Publication: US20170293157A1 | Tags: Convenient length, Increase width, Spectacles/goggles, Non-optical parts, Nose, Engineering

The present invention provides an eyeglasses system and method of use comprising a frame, a temple attachment assembly positioned on the ends of the frame, detachable temples having a hollow member extending therefrom for attachment to the temple attachment assembly, and a bridge nose grip assembly so as to allow a wearer to detach a temple from one side of the frame and to wear the eyeglasses comfortably, securely and without movement of the eyeglasses while reclining on the same side as the detached temple.

Owner:ARDER PAMELA

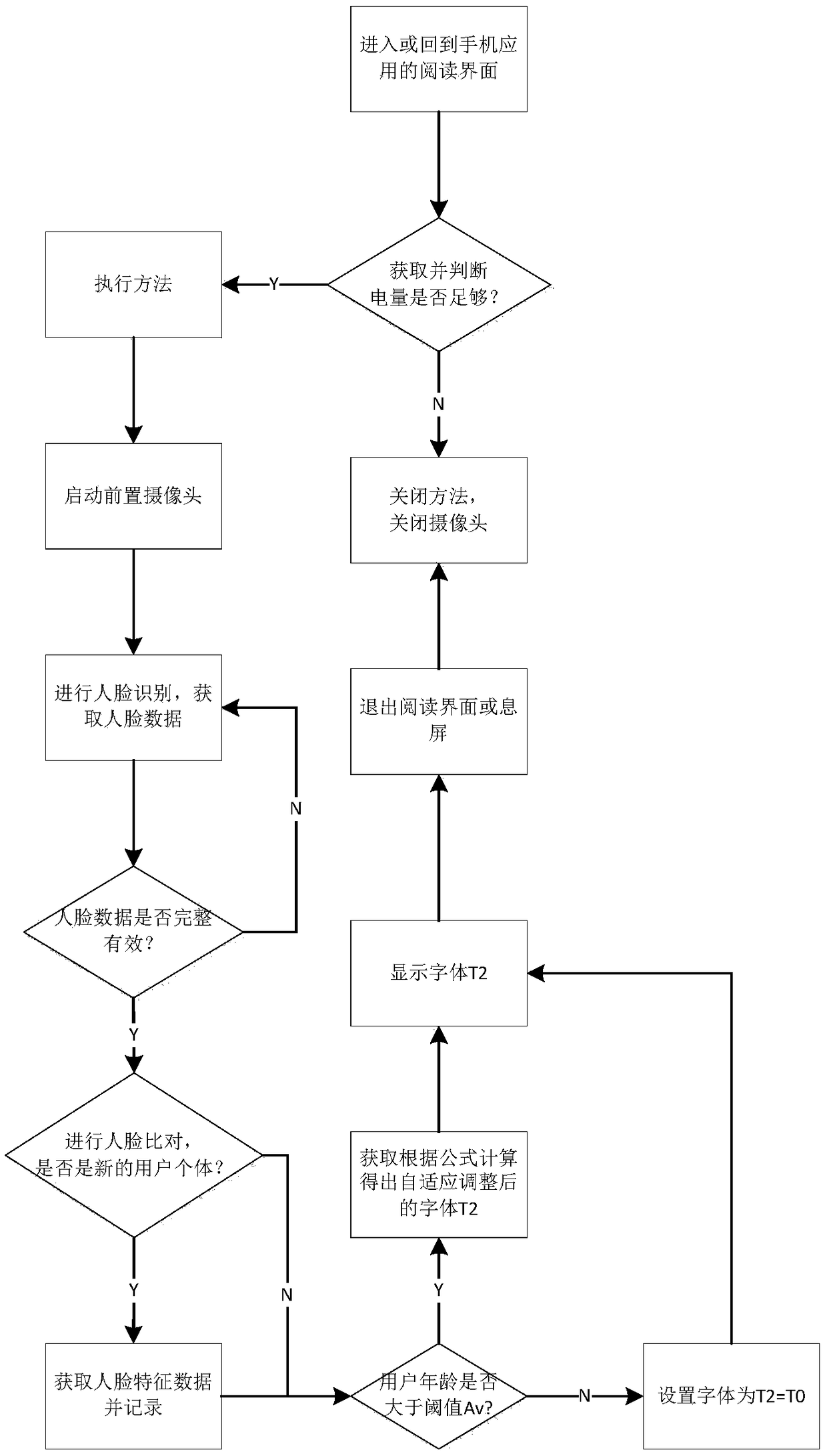

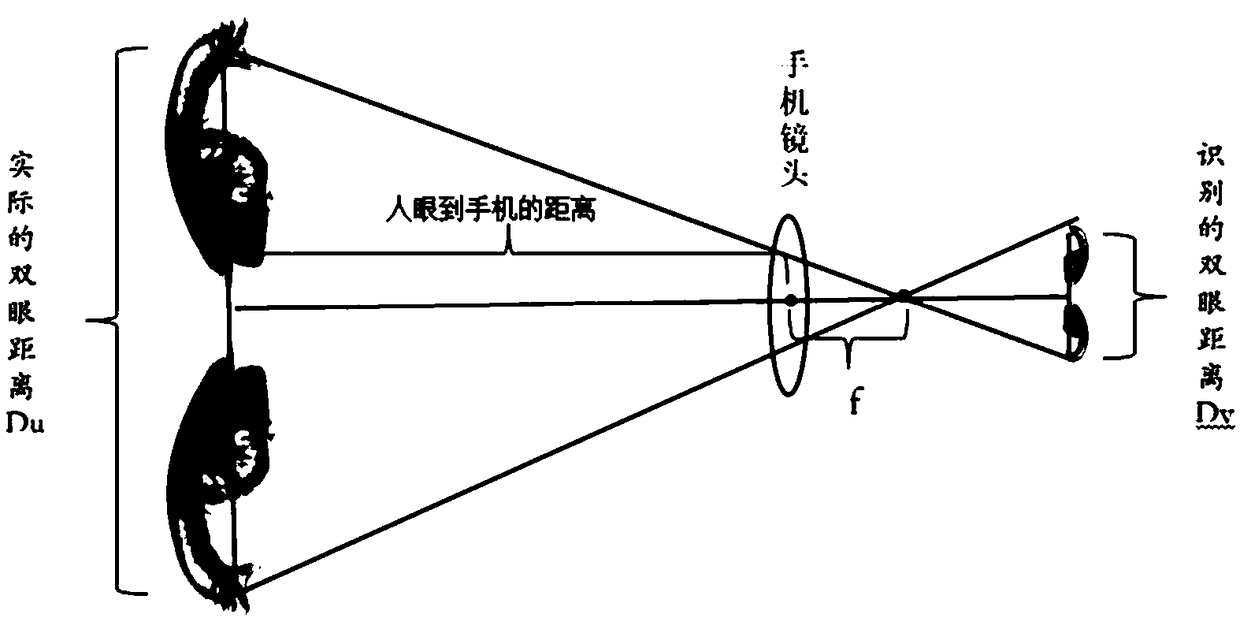

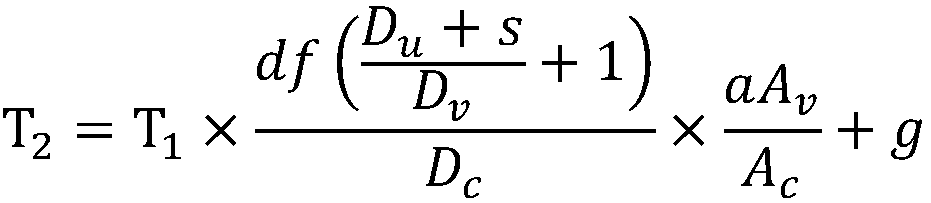

An adaptive font adjustment method and a device for mobile phone text reading

Status: Active | Publication: CN108989571A | Tags: Automatic instant adjustment, Improve applicability, Character and pattern recognition, Natural language data processing, Data acquisition, Postural orientation

The invention discloses an adaptive font adjustment method and device for mobile phone text reading. The method performs face recognition on the user through the front-facing camera and tracks and records the latest facial information in real time, such as binocular distance, age, gender, whether the user wears glasses and other facial feature data. It then calculates the actual distance between the screen and the user's eyes and finally, based on that distance and other factors, adjusts the display font in real time to one the current user (especially an elderly user) can read. Compared with the prior art, the invention uses face recognition technology and the principle of optical imaging to collect and compute data automatically. Apart from the phone camera, no other ranging components are needed, so the method and device suit most mobile phones currently on the market. The user does not need to enter or preset any values, and the font display is adjusted automatically in real time as the user's posture changes or a different user picks up the phone within one method cycle.

Owner:BINHAI IND TECH RES INST OF ZHEJIANG UNIV
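
The "principle of optical imaging" used to obtain the screen-to-eye distance can be read as the pinhole-camera relation distance = f·(real size)/(image size), with the binocular distance as the known size. The 63 mm average interpupillary distance, the focal length in pixels, the 300 mm reference distance and the 1.25 boost for older readers without glasses are all assumptions for illustration.

```python
import math

ASSUMED_IPD_MM = 63.0        # assumed population-average interpupillary distance
REFERENCE_DISTANCE_MM = 300.0
BASE_FONT_PT = 14.0


def eye_distance_mm(focal_px: float, left_eye_px, right_eye_px,
                    ipd_mm: float = ASSUMED_IPD_MM) -> float:
    """Pinhole-camera estimate: distance = focal length * real size / image size."""
    ipd_px = math.dist(left_eye_px, right_eye_px)
    if ipd_px == 0:
        raise ValueError("eyes not resolved")
    return focal_px * ipd_mm / ipd_px


def font_pt(distance_mm: float, age: int, wears_glasses: bool) -> float:
    """Scale the font with distance so its angular size stays roughly constant,
    with a hypothetical boost for older readers who are not wearing glasses."""
    size = BASE_FONT_PT * distance_mm / REFERENCE_DISTANCE_MM
    if age >= 60 and not wears_glasses:
        size *= 1.25
    return round(size, 1)
```

With a 600 px focal length and eyes detected 126 px apart, the estimate is 300 mm; at twice that distance a 70-year-old reader without glasses would get 35 pt instead of 14 pt.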

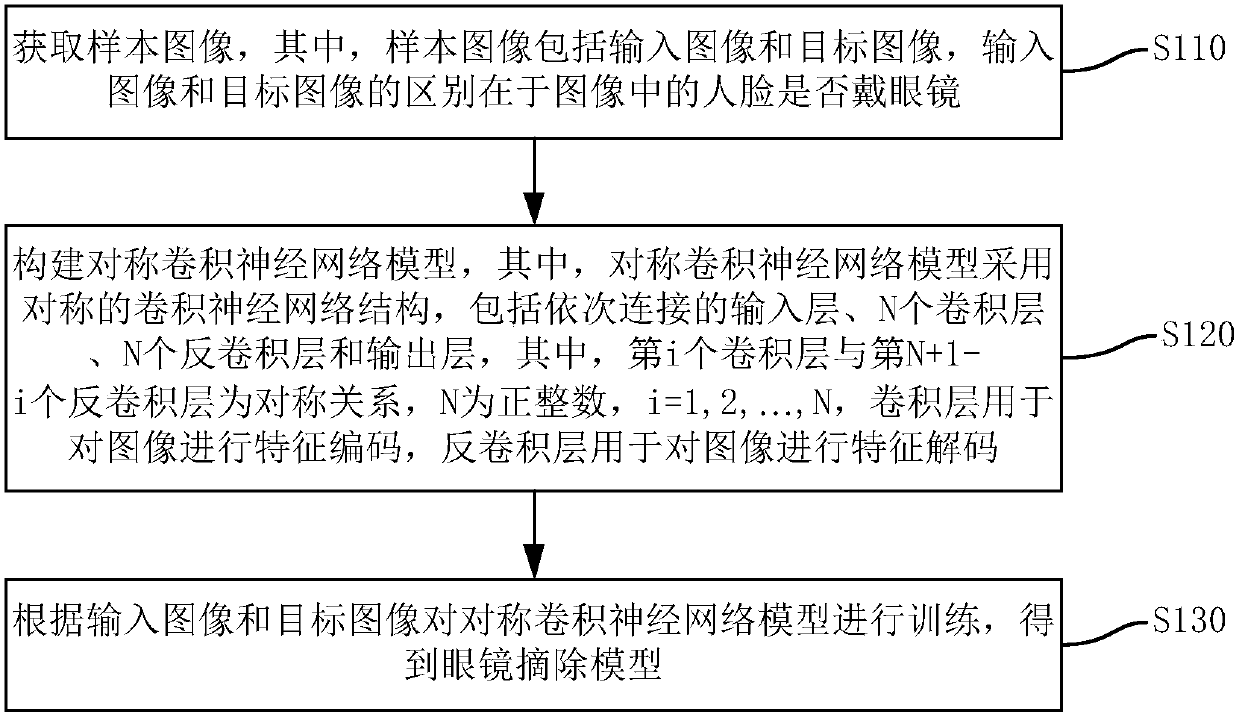

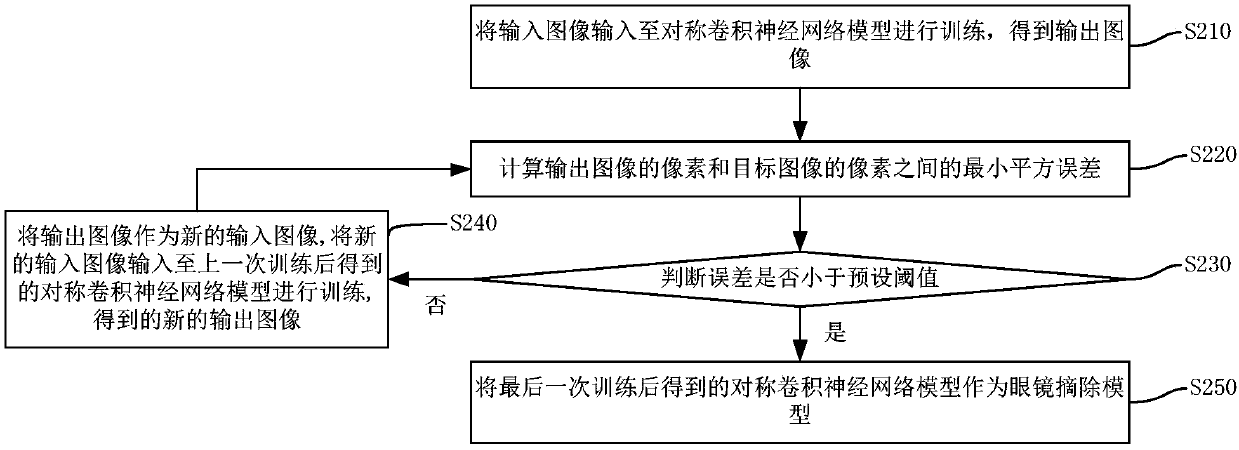

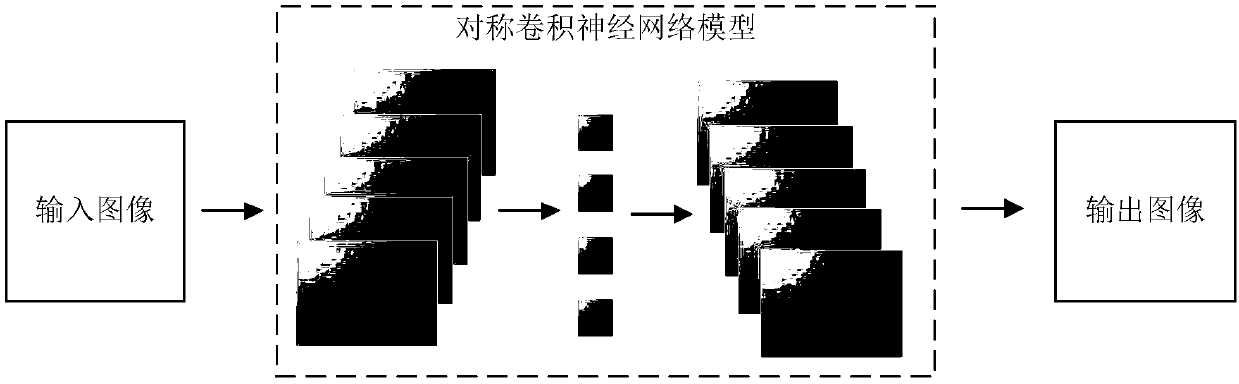

Glasses removal model training method, face recognition method, device and equipment

Status: Inactive | Publication: CN109934062A | Tags: Geometric image transformation, Character and pattern recognition, Feature coding, Eyewear

The invention discloses a training method for a glasses removal model, a face recognition method and a face recognition device. The training method comprises: acquiring a sample image consisting of an input image and a target image, which differ in whether the face in the image wears glasses (the input image shows a face wearing glasses, the target image a face not wearing glasses); constructing a symmetric convolutional neural network model comprising an input layer, N convolutional layers, N deconvolutional layers and an output layer connected in sequence, in which the ith convolutional layer and the (N+1-i)th deconvolutional layer are symmetric, the convolutional layers perform feature encoding on the image and the deconvolutional layers perform feature decoding; and training the model on the input and target images to obtain a glasses removal model. The method gives the trained model better performance.

Owner:BYD CO LTD
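
Pairing the ith convolutional layer with the (N+1-i)th deconvolutional layer means each decoder layer restores the spatial size that its mirror encoder layer removed. A shape-only sketch in plain Python (no deep-learning framework), assuming stride-2 layers; in a framework such as PyTorch these would typically be `Conv2d` and `ConvTranspose2d` layers, but the strides and sizes here are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class LayerShape:
    kind: str      # "conv" (encoder) or "deconv" (decoder)
    index: int     # 1-based position within its own stack
    in_size: int   # spatial size entering the layer
    out_size: int  # spatial size leaving the layer


def symmetric_stack(input_size: int, n: int, stride: int = 2) -> list[LayerShape]:
    """Spatial sizes through N stride-`stride` convolutions followed by N deconvolutions."""
    if input_size % (stride ** n):
        raise ValueError("input size must be divisible by stride ** n")
    layers, size = [], input_size
    for i in range(1, n + 1):  # encoder: each conv downsamples by `stride`
        layers.append(LayerShape("conv", i, size, size // stride))
        size //= stride
    for j in range(1, n + 1):  # decoder: each deconv upsamples by `stride`
        layers.append(LayerShape("deconv", j, size, size * stride))
        size *= stride
    return layers


def is_symmetric(layers: list[LayerShape], n: int) -> bool:
    """Conv i and deconv N+1-i mirror each other: the deconv undoes the conv's resize."""
    convs = {layer.index: layer for layer in layers if layer.kind == "conv"}
    deconvs = {layer.index: layer for layer in layers if layer.kind == "deconv"}
    return all(
        convs[i].in_size == deconvs[n + 1 - i].out_size
        and convs[i].out_size == deconvs[n + 1 - i].in_size
        for i in range(1, n + 1)
    )
```

For a 128-pixel input and N = 3, the sizes run 64, 32, 16 through the encoder and 32, 64, 128 through the decoder, so the output image matches the input in size, as a pixel-to-pixel glasses-removal target requires.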

Extended Wear Ophthalmic Lens

Status: Inactive | Publication: US20070105974A1 | Tags: Sufficient for corneal health, Substantial adverse impact on ocular health or consumer, Optical articles, Prosthesis, Extended wear contact lenses, Eye movement

An ophthalmic lens suited for extended-wear periods of at least one day on the eye without a clinically significant amount of corneal swelling and without substantial wearer discomfort. The lens has a balance of oxygen permeability and ion or water permeability, with the ion or water permeability being sufficient to provide good on-eye movement, so that good tear exchange occurs between the lens and the eye. A preferred lens is a copolymerization product of an oxyperm macromer and an ionoperm monomer. The invention encompasses extended-wear contact lenses that include a core having oxygen transmission and ion transmission pathways extending from the inner surface to the outer surface.

Owner:NOVARTIS AG

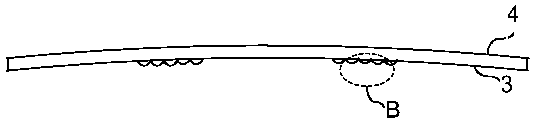

Flexible refractive film patch with microstructure

Status: Inactive | Publication: CN109633925A | Tags: Alleviate the effect of peripheral defocus, Various designs, Optical parts, Lens, Eye lens, Spectacle lenses

The invention relates to a flexible refractive film patch with a microstructure. Besides the performance of a conventional film patch, it has a refractive effect in a prescribed area. After being attached to the spectacle lens in a spectacle frame, the film maintains a good refractive correction effect in the central region of the lens and can also produce peripheral defocus, so it can alleviate the peripheral defocus effect on the retina of a person who wears glasses. The film patch can be applied to various spectacle lenses and is flexible and variable in shape, and the ring-zone distribution area of its microstructure can be designed in various forms to meet the correction needs of different groups.

Owner:WENZHOU MEDICAL UNIV

© 2025 PatSnap. All rights reserved.