Planetary landing collaborative navigation feature matching method

A feature matching technique for collaborative navigation, applied in the field of deep space exploration. It addresses the problems of failing to meet the real-time requirements of landing tasks, sensitivity to illumination changes in landing images, and poor matching robustness. The effect is high matching accuracy.

Embodiment Construction

[0067] In order to better illustrate the purpose and advantages of the present invention, the content of the invention will be further described below in conjunction with the accompanying drawings and examples.

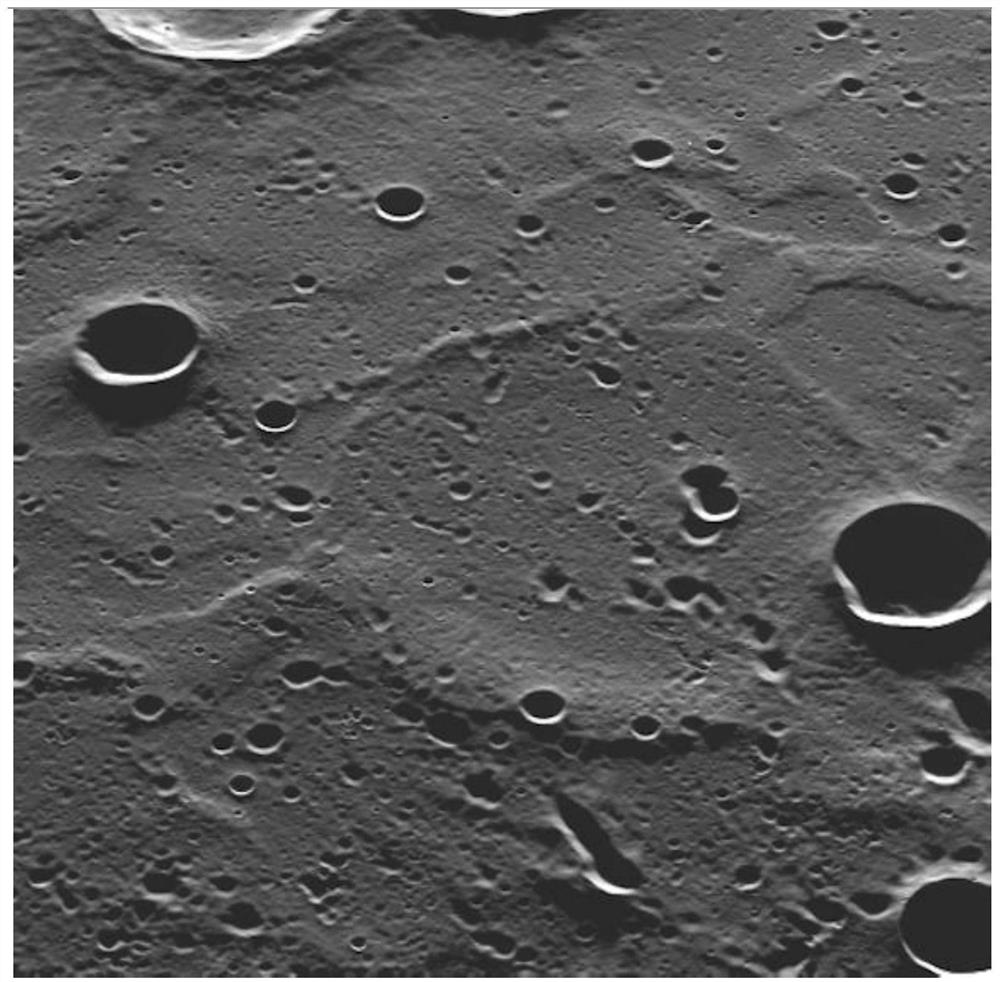

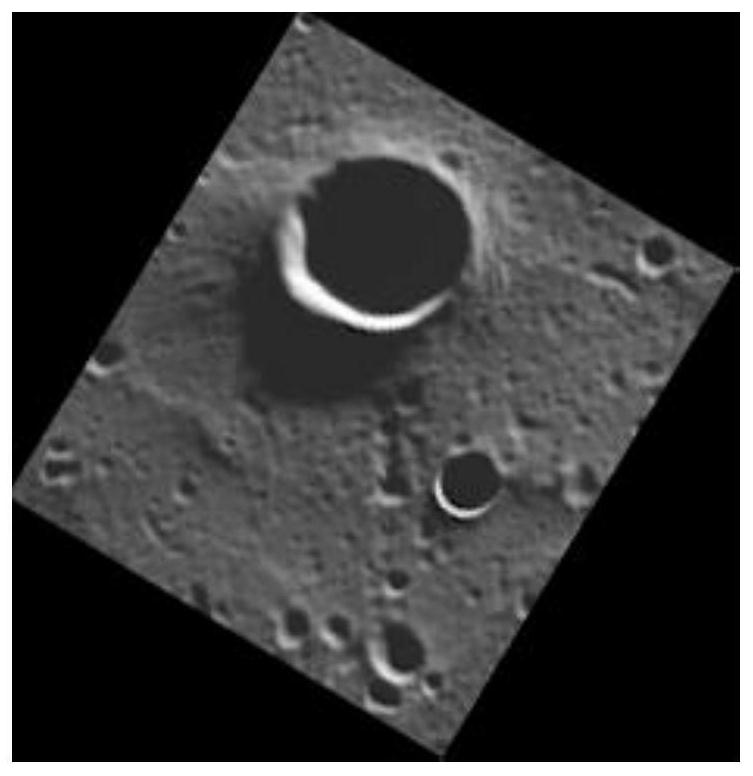

[0068] To verify the feasibility of the present invention, a mathematical simulation is carried out in which an actual image of the surface of Mercury serves as the optical image taken by camera A, as shown in Figure 2. An image constructed by applying an affine transformation to a cropped part of Figure 2 serves as the optical image taken by camera B, as shown in Figure 3. The cropped part of Figure 2 is rotated by 31 degrees, and the image scaling factor is 0.8 in the u direction and 1.2 in the v direction.
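The affine transformation used to simulate the camera B image can be sketched as follows. This is only an illustrative sketch: the source gives the rotation angle (31 degrees) and the two scaling factors (0.8 and 1.2), but not the order of composition, the rotation direction, the rotation center, or the crop size. The sketch assumes rotation first and scaling second, a counterclockwise-positive angle in (u, v) coordinates, and a hypothetical center at pixel (256, 256). The names `build_affine` and `apply_affine` are hypothetical helpers, not part of the patent.

```python
import math

def build_affine(angle_deg, scale_u, scale_v, center=(0.0, 0.0)):
    """Return a 2x3 affine matrix that rotates about `center`, then scales u and v.

    Assumption: rotate first, scale second; the source does not state the order.
    """
    theta = math.radians(angle_deg)
    c, s = math.cos(theta), math.sin(theta)
    # Linear part = diag(scale_u, scale_v) @ R(theta)
    a, b = scale_u * c, -scale_u * s
    d, e = scale_v * s, scale_v * c
    cu, cv = center
    # Translation chosen so that the center point maps to itself
    tu = cu - (a * cu + b * cv)
    tv = cv - (d * cu + e * cv)
    return ((a, b, tu), (d, e, tv))

def apply_affine(matrix, point_uv):
    """Map one (u, v) pixel coordinate through the 2x3 affine matrix."""
    u, v = point_uv
    return (
        matrix[0][0] * u + matrix[0][1] * v + matrix[0][2],
        matrix[1][0] * u + matrix[1][1] * v + matrix[1][2],
    )

# Parameters from paragraph [0068]; the center (256, 256) is a hypothetical value.
A_TO_B = build_affine(31.0, 0.8, 1.2, center=(256.0, 256.0))
```

Under these assumptions, `apply_affine(A_TO_B, (u, v))` gives the camera B pixel that corresponds to a camera A feature at `(u, v)`, which provides ground truth for checking whether a matched feature pair is correct in the simulation.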

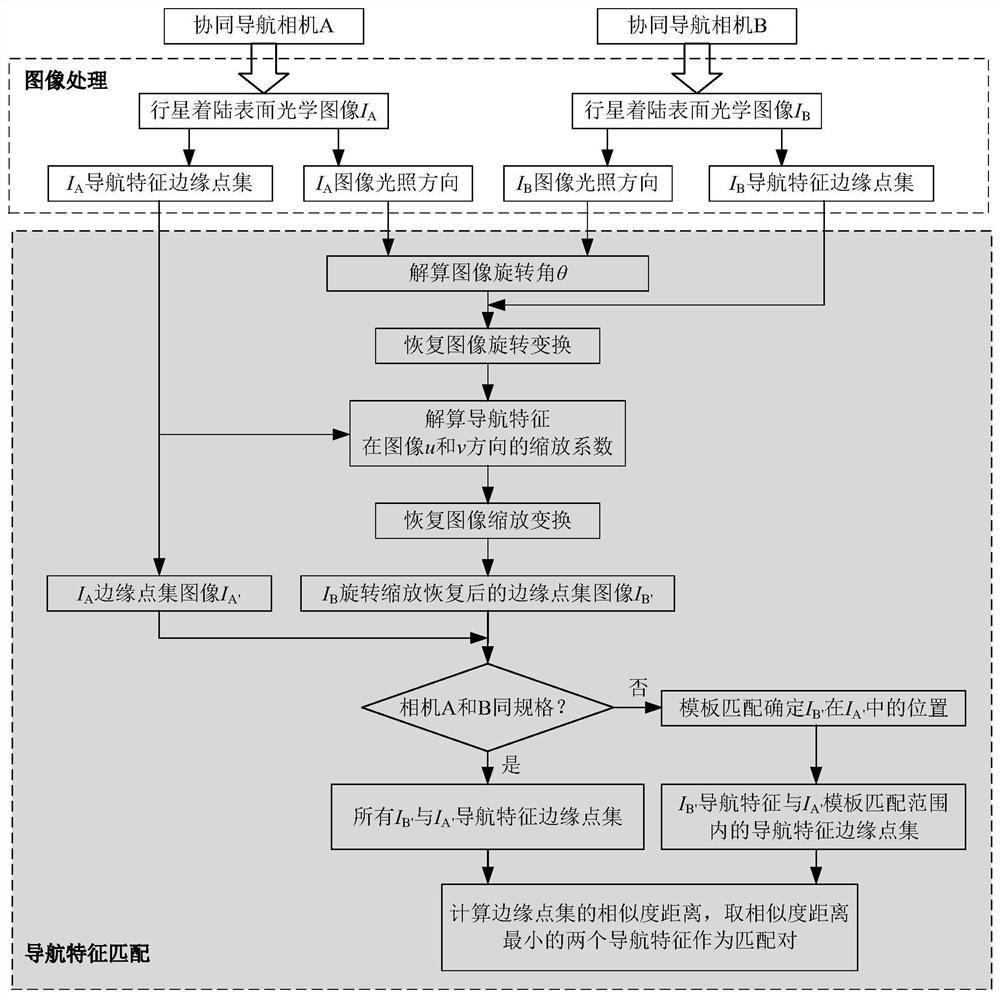

[0069] As shown in Figure 1, the specific implementation steps of the planetary landing collaborative navigation feature matching method disclosed in this embodiment are as follows:

[0070] Step 1: The two optical navigation cameras ...
