Method for realizing scene structure prediction, target detection and lane level positioning

A target detection and lane-level positioning technology, applied in the field of neural networks, that addresses problems such as poor adaptability to unfamiliar scenes, inability to position accurately in tunnels or areas with poor signal, and low GPS positioning accuracy, with the effects of reducing prediction time and avoiding manual labeling work.


Detailed Description of the Embodiments

[0055] The technical solutions in the embodiments of the present invention will be described clearly and in detail below with reference to the drawings in the embodiments of the present invention. The described embodiments are only some of the embodiments of the invention.

[0056] The technical solution by which the present invention addresses the above technical problems is as follows:

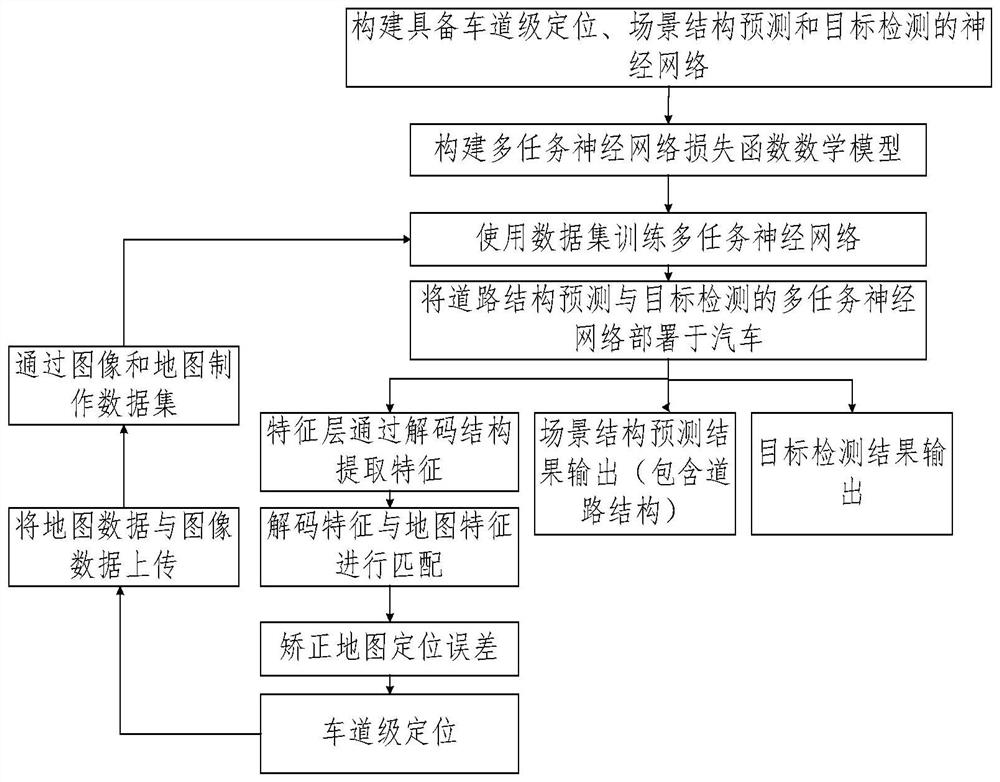

[0057] As shown in Figure 1, the neural-network-based method for lane-level positioning, scene structure prediction and target detection provided by an embodiment of the present invention includes the following steps:

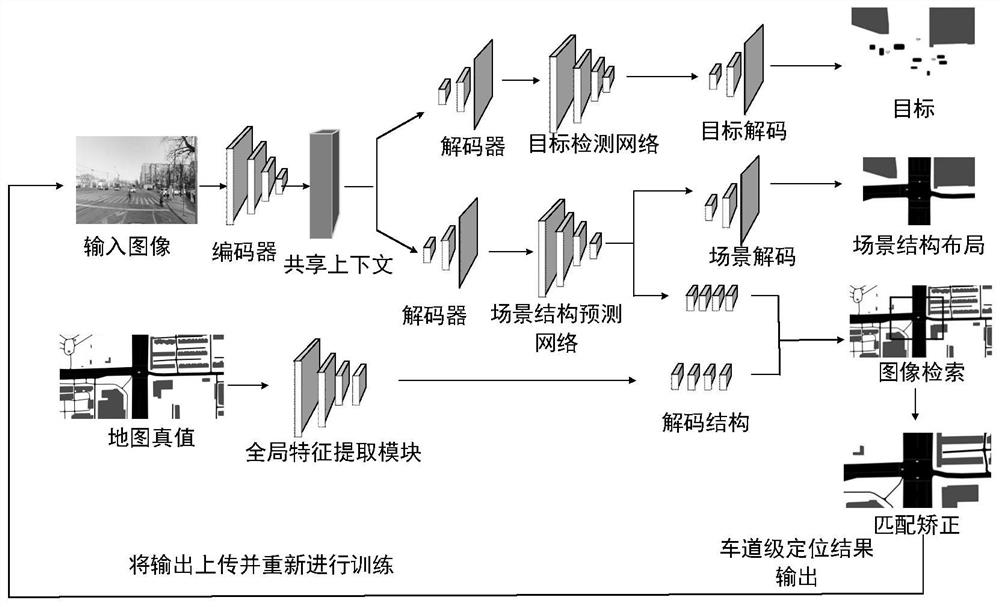

[0058] 1. Construct a multi-task neural network for lane-level positioning, scene structure prediction and target detection. The structure of the multi-task neural network for scene structure prediction and target detection is shown in Figure 2. In the method of the present invention, the scene structure prediction and target detection multi-task neural network adopts the contex...
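To illustrate the general idea of a multi-task network named in step 1, the following is a minimal sketch only. It assumes one common multi-task layout, a shared encoder feeding one output head per task; the text above is truncated before the actual architecture is described, so this is not the network of Figure 2. The class name `MultiTaskNet`, the helper functions, all dimensions, and the random weights are hypothetical and chosen purely for illustration.

```python
import random


def make_weights(in_dim, out_dim, rng):
    """Build an out_dim x in_dim matrix of small random weights (illustrative only)."""
    return [[rng.uniform(-0.1, 0.1) for _ in range(in_dim)] for _ in range(out_dim)]


def matvec(weights, x):
    """Plain matrix-vector product."""
    return [sum(w * v for w, v in zip(row, x)) for row in weights]


def relu(x):
    """Element-wise ReLU activation."""
    return [max(0.0, v) for v in x]


class MultiTaskNet:
    """Hypothetical layout: one shared encoder, three task-specific heads."""

    def __init__(self, in_dim=8, hidden=16, n_lanes=4, layout_dim=6, box_dim=5, seed=0):
        rng = random.Random(seed)
        self.encoder = make_weights(in_dim, hidden, rng)
        # One head per task named in the text; output sizes are assumptions.
        self.heads = {
            "lane": make_weights(hidden, n_lanes, rng),
            "scene_structure": make_weights(hidden, layout_dim, rng),
            "detection": make_weights(hidden, box_dim, rng),
        }

    def forward(self, x):
        # Shared features are computed once and reused by every head.
        shared = relu(matvec(self.encoder, x))
        return {name: matvec(w, shared) for name, w in self.heads.items()}


net = MultiTaskNet()
outputs = net.forward([0.5] * 8)
print({name: len(vec) for name, vec in outputs.items()})
```

In this sketch the encoder runs once per input and each head reads the same feature vector, which is one general reason multi-task networks can cost less at inference than separate per-task models. Whether the patented network shares features in this way is not stated in the visible text.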
