Text classification method, classifier and system based on key information and dynamic routing

A text classification technique based on key information, applied in text database clustering/classification, neural learning methods, digital data information retrieval, and related fields. It addresses the problems that key information in the text is wasted, that key information is not used to assist classification, and that BERT is underused. Its effects are to enhance expressive ability and improve classification accuracy.

Examples

Embodiment 1

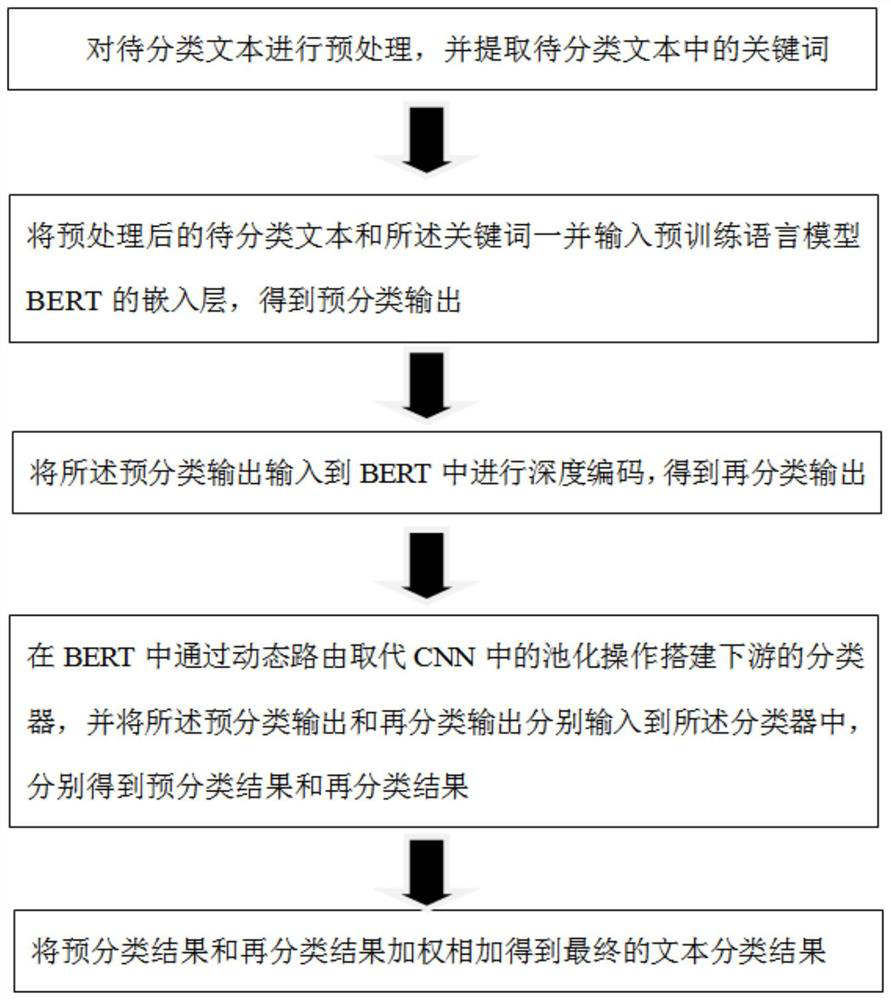

[0058] In an exemplary embodiment, a text classification method based on key information and dynamic routing is provided. As shown in Figure 1, the method includes the following steps:

[0059] Preprocess the text to be classified, and extract keywords in the text to be classified;

[0060] Input the pre-processed text to be classified and the keywords into the embedding layer of the pre-trained language model BERT to obtain a pre-classification output;

[0061] Input the pre-classification output into BERT for deep encoding to obtain the re-classification output;

[0062] Build a downstream classifier for BERT in which dynamic routing replaces the pooling operation used in a CNN, and input the pre-classification output and the re-classification output into the classifier separately to obtain the pre-classification result and the re-classification result, respectively;

[0063] The final text classification result is obtained by weighting the pre-classification results and the re-classifi...
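The weighting step can be illustrated with a minimal sketch. The exact weighting scheme is not visible in the truncated text above, so this assumes a simple convex combination of two class-probability vectors; the names `fuse_predictions`, `predict_label`, and the weight `alpha` are hypothetical.

```python
from typing import List, Sequence


def fuse_predictions(pre_probs: Sequence[float],
                     re_probs: Sequence[float],
                     alpha: float = 0.5) -> List[float]:
    """Combine pre- and re-classification results as alpha*pre + (1-alpha)*re.

    Assumption: both inputs are probability vectors over the same classes.
    """
    if len(pre_probs) != len(re_probs):
        raise ValueError("both results must cover the same classes")
    if not 0.0 <= alpha <= 1.0:
        raise ValueError("alpha must lie in [0, 1]")
    return [alpha * p + (1.0 - alpha) * r for p, r in zip(pre_probs, re_probs)]


def predict_label(probs: Sequence[float]) -> int:
    """Return the index of the highest-scoring class."""
    return max(range(len(probs)), key=lambda i: probs[i])


# Illustrative values only, not taken from the patent.
pre_result = [0.6, 0.3, 0.1]
re_result = [0.2, 0.7, 0.1]
final = fuse_predictions(pre_result, re_result, alpha=0.3)
label = predict_label(final)
```

With `alpha` below 0.5 the deeper re-classification result dominates; in practice the weight would presumably be tuned on validation data.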

Embodiment 2

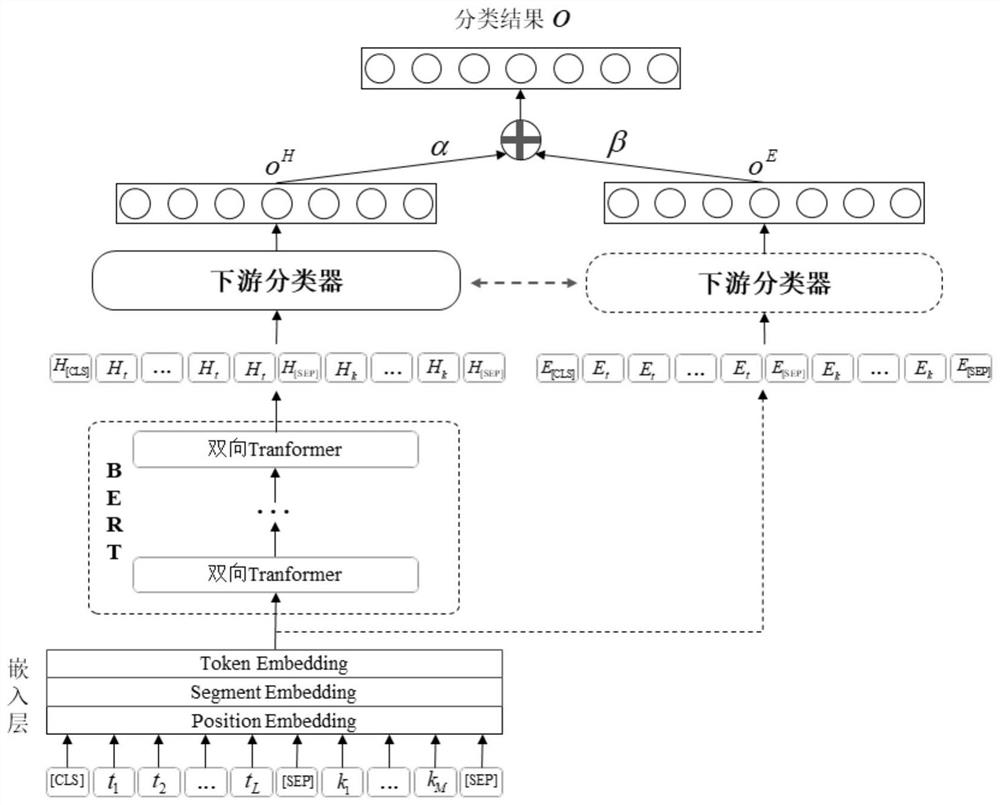

[0068] Based on Embodiment 1, a text classification method based on key information and dynamic routing is provided. As shown in Figure 2, preprocessing the text to be classified includes:

[0069] Perform data cleaning, word segmentation, removal of stop words and features on the text to be classified, and convert each text to be classified into a word sequence;

[0070] Let $T = \{t_1, t_2, \ldots, t_L\}$ represent the preprocessed word sequence of the text to be classified, where $t_i$ denotes the word at the $i$-th position in the word sequence, and $L$ denotes the maximum length of the text to be classified allowed by the model.
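The preprocessing described above might look like the following minimal sketch. It assumes English text, whitespace tokenization in place of a real word segmenter, and a tiny illustrative stop-word list; `preprocess` and `STOP_WORDS` are hypothetical names, not part of the patent.

```python
import re
from typing import List, Set

# Illustrative stop-word list; a real system would use a full lexicon.
STOP_WORDS: Set[str] = {"the", "a", "an", "is", "of", "and", "to", "in"}


def preprocess(text: str, max_len: int,
               stop_words: Set[str] = STOP_WORDS) -> List[str]:
    """Clean, tokenize, drop stop words, and truncate to at most max_len words."""
    cleaned = re.sub(r"<[^>]+>", " ", text)              # strip HTML-like tags
    cleaned = re.sub(r"[^A-Za-z0-9\s]", " ", cleaned)    # strip punctuation
    tokens = cleaned.lower().split()                     # stand-in for word segmentation
    tokens = [t for t in tokens if t not in stop_words]  # stop-word removal
    return tokens[:max_len]                              # enforce the length limit L


T = preprocess("<p>The routing of capsules is dynamic!</p>", max_len=3)
```

For languages without whitespace word boundaries, the `split()` call would be replaced by a dedicated segmenter.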

[0071] Further, extracting keywords in the text to be classified includes:

[0072] Use the TextRank algorithm to extract $M$ keywords from the word sequence $T$ of the text to be classified. Let $K = \{k_1, k_2, \ldots, k_M\}$ represent the $M$ extracted keywords, arranged according to their relative positions in the original word seque...
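As a rough illustration of TextRank-style keyword extraction, the sketch below builds an undirected co-occurrence graph over the word sequence, runs a fixed number of PageRank-style updates, and returns the top M words in their original order. The window size, damping factor, and iteration count are assumptions, and `textrank_keywords` is a hypothetical helper rather than the patent's implementation.

```python
from collections import defaultdict
from typing import Dict, List, Set


def textrank_keywords(tokens: List[str], m: int, window: int = 2,
                      damping: float = 0.85, iterations: int = 50) -> List[str]:
    """Return up to m keywords, ordered by first appearance in tokens."""
    # Link each word to the words that follow it within the window.
    neighbors: Dict[str, Set[str]] = defaultdict(set)
    for i, word in enumerate(tokens):
        for other in tokens[i + 1: i + 1 + window]:
            if other != word:
                neighbors[word].add(other)
                neighbors[other].add(word)

    vocab = list(dict.fromkeys(tokens))  # unique words, original order
    scores = {w: 1.0 for w in vocab}
    for _ in range(iterations):
        # Each word receives an equal share of every neighbor's score.
        scores = {
            w: (1 - damping) + damping * sum(
                scores[n] / len(neighbors[n]) for n in neighbors[w])
            for w in vocab
        }

    first_pos = {w: tokens.index(w) for w in vocab}
    top = sorted(vocab, key=lambda w: (-scores[w], first_pos[w]))[:m]
    return sorted(top, key=lambda w: first_pos[w])  # restore text order


tokens = ["capsule", "routing", "capsule", "network",
          "routing", "capsule", "bert"]
K = textrank_keywords(tokens, m=2)
```

Sorting the selected words by first position mirrors the requirement that keywords keep their relative order from the original sequence.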

Embodiment 3

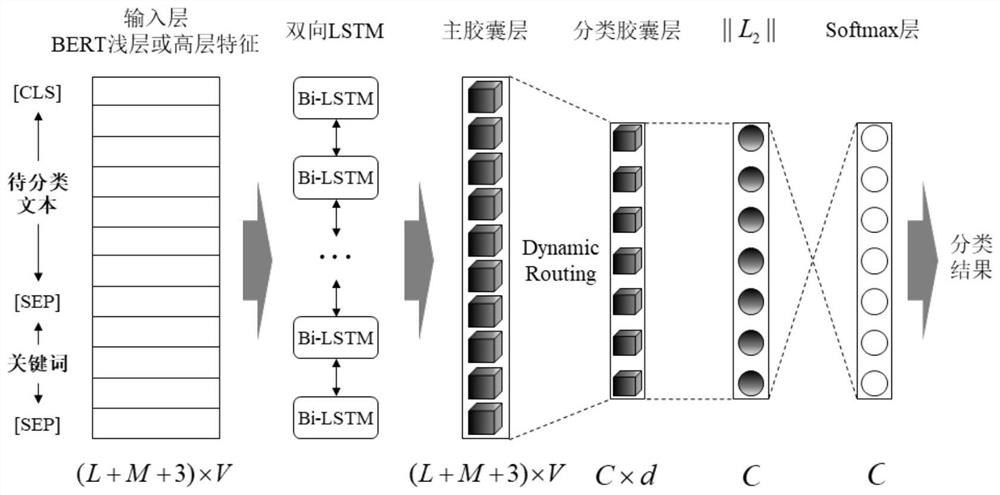

[0087] In this embodiment, a BERT downstream text classifier is provided. The text classifier is built on a bidirectional long short-term memory (LSTM) network and a capsule neural network. As shown in Figure 3, the text classifier includes a sequentially connected input layer, bidirectional LSTM operation layer, main capsule layer, dynamic routing layer, classification capsule layer, and Softmax layer. The main capsule layer, serving as the parent capsule layer, establishes a non-linear mapping to the classification capsule layer through a voting-based dynamic routing mechanism.

[0088] Further, the bidirectional LSTM operation layer uses a bidirectional LSTM network to perform sequence modeling on the input features and capture bidirectional interactions in the sequence.
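The voting-based routing between the main capsule layer and the classification capsule layer can be sketched as follows. This uses the widely known routing-by-agreement procedure with a squash non-linearity, which may differ from the patent's exact variant; the vote vectors are assumed to be already computed, and all function names are hypothetical.

```python
import math
from typing import List

Vector = List[float]


def squash(s: Vector) -> Vector:
    """Shrink a vector so its length lies in [0, 1) while keeping its direction."""
    norm_sq = sum(x * x for x in s)
    if norm_sq == 0.0:
        return [0.0 for _ in s]
    scale = norm_sq / (1.0 + norm_sq) / math.sqrt(norm_sq)
    return [scale * x for x in s]


def softmax(logits: Vector) -> Vector:
    peak = max(logits)
    exps = [math.exp(x - peak) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def dynamic_routing(votes: List[List[Vector]], iterations: int = 3) -> List[Vector]:
    """votes[i][j] is the vote of input capsule i for output capsule j."""
    num_in, num_out, dim = len(votes), len(votes[0]), len(votes[0][0])
    logits = [[0.0] * num_out for _ in range(num_in)]
    outputs: List[Vector] = []
    for _ in range(iterations):
        coupling = [softmax(row) for row in logits]  # per input capsule
        outputs = []
        for j in range(num_out):
            s = [sum(coupling[i][j] * votes[i][j][d] for i in range(num_in))
                 for d in range(dim)]
            outputs.append(squash(s))
        # Raise the logit where a vote agrees with the current output.
        for i in range(num_in):
            for j in range(num_out):
                logits[i][j] += sum(a * b for a, b in zip(votes[i][j], outputs[j]))
    return outputs


# Three input capsules, two classes: votes agree on class 0, conflict on class 1.
votes = [
    [[1.0, 0.0], [1.0, 0.0]],
    [[1.0, 0.0], [-1.0, 0.0]],
    [[0.9, 0.1], [0.0, 1.0]],
]
class_capsules = dynamic_routing(votes)
lengths = [math.sqrt(sum(x * x for x in v)) for v in class_capsules]
```

The output capsule lengths act as class scores: consistent votes yield a long vector, conflicting votes a short one. Unlike pooling, the routing weights are recomputed per input rather than fixed.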

[0089] Specifically, the steps to use this classifier are as follows:

[0090] (1) Let $X \in \mathbb{R}^{(L+M+3) \times V}$ represent the input fe...
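The first dimension $L+M+3$ is consistent with a BERT sentence-pair layout: $L$ text positions, $M$ keyword positions, and three special tokens. The sketch below assumes those three tokens are `[CLS]` and two `[SEP]` markers and that each segment is padded to a fixed size; neither detail is confirmed by the visible text, and `build_input` is a hypothetical helper.

```python
from typing import List, Sequence


def build_input(text_tokens: Sequence[str], keywords: Sequence[str],
                max_text_len: int, num_keywords: int,
                pad: str = "[PAD]") -> List[str]:
    """Lay out [CLS] text [SEP] keywords [SEP] with fixed segment sizes."""
    def fit(seq: Sequence[str], size: int) -> List[str]:
        # Truncate, then pad, so the segment has exactly `size` items.
        items = list(seq[:size])
        return items + [pad] * (size - len(items))

    return (["[CLS]"] + fit(text_tokens, max_text_len) + ["[SEP]"]
            + fit(keywords, num_keywords) + ["[SEP]"])


L, M = 6, 2  # illustrative sizes only
sequence = build_input(["dynamic", "routing", "replaces", "pooling"],
                       ["routing", "pooling"], L, M)
```

Each of the `L + M + 3` positions would then map to a feature vector of size $V$, giving the stated shape of $X$.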
