Scale-extensible convolutional neural network acceleration system

A convolutional neural network acceleration technology, applied in biological neural network models, neural architectures, neural learning methods, and related fields. It addresses problems such as reduced accelerator efficiency, a high idle rate of computing units, and poor scalability, and achieves a simplified hardware design structure, extensive resource reuse, and good flexibility.



Examples

Embodiment 1

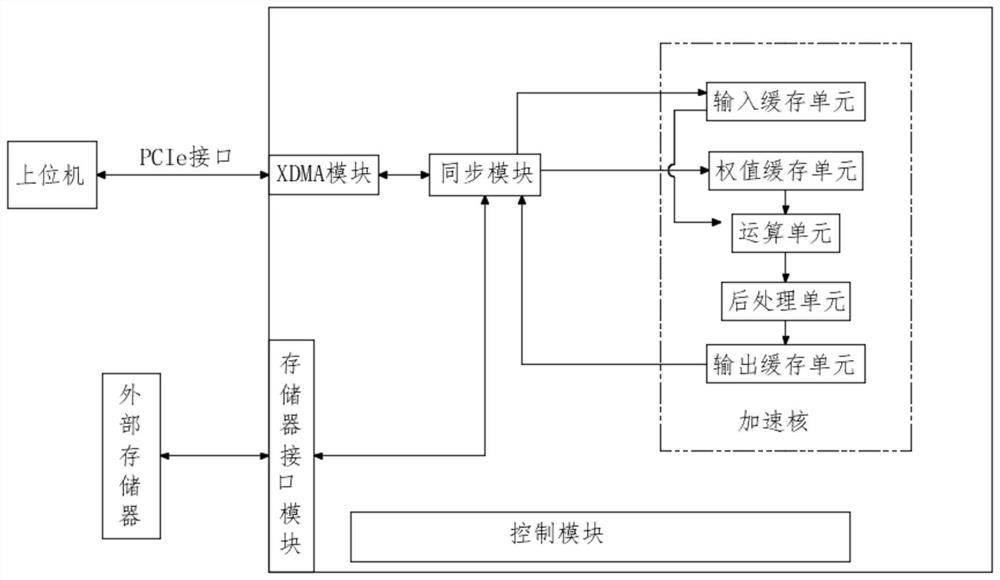

[0043] Referring to Figure 1, this embodiment proposes a scalable convolutional neural network acceleration system comprising an XDMA module, a memory interface module, a synchronization module, a control module, an external memory, and at least one acceleration core:

[0044] The XDMA module handles data transfer between the host computer and the FPGA;

[0045] The memory interface module implements the control logic for reading from and writing to the external memory;

[0046] The synchronization module handles cross-clock-domain data transfer between the XDMA module on one side and the acceleration core and memory interface module on the other;

[0047] The control module controls the operation of each functional module;

[0048] The external (off-chip) main memory stores the data required for the acceleration core's operations and the data generated once those operations are complete.
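The text does not say how the synchronization module performs the clock-domain crossing. One common technique is an asynchronous FIFO whose read and write pointers are passed between the two clock domains in Gray code: only one bit changes per increment, so a pointer sampled while it is changing resolves to either the old or the new value, never a spurious one. The Python sketch below models only that encoding property; the 4-bit pointer width and all function names are illustrative assumptions, not details from the source.

```python
POINTER_BITS = 4  # assumed FIFO pointer width (illustrative only)


def bin_to_gray(n: int) -> int:
    """Convert a binary pointer value to its reflected Gray code."""
    return n ^ (n >> 1)


def gray_to_bin(g: int) -> int:
    """Recover the binary value from a Gray-coded pointer."""
    n = 0
    while g:
        n ^= g
        g >>= 1
    return n


def bits_changed(a: int, b: int) -> int:
    """Count the bit positions that differ between a and b."""
    return bin(a ^ b).count("1")


if __name__ == "__main__":
    depth = 1 << POINTER_BITS
    for i in range(depth):
        nxt = (i + 1) % depth  # pointers wrap around at the FIFO depth
        # Every increment, including the wrap from depth-1 back to 0, flips exactly one bit.
        assert bits_changed(bin_to_gray(i), bin_to_gray(nxt)) == 1
        # The encoding is lossless, so the receiving side can recover the binary pointer.
        assert gray_to_bin(bin_to_gray(i)) == i
    print("Gray-coded pointer increments change one bit at a time")
```

In a typical hardware realization, each Gray-coded pointer would then pass through a two-flip-flop synchronizer in the receiving clock domain before being compared with the local pointer; whether this design does so is not stated in the excerpt.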

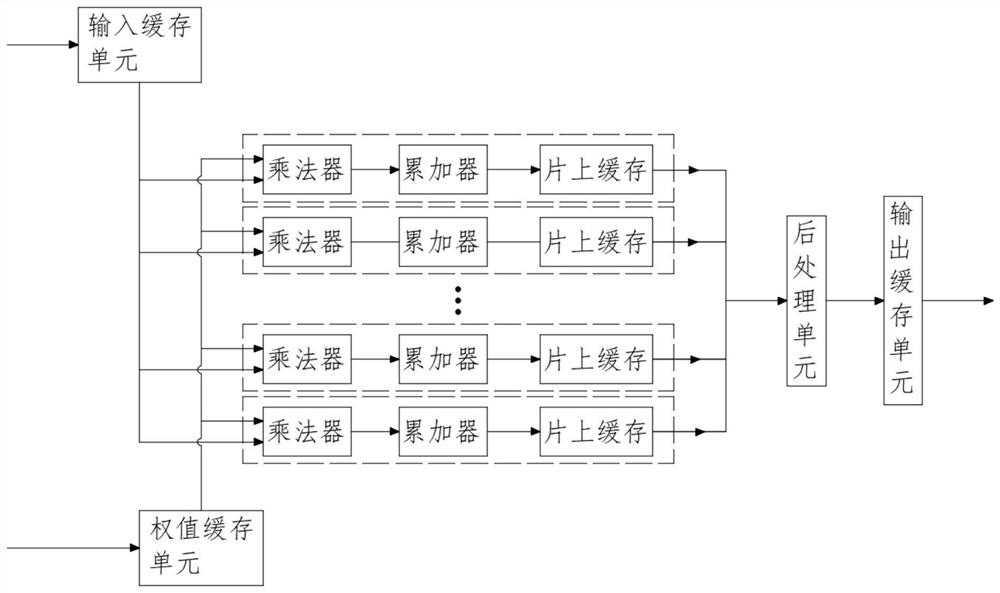

[0049] The acceleration core includes an operation unit, an input cache...
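The system contains at least one acceleration core, and the scalability claim implies that work can be spread over however many cores are instantiated. The excerpt does not say how work is divided, so the sketch below assumes a simple round-robin split of a layer's output channels across cores; `partition_output_channels` is a hypothetical helper, not the patent's scheduling method.

```python
from typing import Dict, List


def partition_output_channels(num_out_channels: int, num_cores: int) -> Dict[int, List[int]]:
    """Assign output-channel indices to acceleration cores in round-robin order.

    Hypothetical policy: the source does not specify how work is distributed.
    """
    if num_cores < 1:
        raise ValueError("the system needs at least one acceleration core")
    assignment: Dict[int, List[int]] = {core: [] for core in range(num_cores)}
    for ch in range(num_out_channels):
        assignment[ch % num_cores].append(ch)
    return assignment


if __name__ == "__main__":
    # 10 output channels over 4 cores: cores 0 and 1 get three channels each,
    # cores 2 and 3 get two each.
    for core, channels in partition_output_channels(10, 4).items():
        print(core, channels)
```

Under this assumed policy, adding a core changes only `num_cores`, and each core's share of the channels shrinks roughly in proportion.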

Embodiment 2

[0099] Referring to Figures 1 and 2, this embodiment proposes a scalable convolutional neural network acceleration method comprising the following steps:

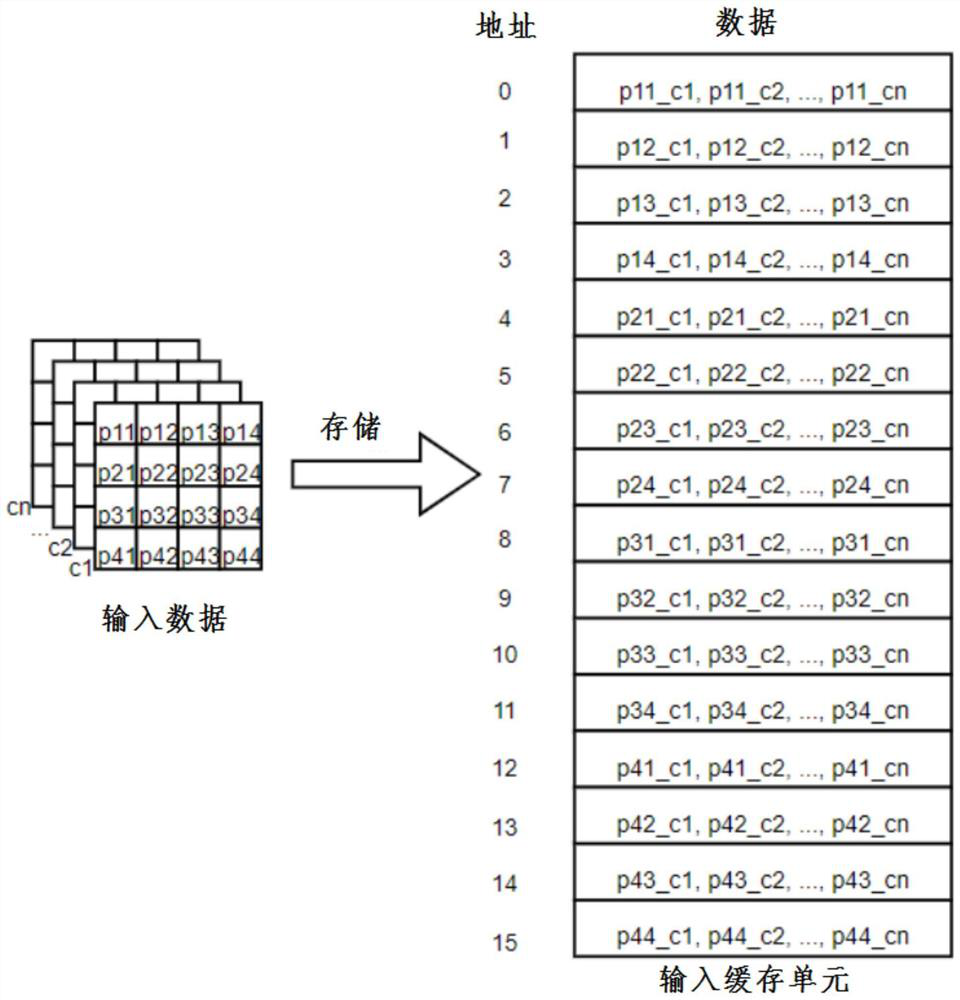

[0100] S1: The XDMA module receives the original data (including image data and weight data) from the host computer over the PCIe interface and, through the synchronization module, stores it in the corresponding address space of the external memory;

[0101] S2: Once the original data required for the operation is ready, the control module starts the acceleration core and directs the input cache unit and the weight cache unit to read and store the first set of data from the external memory;

[0102] S3: The multiplier reads a set of data from the input cache unit and the weight cache unit, multiplies them, and stores the result in the on-chip cache. While this calculation is in progress, the input cache unit and the weight cache unit read and store the second set of data from the ex...
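Steps S2 and S3 overlap computation on one set of data with loading of the next, a pattern commonly called double (ping-pong) buffering. The Python sketch below models it under stated assumptions: each cache unit is taken to have two banks, the multiplier is modeled as an element-wise multiply, and `double_buffered_multiply` is a hypothetical name. The excerpt is truncated before the remaining steps, so accumulation and write-back are not modeled.

```python
from typing import List, Sequence, Tuple


def double_buffered_multiply(
    inputs: Sequence[Sequence[float]],
    weights: Sequence[Sequence[float]],
) -> Tuple[List[List[float]], List[str]]:
    """Model S2/S3: multiply set k while set k+1 is loaded into the other bank.

    inputs[k] and weights[k] stand in for the k-th set of data read from
    external memory. Returns the products and a log of each step's actions.
    """
    if len(inputs) != len(weights):
        raise ValueError("need one weight set per input set")
    n = len(inputs)
    in_bank: List[Sequence[float]] = [[], []]  # two banks of the input cache unit (assumed)
    w_bank: List[Sequence[float]] = [[], []]   # two banks of the weight cache unit (assumed)
    on_chip_results: List[List[float]] = []    # stands in for the on-chip cache
    log: List[str] = []
    if n == 0:
        return on_chip_results, log

    # S2: load the first set of data before computation starts.
    in_bank[0], w_bank[0] = inputs[0], weights[0]

    for k in range(n):
        cur, nxt = k % 2, (k + 1) % 2
        # S3: in hardware the load and the multiply run concurrently; they touch
        # different banks, so their order in this sequential model does not matter.
        if k + 1 < n:
            in_bank[nxt], w_bank[nxt] = inputs[k + 1], weights[k + 1]
            log.append(f"compute set {k} from bank {cur}, load set {k + 1} into bank {nxt}")
        else:
            log.append(f"compute set {k} from bank {cur}")
        on_chip_results.append([a * b for a, b in zip(in_bank[cur], w_bank[cur])])
    return on_chip_results, log


if __name__ == "__main__":
    results, log = double_buffered_multiply([[1, 2], [3, 4], [5, 6]], [[2, 2], [1, 0], [0, 1]])
    for line in log:
        print(line)
    print(results)  # [[2, 4], [3, 0], [0, 6]]
```

Because bank `nxt` is always different from bank `cur`, the load of set k+1 never overwrites data the multiplier is still using. This is what allows loading time to be hidden behind computation.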
