Facial expression capturing method and device in weak light environment

This technology, applied in the field of computer vision, addresses the poor performance of existing facial expression recognition methods in weak light environments. It achieves stronger model generalization and avoids overfitting.

Embodiment Construction

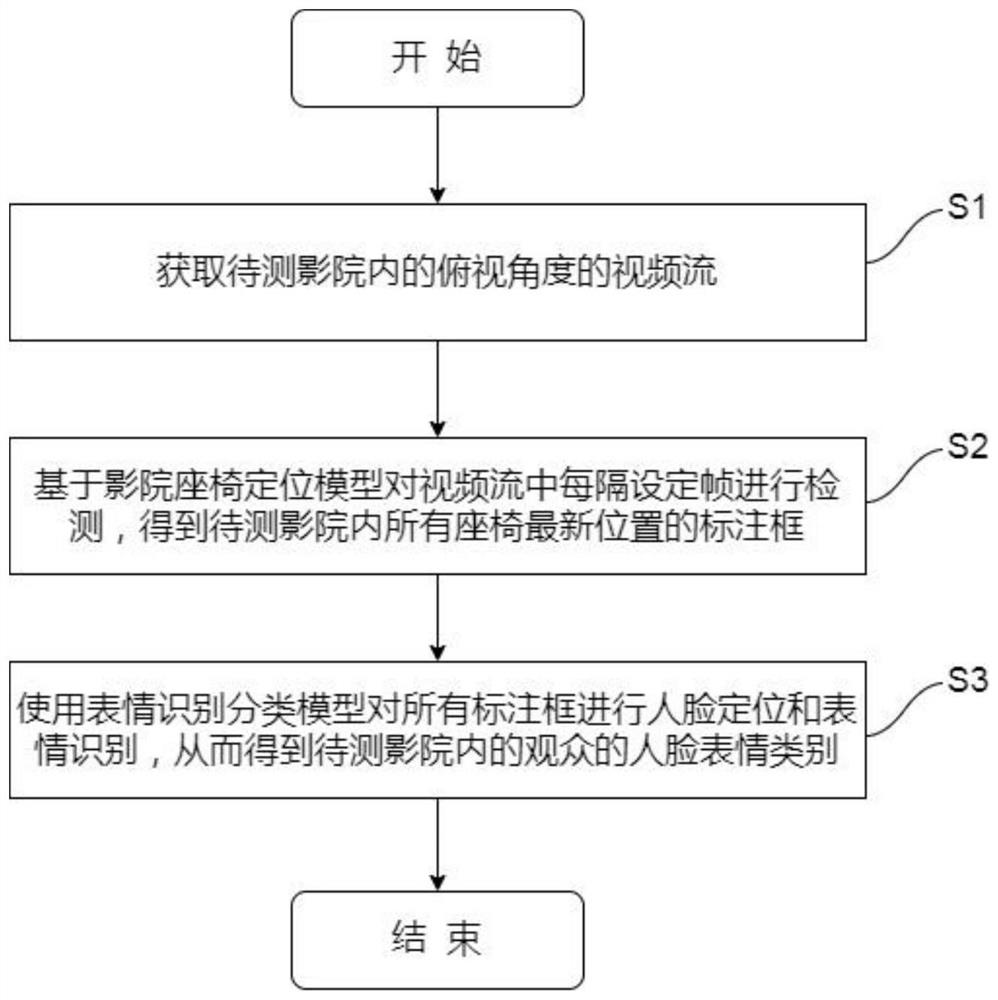

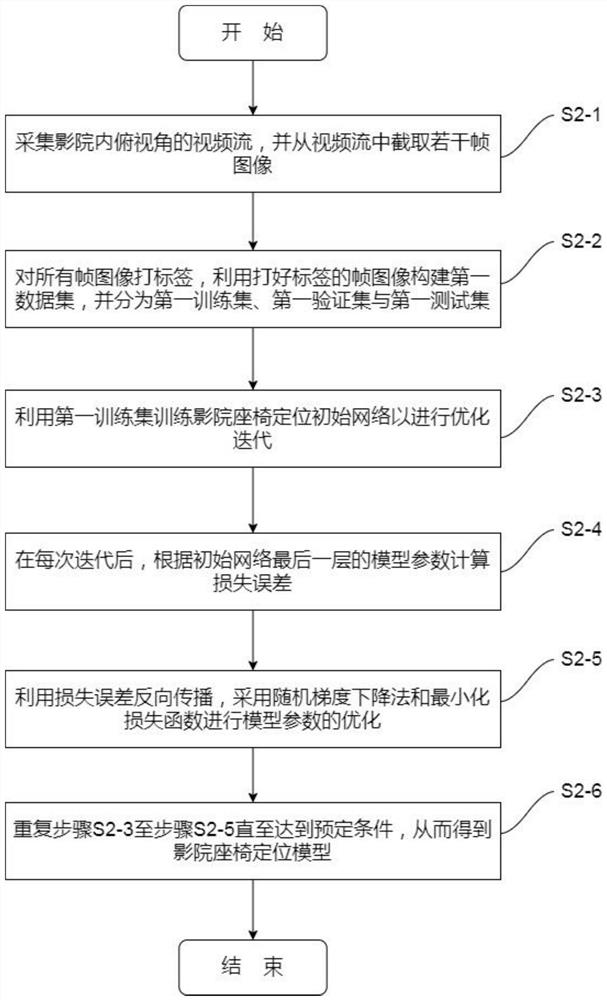

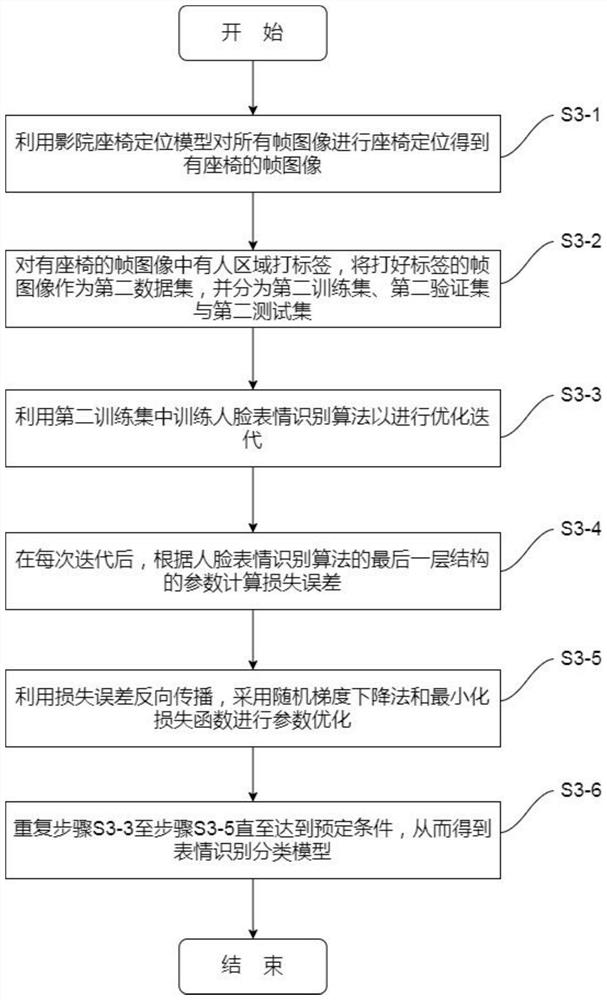

[0018] In order to make the technical means, creative features, goals and effects of the present invention easy to understand, a method and device for capturing human facial expressions in a low-light environment of the present invention will be described in detail below in conjunction with the embodiments and accompanying drawings.

[0019]

[0020] In this embodiment, the method and device for capturing human facial expressions in a low-light environment run on a computer, which needs at least one graphics card for GPU acceleration to complete the training process of the model. The trained theater seat positioning model, the expression recognition classification model, and the image recognition process are stored in the computer in the form of executable code. The computer can call these models through the executable code, process the image data frames from multiple scenes in batches at the same time, and obtain and output the image data in each scene. Facial ex...
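The batch flow described in [0020] can be sketched roughly as follows. This is a minimal illustration only, not the patented implementation: the function names (`process_scenes`, `stub_locate_seats`, `stub_classify`), the frame representation (nested lists of grayscale intensities), and the placeholder labels are all assumptions introduced here. The two stub functions merely stand in for the trained seat positioning model and the expression classification model, whose actual architectures the text above does not specify.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Tuple

Frame = List[List[float]]        # assumed: grayscale image as rows of intensities in [0, 1]
Box = Tuple[int, int, int, int]  # assumed: (top, left, bottom, right) in pixel coordinates


@dataclass
class ExpressionResult:
    scene_id: str
    seat_box: Box
    label: str


def crop(frame: Frame, box: Box) -> Frame:
    """Cut the region covered by `box` out of `frame`."""
    top, left, bottom, right = box
    return [row[left:right] for row in frame[top:bottom]]


def process_scenes(
    frames_by_scene: Dict[str, Frame],
    locate_seats: Callable[[Frame], List[Box]],
    classify_expressions: Callable[[List[Frame]], List[str]],
) -> List[ExpressionResult]:
    """Run seat positioning per frame, then classify all crops in one batch."""
    index: List[Tuple[str, Box]] = []
    crops: List[Frame] = []
    # Stage 1: locate seats in each scene's frame and collect the crops.
    for scene_id, frame in frames_by_scene.items():
        for box in locate_seats(frame):
            index.append((scene_id, box))
            crops.append(crop(frame, box))
    # Stage 2: one classifier call over the crops gathered from every scene.
    labels = classify_expressions(crops) if crops else []
    if len(labels) != len(crops):
        raise ValueError("classifier must return one label per crop")
    return [ExpressionResult(s, b, lab) for (s, b), lab in zip(index, labels)]


def stub_locate_seats(frame: Frame) -> List[Box]:
    """Hypothetical stand-in for the seat positioning model: two fixed boxes."""
    half_h, half_w = len(frame) // 2, len(frame[0]) // 2
    return [(0, 0, half_h, half_w), (half_h, half_w, len(frame), len(frame[0]))]


def stub_classify(crops: List[Frame]) -> List[str]:
    """Hypothetical stand-in for the expression classifier: thresholds the mean."""
    labels = []
    for region in crops:
        pixels = [p for row in region for p in row]
        mean = sum(pixels) / len(pixels)
        labels.append("class_1" if mean > 0.5 else "class_0")
    return labels
```

Collecting crops from every scene before the single classifier call is what would let a GPU-backed model process them as one batch. A real system would replace the stubs with the trained models loaded from their stored executable form.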
