Image description method based on adaptive enhanced self-attention network

An adaptive enhancement and image description technology, applied to neural learning methods, biological neural network models, instruments, and related fields. It addresses the problem that existing approaches cannot cover semantic relationships, which makes it difficult for image description models to predict reliable descriptions of those relationships. The stated effects concern feature representation and high-quality, high-precision, credible image description generation.



Embodiment Construction

[0032] A preferred embodiment of the present invention will be described in detail below with reference to the accompanying drawings.

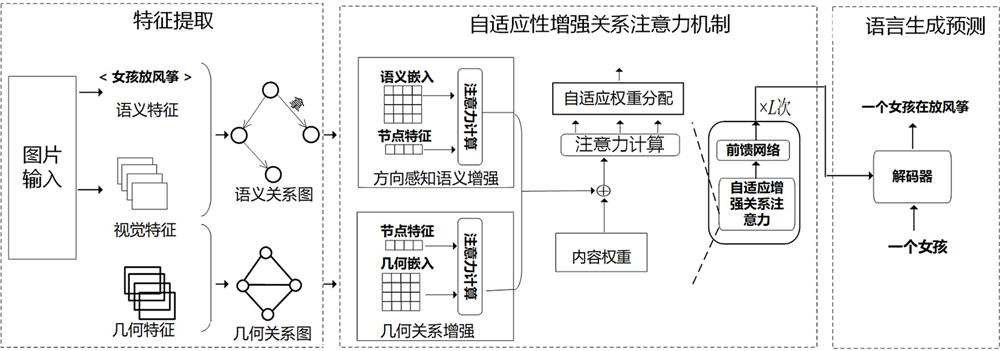

[0033] Figure 1 shows the overall network structure. For a given natural scene image, the present invention obtains the final image description through three processing modules. First, (a) feature extraction: a scene graph extractor is used to construct a semantic relation graph, and a pretrained Faster-RCNN object detector is used to detect regions of interest and their bounding boxes, from which a geometric relation graph is constructed. Second, (b) an encoder with an adaptive relation-enhanced attention mechanism: 1) direction-sensitive semantic enhancement considers both directions of association, from regional features to semantic relations and from semantic relations to regional features, and uses them jointly to represent the complete triplet (subject-predicate-object) information; 2) geometric relationship enhancement dynamically calculates the...
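As an illustration of how a geometric relation graph might be derived from detector bounding boxes, the following minimal Python sketch computes a pairwise relative-geometry feature for every ordered pair of regions. The excerpt above does not give the formula used by the invention, so the four-dimensional log-ratio encoding here is an assumption borrowed from common relation-aware attention models, and the names `relative_geometry` and `geometric_relation_graph` are hypothetical.

```python
import math

def relative_geometry(box_i, box_j, eps=1e-3):
    """Return a 4-d relative geometry feature for an ordered box pair.

    Boxes are (x1, y1, x2, y2) with positive width and height.
    ASSUMPTION: this log-ratio encoding is a commonly used choice,
    not a formula taken from the patent text.
    """
    # Centers, widths, and heights of both boxes.
    xi, yi = (box_i[0] + box_i[2]) / 2, (box_i[1] + box_i[3]) / 2
    wi, hi = box_i[2] - box_i[0], box_i[3] - box_i[1]
    xj, yj = (box_j[0] + box_j[2]) / 2, (box_j[1] + box_j[3]) / 2
    wj, hj = box_j[2] - box_j[0], box_j[3] - box_j[1]
    return (
        # Center offsets, normalized by the size of box i;
        # eps keeps the logarithm finite when centers coincide.
        math.log(max(abs(xi - xj), eps) / wi),
        math.log(max(abs(yi - yj), eps) / hi),
        # Scale ratios between the two boxes.
        math.log(wj / wi),
        math.log(hj / hi),
    )

def geometric_relation_graph(boxes):
    """Dense graph: entry [i][j] holds the feature from region i to region j."""
    n = len(boxes)
    return [[relative_geometry(boxes[i], boxes[j]) for j in range(n)]
            for i in range(n)]

# Two hypothetical detections: a 10x10 box and a 20x10 box to its right.
boxes = [(0, 0, 10, 10), (10, 0, 30, 10)]
graph = geometric_relation_graph(boxes)
```

The feature is asymmetric in its two arguments, so `graph[i][j]` and `graph[j][i]` generally differ; an encoder could project each entry to a scalar and use it to bias the attention weight between regions i and j, which is one plausible reading of "geometric relationship enhancement".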
