Input recognition method and device in virtual scene and storage medium

Virtual input and virtual scene technology, applied to the input/output processes of data processing, the input/output of user/computer interaction, and character and pattern recognition. It addresses the problem of poor immersion and realism and improves the sense of immersion and realism.


Examples

Embodiment approach 1

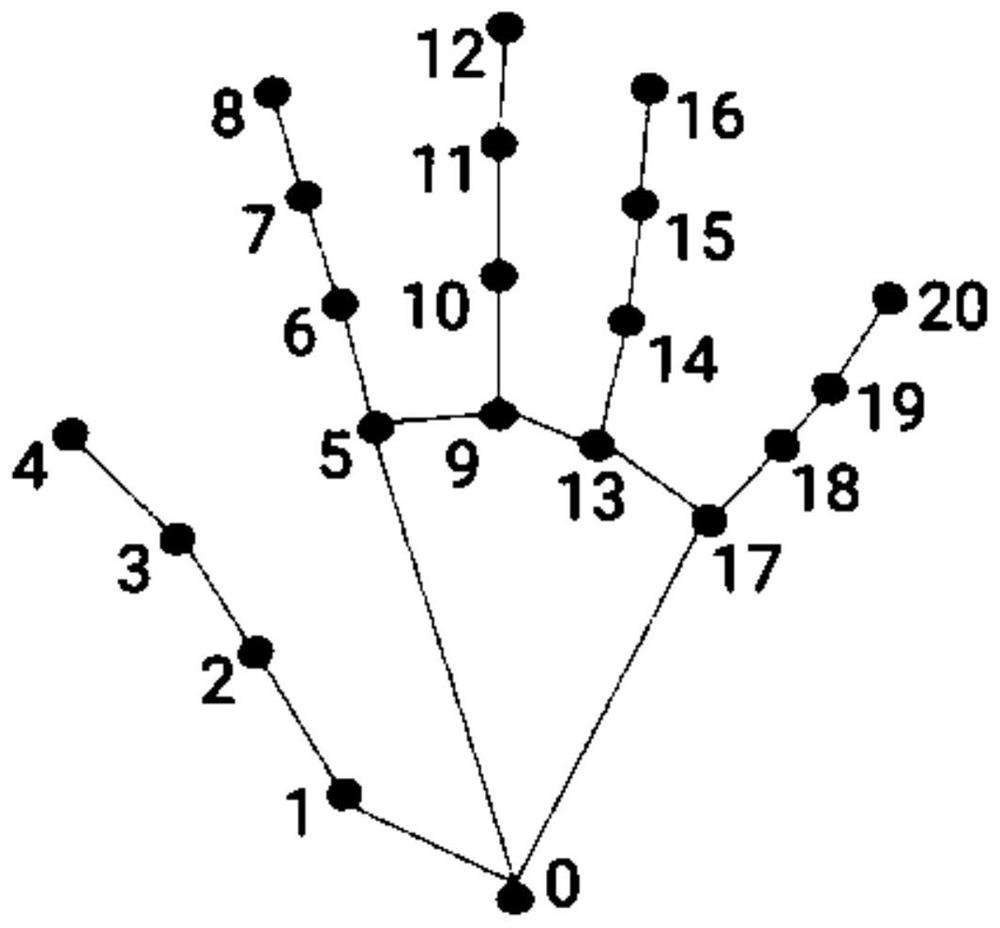

[0080] Embodiment 1: If the bending angle of the finger is less than 90 degrees, the fingertip coordinates can be calculated from the position of the starting joint point of the second phalanx, the bending angle of the finger, the actual length of the second phalanx, and the actual length of the fingertip segment.

[0081] As shown in Figure 8, the starting joint point of the second phalanx is R2. If R2 can be observed, a binocular positioning algorithm can be used to calculate its position. Given the position of R2, the actual length of the second phalanx, and the bending angle b of the second phalanx, the position of R5, the starting joint point of the fingertip segment, can be obtained. The fingertip position R6 can then be calculated from the position of R5, the bending angle c of the fingertip segment, and the actual length of the fingertip segment.
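The two-step chain from R2 to R5 to R6 can be sketched as follows. This is only an illustration under stated assumptions, not the method as claimed: Figure 8 is not reproduced here, so the sketch assumes a planar model in the finger's flexion plane, assumes angle b is measured downward from the x-axis (taken as the direction of the first phalanx), and assumes angle c is measured relative to the second phalanx. The function name `fingertip_position` and its parameters are hypothetical.

```python
import math


def fingertip_position(r2, len_second, len_tip, angle_b_deg, angle_c_deg):
    """Chain two segments from R2 to estimate R5 and R6 in a 2D flexion plane.

    Assumptions (not taken from the source): the x-axis points along the
    first phalanx, bending rotates a segment toward negative y, b is measured
    from the x-axis, and c is measured relative to the second phalanx.
    """
    b = math.radians(angle_b_deg)
    # R5: end of the second phalanx, reached from R2 along direction b.
    r5 = (r2[0] + len_second * math.cos(b),
          r2[1] - len_second * math.sin(b))

    # The fingertip segment bends a further c relative to the second phalanx.
    total = b + math.radians(angle_c_deg)
    # R6: fingertip, reached from R5 along the accumulated direction.
    r6 = (r5[0] + len_tip * math.cos(total),
          r5[1] - len_tip * math.sin(total))
    return r5, r6


# Hypothetical values: R2 at the origin, segment lengths in centimetres.
r5, r6 = fingertip_position((0.0, 0.0), 3.0, 2.0, 30.0, 30.0)
```

In a full 3D setting the same chaining would apply to vectors expressed in the coordinate frame produced by the binocular positioning step; the planar form is used here only to keep the geometry readable.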

Embodiment approach 2

[0082] Embodiment 2: If the bending angle of the finger is 90 degrees, the position of the fingertip is calculated from the position of the starting joint point of the second phalanx and the distance that the first phalanx moves toward the at least one virtual input interface.

[0083] It should be noted that when the bending angle of the finger is 90 degrees, the user's fingertip moves together with the first phalanx. For example, if the first phalanx moves down by 3 cm, the fingertip also moves down by 3 cm. On this basis, the fingertip position is calculated from the known position of the starting joint point of the second phalanx and the known distance that the first phalanx moves toward the at least one virtual input interface. The problem of calculating the fingertip position can thus be transformed into the geometric problem of calculating the end position when the position of the starting point, the moving direction of the starting point and the mo...
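The geometric step of finding an end position from a start point and a movement can be sketched as below. The source text is truncated, so this sketch assumes that the known quantities are the start position, a movement direction, and a movement distance; the function name `translate_point` and the sample coordinates are hypothetical and are not taken from the patent.

```python
import math


def translate_point(start, direction, distance):
    """Return the point reached by moving `distance` from `start` along `direction`.

    `direction` need not be a unit vector; it is normalised here.
    Assumption: the point moves rigidly, as in the 3 cm example above.
    """
    norm = math.sqrt(sum(d * d for d in direction))
    if norm == 0.0:
        raise ValueError("direction must be a non-zero vector")
    return tuple(s + distance * d / norm for s, d in zip(start, direction))


# Hypothetical example: a point 10 cm above a plane moves straight down by 3 cm.
end = translate_point((0.0, 0.0, 10.0), (0.0, 0.0, -1.0), 3.0)
```

Because the fingertip is described as moving in the same way as the first phalanx at a 90-degree bend, the same displacement would be applied to whichever tracked point stands in for the fingertip.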

