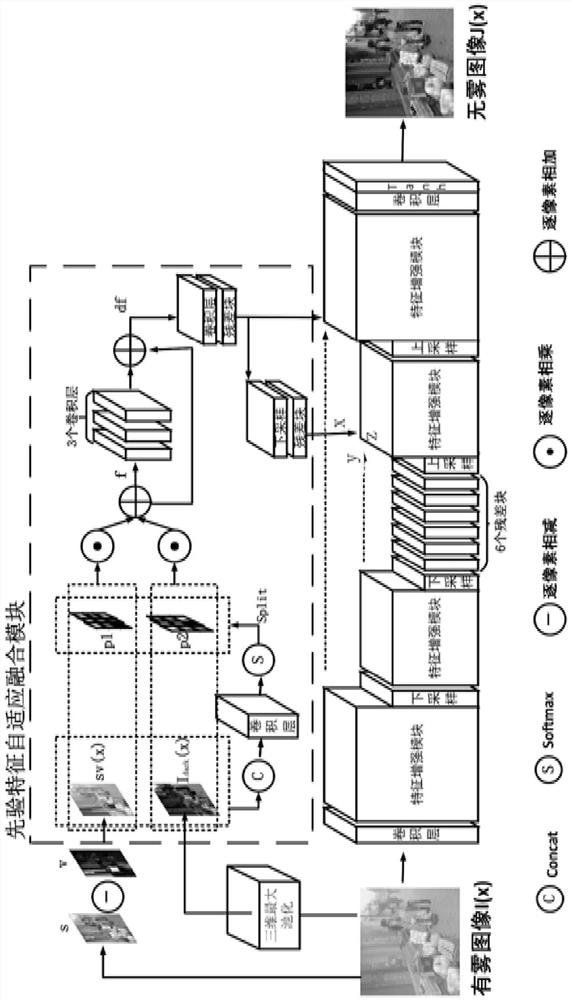

End-to-end image defogging method based on multi-feature fusion

An image-processing technique based on multi-feature fusion, applicable to image enhancement, image data processing, neural network learning methods, and related fields. It addresses limitations in model versatility and effectiveness and improves defogging performance.


AI Technical Summary

Problems solved by technology

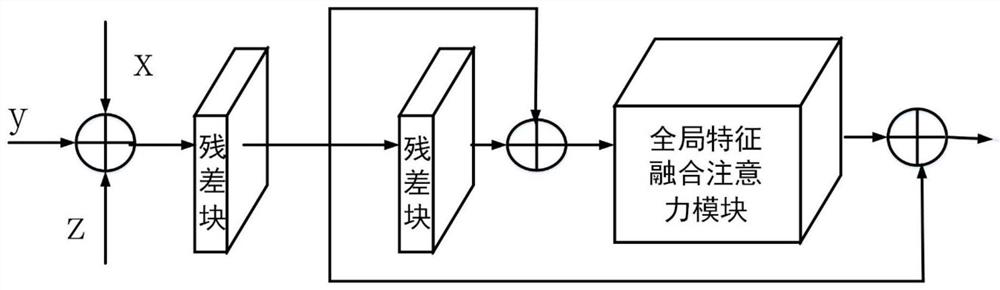

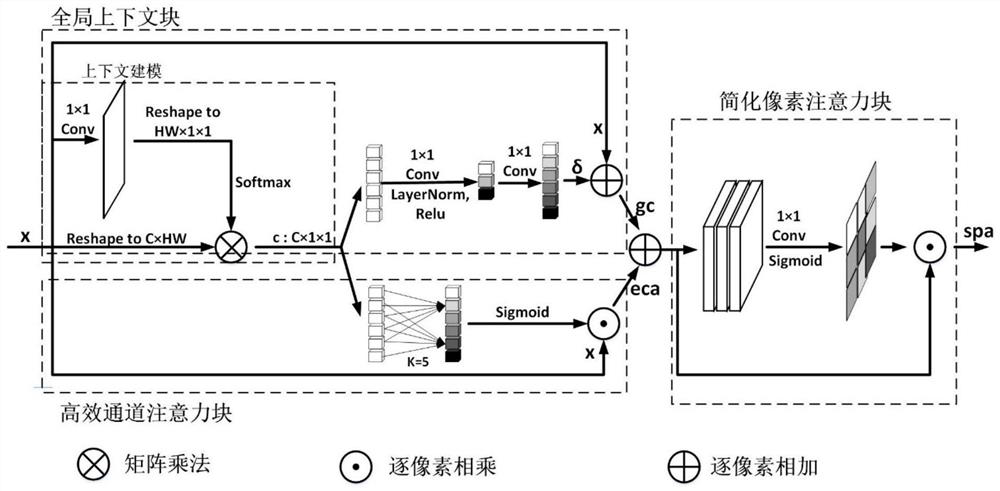


Embodiment Construction

[0088] This embodiment provides an end-to-end image dehazing method based on multi-feature fusion, including the following steps:

[0089] Step 1: obtain the sample dataset:

[0090] (1) Synthetic datasets

[0091] Obtain the dataset used by MSBDN, which is built from the RESIDE dataset with data augmentation. MSBDN selects 9000 outdoor foggy/clear image pairs and 7000 indoor foggy/clear image pairs from the RESIDE training dataset as the training set, after removing redundant images from the same scene. To further augment the training data, each image pair is resized using three random scales in the range [0.5, 1.0], 256×256 image patches are randomly cropped from the foggy images, and the patches are flipped horizontally and vertically before being fed to the model as input. The OTS sub-dataset of the RESIDE dataset is used as the test set; it contains 500 pairs of outdoor synthetic images.
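The augmentation described in [0091] can be sketched roughly as follows. This is a minimal illustration and not the MSBDN or patent implementation: the function names `resize_nearest` and `augment_pair`, the nearest-neighbour interpolation, the 0.5 flip probability, and the clamping of the resized image to at least the patch size are all assumptions made here so the example runs on its own. The same scale, crop window, and flips are applied to the foggy and clear images so that each pair stays aligned.

```python
import numpy as np


def resize_nearest(img, out_h, out_w):
    """Nearest-neighbour resize of an H x W x C array (interpolation is an assumption)."""
    h, w = img.shape[:2]
    rows = np.arange(out_h) * h // out_h  # source row for each output row
    cols = np.arange(out_w) * w // out_w  # source column for each output column
    return img[rows][:, cols]


def augment_pair(hazy, clear, rng, patch=256, n_scales=3, scale_range=(0.5, 1.0)):
    """Return n_scales aligned (hazy, clear) patches of size patch x patch."""
    assert hazy.shape == clear.shape, "foggy and clear images must be aligned"
    h, w = hazy.shape[:2]
    patches = []
    for _ in range(n_scales):
        # Random scale in [0.5, 1.0]; clamp so a full patch always fits (assumption).
        s = rng.uniform(*scale_range)
        new_h = max(patch, int(round(h * s)))
        new_w = max(patch, int(round(w * s)))
        hz = resize_nearest(hazy, new_h, new_w)
        cl = resize_nearest(clear, new_h, new_w)

        # Random crop window, shared by both images.
        top = int(rng.integers(0, new_h - patch + 1))
        left = int(rng.integers(0, new_w - patch + 1))
        hz = hz[top:top + patch, left:left + patch]
        cl = cl[top:top + patch, left:left + patch]

        # Random horizontal and vertical flips (probability 0.5 each is an assumption).
        if rng.random() < 0.5:
            hz, cl = hz[:, ::-1], cl[:, ::-1]
        if rng.random() < 0.5:
            hz, cl = hz[::-1], cl[::-1]

        patches.append((np.ascontiguousarray(hz), np.ascontiguousarray(cl)))
    return patches
```

For example, calling `augment_pair(hazy, clear, np.random.default_rng(0))` on a 480×640 RGB pair yields three aligned 256×256×3 patch pairs. A real pipeline would more likely use a library resize with bilinear or bicubic interpolation.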

[0092] (2) Real-world datasets

[0093] Obtain the O-HAZE dataset from the NTIRE2018 Dehazing Challenge and the NH-HAZE datas...
