Apparatus for memory communication during runahead execution

A technology relating to memory and runahead instructions, applied in the field of processor architecture, that can solve problems such as unavailability


Embodiment Construction

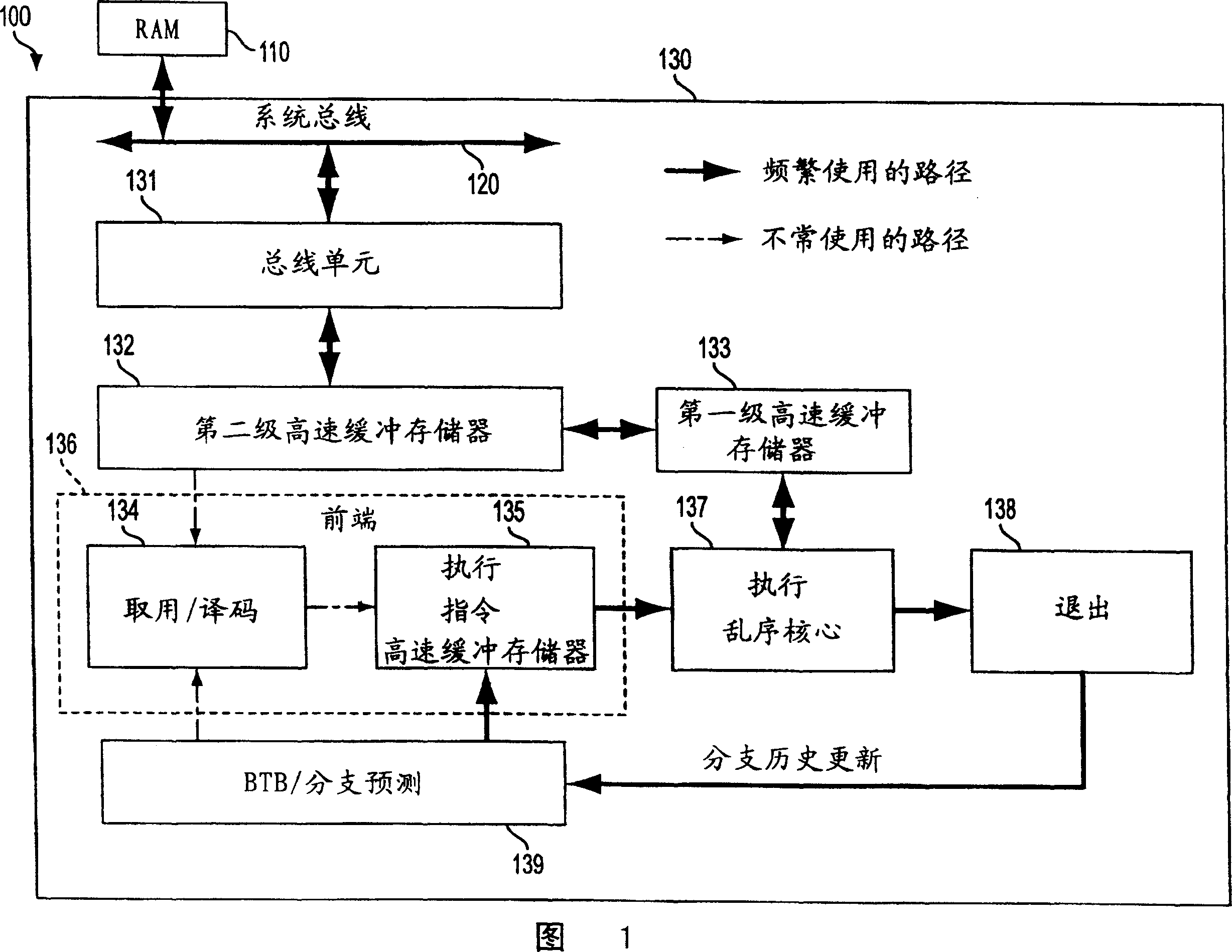

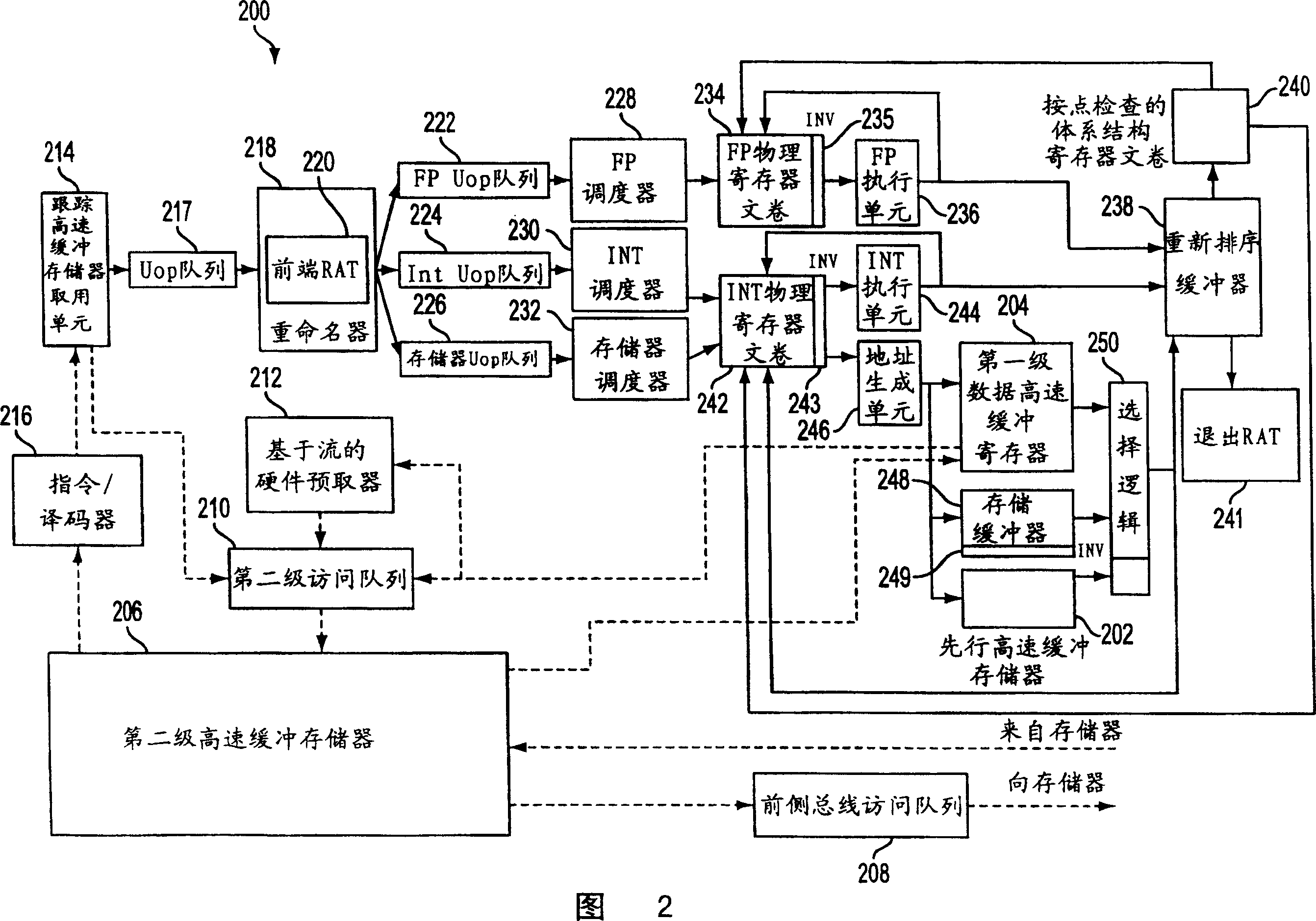

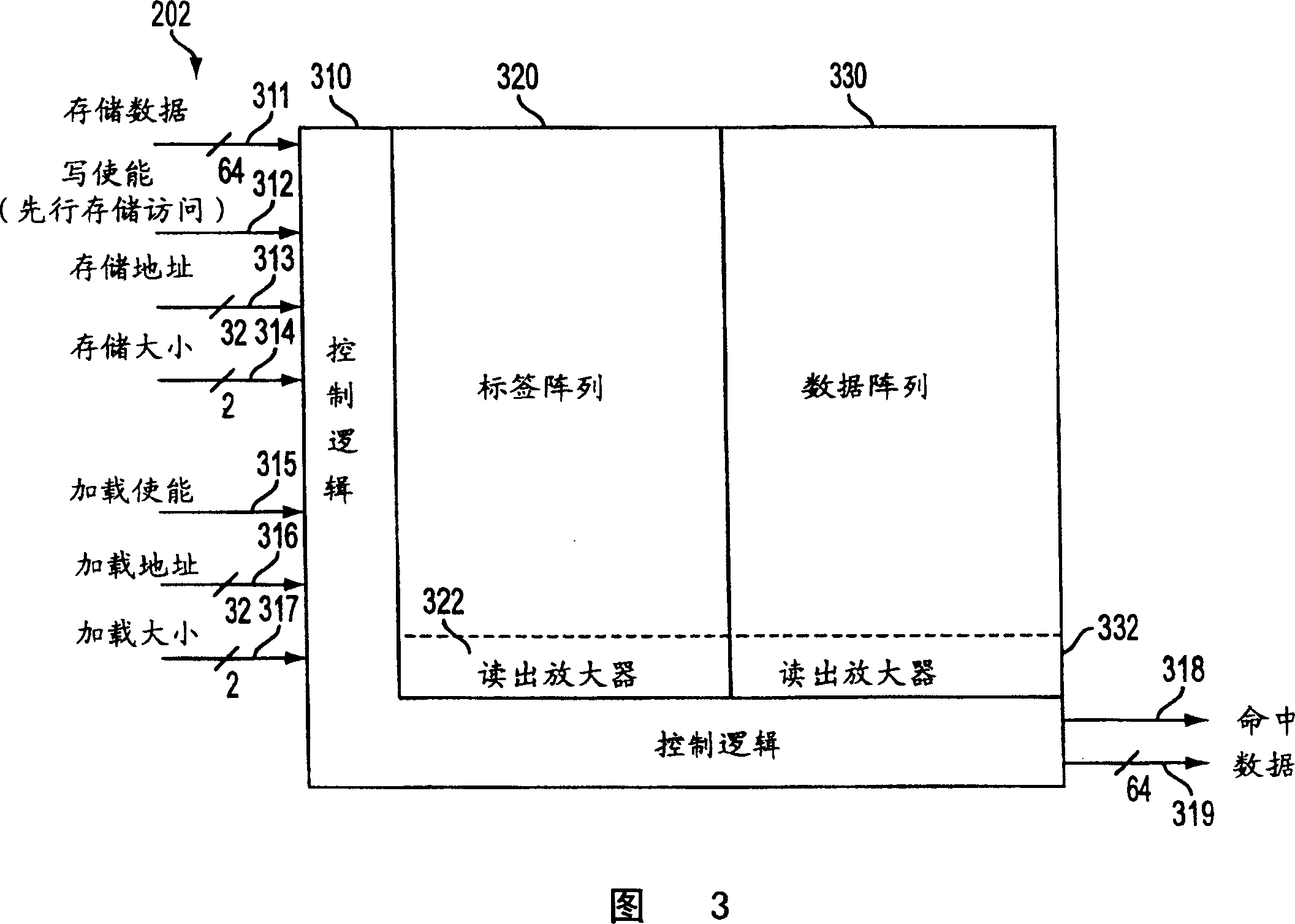

[0030] According to one embodiment of the present invention, runahead execution may be used to tolerate very long-latency operations instead of building a larger instruction window. Rather than making the long-latency operation "non-blocking" (which requires buffering it and the instructions that follow it in the instruction window), runahead execution on an out-of-order processor can simply remove it from the instruction window.
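The contrast in [0030] can be illustrated with a toy model. This is a minimal Python sketch under stated assumptions, not the patented design: the function `younger_insns_processed`, its parameters `window_size` and `miss_latency`, the one-fetch-per-cycle rate, and single-cycle completion of every non-blocking instruction are all hypothetical simplifications. It counts how many instructions younger than the blocking load enter the window while the load is outstanding.

```python
from collections import deque

BLOCKING = "long-latency load"  # hypothetical marker for the blocking operation


def younger_insns_processed(window_size: int, miss_latency: int, runahead: bool) -> int:
    """Count instructions younger than the blocking load that enter the
    instruction window during the load's miss_latency cycles.

    Hypothetical simplifications: one instruction is fetched per cycle, and
    every non-blocking instruction completes in a single cycle.
    """
    window = deque([BLOCKING])  # the blocking load sits at the head of the window
    fetched = 0
    for _ in range(miss_latency):
        if window:
            if window[0] != BLOCKING:
                # Pseudo-retire a completed younger instruction
                # (only reachable in runahead mode in this model).
                window.popleft()
            elif runahead:
                # Runahead: drop the blocking op from the window with a bogus result.
                window.popleft()
            # Otherwise the blocking op cannot retire, and in-order
            # retirement keeps everything behind it stuck in the window.
        if len(window) < window_size:
            window.append(f"insn{fetched}")  # fetch one younger instruction
            fetched += 1
    return fetched
```

With the hypothetical values `window_size=4` and `miss_latency=10`, the conventional window admits only 3 younger instructions before it fills and stalls, whereas the runahead variant keeps processing one instruction per cycle for all 10 cycles. The only way to raise the conventional count is a larger `window_size`, which is the cost [0030] proposes to avoid.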

[0031] According to one embodiment of the present invention, when the instruction window is blocked by a long-latency operation, the state of the architectural register file can be checkpointed. The processor may then enter "runahead mode", issue a bogus (i.e., invalid) result for the blocking operation, and drop the blocking operation from the instruction window. The instructions following the blocking operation can then be fetched, executed, and pseudo-retired from the instruction window. "Pseudo-retirement" ...
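The steps listed in [0031] (checkpoint the architectural register file, enter runahead mode, issue an invalid result for the blocking operation, pseudo-retire younger instructions) can be sketched as a small Python model. Several parts are assumptions borrowed from the general runahead technique rather than taken from this excerpt, which is truncated: the per-register invalid set used to propagate the bogus result to dependent instructions, the `exit_runahead` step that restores the checkpoint once the blocking operation's data returns, and all class, method, and register names, which are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Optional, Set


@dataclass
class RunaheadCore:
    """Hypothetical toy core; not a cycle-accurate or patented design."""
    regs: Dict[str, int]                                  # architectural register file
    inv: Set[str] = field(default_factory=set)            # registers holding invalid (bogus) values
    checkpoint: Optional[Dict[str, int]] = None           # saved architectural state
    runahead: bool = False

    def enter_runahead(self, blocking_dest: str) -> None:
        """The window is blocked by a long-latency op that writes blocking_dest."""
        self.checkpoint = dict(self.regs)  # checkpoint the architectural register file
        self.runahead = True
        self.inv.add(blocking_dest)        # bogus (invalid) result; the op leaves the window

    def pseudo_retire(self, dest: str, srcs: List[str], op: Callable[..., int]) -> None:
        """Execute a younger instruction and pseudo-retire it."""
        if any(s in self.inv for s in srcs):
            # Assumption: a result computed from an invalid input is itself invalid.
            self.inv.add(dest)
        else:
            self.regs[dest] = op(*(self.regs[s] for s in srcs))
            self.inv.discard(dest)

    def exit_runahead(self) -> None:
        """Assumption: when the blocking op's data returns, restore the checkpoint."""
        if self.checkpoint is None:
            raise RuntimeError("not in runahead mode")
        self.regs = self.checkpoint  # every register result produced in runahead is discarded
        self.checkpoint = None
        self.inv.clear()
        self.runahead = False
```

For example, starting from `{"r1": 5, "r2": 0, "r3": 0, "r4": 0}` and calling `enter_runahead("r2")` for a missing load into `r2`, an independent `r3 = r1 + 1` still computes 6, while a dependent `r4 = r2 + r1` is only marked invalid. Calling `exit_runahead()` then returns every register to its checkpointed value, so pseudo-retired results never become architecturally visible.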

