31 results about "Runahead" patented technology

Runahead is a technique that allows a microprocessor to pre-process instructions during cache-miss cycles instead of stalling. Idle execution resources calculate instruction and data fetch addresses from the available information that is independent of the cache miss. Later cache misses are thereby detected before they would otherwise occur, and the pre-processed instructions are used to generate instruction and data stream prefetches.
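The description above can be illustrated with a toy model. The sketch below is not taken from any of the listed patents: the `Instr` format, the register names, and the `run_ahead` function are hypothetical, and the cache is simplified to a set of addresses. It shows only the core idea, namely that results depending on the missing load are treated as invalid while independent load addresses are computed and prefetched.

```python
from dataclasses import dataclass

@dataclass
class Instr:
    op: str       # "load" or "add" (hypothetical two-instruction ISA)
    dst: str      # destination register
    src: str      # base register (load) or source operand (add)
    imm: int = 0  # address offset (load) or immediate addend (add)

def run_ahead(program, miss_index, regs, memory, cache, window=8):
    """Pre-process up to `window` instructions after the load that missed.

    Returns the addresses prefetched. `regs` is copied, so the caller's
    architectural register state is left untouched.
    """
    regs = dict(regs)
    invalid = {program[miss_index].dst}  # the missing load's result is unknown
    prefetched = []
    for ins in program[miss_index + 1 : miss_index + 1 + window]:
        if ins.src in invalid:
            invalid.add(ins.dst)         # depends on missing data: propagate
            continue
        if ins.op == "load":
            addr = regs[ins.src] + ins.imm
            if addr not in cache:
                prefetched.append(addr)  # a future miss, found early
                cache.add(addr)
                invalid.add(ins.dst)     # its data has not arrived yet either
                continue
            regs[ins.dst] = memory.get(addr, 0)
        else:
            regs[ins.dst] = regs[ins.src] + ins.imm
        invalid.discard(ins.dst)         # destination now holds a valid value
    return prefetched

program = [
    Instr("load", "r1", "r0", 0),   # assumed to miss and trigger runahead
    Instr("add", "r2", "r1", 4),    # depends on r1, so it is skipped
    Instr("load", "r3", "r2", 0),   # address depends on r2, so it is skipped
    Instr("add", "r4", "r0", 64),   # independent of the miss
    Instr("load", "r5", "r4", 0),   # independent address, gets prefetched
]
print(run_ahead(program, 0, {"r0": 100}, {}, set()))
```

Only the last load is prefetched here, because it is the only load whose address can be computed without the missing data.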

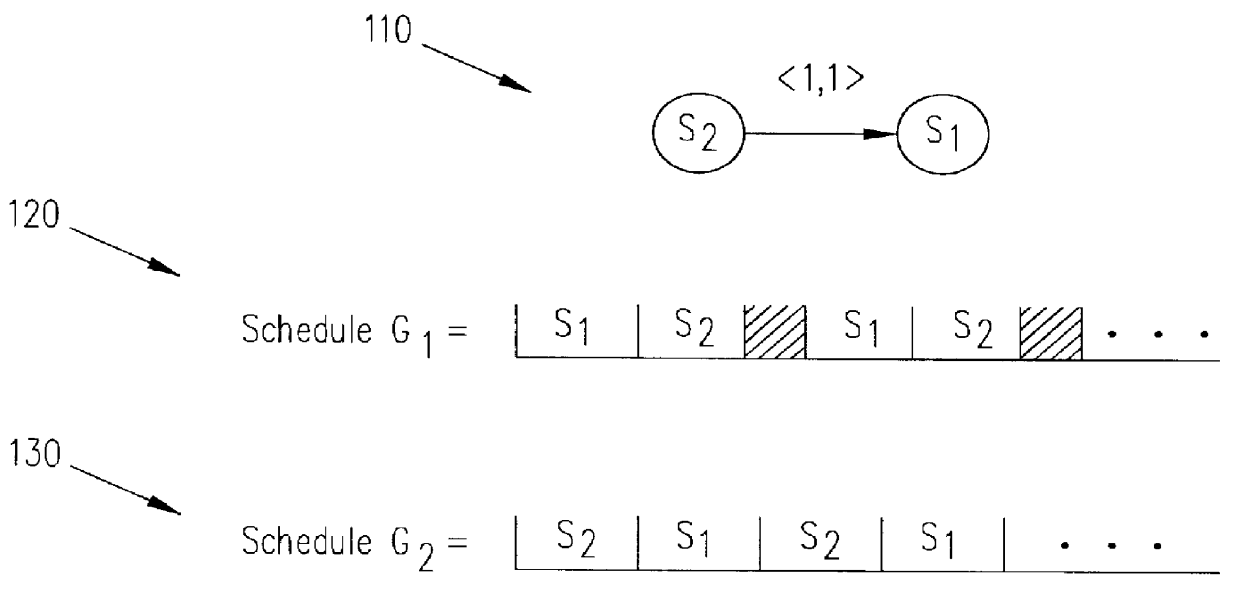

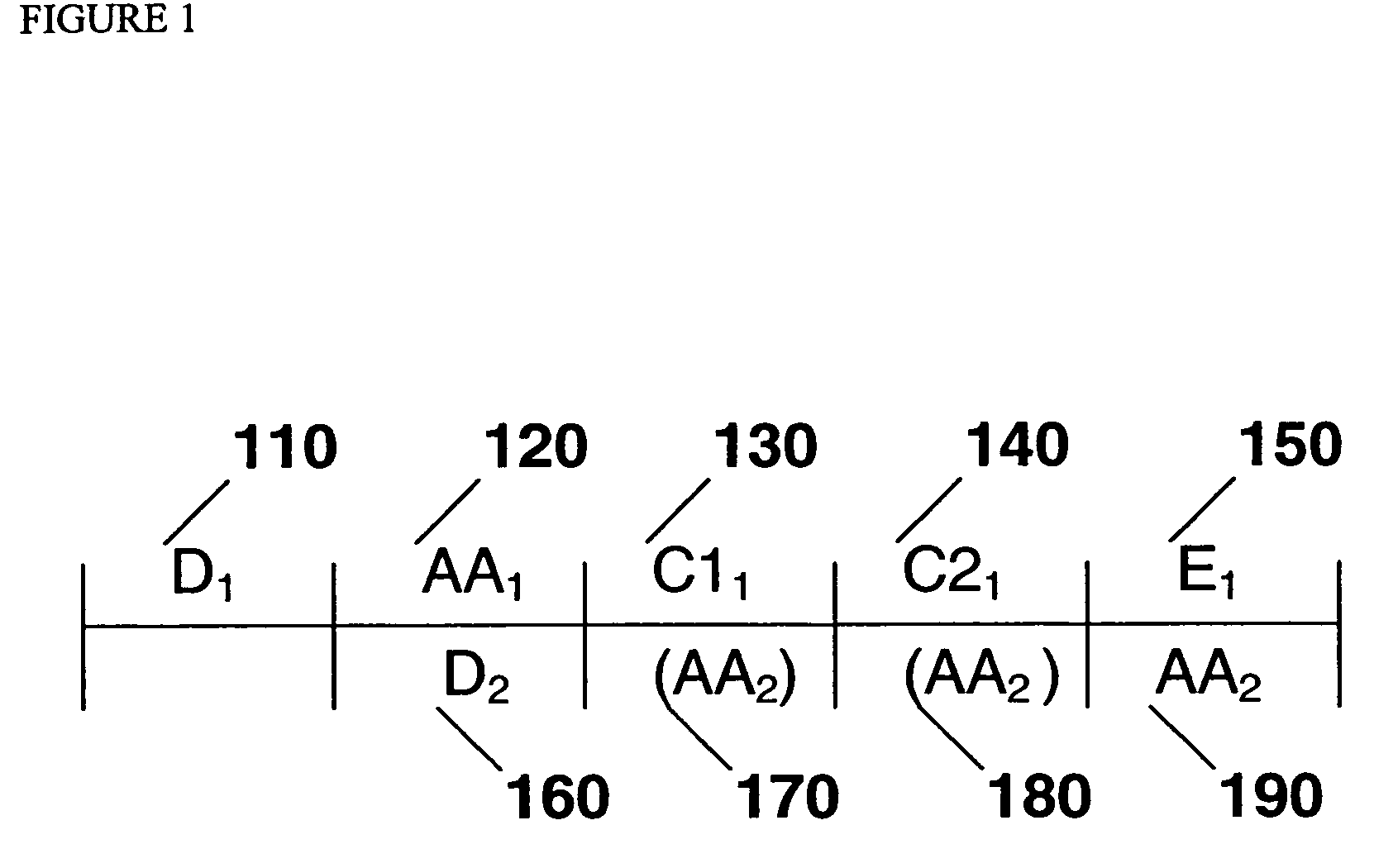

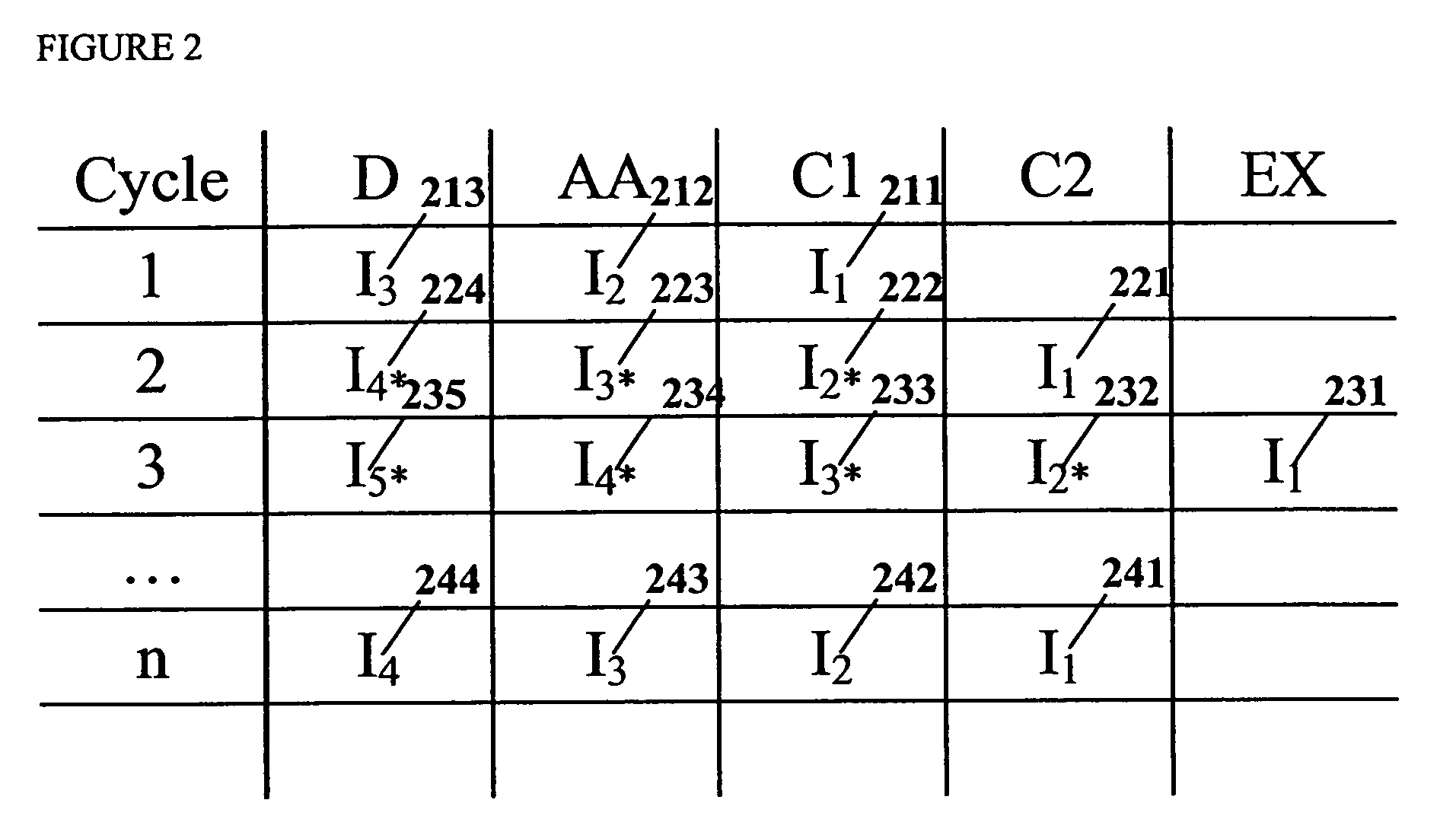

System, method, and program product for loop instruction scheduling hardware lookahead

Inactive | US6044222A | Software engineering | Digital computer details | Scheduling instructions | Computerized system

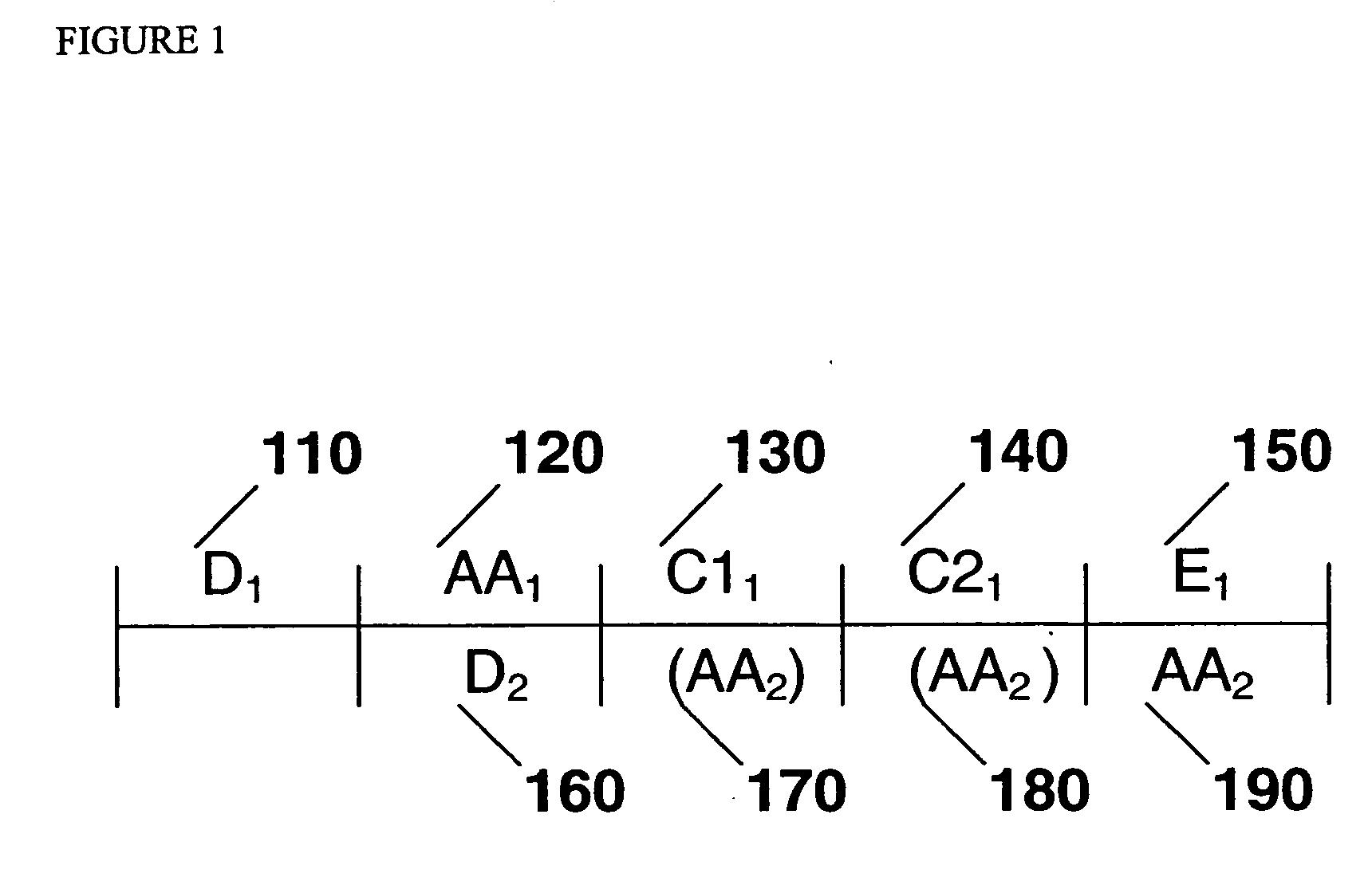

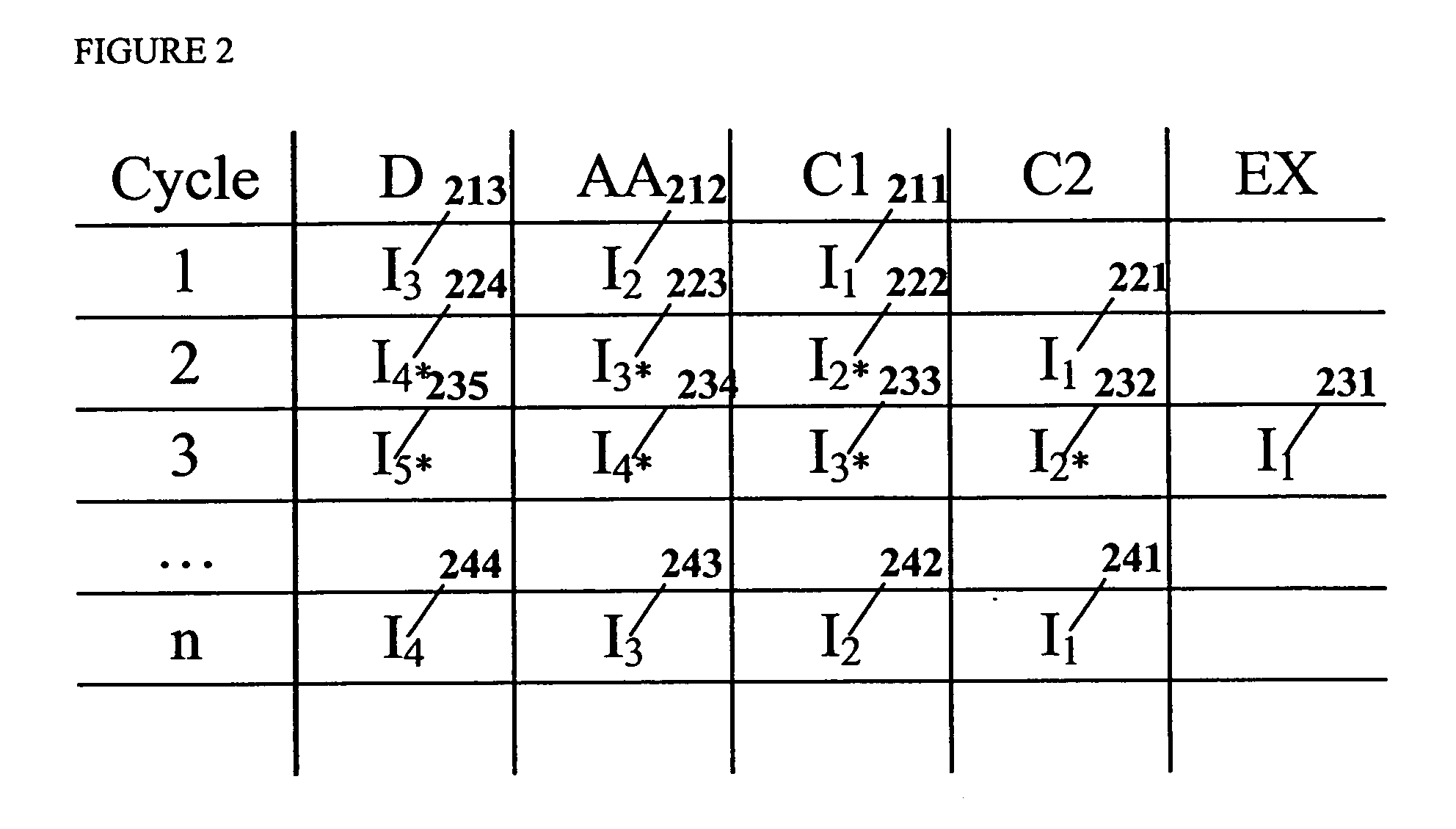

Improved scheduling of instructions within a loop for execution by a computer system having hardware lookahead is provided. A dependence graph is constructed which contains all the nodes of a dependence graph corresponding to the loop, but which only contains loop-independent dependence edges. A start node simulating a previous iteration of the loop may be added to the dependence graph, and an end node simulating a next iteration of the loop may also be added to the dependence graph. A loop-independent edge between a source node and the start node is added to the dependence graph, and a loop-independent edge between a sink node and the end node is added to the dependence graph. Loop-carried edges which satisfy a computed lower bound on the time required for a single loop iteration are eliminated from the dependence graph, and loop-carried edges which do not satisfy the computed lower bound are replaced by a pair of loop-independent edges. Instructions may be scheduled for execution based on the dependence graph.

Owner:IBM CORP

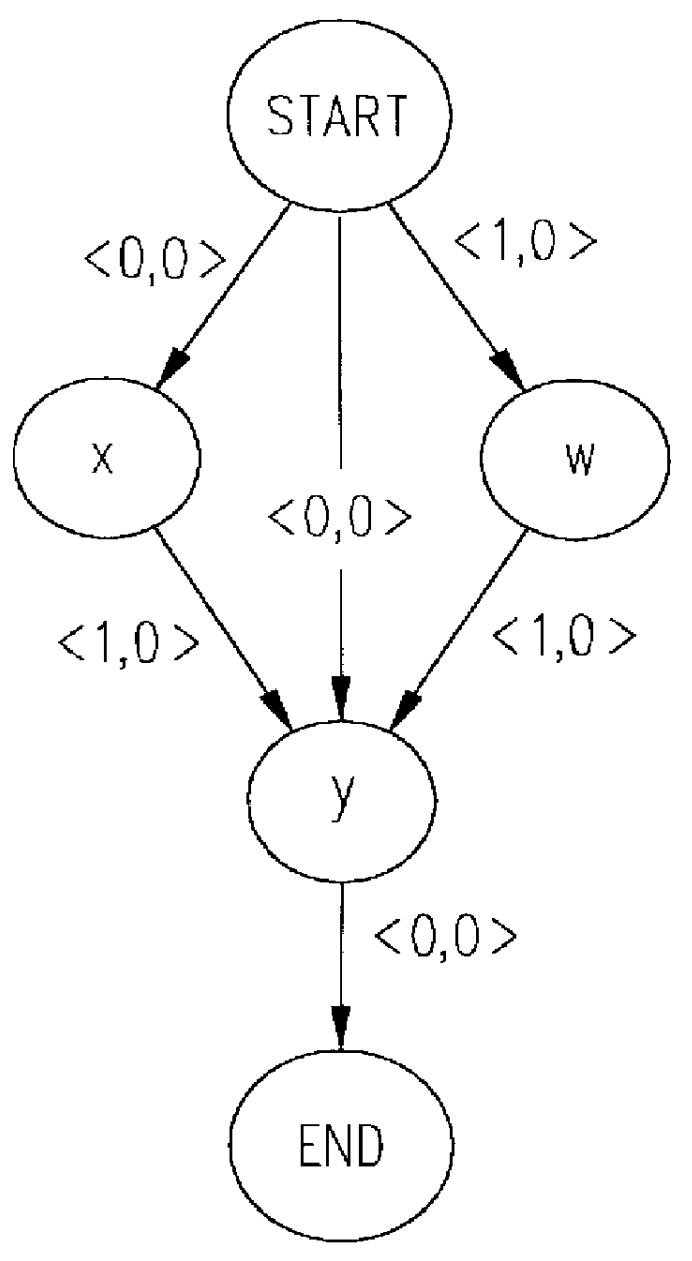

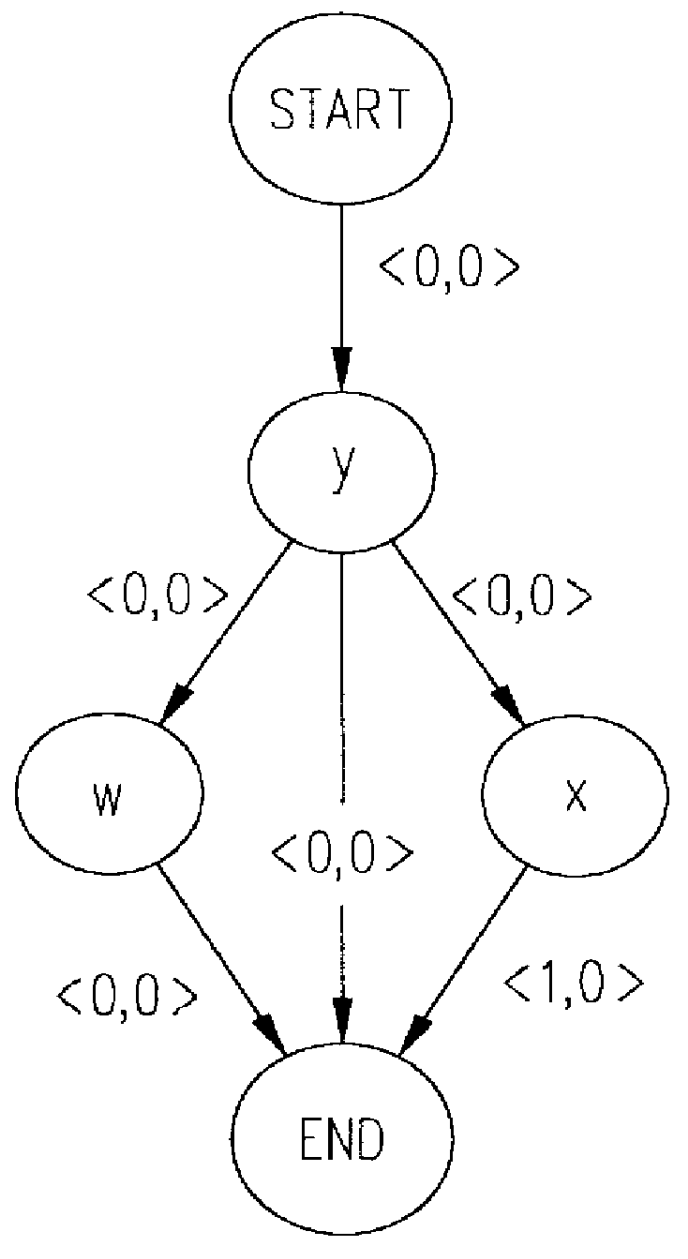

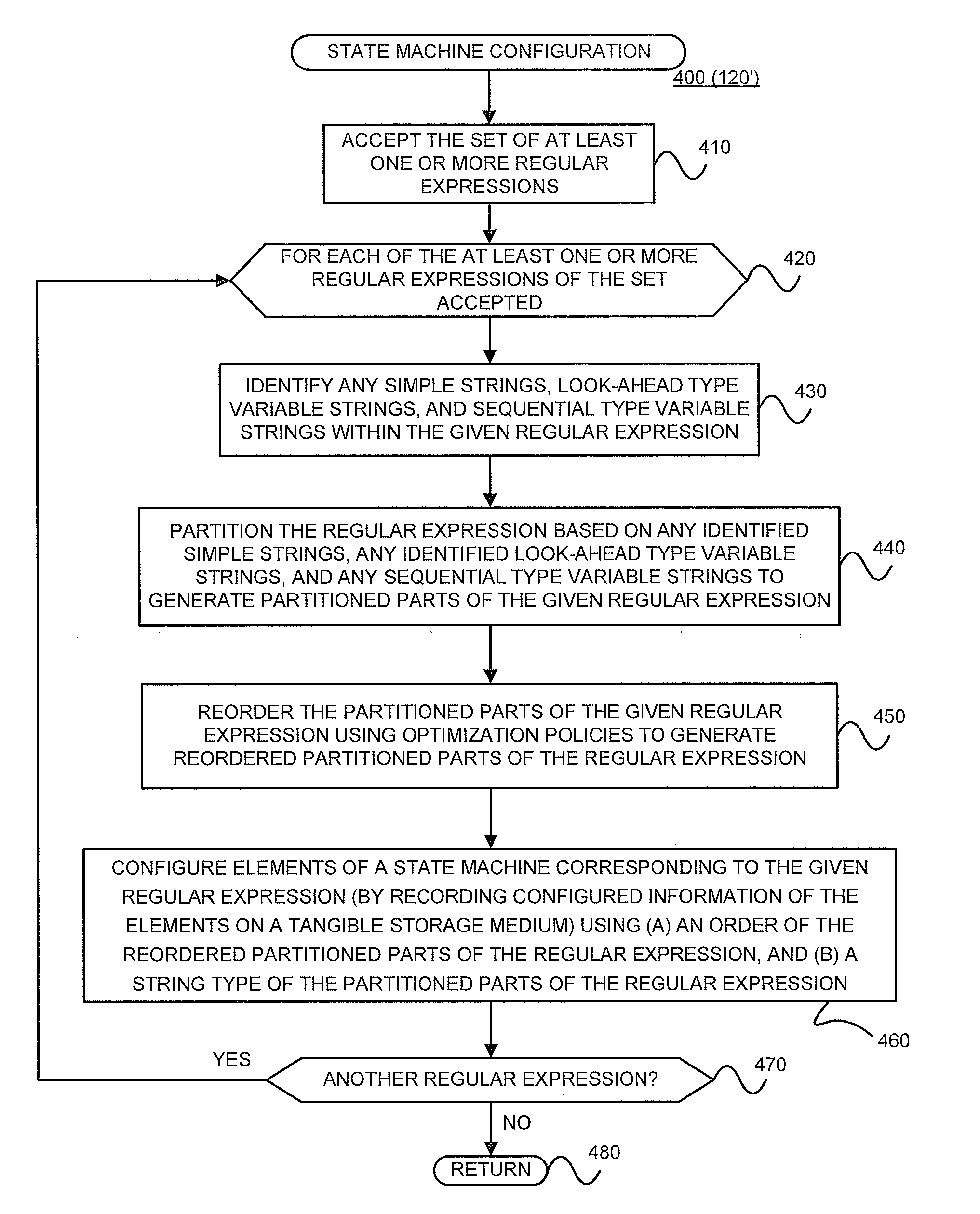

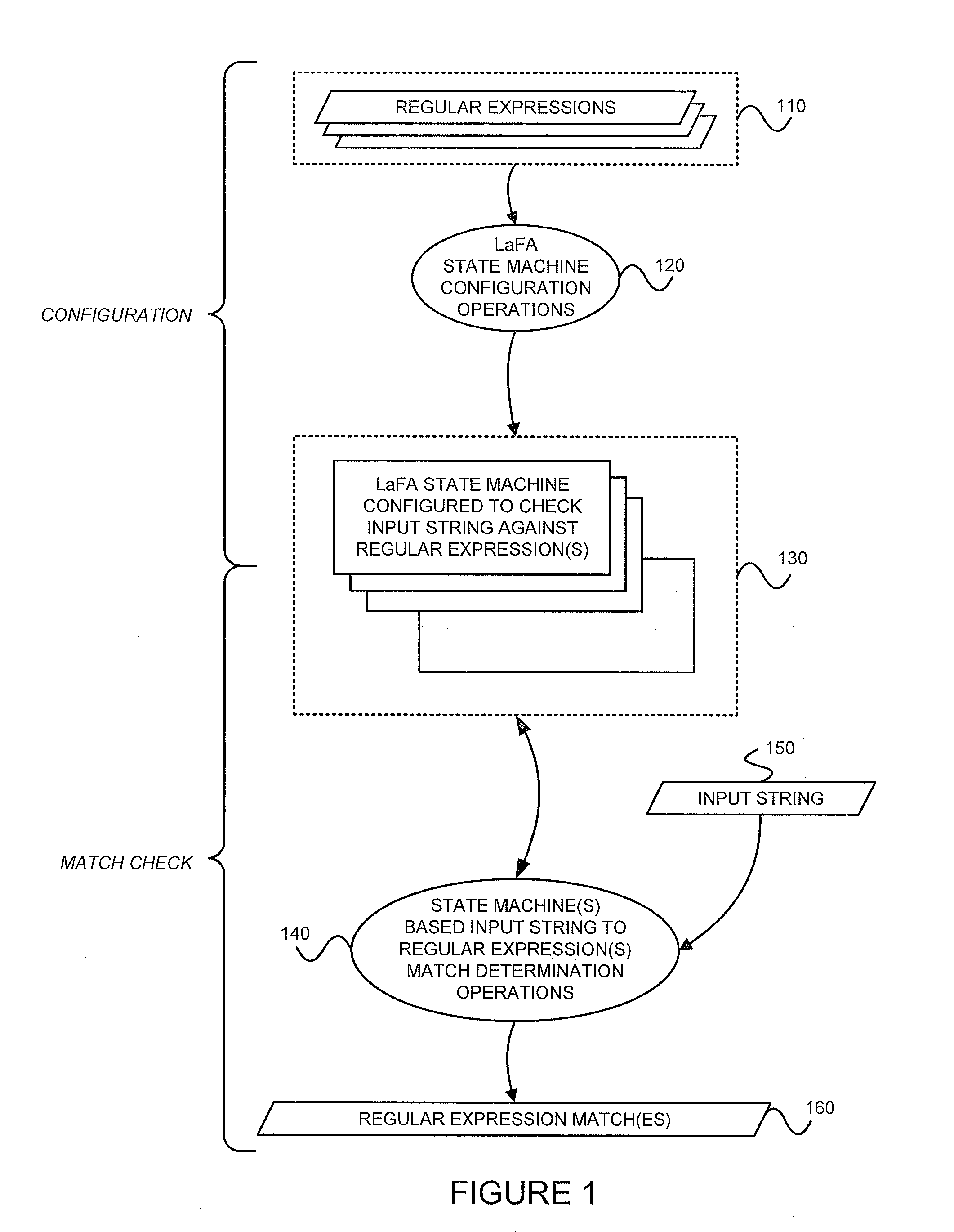

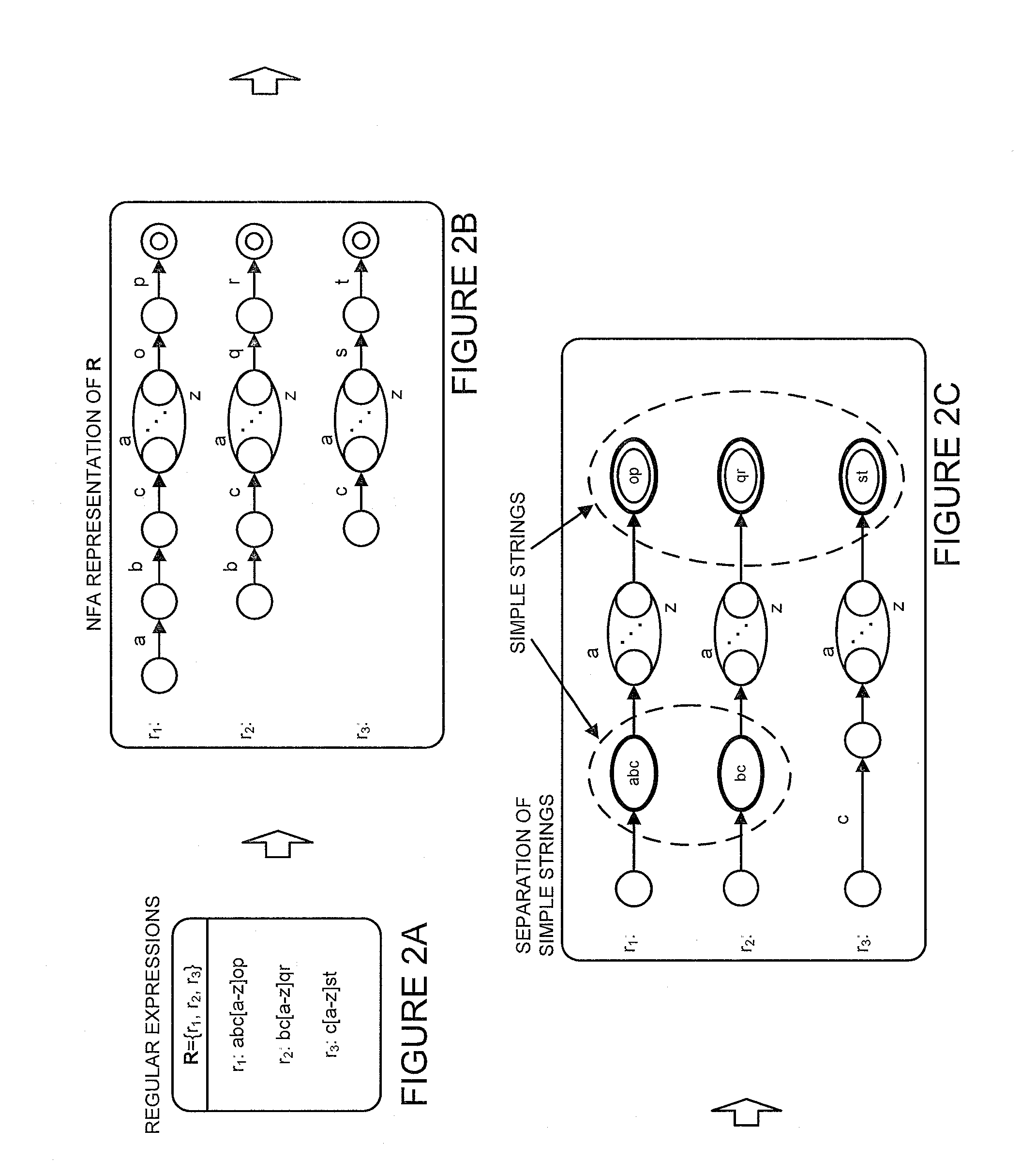

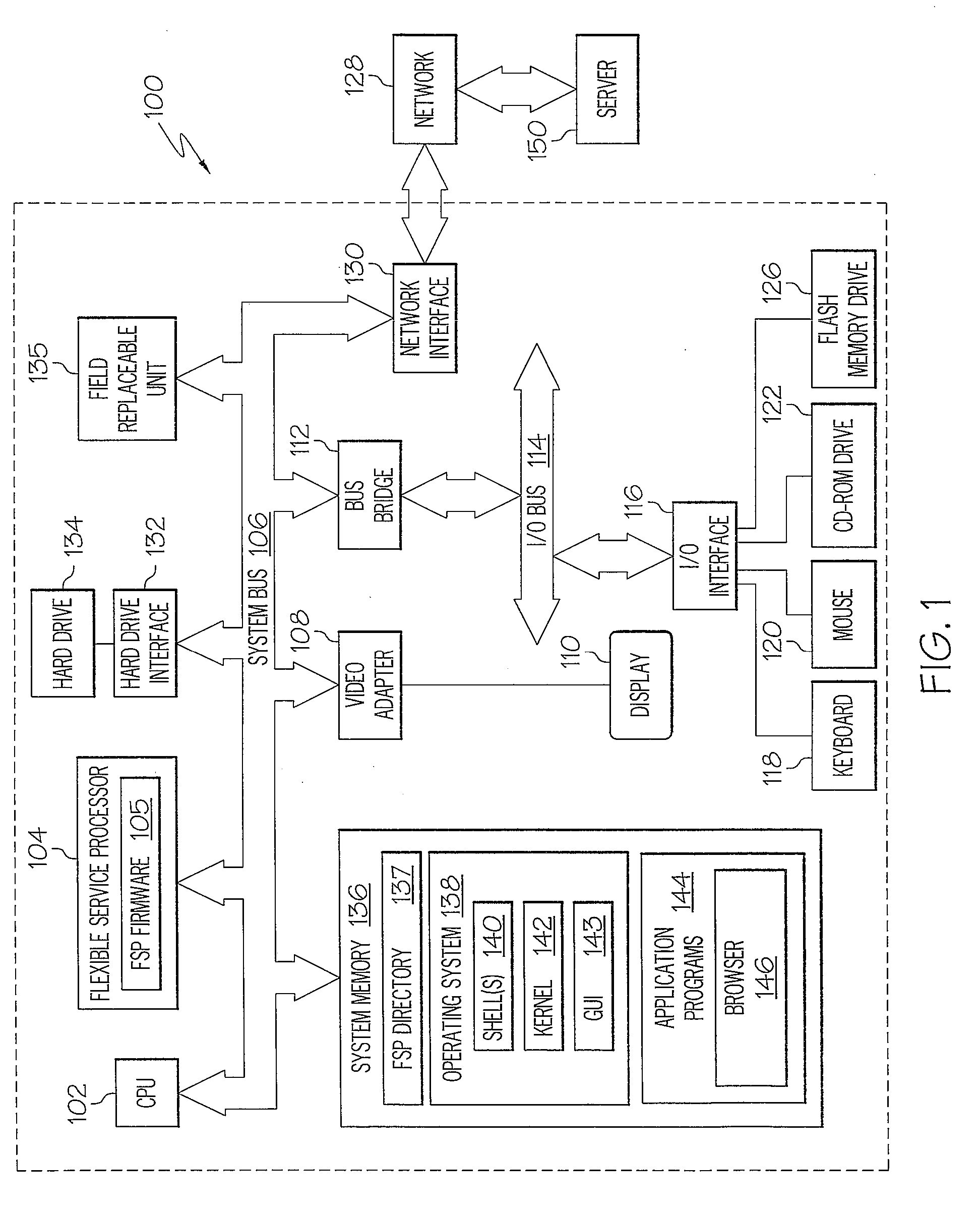

Configuring state machines used to order and select matching operations for determining whether an input string matches any of at least one regular expression using lookahead finite automata based regular expression detection

Inactive | US20110093484A1 | Less memory | Small memory requirement | Digital data information retrieval | Digital data processing details | NODAL | Automaton

State machines used to order and select matching operations for determining whether an input string matches any of at least one regular expression are configured by (1) accepting the set of regular expression(s), and (2) for each regular expression of the accepted set, (A) identifying any look-ahead type strings within the given regular expression, (B) identifying any sequential type strings within the given regular expression, (C) partitioning the regular expression based on any identified simple strings, any identified look-ahead type variable strings, and any identified sequential type variable strings to generate partitioned parts of the given regular expression, (D) reordering the partitioned parts of the given regular expression using optimization policies to generate reordered partitioned parts of the regular expression, and (E) configuring nodes of a state machine corresponding to the given regular expression, by recording configured information of the nodes on a tangible storage medium, using (i) an order of the reordered partitioned parts of the regular expression, and (ii) a string type of the partitioned parts of the regular expression. Once configured, the state machines may accept an input string and, for each of the regular expression(s), check for a match between the accepted input string and the given regular expression using the configured nodes of the corresponding state machine. Checking for a match in this way may include (1) checking detection events from a simple string detector, (2) submitting queries to identified modules of a variable string detector, and (3) receiving detection events from the identified modules of the variable string detector.

Owner:POLYTECHNIC INSTITUTE OF NEW YORK UNIVERSITY

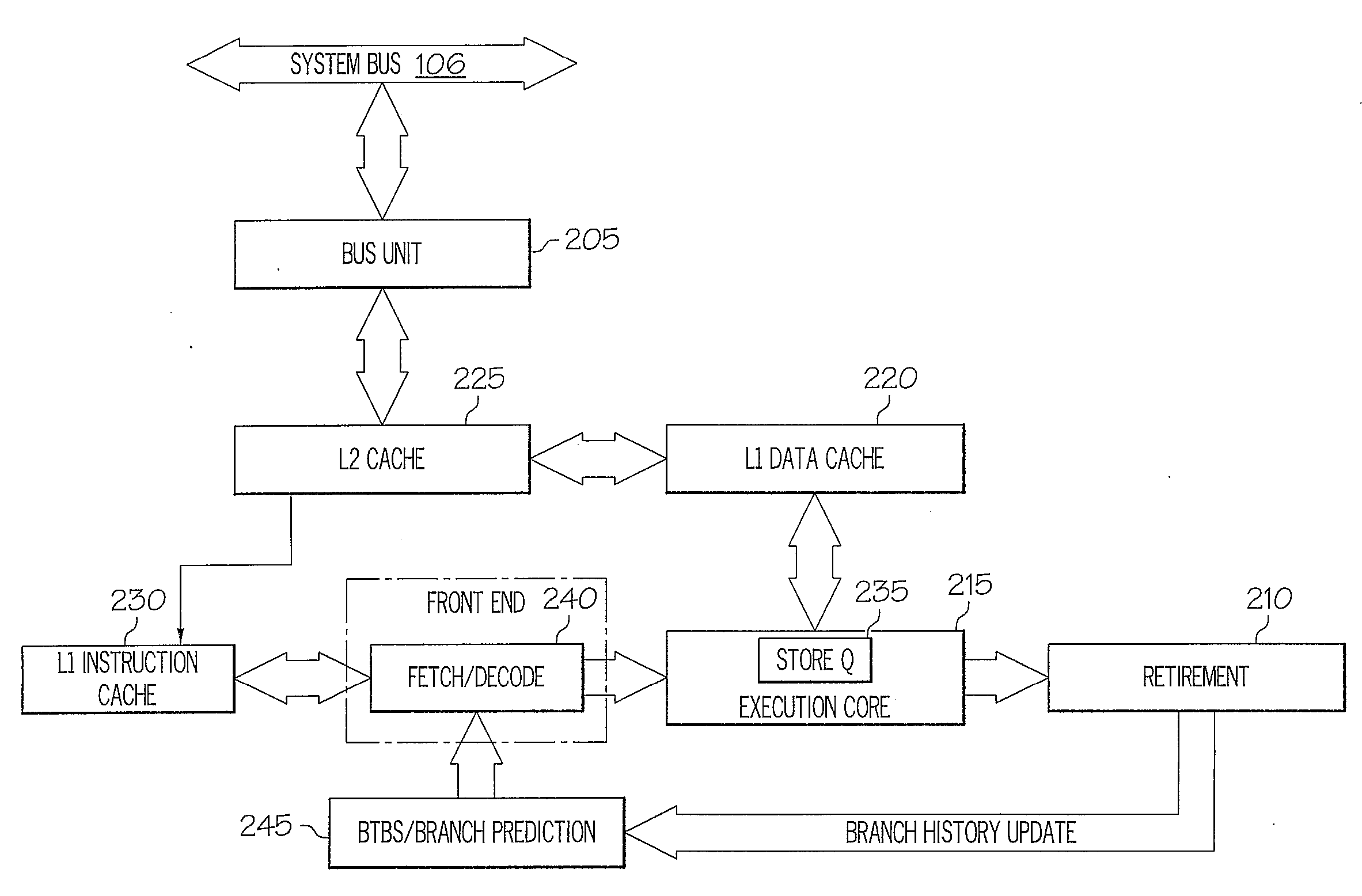

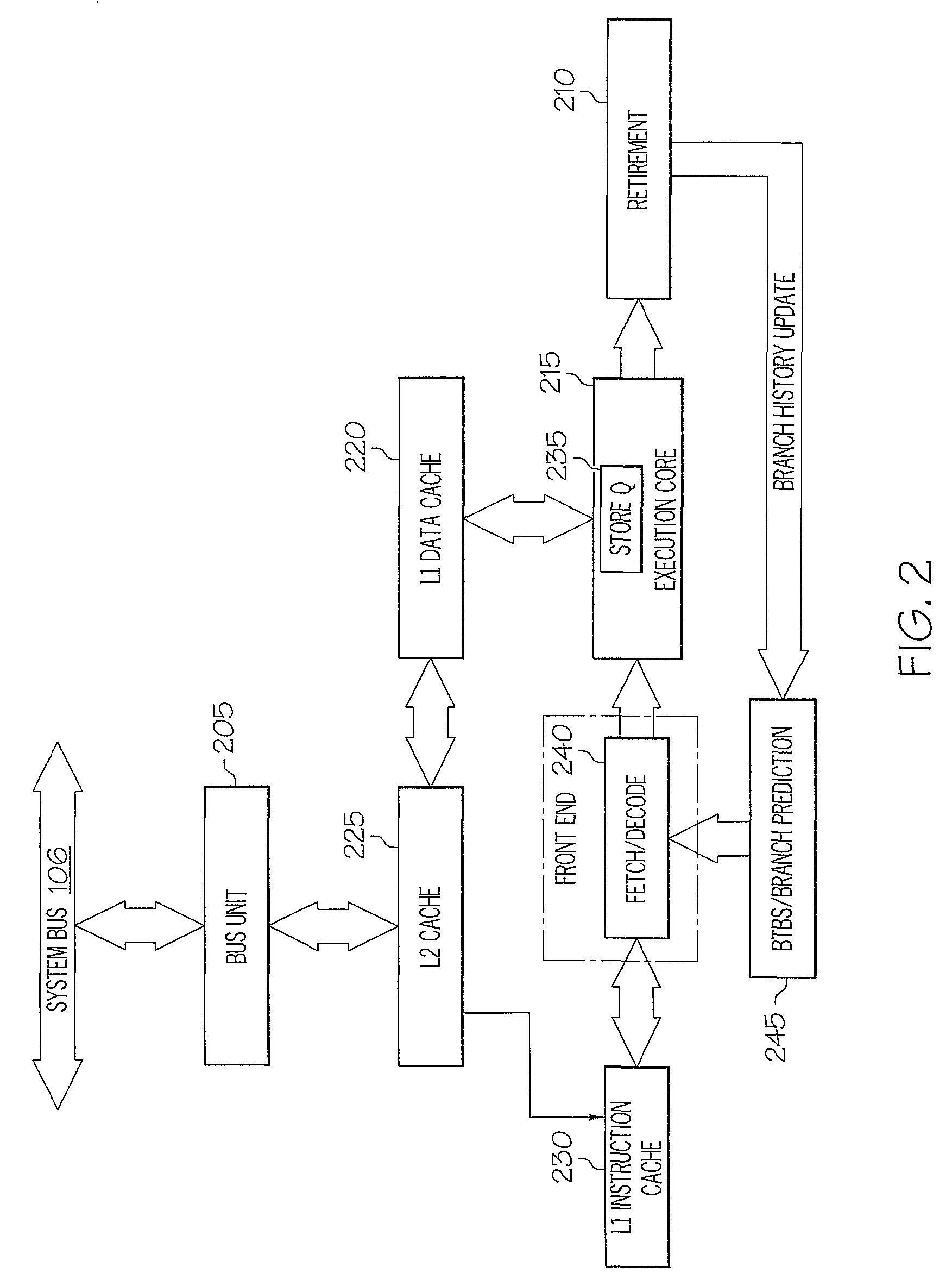

Store-to-load forwarding mechanism for processor runahead mode operation

Inactive | US20100199045A1 | Memory addressing/allocation/relocation | Digital computer details | Load instruction | Parallel computing

A system and method to optimize runahead operation for a processor without use of a separate explicit runahead cache structure. Rather than simply dropping store instructions in a processor runahead mode, store instructions write their results in an existing processor store queue, although store instructions are not allowed to update processor caches and system memory. Use of the store queue during runahead mode to hold store instruction results allows more recent runahead load instructions to search retired store queue entries in the store queue for matching addresses to utilize data from the retired, but still searchable, store instructions. Retired store instructions could be either runahead store instructions retired, or retired store instructions that executed before entering runahead mode.

Owner:IBM CORP
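The store-to-load forwarding idea in the abstract above can be sketched in a few lines. This is an illustrative model only, not the patented design: the `StoreQueue` class, its capacity, and the `runahead_load` helper are assumptions made for the example. Runahead stores write into the store queue and never into the cache, and a later runahead load searches the queue, youngest entry first, before falling back to the cache.

```python
from collections import deque

class StoreQueue:
    """Bounded queue of (address, value) pairs; the oldest entry is dropped when full."""

    def __init__(self, capacity=8):
        self.entries = deque(maxlen=capacity)

    def store(self, addr, value):
        # A runahead store records its result here and nowhere else.
        self.entries.append((addr, value))

    def forward(self, addr):
        # Search from youngest to oldest so the most recent store wins.
        for entry_addr, value in reversed(self.entries):
            if entry_addr == addr:
                return True, value
        return False, None

def runahead_load(addr, store_queue, cache):
    """Return forwarded store data if available, otherwise the cached value."""
    hit, value = store_queue.forward(addr)
    return value if hit else cache.get(addr)

cache = {0x10: 99, 0x20: 7}
sq = StoreQueue(capacity=4)
sq.store(0x10, 1)
sq.store(0x10, 2)
print(runahead_load(0x10, sq, cache), runahead_load(0x20, sq, cache), cache[0x10])
```

Keeping the data in an existing queue rather than a separate runahead cache is the point of the abstract; the bounded `deque` stands in for that fixed-size hardware structure.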

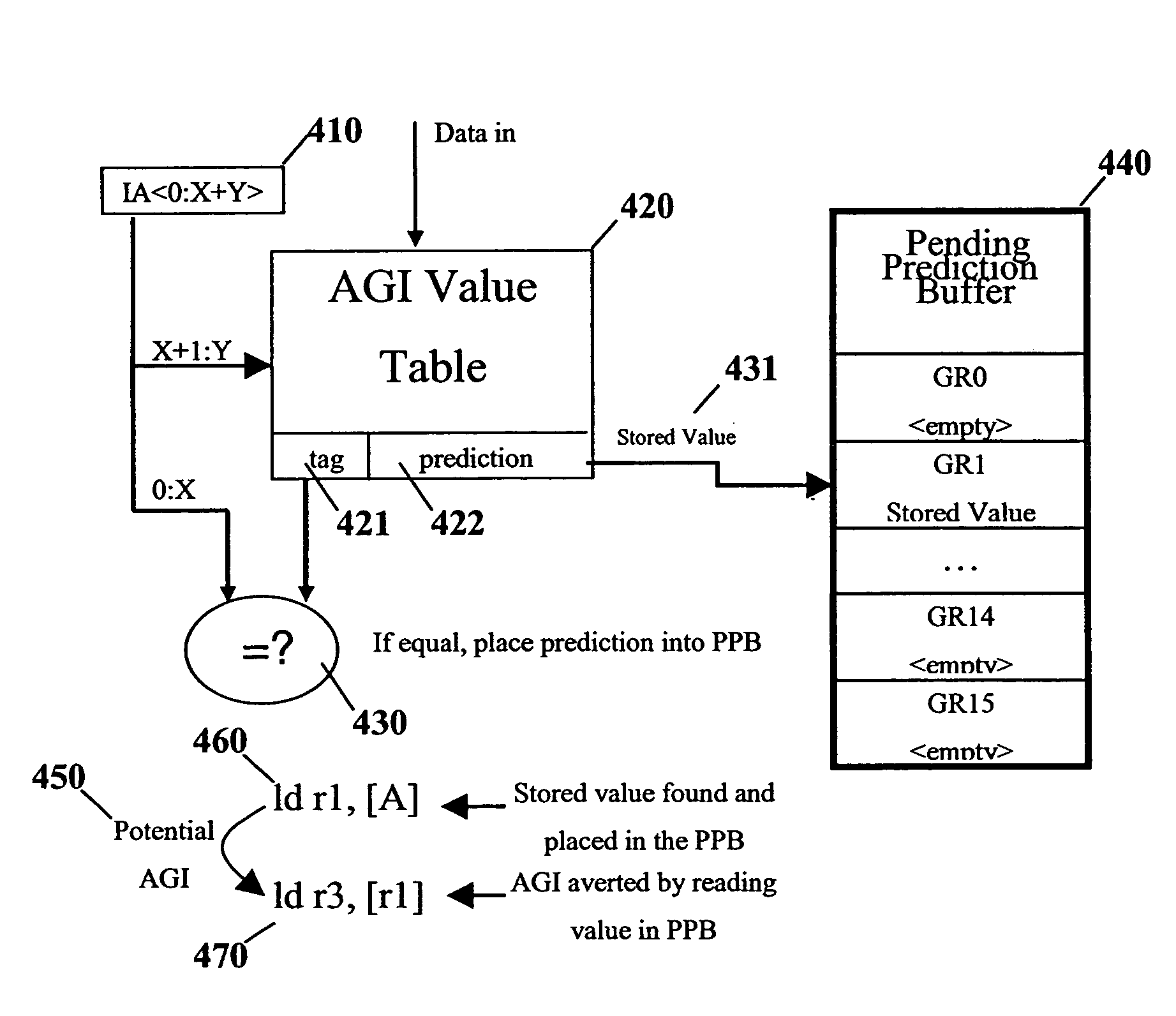

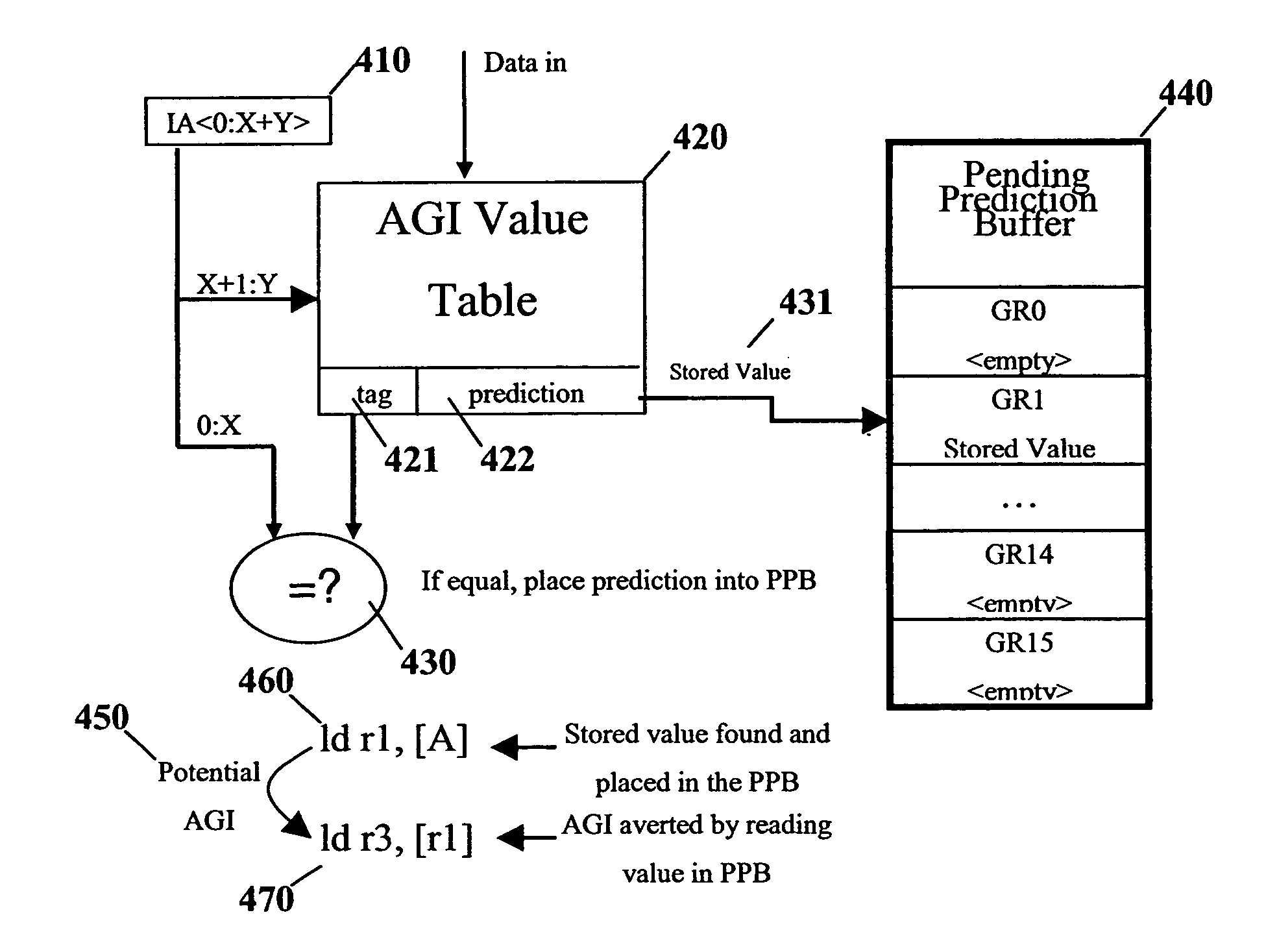

Address generation interlock resolution under runahead execution

Inactive | US7194604B2 | Minimize impact | Reduce impact | Digital computer details | Memory systems | Multi processor | Parallel computing

Disclosed is a method and apparatus providing a microprocessor the ability to reuse data cache content fetched during runahead execution. Said data is stored and later retrieved based upon the instruction address of an instruction which is accessing the data cache. The reuse mechanism allows the reduction of address generation interlocking scenarios with the ability to self-correct should the stored values be incorrect due to subtleties in the architected state of memory in multiprocessor systems.

Owner:IBM CORP

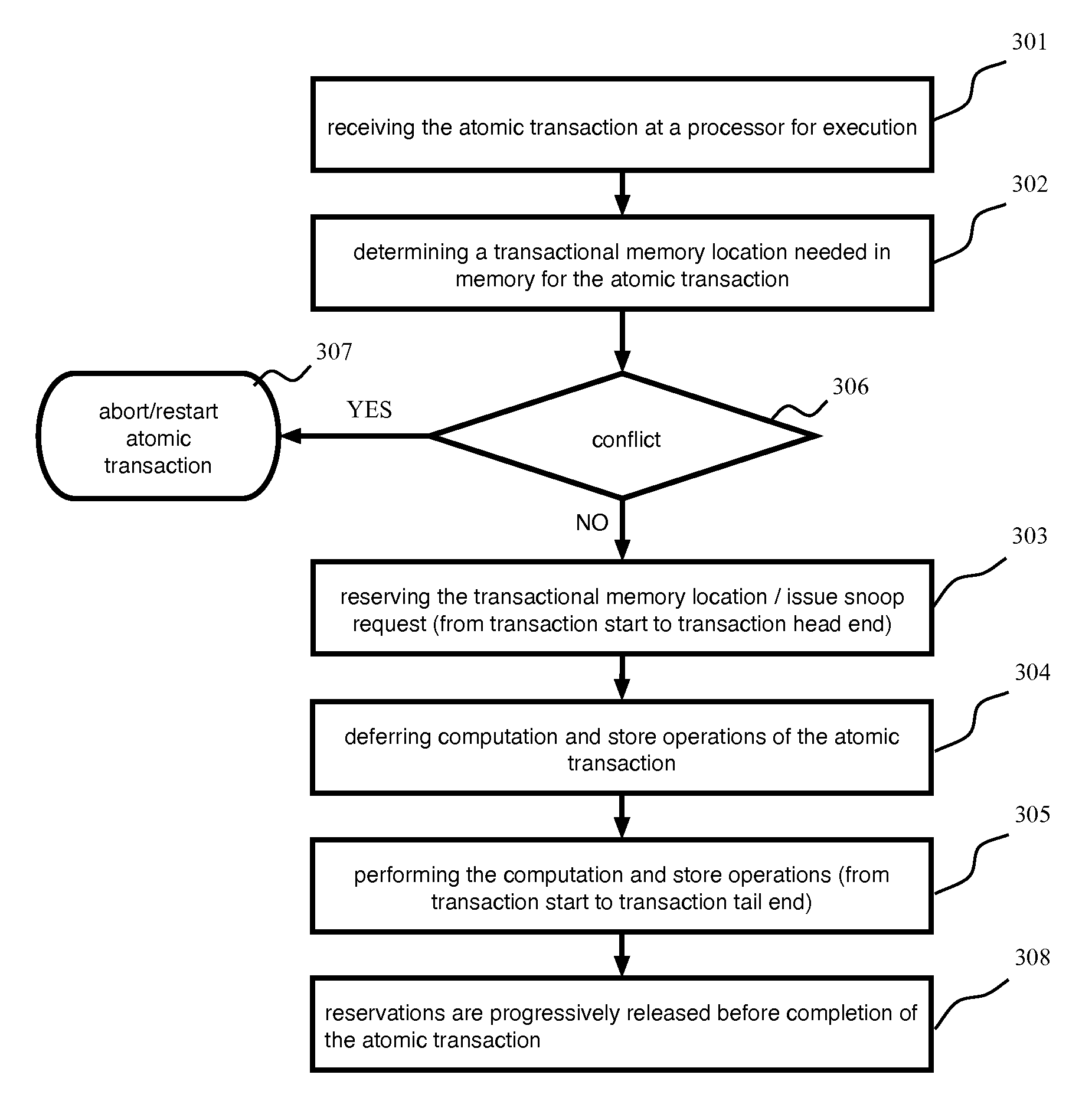

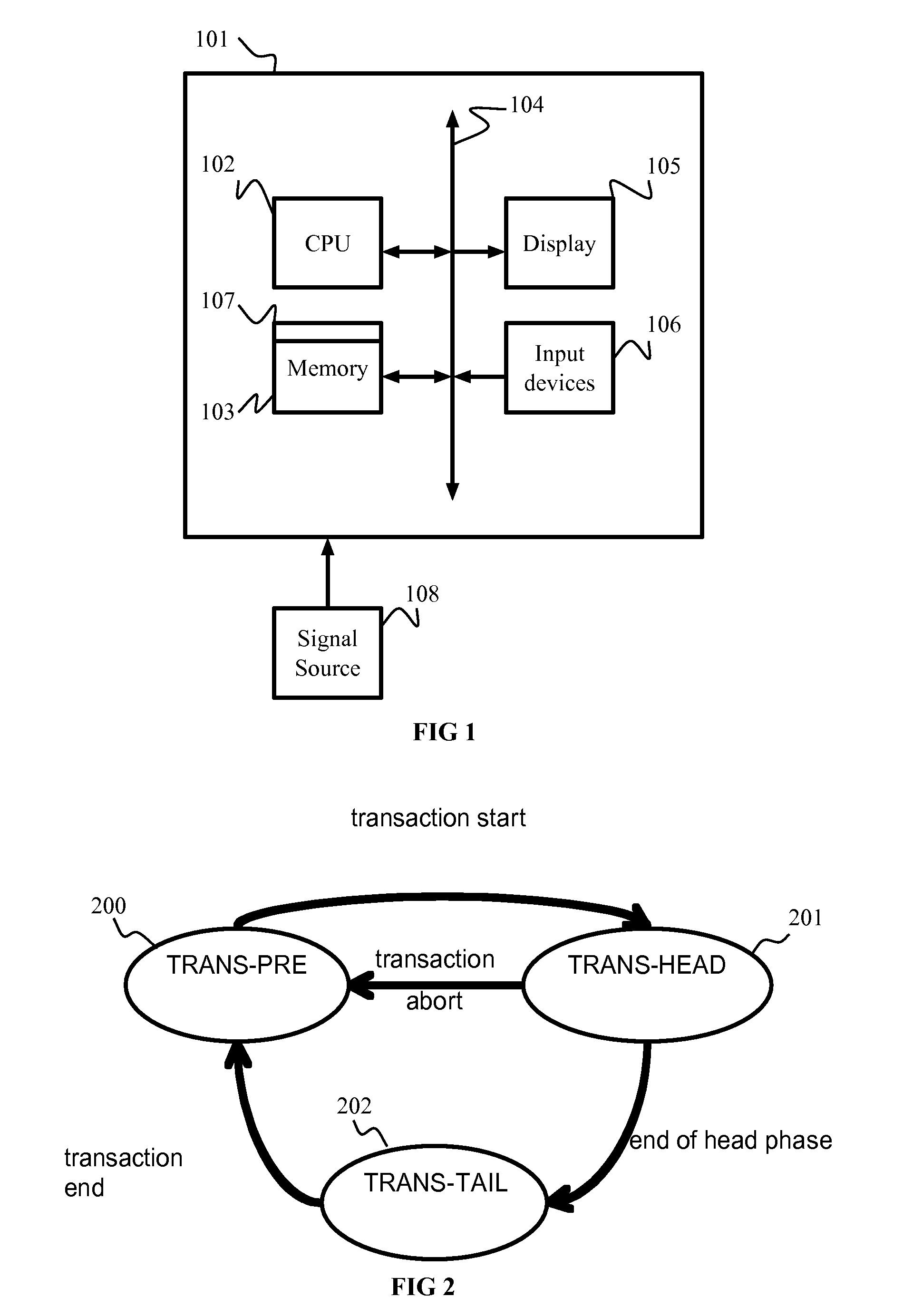

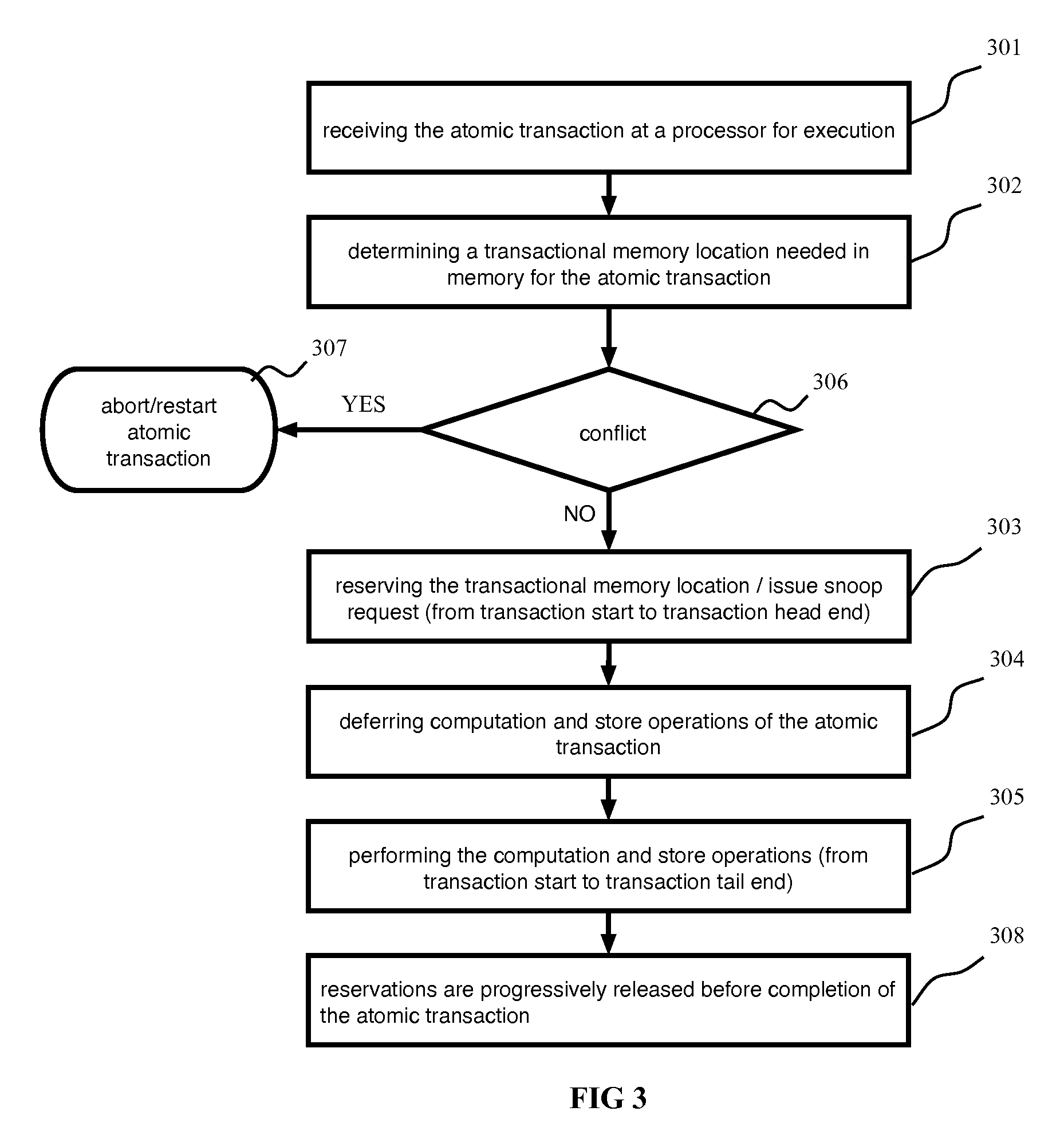

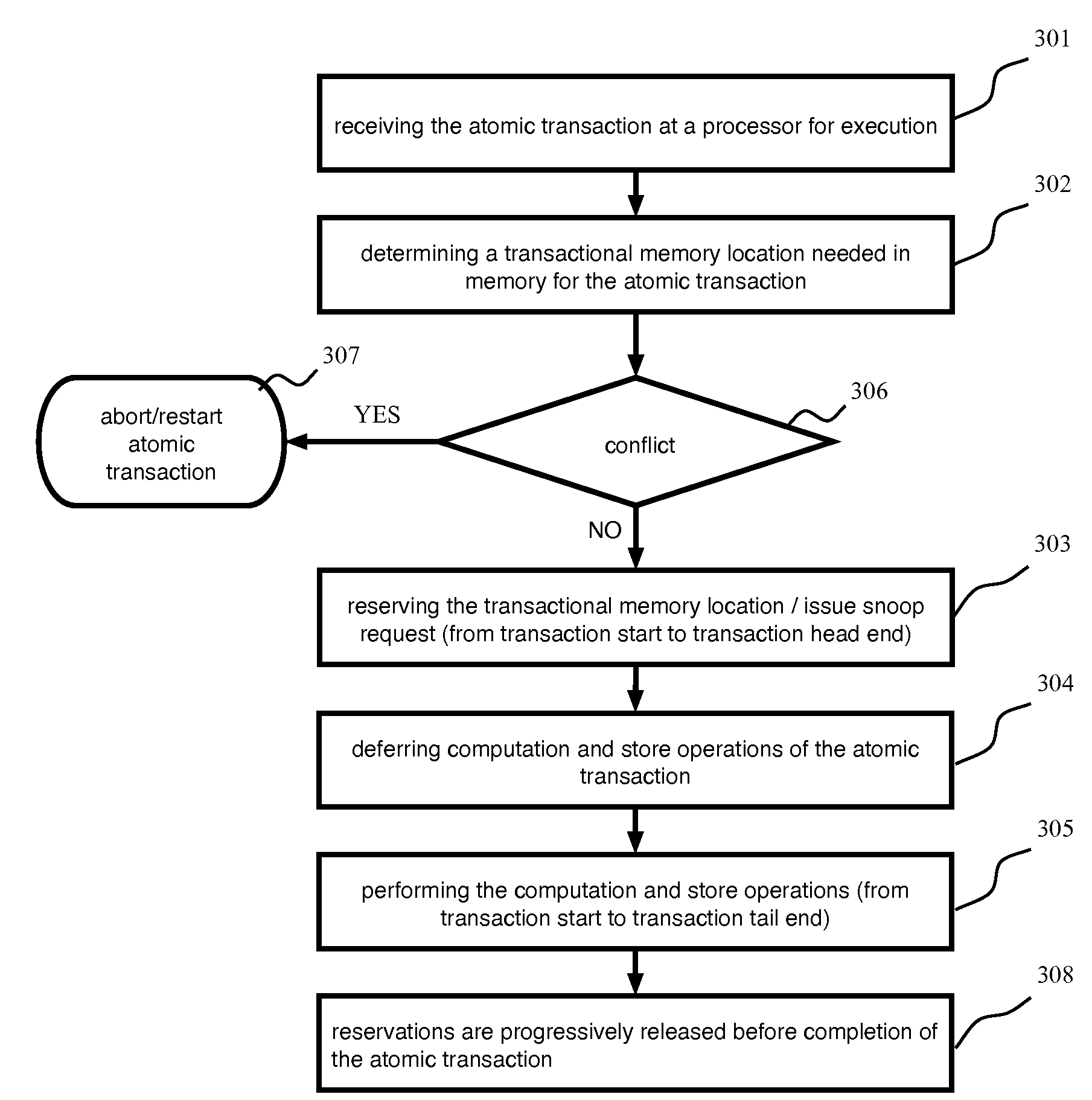

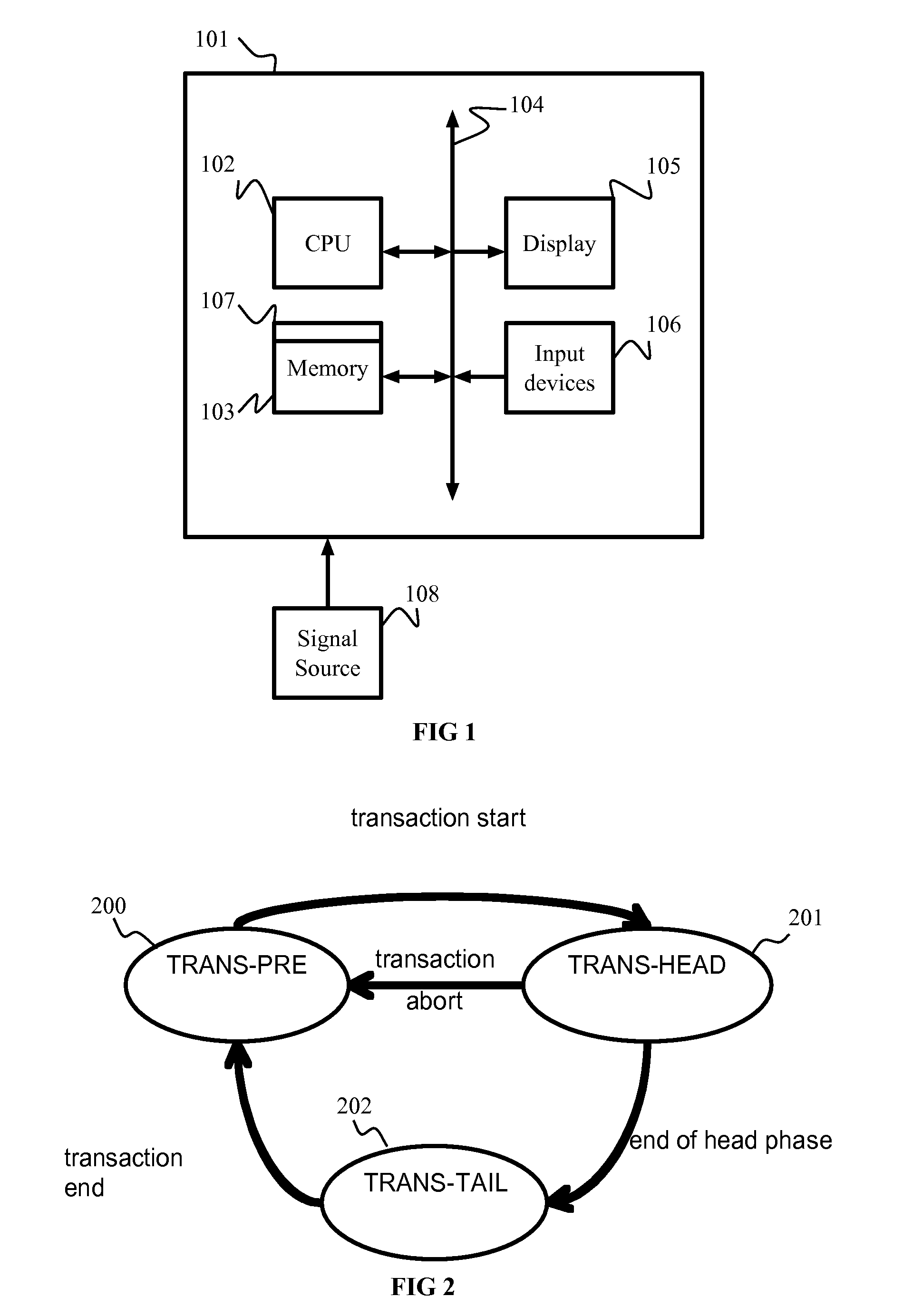

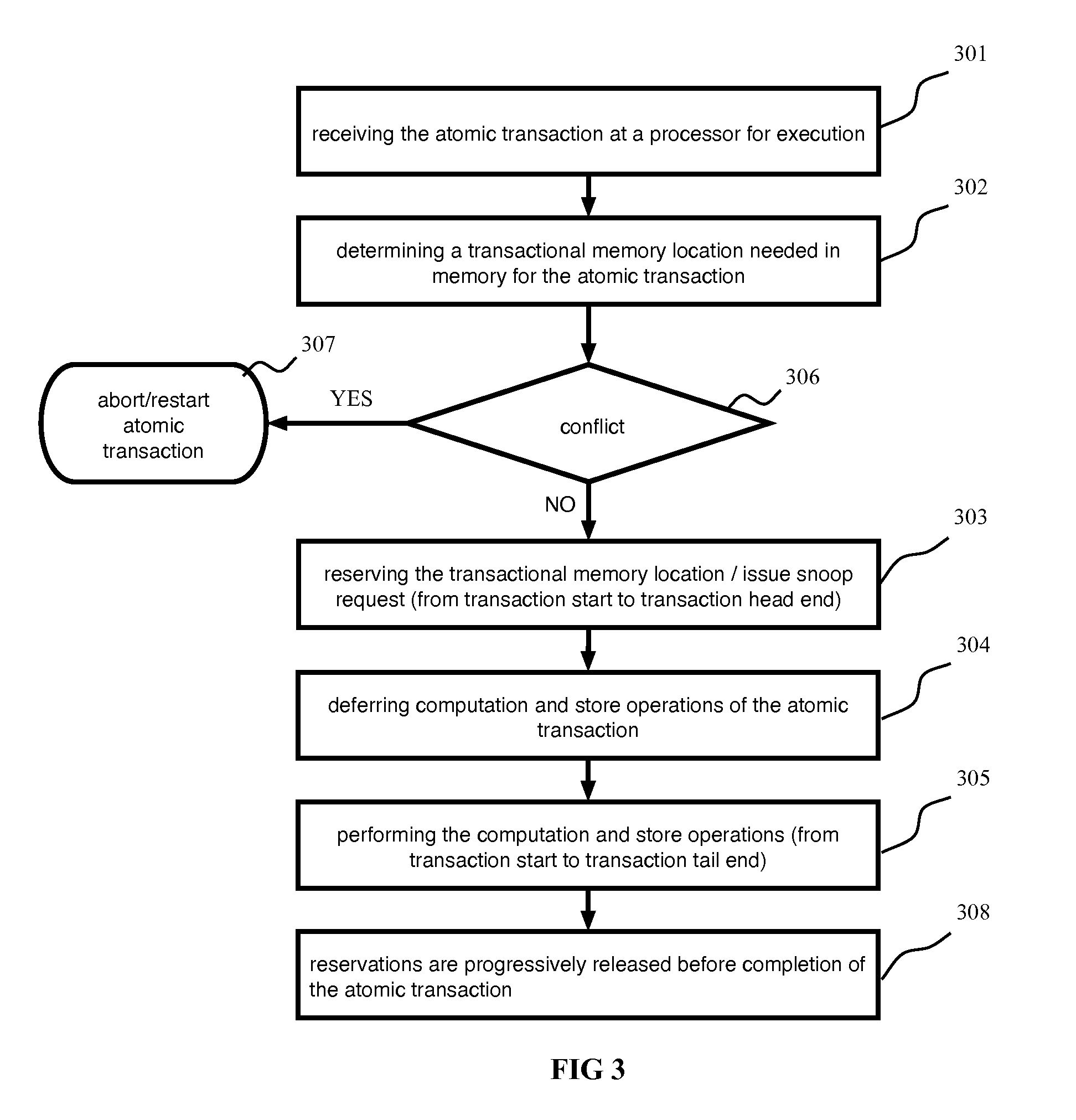

Bufferless transactional memory with runahead execution

Active | US7890725B2 | Unauthorized memory use protection | Program control | Parallel computing | Transactional memory

A method for executing an atomic transaction includes receiving the atomic transaction at a processor for execution, determining a transactional memory location needed in memory for the atomic transaction, reserving the transactional memory location while all computation and store operations of the atomic transaction are deferred, and performing the computation and store operations, wherein the atomic transaction cannot be aborted after the reservation, and further wherein the store operation is directly committed to the memory without being buffered.

Owner:IBM CORP

Address generation interlock resolution under runahead execution

Inactive | US20060095678A1 | Minimize impact | Reduce the impact | Digital computer details | Unauthorized memory use protection | Multi processor | Term memory

Disclosed is a method and apparatus providing a microprocessor the ability to reuse data cache content fetched during runahead execution. Said data is stored and later retrieved based upon the instruction address of an instruction which is accessing the data cache. The reuse mechanism allows the reduction of address generation interlocking scenarios with the ability to self-correct should the stored values be incorrect due to subtleties in the architected state of memory in multiprocessor systems.

Owner:IBM CORP

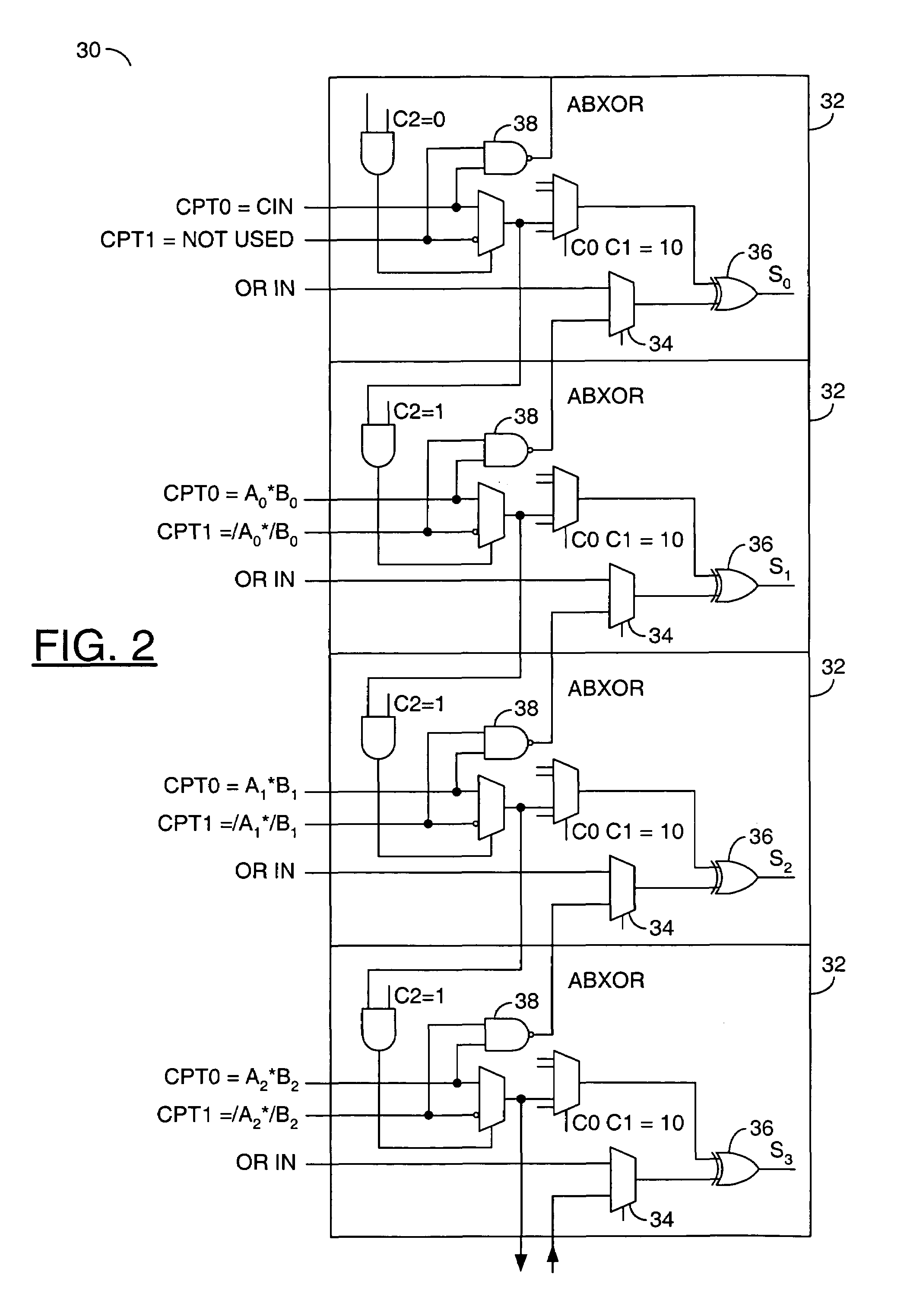

High performance carry chain with reduced macrocell logic and fast carry lookahead

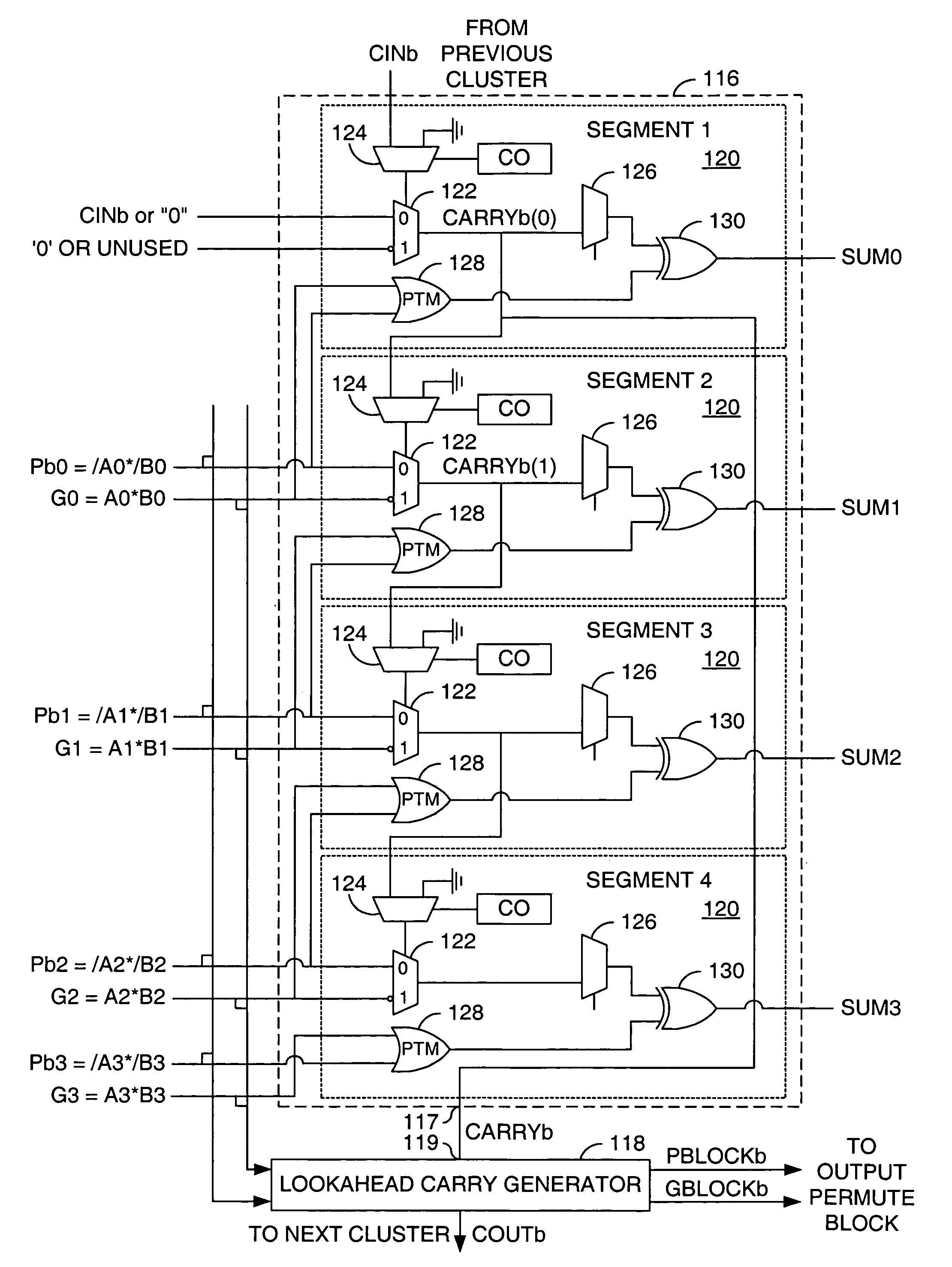

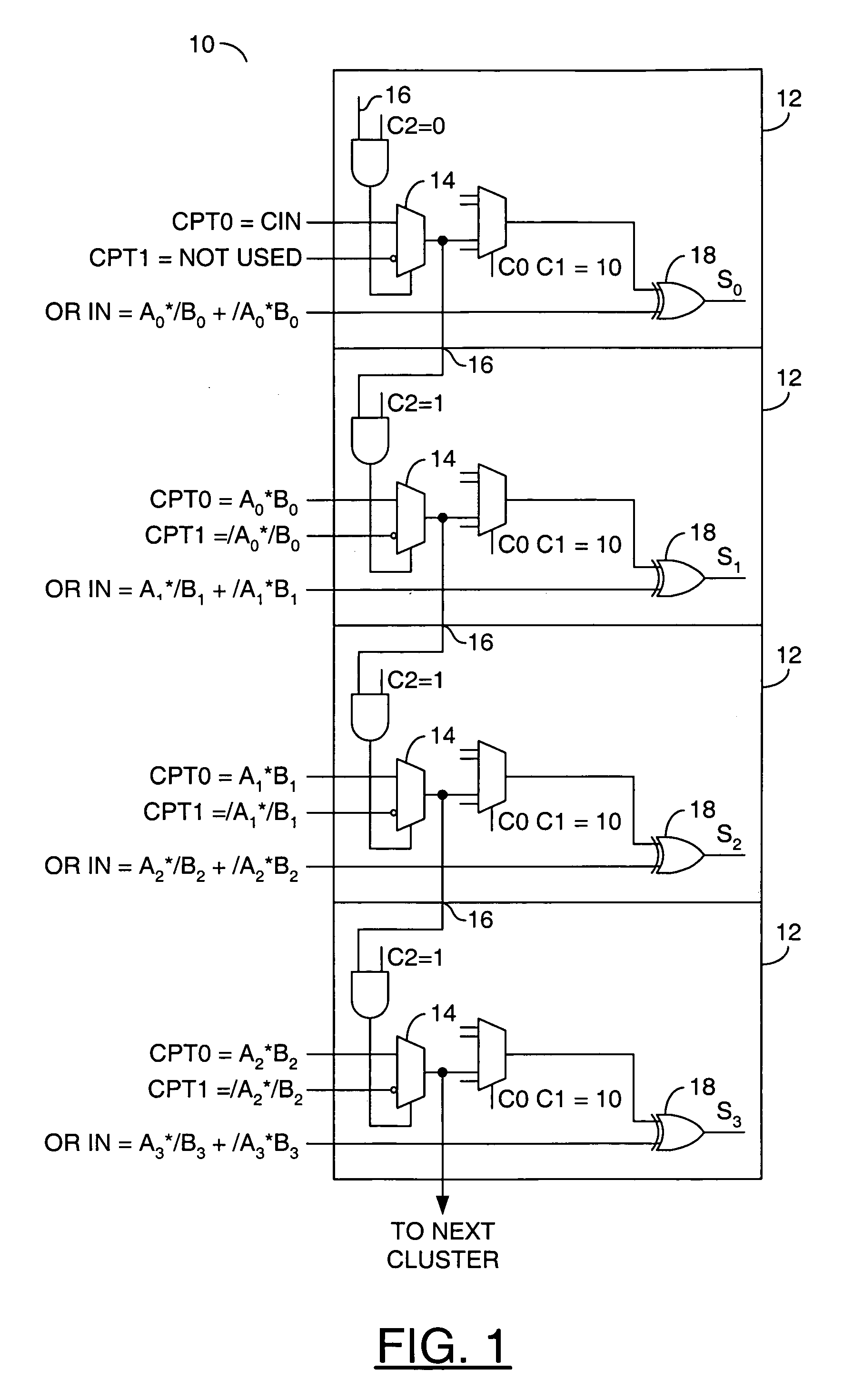

Inactive | US7003545B1 | Improve performance | Reduced macrocell logic | Computation using non-contact making devices | Computer hardware | Macro cell

A method for computing a sum or difference and a carry-out of numbers in product-term based programmable logic comprising the steps of: (A) generating (i) a portion of the sum or difference and (ii) a lookahead carry output in each of a plurality of logic blocks; (B) communicating the lookahead carry output of each of the logic blocks to a carry input of a next logic block; (C) presenting the lookahead carry output of a last logic block as the carry-out.

Owner:CYPRESS SEMICON CORP
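Steps (A) to (C) of the carry-chain abstract above can be modeled in software as follows. This is a generic carry-lookahead sketch, not the patented macrocell logic: the function names, the 4-bit block size, and the restriction to addition (no subtraction) are choices made for the example. Each block computes its part of the sum and a lookahead carry output from generate and propagate signals, and that carry feeds the next block.

```python
def _all(bits):
    """AND of a list of bits; the AND of an empty list is 1."""
    return int(all(bits))

def carry_into(g, p, cin, i):
    """Carry into bit i as a flattened sum of products (no rippling)."""
    terms = [cin & _all(p[:i])]                  # carry-in propagated through bits 0..i-1
    for j in range(i):
        terms.append(g[j] & _all(p[j + 1 : i]))  # generated at bit j, propagated up to i
    return int(any(terms))

def block_add(a_bits, b_bits, cin):
    """(A) One logic block: returns its sum bits and its lookahead carry output."""
    g = [x & y for x, y in zip(a_bits, b_bits)]  # generate signals
    p = [x ^ y for x, y in zip(a_bits, b_bits)]  # propagate signals
    n = len(a_bits)
    sum_bits = [p[i] ^ carry_into(g, p, cin, i) for i in range(n)]
    return sum_bits, carry_into(g, p, cin, n)

def add(a, b, width=8, block=4):
    """Chain blocks: (B) each carry output feeds the next block, (C) the last is carry-out."""
    a_bits = [(a >> k) & 1 for k in range(width)]
    b_bits = [(b >> k) & 1 for k in range(width)]
    carry, total = 0, 0
    for lo in range(0, width, block):
        s, carry = block_add(a_bits[lo : lo + block], b_bits[lo : lo + block], carry)
        for k, bit in enumerate(s):
            total |= bit << (lo + k)
    return total, carry

print(add(200, 100))  # 8-bit sum and carry-out
```

In hardware each `carry_into` expression is a two-level AND/OR term evaluated in parallel; the Python loops only enumerate those product terms.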

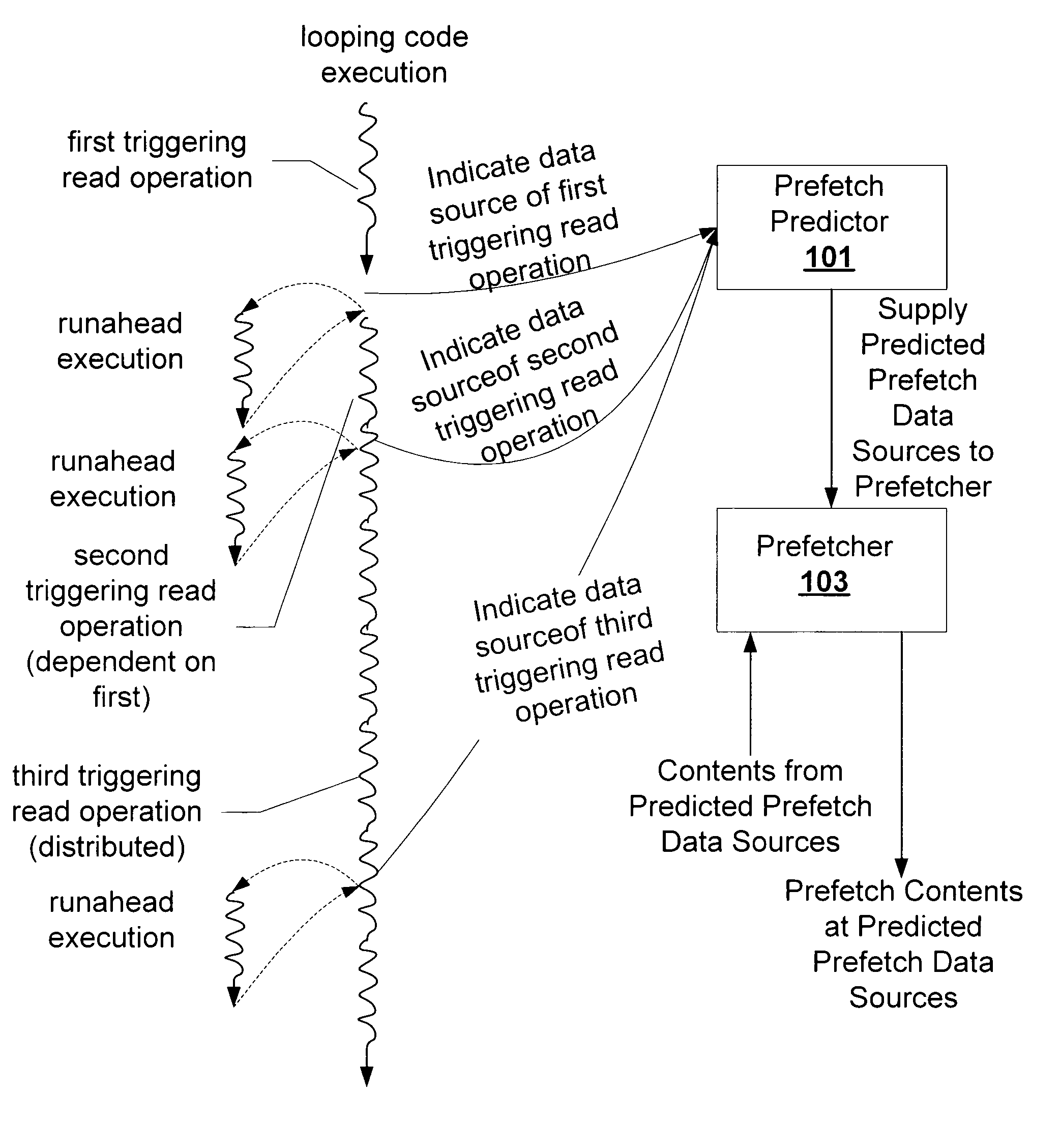

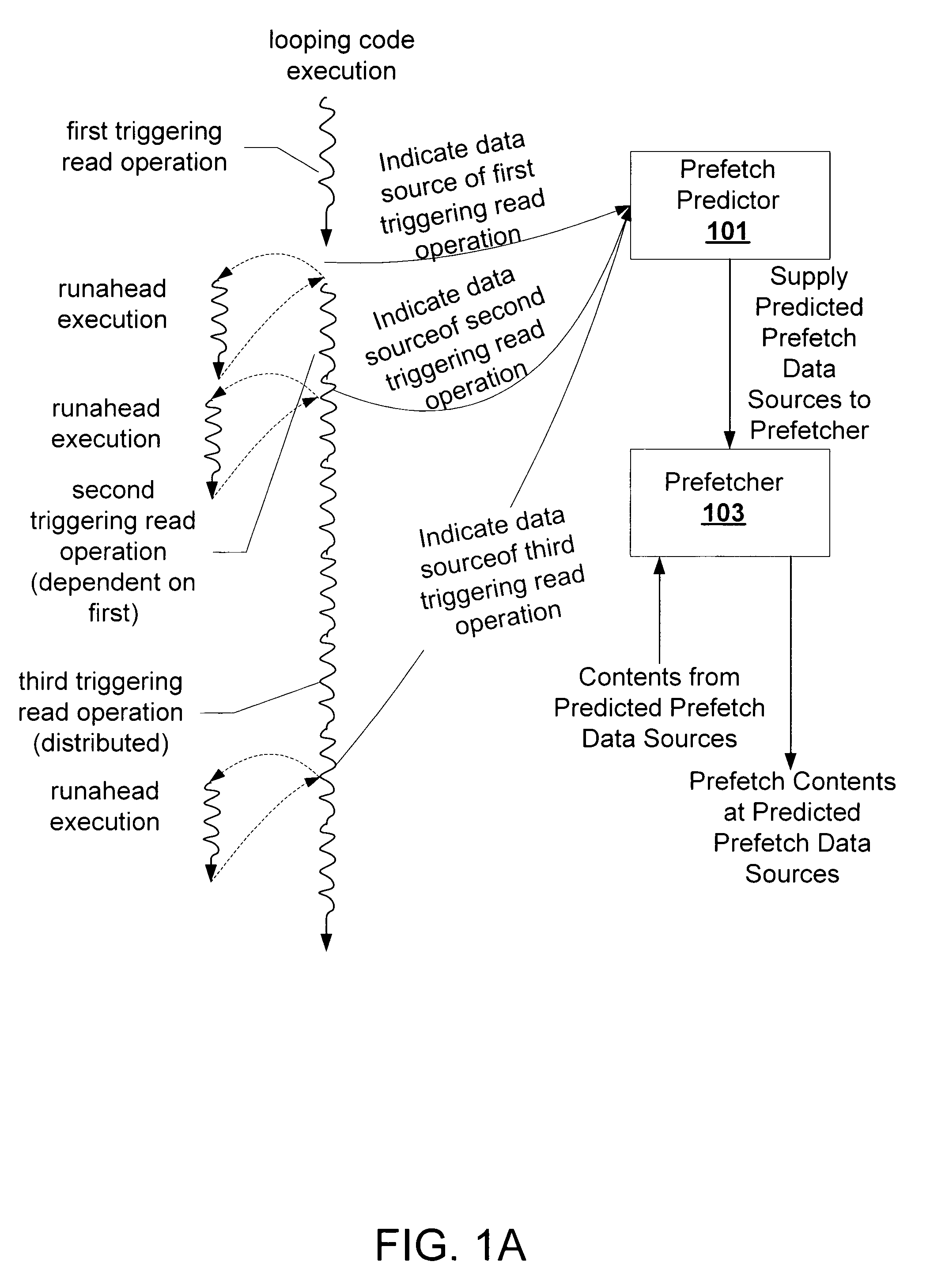

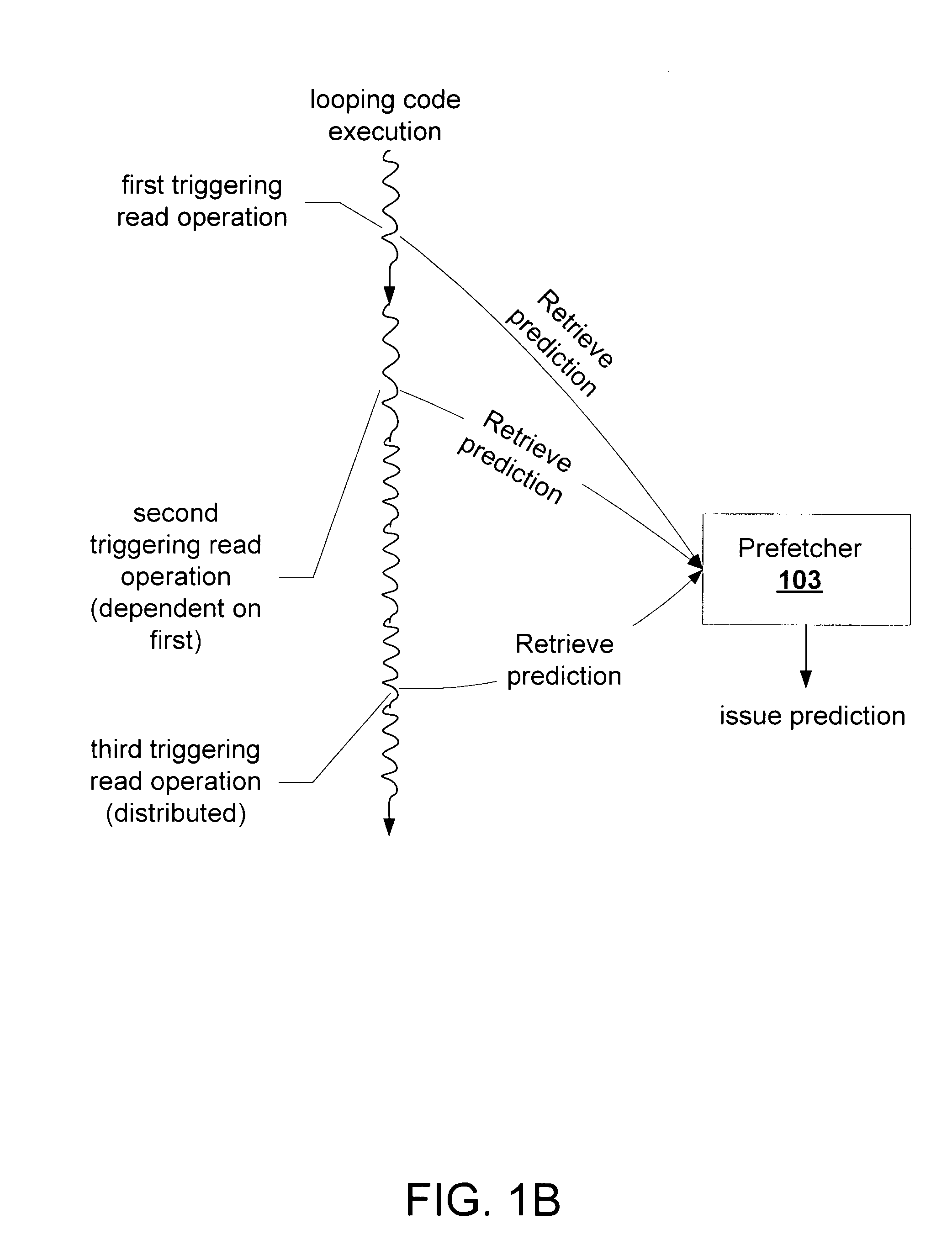

Prefetch prediction

Active | US7434004B1 | Memory architecture accessing/allocation | Digital computer details | Data source | Operating system

Predicting prefetch data sources for runahead execution triggering read operations eliminates the latency penalties of missing read operations that typically are not addressed by runahead execution mechanisms. Read operations that most likely trigger runahead execution are identified. The code unit that includes those triggering read operations is modified so that the code unit branches to a prefetch predictor. The prefetch predictor observes sequence patterns of data sources of triggering read operations and develops prefetch predictions based on the observed data source sequence patterns. After a prefetch prediction gains reliability, the prefetch predictor supplies a predicted data source to a prefetcher coincident with triggering of runahead execution.

Owner:ORACLE INT CORP

Selective poisoning of data during runahead

Inactive | CN103793205A | Error detection/correction | Concurrent instruction execution | Operating system | Poison ingestion

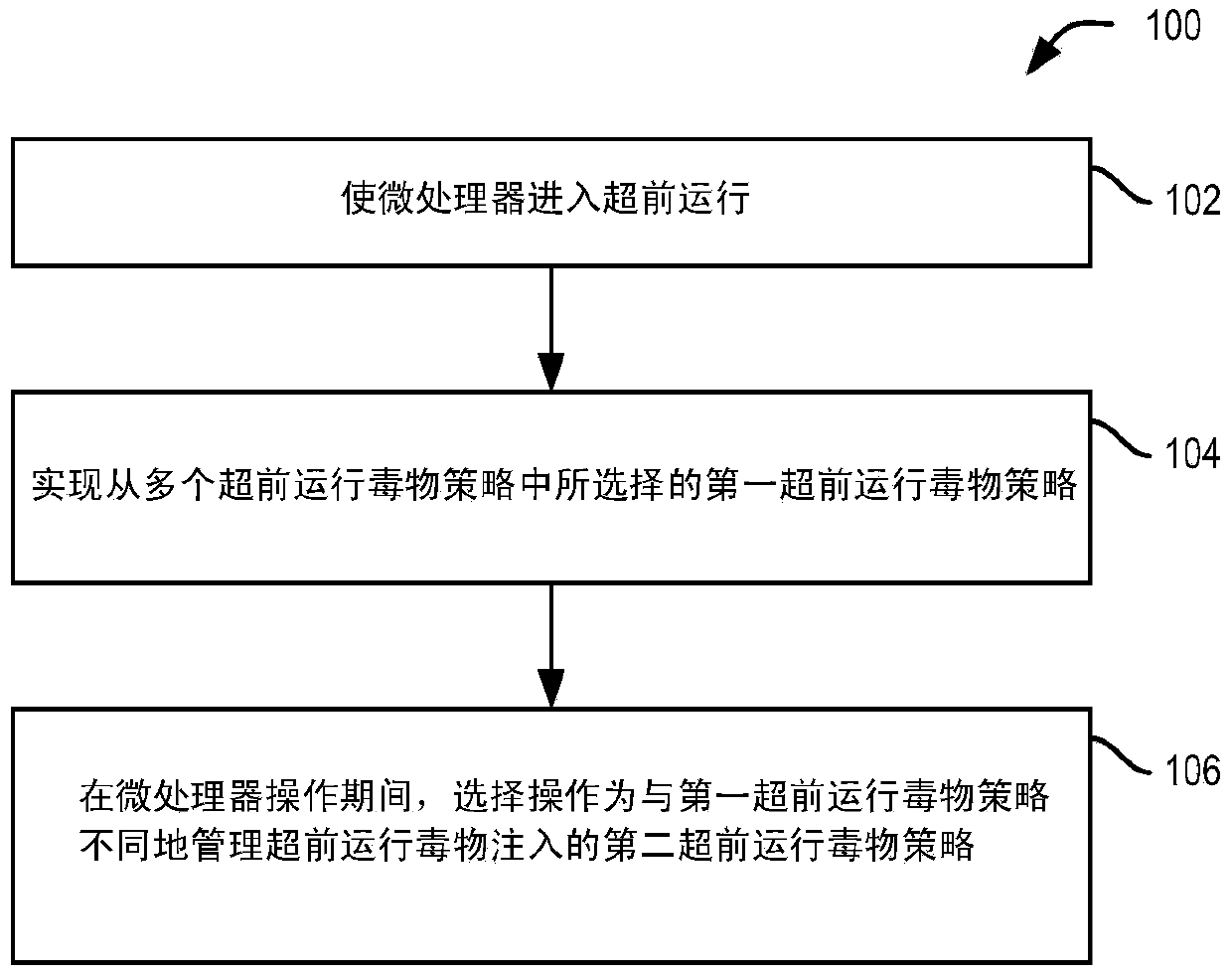

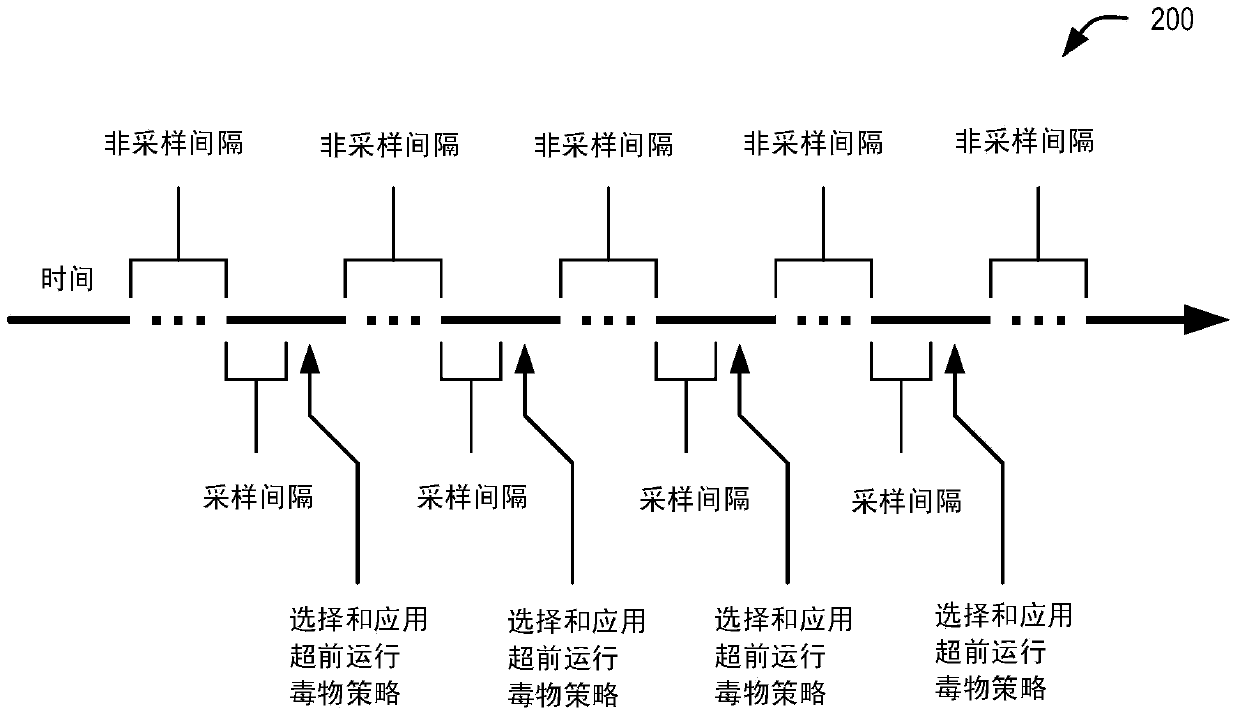

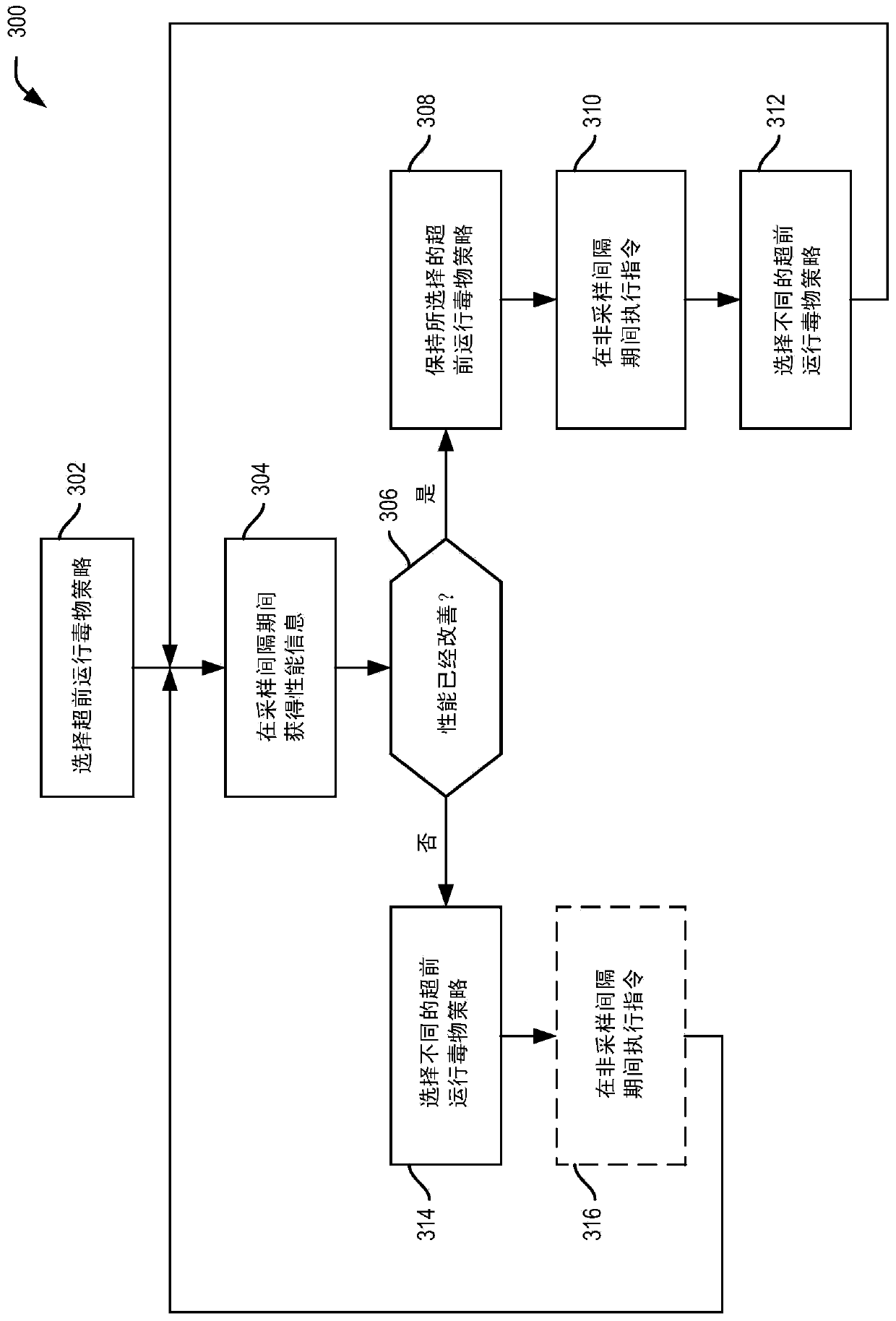

Embodiments related to selecting a runahead poison policy from a plurality of runahead poison policies during microprocessor operation are provided. An example method includes causing the microprocessor to enter runahead upon detection of a runahead event and implementing a first runahead poison policy selected from a plurality of runahead poison policies operative to manage runahead poison injection during runahead. The example method also includes, during microprocessor operation, selecting a second runahead poison policy operative to manage runahead poison injection differently from the first runahead poison policy.

Owner:NVIDIA CORP

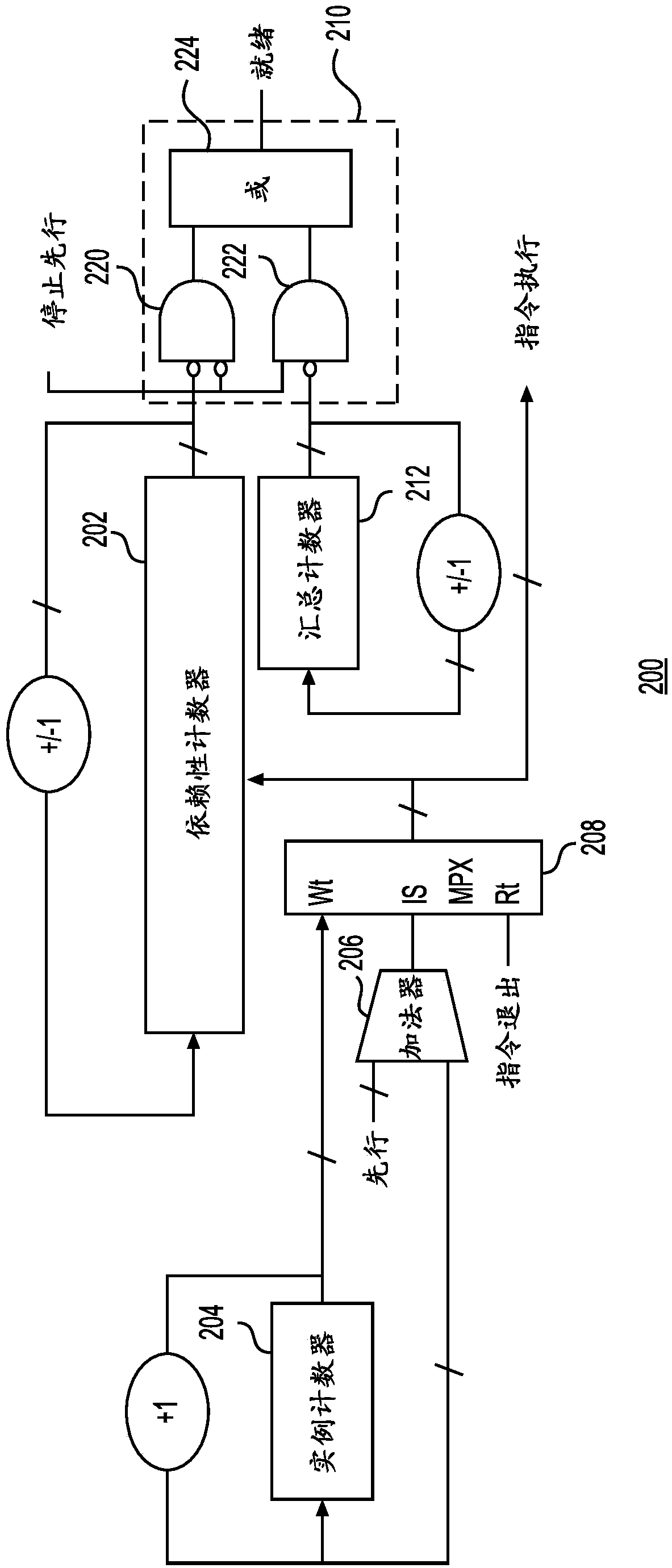

Reducing hardware costs for supporting miss lookahead

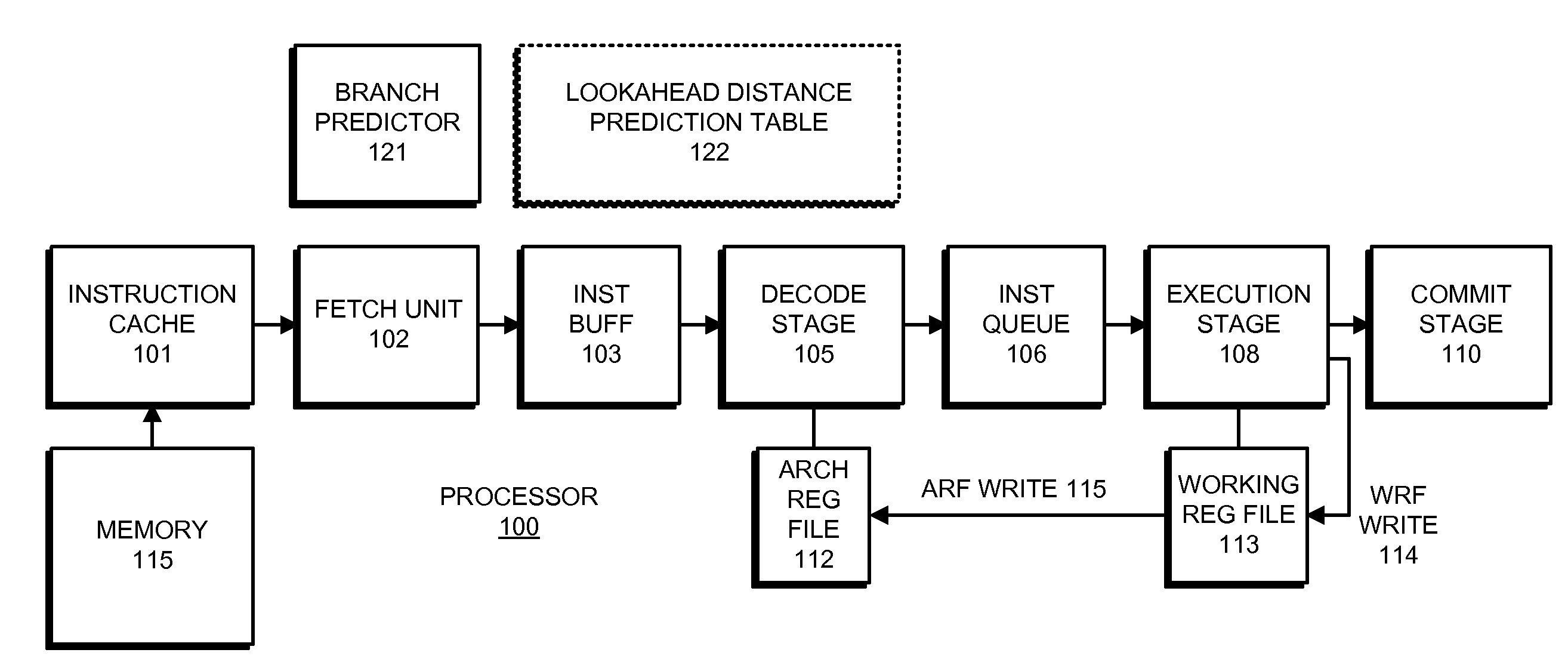

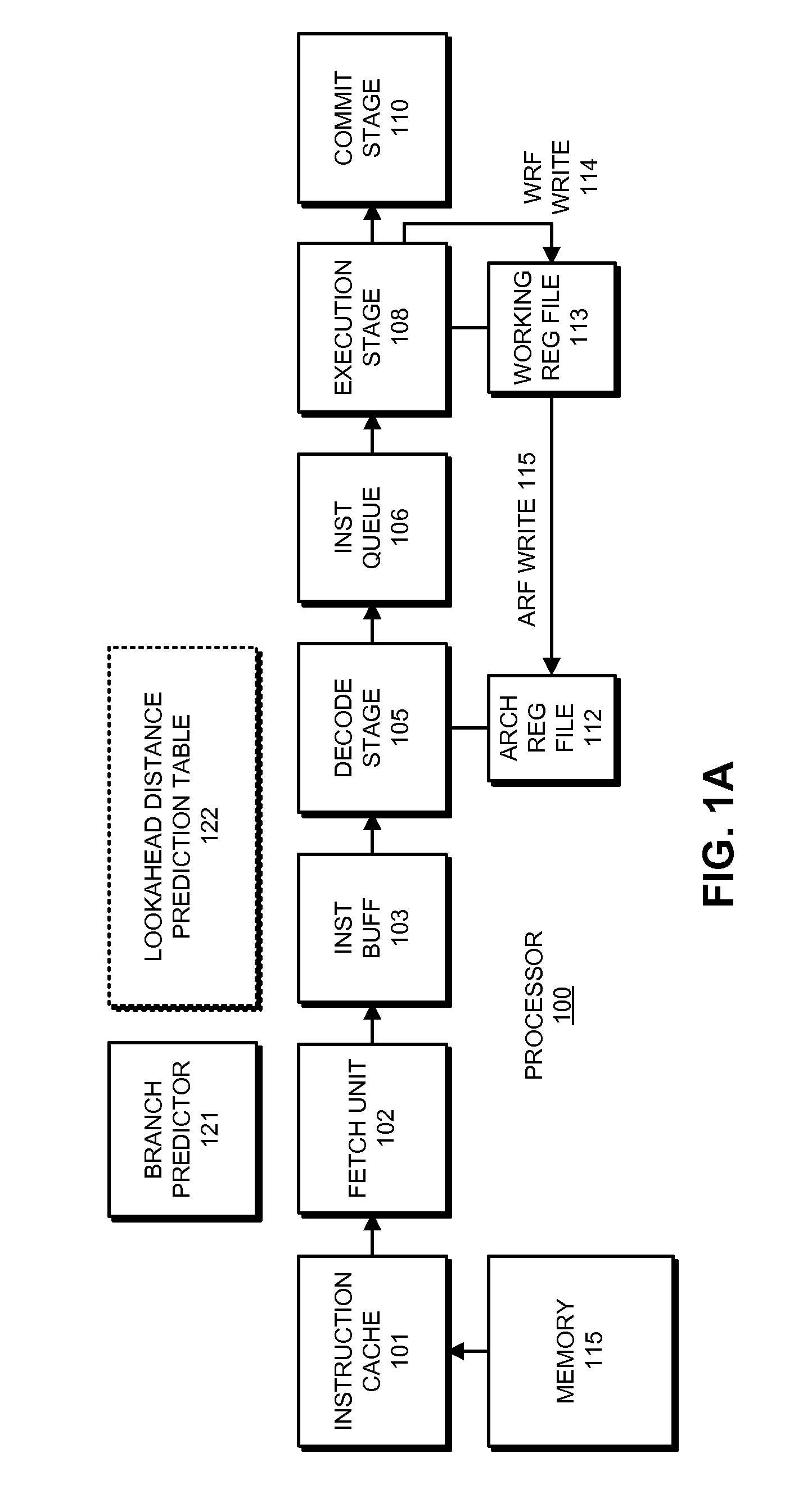

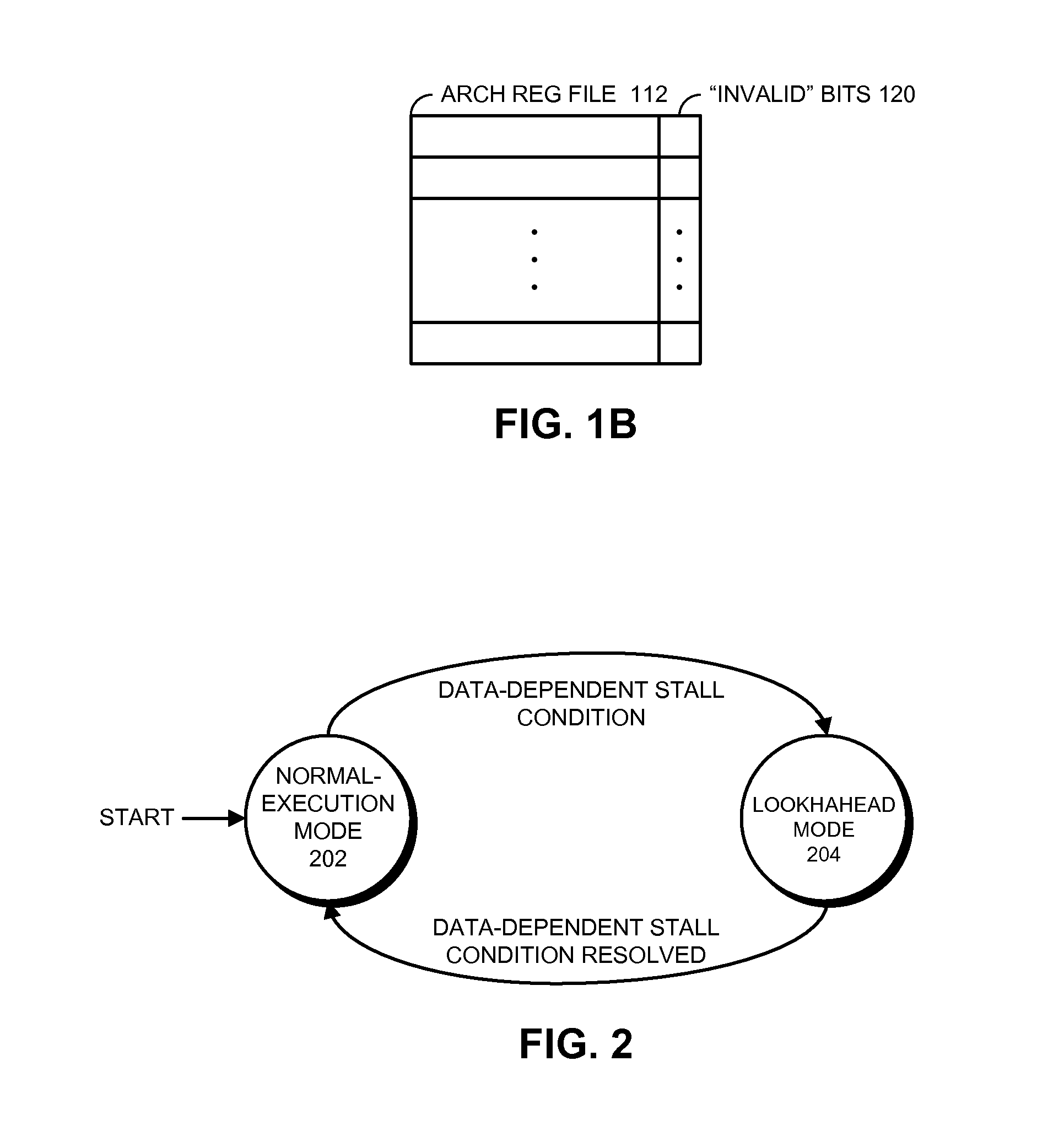

Active | US20130124828A1 | Eliminate need | Digital computer details | Concurrent instruction execution | Program instruction | Data dependency

The disclosed embodiments relate to a system that executes program instructions on a processor. During a normal-execution mode, the system issues instructions for execution in program order. Upon encountering an unresolved data dependency during execution of an instruction, the system speculatively executes subsequent instructions in a lookahead mode to prefetch future loads. When an instruction retires during the lookahead mode, a working register which serves as a destination register for the instruction is not copied to a corresponding architectural register. Instead the architectural register is marked as invalid. Note that by not updating architectural registers during lookahead mode, the system eliminates the need to checkpoint the architectural registers prior to entering lookahead mode.

Owner:ORACLE INT CORP

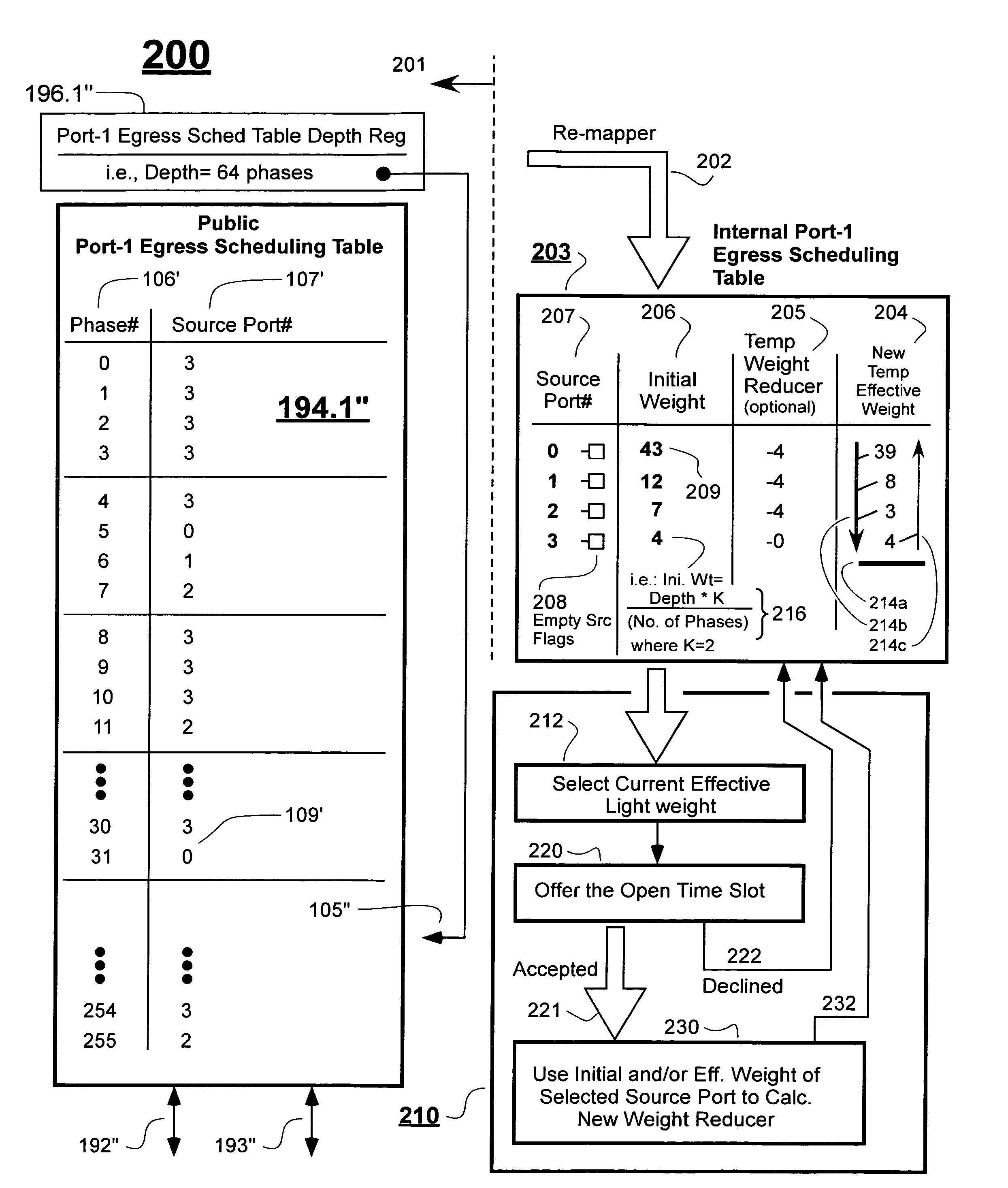

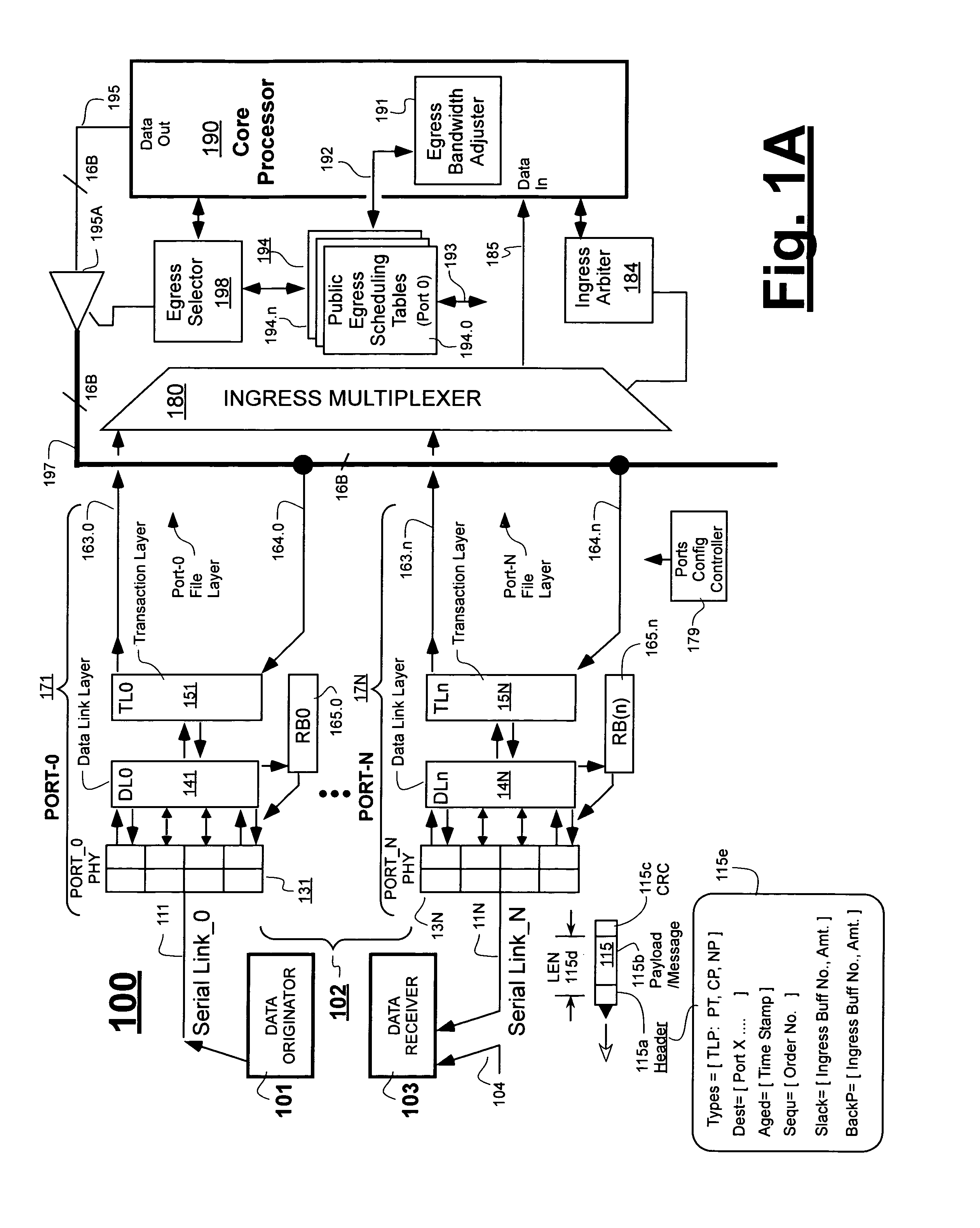

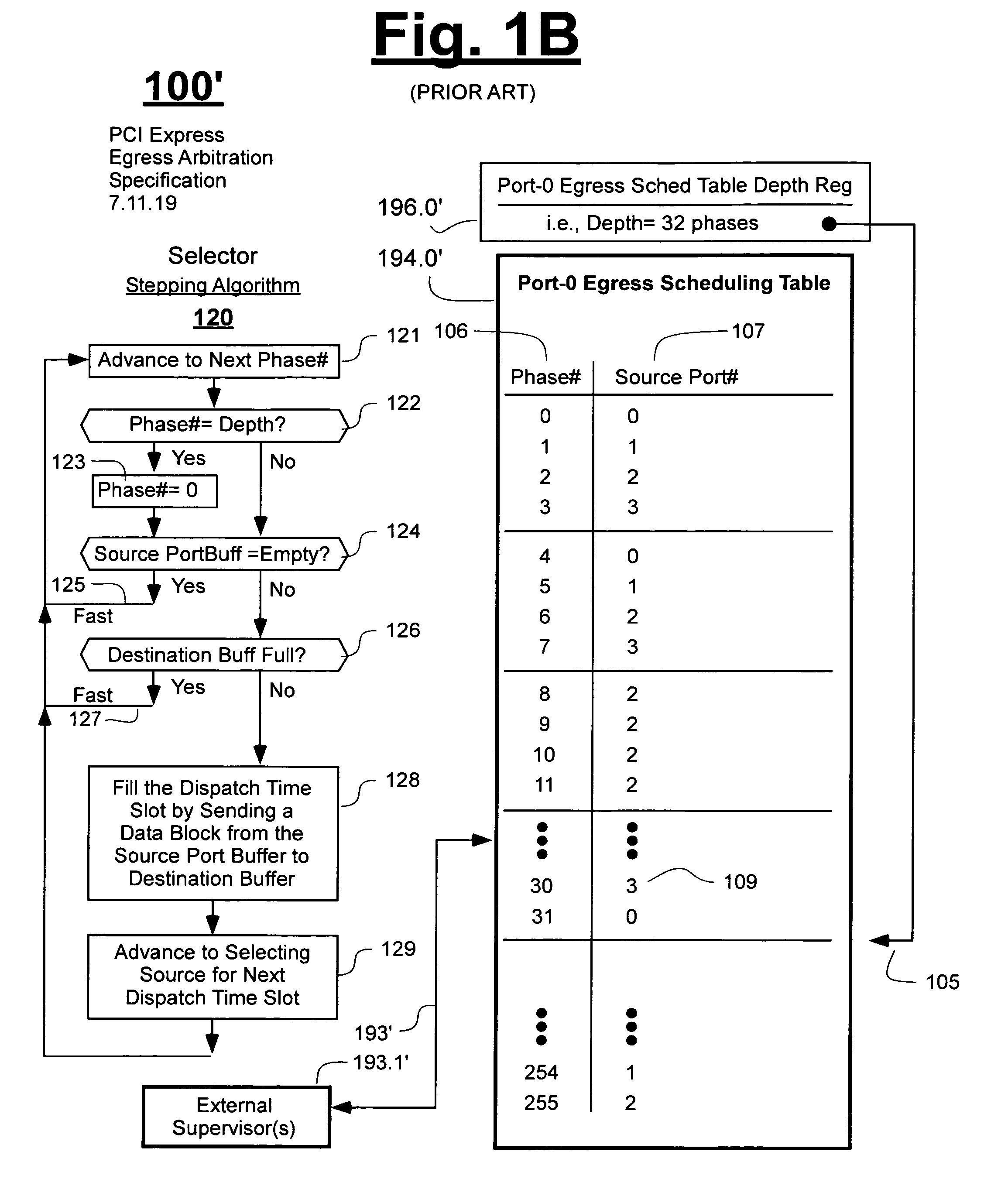

Method of improving over protocol-required scheduling tables while maintaining same

Active | US8462628B2 | Different data structure | Efficient and cost-effective and flexible | Error prevention | Transmission systems | Data source | Parallel processing

Owner:MICROSEMI STORAGE SOLUTIONS US INC

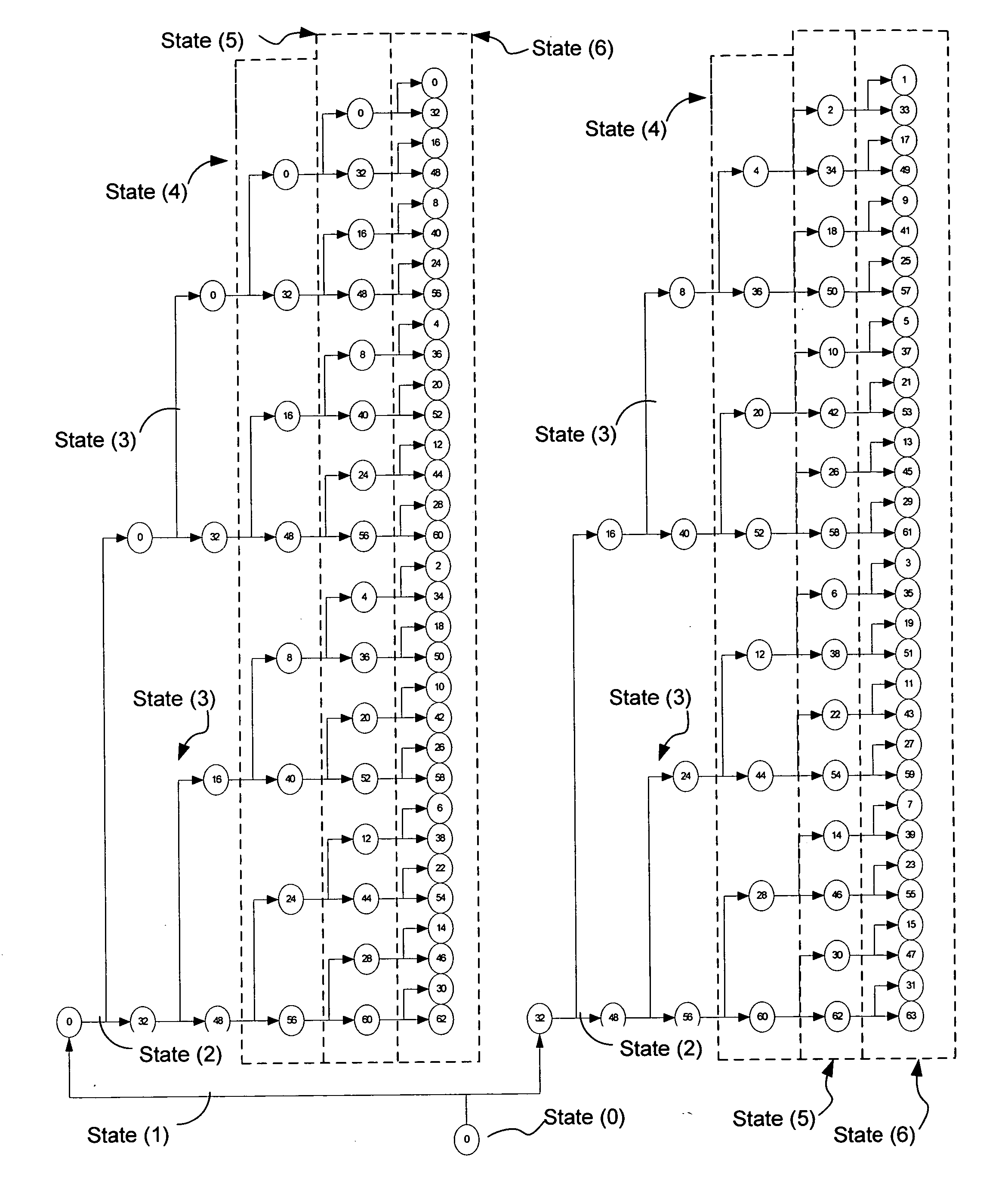

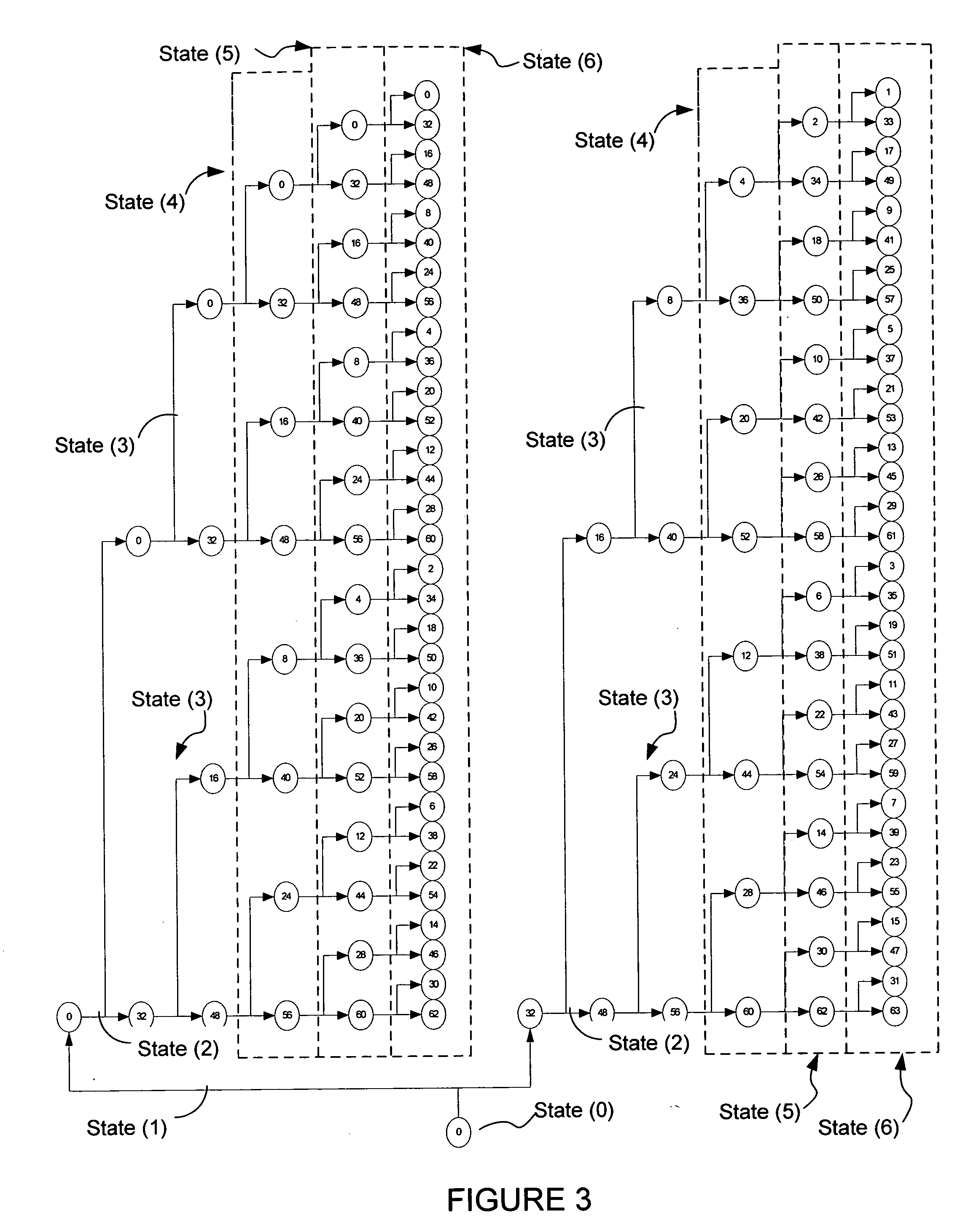

Viterbi decoder with survivor bits stored to support look-ahead addressing

Active | US20050182999A1 | Improve throughput | Rule out the possibility | Error correction/detection using convolutional codes | Other decoding techniques | Viterbi decoder | Data integrity

In accordance with an embodiment of the present invention, a Viterbi decoder is described that operates on convolutional error correcting codes. The decoder allows for a pipelined architecture and a unique partitioning of survivor memory to maintain data integrity. Throughput rate is improved and stalling minimized by accessing memory words using a look-ahead function to fill the pipeline.

Owner:NVIDIA CORP

Bufferless Transactional Memory with Runahead Execution

Active | US20090019247A1 | Unauthorized memory use protection | Program control | Parallel computing | Transactional memory

A method for executing an atomic transaction includes receiving the atomic transaction at a processor for execution, determining a transactional memory location needed in memory for the atomic transaction, reserving the transactional memory location while all computation and store operations of the atomic transaction are deferred, and performing the computation and store operations, wherein the atomic transaction cannot be aborted after the reservation, and further wherein the store operation is directly committed to the memory without being buffered.

Owner:IBM CORP

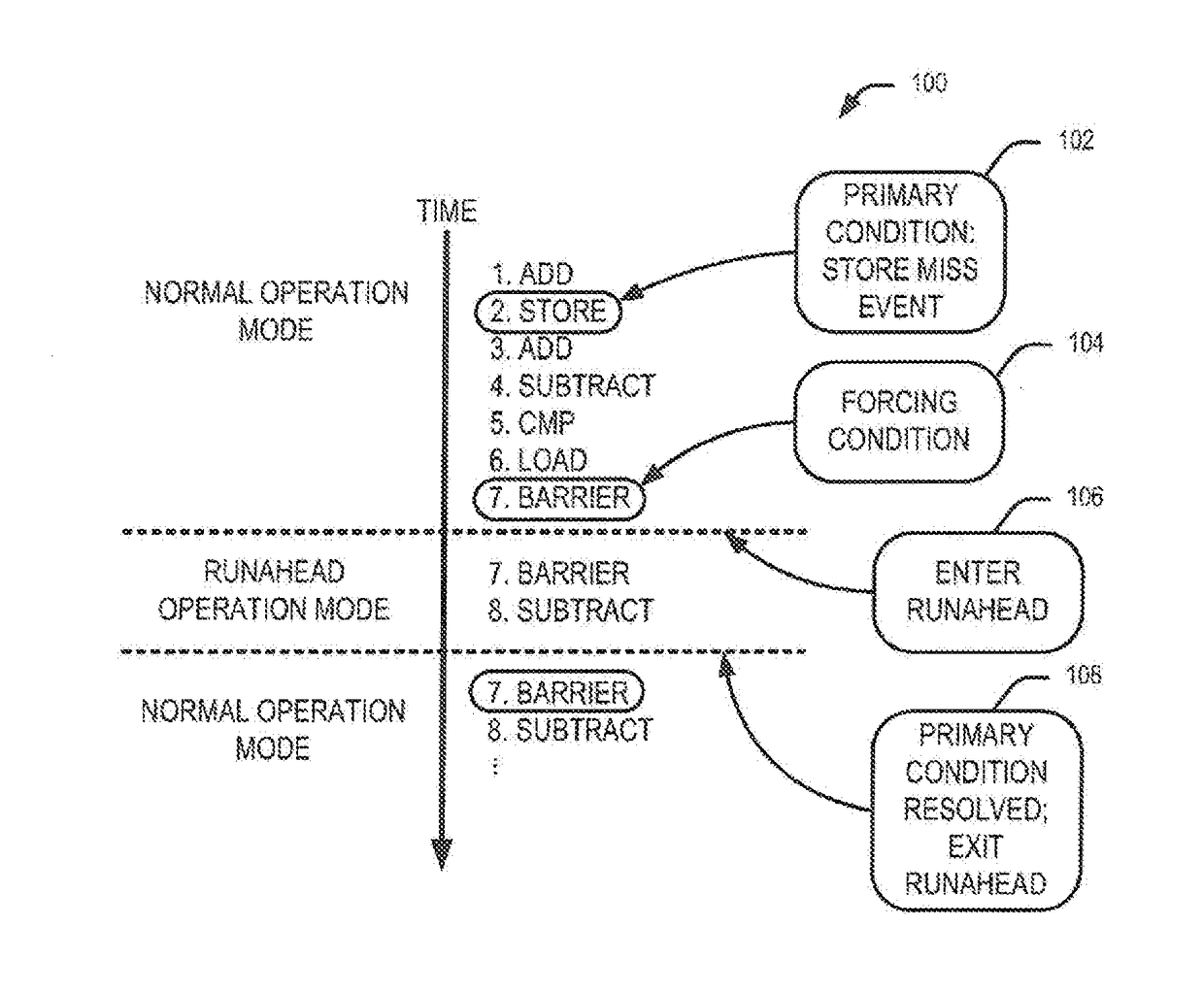

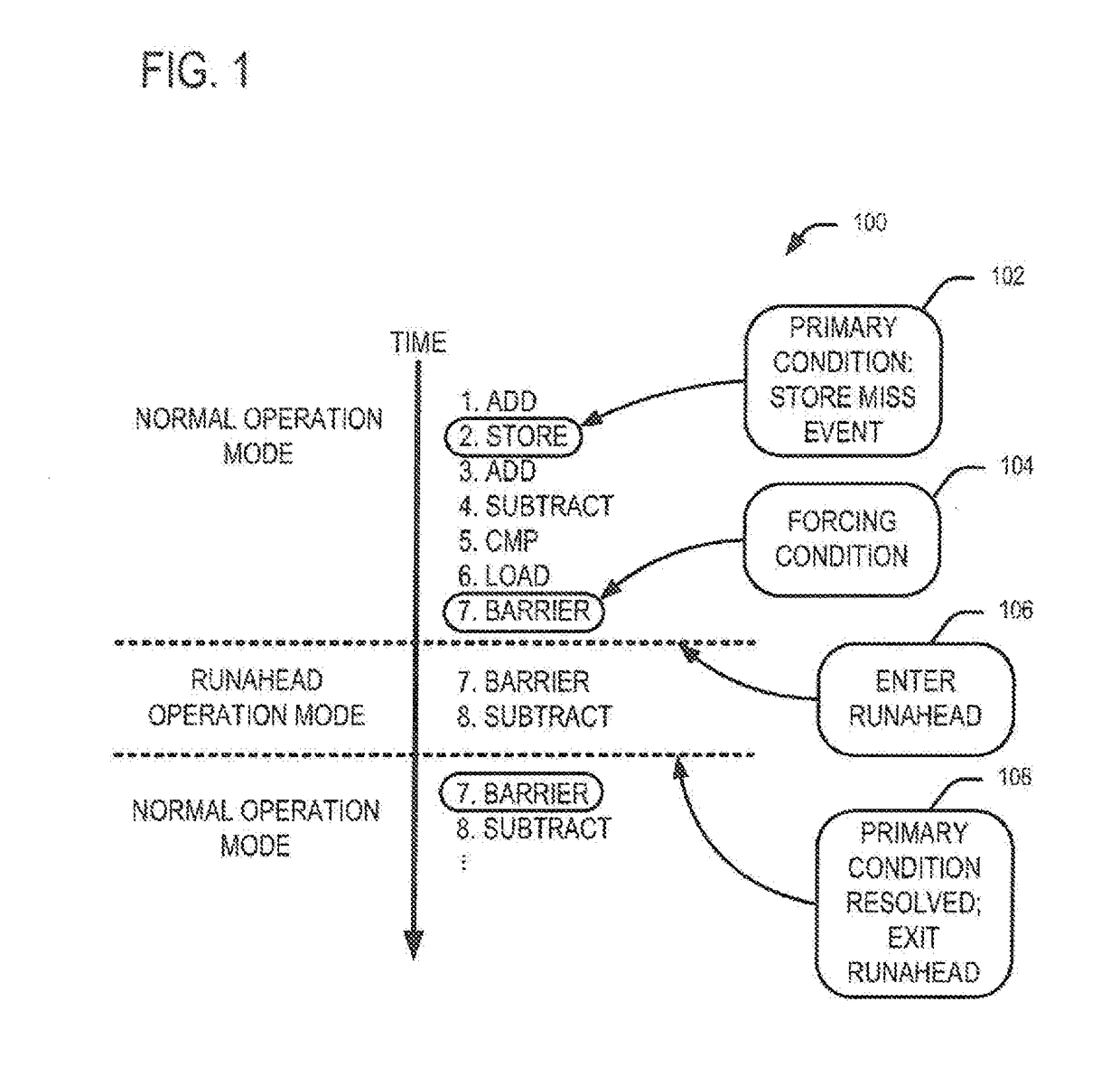

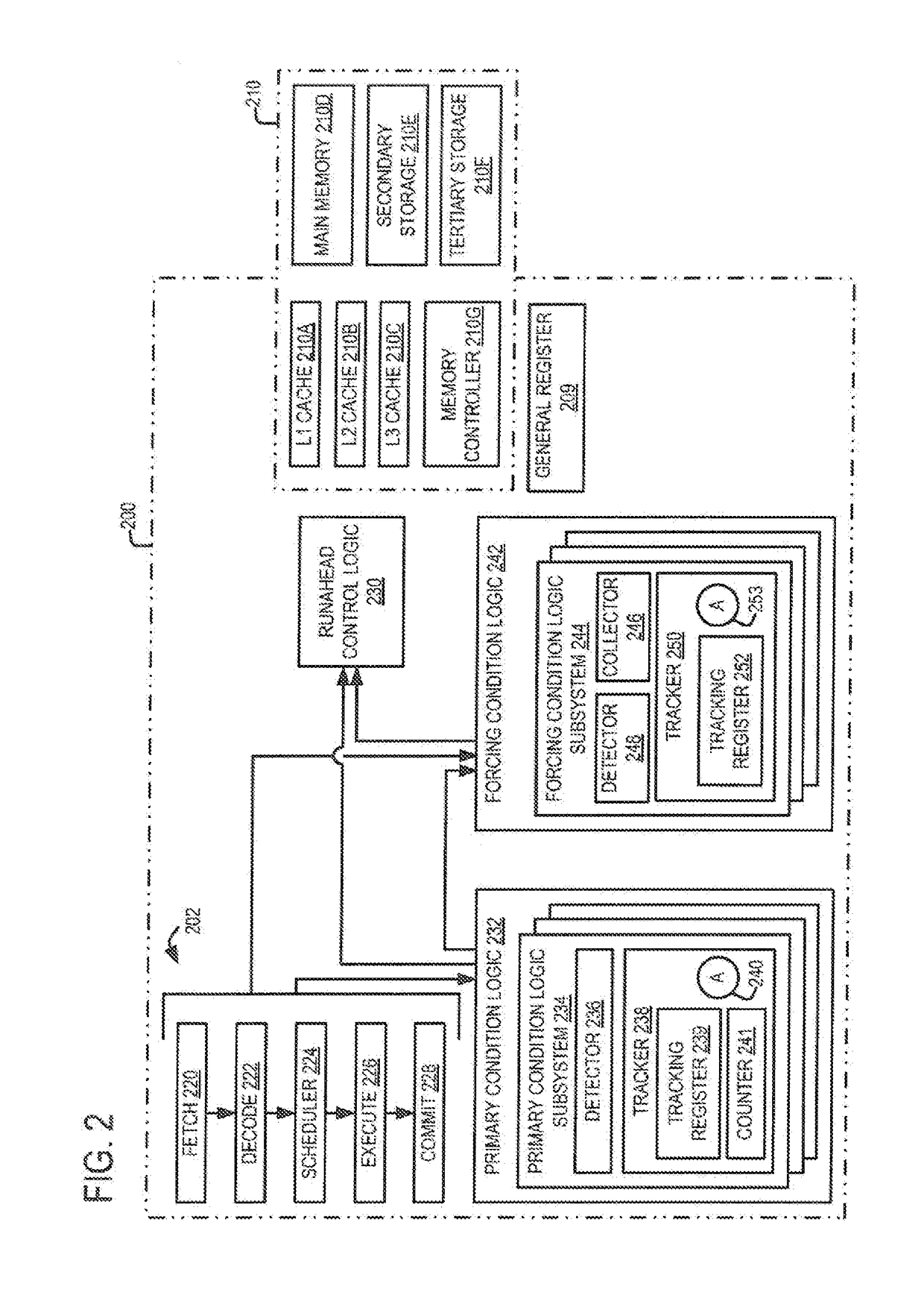

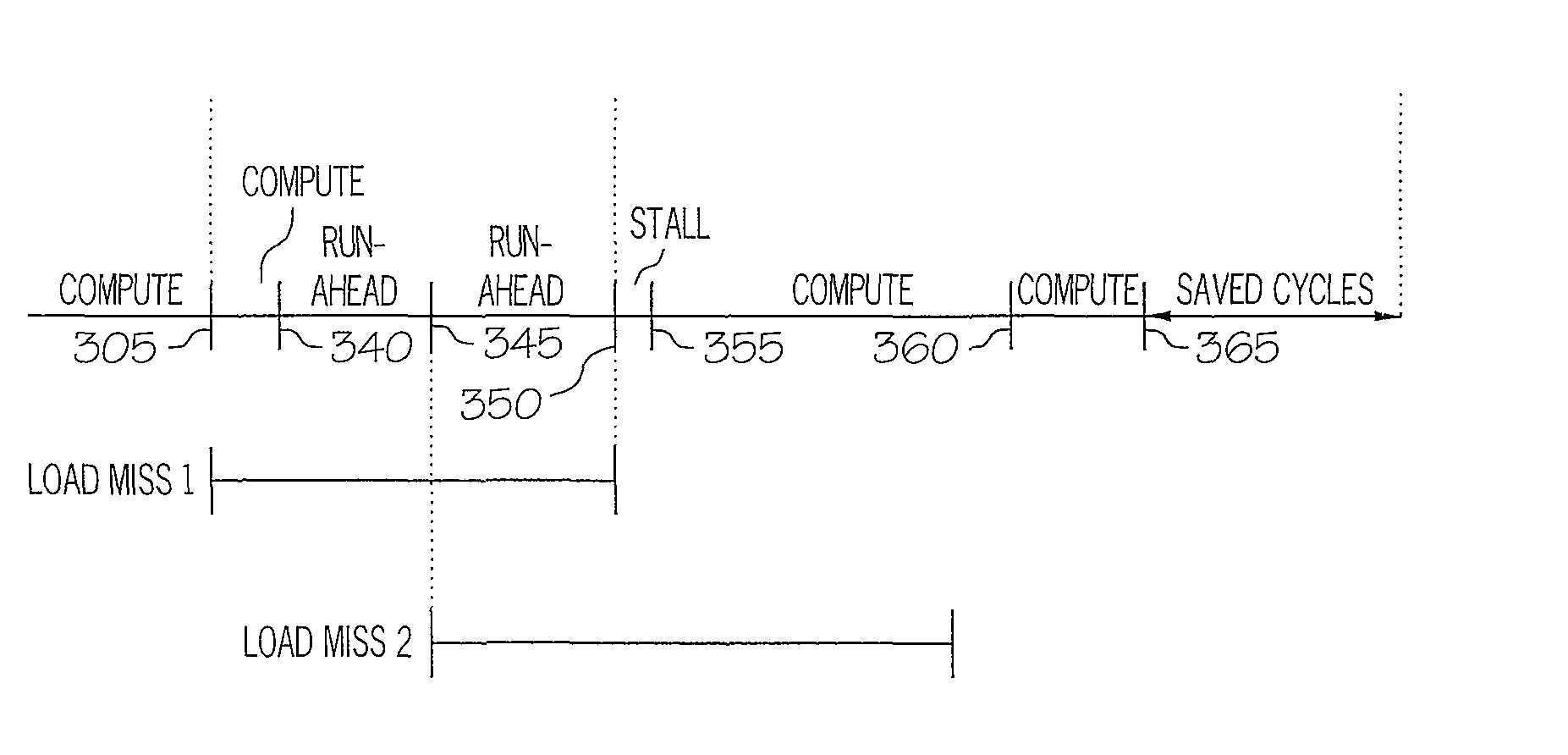

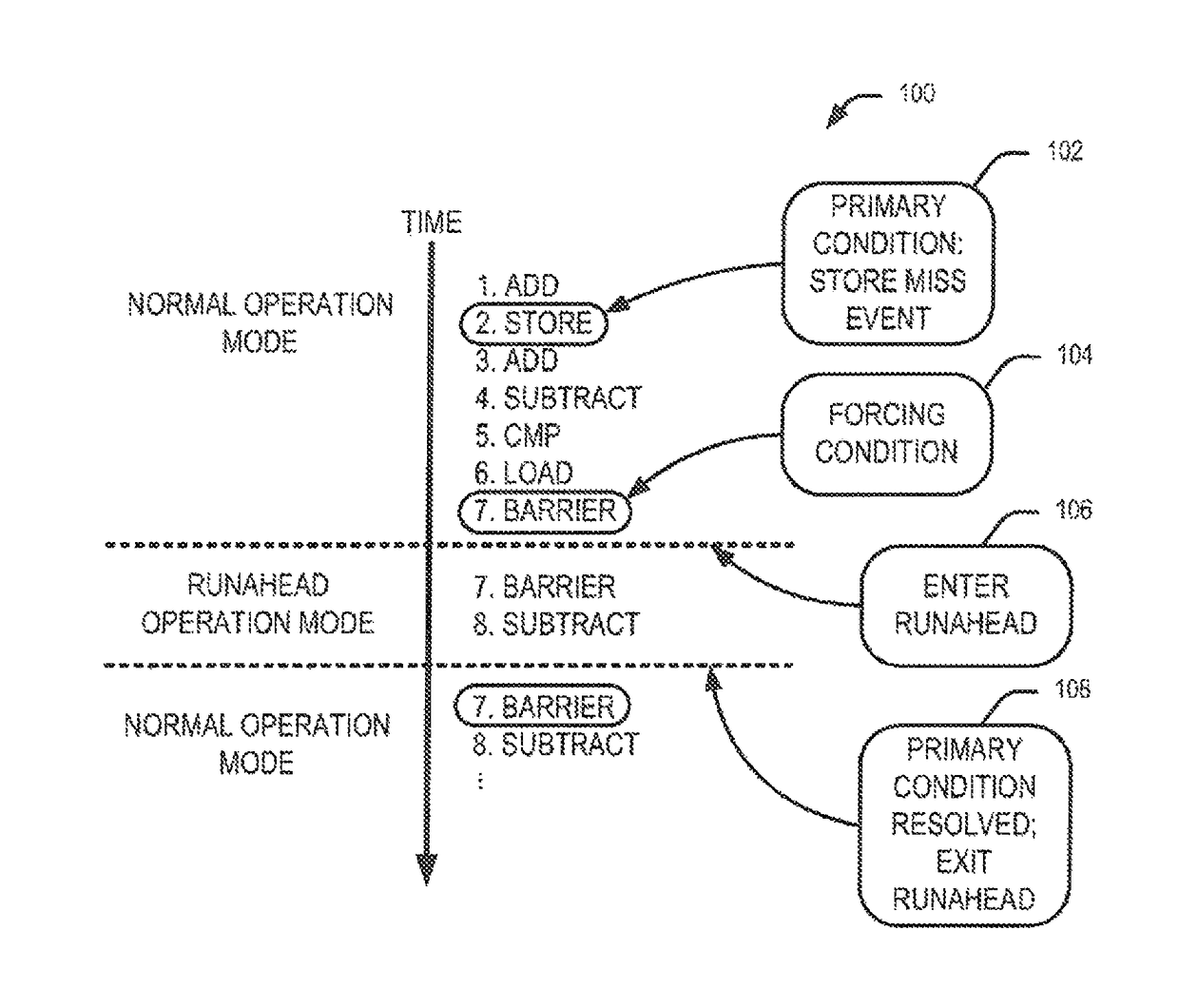

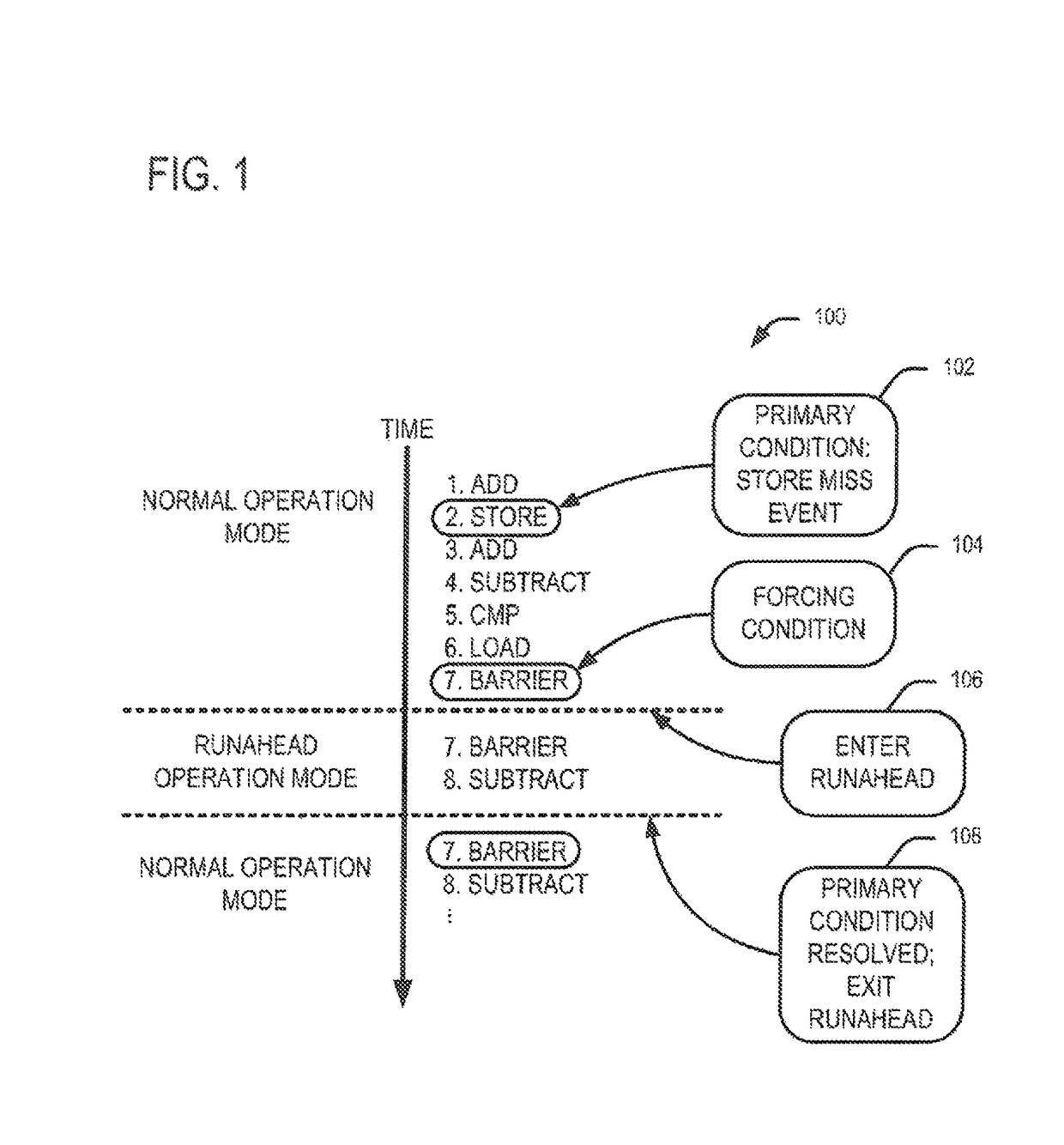

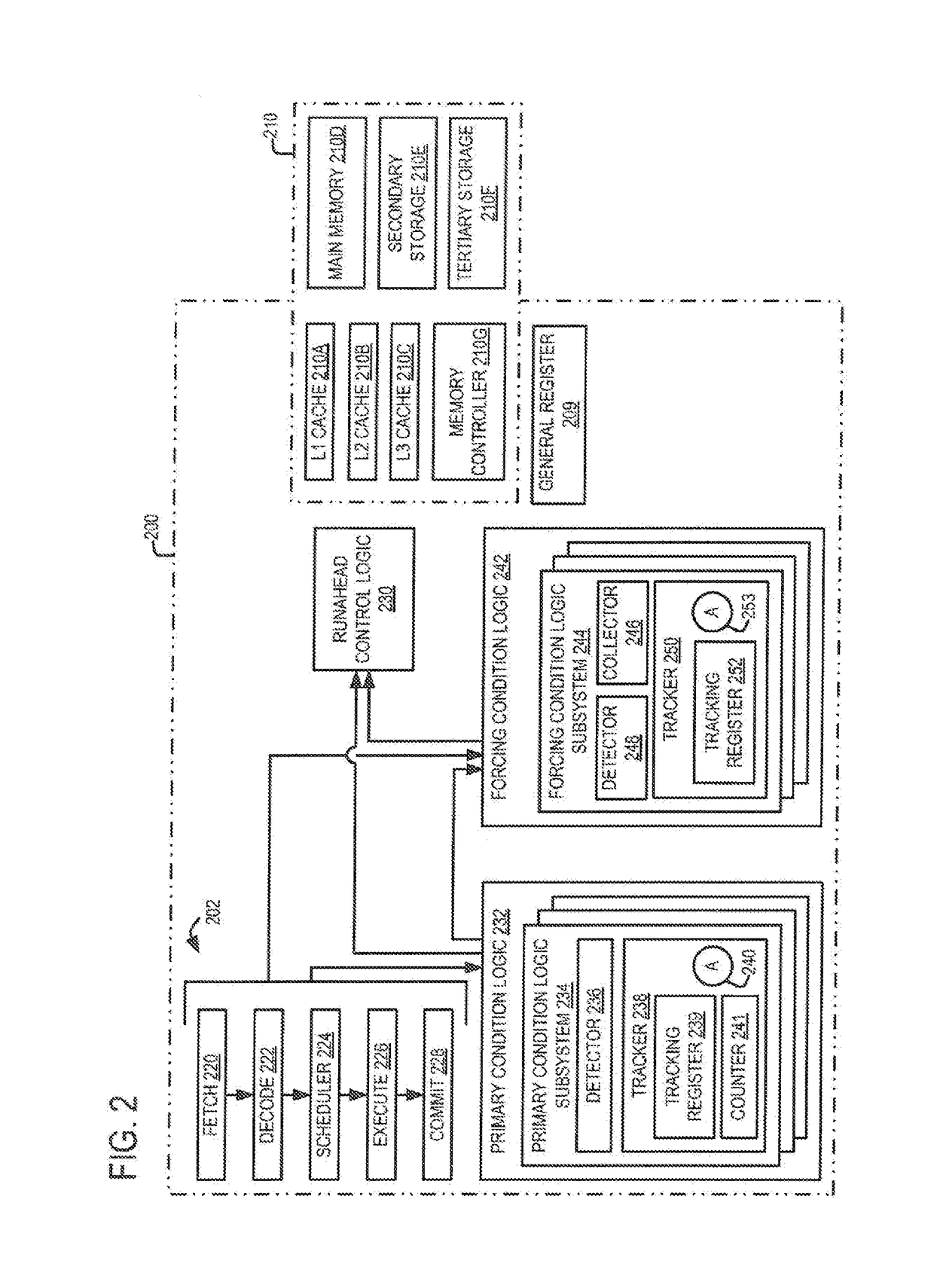

Lazy runahead operation for a microprocessor

Active | US20170199778A1 | Concurrent instruction execution | Non-redundant fault processing | Software engineering | Operating system

Embodiments related to managing lazy runahead operations at a microprocessor are disclosed. For example, an embodiment of a method for operating a microprocessor described herein includes identifying a primary condition that triggers an unresolved state of the microprocessor. The example method also includes identifying a forcing condition that compels resolution of the unresolved state. The example method also includes, in response to identification of the forcing condition, causing the microprocessor to enter a runahead mode.

Owner:NVIDIA CORP

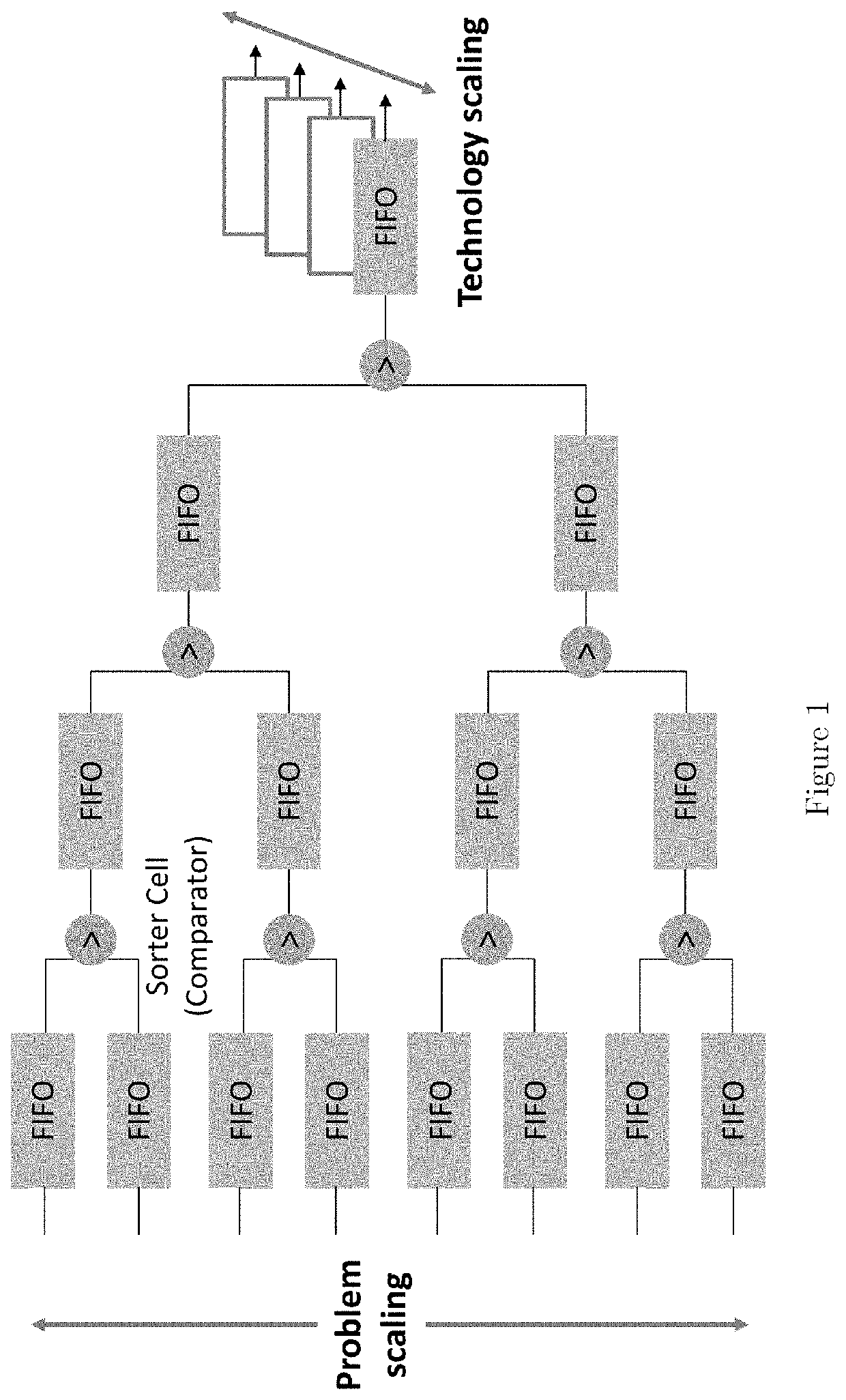

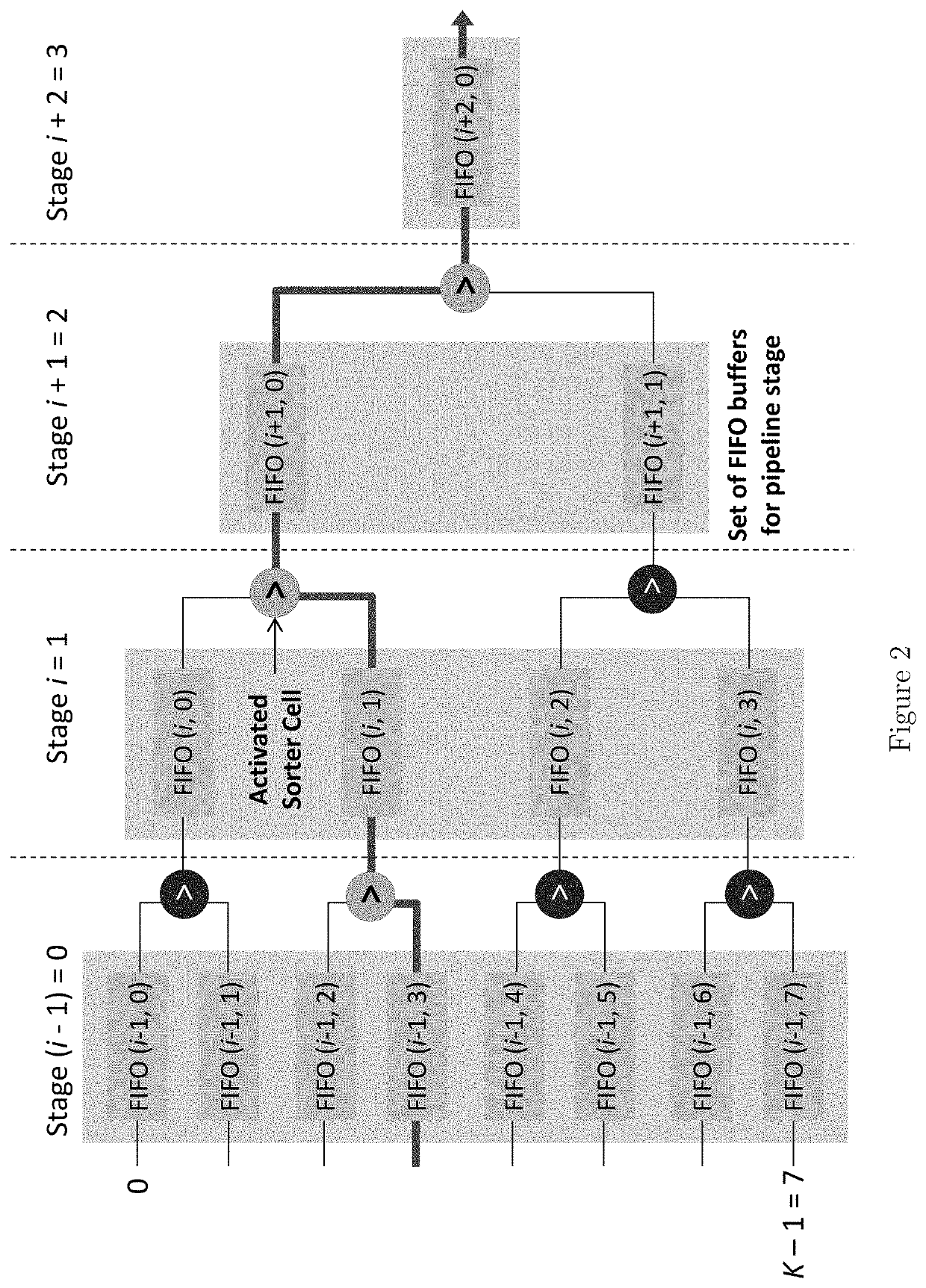

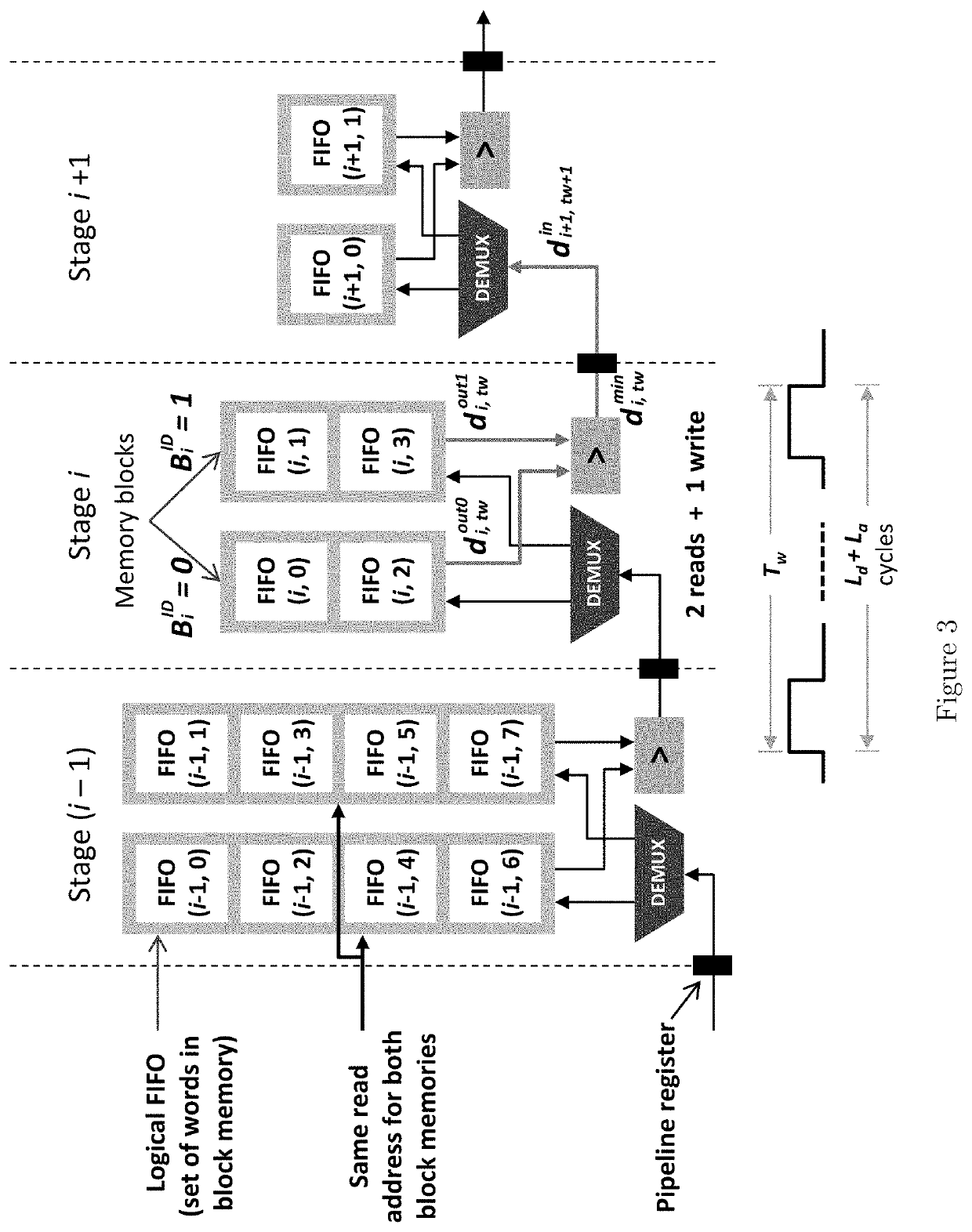

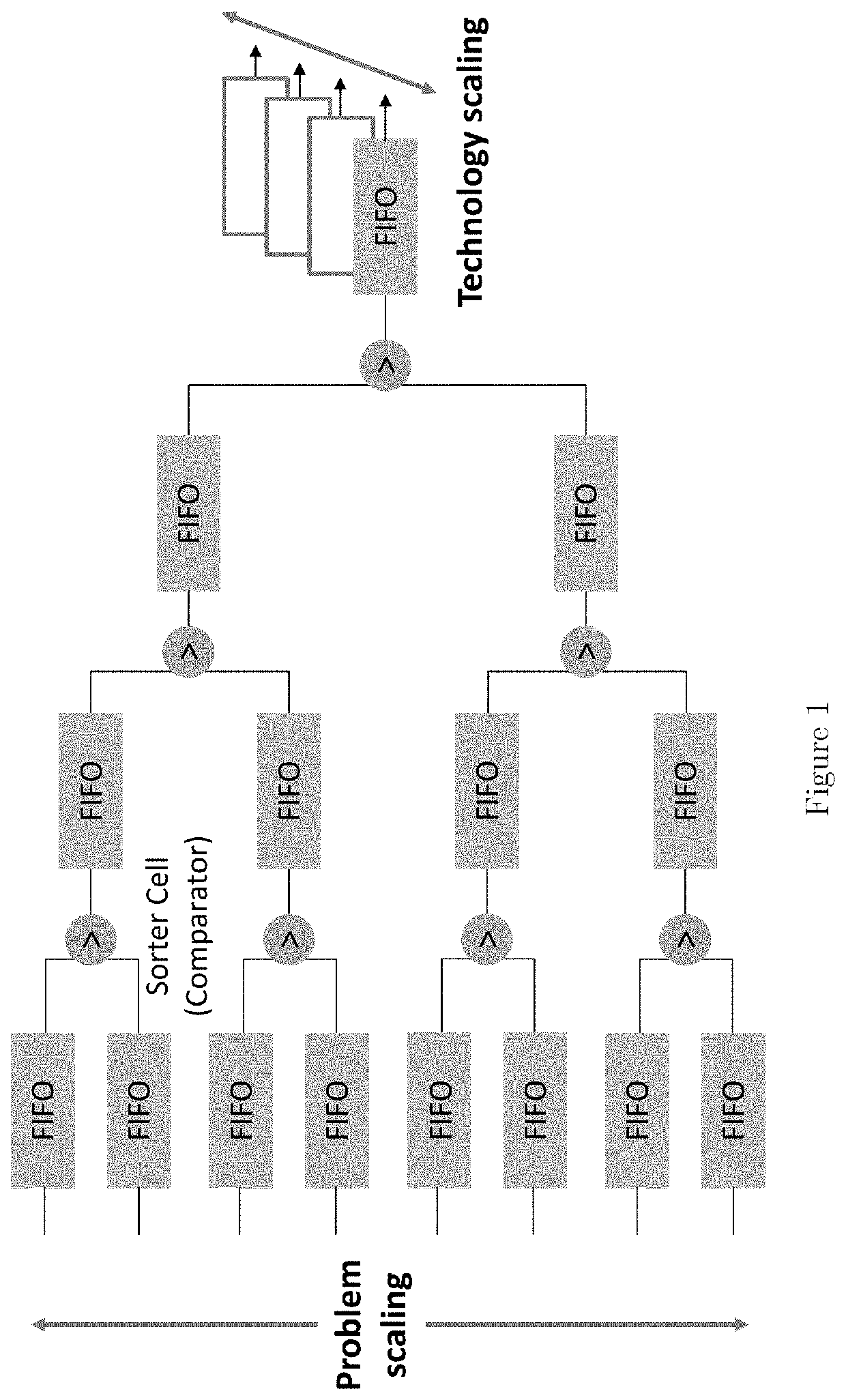

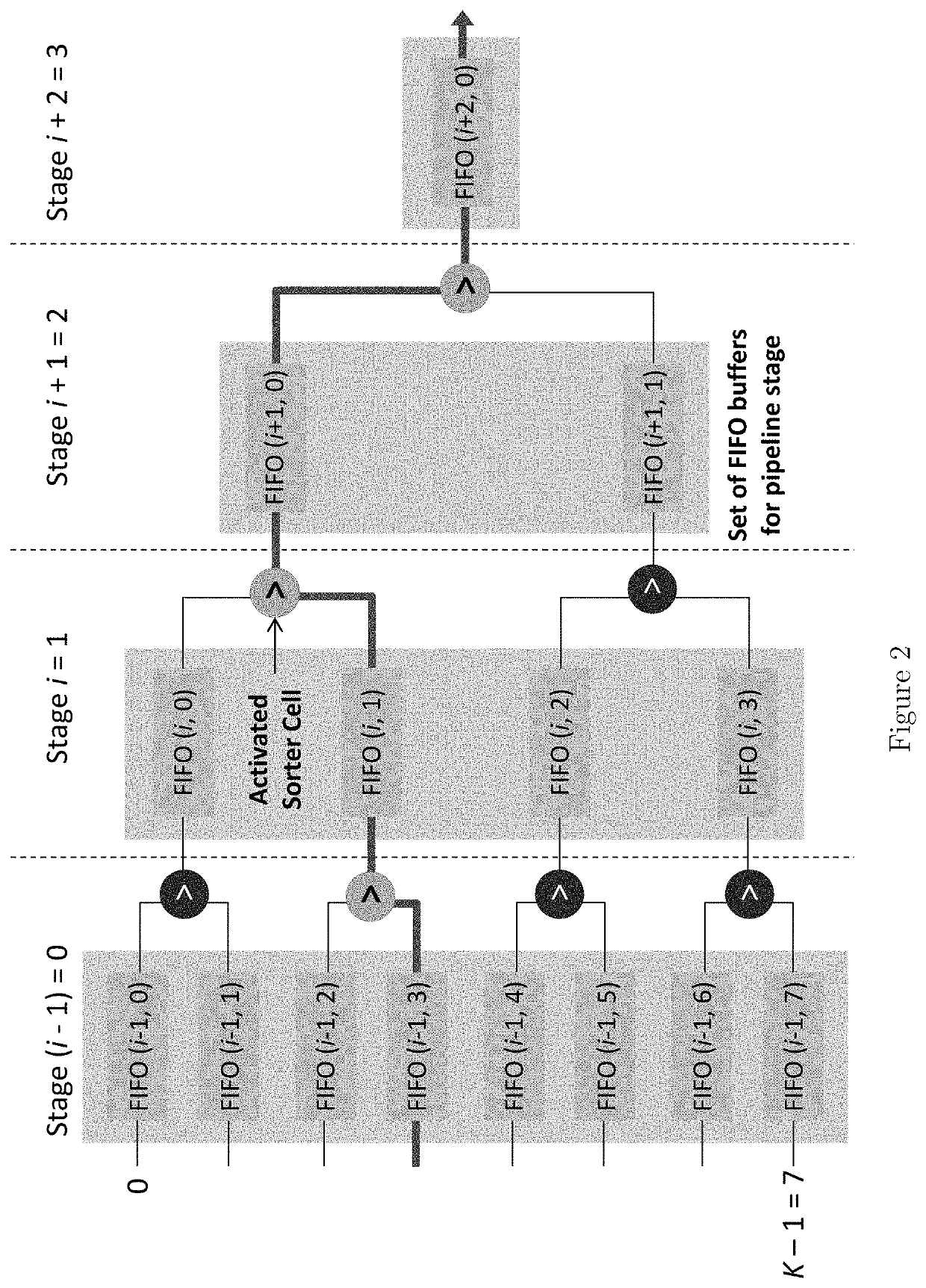

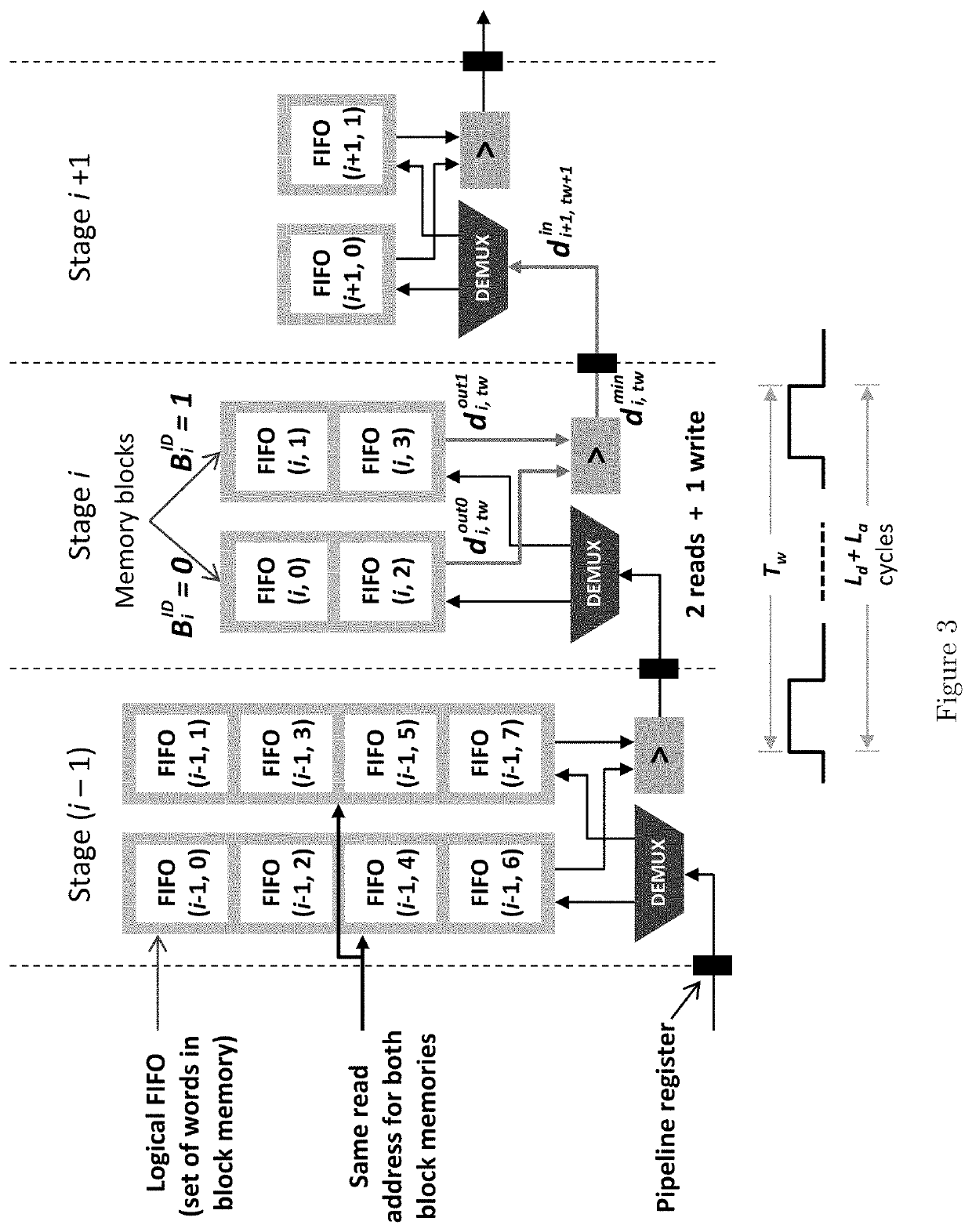

High performance merge sort with scalable parallelization and full-throughput reduction

Active | US20200159492A1 | Improve performance | More scalable | Computation using non-contact making devices | Interprogram communication | Transmission throughput | Parallel computing

Disclosed herein is a novel multi-way merge network, referred to herein as a Hybrid Comparison Look Ahead Merge (HCLAM), which incurs significantly less resource consumption as it is scaled to handle larger problems. In addition, a parallelization scheme is disclosed, referred to herein as Parallelization by Radix Pre-sorter (PRaP), which enables an increase in streaming throughput of the merge network. Furthermore, a high-performance reduction scheme is disclosed to achieve full throughput.

Owner:CARNEGIE MELLON UNIV

Store-to-load forwarding mechanism for processor runahead mode operation

Inactive | US8639886B2 | Memory addressing/allocation/relocation | Program control | Load instruction | Parallel computing

A system and method to optimize runahead operation for a processor without use of a separate explicit runahead cache structure. Rather than simply dropping store instructions in a processor runahead mode, store instructions write their results in an existing processor store queue, although store instructions are not allowed to update processor caches and system memory. Use of the store queue during runahead mode to hold store instruction results allows more recent runahead load instructions to search retired store queue entries in the store queue for matching addresses to utilize data from the retired, but still searchable, store instructions. Retired store instructions could be either runahead store instructions retired, or retired store instructions that executed before entering runahead mode.

Owner:IBM CORP

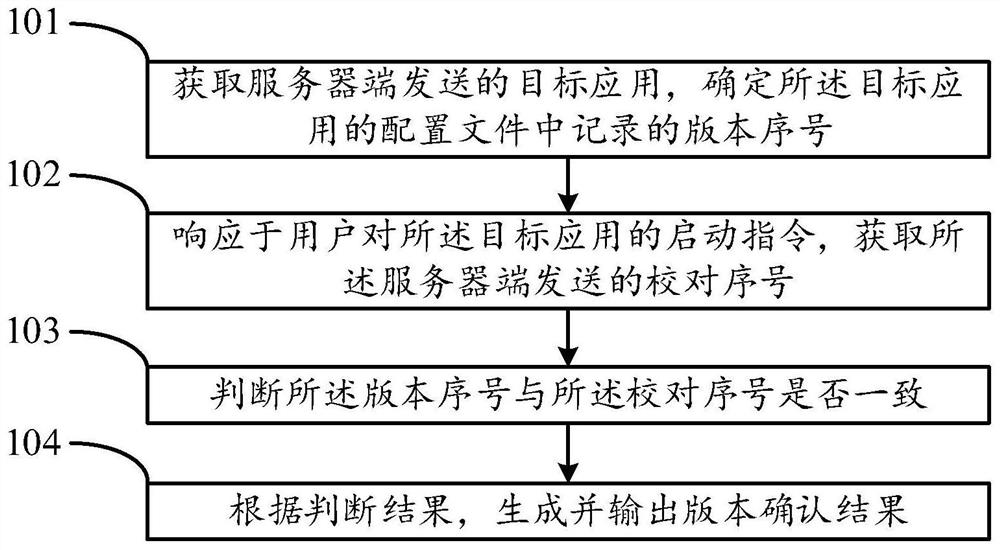

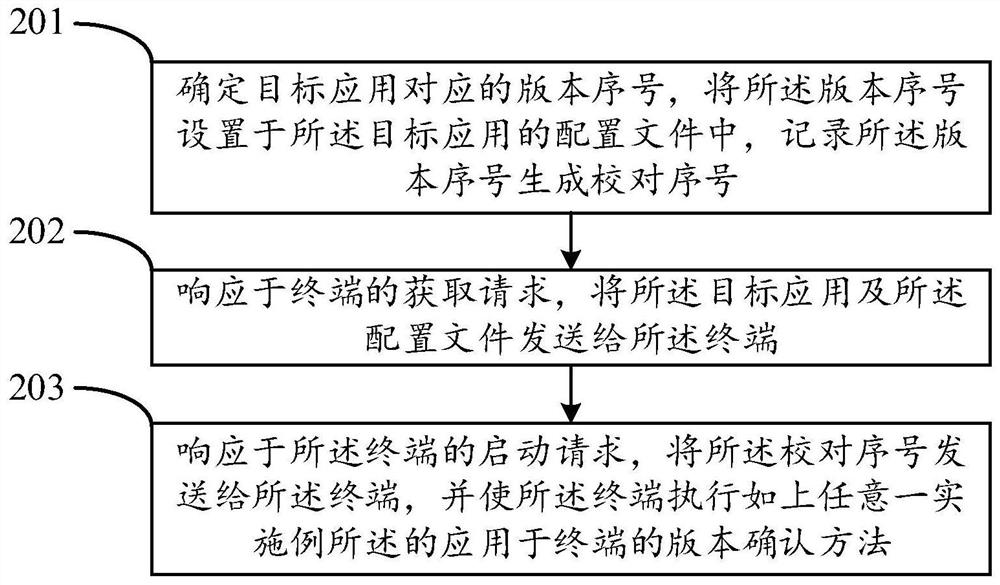

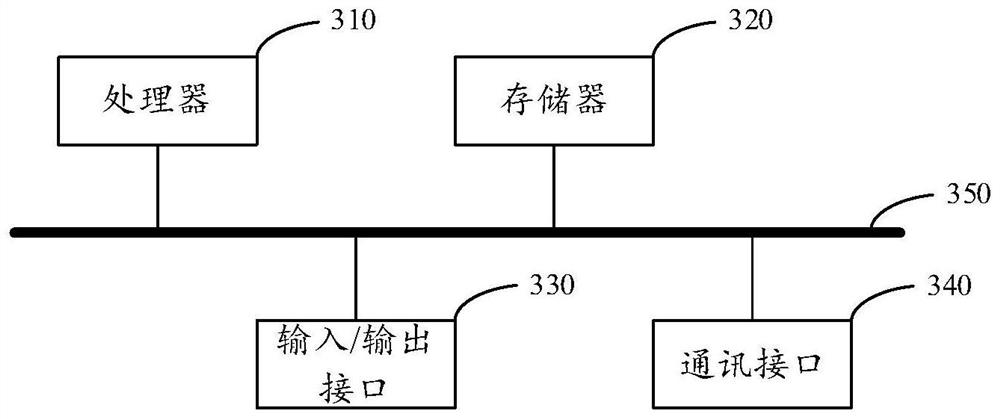

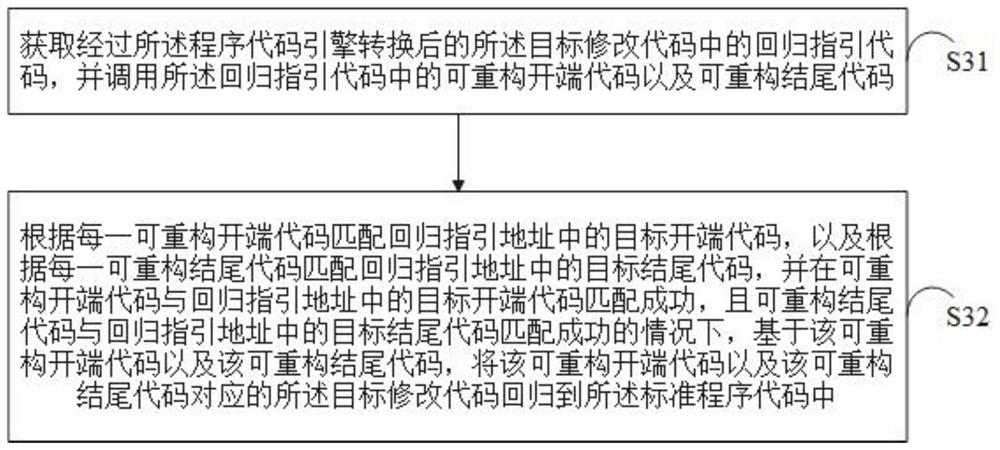

Version confirmation method and system, electronic equipment and storage medium

Pending | CN112486946A | Quick proofreading | Solving consistency issues | Special data processing applications | Database design/maintenance | Software engineering | Correction number

One or more embodiments of the invention provide a version confirmation method and system, electronic equipment and a storage medium. The method comprises the steps of: obtaining a target application sent by a server, and determining a version serial number recorded in a configuration file of the target application; in response to a starting instruction of a user for the target application, obtaining a proofreading serial number sent by the server side; judging whether the version serial number is consistent with the proofreading serial number; and generating and outputting a version confirmation result according to the judgment result. According to one or more embodiments of the invention, the version serial number is recorded in the configuration file of the target application in advance, so that the version serial number can be obtained in advance when the user starts the application and can be quickly checked against the proofreading serial number sent by the server. Therefore, the need to determine version consistency through manual version checking is avoided, the checking efficiency is improved, missed or wrong checks are prevented, and the checking accuracy is improved.

Owner:CHINA LIFE INSURANCE COMPANY

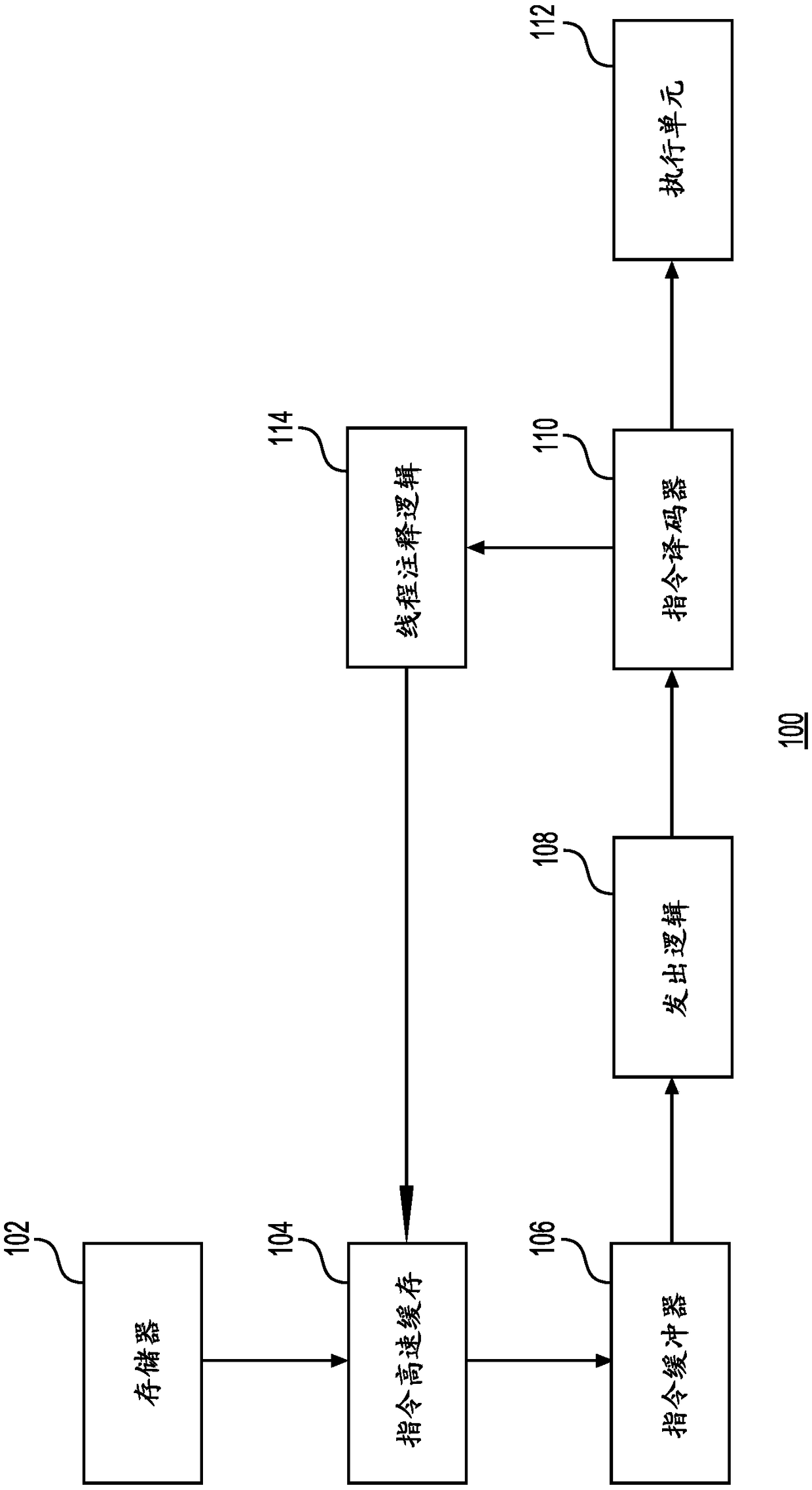

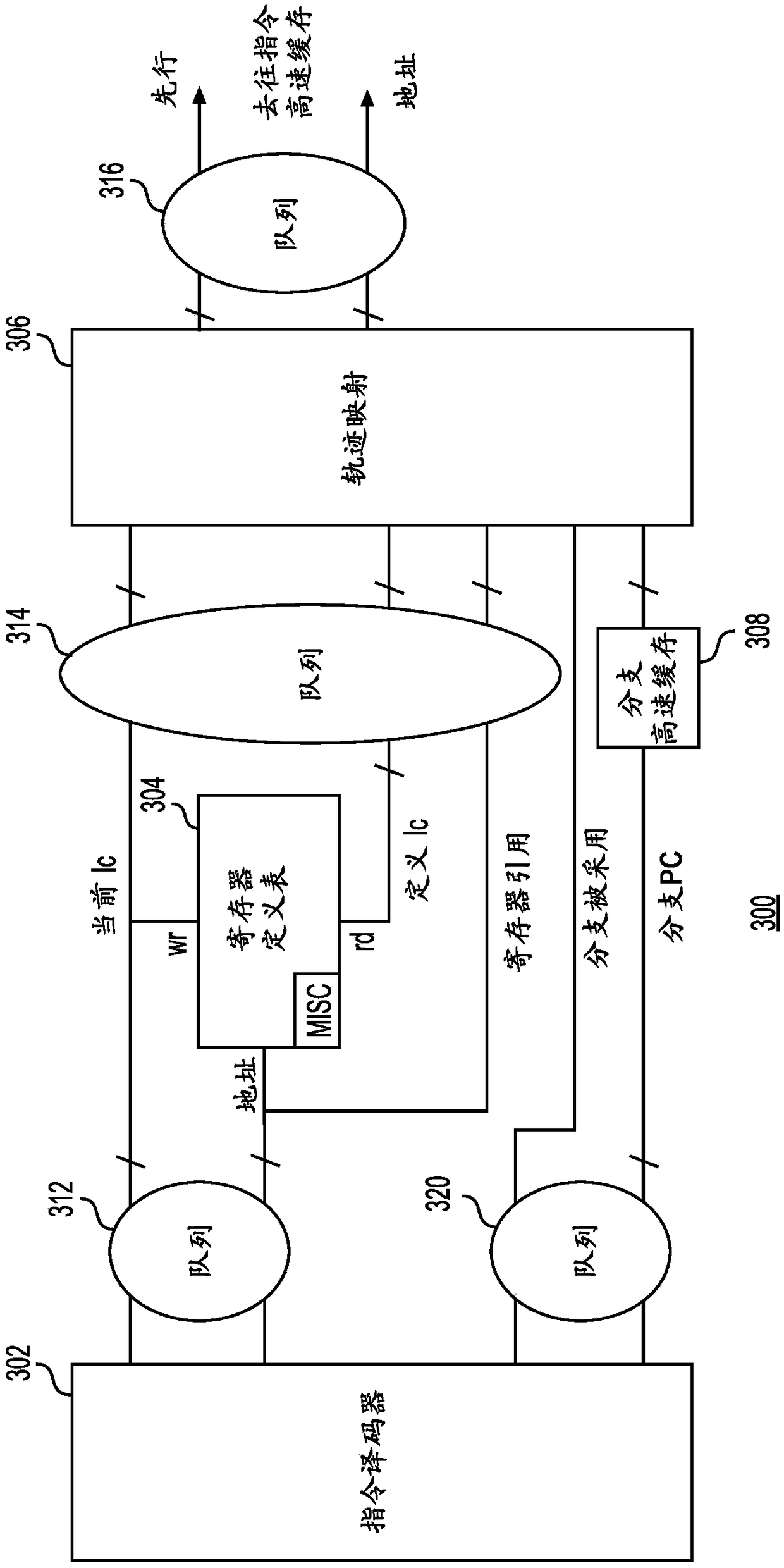

Processor with instruction lookahead issue logic

Active | CN109074258A | Memory architecture accessing/allocation | Instruction analysis | Computer architecture | Parallel computing

A processor having an instruction cache for storing a plurality of instructions is provided. The processor further includes annotation logic configured to determine a lookahead distance associated with an instruction and annotate the at least one instruction cache with the lookahead distance. The lookahead distance may correspond to a number of instructions that separates an instruction that references a register from the most recent register definition. The lookahead distance may indicate the shortest distance to a later instruction that references a register that this instruction defines.

Owner:MICROSOFT TECH LICENSING LLC
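The last sentence of the abstract above, the shortest distance to a later instruction that references a register this instruction defines, can be computed as in the sketch below. The instruction encoding (a destination register plus a tuple of source registers) and the choice to measure distance as an index difference are assumptions for illustration; the abstract does not fix either.

```python
def lookahead_distances(instrs):
    """For each instruction, distance to the first later reader of its destination.

    `instrs` is a list of (dst, srcs) pairs, where `dst` is a register name or
    None and `srcs` is a tuple of register names. The result holds None when the
    value is never read or is overwritten before any read.
    """
    result = []
    for i, (dst, _) in enumerate(instrs):
        distance = None
        if dst is not None:
            for j in range(i + 1, len(instrs)):
                later_dst, later_srcs = instrs[j]
                if dst in later_srcs:   # first consumer found
                    distance = j - i
                    break
                if later_dst == dst:    # redefined before being read
                    break
        result.append(distance)
    return result

instrs = [
    ("r1", ()),             # r1 = constant
    ("r2", ()),             # r2 = constant
    ("r3", ("r1",)),        # r3 = f(r1)
    ("r4", ("r2", "r3")),   # r4 = f(r2, r3)
    ("r1", ()),             # r1 redefined, never read afterwards
]
print(lookahead_distances(instrs))
```

Sources are checked before the redefinition test, so an instruction such as `r1 = r1 + 1` counts as a reader of the earlier `r1`.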

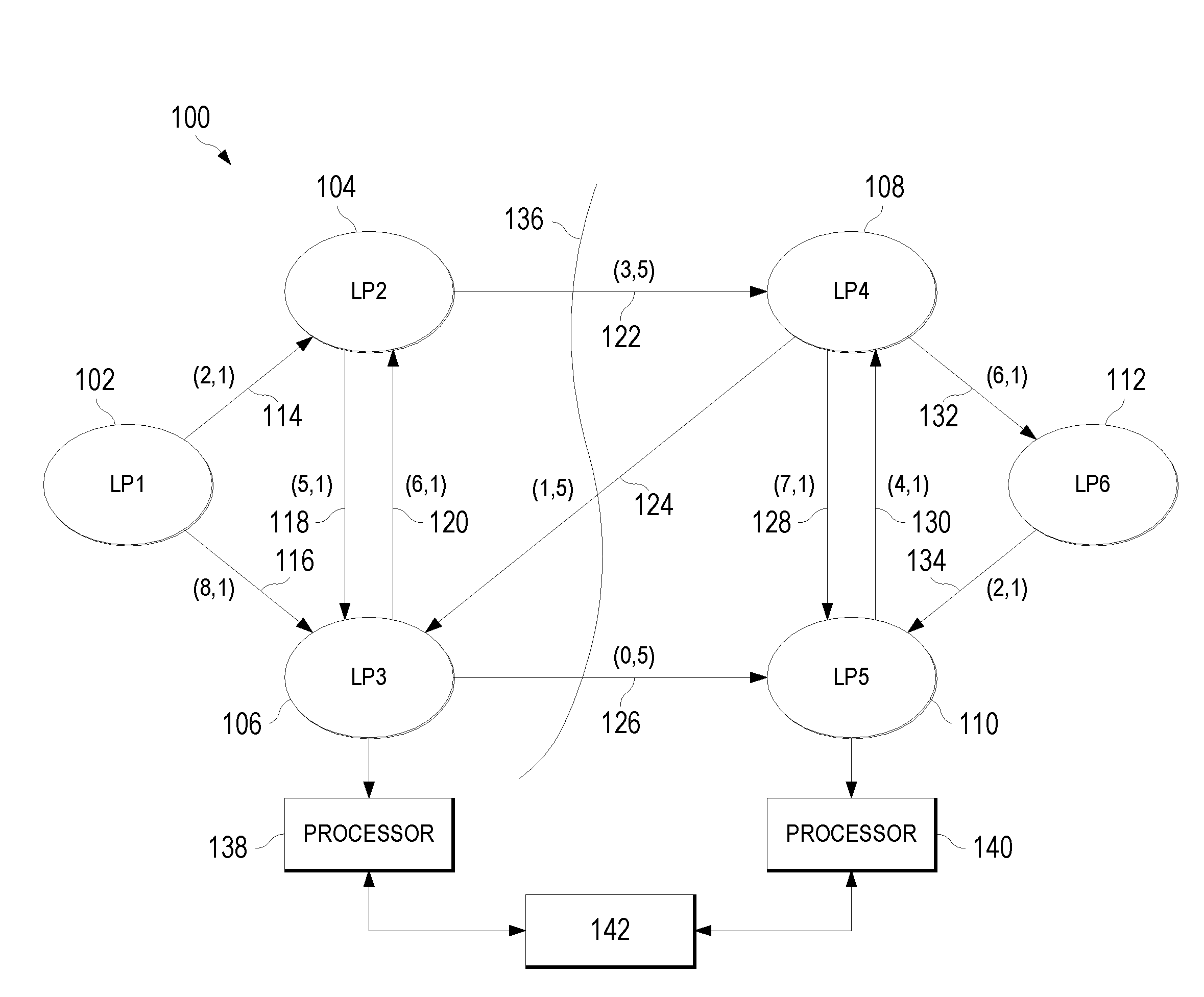

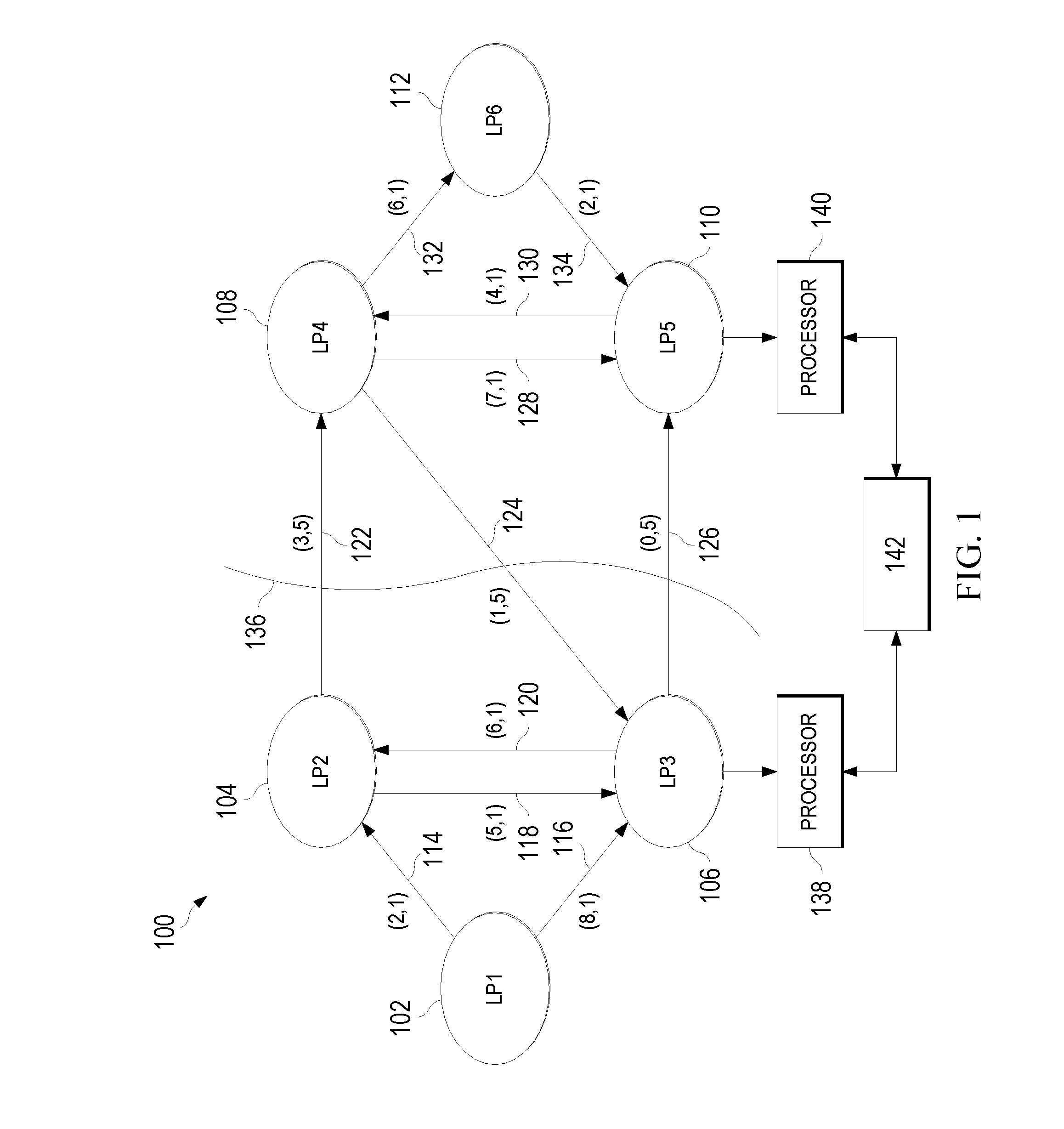

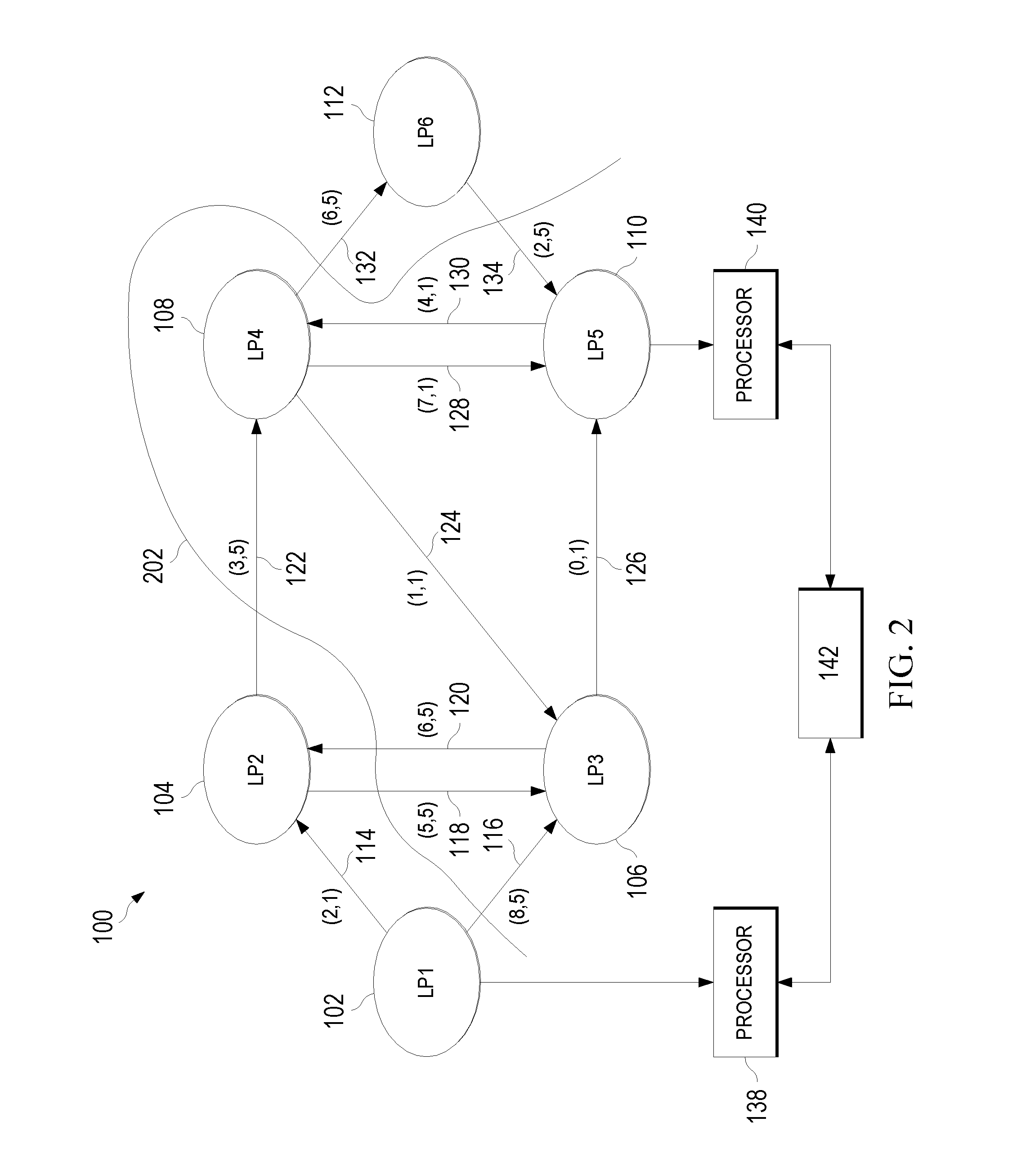

Workload Partitioning Procedure for Null Message-Based PDES

Active | US20160110226A1 | Accurate assessment | Accurate performance evaluation | Resource allocation | CAD circuit design | Workload | Computer science

Owner:INT BUSINESS MASCH CORP

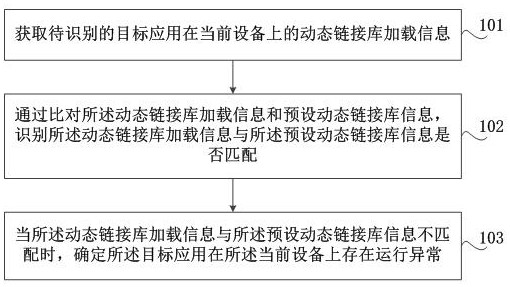

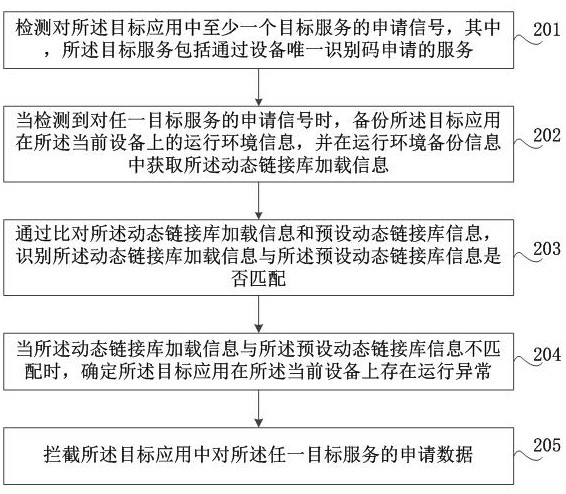

Abnormal recognition method and device, storage medium and computer equipment

Inactive | CN114860351A | Detect malicious behavior in time | Protect interests | Platform integrity maintenance | Program loading/initiating | Engineering | Data mining

The invention discloses an anomaly recognition method and device, a storage medium and computer equipment. The method comprises the steps of: acquiring dynamic link library loading information of a to-be-recognized target application on the current equipment; identifying whether the dynamic link library loading information matches preset dynamic link library information by comparing the two; and, when the dynamic link library loading information does not match the preset dynamic link library information, determining that the target application runs abnormally on the current equipment. Malicious behavior involving a virtual device unique identification code on the device can be found in time, which plays a preventive role in dealing with malicious actors and discovering malicious discount behaviors, and further protects the interests of the platform, merchants and consumers.

Owner:ZHEJIANG KOUBEI NETWORK TECH CO LTD

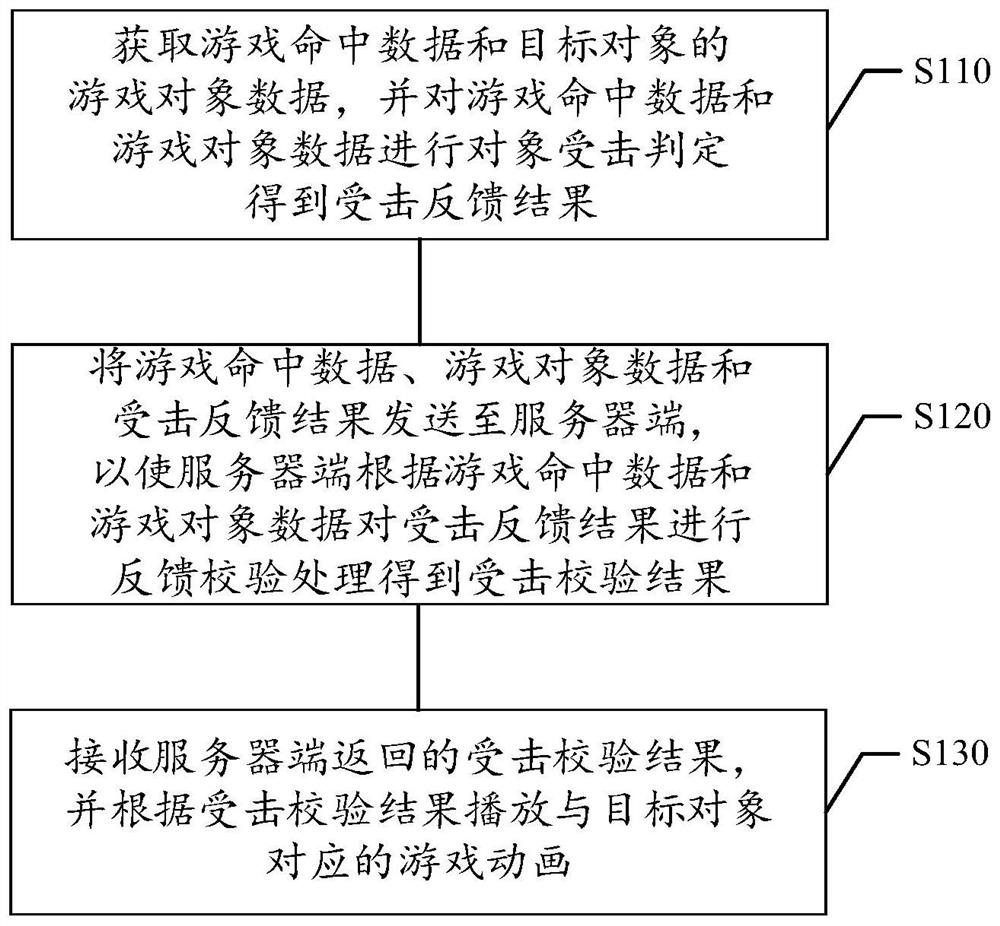

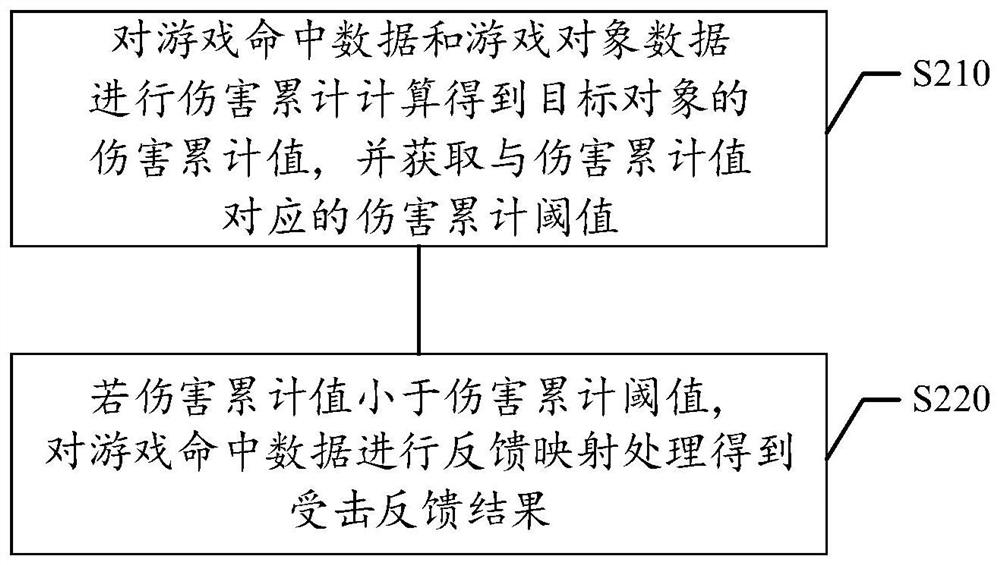

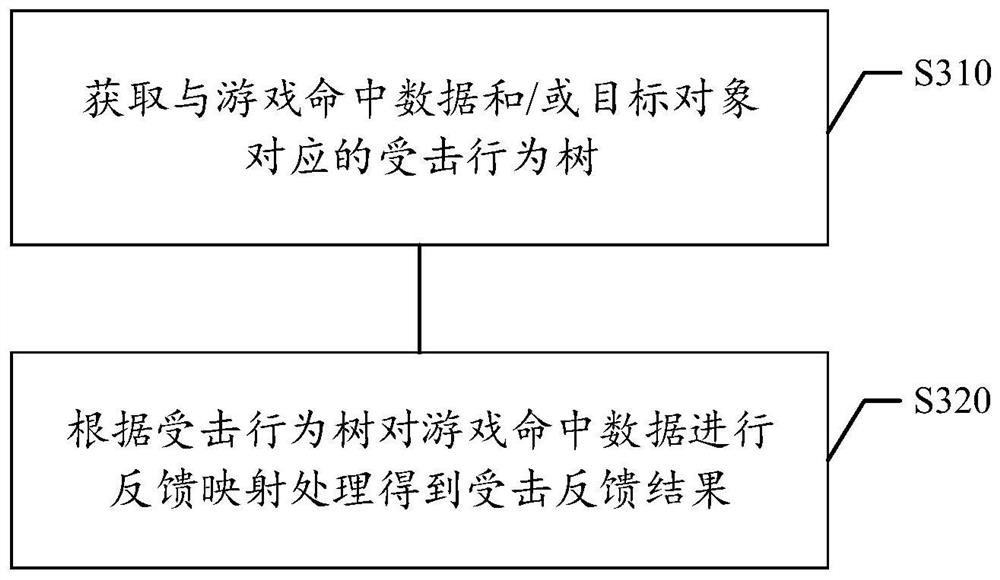

Game data processing method and device, storage medium and electronic equipment

The invention belongs to the technical field of computers, and relates to a game data processing method and device, a storage medium and electronic equipment. The method comprises the steps of obtaining game hit data and game object data of a target object, and performing object hit judgment on the game hit data and the game object data to obtain a hit feedback result; sending the game hit data, the game object data and the hit feedback result to a server side, so that the server side performs feedback verification processing on the hit feedback result according to the game hit data and the game object data to obtain a hit verification result; and receiving a hit verification result returned by the server side, and playing a game animation corresponding to the target object according to the hit verification result. According to the method and the device, the timeliness and the quickness of animated playing of the preceding game can be ensured, the problem that data of the terminal equipment and the server side are inconsistent is solved, meanwhile, the accuracy of the game data and the calculation result is ensured, and the situation of data cheating of the terminal equipment is prevented.

Owner:NETEASE (HANGZHOU) NETWORK CO LTD

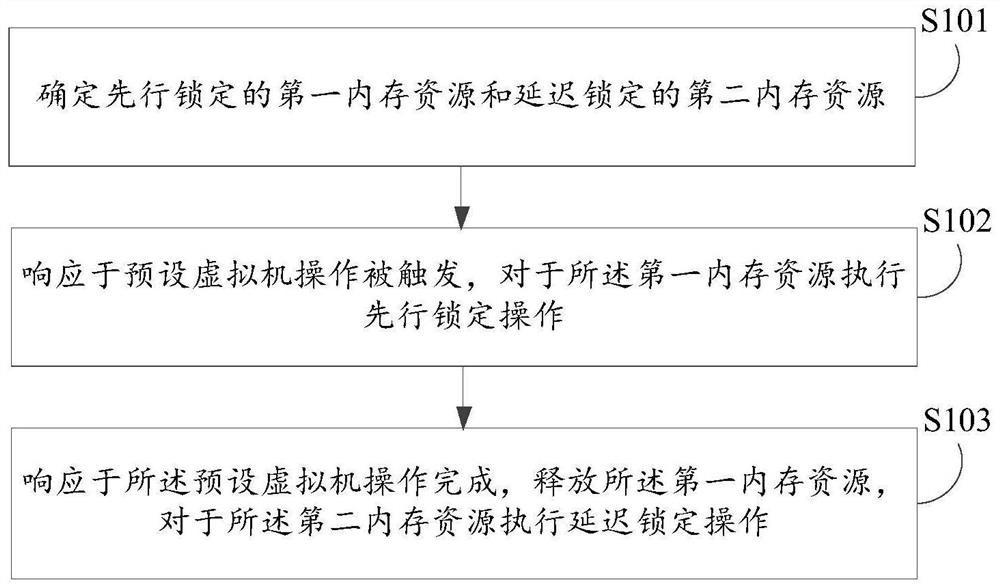

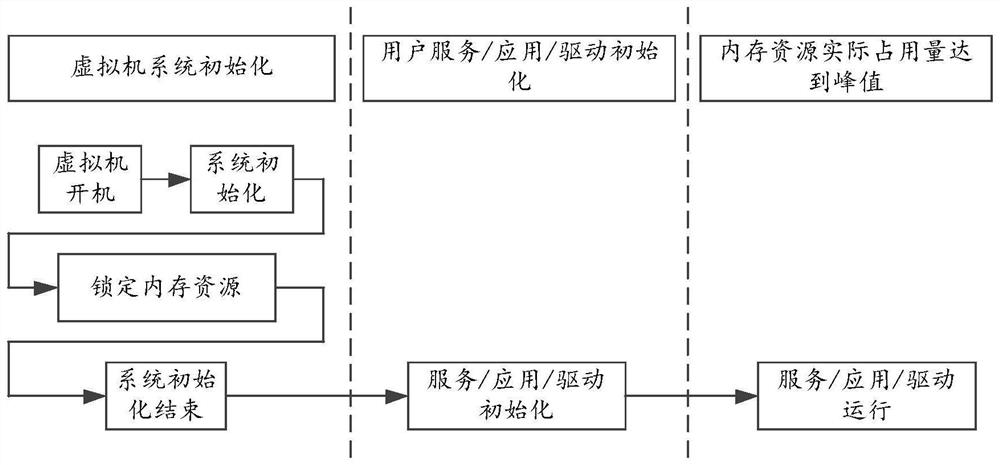

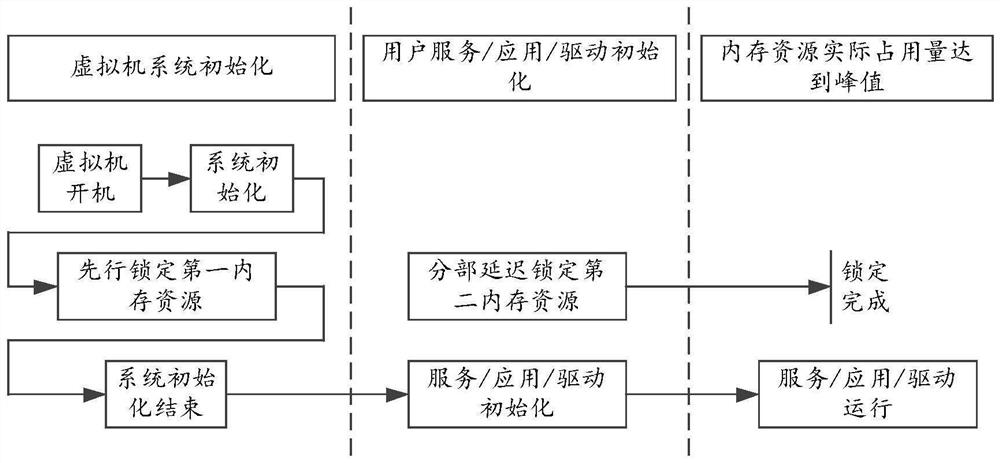

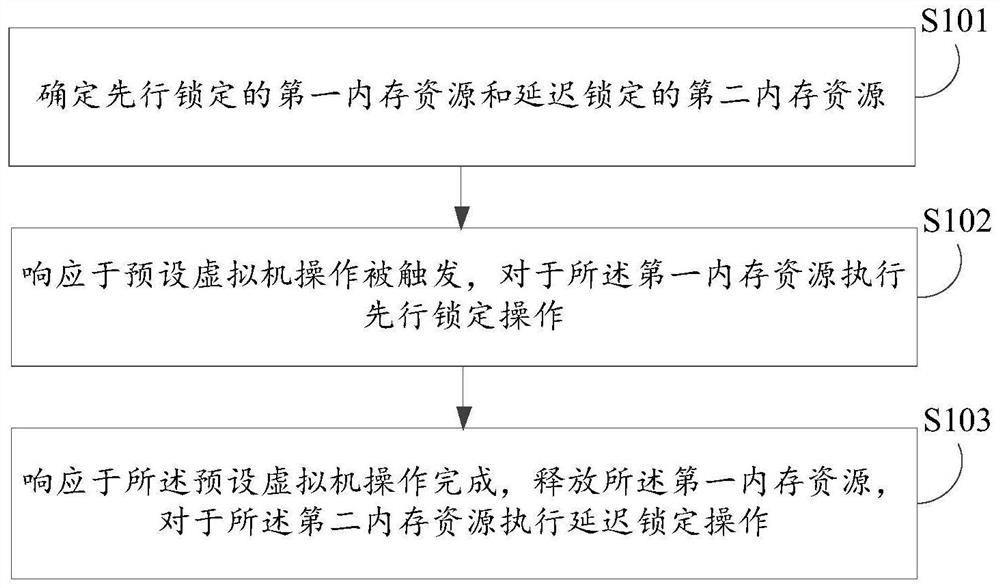

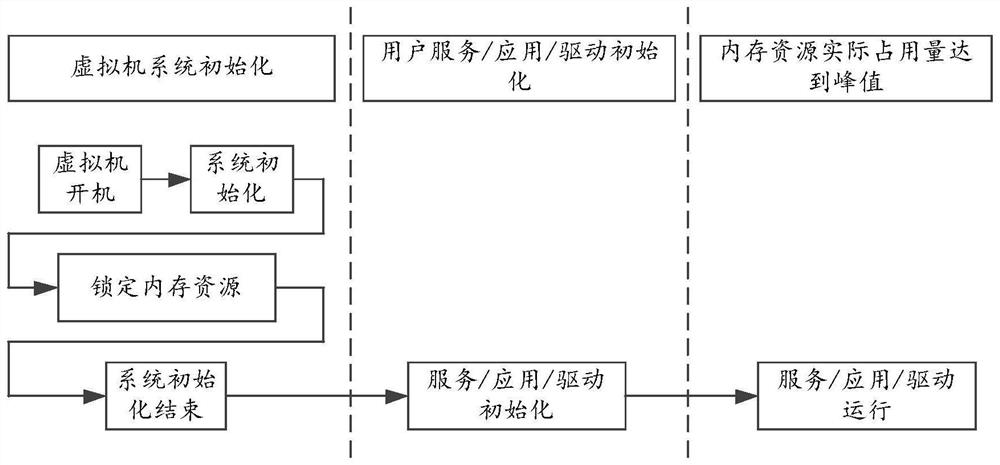

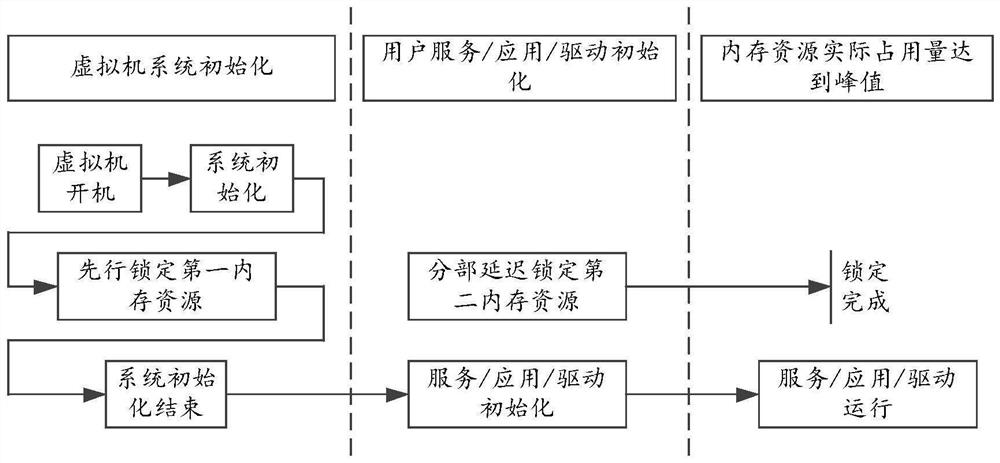

Memory management method, device, electronic device, and computer-readable storage medium

Active | CN113377492B | Does not significantly increase startup time | Guarantee the quality of operation | Resource allocation | Software simulation/interpretation/emulation | Term memory | Computer engineering

Embodiments of the present invention disclose a memory management method, apparatus, electronic device, and computer-readable storage medium. The method includes: determining a first memory resource to be locked in advance and a second memory resource to be locked with a delay; in response to a preset virtual machine operation being triggered, performing a pre-lock operation on the first memory resource; and in response to the completion of the preset virtual machine operation, releasing the first memory resource and performing a delayed lock operation on the second memory resource. The technical solution does not significantly increase the startup time of the virtual machine even for large-capacity memory resources, thereby improving the startup and running speed and efficiency of the virtual machine while ensuring the running quality of the virtual machine.

Owner:ALIBABA CLOUD COMPUTING LTD

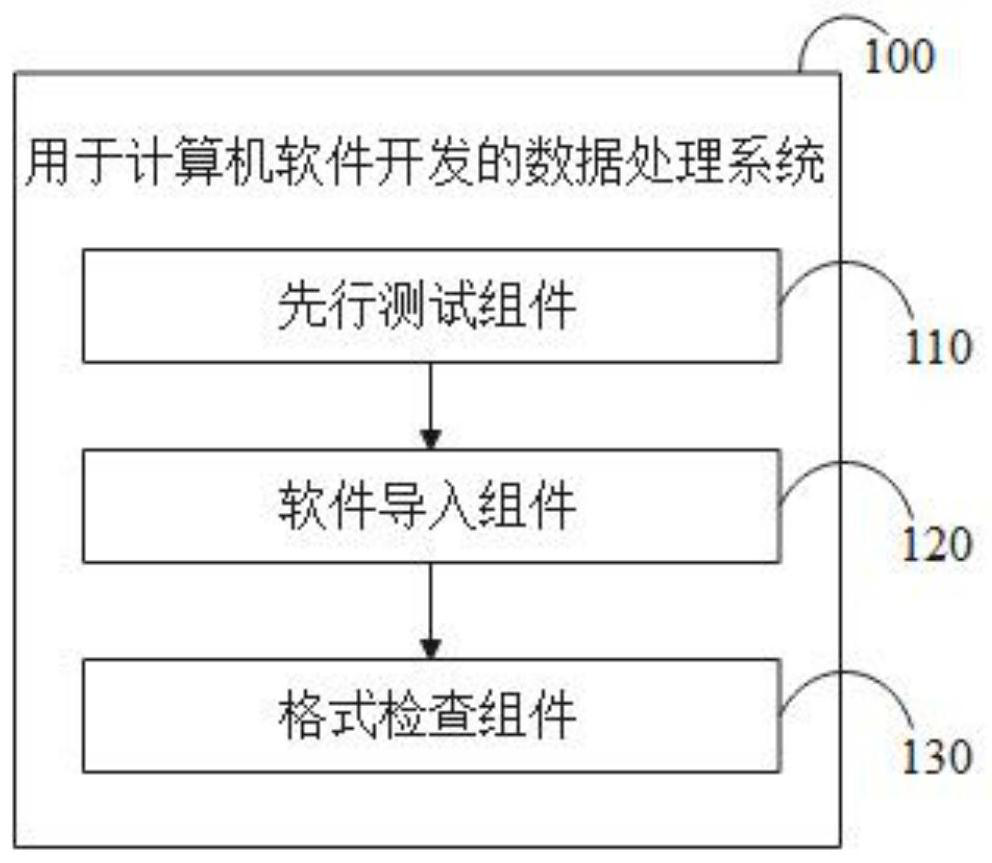

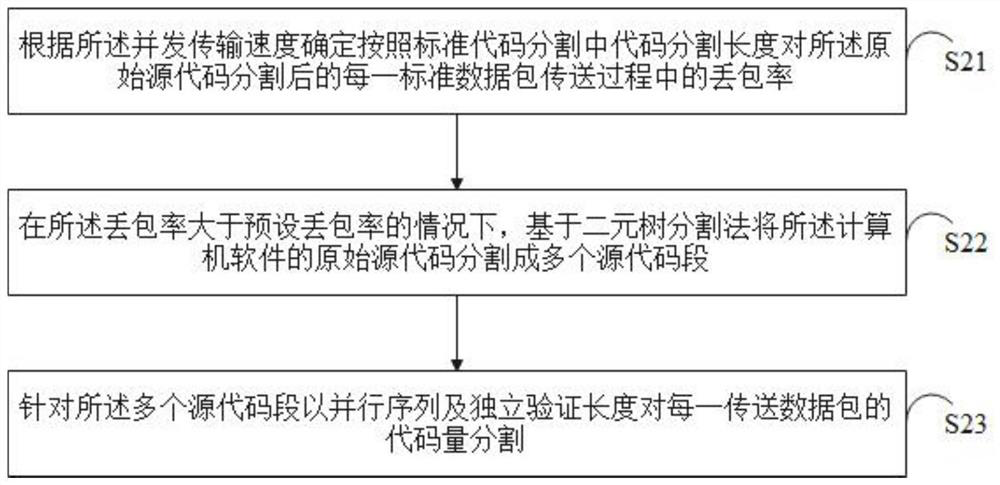

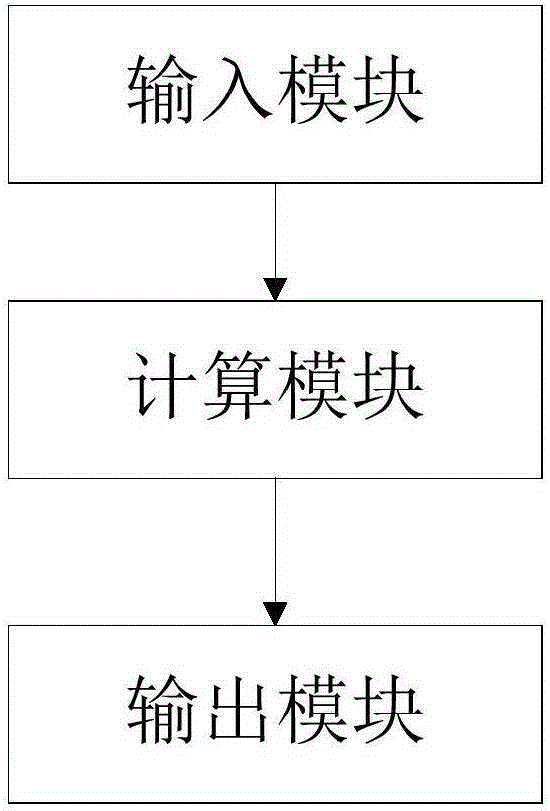

Data processing system for computer software development

Active | CN112905157A | Avoid wasting | Speed up the development process | Software design | Code compilation | Data pack | Data processing system

The invention relates to a data processing system for computer software development, relates to the technical field of computer software development, and aims at solving the problems that in related scenes, the time cost of computer software development is high, and the accuracy of computer software for a computer operating system is low. The system includes a pre-test component, a software import component, and a format check component. A code quantity partition of a transfer packet of an original source code is determined based on the pre-test component, based on the software import component, the program code engine is imported according to the code quantity of each transmission data packet to generate a standard program code for an operating system of a computer, and abnormal codes which do not conform to a calling relation in the standard program code are marked based on the format check component, so that the computer software development process can be accelerated; therefore, the time cost of computer software development is reduced, waste of development resources is avoided, and the accuracy of the computer software for the computer operating system can be improved.

Owner:中山市明源云科技有限公司

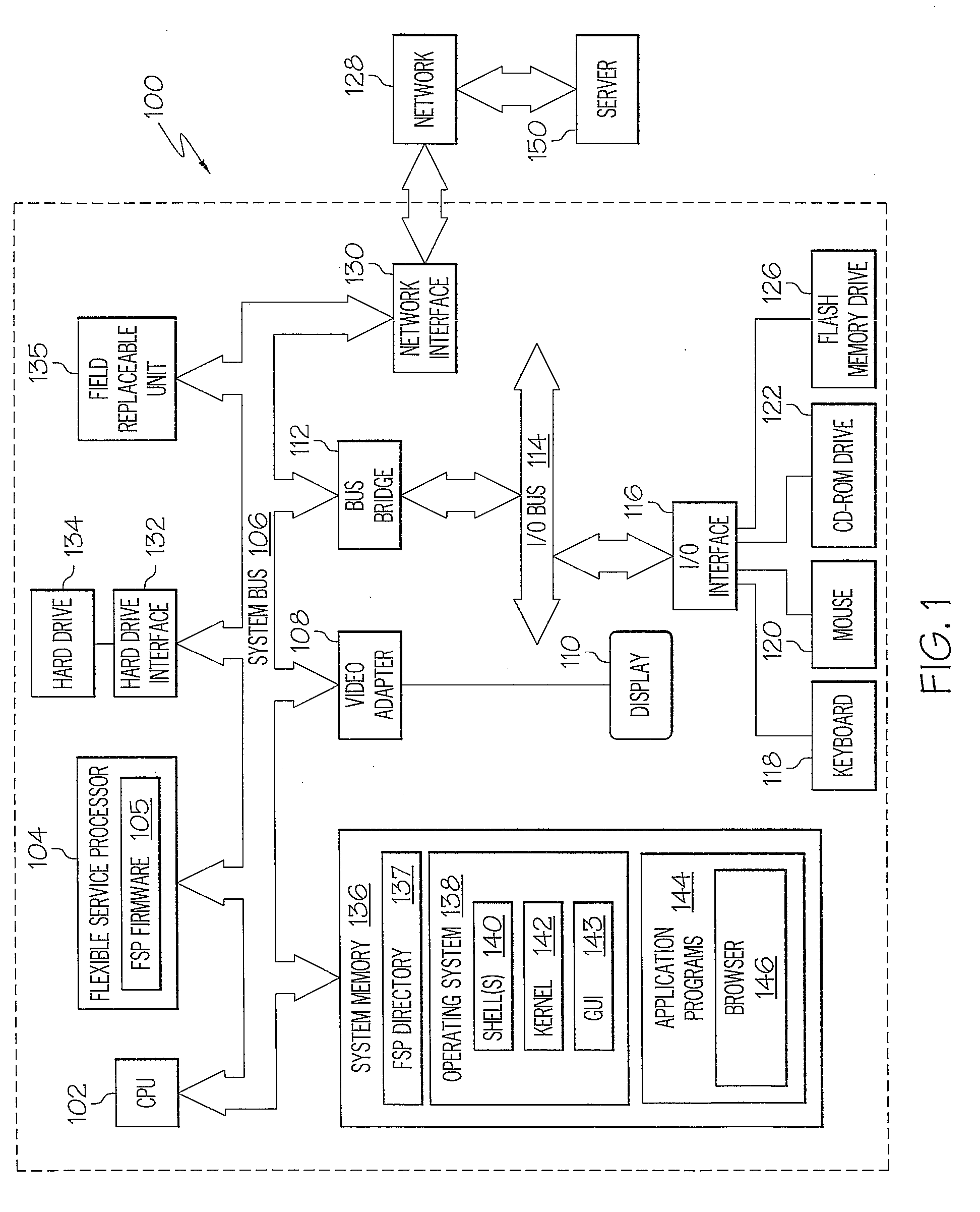

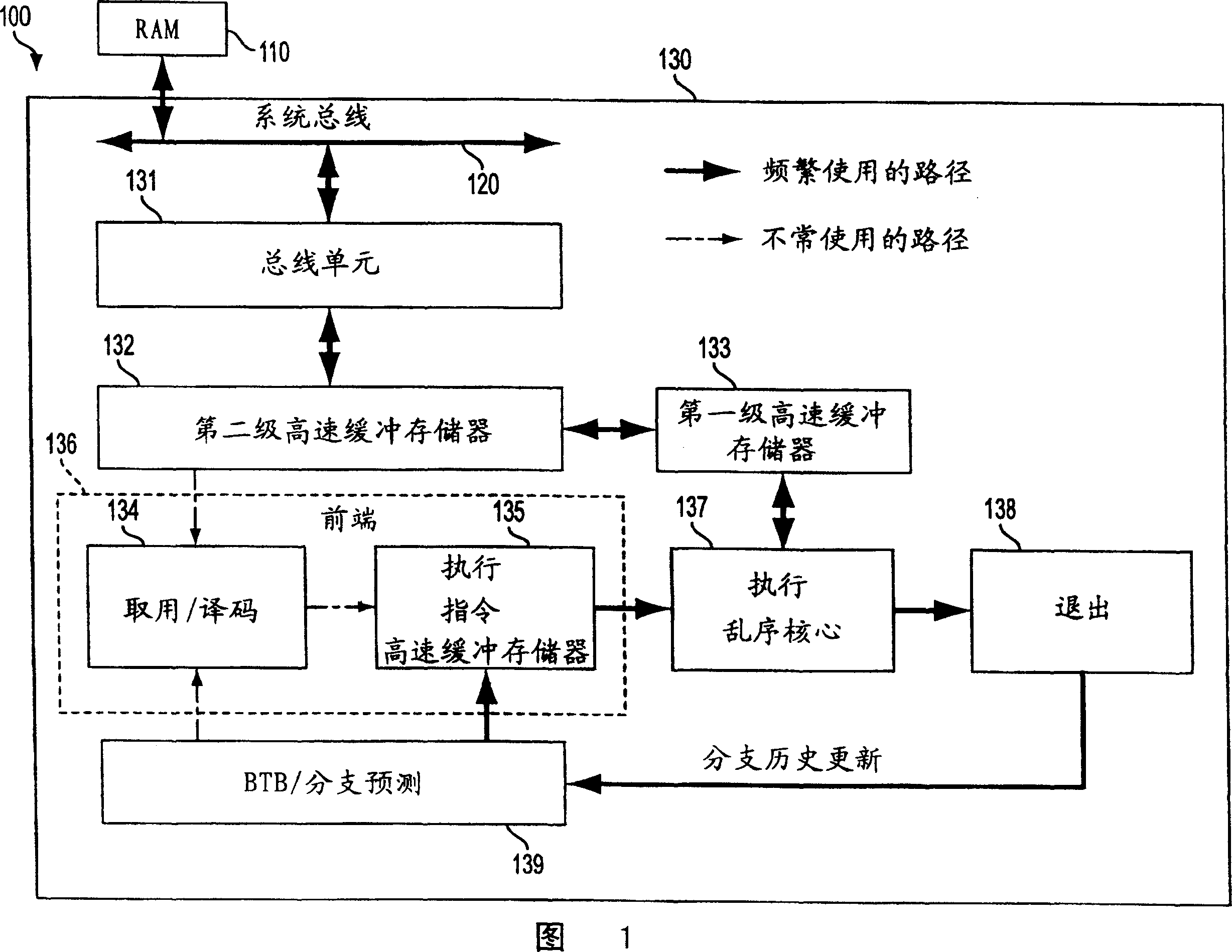

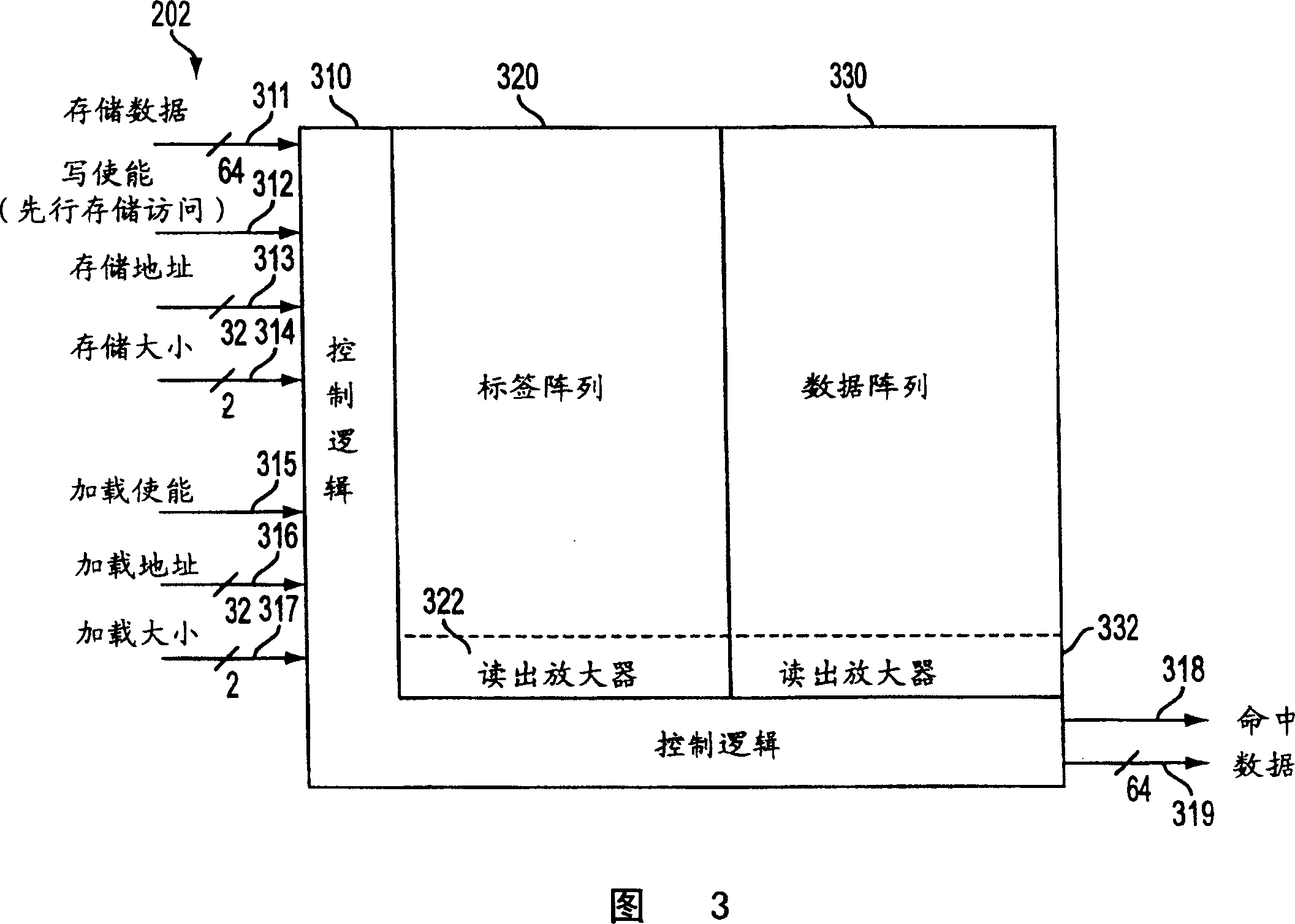

Apparatus for memory communication during runahead execution

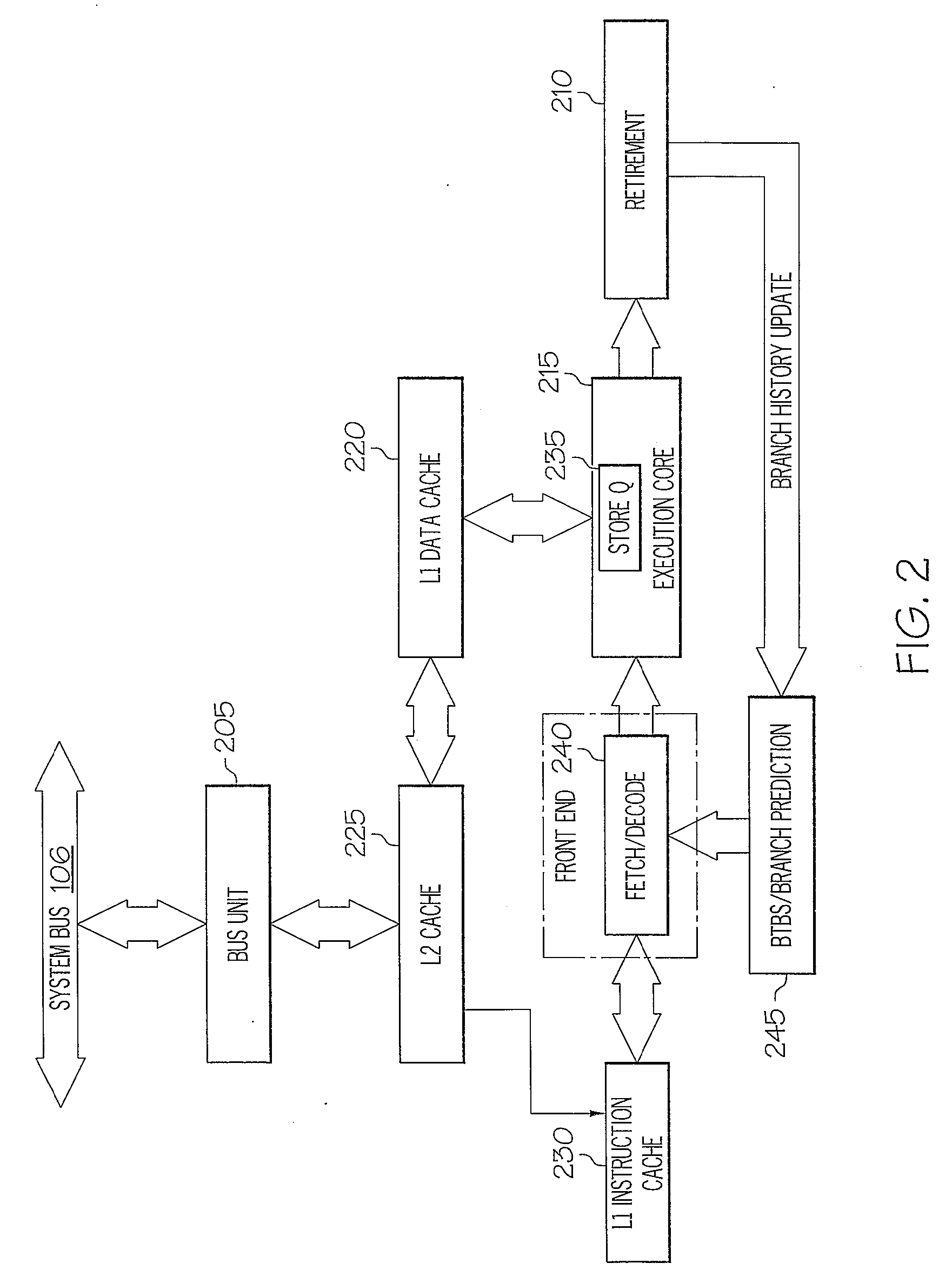

Processor architectures are disclosed, in particular processor architectures with a cache-like structure that enables memory communication during runahead execution. In accordance with an embodiment of the present invention, a system includes a memory and an out-of-order processor coupled to the memory. The out-of-order processor includes at least one execution unit; at least one cache coupled to the at least one execution unit; at least one address source coupled to the at least one cache; and a runahead cache coupled to the at least one address source.

Owner:INTEL CORP

High performance merge sort with scalable parallelization and full-throughput reduction

Active | US11249720B2 | Improve performance | More scalable | Computation using non-contact making devices | Interprogram communication | Transmission throughput | Parallel computing

Disclosed herein is a novel multi-way merge network, referred to herein as a Hybrid Comparison Look Ahead Merge (HCLAM), which incurs significantly less resource consumption as it is scaled to handle larger problems. In addition, a parallelization scheme is disclosed, referred to herein as Parallelization by Radix Pre-sorter (PRaP), which enables an increase in streaming throughput of the merge network. Furthermore, a high-performance reduction scheme is disclosed to achieve full throughput.

Owner:CARNEGIE MELLON UNIV

Lazy runahead operation for a microprocessor

Active | US9891972B2 | Concurrent instruction execution | Non-redundant fault processing | Computer science | Microprocessor

Owner:NVIDIA CORP

Memory management method and device, electronic equipment and computer readable storage medium

Active | CN113377492A | Does not significantly increase startup time | Guarantee the quality of operation | Resource allocation | Software simulation/interpretation/emulation | Start time | Engineering

The embodiment of the invention discloses a memory management method and device, electronic equipment and a computer readable storage medium. The method comprises the steps that a first memory resource locked in advance and a second memory resource locked in a delayed mode are determined; in response to the fact that preset virtual machine operation is triggered, an advanced locking operation is executed on the first memory resource; and in response to the completion of the operation of the preset virtual machine, the first memory resource is released, and a delay lock operation is executed on the second memory resource. According to the technical scheme, the starting time of the virtual machine cannot be obviously increased even for large-capacity memory resources, and then the starting and running speed and efficiency of the virtual machine can be improved on the premise that the running quality of the virtual machine is guaranteed.

Owner:ALIBABA CLOUD COMPUTING LTD

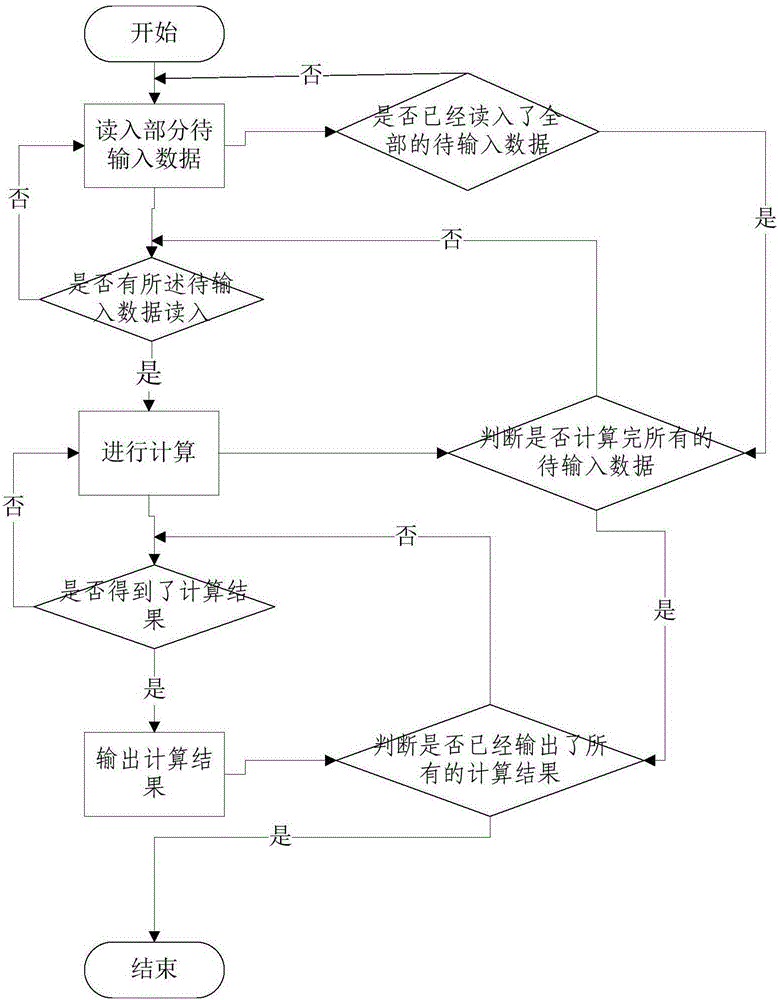

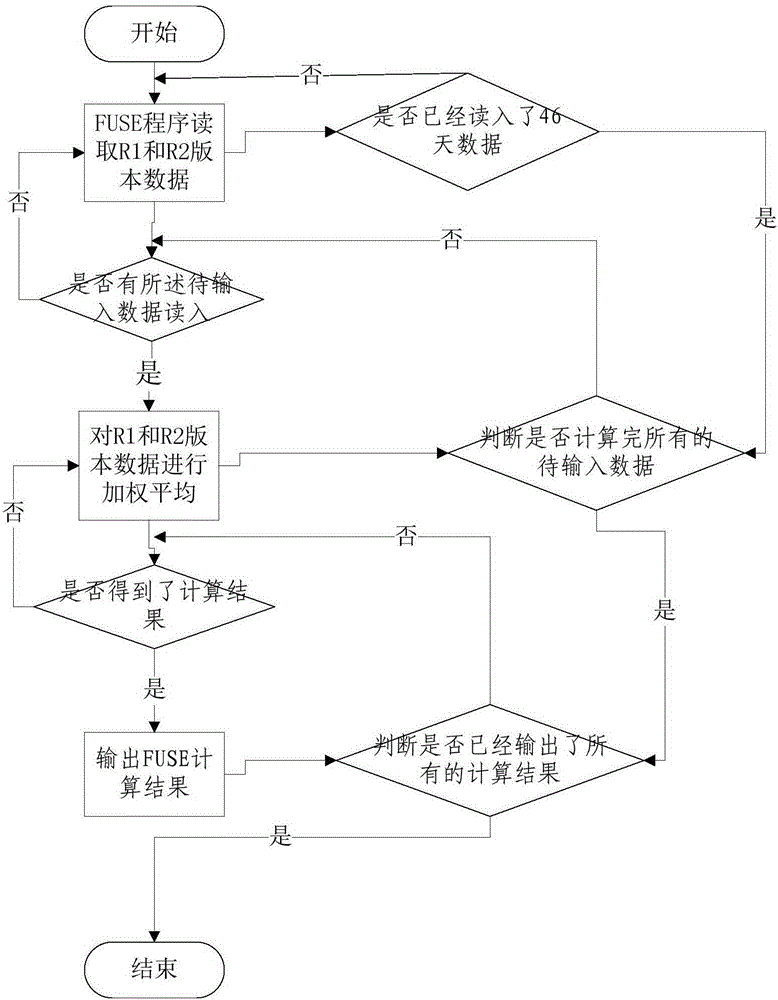

Data analysis processing method and system based on pre-read and post-write

Active | CN105183366A | Avoid storage jams | Improve operational efficiency | Input/output to record carriers | Term memory | Multithreading

The invention discloses a data analysis processing method and system based on pre-read and post-write. The data analysis processing method comprises the following steps: first, reading one part of the data to be input; then, carrying out calculation in advance on the data already read while, at the same time, reading the remaining part, or all, of the data to be input so that calculation can continue; and, whenever a calculation result can be output, outputting that part of the result in advance. These steps run in parallel through multithreaded programming so that the program finishes in the shortest time and memory is used dynamically and efficiently. Program running efficiency can be improved by 75% through the introduction of the pre-read and post-write mechanism, and the storage congestion caused when data are input and output in batches is avoided.

Owner:BEIJING NORMAL UNIVERSITY

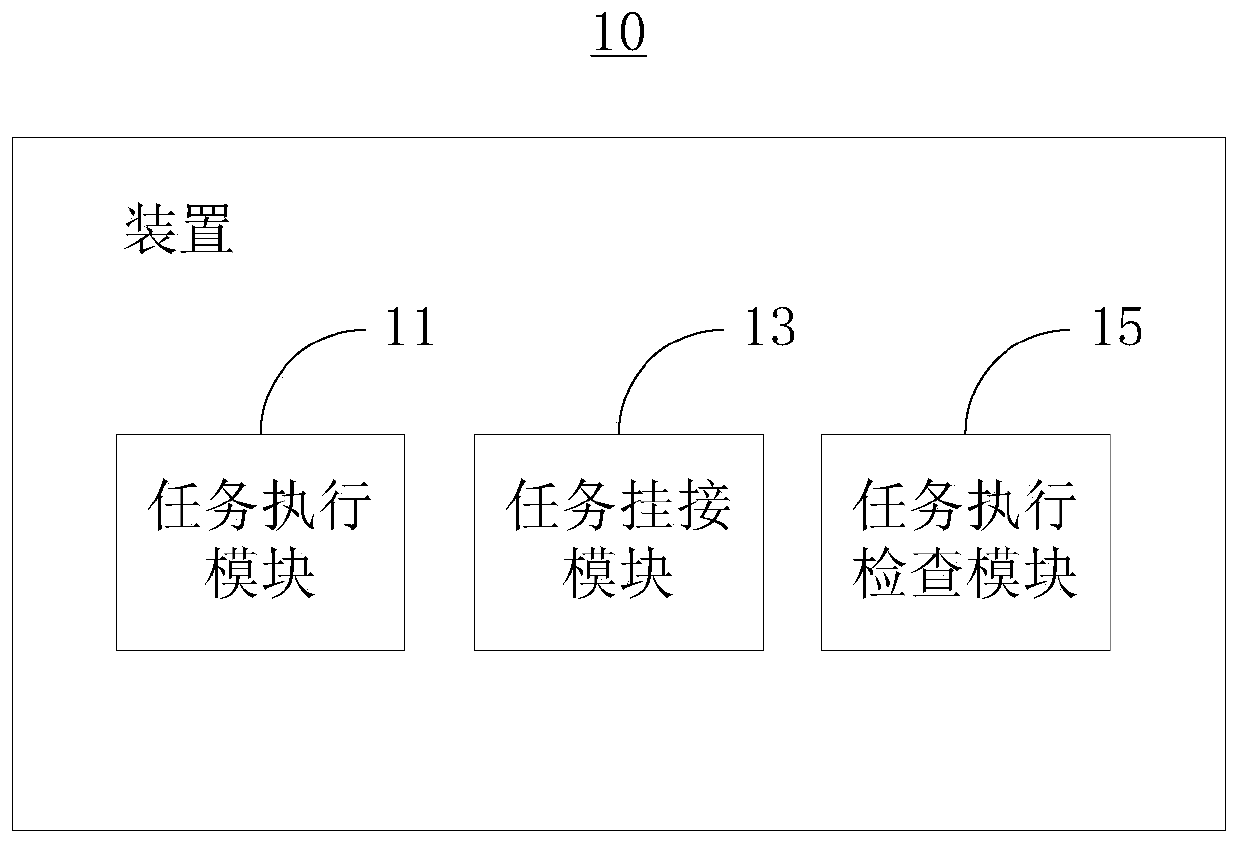

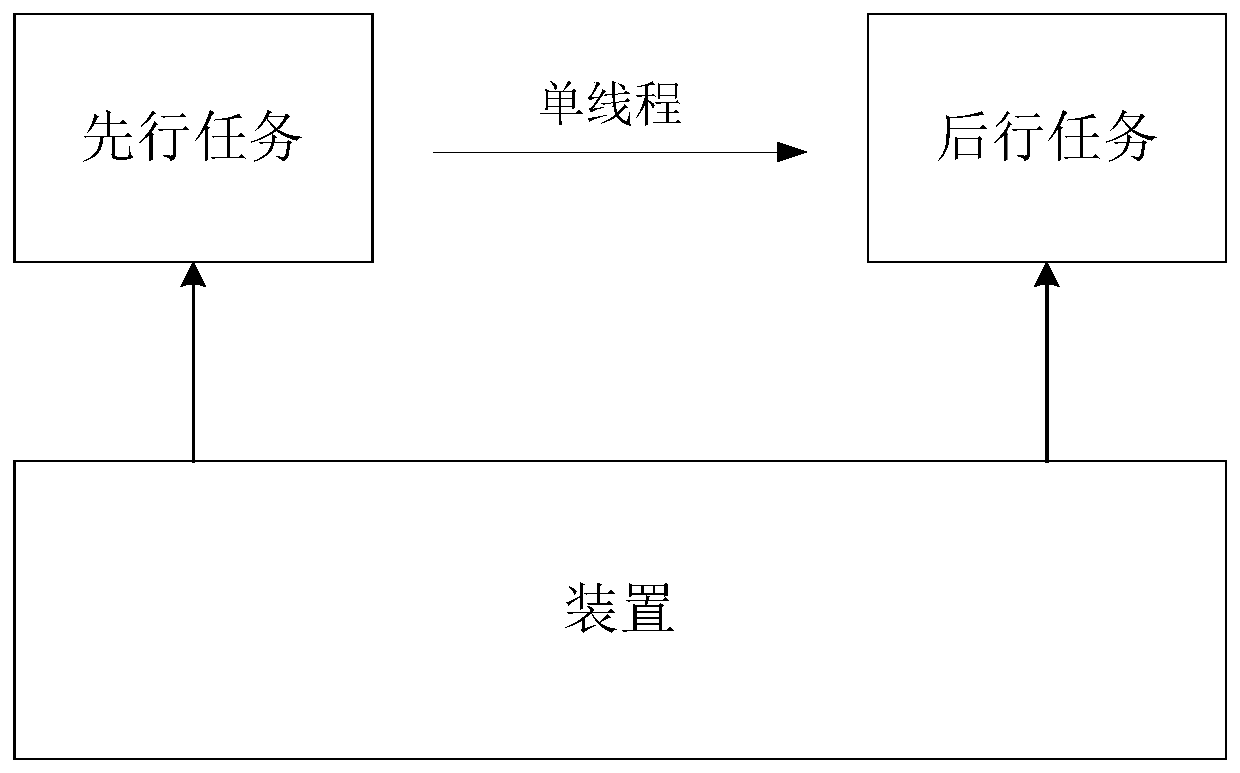

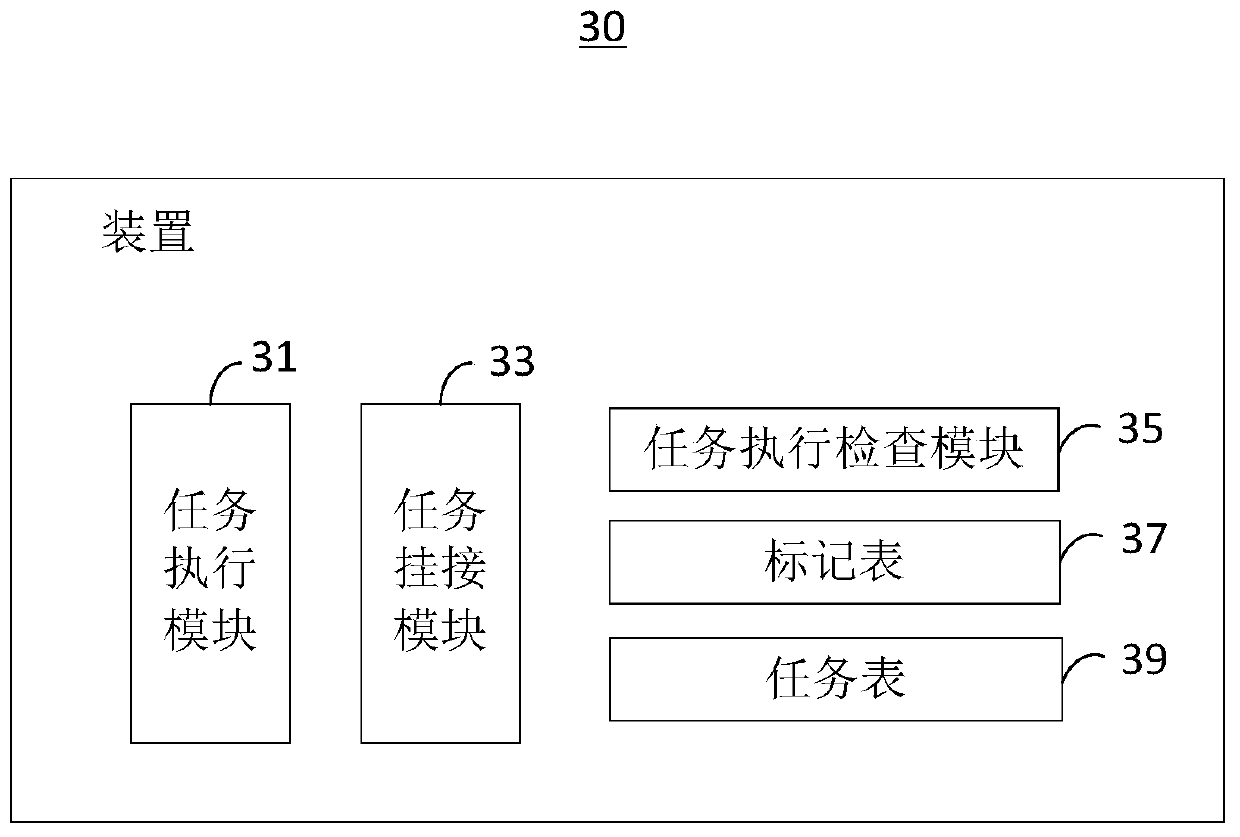

Program execution control device and method, terminal and storage medium

Pending | CN111538577A | Solving execution sequencing issues | Low cost | Program initiation/switching | Execution control | Operating system

The invention provides a program execution control device and method, a terminal and a storage medium. The device comprises a task execution module, a task hooking module and a task execution detection module. The method comprises the following steps: obtaining a to-be-executed task; when the task is a preceding task, executing the preceding task and, after it has been executed, checking whether a subsequent task associated with the preceding task has been obtained; if so, executing the subsequent task, and if not, recording the preceding task and executing the subsequent task once it is found to have been obtained; and when the task is a backward task, checking whether the preceding task associated with the backward task has been executed; if so, executing the backward task, and if not, hooking the backward task until the preceding task is found to have been executed completely, and then executing the backward task. According to the program execution control scheme, the problem of execution sequencing between associated tasks can be solved, and it is guaranteed that the preceding task is executed before the following task is executed.

Owner:BEIJING BYTEDANCE NETWORK TECH CO LTD
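The ordering rule in the abstract above, where a following task is hooked until its preceding task has finished, can be sketched as a small single-threaded controller. The `TaskController` class and its `submit` method are hypothetical names introduced for this example, and tasks are reduced to plain names recorded in a log; the patent's module structure is not reproduced.

```python
class TaskController:
    """Runs tasks so that a following task never executes before its predecessor."""

    def __init__(self):
        self.done = set()    # names of tasks that have finished
        self.hooked = {}     # predecessor name -> followers waiting on it
        self.log = []        # execution order, for inspection

    def submit(self, name, after=None):
        """Run `name` now, or hook it until the task named `after` has finished."""
        if after is not None and after not in self.done:
            self.hooked.setdefault(after, []).append(name)
            return
        self._run(name)

    def _run(self, name):
        self.log.append(name)   # stands in for doing the actual work
        self.done.add(name)     # record completion of the preceding task
        for follower in self.hooked.pop(name, []):
            self._run(follower) # release any followers hooked on this task

controller = TaskController()
controller.submit("render", after="load")  # arrives early, so it is hooked
controller.submit("load")                  # runs, then releases "render"
controller.submit("cleanup", after="load") # predecessor already done, runs at once
print(controller.log)
```

Recording completed tasks in `done` covers the case where the preceding task arrives first, and the `hooked` map covers the case where the following task arrives first.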

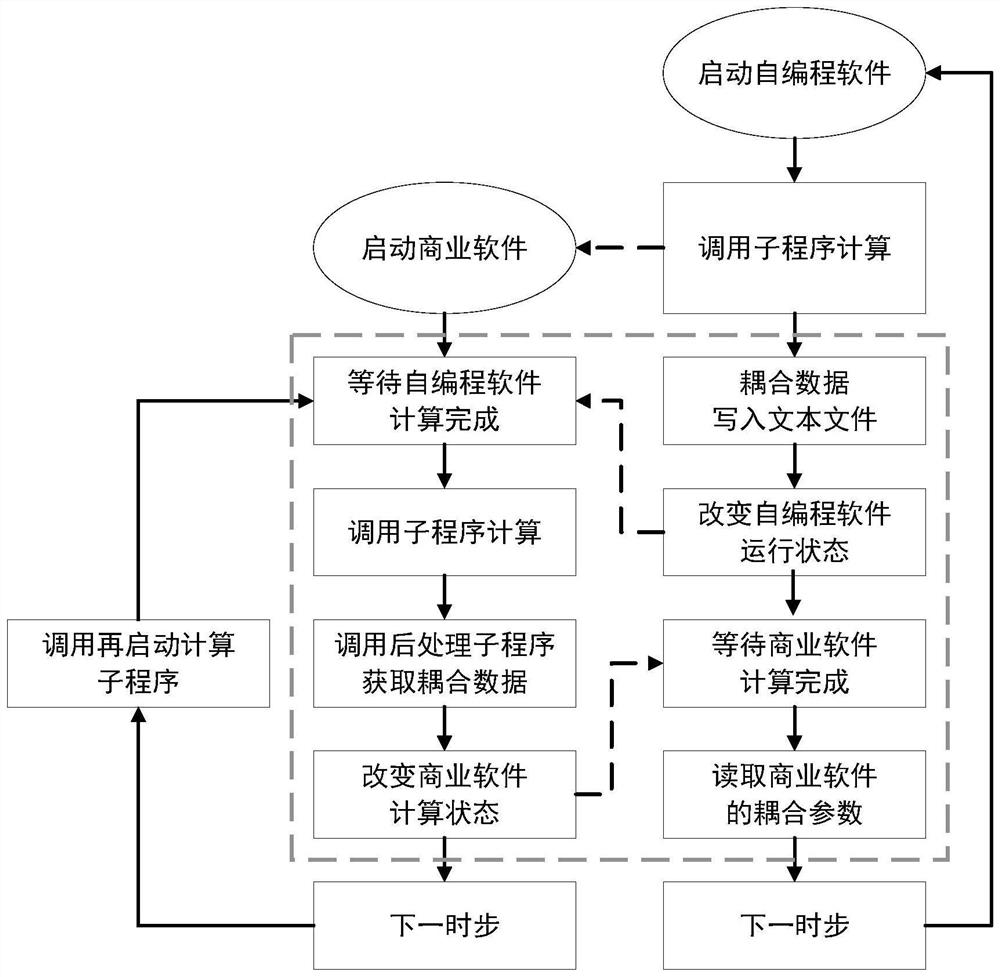

Method for coupling commercial software and self-programming software

Pending | CN114860235A | Achieve running state | Realize analysis | Nuclear energy generation | Visual/graphical programming | Control flow | Analytic model

A method for coupling commercial software and self-programming software mainly comprises the following steps: 1, an analysis model of the research object is established based on a commercial software automatic modeling script and the self-programming software, and the coupling transmission parameters and control process parameters are determined; 2, one of the commercial software and the self-programming software, referred to as software A, is selected to calculate once in advance and output a result file, and then waits for the other software, referred to as software B, to output a result file; 3, software B reads the result file of software A through the coupling interface, the coupling transmission parameters of software A are mapped to the model nodes of software B through a data mapping method, software B calculates once and outputs its result file, and the calculation result of software B is read and mapped by software A, which then carries out the next calculation; 4, steps 2 and 3 are executed cyclically until the total number of time steps is reached. By means of this calculation method, coupled calculation and refined multi-physics simulation between commercial software and self-programming software can be achieved.

Owner:XI AN JIAOTONG UNIV
