Methods for multi-class cost-sensitive learning

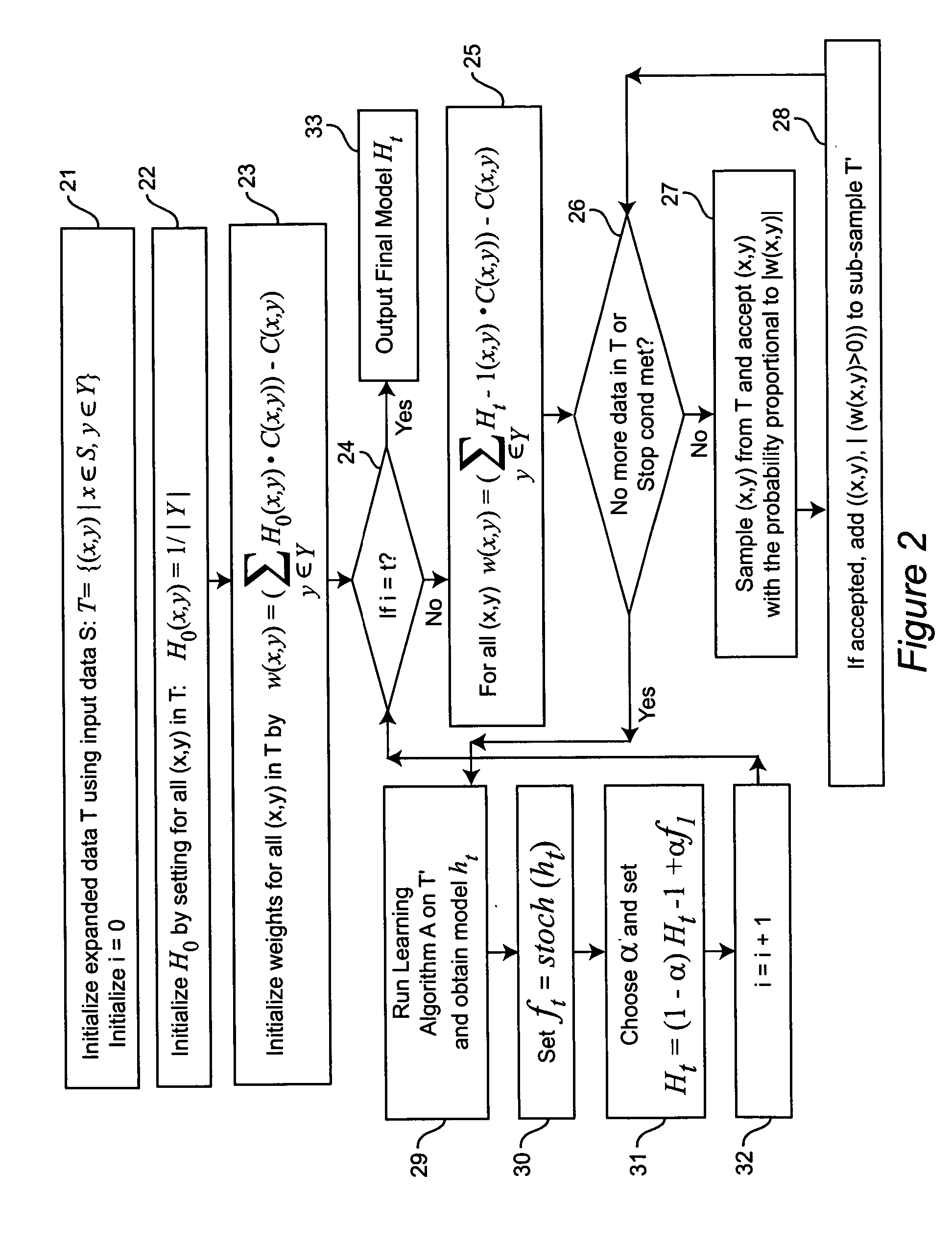

[0018] We begin by introducing some general concepts and notation we use in the rest of the description.

Cost-Sensitive Learning and Related Problems

[0019] A popular formulation of the cost-sensitive learning problem is via the use of a cost matrix. A cost matrix, C(y1, y2), specifies how much cost is incurred when misclassifying an example labeled y2 as y1, and the goal of a cost-sensitive learning method is to minimize the expected cost. Zadrozny and Elkan (B. Zadrozny and C. Elkan, “Learning and making decisions when costs and probabilities are both unknown”, Proceedings of the Seventh International Conference on Knowledge Discovery and Data Mining, pp. 204-213, ACM Press, 2001) noted that this formulation is not applicable in situations in which misclassification costs depend on particular instances, and proposed a more general form of cost function, C(x, y1, y2), that allows dependence on the instance x. Here we adopt this general formulation, but note that in the reasonable ...
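Given estimates of the class probabilities P(y2 | x), the expected cost of predicting y1 for an instance x is the sum over y2 of P(y2 | x) · C(x, y1, y2), and the standard decision rule predicts the y1 that minimizes this sum. The following is a minimal sketch of that rule for both an ordinary cost matrix and an instance-dependent cost function. It illustrates the textbook minimum-expected-cost (Bayes-optimal) decision rule, not necessarily the method of this patent. The function names `expected_costs`, `min_expected_cost_label`, `matrix_cost`, and `fraud_cost`, together with all cost values, are hypothetical and chosen only for illustration. The probability estimates are assumed to be given.

```python
from typing import Callable, List, Sequence, Tuple

# Hypothetical signature for a general cost function C(x, y1, y2): the cost of
# predicting label y1 for instance x when its true label is y2.
CostFn = Callable[[object, int, int], float]


def expected_costs(x: object, probs: Sequence[float], cost: CostFn) -> List[float]:
    """Return, for each candidate label y1, sum_{y2} P(y2 | x) * C(x, y1, y2).

    probs[y2] is an (assumed given) estimate of P(y2 | x) for classes 0..k-1.
    """
    k = len(probs)
    return [sum(probs[y2] * cost(x, y1, y2) for y2 in range(k)) for y1 in range(k)]


def min_expected_cost_label(
    x: object, probs: Sequence[float], cost: CostFn
) -> Tuple[int, List[float]]:
    """Predict the label with the smallest expected cost (ties go to the lower index)."""
    risks = expected_costs(x, probs, cost)
    best = min(range(len(risks)), key=risks.__getitem__)
    return best, risks


# Ordinary cost matrix C(y1, y2), indexed [predicted][true]; it ignores x.
# Illustrative values: missing class 2 costs 10, every other error costs 1.
COST_MATRIX = [[0, 1, 10],
               [1, 0, 10],
               [1, 1, 0]]


def matrix_cost(x: object, y1: int, y2: int) -> float:
    return COST_MATRIX[y1][y2]


# Hypothetical instance-dependent cost: x is a transaction amount and class 1
# is "fraud". Missing a fraud costs the amount itself; a false alarm costs 5.
def fraud_cost(x: float, y1: int, y2: int) -> float:
    if y1 == 0 and y2 == 1:
        return x
    if y1 == 1 and y2 == 0:
        return 5.0
    return 0.0


if __name__ == "__main__":
    # Class 2 is the least likely, yet it minimizes expected cost.
    print(min_expected_cost_label(None, [0.6, 0.3, 0.1], matrix_cost))
    # Same probabilities, different decisions, because the cost depends on x.
    print(min_expected_cost_label(20.0, [0.9, 0.1], fraud_cost)[0])   # 0
    print(min_expected_cost_label(100.0, [0.9, 0.1], fraud_cost)[0])  # 1
```

With probabilities (0.6, 0.3, 0.1), the expected costs are approximately 1.3, 1.6, and 0.9. The rule therefore predicts class 2 even though it is the least likely class. In the fraud example, the same probability estimate leads to different decisions for different instances. A fixed matrix C(y1, y2) cannot express this behavior, but the general form C(x, y1, y2) can.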
