User-directed navigation of multimedia search results

A multimedia search technology with user-directed navigation, applied in multimedia data indexing, metadata-based video data retrieval, instruments, etc. It addresses two problems: search engines are unable to return the corresponding audio/video podcast, and the metadata describing audio or video content is typically limited.

Generation of Enhanced Metadata for Audio/Video

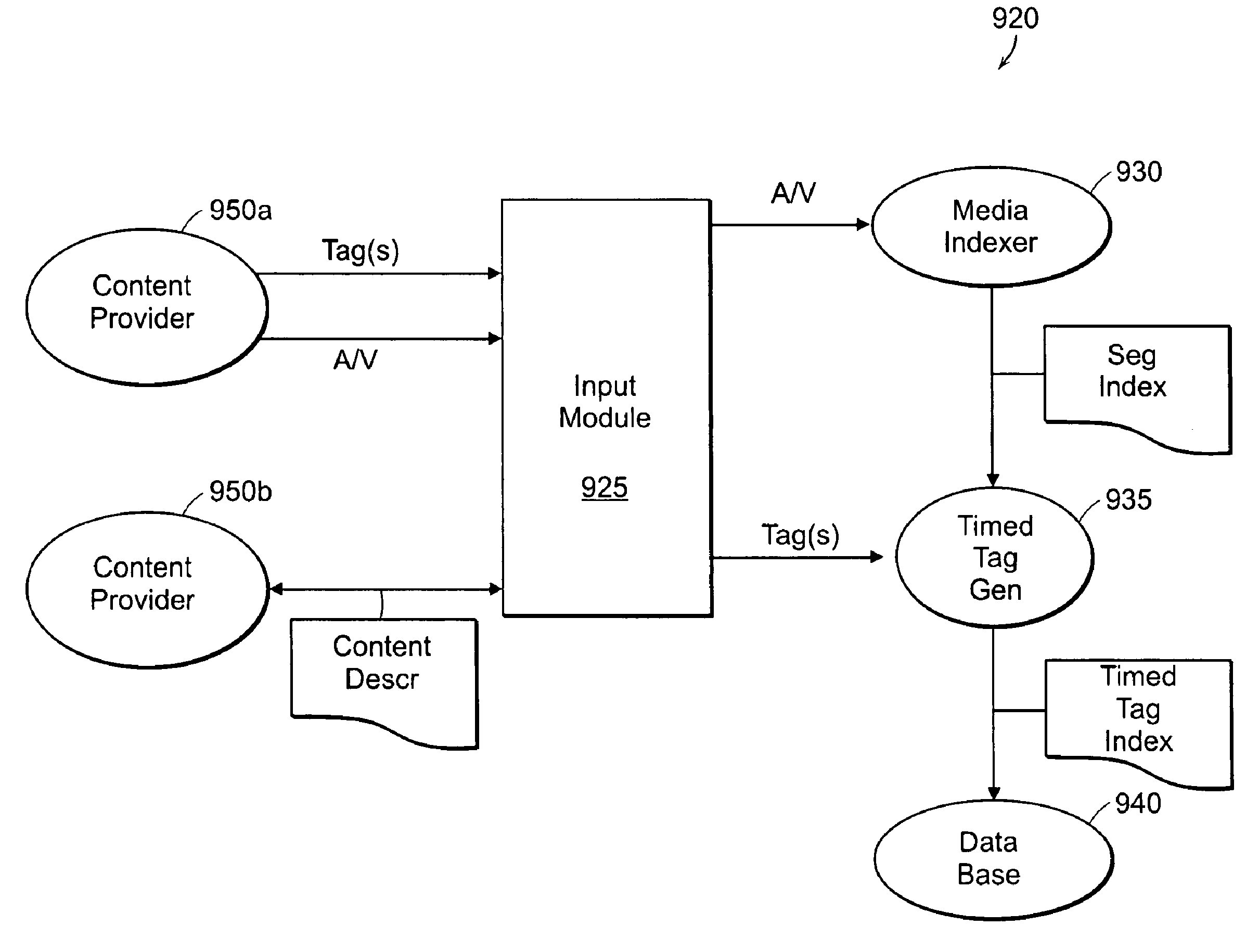

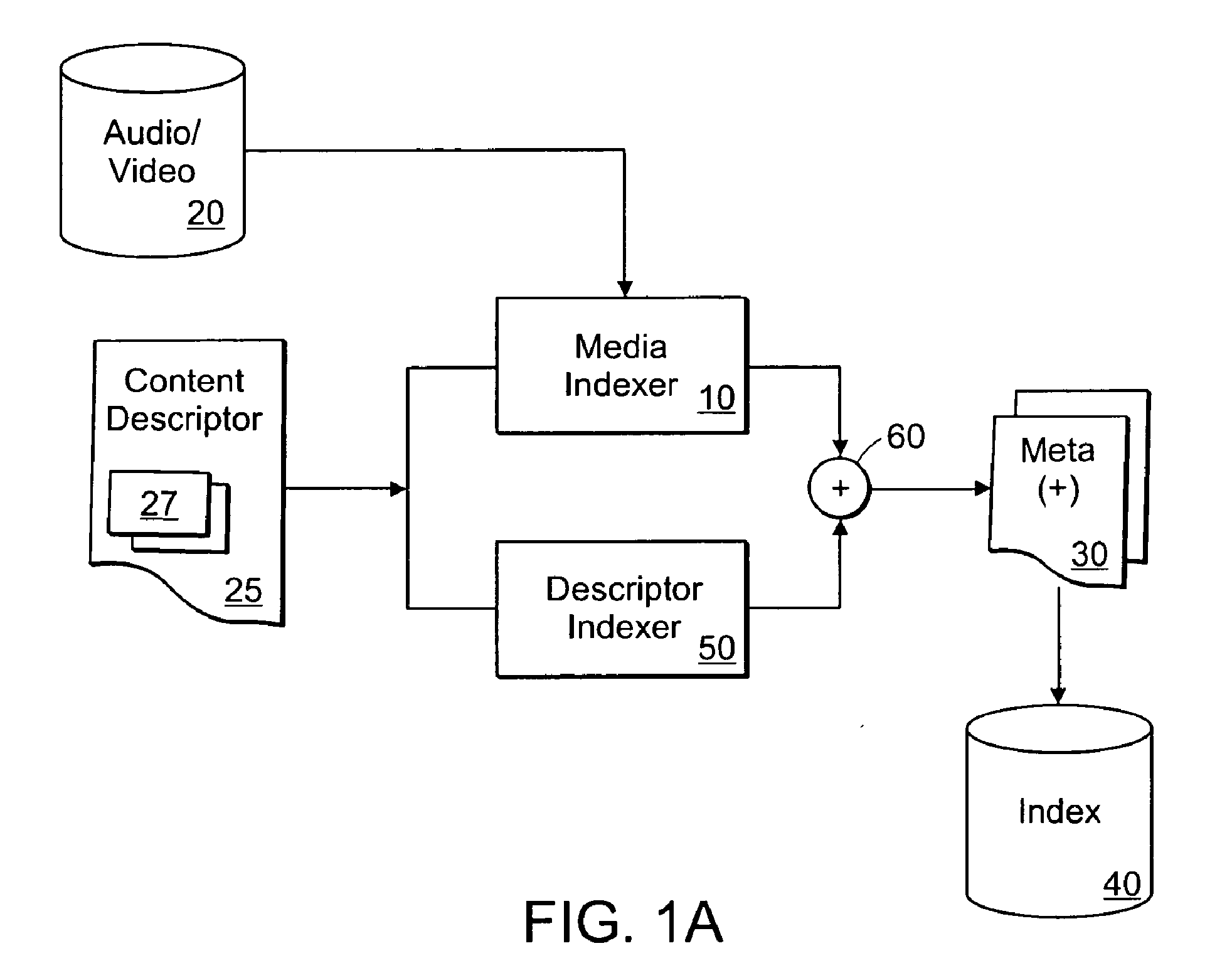

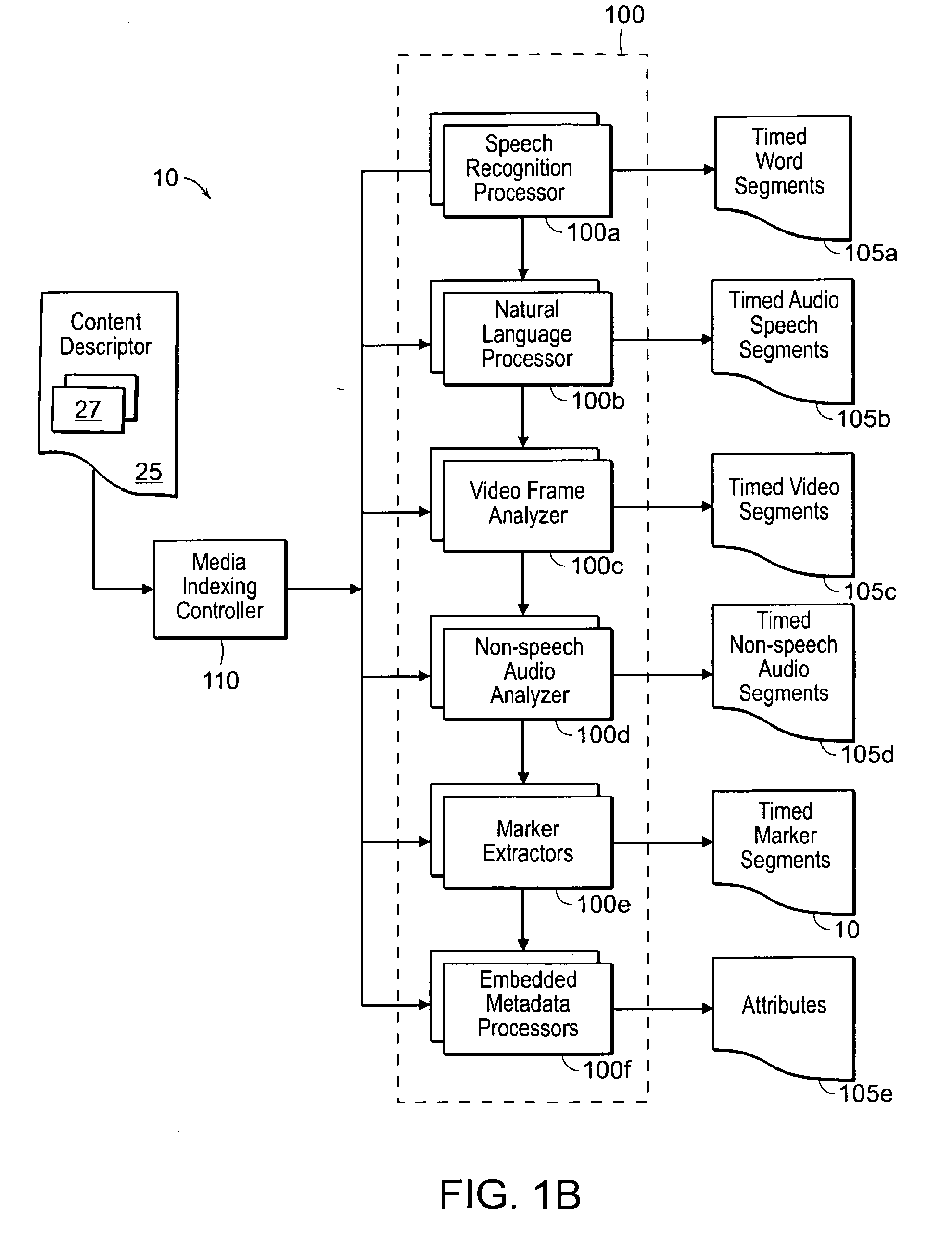

[0026] The invention features an automated method and apparatus for generating metadata enhanced for audio/video search-driven applications. The apparatus includes a media indexer that obtains a media file/stream (e.g., audio/video podcasts), applies one or more automated media processing techniques to the media file/stream, combines the results of the media processing into metadata enhanced for audio/video search, and stores the enhanced metadata in a searchable index or other data repository.
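The four steps of the media indexer (obtain, process, combine, store) can be illustrated with a minimal sketch. It assumes that each automated media processing technique can be modeled as a function returning `(term, timestamp)` pairs and that the searchable index is an in-memory inverted index. The names `MediaIndexer`, `fake_speech_recognizer`, `fake_ocr`, and the `example.com` URL are hypothetical stand-ins, not the patent's implementation; real techniques such as speech recognition are replaced by fixed stubs.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Tuple

# Hypothetical model: a processing technique maps a media location to a
# list of (term, timestamp_seconds) pairs found in the media.
Processor = Callable[[str], List[Tuple[str, float]]]


@dataclass
class MediaIndexer:
    processors: Dict[str, Processor]
    # Inverted index: term -> list of (media_id, timestamp, technique name)
    index: Dict[str, List[Tuple[str, float, str]]] = field(default_factory=dict)

    def process(self, media_id: str, location: str) -> Dict[str, List[Tuple[str, float]]]:
        # Apply every technique and combine the results into one metadata record
        enhanced: Dict[str, List[Tuple[str, float]]] = {}
        for name, proc in self.processors.items():
            enhanced[name] = proc(location)
        self._store(media_id, enhanced)
        return enhanced

    def _store(self, media_id: str, enhanced: Dict[str, List[Tuple[str, float]]]) -> None:
        # Store the combined metadata in the searchable (inverted) index
        for technique, hits in enhanced.items():
            for term, ts in hits:
                self.index.setdefault(term.lower(), []).append((media_id, ts, technique))

    def search(self, term: str) -> List[Tuple[str, float, str]]:
        return self.index.get(term.lower(), [])


def fake_speech_recognizer(location: str) -> List[Tuple[str, float]]:
    # Hypothetical stub for a speech recognition engine
    return [("podcast", 0.5), ("metadata", 3.2)]


def fake_ocr(location: str) -> List[Tuple[str, float]]:
    # Hypothetical stub for on-screen text recognition
    return [("Metadata", 10.0)]


indexer = MediaIndexer({"speech": fake_speech_recognizer, "ocr": fake_ocr})
indexer.process("ep1", "http://example.com/ep1.mp3")
print(indexer.search("metadata"))
# [('ep1', 3.2, 'speech'), ('ep1', 10.0, 'ocr')]
```

Keeping the timestamp alongside each term is what would let a search result point into the media rather than only at the whole file.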

[0027] FIG. 1A is a diagram illustrating an apparatus and method for generating metadata enhanced for audio/video search-driven applications. As shown, the media indexer 10 cooperates with a descriptor indexer 50 to generate the enhanced metadata 30. A content descriptor 25 is received and processed by both the media indexer 10 and the descriptor indexer 50. For example, if the content descriptor 25 is a Really Simple Syndication (RSS) document, th...
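The passage above is truncated, so how the two indexers divide an RSS content descriptor is not stated here. The following sketch shows one plausible split, purely as an assumption: the text fields of each RSS `<item>` (title, description) are the kind of input a descriptor indexer could use, while the `<enclosure>` URL identifies the media a media indexer would fetch. The function `split_descriptor` and the sample feed are hypothetical.

```python
import xml.etree.ElementTree as ET
from typing import Dict, List

# Hypothetical sample content descriptor (RSS 2.0 feed with one podcast item)
RSS = """<rss version="2.0"><channel><title>Example Show</title>
<item>
  <title>Episode 1</title>
  <description>Talk about search.</description>
  <enclosure url="http://example.com/ep1.mp3" length="1000" type="audio/mpeg"/>
</item>
</channel></rss>"""


def split_descriptor(rss_text: str) -> List[Dict[str, str]]:
    """Split each RSS <item> into descriptor text fields and a media URL.

    Assumed division of labour: text fields for a descriptor indexer,
    the enclosure URL for a media indexer.
    """
    root = ET.fromstring(rss_text)
    records = []
    for item in root.iter("item"):
        enclosure = item.find("enclosure")
        records.append({
            "title": item.findtext("title", default=""),
            "description": item.findtext("description", default=""),
            "media_url": enclosure.get("url", "") if enclosure is not None else "",
        })
    return records


for rec in split_descriptor(RSS):
    print(rec["title"], "->", rec["media_url"])
# Episode 1 -> http://example.com/ep1.mp3
```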


© 2025 PatSnap. All rights reserved.