Emotional Speaker Recognition Method Based on Spectrum Shifting

A speaker recognition technique based on spectrum shifting, applied in speech analysis, instruments, and related fields, intended for cases where existing methods do not meet application requirements.



Embodiment Construction

[0064] The method of the invention is implemented as follows:

[0065] Step 1: Audio Preprocessing

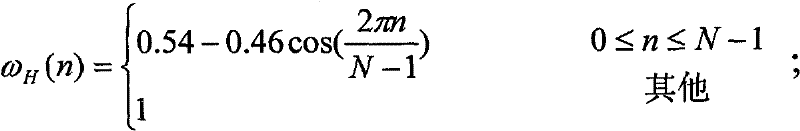

[0066] Audio preprocessing is divided into four parts: sampling and quantization, zero drift removal, pre-emphasis, and windowing.

[0067] 1. Sampling and quantization

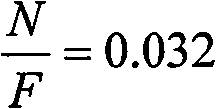

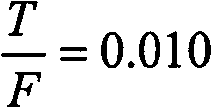

[0068] A) Filter the collected audio signal under test with a sharp cut-off filter so that its Nyquist frequency $F_N$ is 4 kHz;

[0069] B) Set the audio sampling rate $F = 2F_N$;

[0070] C) Sample the audio signal $s_a(t)$ periodically to obtain the amplitude sequence of the digital audio signal, $s(n) = s_a(n/F)$;

[0071] D) Use pulse code modulation (PCM) to quantize and encode s(n), and obtain the quantized representation of the amplitude sequence s'(n).
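Steps B to D above can be sketched in a few lines of Python. This is a minimal illustration, not the patent's implementation: the 16-bit depth, the `[-1.0, 1.0]` amplitude range, and the names `sample`, `quantize_pcm`, and `tone` are assumptions introduced here. The filtering of step A is not shown; the hypothetical test signal is a 1 kHz sine, which already lies below $F_N$.

```python
import math

F_N = 4000          # Nyquist frequency in Hz (step A)
F = 2 * F_N         # sampling rate in Hz (step B)


def sample(s_a, duration_s, rate_hz=F):
    """Step C: s(n) = s_a(n / F) for n = 0 .. N-1."""
    n_samples = int(round(duration_s * rate_hz))
    return [s_a(n / rate_hz) for n in range(n_samples)]


def quantize_pcm(s, bits=16):
    """Step D: uniform PCM quantization of amplitudes in [-1.0, 1.0].

    The bit depth is an assumption; the source does not specify one.
    """
    max_code = 2 ** (bits - 1) - 1
    codes = []
    for x in s:
        x = max(-1.0, min(1.0, x))          # clip to the representable range
        codes.append(int(round(x * max_code)))
    return codes


def tone(t, freq_hz=1000.0):
    """Hypothetical band-limited test signal: a 1 kHz sine at half scale."""
    return 0.5 * math.sin(2.0 * math.pi * freq_hz * t)


s = sample(tone, duration_s=0.01)   # amplitude sequence s(n)
s_q = quantize_pcm(s)               # quantized sequence s'(n)
```

With these assumed settings, 10 ms of audio yields 80 samples, each stored as a signed integer code.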

[0072] 2. Zero drift removal

[0073] A) Calculate the mean of the quanti...
